Color and Texture Analysis of Textiles Using Image Acquisition and Spectral Analysis in Calibrated Sphere Imaging System-II

Abstract

1. Introduction

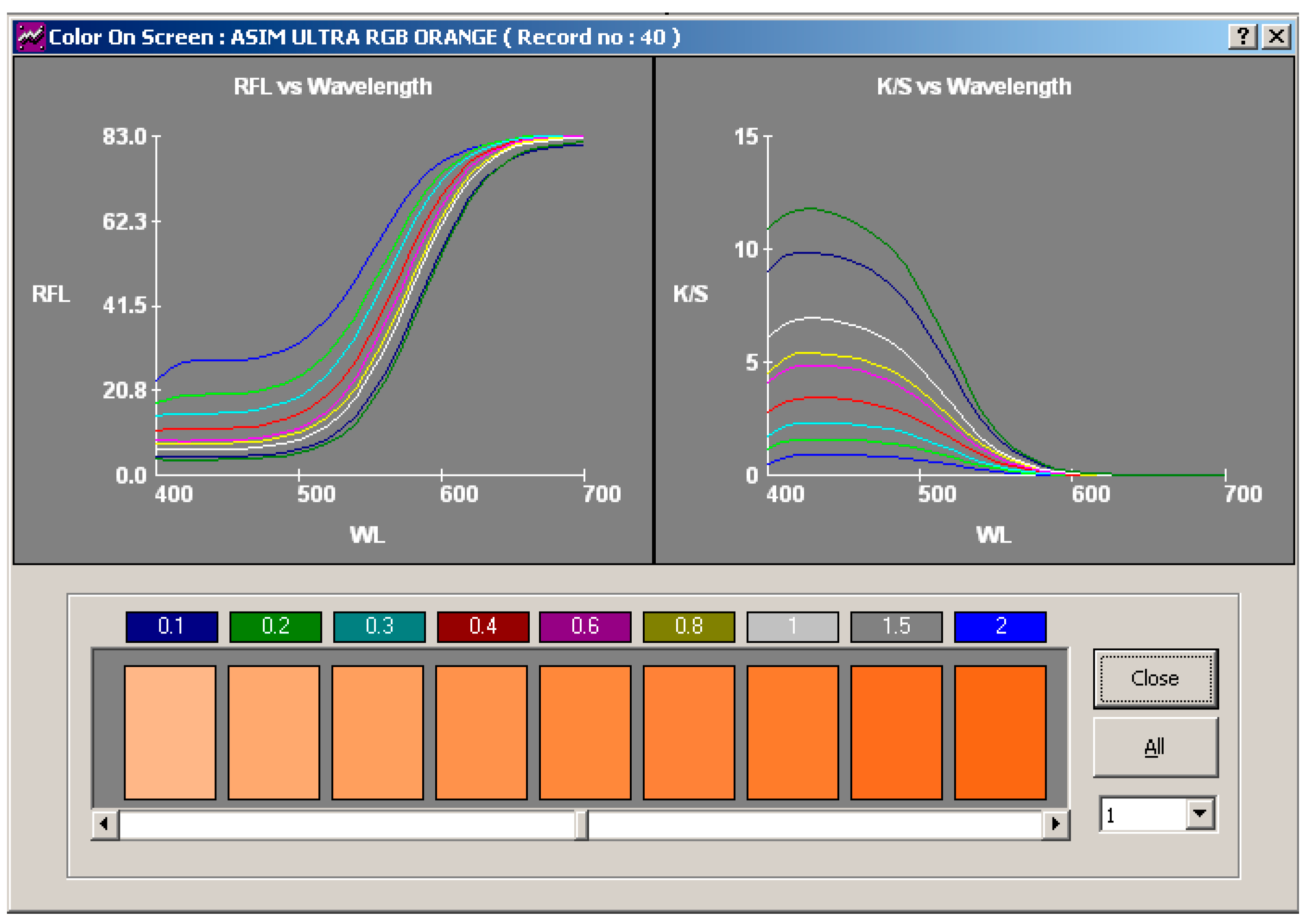

2. Materials and Methods

3. Results and Discussion

3.1. Three Types of Textures with Incremental Yellow Color Variations

3.2. Color Difference for Human Perception

3.3. Color Intensity for Quantitative Evaluation

- PLAIN: a = 181.97, b = 1.0034, c = −0.04891; R² = 0.996

- TWILL: a = 182.54, b = 1.008, c = −0.060; R² = 0.9958

- M.TWILL: a = 183.04, b = 1.0084, c = −0.0626; R² = 0.9942
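The fitted parameters a, b, and c are listed here without the model they belong to. As a minimal sketch, and purely as an assumption, the snippet below fits a Hoerl-type curve, intensity = a · b^x · x^c with x the dye concentration, to the plain-weave intensities tabulated in this paper. The function names `fit_hoerl` and `hoerl` are hypothetical, and the log-linear least-squares fit is a convenience choice, not necessarily the procedure the authors used.

```python
import numpy as np

# Plain-weave intensity at each dye concentration
# (values copied from the intensity table in this paper).
conc = np.array([0.25, 0.5, 0.75, 1, 1.5, 2, 3, 4, 5, 6], dtype=float)
plain = np.array([194.656, 188.669, 185.828, 182.218, 179.798,
                  175.681, 174.65, 173.182, 170.434, 170.315])


def fit_hoerl(x, y):
    """Fit y = a * b**x * x**c by linear least squares on ln(y).

    ASSUMPTION: the Hoerl form is not stated in the source; it is used
    here only because it has three parameters named a, b, c.
    """
    # ln(y) = ln(a) + x * ln(b) + c * ln(x) is linear in the unknowns.
    design = np.column_stack([np.ones_like(x), x, np.log(x)])
    coef, *_ = np.linalg.lstsq(design, np.log(y), rcond=None)
    ln_a, ln_b, c = coef
    return float(np.exp(ln_a)), float(np.exp(ln_b)), float(c)


def hoerl(x, a, b, c):
    """Evaluate the assumed model at concentration x."""
    return a * b**x * x**c


a, b, c = fit_hoerl(conc, plain)
pred = hoerl(conc, a, b, c)

# Coefficient of determination of the fitted curve on the original scale.
ss_res = float(np.sum((plain - pred) ** 2))
ss_tot = float(np.sum((plain - plain.mean()) ** 2))
r2 = 1.0 - ss_res / ss_tot

print(f"a = {a:.2f}, b = {b:.4f}, c = {c:.4f}, R^2 = {r2:.4f}")
```

Comparing the printed values with the PLAIN entry above is a quick way to judge whether the assumed functional form is plausible; swapping in the twill or modified-twill column repeats the check for the other textures.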

3.4. Color Combinations and Verification of Various Color Space and CIE Chromaticity Visualizations

3.5. Reflectance Prediction in Terms of Calibrated RGB Polynomial Regression

- 3: R, G, B

- 8: R, G, B, R×G×B, R×G, R×B, G×B, 1

- 11: R, G, B, R×G×B, R×G, R×B, G×B, R², G², B², 1

- 20: R, G, B, R×G×B, R×G, R×B, G×B, R², G², B², R³, G³, B³, G×R², B×G², R×B², B×R², R×G², G×B², 1

- 23: R, G, B, R×G×B, R×G, R×B, G×B, R², G², B², R³, G³, B³, G×R², B×G², R×B², B×R², R×G², G×B², G×B×R², B×R×G², R×G×B², 1
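The term sets above can be turned into design matrices for a polynomial regression. The following is a minimal sketch, assuming RGB values scaled to [0, 1] and an ordinary least-squares fit; the function names `expand_rgb`, `fit_mapping`, and `predict` are hypothetical, and the training data in the usage lines are synthetic placeholders, not measurements from this study.

```python
import numpy as np


def expand_rgb(rgb, n_terms):
    """Expand RGB triplets into the 3-, 8-, 11-, 20- or 23-term basis."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    one = np.ones_like(r)

    linear = [r, g, b]
    cross = [r * g * b, r * g, r * b, g * b]
    squares = [r**2, g**2, b**2]
    cubes = [r**3, g**3, b**3]
    mixed3 = [g * r**2, b * g**2, r * b**2, b * r**2, r * g**2, g * b**2]
    mixed4 = [g * b * r**2, b * r * g**2, r * g * b**2]

    terms = {
        3: linear,
        8: linear + cross + [one],
        11: linear + cross + squares + [one],
        20: linear + cross + squares + cubes + mixed3 + [one],
        23: linear + cross + squares + cubes + mixed3 + mixed4 + [one],
    }
    return np.stack(terms[n_terms], axis=-1)


def fit_mapping(rgb, target, n_terms):
    """Least-squares matrix mapping expanded RGB to the target values.

    ASSUMPTION: ordinary least squares; the source does not state the solver.
    """
    design = expand_rgb(rgb, n_terms)
    matrix, *_ = np.linalg.lstsq(design, target, rcond=None)
    return matrix


def predict(rgb, matrix, n_terms):
    """Apply a fitted mapping to new RGB values."""
    return expand_rgb(rgb, n_terms) @ matrix


# Usage with synthetic placeholder data: 40 samples, 31 target channels
# (for example, reflectance sampled at 31 wavelengths).
rng = np.random.default_rng(0)
train_rgb = rng.random((40, 3))
train_target = rng.random((40, 31))
mapping = fit_mapping(train_rgb, train_target, 11)
print(mapping.shape)                                # (11, 31)
print(predict(train_rgb[:2], mapping, 11).shape)    # (2, 31)
```

Larger term sets fit the training samples more closely but need more training colors than coefficients to stay well conditioned, which is one reason to compare several basis sizes.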

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Image (Plain) | R | G | B | L | a | b | DE_RGB | DE |
|---|---|---|---|---|---|---|---|---|
| Y42 | 204.533 | 209.373 | 157.184 | 82.264 | −10.591 | 40.672 | 0.000 | 0.000 |
| Y45 | 208.447 | 207.940 | 135.769 | 81.522 | −11.025 | 52.999 | 21.817 | 12.357 |
| Y48 | 209.246 | 207.691 | 130.600 | 81.427 | −10.942 | 56.523 | 27.051 | 15.877 |
| Y51 | 210.326 | 206.055 | 121.697 | 80.914 | −10.662 | 61.406 | 36.109 | 20.778 |
| Y54 | 211.899 | 205.708 | 116.833 | 80.870 | −10.024 | 64.534 | 41.182 | 23.910 |
| Y55 | 217.369 | 207.660 | 109.919 | 82.483 | −9.235 | 70.781 | 49.007 | 30.141 |
| Y58 | 219.379 | 205.177 | 99.177 | 82.355 | −8.232 | 75.616 | 60.023 | 35.024 |
| Y61 | 219.503 | 203.863 | 95.189 | 82.013 | −7.573 | 76.966 | 64.015 | 36.420 |
| Y64 | 219.910 | 201.003 | 88.265 | 81.746 | −7.075 | 79.624 | 71.108 | 39.114 |
| Y67 | 221.463 | 201.337 | 85.567 | 81.241 | −6.406 | 81.147 | 74.028 | 40.704 |
| Y70 | 221.886 | 199.651 | 80.806 | 80.811 | −5.926 | 82.224 | 78.926 | 41.839 |

| Image (Twill) | R | G | B | L | a | b | DE_RGB | DE |
|---|---|---|---|---|---|---|---|---|
| Y43 | 213.043 | 212.648 | 157.694 | 84.994 | −10.574 | 43.934 | 9.132 | 4.254 |
| Y46 | 214.214 | 211.979 | 136.878 | 84.005 | −10.878 | 56.473 | 22.646 | 15.899 |
| Y49 | 213.269 | 208.123 | 126.858 | 83.784 | −10.610 | 62.748 | 31.584 | 22.129 |
| Y52 | 215.311 | 209.160 | 120.162 | 82.911 | −10.050 | 66.032 | 38.559 | 25.374 |
| Y56 | 221.901 | 208.694 | 107.559 | 84.147 | −7.277 | 75.269 | 52.581 | 34.807 |
| Y59 | 225.645 | 204.784 | 91.718 | 83.460 | −5.961 | 80.494 | 68.939 | 40.108 |
| Y62 | 224.473 | 202.668 | 88.074 | 83.045 | −5.239 | 82.006 | 72.241 | 41.686 |
| Y65 | 226.816 | 201.740 | 84.951 | 82.349 | −4.162 | 82.504 | 75.977 | 42.324 |
| Y68 | 227.116 | 198.948 | 81.704 | 82.181 | −3.247 | 82.999 | 79.473 | 42.960 |
| Y71 | 226.662 | 195.977 | 75.430 | 81.522 | −2.684 | 85.341 | 85.749 | 45.370 |

| Image (M. Twill) | R | G | B | L | a | b | DE_RGB | DE |
|---|---|---|---|---|---|---|---|---|
| Y44 | 217.647 | 216.200 | 152.434 | 87.016 | −10.600 | 48.633 | 15.528 | 9.271 |
| Y47 | 218.018 | 213.410 | 129.578 | 85.588 | −9.775 | 63.200 | 30.987 | 22.787 |
| Y50 | 218.583 | 212.677 | 124.088 | 85.232 | −9.178 | 68.215 | 36.107 | 27.739 |
| Y53 | 219.456 | 210.864 | 115.429 | 84.960 | −8.427 | 70.903 | 44.367 | 30.428 |
| Y57 | 213.761 | 202.186 | 102.455 | 80.302 | −8.790 | 71.804 | 55.965 | 31.246 |
| Y60 | 213.628 | 200.362 | 96.945 | 79.861 | −8.503 | 73.383 | 61.585 | 32.866 |
| Y63 | 215.491 | 201.274 | 95.167 | 79.881 | −8.519 | 73.122 | 63.496 | 32.604 |
| Y66 | 215.868 | 199.699 | 89.600 | 79.372 | −7.628 | 76.591 | 69.208 | 36.157 |
| Y69 | 214.343 | 195.389 | 84.453 | 78.937 | −7.086 | 78.145 | 74.710 | 37.784 |
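The two difference columns in the table above can be checked by hand. As a minimal sketch, assuming both columns are plain Euclidean distances from the plain-weave sample Y42 (one in RGB, one in L, a, b, which for the latter corresponds to the CIE 1976 color-difference formula), the snippet below recomputes the entries for Y45 and for the twill sample Y43. The dictionary layout and the function name `color_differences` are hypothetical.

```python
import math

# (R, G, B) and (L, a, b) values copied from the table above.
samples = {
    "Y42": {"rgb": (204.533, 209.373, 157.184), "lab": (82.264, -10.591, 40.672)},
    "Y45": {"rgb": (208.447, 207.940, 135.769), "lab": (81.522, -11.025, 52.999)},
    "Y43": {"rgb": (213.043, 212.648, 157.694), "lab": (84.994, -10.574, 43.934)},
}


def color_differences(name, reference="Y42"):
    """Return (RGB distance, Lab distance) of a sample from the reference.

    ASSUMPTION: both differences are unweighted Euclidean distances.
    """
    ref, smp = samples[reference], samples[name]
    de_rgb = math.dist(ref["rgb"], smp["rgb"])
    de_lab = math.dist(ref["lab"], smp["lab"])
    return de_rgb, de_lab


for name in ("Y45", "Y43"):
    de_rgb, de_lab = color_differences(name)
    print(f"{name}: DE_RGB = {de_rgb:.3f}, DE = {de_lab:.3f}")
```

The recomputed values agree with the tabulated entries for these samples to about three decimals, which supports reading Y42 as the common reference for all three textures. The RGB distance is roughly twice the Lab distance because the two spaces use different scales, so the columns are not directly comparable.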

| Working Space | Reference Illuminant | Red [0.0, 1.0] | Green [0.0, 1.0] | Blue [0.0, 1.0] | Red [0, 255] | Green [0, 255] | Blue [0, 255] |
|---|---|---|---|---|---|---|---|
| Adobe RGB (1998) | D65 | 0.4947 | 0.4219 | 0.4095 | 126 | 108 | 104 |
| Apple RGB | D65 | 0.4440 | 0.3459 | 0.3347 | 113 | 88 | 85 |
| Best RGB | D50 | 0.4933 | 0.4333 | 0.4120 | 126 | 110 | 105 |
| Beta RGB | D50 | 0.4913 | 0.4270 | 0.4115 | 125 | 109 | 105 |
| Bruce RGB | D65 | 0.5099 | 0.4219 | 0.4095 | 130 | 108 | 104 |
| CIE RGB | E | 0.5120 | 0.4322 | 0.4130 | 131 | 110 | 105 |
| ColorMatch RGB | D50 | 0.4444 | 0.3454 | 0.3333 | 113 | 88 | 85 |
| Don RGB 4 | D50 | 0.4934 | 0.4285 | 0.4115 | 126 | 109 | 105 |
| ECI RGB v2 | D50 | 0.5319 | 0.4586 | 0.4445 | 136 | 117 | 113 |
| Ekta Space PS5 RGB | D50 | 0.4983 | 0.4275 | 0.4114 | 127 | 109 | 105 |
| NTSC RGB | C | 0.4922 | 0.4251 | 0.4122 | 126 | 108 | 105 |
| PAL/SECAM RGB | D65 | 0.5166 | 0.4219 | 0.4088 | 132 | 108 | 104 |
| ProPhoto RGB | D50 | 0.4071 | 0.3599 | 0.3386 | 104 | 92 | 86 |
| SMPTE-C RGB | D65 | 0.5261 | 0.4199 | 0.4092 | 134 | 107 | 104 |
| sRGB | D65 | 0.5246 | 0.4233 | 0.4098 | 134 | 108 | 105 |
| Wide Gamut RGB | D50 | 0.4875 | 0.4326 | 0.4109 | 124 | 110 | 105 |

| Dye Conc. | Intensity (Plain) | Intensity (Twill) | Intensity (M. Twill) |
|---|---|---|---|
| 0.25 | 194.656 | 199.113 | 200.631 |
| 0.5 | 188.669 | 191.246 | 191.7 |
| 0.75 | 185.828 | 186.492 | 186.726 |
| 1 | 182.218 | 183.356 | 184.989 |
| 1.5 | 179.798 | 180.277 | 180.537 |
| 2 | 175.681 | 176.884 | 176.851 |
| 3 | 174.65 | 176.792 | 177.01 |
| 4 | 173.182 | 173.664 | 173.835 |
| 5 | 170.434 | 172.941 | 173.575 |
| 6 | 170.315 | 171.017 | 170.746 |

| Expt. R1 | Expt. G1 | Expt. B1 | Sample | Dye A Conc. | Dye B Conc. | Dye C Conc. |
|---|---|---|---|---|---|---|
| 244.94 | 245.19 | 245.07 | White plate Start | 0 | 0 | 0 |
| 212.67 | 215.70 | 220.67 | Plain | 0 | 0 | 0 |
| 221.82 | 224.30 | 229.30 | Twill | 0 | 0 | 0 |
| 222.30 | 223.41 | 228.29 | M.Twill | 0 | 0 | 0 |
| 225.8854 | 183.092725 | 161.5266 | | 1 | | |
| 228.9698 | 171.01615 | 145.9466 | | 2 | | |
| 231.4409 | 157.77435 | 128.4438 | | 3 | | |
| 229.0542 | 151.03805 | 120.8743 | | 4 | | |
| 132.9036 | 187.883875 | 199.5574 | | | 1 | |
| 103.2905 | 171.487225 | 186.7164 | | | 2 | |
| 81.85803 | 154.90475 | 171.7595 | | | 3 | |
| 68.36905 | 147.47215 | 165.4398 | | | 4 | |
| 226.5088 | 143.528725 | 168.8932 | | | | 1 |
| 237.1594 | 109.479525 | 143.0099 | | | | 2 |
| 237.0265 | 93.90495 | 128.7294 | | | | 3 |
| 237.1948 | 76.637475 | 114.6642 | | | | 4 |
| 66.98825 | 86.420775 | 68.52315 | | 1 | 1 | |
| 42.9088 | 54.638625 | 40.33238 | | 2 | 2 | |
| 32.21978 | 40.2767 | 31.8978 | | 3 | 3 | |
| 33.49718 | 37.416775 | 31.14613 | | 4 | 4 | |
| 236.8776 | 47.72975 | 53.10033 | | 1 | | 1 |
| 228.1448 | 32.008575 | 40.05553 | | 2 | | 2 |
| 219.2528 | 22.077875 | 32.17638 | | 3 | | 3 |
| 216.452 | 20.80095 | 30.01298 | | 4 | | 4 |
| 75.47473 | 56.261325 | 81.50173 | | | 1 | 1 |
| 51.27668 | 37.812475 | 56.64295 | | | 2 | 2 |
| 40.11753 | 31.79935 | 45.60925 | | | 3 | 3 |
| 34.06043 | 26.35805 | 38.14755 | | | 4 | 4 |
| 122.5145 | 90.82425 | 96.30858 | | 0.5 | 0.5 | 0.5 |
| 88.75988 | 60.102025 | 62.1925 | | 1 | 1 | 1 |
| 28.35833 | 33.9254 | 37.27445 | | 2 | 2 | 2 |
| 245.04 | 245.13 | 245.36 | White plate end | | | |

Dye A: Ultra-RGB Carmen; Dye B: Ultra-RGB Navy Blue; Dye C: Ultra-RGB Red.

| D65_64 | 3 coeff | 8 coeff | 11 coeff | 20 coeff | 23 coeff |
|---|---|---|---|---|---|
| DE Avg | 2.3401 | 1.5 | 1.3221 | 0.4106 | 0.3072 |
| DE Max | 4.0535 | 2.72 | 2.9029 | 1.0776 | 1.0373 |
| DE Min | 0.8291 | 0.4039 | 0.0873 | 0.1007 | 0.0595 |
| DE std | 0.848 | 0.7361 | 0.7566 | 0.2691 | 0.2486 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rout, N.; Hu, J.; Baciu, G.; Pattanaik, P.; Nakkeeran, K.; Khandual, A. Color and Texture Analysis of Textiles Using Image Acquisition and Spectral Analysis in Calibrated Sphere Imaging System-II. Electronics 2023, 12, 2135. https://doi.org/10.3390/electronics12092135
