1. Introduction

Converting color images to grayscale is a significant technique in the field of image processing. This transformation may seem simple, but the value and wide range of applications it encompasses should not be overlooked. Color images are typically composed of three color channels: red, green, and blue. Converting them to grayscale simplifies these into a single channel representing luminance. This simplification plays a key role in reducing data complexity, thereby greatly enhancing the efficiency of image processing and computation.

Grayscale images hold unique application value across multiple domains [1]. Their importance is particularly evident in tasks such as pattern recognition [2], moving target tracking [3], and image segmentation [4], where complex color information might interfere with the discernment of critical details. In the field of medical imaging [5,6], grayscale images are widely used as they enhance the visibility of structural details, aiding physicians in making more accurate diagnoses. Similarly, in satellite imagery and aerial photography [7], grayscale images are favored for their high clarity and contrast, providing a clearer perspective for analysis and interpretation. Additionally, grayscale images have a unique aesthetic value in the art world [8]. They can convey rich emotions and textures in a simple form, something that is often challenging for color images. In cutting-edge areas of computer vision and machine learning [9,10], the role of grayscale images is indispensable. These provide algorithms with a simplified form of visual data, making the processing more efficient while still retaining the essential structure and texture information of the images. This simplification not only improves the efficiency of algorithm training and reduces computational burden but often leads to significant improvements in the performance of machine learning models.

Existing decolorization methods primarily fall into two categories: global and local methods. Global methods, such as basic grayscale conversion and luminance-based approaches, apply a uniform transformation across the entire image. While these methods are computationally efficient, they often fail to preserve local image features like textures and structures, leading to a loss of detail in areas with subtle color differences. Local methods, including edge-aware decolorization and region-based techniques, focus on preserving edge information and regional contrasts. However, these methods can sometimes overemphasize edges or create artifacts, thereby distorting the texture and structural integrity of the original image. Moreover, many of these local methods are computationally intensive, making them less suitable for real-time applications.

Both global and local methods often struggle to balance the preservation of local details with overall smoothness, resulting in the loss of texture and structural information. This is because these existing decolorization techniques rely on simple linear transformations or fixed mappings, failing to fully consider the complex relationships between color channels and their impact on image features. For instance, many existing methods use luminance-based techniques, converting color images to grayscale based on brightness values. While effective in certain scenarios, these methods overlook chromatic information, which plays a crucial role in preserving texture details and structural information. Consequently, these methods can produce faded images and noticeable artifacts, such as color bleed or loss of fine details. Furthermore, most of these methods treat the three color channels (red, green, blue) as independent entities, disregarding their interrelations. This approach overlooks the inherent interdependency between channels, leading to disjointed handling of texture and structural information.

In summary, the main limitations of current color image grayscale conversion methods lie in information loss and the blurring of details. The decolorization method based on weighted averaging simplifies multiple color channels of a color image into a single grayscale channel. This simplification often results in the loss of a large amount of color information, making the converted grayscale image lack the rich details, color layers, and contrast of the original image. This loss of information not only reduces the visual effect of the image, but may also have a negative impact on the accuracy of subsequent image processing and analysis. In addition, existing decolorization methods only consider brightness information during the decolorization process, ignoring the interaction and correlation between color channels, often resulting in blurring and confusion of textures and details. In complex image scenes with rich natural textures or multiple similar color regions, this challenge is particularly evident, making it difficult to accurately distinguish the boundaries and details of different regions after decolorization, which in turn poses challenges for image segmentation, feature extraction, and object recognition tasks. Although there are many approaches that attempt to address these two major limitations, there has been no good solution due to the inherent complexity of balancing local detail preservation and global image integrity.

This paper addresses the shortcomings of existing decolorization methods, which fail to adequately maintain both local detail features and global smooth features simultaneously. It proposes a quaternion-based contrast-preserving decolorization method with singular value adaptive weighting, which more effectively preserves key information and thus enhances the quality and clarity of grayscale images. This method utilizes quaternion theory to overcome the limitations of existing color image processing methods that neglect the correlation between the three channels of color images, failing to maintain global smooth features. It also uses the theory of low-rank approximation decomposition of singular values to overcome the limitations of existing color image processing methods that fail to maintain local detail features of color images effectively. The contributions of this paper are primarily in the following four aspects:

- (1)

Proposing a contrast-preserving decolorization algorithm based on quaternion chromatic distance.

- (2)

Proposing a singular value-weighted fusion decolorization algorithm based on low-rank matrix approximation.

- (3)

Proposing an adaptive singular value-weighted fusion strategy to combine the above two algorithms.

- (4)

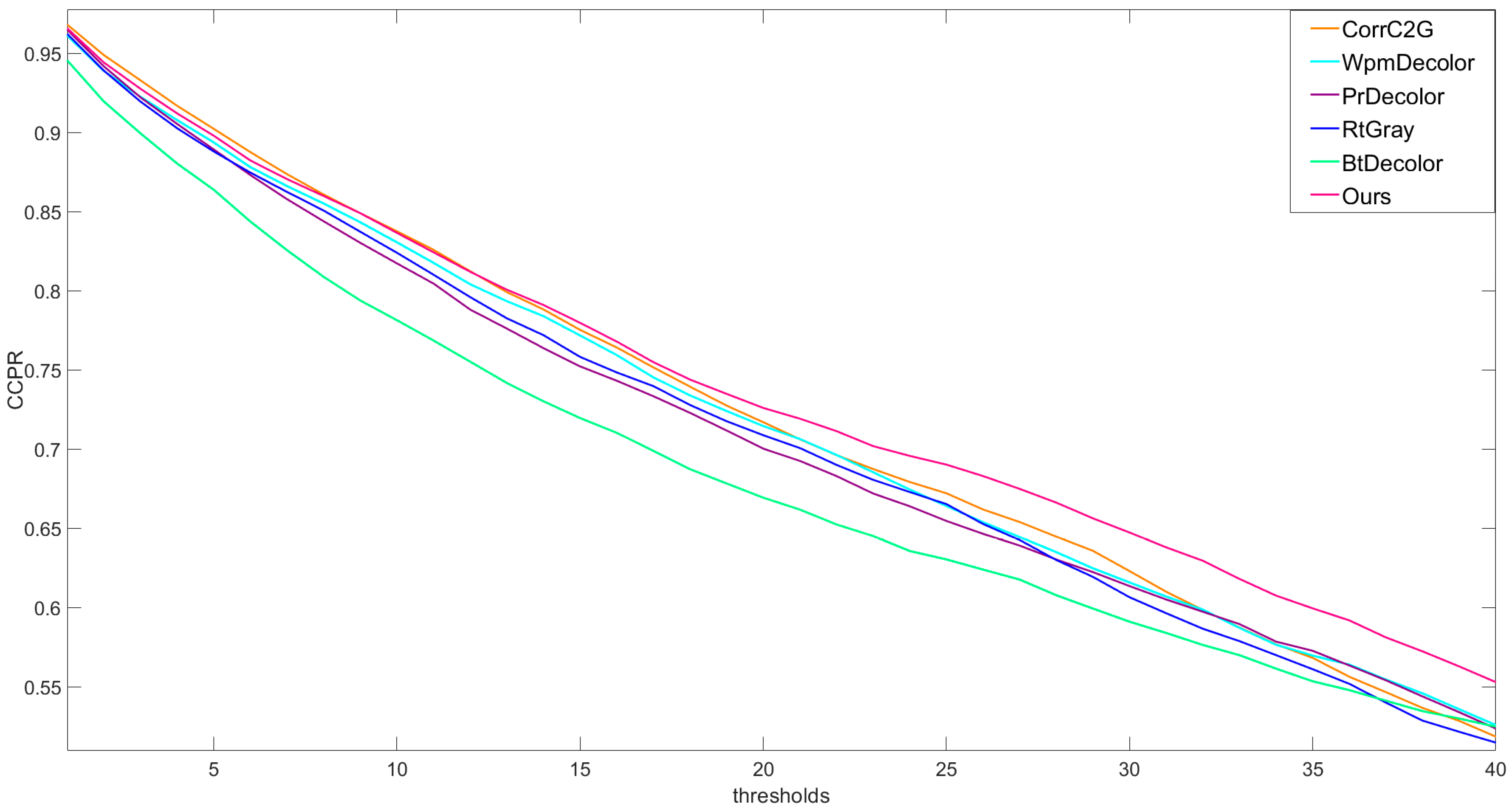

Conducting extensive experimental validation of the proposed decolorization method. The experimental results show that the quaternion-based contrast-preserving decolorization method with singular value adaptive weighting proposed in this paper achieves excellent results in both subjective visual perception and objective evaluation metrics.

This paper is organized as follows: In Section 2, related studies on image decolorization are briefly introduced. Section 3 introduces the decolorization method based on quaternion chromaticity contrast preservation. Section 4 introduces the decolorization method based on singular value adaptive weighted fusion. Section 5 introduces the strategy of fusing the two decolorization methods to obtain the final proposed singular value adaptive weighted quaternion chromaticity contrast preservation decolorization method. Section 6 describes the experimental results and analysis. Finally, we provide our conclusions in Section 7.

2. Related Work

Image decolorization is the conversion of a three-channel color image into a single-channel grayscale image. With the advantages of low data redundancy and fast processing, grayscale images can be used for the efficient calculation of image gradient information. However, image decolorization reduces the dimensionality of the input image and therefore cannot preserve the full detail information of the original color image [11]. To retain as much information as possible from the original color image, a plethora of decolorization methods have been proposed.

Traditional image decolorization algorithms typically convert the RGB color space of the input image to another color space and then extract the luminance channel as the final grayscale image [12]. These methods are unable to distinguish between areas of the picture that have distinct colors but the same luminance. In addition to the traditional methods, researchers have proposed a variety of image decolorization algorithms that better preserve the contrast, structure and other feature information of the original color image in the resulting grayscale image [13]. Depending on whether the same mapping function is applied to all pixels of the input image, these algorithms can be classified into global mapping methods and local mapping methods.
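The limitation of luminance-only conversion noted above can be illustrated with a minimal sketch. It assumes the Rec. 601 luma weights as a stand-in for "the luminance channel" (the text does not name a specific color space), and the names `REC601` and `luminance` are hypothetical. Two clearly different colors are constructed so that they map to the same gray value:

```python
import numpy as np

# Rec. 601 luma weights; an assumed stand-in for the luminance channel.
REC601 = np.array([0.299, 0.587, 0.114])

def luminance(rgb):
    """Weighted-sum luminance of an RGB triple or an H x W x 3 array."""
    return np.asarray(rgb, dtype=float) @ REC601

red = np.array([255.0, 0.0, 0.0])
# Pick the green intensity whose luminance equals that of pure red.
green = np.array([0.0, 255.0 * REC601[0] / REC601[1], 0.0])

gray_red = luminance(red)
gray_green = luminance(green)
color_distance = np.linalg.norm(red - green)  # large in RGB space

print(gray_red, gray_green, color_distance)
```

Both pixels receive the same gray value although they are far apart in RGB space, so an edge between a red and a green region of this kind would vanish after conversion.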

Global mapping methods apply the same transformation to all pixels of a color image. Kuk et al. [14] considered both local and global contrast and measured contrast using gradient fields. Grundland et al. [15] proposed a dimensionality reduction analysis method that achieves real-time performance using Gaussian pairing for image sampling and principal component analysis. Song et al. [16] presented a global energy function that transforms the color-to-grayscale problem into a supervised dimensionality reduction and regression problem. Lu et al. [17] developed a second-order multivariate parametric model and minimized an energy function to maintain image contrast. Zhou et al. [18] proposed a visual contrast model based on saliency. Chen et al. [19] used gradient and saliency in the grayscale conversion to preserve the features of local and global visual perception. Because the same mapping is applied to all pixels, the local features of the color image are likely to be lost after decolorization.

In contrast with the global methods, local mapping methods use different mapping functions for different pixels. Bala et al. [12] introduced high-frequency chromaticity information into the luminance channel, which locally preserved the differences between adjacent colors. Neumann et al. [20] solved the gradient field inconsistency problem by treating color and luminance as gradient fields. Ancuti et al. [21] improved the matching performance using local operators, which avoided the use of color quantization and the corruption of gradient information.

The grayscale image obtained by a local mapping method can maintain part of the structural information and local contrast of the image. However, these methods often map pixels unevenly, which leads to halos and noise in the grayscale results. Moreover, local methods often require a large amount of computation.

In the field of image processing, mixed methods have addressed both the global structure and the local details of color images. Liu and Zhang [22] demonstrated the efficacy of a fully convolutional network that integrates a diverse range of features, including global and local semantics as well as exposure aspects. This approach marks a significant stride in capturing the essence of color images in a comprehensive manner. Yu et al. [23] developed a two-stage decolorization method involving histogram equalization and local variance maximization. Zhang and Wang [24] introduced a parametric model combining image entropy with the Canny edge retention ratio for decolorization. Additionally, Yu et al. [25] utilized an enhanced non-linear global mapping method for grayscale conversion, followed by a detail-enhancing algorithm based on rolling guided filtering.

3. Quaternion Chromaticity Contrast Preserving Decolorization

Many studies have shown that using the theory and methods of quaternions to study color image processing can overcome many of the shortcomings of existing color image processing methods [26,27,28]. With the quaternion representation, a color pixel with three components can be represented as a whole, and the operation rules of quaternions can be used to process color images without destroying the correlation between the three channels of a color pixel. A color pixel $q$ with three channels $r$, $g$ and $b$ can be represented as a pure quaternion:

$$q = r\,i + g\,j + b\,k \tag{1}$$

The main diagonal of the pure quaternion space is defined as the grayscale line, because all points on this line correspond to grayscale pixels. Based on the concept of the grayscale line, Sangwine et al. [29] derived and analyzed the rotation theory of quaternions. According to the rotation theory of quaternions, the unit quaternion along the grayscale line and its conjugate quaternion can be expressed as:

$$\mu = \frac{i + j + k}{\sqrt{3}}, \qquad \mu^{*} = -\frac{i + j + k}{\sqrt{3}} \tag{2}$$

Using the related operations of quaternions, the result of $\mu q \mu^{*}$ represents the new quaternion obtained by rotating the quaternion $q$ by 180 degrees around the grayscale line. Using geometric calculation rules, the projection of any quaternion $q$ on the grayscale line can be obtained as $q_l$:

$$q_l = \frac{1}{2}\left(q + \mu q \mu^{*}\right) \tag{3}$$

The grayscale line represents the brightness level of the color pixel, so $q_l$ can represent the brightness component of the color pixel. In addition to the brightness component, the color pixel also contains a chromaticity component. Because, in the quaternion space, the color pixel and its brightness component are both represented by vectors, the color pixel can be regarded as the sum of the brightness component and the chromaticity component, that is,

$$q = q_l + q_c \tag{4}$$

where $q_c$ represents the chromaticity component of the color pixel. According to Formulas (3) and (4), we can obtain the following formula for calculating the chromaticity component of a color pixel:

$$q_c = q - q_l = \frac{1}{2}\left(q - \mu q \mu^{*}\right) \tag{5}$$

Therefore, the chromaticity difference between two color pixels $q_1$ and $q_2$ can be calculated by the following formula:

$$\Delta q_c = q_{c1} - q_{c2} \tag{6}$$

where $q_{c1}$ represents the chromaticity component of color pixel $q_1$, and $q_{c2}$ represents the chromaticity component of color pixel $q_2$. It is clear that the chromaticity differences calculated by Equation (6) are all quaternions, and cannot be directly used for distance measurement, as distance is a scalar. A common approach is to use the magnitude of the quaternion to represent the distance, that is, the chromaticity distance $d(q_1, q_2)$ between two color pixels can be expressed as:

$$d(q_1, q_2) = \frac{\sqrt{6}}{4}\,\left|q_{c1} - q_{c2}\right| \tag{7}$$

The constant in Formula (7) is used to normalize the distance, ensuring that the maximum value of the chromaticity distance is 255. For 8-bit channels, the largest chromaticity difference occurs between complementary primaries such as red and cyan and has magnitude $170\sqrt{6}$, which the constant scales to 255. This ensures that the chromaticity distance has the same range as the distance between single-channel grayscale pixels.
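The decomposition of a color pixel into brightness and chromaticity components can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes the unit quaternion along the grayscale line is (i + j + k)/√3, that channels are 8-bit, and that the normalizing constant is √6/4 (the value that makes the largest distance equal to 255 under those assumptions). The function names `qmul`, `pure`, `luminance_part`, `chroma_part` and `chroma_distance` are hypothetical.

```python
import numpy as np

def qmul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])

# Assumed unit quaternion along the grayscale line and its conjugate.
MU = np.array([0.0, 1.0, 1.0, 1.0]) / np.sqrt(3.0)
MU_CONJ = MU * np.array([1.0, -1.0, -1.0, -1.0])

def pure(rgb):
    """Represent an RGB triple as the pure quaternion r*i + g*j + b*k."""
    r, g, b = rgb
    return np.array([0.0, r, g, b], dtype=float)

def luminance_part(q):
    """Projection onto the grayscale line: (q + mu q mu*) / 2."""
    return 0.5 * (q + qmul(qmul(MU, q), MU_CONJ))

def chroma_part(q):
    """Chromaticity component: what remains after removing the projection."""
    return q - luminance_part(q)

def chroma_distance(rgb1, rgb2):
    """Magnitude of the chromaticity difference, scaled so the maximum is 255."""
    diff = chroma_part(pure(rgb1)) - chroma_part(pure(rgb2))
    return np.sqrt(6.0) / 4.0 * np.linalg.norm(diff)

print(luminance_part(pure((255, 0, 0))))           # equal i, j, k parts
print(chroma_distance((255, 0, 0), (0, 255, 255)))  # red vs. cyan
print(chroma_distance((10, 10, 10), (200, 200, 200)))  # two grays
```

The projection of a pixel turns out to be its channel mean placed on all three imaginary axes, so two gray pixels have zero chromaticity distance regardless of how much their brightness differs.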

This article uses this chromaticity distance formula to improve the real-time contrast preserving grayscale method proposed by Lu et al. [17] and proposes a contrast preserving grayscale method based on quaternion chromaticity distance. The specific implementation process is as follows:

Step 1: Construct an objective function for contrast preservation:

where

represents the input color image,

is the grayscale value corresponding to the three channels of the color image;

and

represent the positions of the two pixels;

represents a specified region;

represents the grayscale contrast between the two pixels after grayscale processing;

represents the chromaticity contrast between the two pixels;

is a given constant used to control the color distribution in the color image;

is a weight value determined according to the following formula:

Step 2: After reducing the image to a size of 64 × 64 using nearest neighbor interpolation, the color image is converted to grayscale according to the given combination coefficients $(w_r, w_g, w_b)$. The grayscale conversion can be represented by the following formula:

$$g = w_r I_r + w_g I_g + w_b I_b \tag{10}$$

where $I_r$, $I_g$ and $I_b$ are the three channels of the color image.

Step 3: Select the optimal combination coefficients as the coefficients for grayscale conversion of the color image. The optimal combination coefficients are the set of coefficients that minimizes the value of the objective function, which can be expressed mathematically as:
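The three steps above can be sketched as a small grid search. This is an illustration under several assumptions rather than the method as published: the candidate coefficients are taken to be non-negative, to sum to 1, and to lie on a 0.1 grid (as in the method of Lu et al. that the text builds on); the energy is a simplified Lu-style bimodal likelihood with equal weights on both modes; and `sigma=0.05` is a hypothetical value. All function names are invented for this sketch.

```python
import numpy as np

def candidate_weights(step=0.1):
    """Enumerate non-negative (w_r, w_g, w_b) on a grid that sum to 1."""
    n = int(round(1.0 / step))
    return [(i / n, j / n, (n - i - j) / n)
            for i in range(n + 1) for j in range(n + 1 - i)]

def downsample_nearest(img, size=64):
    """Nearest-neighbor resize of an H x W x 3 array to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]

def chroma_distance_map(a, b):
    """Chromaticity distance between corresponding pixels (max 255, 8-bit)."""
    diff = a - b
    diff = diff - diff.mean(axis=-1, keepdims=True)  # drop the gray-line part
    return np.sqrt(6.0) / 4.0 * np.linalg.norm(diff, axis=-1)

def energy(gray, color, sigma=0.05):
    """Assumed contrast-preserving energy over 4-connected neighbor pairs."""
    pairs = [
        (gray[1:] - gray[:-1], color[1:], color[:-1]),              # vertical
        (gray[:, 1:] - gray[:, :-1], color[:, 1:], color[:, :-1]),  # horizontal
    ]
    total = 0.0
    for dg, a, b in pairs:
        dg = dg / 255.0
        delta = chroma_distance_map(a, b) / 255.0
        # Either sign of the gray contrast may match the chromatic contrast.
        lik = (np.exp(-(dg - delta) ** 2 / (2 * sigma ** 2))
               + np.exp(-(dg + delta) ** 2 / (2 * sigma ** 2)))
        total -= np.log(lik + 1e-12).sum()
    return total

def best_weights(img, sigma=0.05):
    """Return the candidate coefficients with the lowest energy."""
    small = downsample_nearest(np.asarray(img, dtype=float))
    return min(candidate_weights(),
               key=lambda w: energy(small @ np.array(w), small, sigma))

def decolorize(img, weights):
    """Apply the selected linear combination to the full-size image."""
    return np.asarray(img, dtype=float) @ np.array(weights)
```

With a 0.1 grid there are only 66 candidate triples, and each is evaluated on a 64 × 64 thumbnail, which is why this kind of search stays cheap; the selected coefficients are then applied once to the full-resolution image with `decolorize`.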

4. Singular Value Adaptive Weighted Refusion Decolorization

Singular value decomposition (SVD) is a numerical matrix analysis tool that is widely used in image processing [30], typically in image quality evaluation, image compression, image watermarking, and other fields. For a real matrix $A$ with size $m \times n$, the singular value decomposition can be expressed as:

$$A = U \Sigma V^{T} \tag{12}$$

where $U$ is an $m \times m$ orthogonal matrix, $V$ is an $n \times n$ orthogonal matrix, and $\Sigma$ is an $m \times n$ diagonal matrix with singular values on the diagonal and 0 elsewhere. The singular values are arranged in non-increasing order down the diagonal. In image processing tasks based on singular value decomposition, the singular values in the $\Sigma$ matrix are separated, and the corresponding rows and columns of the $U$ matrix and $V$ matrix are separated accordingly, to achieve matrix reorganization for extracting important features in the image. Let $U = [u_1, u_2, \ldots, u_m]$ and $V = [v_1, v_2, \ldots, v_n]$. According to the rules of matrix operations, the matrix $A$ can be further decomposed into the following linear combination of vector products:

$$A = \sum_{i=1}^{p} \sigma_i u_i v_i^{T}, \qquad p = \min(m, n) \tag{13}$$

where $\sigma_i$ represents the $i$th singular value and satisfies the size relationship $\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_p \ge 0$. From the above formula, it can be seen that each singular value has its corresponding energy contribution when the matrix is restructured. In practical scenarios, the larger the singular value, the greater the energy contribution, and the more features are retained after restructuring. A single-channel image $I$ can be viewed as a matrix. Let $I_n = \sigma_n u_n v_n^{T}$; according to Formula (13), we can obtain the following result:

$$I = \sum_{n=1}^{p} I_n \tag{14}$$

where $I_n$ represents the $n$th restructuring matrix of image $I$. The amount of information contained in the original image is determined by the size of the singular value $\sigma_n$ corresponding to the restructuring matrix. The larger the singular value, the greater the information energy, and the richer the features contained in that part. For color images, the size of the singular value for each channel represents the proportion of information energy in the entire color image. Based on this analysis, the proportion of the singular value size can be used as an adaptive weight for the fusion of each restructuring matrix. The calculation formula for the adaptive weight can be expressed as

where,

represent the

ith singular value of the

r,

g and

b channels, respectively. Using this adaptive weight, we can obtain the adaptive weighted reconstruction expression for the three channels of the color image:

where

are the reconstructed matrices of the

ith singular value of the

r,

g and

b channels, respectively, and

is the adaptive weight described above. Finally, the resulting channel adaptive weighting matrix is fused to obtain the final grayscale image

:

It is clear that the grayscale result obtained by this method is based on the distribution characteristics of information energy at the pixel level for three-channel fusion, so the resulting grayscale result can well preserve the local feature information of the original color image.
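The fusion described in this section can be sketched with NumPy as follows. Because the weight and fusion formulas are not reproduced here, the sketch makes explicit assumptions: the adaptive weight of a channel at index i is taken to be that channel's i-th singular value divided by the sum of the i-th singular values of all three channels, each channel is rebuilt as the weighted sum of its rank-one matrices, and the three weighted channels are added to form the grayscale image. The name `svd_adaptive_gray` and the `eps` guard are hypothetical.

```python
import numpy as np

def svd_adaptive_gray(img, eps=1e-12):
    """Fuse the three channels using singular-value shares as weights.

    img: H x W x 3 array. Returns an H x W array.
    """
    img = np.asarray(img, dtype=float)
    # Thin SVD of each channel: channel = u @ diag(s) @ vt.
    factors = [np.linalg.svd(img[..., c], full_matrices=False)
               for c in range(3)]
    sigma = np.stack([s for _, s, _ in factors])  # shape (3, p)
    # Assumed weight: share of each channel in the i-th singular value.
    weights = sigma / (sigma.sum(axis=0, keepdims=True) + eps)

    gray = np.zeros(img.shape[:2])
    for (u, s, vt), w in zip(factors, weights):
        # Equivalent to sum_i w_i * s_i * outer(u_i, v_i), without
        # materializing every rank-one matrix.
        gray += (u * (w * s)) @ vt
    return gray
```

Under these assumptions the weights at each index sum to 1 across the channels, so an image whose three channels are identical is returned unchanged. The output is not guaranteed to stay within [0, 255] for arbitrary inputs, so clipping with `np.clip` before display may be needed.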

7. Conclusions

In this work, we introduce a contrast-preserving image decolorization technique utilizing singular value decomposition (SVD). This method is the first to adopt the idea of image decomposition for color image decolorization. Through a novel application of SVD, we determine the energy weights of a three-channel image, thereby capturing its subtle local details based on the energy contributions of singular values. Additionally, our method integrates global weights derived from a quaternion chromatic distance-based decolorization approach, harnessing the benefits of global mapping in the decolorization process. The proposed method balances the reduction of image dimensionality with the preservation of intricate details. This balance makes it particularly advantageous for fast-paced and accuracy-demanding applications such as real-time monitoring, real-time image editing, and advanced image recognition systems. In these contexts, the preservation of structural details and contrast is paramount, and our approach processes images while minimizing the loss of these critical visual features.

Furthermore, the potential applications of this method extend to digital media and web design. Its efficacy in maintaining the visual attractiveness and informational richness of the original color images during grayscale conversion makes it a valuable tool in these fields. Additionally, given its proficiency in conserving essential visual features, this technique is also applicable to computer vision and digital imaging tasks, such as automatic image analysis and processing, where the demands on image quality are particularly high.

In summary, the method presented in this paper offers a technically sound and efficient solution for high-quality grayscale image conversion across various practical applications. This innovation is not merely a significant stride in image processing research but also a practical asset for diverse real-world applications.