How to Design and Evaluate mHealth Apps? A Case Study of a Mobile Personal Health Record App

Abstract

1. Introduction

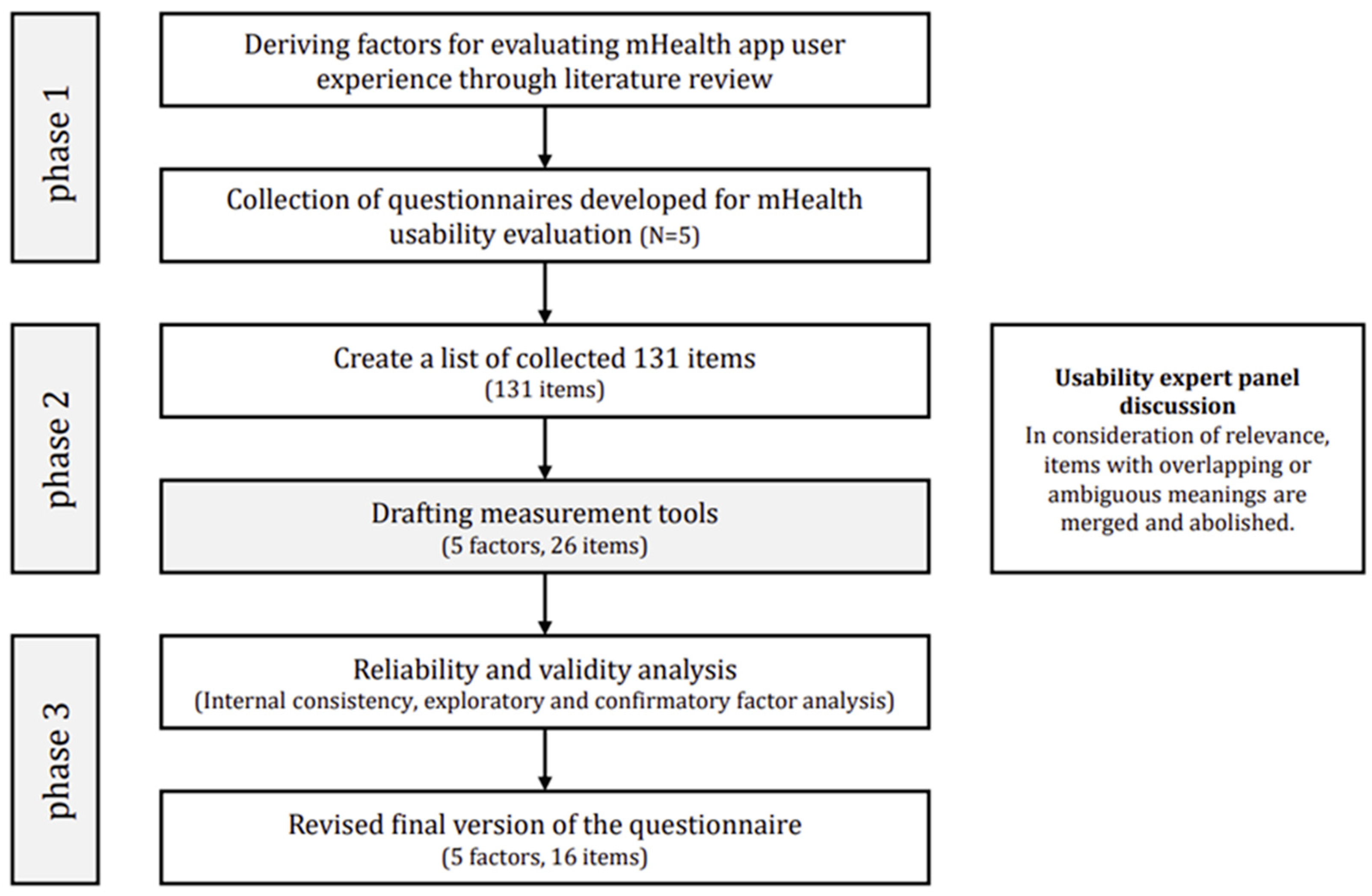

2. Materials and Methods

2.1. Literature Reviews on Previous Mobile Health App Measurements

2.2. Derivation of User Experience Evaluation Scale Factors

2.3. Development of the User Experience Evaluation Scale for mHealth Apps

2.4. Validation Study for the User Experience Evaluation Scale for mHealth Apps

2.4.1. Study Participants

2.4.2. Study Design

3. Results

3.1. Validation Study for the User Experience Evaluation Scale for mHealth Apps

3.1.1. Results of Exploratory Factor Analysis

3.1.2. Results of Confirmatory Factor Analysis

3.1.3. User Experience Evaluation Scale for mHealth Apps

3.2. Case Study

3.2.1. Usability Testing of mHealth Apps

3.2.2. Prototyping a PHR App That Reflects User Experience

3.2.3. User Testing of the Improved PHR App

4. Discussion

4.1. Principal Results

4.2. Limitations

4.2.1. Review of a Validation Study of the User Experience Evaluation Scale for mHealth Apps

4.2.2. Review of Case Study Results to Improve PHR Apps

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| mHealth | Mobile health |
| PHRs | Personal health records |
| Questionnaires | |
| SUS | System Usability Scale |
| PSSUQ | Post-Study System Usability Questionnaire |
| Health-ITUES | Health Information Technology Usability Evaluation Scale |
| MARS | Mobile Application Rating Scale |
| uMARS | Mobile Application Rating Scale, User Version |
| MAUQ | mHealth App Usability Questionnaire |
| USE | Usefulness, Satisfaction, and Ease of Use |
| SUMI | Software Usability Measurement Inventory |
| Statistical terminology | |
| EFA | Exploratory factor analysis |
| CFA | Confirmatory factor analysis |
| KMO | Kaiser–Meyer–Olkin index |
| TLI | Tucker–Lewis index |
| SE | Standard error |
| CR | Composite reliability |
| AVE | Average variance extracted |
| df | Degrees of freedom |
| CFI | Comparative fit index |
| RMSEA | Root mean square error of approximation |
| CMV | Common method variance |

References

- World Health Organization. WHO Guideline: Recommendations on Digital Interventions for Health System Strengthening; World Health Organization: Geneva, Switzerland, 2019.

- Schnall, R.; Bakken, S.; Rojas, M.; Travers, J.; Carballo-Dieguez, A. mHealth technology as a persuasive tool for treatment, care and management of persons living with HIV. AIDS Behav. 2015, 19 (Suppl. S2), 81–89.

- Aitken, M.; Lyle, J. Patient Adoption of mHealth: Use, Evidence and Remaining Barriers to Mainstream Acceptance; IMS Institute for Healthcare Informatics: Parsippany, NJ, USA, 2015.

- Cortez, N.G.; Cohen, I.G.; Kesselheim, A.S. FDA regulation of mobile health technologies. N. Engl. J. Med. 2014, 371, 372–379.

- Byambasuren, O.; Sanders, S.; Beller, E.; Glasziou, P. Prescribable mHealth apps identified from an overview of systematic reviews. NPJ Digit. Med. 2018, 1, 12.

- Cho, H.; Yen, P.Y.; Dowding, D.; Merrill, J.A.; Schnall, R. A multi-level usability evaluation of mobile health applications: A case study. J. Biomed. Inform. 2018, 86, 79–89.

- Emerson, M.R.; Buckland, S.; Lawlor, M.A.; Dinkel, D.; Johnson, D.J.; Mickles, M.S.; Fok, L.; Watanabe-Galloway, S. Addressing and evaluating health literacy in mHealth: A scoping review. mHealth 2022, 8, 33.

- Nutbeam, D. Health promotion glossary. Health Promot. 1986, 1, 113–127.

- Pelikan, J.M.; Ganahl, K.; Roethlin, F. Health literacy as a determinant, mediator and/or moderator of health: Empirical models using the European Health Literacy Survey dataset. Glob. Health Promot. 2018, 25, 1757975918788300.

- Smith, B.; Magnani, J.W. New technologies, new disparities: The intersection of electronic health and digital health literacy. Int. J. Cardiol. 2019, 292, 280–282.

- Kim, H.; Xie, B. Health literacy in the eHealth era: A systematic review of the literature. Patient Educ. Couns. 2017, 100, 1073–1082.

- Caligtan, C.A.; Dykes, P.C. Electronic health records and personal health records. Semin. Oncol. Nurs. 2011, 27, 218–228.

- Yamin, C.K.; Emani, S.; Williams, D.H.; Lipsitz, S.R.; Karson, A.S.; Wald, J.S.; Bates, D.W. The digital divide in adoption and use of a personal health record. Arch. Intern. Med. 2011, 171, 568–574.

- Heart, T.; Ben-Assuli, O.; Shabtai, I. A review of PHR, EMR and EHR integration: A more personalized healthcare and public health policy. Health Policy Technol. 2017, 6, 20–25.

- Cho, J.; Park, D.; Lee, H.E. Cognitive factors of using health apps: Systematic analysis of relationships among health consciousness, health information orientation, eHealth literacy, and health app use efficacy. J. Med. Internet Res. 2014, 16, e125.

- Hemsley, B.; Rollo, M.; Georgiou, A.; Balandin, S.; Hill, S. The health literacy demands of electronic personal health records (e-PHRs): An integrative review to inform future inclusive research. Patient Educ. Couns. 2018, 101, 2–15.

- Hajesmaeel-Gohari, S.; Khordastan, F.; Fatehi, F.; Samzadeh, H.; Bahaadinbeigy, K. The most used questionnaires for evaluating satisfaction, usability, acceptance, and quality outcomes of mobile health. BMC Med. Inform. Decis. Mak. 2022, 22, 22.

- Azad-Khaneghah, P.; Neubauer, N.; Miguel Cruz, A.; Liu, L. Mobile health app usability and quality rating scales: A systematic review. Disabil. Rehabil. Assist. Technol. 2021, 16, 712–721.

- Stoyanov, S.R.; Hides, L.; Kavanagh, D.J.; Zelenko, O.; Tjondronegoro, D.; Mani, M. Mobile app rating scale: A new tool for assessing the quality of health mobile apps. JMIR mHealth uHealth 2015, 3, e27.

- Stoyanov, S.R.; Hides, L.; Kavanagh, D.J.; Wilson, H. Development and validation of the user version of the Mobile Application Rating Scale (uMARS). JMIR mHealth uHealth 2016, 4, e72.

- Zhou, L.; Bao, J.; Setiawan, I.M.A.; Saptono, A.; Parmanto, B. The mHealth App Usability Questionnaire (MAUQ): Development and validation study. JMIR mHealth uHealth 2019, 7, e11500.

- Schnall, R.; Cho, H.; Liu, J. Health Information Technology Usability Evaluation Scale (Health-ITUES) for usability assessment of mobile health technology: Validation study. JMIR mHealth uHealth 2018, 6, e4.

- Kim, G.; Kim, H.K.; Shin, Y.; Park, G.; Park, S.; Lee, Y. Analyzing user satisfaction factors for mobile health apps. In Proceedings of the Korean Society of Broadcast Engineers Conference, Online, November 2021; pp. 129–131.

- Kayser, L.; Karnoe, A.; Furstrand, D.; Batterham, R.; Christensen, K.B.; Elsworth, G.; Osborne, R.H. A multidimensional tool based on the eHealth literacy framework: Development and initial validity testing of the eHealth literacy questionnaire (eHLQ). J. Med. Internet Res. 2018, 20, e36.

- Brooke, J. SUS: A “quick and dirty” usability scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; CRC Press: London, UK, 1996.

- Lewis, J.R. Psychometric evaluation of the PSSUQ using data from five years of usability studies. Int. J. Hum. Comput. Interact. 2002, 14, 463–488.

- Muro-Culebras, A.; Escriche-Escuder, A.; Martin-Martin, J.; Roldán-Jiménez, C.; De-Torres, I.; Ruiz-Muñoz, M.; Gonzalez-Sanchez, M.; Mayoral-Cleries, F.; Biró, A.; Tang, W.; et al. Tools for evaluating the content, efficacy, and usability of mobile health apps according to the consensus-based standards for the selection of health measurement instruments: Systematic review. JMIR mHealth uHealth 2021, 9, e15433.

- Yen, P.Y.; Wantland, D.; Bakken, S. Development of a customizable health IT usability evaluation scale. AMIA Annu. Symp. Proc. 2010, 2010, 917–921.

- Terhorst, Y.; Philippi, P.; Sander, L.B.; Schultchen, D.; Paganini, S.; Bardus, M.; Santo, K.; Knitza, J.; Machado, G.C.; Schoeppe, S.; et al. Validation of the Mobile Application Rating Scale (MARS). PLoS ONE 2020, 15, e0241480.

- Yen, P.Y.; Sousa, K.H.; Bakken, S. Examining construct and predictive validity of the Health-IT Usability Evaluation Scale: Confirmatory factor analysis and structural equation modeling results. J. Am. Med. Inform. Assoc. 2014, 21, e241–e248.

- Lund, A.M. Measuring usability with the USE questionnaire. Usability Interface 2001, 8, 3–6.

- Coleman, N. SUMI (Software Usability Measurement Inventory) as a Knowledge Elicitation Tool for Improving Usability; Department of Applied Psychology, University College Cork: Cork, Ireland, 1993.

- Monkman, H.; Kushniruk, A. Applying usability methods to identify health literacy issues: An example using a Personal Health Record. Stud. Health Technol. Inform. 2013, 183, 179–185.

- Wang, Q.; Liu, J.; Zhou, L.; Tian, J.; Chen, X.; Zhang, W.; Wang, H.; Zhou, W.; Gao, Y. Usability evaluation of mHealth apps for elderly individuals: A scoping review. BMC Med. Inform. Decis. Mak. 2022, 22, 317.

- Sharma, S.; Barnett, K.G.; Maypole, J.J.; Mishuris, R.G. Evaluation of mHealth Apps for Diverse, Low-Income Patient Populations: Framework Development and Application Study. JMIR Form. Res. 2022, 6, e29922.

- Voth, M.; Chisholm, S.; Sollid, H.; Jones, C.; Smith-MacDonald, L.; Brémault-Phillips, S. Efficacy, effectiveness, and quality of resilience-building mobile health apps for military, veteran, and public safety personnel populations: Scoping literature review and app evaluation. JMIR mHealth uHealth 2022, 10, e26453.

- Tabachnick, B.G.; Fidell, L.S.; Ullman, J.B. Using Multivariate Statistics; Pearson: Boston, MA, USA, 2007; Volume 5.

- Tavakol, M.; Dennick, R. Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2011, 2, 53–55.

- Hong, S.H. The criteria for selecting appropriate fit indices in structural equation modeling and their rationales. Korean J. Clin. Psychol. 2000, 19, 161–177.

- Bentler, P.M. Comparative fit indexes in structural models. Psychol. Bull. 1990, 107, 238–246.

- Bollen, K.A.; Long, J.S. Testing Structural Equation Models; Sage: Newbury Park, CA, USA, 1993; Volume 154.

- Harman, H.H. Modern Factor Analysis; University of Chicago Press: Chicago, IL, USA, 1976.

- Malhotra, N.K.; Kim, S.S.; Patil, A. Common method variance in IS research: A comparison of alternative approaches and a reanalysis of past research. Manag. Sci. 2006, 52, 1865–1883.

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E.; Tatham, R. Multivariate Data Analysis; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2006; Volume 6.

- Bauer, A.M.; Rue, T.; Munson, S.A.; Ghomi, R.H.; Keppel, G.A.; Cole, A.M.; Baldwin, L.M.; Katon, W. Patient-oriented health technologies: Patients’ perspectives and use. J. Mob. Technol. Med. 2017, 6, 1–10.

- Wong, K.L.; Ong, S.F.; Kuek, T.Y. Constructing a survey questionnaire to collect data on service quality of business academics. Eur. J. Soc. Sci. 2012, 29, 209–221.

- Bolarinwa, O.A. Principles and methods of validity and reliability testing of questionnaires used in social and health science researches. Niger. Postgrad. Med. J. 2015, 22, 195–201.

- Hogarty, K.Y.; Hines, C.V.; Kromrey, J.D.; Ferron, J.M.; Mumford, K.R. The quality of factor solutions in exploratory factor analysis: The influence of sample size, communality, and overdetermination. Educ. Psychol. Meas. 2005, 65, 202–226.

- MacCallum, R.C.; Widaman, K.F.; Zhang, S.; Hong, S. Sample size in factor analysis. Psychol. Methods 1999, 4, 84–99.

- Arrindell, W.A.; Van der Ende, J. An empirical test of the utility of the observations-to-variables ratio in factor and components analysis. Appl. Psychol. Meas. 1985, 9, 165–178.

- Barrett, P.T.; Kline, P. The observation to variable ratio in factor analysis. Personal. Stud. Group Behav. 1981, 1, 23–33.

- Kang, H. A guide on the use of factor analysis in the assessment of construct validity. J. Korean Acad. Nurs. 2013, 43, 587–594.

- Zwick, W.R.; Velicer, W.F. Comparison of five rules for determining the number of components to retain. Psychol. Bull. 1986, 99, 432–442.

- Tang, P.C.; Ash, J.S.; Bates, D.W.; Overhage, J.M.; Sands, D.Z. Personal health records: Definitions, benefits, and strategies for overcoming barriers to adoption. J. Am. Med. Inform. Assoc. 2006, 13, 121–126.

- Hensher, M.; Cooper, P.; Dona, S.W.A.; Angeles, M.R.; Nguyen, D.; Heynsbergh, N.; Chatterton, M.L.; Peeters, A. Scoping review: Development and assessment of evaluation frameworks of mobile health apps for recommendations to consumers. J. Am. Med. Inform. Assoc. 2021, 28, 1318–1329.

| Measurement | Author(s) | Dimensions (Number of Items) | Reliability Testing | Validity Testing |
|---|---|---|---|---|
| Health-ITUES | Yen et al. [28] | Impact (3); Perceived usefulness (9); Perceived ease of use (5); User control (3) | Internal consistency; Inter-rater reliability | Construct validity; Criterion validity |
| MARS | Stoyanov et al. [19]; Terhorst et al. [29] | Engagement (5); Functionality (4); Aesthetics (3); Information (7); App subjective quality (4) | Internal consistency; Inter-rater reliability | Construct validity; Criterion validity |
| uMARS | Stoyanov et al. [20] | Engagement (5); Functionality (4); Aesthetics (3); Information (4); App subjective quality (4) | Internal consistency; Test–retest reliability | None |
| MAUQ | Zhou et al. [21] | Ease of use and satisfaction (8); System information arrangement (6); Usefulness (7) | Internal consistency | Content validity; Construct validity; Criterion validity |

| Factor | Contents |
|---|---|
| Ease of Use and Satisfaction | Evaluates overall usability and satisfaction, such as convenience and learnability. |
| Information Architecture | Evaluates the quality of the interface and interaction. |
| Usefulness | Evaluates whether users achieve results that meet their needs and expectations. |
| Ease of Information | Evaluates whether the design takes the user’s health literacy into account. |
| Aesthetic | Evaluates the app’s overall design and whether its colors, fonts, and font sizes suit the user. |

| Factor (Item) | Factor Loading | Cronbach’s α |
|---|---|---|
| Ease of Use and Satisfaction | | |
| Q1. This app was easy to use. | 0.848 | 0.900 (5 items) |
| Q2. I was able to use all the functions provided by the app. | 0.752 | |
| Q3. It is comfortable to use this app in everyday environments. | 0.806 | |
| Q4. The amount of time involved in using this app fits me. | 0.756 | |
| Q5. Overall, I am satisfied with this app. | 0.860 | |
| Information Architecture | | |
| Q6. Whenever I made a mistake using the app, I could recover easily and quickly. | 0.610 | 0.731 (2 items) |
| Q7. Moving between screens or between functions was consistently possible in the same way. | 0.770 | |
| Usefulness | | |
| Q8. This app has all the functions and capabilities I expect it to have. | 0.829 | 0.920 (5 items) |
| Q9. This app will be useful for my health care. | 0.886 | |
| Q10. This app will help me manage my health effectively. | 0.881 | |
| Q11. This mHealth app provided an acceptable way to receive health care services. | 0.728 | |
| Q12. This app has improved access to medical services. | 0.672 | |
| Ease of Information | | |
| Q13. This app’s medical/health information is well-written, accurate, and relevant to the app’s purpose. | 0.818 | 0.940 (4 items) |
| Q14. The information provided by this app is comprehensive and concise. | 0.859 | |
| Q15. The visual information provided by this app (charts, graphs, images, etc.) is logically and clearly descriptive. | 0.825 | |
| Q16. The information provided by this app was easy to understand. | 0.847 | |
| Aesthetic | | |
| Q17. This app uses suitable colors. | 0.885 | 0.936 (2 items) |
| Q18. I like the menu structure and design of the app, and it’s easy to use. | 0.890 | |
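The third column of the EFA table appears to report Cronbach’s α for each factor, which measures the internal consistency of that factor's items. Below is a minimal sketch of the textbook raw-score formula. It assumes a respondents-by-items matrix of Likert ratings. The four-respondent matrix is hypothetical, not the study's data, so the result only illustrates the computation.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])  # number of items in the factor
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of ratings per item
    item_var_sum = sum(pvariance(item) for item in items)
    total_var = pvariance([sum(row) for row in responses])  # per-respondent totals
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical ratings from four respondents on a two-item factor
# (e.g., items like Q17 and Q18); illustrative values only.
aesthetic = [[6, 6], [5, 6], [7, 7], [4, 5]]
print(round(cronbach_alpha(aesthetic), 3))  # 0.923
```

Population and sample variances give the same α here, because the (n − 1)/n factor cancels in the ratio.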

| Model | χ² | df | p | CFI | TLI | RMSEA |
|---|---|---|---|---|---|---|
| Five-factor model (18 items) | 186 | 125 | <0.001 | 0.944 | 0.931 | 0.084 |
| Five-factor model (16 items) | 111 | 94 | 0.115 | 0.983 | 0.978 | 0.050 |
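If the first numeric column of the model-fit table is read as the χ² statistic, the reported p-values can be cross-checked from χ² and df alone. The sketch below computes the upper-tail chi-square probability using the standard series for the regularized lower incomplete gamma function. This numerical method is a swapped-in choice, not necessarily what the authors' software used. The sketch also prints χ²/df, a ratio often reported alongside CFI, TLI, and RMSEA. Because χ² appears only to the nearest integer, small differences from the printed p-values are expected.

```python
import math


def chi2_sf(x: float, df: int, tol: float = 1e-14, max_iter: int = 10_000) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with df degrees of freedom.

    Computes the regularized lower incomplete gamma P(a, z) with a = df/2 and
    z = x/2 via its power series, then returns 1 - P(a, z).
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a  # n = 0 term of sum z^n / (a (a+1) ... (a+n))
    total = term
    for n in range(1, max_iter + 1):
        term *= z / (a + n)
        total += term
        if term < total * tol:  # remaining terms are negligible
            break
    log_p = a * math.log(z) - z - math.lgamma(a) + math.log(total)
    return 1.0 - math.exp(log_p)


models = {
    "Five-factor model (18 items)": (186, 125),
    "Five-factor model (16 items)": (111, 94),
}
for name, (chi2, df) in models.items():
    print(f"{name}: chi2/df = {chi2 / df:.2f}, p = {chi2_sf(chi2, df):.4f}")
```

With these inputs the 18-item model falls well below p = 0.001, and the 16-item model lands near p ≈ 0.11. Both agree with the table within the rounding of χ².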

| Factor | Item | Estimate | SE | t-Value | Standardized Estimate | CR | AVE |
|---|---|---|---|---|---|---|---|
| Ease of Use and Satisfaction | Q1 | 1.000 | - | - | 0.755 | 0.894 | 0.682 |
| | Q2 | 1.425 | 0.210 | 6.80 *** | 0.767 | | |
| | Q3 | 1.107 | 0.160 | 6.90 *** | 0.775 | | |
| | Q5 | 1.191 | 0.138 | 8.66 *** | 0.984 | | |
| Information Architecture | Q6 | 1.000 | - | - | 0.790 | 0.733 | 0.579 |
| | Q7 | 0.872 | 0.191 | 4.57 *** | 0.731 | | |
| Usefulness | Q8 | 1.000 | - | - | 0.900 | 0.934 | 0.782 |
| | Q9 | 1.089 | 0.075 | 14.63 *** | 0.966 | | |
| | Q10 | 1.247 | 0.086 | 14.52 *** | 0.962 | | |
| | Q12 | 0.761 | 0.110 | 6.94 *** | 0.679 | | |
| Ease of Information | Q13 | 1.000 | - | - | 0.788 | 0.943 | 0.807 |
| | Q14 | 1.034 | 0.112 | 9.21 *** | 0.924 | | |
| | Q15 | 1.037 | 0.111 | 9.31 *** | 0.935 | | |
| | Q16 | 1.170 | 0.125 | 9.34 *** | 0.937 | | |
| Aesthetic | Q17 | 1.000 | - | - | 0.953 | 0.937 | 0.881 |
| | Q18 | 1.019 | 0.127 | 8.03 *** | 0.924 | | |
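The CR and AVE columns follow from the standardized loadings λ through the usual formulas, which assume uncorrelated error terms. For a factor with k items, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. The sketch below recomputes both from the standardized estimates transcribed from the CFA table. With these values it reproduces the reported CR and AVE to three decimals.

```python
def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - x ** 2 for x in loadings)  # error variance of each standardized item
    return s ** 2 / (s ** 2 + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(x ** 2 for x in loadings) / len(loadings)


# Standardized estimates transcribed from the CFA table (16-item model).
standardized = {
    "Ease of Use and Satisfaction": [0.755, 0.767, 0.775, 0.984],
    "Information Architecture": [0.790, 0.731],
    "Usefulness": [0.900, 0.966, 0.962, 0.679],
    "Ease of Information": [0.788, 0.924, 0.935, 0.937],
    "Aesthetic": [0.953, 0.924],
}
for factor, lam in standardized.items():
    cr = composite_reliability(lam)
    ave = average_variance_extracted(lam)
    print(f"{factor}: CR = {cr:.3f}, AVE = {ave:.3f}")
```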

| Construct | Ease of Use and Satisfaction | Information Architecture | Usefulness | Ease of Information | Aesthetic |
|---|---|---|---|---|---|
| Ease of Use and Satisfaction | 0.826 * | - | - | - | - |
| Information Architecture | 0.568 | 0.761 * | - | - | - |
| Usefulness | 0.447 | 0.473 | 0.885 * | - | - |
| Ease of Information | 0.353 | 0.486 | 0.588 | 0.898 * | - |
| Aesthetic | 0.401 | 0.534 | 0.386 | 0.254 | 0.939 * |

Note: Starred diagonal values are the square roots of the AVE; off-diagonal values are correlations between constructs.
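The starred diagonal entries match the square roots of the AVE values from the CFA table (for example, √0.682 ≈ 0.826). This suggests a Fornell–Larcker discriminant-validity check, in which each construct's √AVE should exceed its correlation with every other construct. The criterion is the standard Fornell–Larcker rule, assumed here rather than stated in this excerpt. The sketch below applies it to the AVE values and off-diagonal correlations transcribed from the two tables. Because the inputs are the rounded AVEs, √0.782 gives 0.884 rather than the tabled 0.885.

```python
import math

# AVE values from the CFA table.
AVE = {
    "Ease of Use and Satisfaction": 0.682,
    "Information Architecture": 0.579,
    "Usefulness": 0.782,
    "Ease of Information": 0.807,
    "Aesthetic": 0.881,
}
# Off-diagonal (lower-triangle) correlations between constructs.
CORR = {
    ("Information Architecture", "Ease of Use and Satisfaction"): 0.568,
    ("Usefulness", "Ease of Use and Satisfaction"): 0.447,
    ("Usefulness", "Information Architecture"): 0.473,
    ("Ease of Information", "Ease of Use and Satisfaction"): 0.353,
    ("Ease of Information", "Information Architecture"): 0.486,
    ("Ease of Information", "Usefulness"): 0.588,
    ("Aesthetic", "Ease of Use and Satisfaction"): 0.401,
    ("Aesthetic", "Information Architecture"): 0.534,
    ("Aesthetic", "Usefulness"): 0.386,
    ("Aesthetic", "Ease of Information"): 0.254,
}


def fornell_larcker_violations(ave, corr):
    """Return construct pairs whose |r| is not smaller than both constructs' sqrt(AVE)."""
    return [
        (a, b, r)
        for (a, b), r in corr.items()
        if abs(r) >= min(math.sqrt(ave[a]), math.sqrt(ave[b]))
    ]


for name, value in AVE.items():
    print(f"{name}: sqrt(AVE) = {math.sqrt(value):.3f}")
print("Violations:", fornell_larcker_violations(AVE, CORR))  # Violations: []
```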

| Factor | Item |
|---|---|
| Ease of Use and Satisfaction | 1. This app was easy to use. |
| | 2. I was able to use all the functions provided by the app. |
| | 3. It is comfortable to use this app in everyday environments. |
| | 4. Overall, I am satisfied with this app. |
| Information Architecture | 5. Whenever I made a mistake using the app, I could recover easily and quickly. |
| | 6. Moving between screens or between functions was consistently possible in the same way. |
| Usefulness | 7. This app has all the functions and capabilities I expect it to have. |
| | 8. This app will be useful for my health care. |
| | 9. This app will help me manage my health effectively. |
| | 10. This app has improved access to medical services. |
| Ease of Information | 11. This app’s medical/health information is well-written, accurate, and relevant to the app’s purpose. |
| | 12. The information provided by this app is comprehensive and concise. |
| | 13. The visual information provided by this app (charts, graphs, images, etc.) is logically and clearly descriptive. |
| | 14. The information provided by this app was easy to understand. |
| Aesthetic | 15. This app uses suitable colors. |
| | 16. I like the menu structure and design of the app, and it’s easy to use. |
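Scoring the final 16-item scale amounts to grouping item numbers by factor, as in the table above. The sketch below makes two assumptions for illustration. First, a factor score is the unweighted mean of a respondent's ratings on that factor's items. Second, sample-level factor means, like those reported for the case study, average those scores across respondents. The helper names and the two respondents' ratings are hypothetical.

```python
from statistics import mean

# Final 16-item scale: item numbers grouped by factor.
SCALE = {
    "Ease of Use and Satisfaction": [1, 2, 3, 4],
    "Information Architecture": [5, 6],
    "Usefulness": [7, 8, 9, 10],
    "Ease of Information": [11, 12, 13, 14],
    "Aesthetic": [15, 16],
}


def factor_scores(answers: dict[int, float]) -> dict[str, float]:
    """Unweighted mean of one respondent's ratings within each factor (assumed rule)."""
    return {f: mean(answers[i] for i in items) for f, items in SCALE.items()}


def sample_means(respondents: list[dict[int, float]]) -> dict[str, float]:
    """Average the per-respondent factor scores across the sample."""
    scores = [factor_scores(r) for r in respondents]
    return {f: mean(s[f] for s in scores) for f in SCALE}


# Hypothetical ratings (item number -> rating) from two respondents.
r1 = {1: 6, 2: 5, 3: 6, 4: 5, 5: 4, 6: 5, 7: 5, 8: 6, 9: 6, 10: 4,
      11: 5, 12: 4, 13: 4, 14: 5, 15: 6, 16: 6}
r2 = {1: 5, 2: 5, 3: 6, 4: 6, 5: 5, 6: 5, 7: 4, 8: 5, 9: 5, 10: 4,
      11: 4, 12: 4, 13: 3, 14: 5, 15: 6, 16: 5}
means = sample_means([r1, r2])
lowest = min(means, key=means.get)  # weakest factor: a candidate redesign target
print(means)
print("Lowest-rated factor:", lowest)  # Lowest-rated factor: Ease of Information
```

Picking the lowest-rated factor is one simple way to prioritize improvements. Whether the study used this rule is not stated in this excerpt.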

| Factor | Ease of Use and Satisfaction | Information Architecture | Usefulness | Ease of Information | Aesthetic |
|---|---|---|---|---|---|
| Mean | 5.2 | 5.1 | 5.0 | 4.7 | 5.7 |

| Factor | Findings |
|---|---|
| Ease of Use and Satisfaction | - Membership registration (digital one-pass) and login procedures are complicated. - Manual data entry is cumbersome. - The menu buttons are small and the text is hard to read, which makes the app difficult to use. - The app is intuitive and easy to use in everyday environments. |
| Information Architecture | - Information sorting and classification are not user-friendly and are difficult to understand. - The app's functions are easy to find. |
| Usefulness | - The information provided by this app is unlikely to help me manage my health. - The app lacks interaction and motivational features for managing health. - No health care guidance is provided. |
| Ease of Information | - Detailed medication information is lacking (effectiveness and precautions are not given). - Medical information (disease name, doctor’s opinion) is lacking. - Drug terminology is difficult. - Vaccination timing is not explained in detail. - The items and figures in medical check-up results are not explained. - Information visibility is poor (few visual elements). |
| Aesthetic | - Users’ opinions of the main-screen design are mixed. - The app uses too many colors, which makes the design look tacky. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, G.; Hwang, D.; Park, J.; Kim, H.K.; Hwang, E.-S. How to Design and Evaluate mHealth Apps? A Case Study of a Mobile Personal Health Record App. Electronics 2024, 13, 213. https://doi.org/10.3390/electronics13010213

Kim G, Hwang D, Park J, Kim HK, Hwang E-S. How to Design and Evaluate mHealth Apps? A Case Study of a Mobile Personal Health Record App. Electronics. 2024; 13(1):213. https://doi.org/10.3390/electronics13010213

Chicago/Turabian Style: Kim, Guyeop, Dongwook Hwang, Jaehyun Park, Hyun K. Kim, and Eui-Seok Hwang. 2024. "How to Design and Evaluate mHealth Apps? A Case Study of a Mobile Personal Health Record App" Electronics 13, no. 1: 213. https://doi.org/10.3390/electronics13010213

APA Style: Kim, G., Hwang, D., Park, J., Kim, H. K., & Hwang, E.-S. (2024). How to Design and Evaluate mHealth Apps? A Case Study of a Mobile Personal Health Record App. Electronics, 13(1), 213. https://doi.org/10.3390/electronics13010213