Brain–Computer Interface Based on PLV-Spatial Filter and LSTM Classification for Intuitive Control of Avatars

Abstract

1. Introduction

2. Materials and Methods

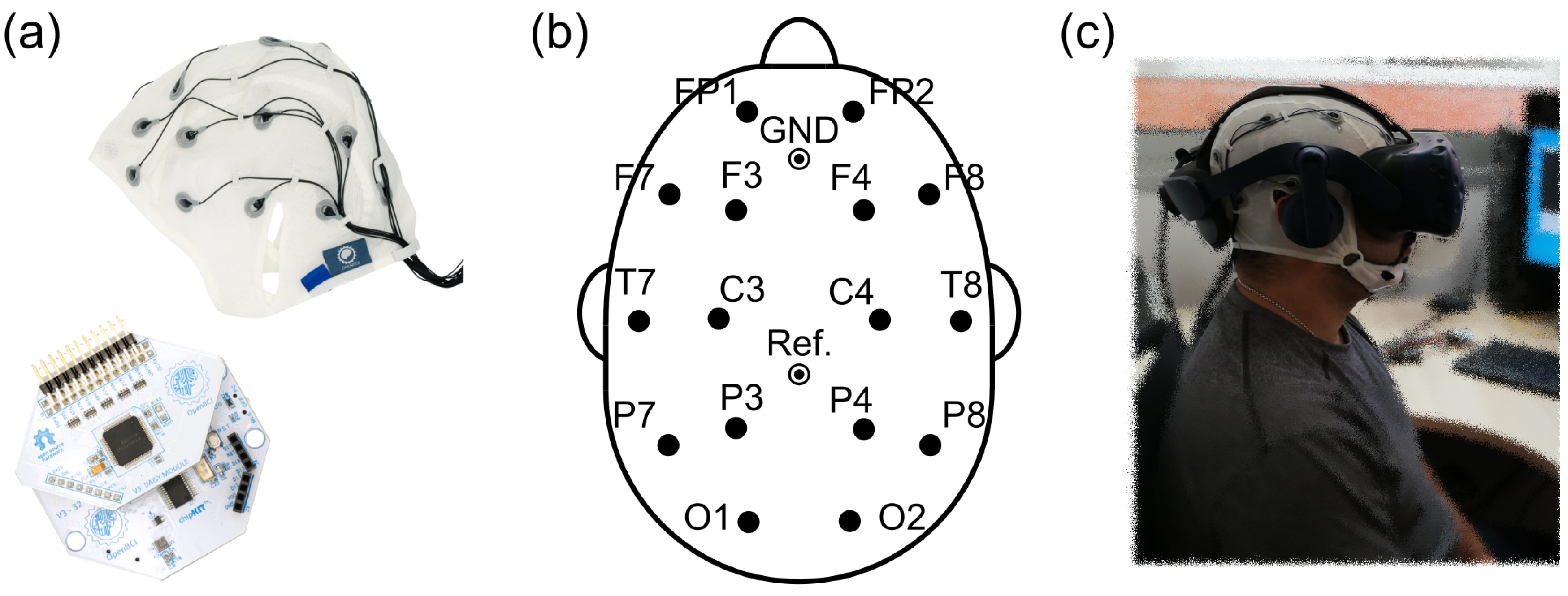

2.1. Equipment and Software

2.2. Participants

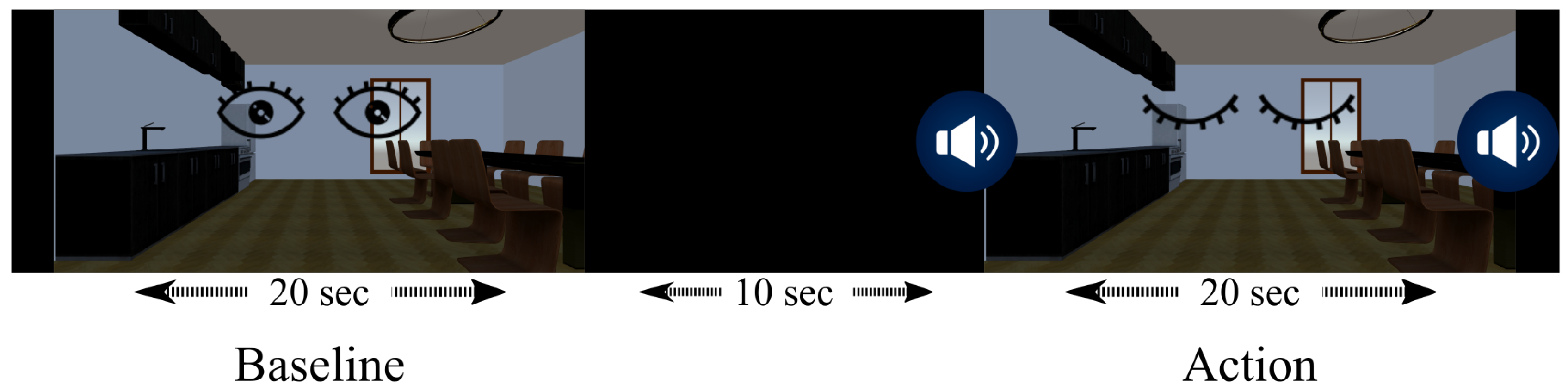

2.3. Experimental Training Protocol

2.4. Data Preprocessing

2.5. Features Extraction

2.6. Phase-Locking Value Spatial Filtering

2.7. LSTM Classification

2.8. Virtual Environment

3. Results

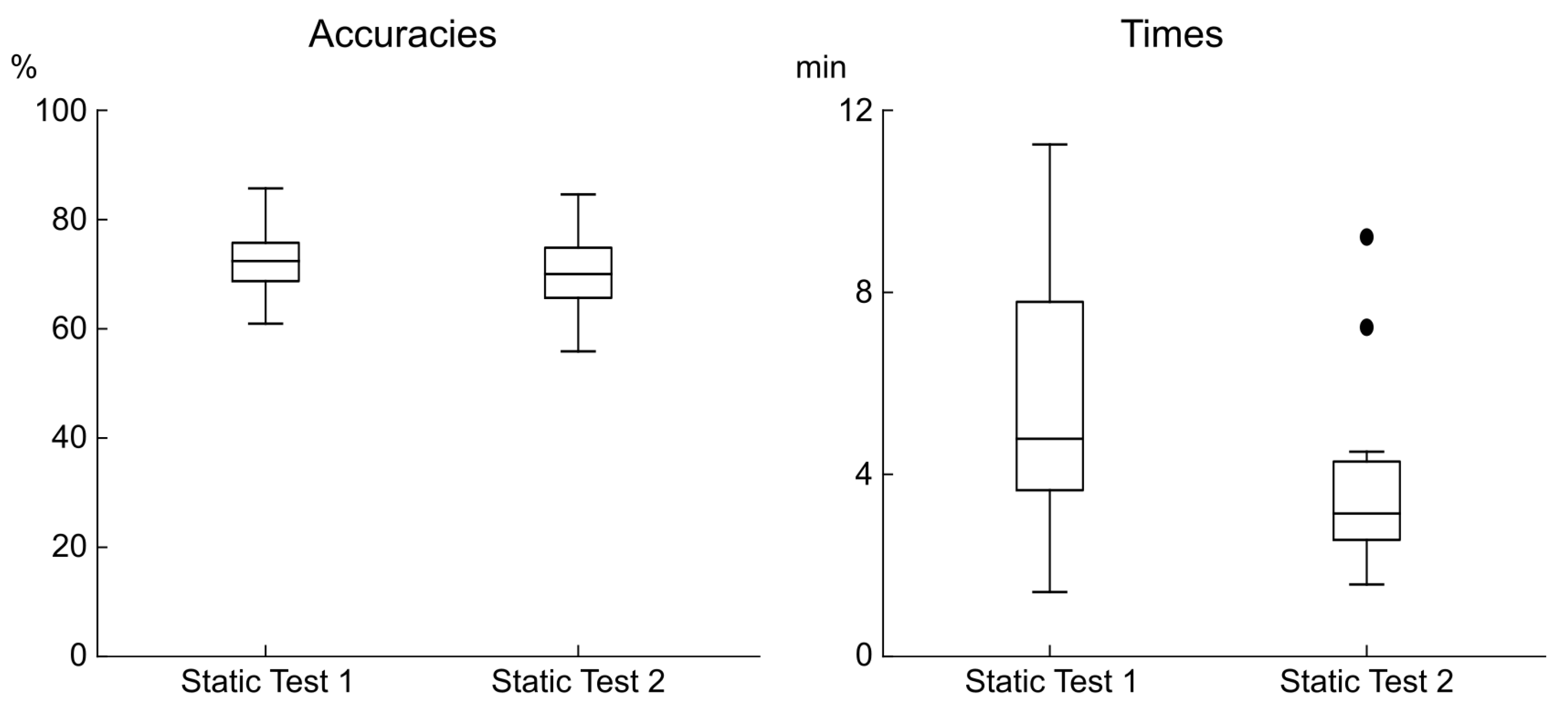

- Previous experience (Previous Exp) records whether the volunteer had used a BCI system before. It is reported because prior experience can reduce adaptation time and improve handling of the technology.

- Training accuracy (Training Acc) is the classification accuracy obtained during model training; it serves as the benchmark for the model's performance.

- Test accuracy (Test Acc) is the percentage of hits in the test, that is, how often the classifier's output was correct at each moment while the user interacted with the menu. Reaching 100% requires the user to select every correct button on the first attempt, without selecting an incorrect button or failing to select a correct one.

- Time is the number of minutes the user took to complete the test.
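One way to read the Test Acc definition above is as the share of decision points that end in a hit. The following is a minimal sketch under that assumption; the event labels, the function name `hit_percentage`, and the example run are hypothetical and are not taken from the study's logging or scoring code.

```python
from collections import Counter

# Hypothetical outcome labels for each decision point in a test run.
# The source does not describe how events were actually logged.
HIT = "hit"            # a correct button was selected
WRONG = "wrong"        # an incorrect button was selected
OMISSION = "omission"  # a correct button was offered but not selected


def hit_percentage(events):
    """Return the percentage of decision points that were hits."""
    if not events:
        raise ValueError("at least one event is required")
    counts = Counter(events)
    return 100.0 * counts[HIT] / len(events)


# Made-up run for illustration: 8 hits, 1 wrong selection, 1 omission.
example_run = [HIT] * 8 + [WRONG] + [OMISSION]
print(f"Test Acc = {hit_percentage(example_run):.2f}%")
```

Under this reading, both wrong selections and omissions lower the score, so only a run made up entirely of hits reaches 100%.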

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BCI | Brain–computer interface |
| VR | Virtual reality |
| PLV-SF | Phase-locking value spatial filtering |
| LSTM | Long short-term memory |
| EEG | Electroencephalography |
| EOG | Electrooculogram |
| SSVEP | Steady-state visual evoked potential |
| EMG | Electromyography |
| MI | Motor imagery |
| MEG | Magnetoencephalography |
| iEEG | Intracranial electroencephalography |
| NIRS | Near-infrared spectroscopy |
| ANN | Artificial neural network |
| NFT | Neurofeedback training |
| WM | Working memory |


| User | Previous Exp | Training Acc (%) | Fixed Seq 1 Test Acc (%) | Fixed Seq 1 Time (min) | Random Seq Test Acc (%) | Random Seq Time (min) | Fixed Seq 2 Test Acc (%) | Fixed Seq 2 Time (min) |
|---|---|---|---|---|---|---|---|---|
| 1 | Yes | 100 | 69.23 | 4.08 | 71.88 | 3.37 | 61.76 | 3.57 |
| 2 | No | 94 | 74.51 | 5.48 | 88.46 | 2.77 | 69.23 | 2.72 |
| 3 | No | 83 | 80.95 | 2.20 | 74.19 | 3.28 | 64.71 | 3.63 |
| 4 | Yes | 100 | 75.00 | 3.82 | 72.22 | 3.85 | 75.00 | 2.48 |
| 5 | Yes | 95 | 85.71 | 1.42 | 82.61 | 2.47 | 70.83 | 2.53 |
| 6 | Yes | 85 | 76.00 | 8.12 | 75.00 | 2.58 | 68.60 | 9.22 |
| 7 | No | 88 | 70.31 | 6.82 | 77.55 | 5.10 | 84.62 | 2.65 |
| 8 | No | 99 | 68.57 | 3.60 | 64.71 | 5.42 | 74.42 | 4.50 |
| 9 | No | 62 | 63.75 | 8.60 | 67.27 | 5.82 | 80.00 | 1.58 |
| 10 | No | 95 | 60.95 | 11.25 | 56.52 | 4.80 | 55.88 | 7.23 |
| mean | | 90.10 | 72.50 | 5.53 | 73.04 | 3.95 | 70.51 | 4.01 |
| std | | 11.59 | 7.52 | 3.11 | 9.02 | 1.24 | 8.52 | 2.40 |
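The summary rows of the table can be rechecked from the per-user values. The sketch below does this for two columns using Python's standard `statistics` module. It assumes the std row is the sample standard deviation (n − 1 denominator); the source does not state this, but the tabulated values are consistent with it. The variable and function names are illustrative.

```python
from statistics import mean, stdev

# Values copied from the results table (one entry per user, users 1 to 10).
training_acc = [100, 94, 83, 100, 95, 85, 88, 99, 62, 95]
fixed_seq1_test_acc = [69.23, 74.51, 80.95, 75.00, 85.71,
                       76.00, 70.31, 68.57, 63.75, 60.95]


def summarize(values):
    """Return (mean, sample standard deviation), rounded to 2 decimals."""
    # stdev() uses the n - 1 denominator; this is an assumption about
    # how the table's std row was computed.
    return round(mean(values), 2), round(stdev(values), 2)


for name, column in [("Training Acc", training_acc),
                     ("Fixed Seq 1 Test Acc", fixed_seq1_test_acc)]:
    m, s = summarize(column)
    print(f"{name}: mean = {m:.2f}, std = {s:.2f}")
```

The same helper can be applied to the remaining accuracy and time columns by copying their values from the table.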

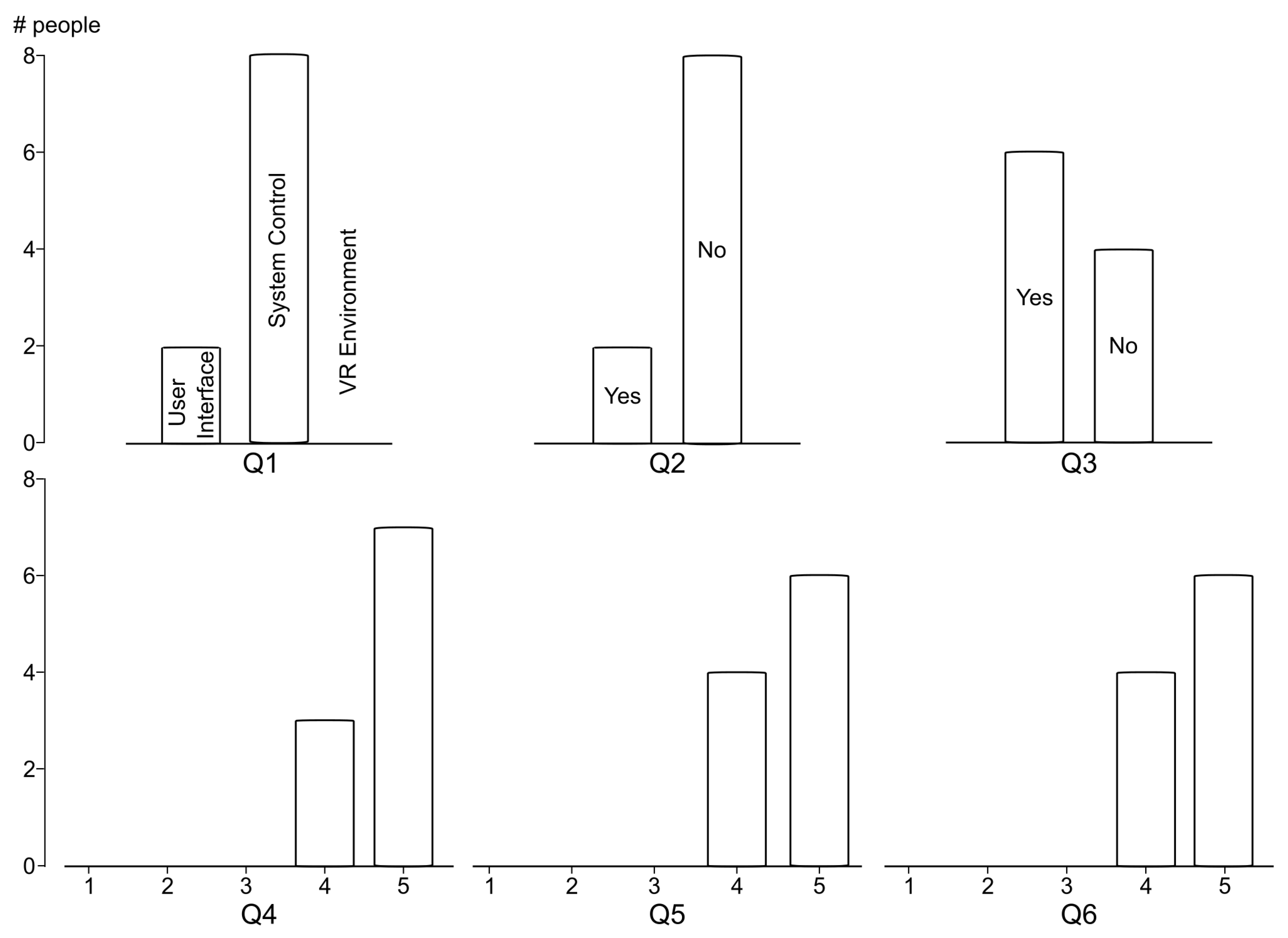

| # | Question |
|---|---|
| 1 | What do you consider most important in the system? |
| 2 | Did you have difficulties performing the test? Did the devices, the room, or other elements make it difficult to perform the test? |
| 3 | Was the speed of changes between buttons and selection time adequate for system control? |
| 4 | General evaluation of the use of the applied system (electroencephalograph and virtual reality glasses) (rated from 1 to 5) |
| 5 | Overall rating of the interface (from 1 to 5) |
| 6 | Overall rating of the system (from 1 to 5) |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martín-Chinea, K.; Gómez-González, J.F.; Acosta, L. Brain–Computer Interface Based on PLV-Spatial Filter and LSTM Classification for Intuitive Control of Avatars. Electronics 2024, 13, 2088. https://doi.org/10.3390/electronics13112088
