Enhanced Hyperspectral Sharpening through Improved Relative Spectral Response Characteristic (R-SRC) Estimation for Long-Range Surveillance Applications

Abstract

1. Introduction

2. Methods and Materials

2.1. Spectral Unmixing Algorithm

$Y \in \mathbb{R}^{L_m \times N_m}$

$Z \in \mathbb{R}^{L_h \times N_m}$
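For reference, the sketch below shows the coupled linear mixing model that CNMF-type sharpening builds on, written in this document's symbols. It follows the general form of Yokoya et al.'s CNMF. The spatial-degradation matrix $S$ and the R-SRC matrix $R$ are introduced here for illustration, and their exact form in this work may differ. $Z$ is the sharpened HSI, $X \in \mathbb{R}^{L_h \times N_h}$ is the LRHSI input of Algorithm 1, and $E$, $A$ are the endmember and abundance outputs:

```latex
\begin{aligned}
Z &= E\,A, && E \in \mathbb{R}^{L_h \times D},\; A \in \mathbb{R}^{D \times N_m},\\
X &\approx Z\,S = E\,(A\,S), && S \in \mathbb{R}^{N_m \times N_h}\ \text{(assumed spatial degradation)},\\
Y &\approx R\,Z = (R\,E)\,A = E_m\,A, && R \in \mathbb{R}^{L_m \times L_h}\ \text{(assumed R-SRC)}.
\end{aligned}
```

In this model, $E_m = R\,E$ is the only link between the multispectral and hyperspectral endmembers, so any error in $R$ propagates directly into the abundances $A$ estimated from $Y$.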

2.2. Relative Spectral Response Characteristic (R-SRC) Estimation

2.2.1. R-SRC of Yokoya’s Original CNMF Algorithm (Y-CNMF)
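As a point of reference, one commonly used way to estimate an R-SRC is sketched below. Each multispectral band of the spatially degraded HRMSI is fitted as a non-negative combination of the LRHSI bands. This is done in the spirit of Yokoya et al.'s approach, but it is an assumption and not necessarily the exact Y-CNMF procedure. The helper name `estimate_rsrc` and the known spatial-degradation matrix `S` are hypothetical. Real HSI bands are highly correlated, so on real data this fit can be ill-conditioned.

```python
import numpy as np
from scipy.optimize import nnls


def estimate_rsrc(X, Y, S):
    """Hypothetical helper: estimate the R-SRC matrix R (Lm x Lh) with R >= 0.

    X: LRHSI, shape (Lh, Nh); Y: HRMSI, shape (Lm, Nm);
    S: assumed-known spatial degradation, shape (Nm, Nh), mapping the MSI grid
    onto the HSI grid. Each MSI band k is modelled as (Y S)[k] ~= R[k] @ X.
    """
    Y_low = Y @ S  # bring the MSI down to the HSI spatial resolution
    R = np.zeros((Y.shape[0], X.shape[0]))
    for k in range(Y.shape[0]):
        # Non-negative least squares: min ||X^T r - y_k||_2 subject to r >= 0
        R[k], _ = nnls(X.T, Y_low[k])
    return R
```

Because every band is fitted independently, per-band constraints (for example, restricting each MSI band to the HSI bands it overlaps) can be added without changing the overall structure.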

2.2.2. Enhanced R-SRC Algorithm (E-CNMF)

2.2.3. Constrained Enhanced R-SRC Algorithm (CE-CNMF)

2.2.4. Second-Level CNMF with Constrained Enhanced R-SRC Algorithm (CEY-CNMF)

2.3. Implementation of CNMF for Spectral Unmixing: Second Level of Spectral Matching between LRHSI and HRMSI

Algorithm 1. The short code for all algorithms utilized in the present work.

Inputs: low-spatial-resolution hyperspectral data, $X \in \mathbb{R}^{L_h \times N_h}$; high-spatial-resolution multispectral data, $Y \in \mathbb{R}^{L_m \times N_m}$; inner-loop threshold, $\varepsilon_1 = 1 \times 10^{-8}$; outer-loop threshold, $\varepsilon_2 = 1 \times 10^{-2}$; maximum number of inner-loop iterations, $N_1 = 200$; maximum number of outer-loop iterations, $N_2 = 1$; maximum number of iterations for R-SRC estimation, $N_3 = 1500$.

Outputs: endmember matrix, $E \in \mathbb{R}^{L_h \times D}$, with $D$ endmembers; abundance matrix, $A \in \mathbb{R}^{D \times N_m}$.

2: E-CNMF: adjust $Y$ using Equation (8).

3: CE-CNMF: adjust $Y$ using Equation (9).

4: CEY-CNMF: adjust $Y$ and cascade the end results into a second level of NMF, as shown in the flow chart in (10).

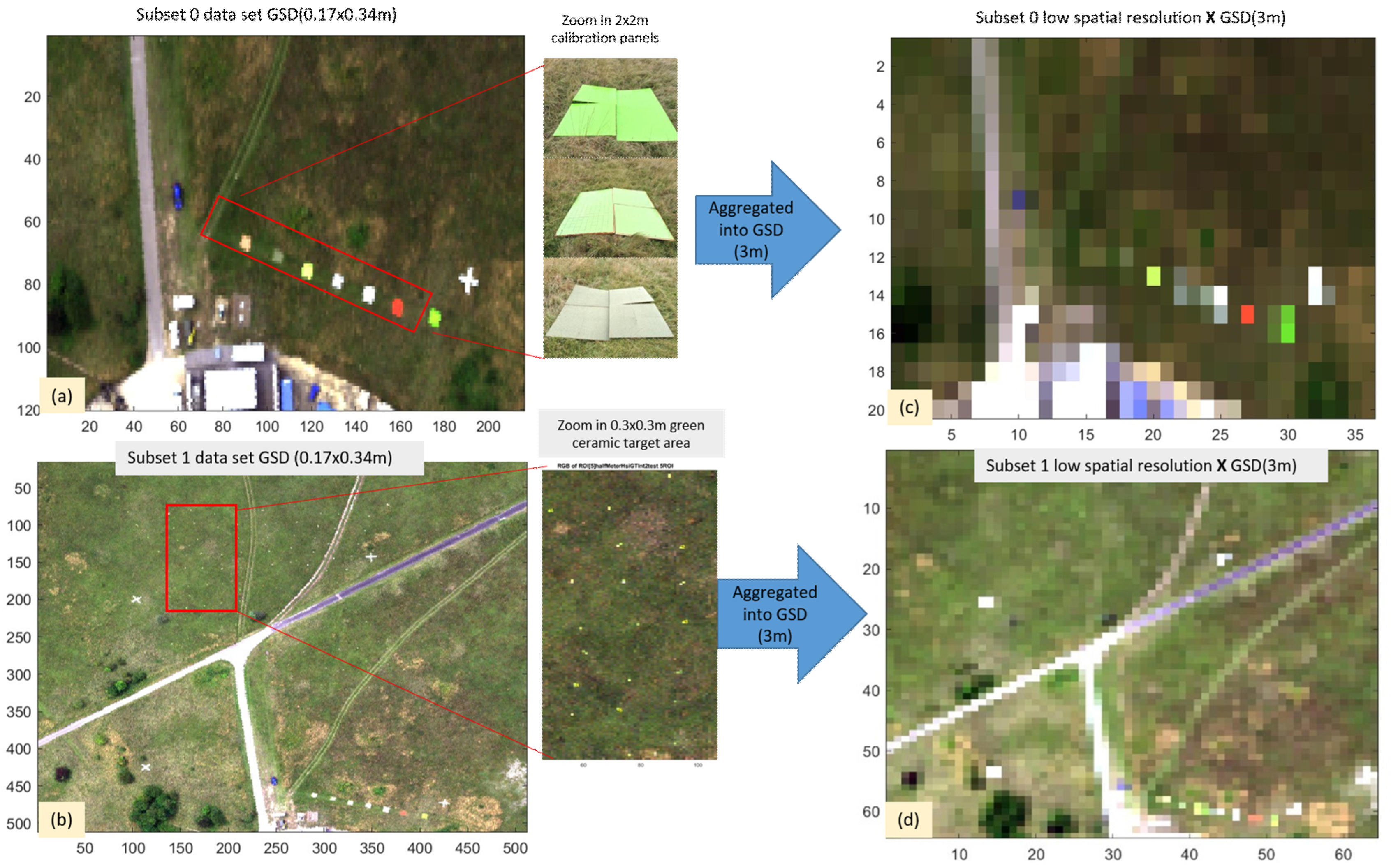

2.4. HSI Datasets

2.5. Assessment of the Effectiveness of Spectral-Unmixing-Based SR

3. Super-Resolution (SR) Results

3.1. Significance of R-SRC for the Effectiveness of SR

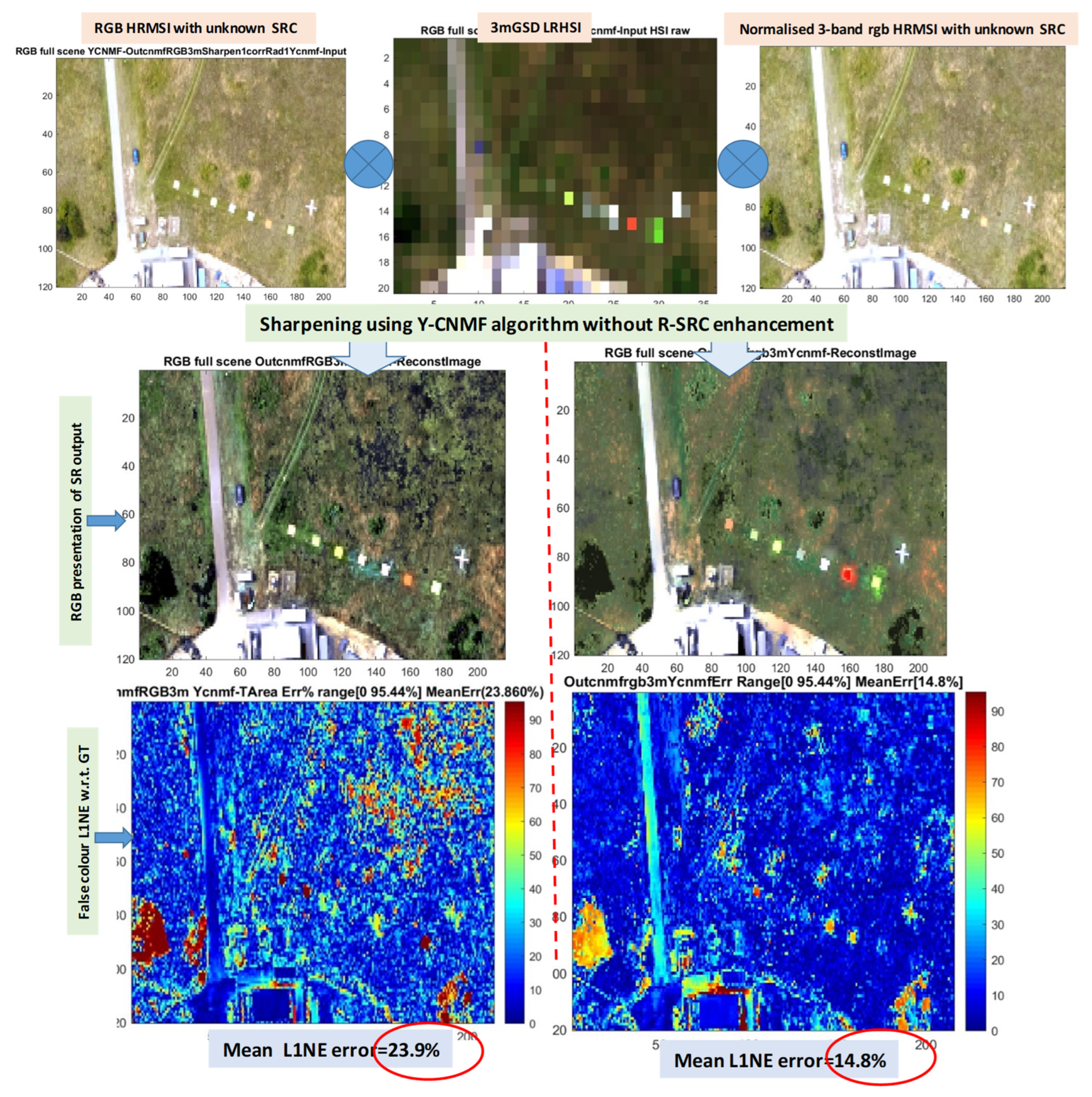

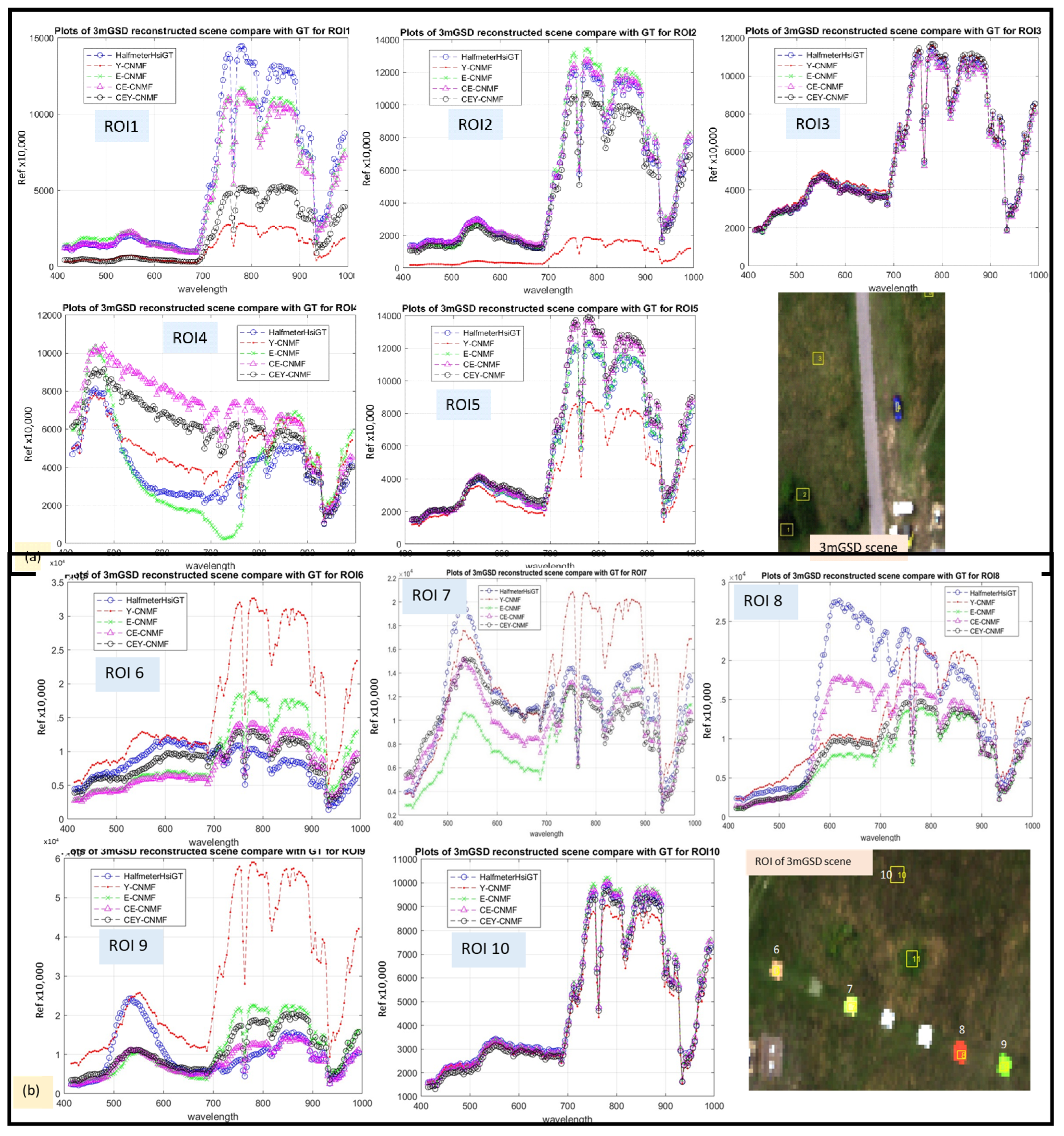

3.2. Subset 0: SR Performance of the Proposed E-CNMF, CE-CNMF, and CEY-CNMF vs. Y-CNMF

3.2.1. Performance of SR Assessed by L1NE vs. SAM
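The spectral angle mapper (SAM) named in this heading is a standard measure of spectral fidelity. It is the angle between the reference and reconstructed spectrum of each pixel. A minimal sketch follows, assuming both images are stored as (bands × pixels) matrices like $Z$. The function name `sam_degrees` is hypothetical.

```python
import numpy as np


def sam_degrees(Z_ref, Z_est, eps=1e-12):
    """Per-pixel spectral angle (degrees) between columns of Z_ref and Z_est.

    Both arrays have shape (bands, pixels); the mean over pixels is the usual
    scalar SAM score."""
    dot = np.sum(Z_ref * Z_est, axis=0)
    norms = np.linalg.norm(Z_ref, axis=0) * np.linalg.norm(Z_est, axis=0)
    # Clip to [-1, 1] so rounding error cannot push arccos out of its domain.
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return np.degrees(np.arccos(cos))
```

SAM is invariant to a per-pixel scaling of the spectrum, so it captures spectral-shape errors but not brightness errors. This is why it is usually reported alongside a magnitude-based error such as L1NE.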

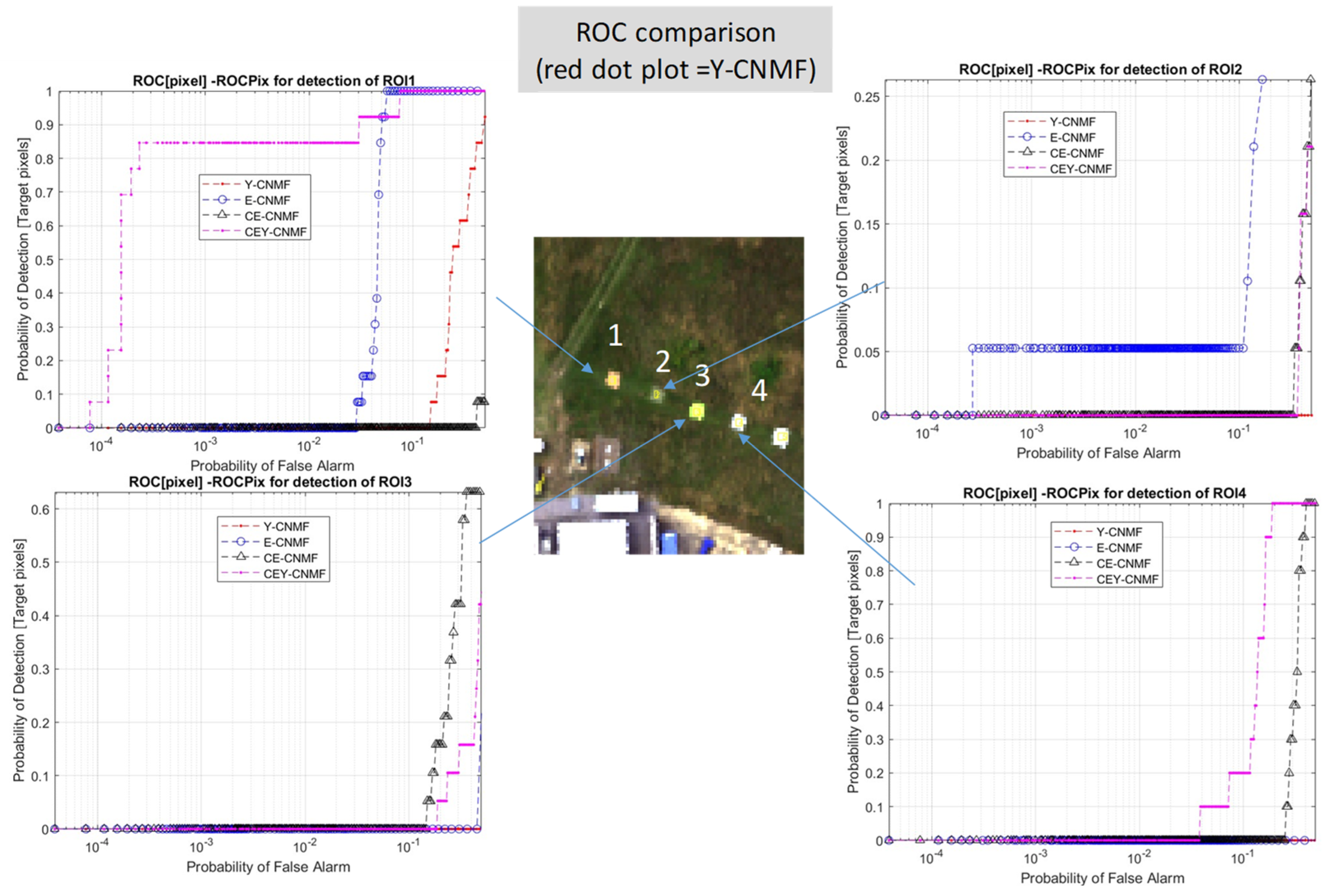

3.2.2. ROC Assessment for the Recovery of Large Targets with Abundance 0.44

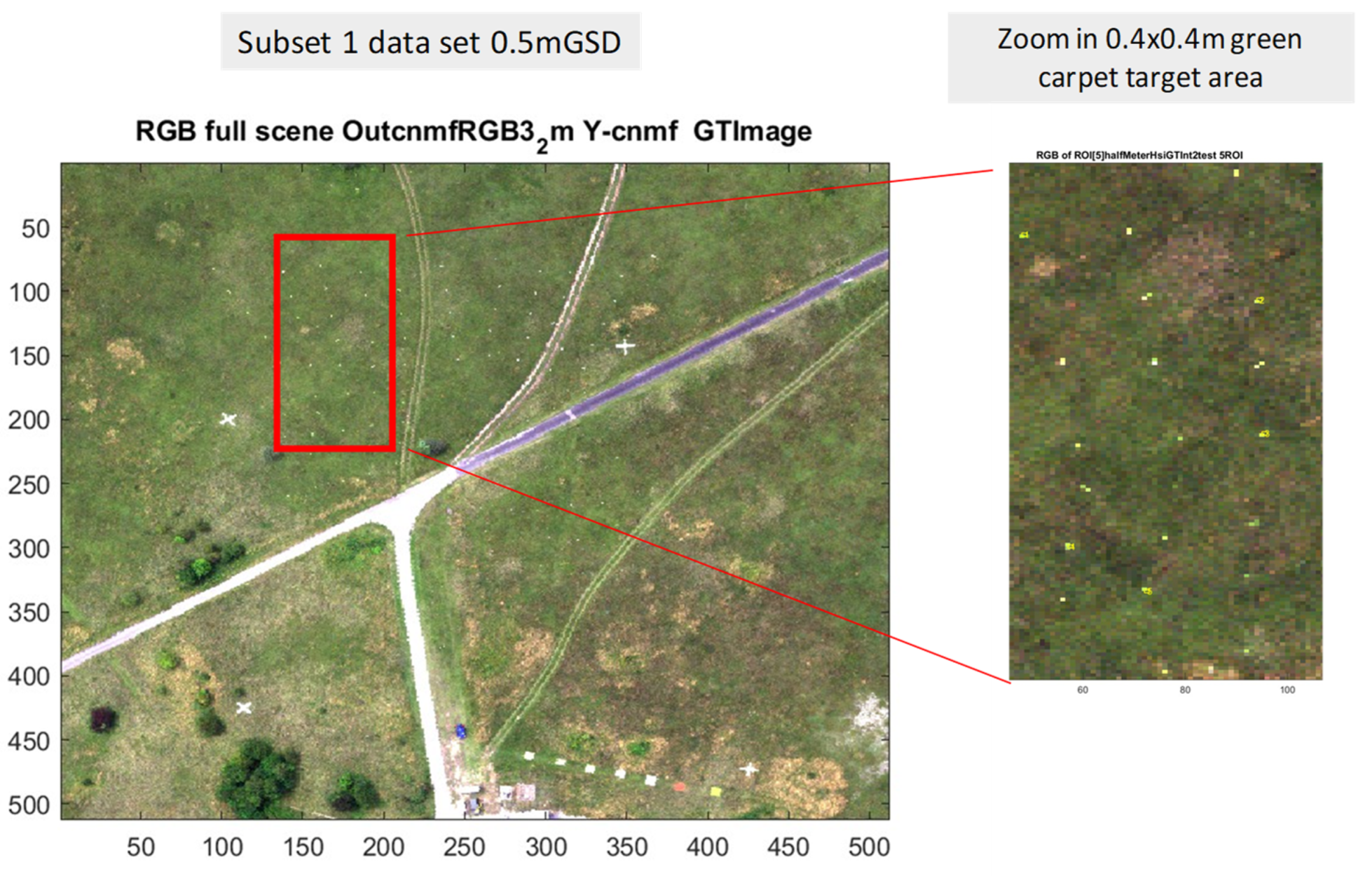

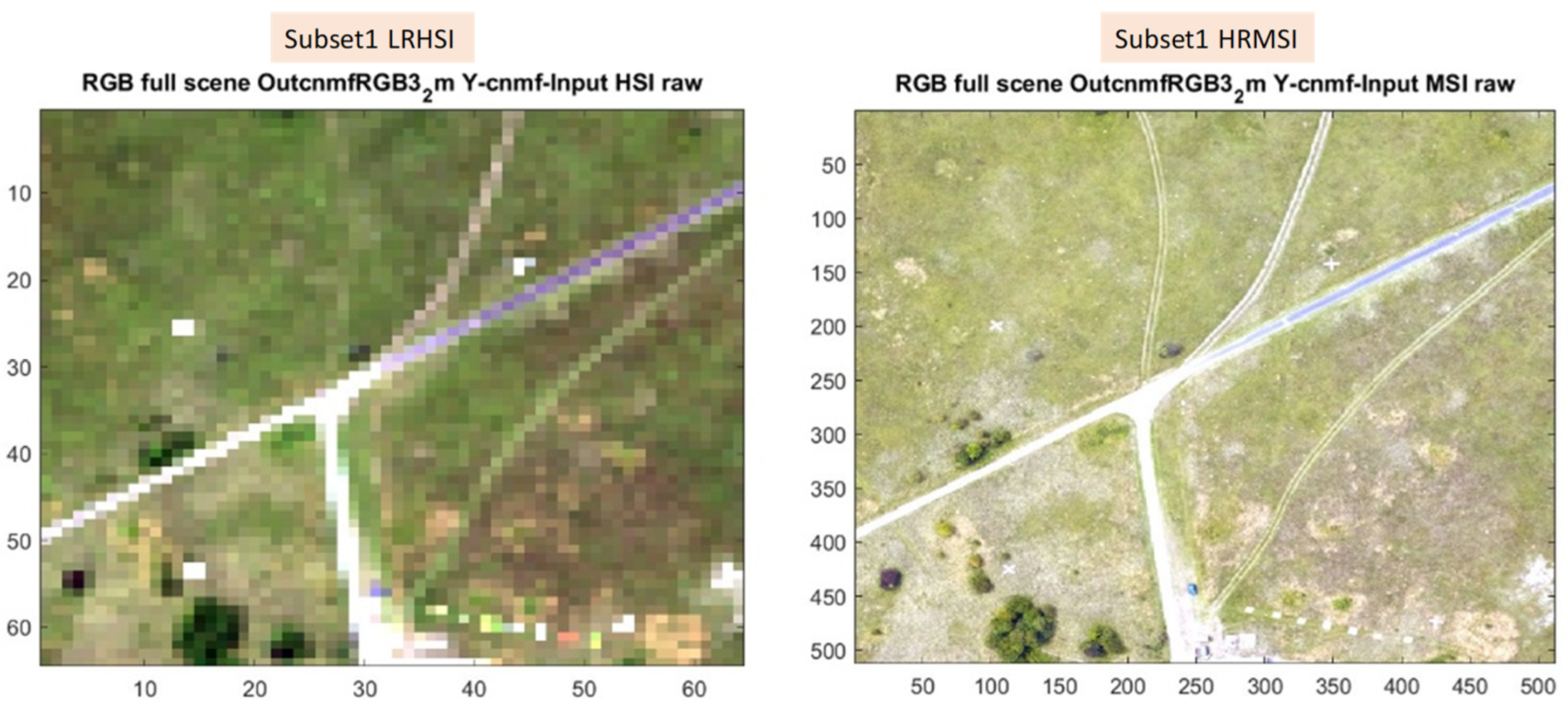

3.3. Subset 1: SR Performance of the Proposed E-CNMF, CE-CNMF, and CEY-CNMF vs. Y-CNMF

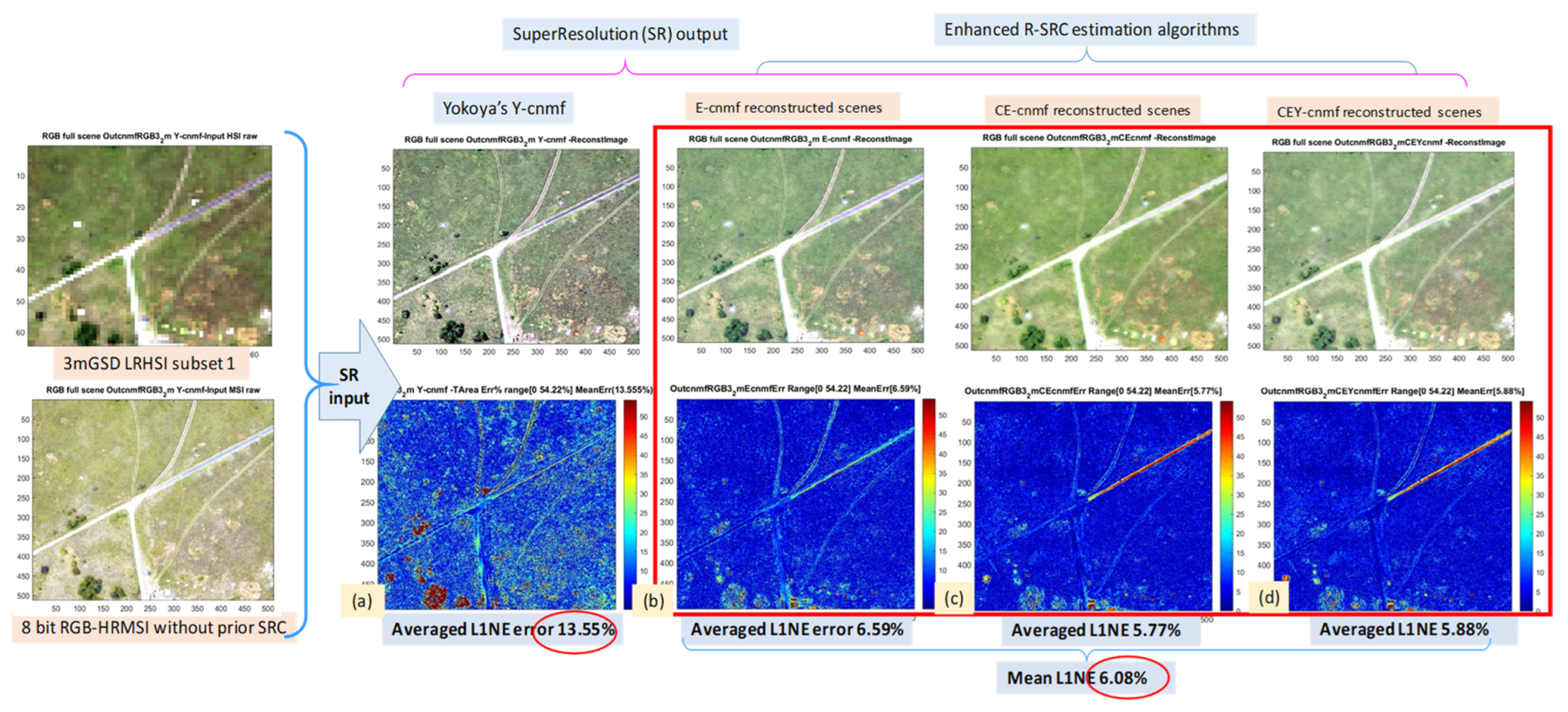

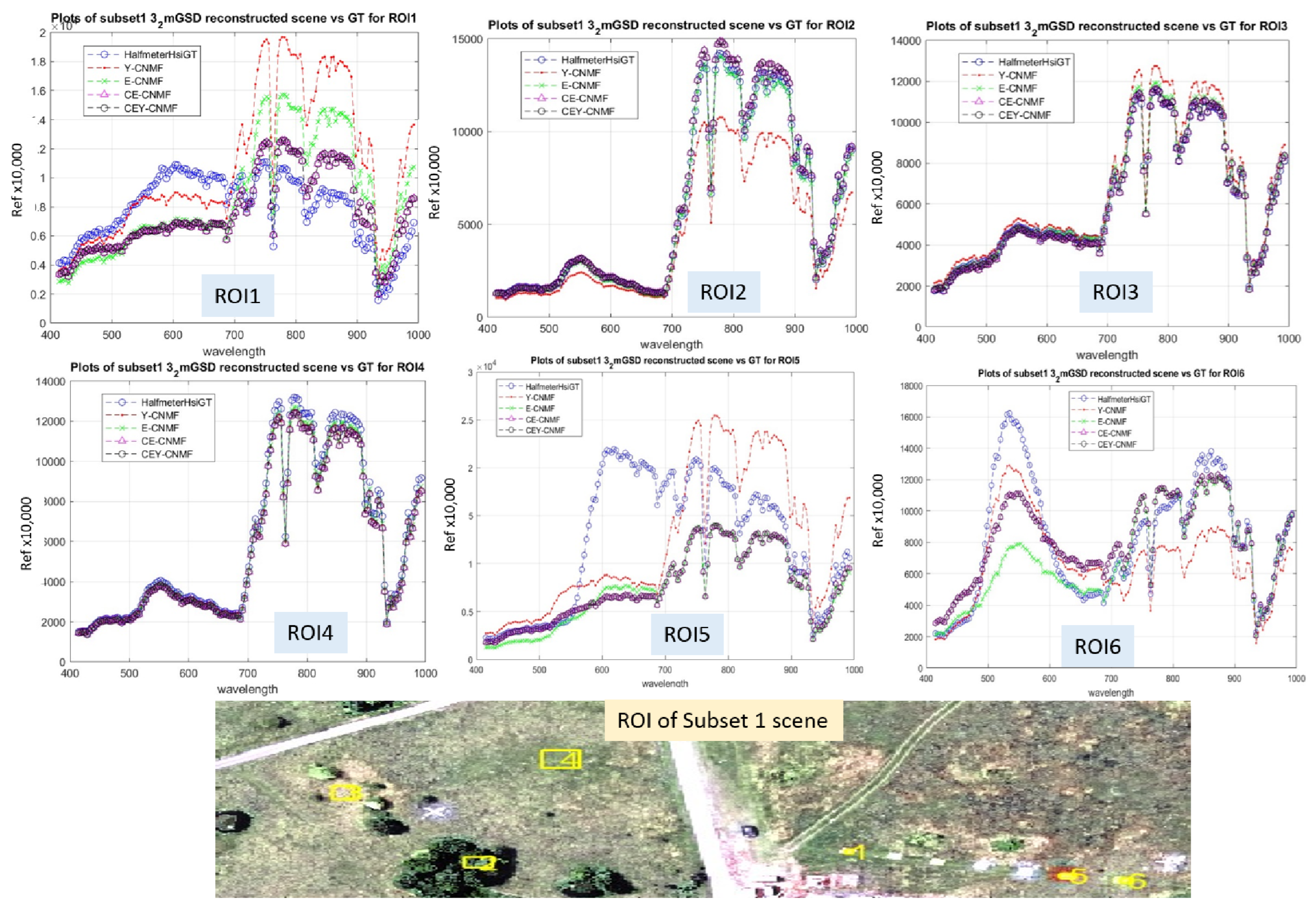

3.3.1. Subset 1: Performance of SR Assessed by L1NE

3.3.2. Subset 1: ROC Assessment for the Recovery of Large Targets with Abundance 0.44

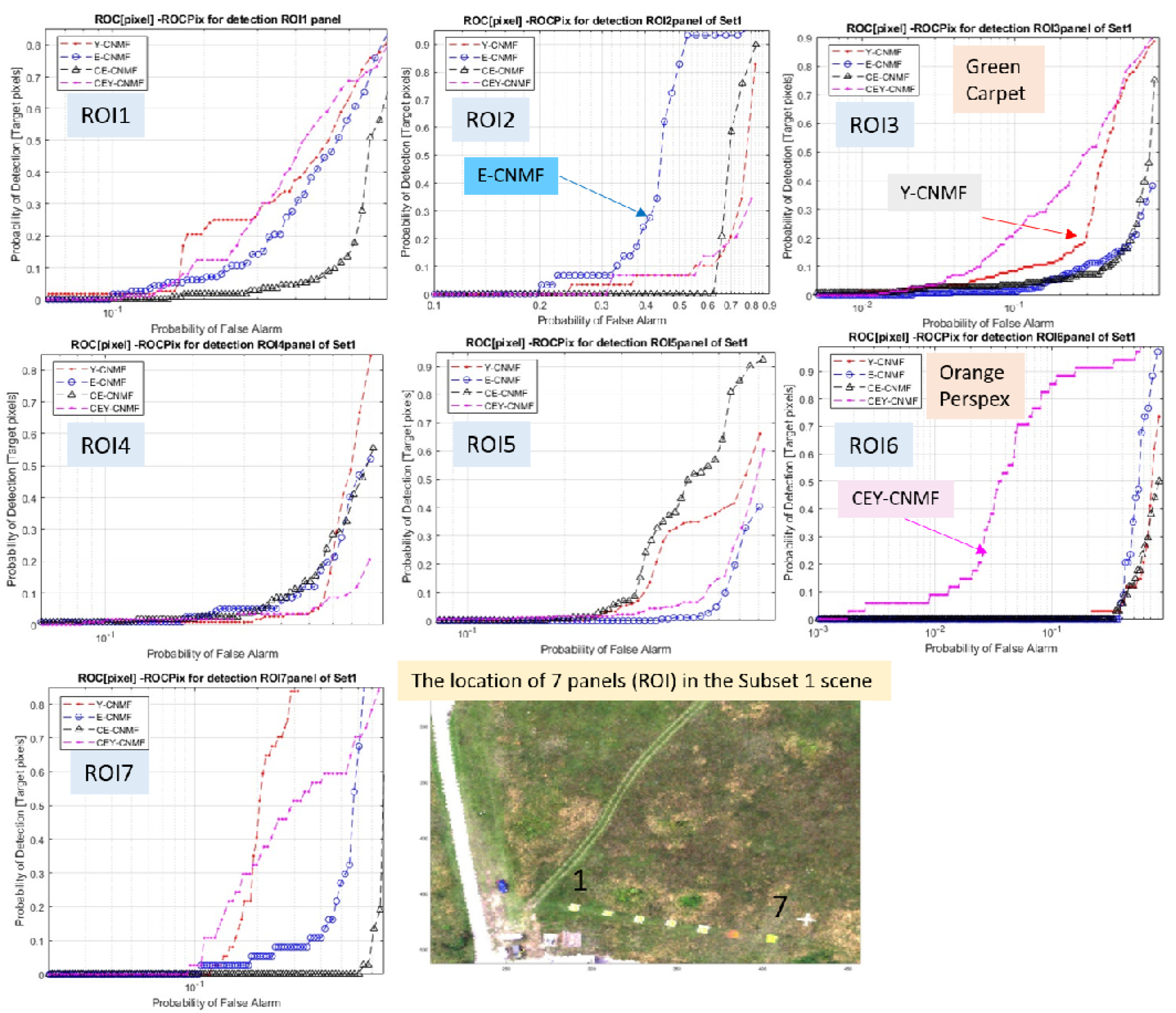

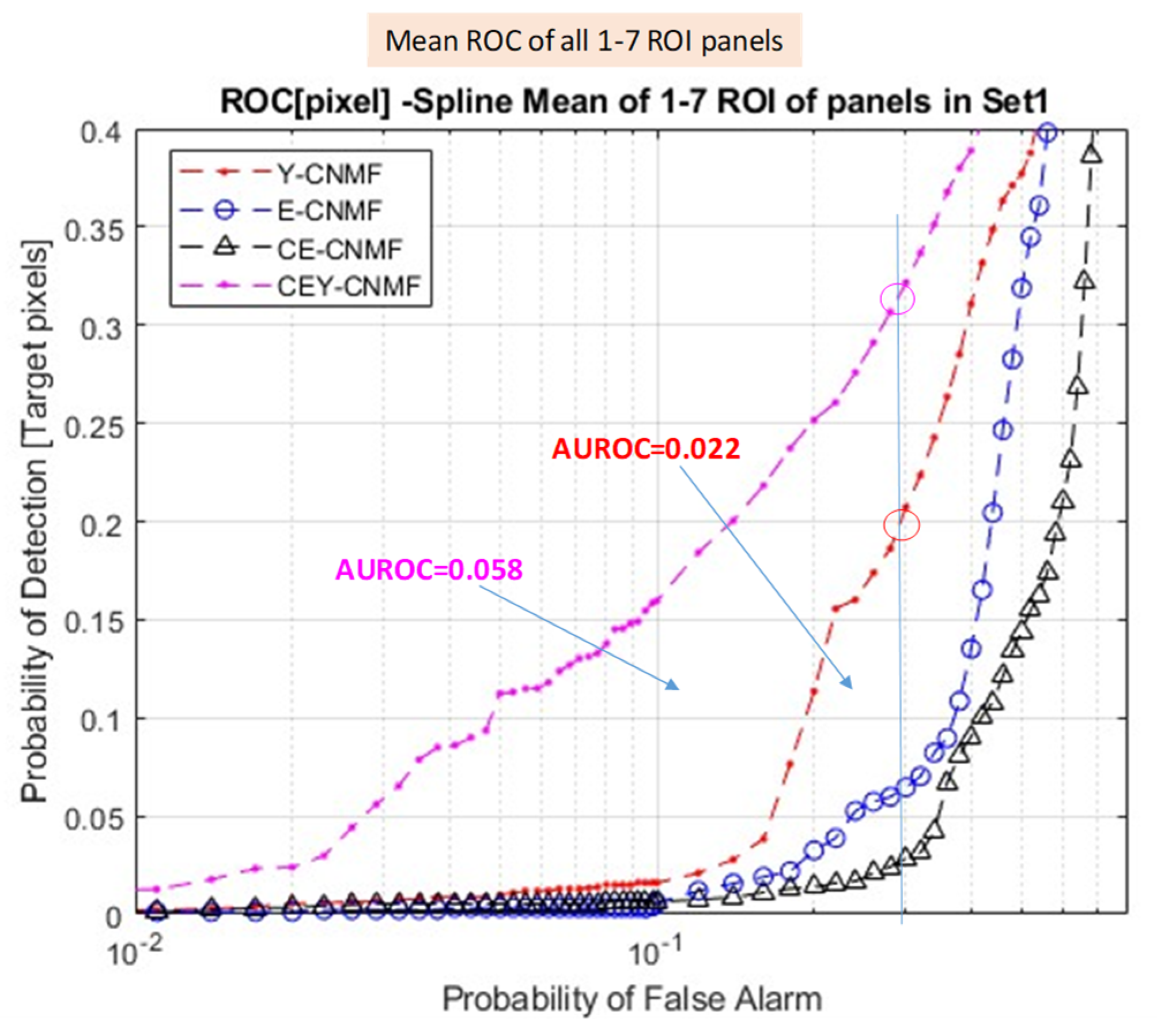

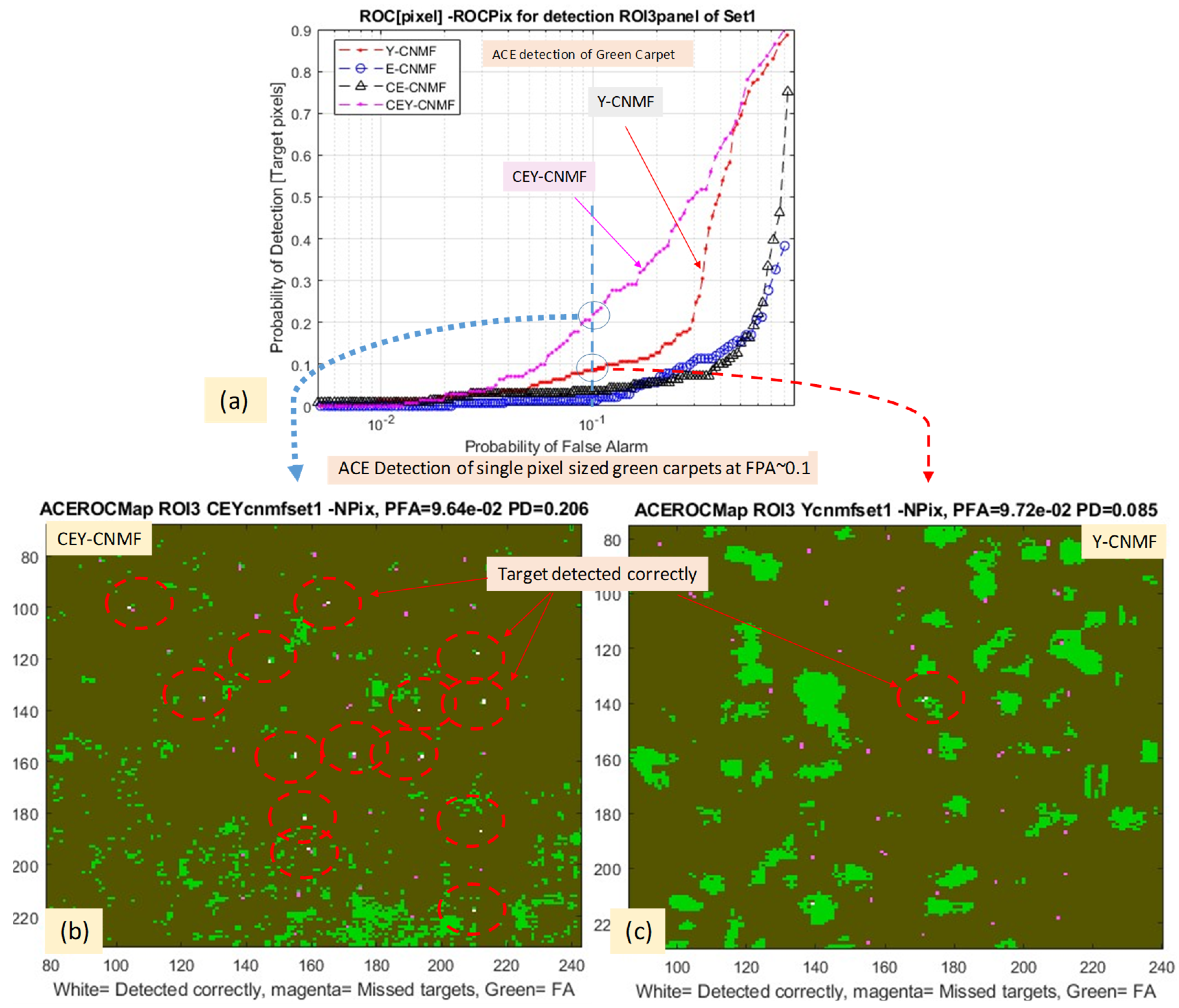

3.3.3. Subset 1: ROC Assessment for the Recovery of Small Targets with Abundance of 0.015

4. Discussion

- Some objects in the scene, in particular ‘rare’ materials such as the manmade footpath and the panels, exhibited larger reconstruction errors than the vegetation species (see Figure 4, Figure 6 and Figure 10). This may be because these materials contribute relatively few spectra to the multidimensional simplex enclosing the image spectra, which can trap the optimization in local optima when $E$ or $E_m$ in Equation (15) is evaluated. Additional processing, such as partitioning the scene through SLIC clustering [41], may help to alleviate this problem.

- The ROC statistics varied considerably across the seven manmade panels recovered by the proposed algorithms: as the ROC curves in Figure 12 show, some panels were recovered much better than others. Further work is needed to determine whether this variation stems from specific spectral characteristics of the panels or from other factors that affect the accuracy with which their spectral properties are reconstructed.

- The false alarm rate for target detection with the sharpened data (Figure 14) was much higher, by roughly one to two orders of magnitude, than that obtained with the ground-truth (GT) HRHSI at a comparable detection rate. This may indicate that both the CNMF algorithm and the R-SRC estimation need further improvement before this technique can be deployed in real-world applications [6]. It should also be noted, however, that the targets had an extremely low abundance in the LRHSI, so it is unsurprising that they were difficult to detect.
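The comparison above, false alarm rate at a matched detection rate, can be reproduced from any per-pixel detection score and a ground-truth target mask. The minimal sketch below computes an empirical ROC and reads off the false alarm rate at a chosen detection rate. The detector itself is not specified here, the helper names are hypothetical, and the paper's exact ROC protocol may differ.

```python
import numpy as np


def roc_points(scores, labels):
    """Empirical ROC: (false-alarm rate, detection rate) at every distinct threshold.

    scores: detector output per pixel (higher = more target-like)
    labels: ground-truth mask, True for target pixels
    """
    scores = np.asarray(scores, dtype=float).ravel()
    labels = np.asarray(labels, dtype=bool).ravel()
    order = np.argsort(-scores, kind="mergesort")  # descending, stable
    s, y = scores[order], labels[order]
    tp, fp = np.cumsum(y), np.cumsum(~y)
    # Keep only the last position of each run of tied scores.
    last = np.r_[np.nonzero(np.diff(s))[0], s.size - 1]
    pd = np.r_[0.0, tp[last] / y.sum()]
    far = np.r_[0.0, fp[last] / (~y).sum()]
    return far, pd


def far_at_detection_rate(far, pd, target_pd):
    """Smallest false-alarm rate at which the detection rate reaches target_pd."""
    reached = pd >= target_pd
    if not reached.any():
        raise ValueError("target detection rate never reached")
    return far[np.argmax(reached)]
```

Running `roc_points` on scores from the sharpened image and from the GT HRHSI, and comparing `far_at_detection_rate` at the same detection rate, gives the kind of ratio discussed above. Plotting `far` on a logarithmic axis makes order-of-magnitude differences easier to see.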

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Faltynkova, A.; Johnsen, G.; Wagner, M. Hyperspectral imaging as an emerging tool to analyze microplastics: A systematic review and recommendations for future development. Microplastics Nanoplastics 2021, 1, 13.

- Yel, S.G.; Gormus, E.T. Exploiting hyperspectral and multispectral images in the detection of tree species: A review. Front. Remote Sens. 2023, 4, 1136289.

- Yuen, P.W.; Richardson, M. An introduction to hyperspectral imaging and its application for security, surveillance and target acquisition. Imaging Sci. J. 2010, 58, 241–253.

- Chen, C.; Wang, Y.; Zhang, N.; Zhang, Y.; Zhao, Z. A Review of Hyperspectral Image Super-Resolution Based on Deep Learning. Remote Sens. 2023, 15, 2853.

- Chen, H.; He, X.; Qing, L.; Wu, Y.; Ren, C.; Sheriff, R.E.; Zhu, C. Real-world single image super-resolution: A brief review. Inf. Fusion 2021, 79, 124–145.

- Aburaed, N.; Alkhatib, M.Q.; Marshall, S.; Zabalza, J.; Al Ahmad, H. A Review of Spatial Enhancement of Hyperspectral Remote Sensing Imaging Techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2275–2300.

- Javan, F.D.; Samadzadegan, F.; Mehravar, S.; Toosi, A.; Khatami, R.; Stein, A. A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2020, 171, 101–117.

- Sara, D.; Mandava, A.K.; Kumar, A.; Duela, S.; Jude, A. Hyperspectral and multispectral image fusion techniques for high resolution applications: A review. Earth Sci. Inform. 2021, 14, 1685–1705.

- Kahraman, S.; Ertürk, A. A comprehensive review of pansharpening algorithms for Göktürk-2 satellite images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-4/W4, 263–270.

- Kawakami, R.; Matsushita, Y.; Wright, J.; BenEzra, M.; Tai, Y.W.; Ikeuchi, K. High-resolution hyperspectral imaging via matrix factorization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 2329–2336.

- Shi, S.; Gong, X.; Mu, Y.; Finch, K.; Gamez, G. Geometric super-resolution on push-broom hyperspectral imaging for plasma optical emission spectroscopy. J. Anal. At. Spectrom. 2018, 33, 1745–1752.

- Wang, L.; Wang, Y.; Dong, X.; Xu, Q.; Yang, J.; An, W.; Guo, Y. Unsupervised degradation representation learning for blind super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Nashville, TN, USA, 20–25 June 2021.

- Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral Pansharpening: A Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Mani, V.R.S. A Survey of Multi Sensor Satellite Image Fusion Techniques. Int. J. Sens. Sens. Netw. 2020, 8, 1–10.

- Zhang, K.; Zhang, F.; Wan, W.; Yu, H.; Sun, J.; Del Ser, J.; Elyan, E.; Hussain, A. Panchromatic and multispectral image fusion for remote sensing and earth observation: Concepts, taxonomy, literature review, evaluation methodologies and challenges ahead. Inf. Fusion 2023, 93, 227–242.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled Nonnegative Matrix Factorization Unmixing for Hyperspectral and Multispectral Data Fusion. IEEE Trans. Geosci. Remote Sens. 2011, 50, 528–537.

- Yokoya, N.; Mayumi, N.; Iwasaki, A. Cross-calibration for data fusion of EO-1/Hyperion and Terra/ASTER. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 419–426.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Hyperspectral, multispectral, and panchromatic data fusion based on non-negative matrix factorization. In Proceedings of the 2011 3rd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lisbon, Portugal, 6–9 June 2011.

- He, C.; Wang, J.; Lai, S.; Ennadi, A. Image Fusion in Remote Sensing Based on Spectral Unmixing and Improved Nonnegative Matrix Factorization Algorithm. J. Eng. Sci. Technol. Rev. 2018, 11, 79–88.

- Lu, L.; Ren, X.; Yeh, K.-H.; Tan, Z.; Chanussot, J. Exploring coupled images fusion based on joint tensor decomposition. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1–26.

- Qu, J.; Li, Y.; Du, Q.; Xia, H. Hyperspectral and Panchromatic Image Fusion via Adaptive Tensor and Multi-Scale Retinex Algorithm. IEEE Access 2020, 8, 30522–30532.

- Guo, H.; Bao, W.; Feng, W.; Sun, S.; Mo, C.; Qu, K. Multispectral and Hyperspectral Image Fusion Based on Joint-Structured Sparse Block-Term Tensor Decomposition. Remote Sens. 2023, 15, 4610.

- Priya, K.; Rajkumar, K.K. Hyperspectral image non-linear unmixing using joint extrinsic and intrinsic priors with L1/2-norms to non-negative matrix factorisation. J. Spectr. Imaging 2022, 11.

- Wang, J.-J.; Dobigeon, N.; Chabert, M.; Wang, D.-C.; Huang, T.-Z.; Huang, J. CD-GAN: A robust fusion-based generative adversarial network for unsupervised remote sensing change detection with heterogeneous sensors. Inf. Fusion 2024, 107.

- Khader, A.; Yang, J.; Xiao, L. NMF-DuNet: Nonnegative Matrix Factorization Inspired Deep Unrolling Networks for Hyperspectral and Multispectral Image Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5704–5720.

- Fernandez-Beltran, R.; Fernandez, R.; Kang, J.; Pla, F. W-NetPan: Double-U network for inter-sensor self-supervised pan-sharpening. Neurocomputing 2023, 530, 125–138.

- Lanaras, C.; Baltsavias, E.; Schindler, K. Estimating the relative spatial and spectral sensor response for hyperspectral and multispectral image fusion. In Proceedings of the 37th Asian Conference on Remote Sensing (ACRS), Colombo, Sri Lanka, 17–21 October 2016.

- Xu, Y.; Jiang, X.; Hou, J.; Sun, Y.; Zhu, X. Spatial-spectral dual path hyperspectral image super-resolution reconstruction network based on spectral response functions. Geocarto Int. 2023, 38, 2157497.

- Qu, Y.; Qi, H.; Ayhan, B.; Kwan, C.; Kidd, R. Does multispectral/hyperspectral pansharpening improve the performance of anomaly detection? In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Fort Worth, TX, USA, 23–28 July 2017; pp. 6130–6133.

- Ferraris, V.; Dobigeon, N.; Wei, Q.; Chabert, M. Robust Fusion of Multiband Images With Different Spatial and Spectral Resolutions for Change Detection. IEEE Trans. Comput. Imaging 2017, 3, 175–186.

- Kraut, S.; Scharf, L.; Butler, R. The adaptive coherence estimator: A uniformly most-powerful-invariant adaptive detection statistic. IEEE Trans. Signal Process. 2005, 53, 427–438.

- Lee, D.; Seung, H. Algorithms for Non-negative Matrix Factorization. Proc. Conf. Adv. Neural Inf. Process. Syst. 2001, 13, 556–562.

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Nascimento, J.; Dias, J. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

- Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral Super-Resolution with Spectral Unmixing Constraints. Remote Sens. 2017, 9, 1196.

- Piper, J. A new dataset for analysis of hyperspectral target detection performance. In Proceedings of the Hyperspectral Imaging and Applications Conference, Coventry, UK, 15–16 October 2014.

- Bernstein, L.S.; Jin, X.; Gregor, B.; Adler-Golden, S.M. Quick atmospheric correction code: Algorithm description and recent upgrades. Opt. Eng. 2012, 51, 111719-1–111719-11.

- Zou, K.H.; O’Malley, A.J.; Mauri, L. Receiver-Operating Characteristic Analysis for Evaluating Diagnostic Tests and Predictive Models. Circulation 2007, 115, 654–657.

- Jiang, J. Hyperspectral-Image-Super-Resolution-Benchmark. Available online: https://github.com/junjun-jiang/Hyperspectral-Image-Super-Resolution-Benchmark (accessed on 2 January 2024).

- Piper, J. Computer implemented method of spectral unmixing. International patent WO2021/148769 A1, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yuen, P.; Piper, J.; Yuen, C.; Cakir, M. Enhanced Hyperspectral Sharpening through Improved Relative Spectral Response Characteristic (R-SRC) Estimation for Long-Range Surveillance Applications. Electronics 2024, 13, 2113. https://doi.org/10.3390/electronics13112113

Yuen P, Piper J, Yuen C, Cakir M. Enhanced Hyperspectral Sharpening through Improved Relative Spectral Response Characteristic (R-SRC) Estimation for Long-Range Surveillance Applications. Electronics. 2024; 13(11):2113. https://doi.org/10.3390/electronics13112113

Chicago/Turabian Style: Yuen, Peter, Jonathan Piper, Catherine Yuen, and Mehmet Cakir. 2024. "Enhanced Hyperspectral Sharpening through Improved Relative Spectral Response Characteristic (R-SRC) Estimation for Long-Range Surveillance Applications" Electronics 13, no. 11: 2113. https://doi.org/10.3390/electronics13112113

APA Style: Yuen, P., Piper, J., Yuen, C., & Cakir, M. (2024). Enhanced Hyperspectral Sharpening through Improved Relative Spectral Response Characteristic (R-SRC) Estimation for Long-Range Surveillance Applications. Electronics, 13(11), 2113. https://doi.org/10.3390/electronics13112113