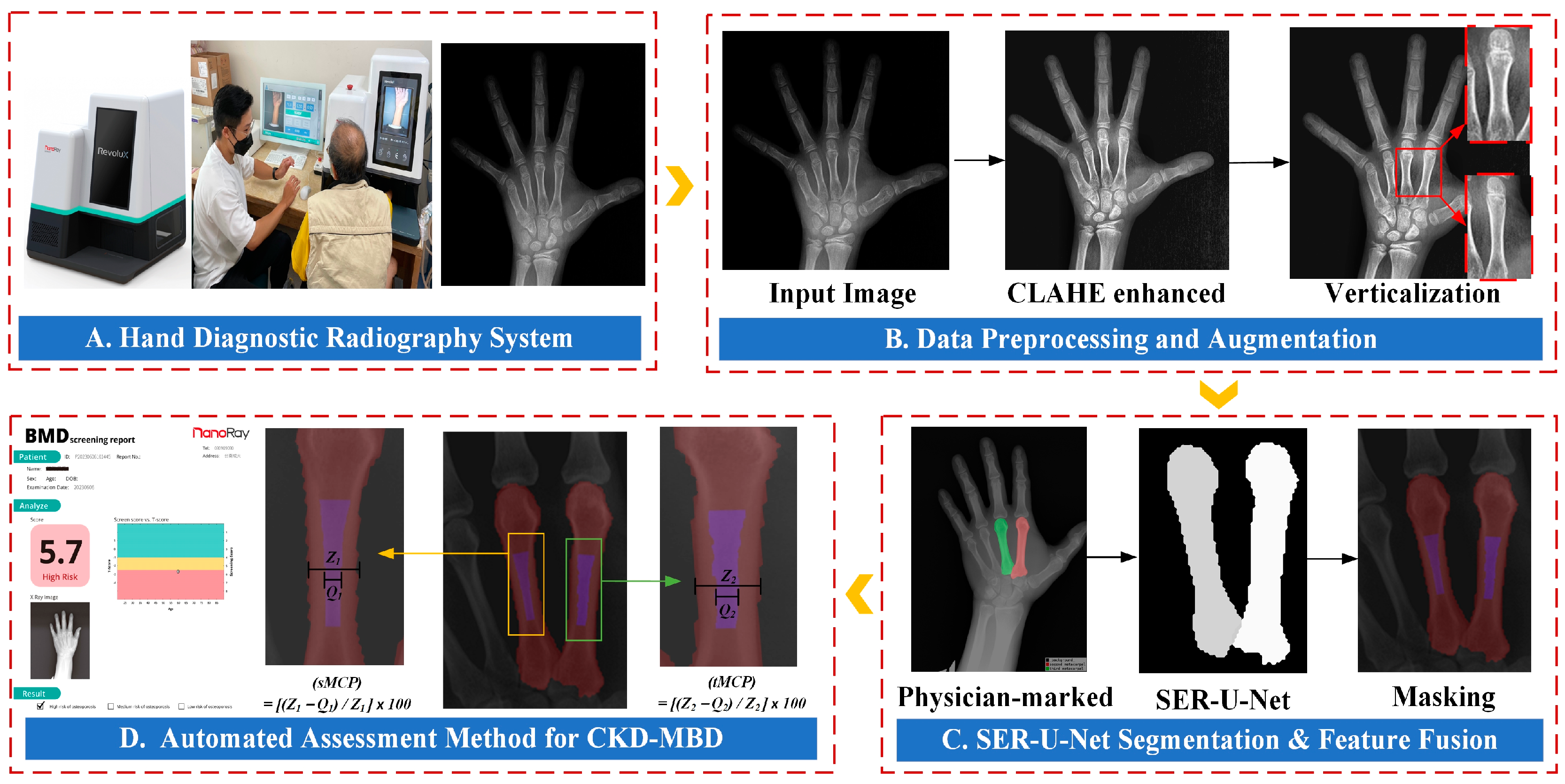

An Automated Assessment Method for Chronic Kidney Disease–Mineral and Bone Disorder (CKD-MBD) Utilizing Metacarpal Cortical Percentage

Abstract

1. Introduction

2. Related Work

- (1)

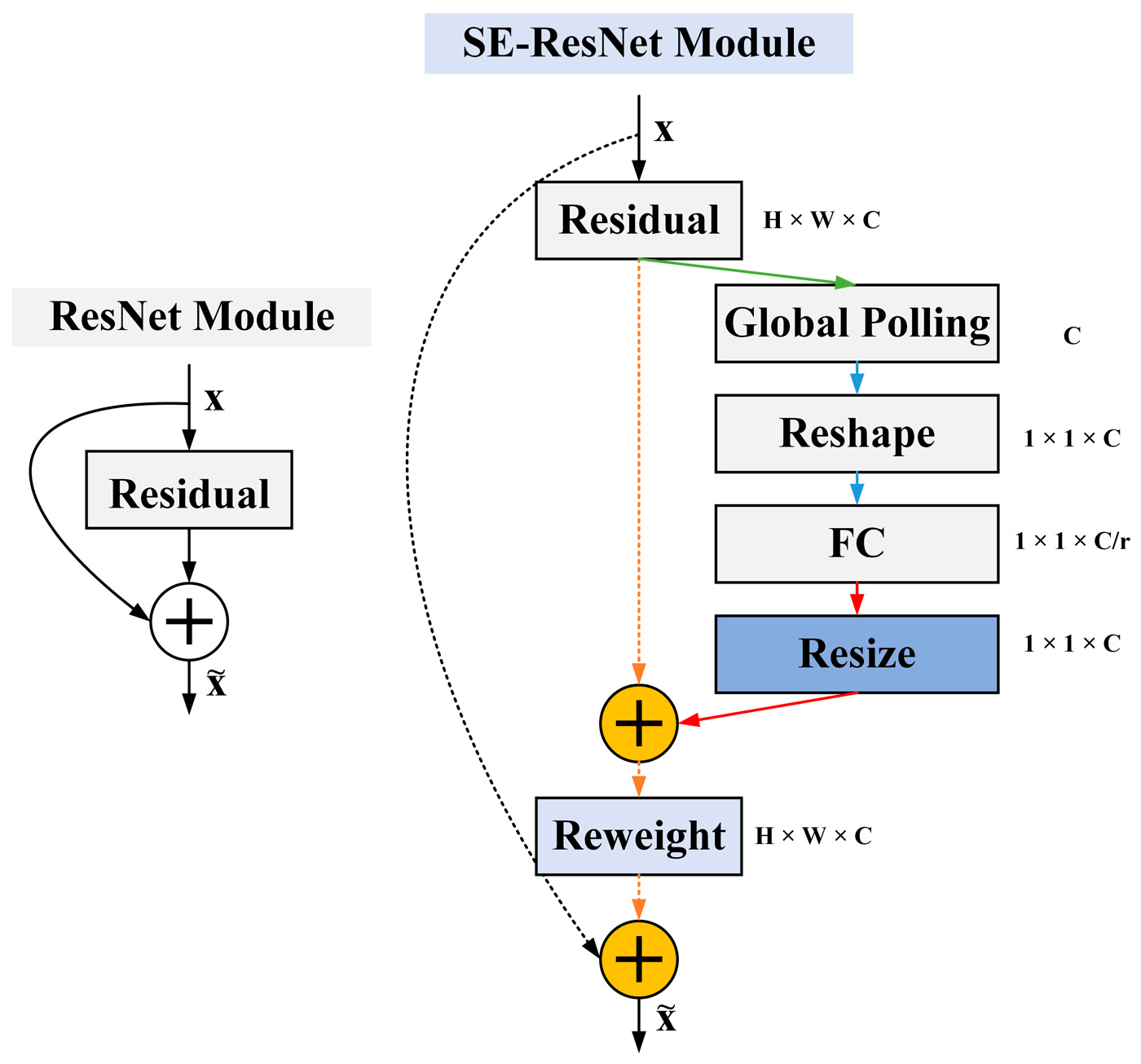

- Demonstrating the use of SE-ResNet for preprocessing hand X-ray images, replacing standard convolution layers with residual structures, and incorporating batch normalization layers to facilitate faster convergence, address the gradient vanishing problem, and improve metacarpal cortical segmentation accuracy by training deeper networks.

- (2)

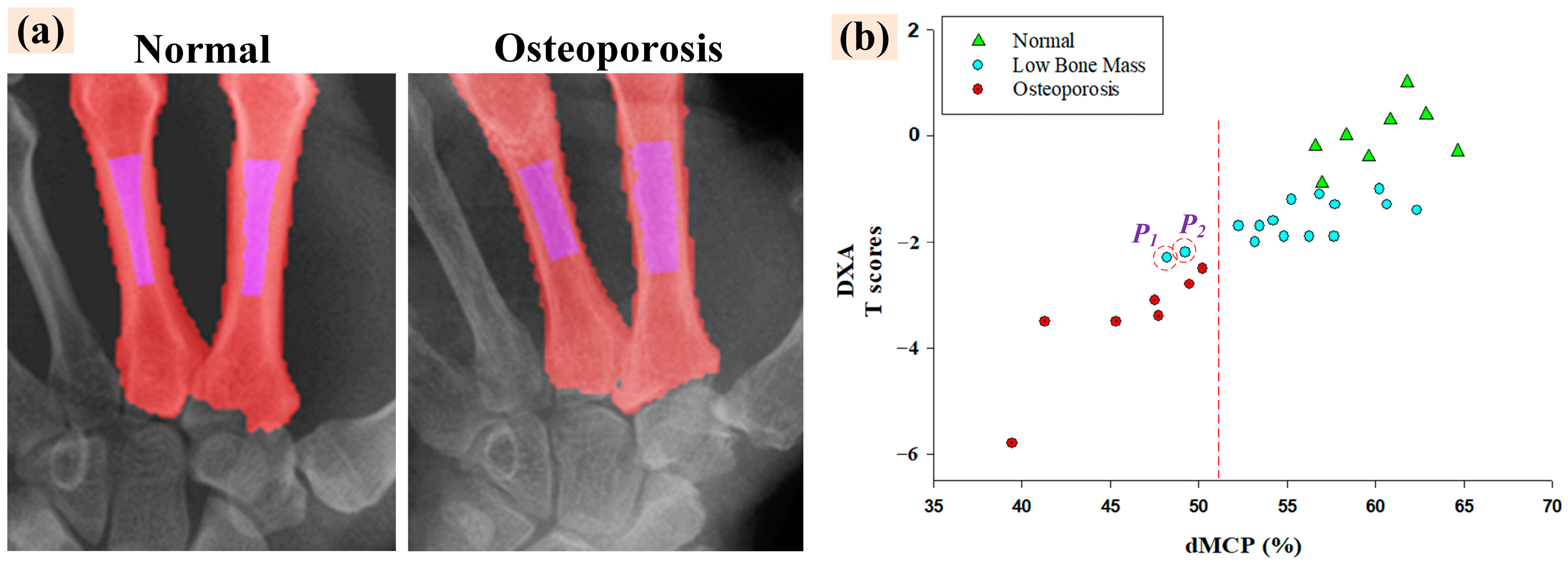

- Demonstrating the accuracy of our system through automated dMCP calculations and assessing its correlation with clinical longitudinal data of kidney disease patients’ hand X-ray images and the DXA dataset.

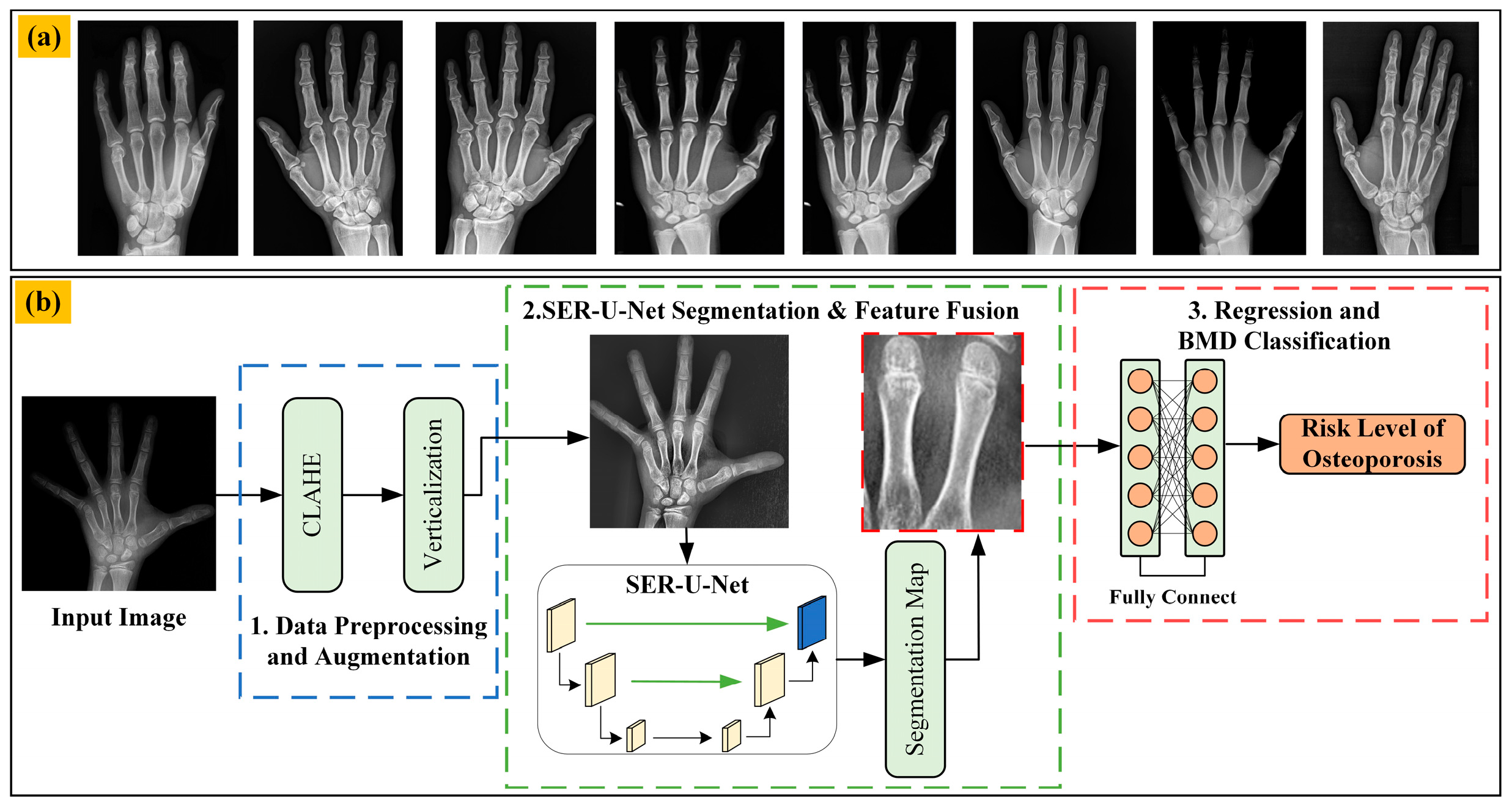

3. Materials and Methods

3.1. Publicly Available Datasets

3.2. Data Preprocessing and Augmentation

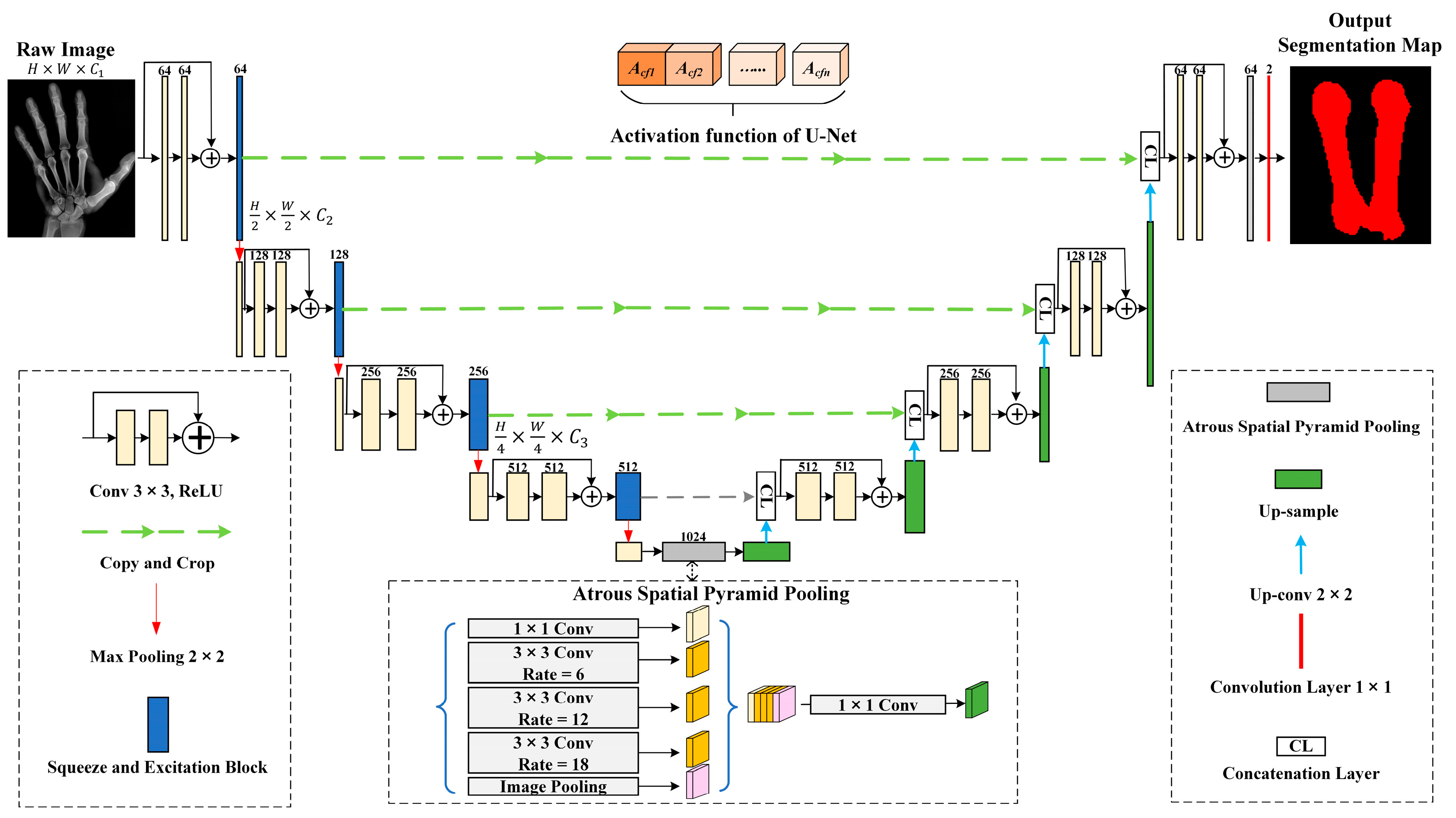

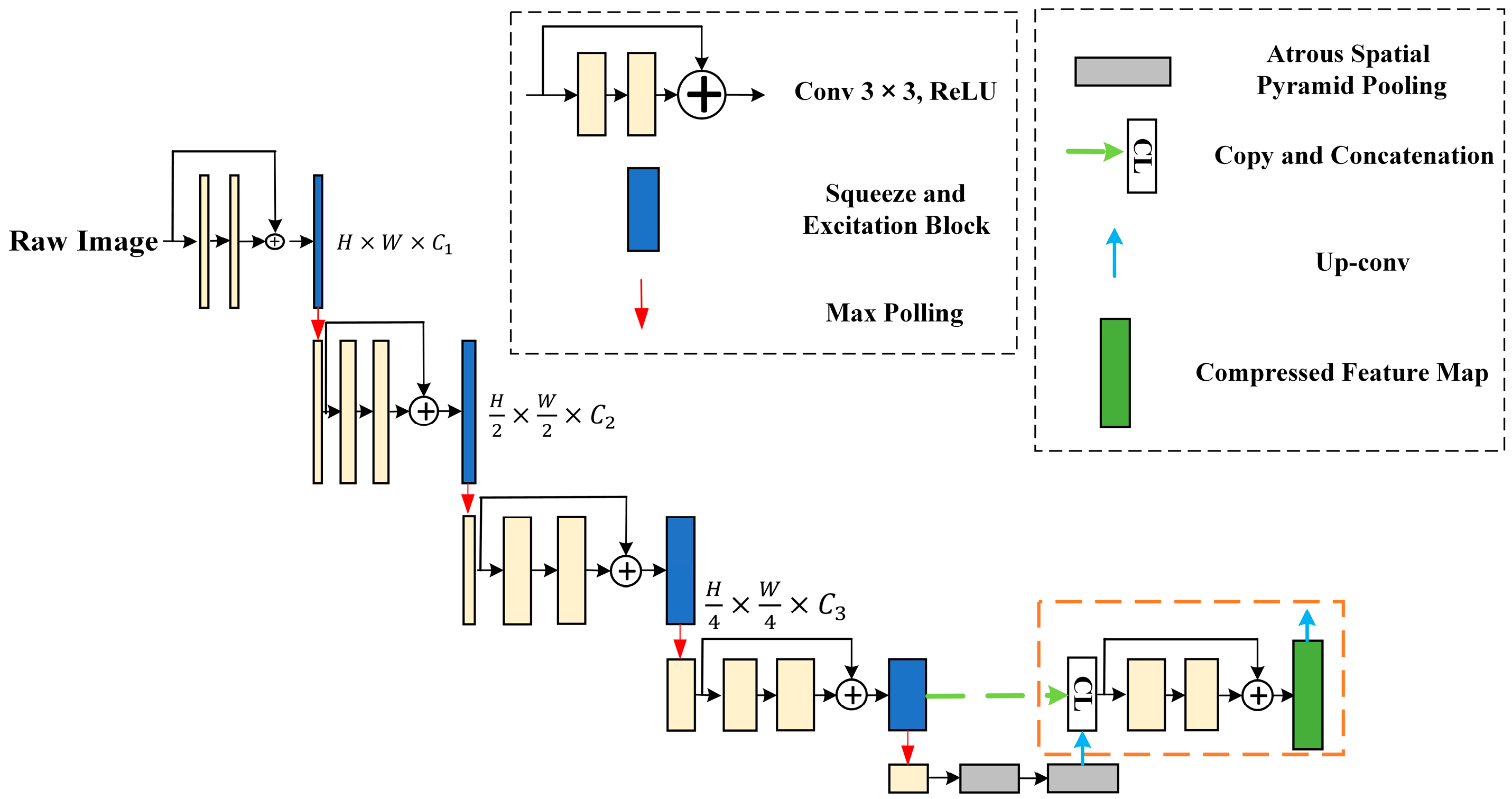

3.3. SER-U-Net Architecture

3.3.1. X-ray Image Compression Module for Feature Extraction

3.3.2. Associating and Learning Features between Channels with SE-ResNet
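Squeeze-and-excitation (Hu et al.) recalibrates channels by pooling each feature map to a single value, passing these values through a small bottleneck network, and rescaling the channels with the resulting sigmoid weights; SE-ResNet applies this step to the residual branch before the identity shortcut is added. The following is a minimal NumPy sketch of one forward pass, not the authors' SER-U-Net code. It assumes 1×1 convolutions in place of the usual 3×3 ones to keep it short, batch normalization with training-mode batch statistics, and hypothetical parameter names (`w_a`, `se_w1`, ...) and initialization.

```python
import numpy as np


def batch_norm(x, gamma, beta, eps=1e-5):
    # Normalize each channel over the batch and spatial axes (training-mode statistics),
    # then apply the learned per-channel scale (gamma) and shift (beta).
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma[None, :, None, None] * x_hat + beta[None, :, None, None]


def conv1x1(x, w):
    # A 1x1 convolution is a per-pixel linear map over channels; w has shape (C_out, C_in).
    return np.einsum("oc,nchw->nohw", w, x)


def squeeze_excite(x, w1, w2):
    # Squeeze: global average pooling gives one descriptor per channel, shape (N, C).
    z = x.mean(axis=(2, 3))
    # Excitation: bottleneck FC (C -> C/r) with ReLU, then FC (C/r -> C) with sigmoid.
    s = np.maximum(z @ w1.T, 0.0)
    s = 1.0 / (1.0 + np.exp(-(s @ w2.T)))
    # Scale: reweight each channel of x by its weight in (0, 1).
    return x * s[:, :, None, None], s


def se_residual_block(x, p):
    # Residual branch: conv -> BN -> ReLU -> conv -> BN -> SE recalibration.
    out = np.maximum(batch_norm(conv1x1(x, p["w_a"]), p["g_a"], p["b_a"]), 0.0)
    out = batch_norm(conv1x1(out, p["w_b"]), p["g_b"], p["b_b"])
    out, _ = squeeze_excite(out, p["se_w1"], p["se_w2"])
    # Identity shortcut: add the block input back, then apply the final ReLU.
    return np.maximum(out + x, 0.0)


def random_params(channels, reduction, rng):
    # Hypothetical small random initialization, for illustration only.
    c, h = channels, channels // reduction
    return {
        "w_a": 0.1 * rng.standard_normal((c, c)), "g_a": np.ones(c), "b_a": np.zeros(c),
        "w_b": 0.1 * rng.standard_normal((c, c)), "g_b": np.ones(c), "b_b": np.zeros(c),
        "se_w1": 0.1 * rng.standard_normal((h, c)), "se_w2": 0.1 * rng.standard_normal((c, h)),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((2, 8, 16, 16))  # (batch, channels, height, width)
    y = se_residual_block(x, random_params(8, 4, rng))
    print(y.shape)  # (2, 8, 16, 16)
```

Because the shortcut is an identity, the block's output keeps the input's shape, so such blocks can be stacked inside an encoder without extra projection layers.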

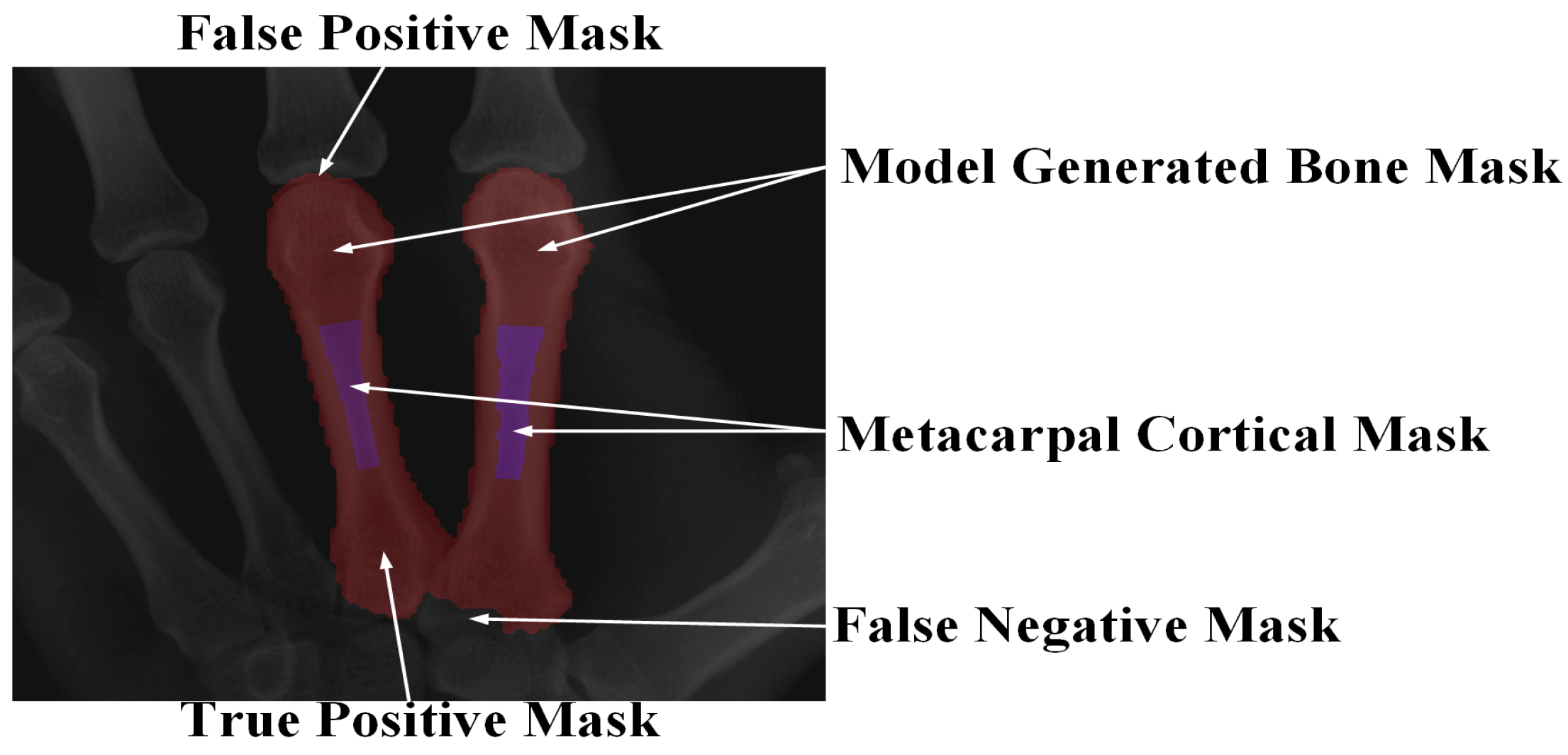

3.4. System Evaluation Indicators

Algorithm 1: dMCP segmentation

Input: L, the training data, consisting of n samples annotated by medical professionals; U, the initial unlabeled data, consisting of m samples.

Output: the trained U-Net models.

Repeat:

- Step 1. Train the U-Net models on L using the loss function defined in Equation (1) to optimize their performance.

- Step 2. Assess the uncertainty among the different U-Net models on the unlabeled data, and select the samples with the highest uncertainty.

- Step 3. Annotate the selected samples and add them to the labeled dataset L.

Until: dMCP segmentation on U is satisfactory.
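Algorithm 1 follows the pattern of committee-based active learning (query by committee): several U-Nets are trained on L, and the unlabeled images on which they disagree most are sent for expert annotation. Below is a minimal sketch, assuming the mean pixel-wise variance of the models' foreground probabilities as the uncertainty measure (the excerpt does not specify the measure). The callbacks `train_fn`, `predict_fn`, `annotate_fn`, and `is_satisfactory` are hypothetical stand-ins for training, inference, physician annotation, and the stopping check on U.

```python
import numpy as np


def committee_disagreement(prob_maps):
    """Uncertainty of one image: mean pixel-wise variance of the foreground
    probabilities predicted by K models, given as an array of shape (K, H, W)."""
    return float(np.var(np.asarray(prob_maps, dtype=float), axis=0).mean())


def select_most_uncertain(predictions, k):
    """Return the ids of the k images on which the models disagree most."""
    scores = {img_id: committee_disagreement(p) for img_id, p in predictions.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


def active_learning_loop(labeled, unlabeled, train_fn, predict_fn, annotate_fn,
                         is_satisfactory, k=2, max_rounds=10):
    """Sketch of the Algorithm 1 loop; all four callbacks are hypothetical."""
    labeled, unlabeled = dict(labeled), dict(unlabeled)
    models = train_fn(labeled)                          # Step 1: train on L
    for _ in range(max_rounds):
        if not unlabeled or is_satisfactory(models, unlabeled):
            break                                       # stopping condition on U
        preds = {i: predict_fn(models, img) for i, img in unlabeled.items()}
        for img_id in select_most_uncertain(preds, k):  # Step 2: most uncertain samples
            labeled[img_id] = annotate_fn(unlabeled.pop(img_id))  # Step 3: add to L
        models = train_fn(labeled)                      # retrain (Step 1 again)
    return models, labeled
```

The `max_rounds` cap is an added safeguard so the loop terminates even if the stopping criterion is never met; the algorithm as stated only stops when segmentation on U is satisfactory.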

3.5. Clinical Trial

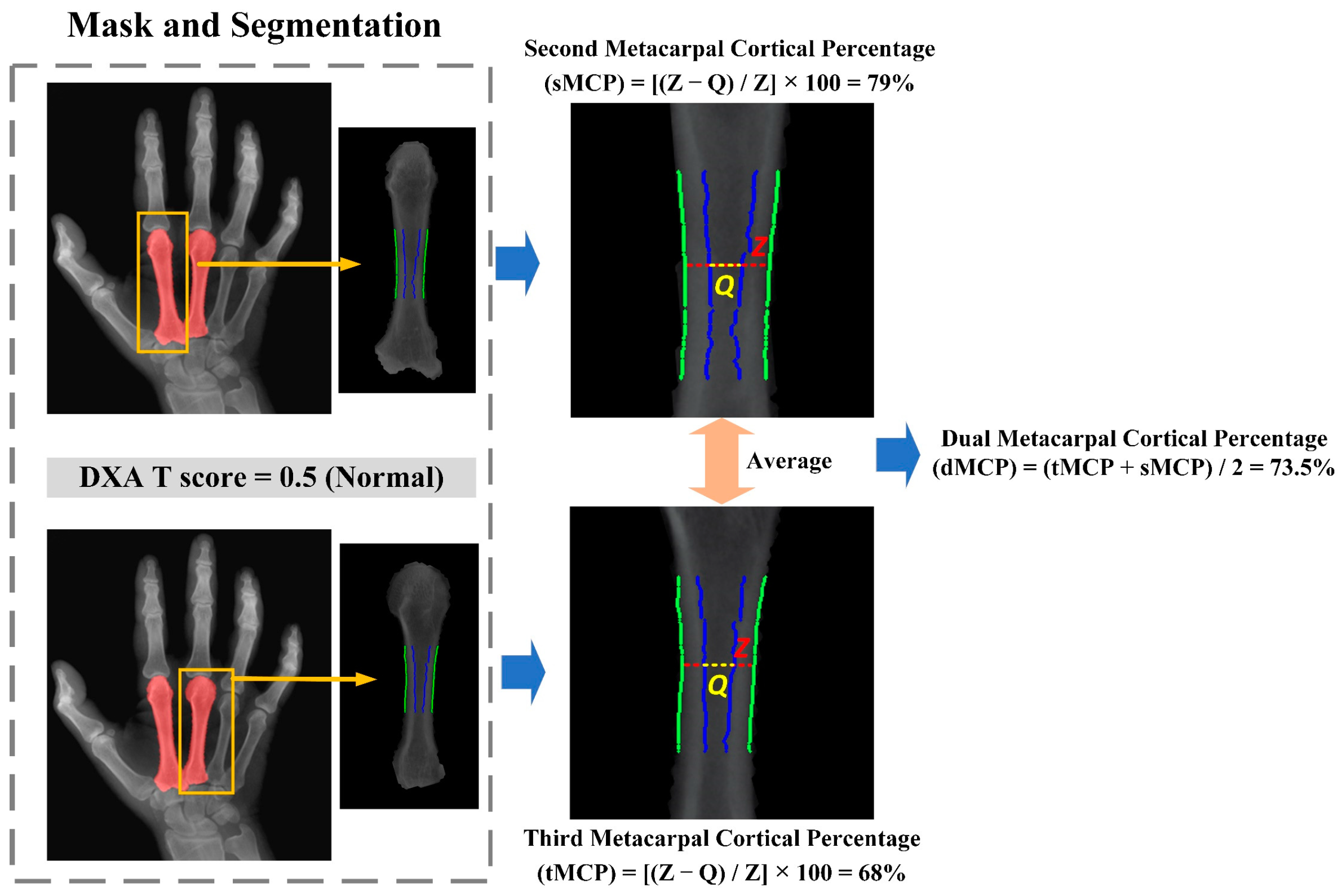

3.6. The Second and Third Metacarpal Cortical Percentage (dMCP) Calculation
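The metacarpal cortical percentage is conventionally the combined cortical thickness at midshaft expressed as a percentage of the total bone width, MCP = 100 × (D − d)/D, where D is the outer diaphyseal width and d is the medullary width. Below is a minimal sketch, assuming that dMCP is the mean of the second- and third-metacarpal values and that widths are read from a midshaft profile of the segmentation mask using a hypothetical label encoding (0 background, 1 cortex, 2 medulla). The excerpt does not state the exact formula or measurement procedure the authors use.

```python
def cortical_percentage(total_width, medullary_width):
    """MCP = 100 * (D - d) / D, with D the outer width and d the medullary width."""
    if total_width <= 0 or not 0 <= medullary_width < total_width:
        raise ValueError("need total_width > 0 and 0 <= medullary_width < total_width")
    return 100.0 * (total_width - medullary_width) / total_width


def widths_from_profile(profile):
    """Measure widths (in pixels) along a line drawn across the shaft at midshaft.

    Hypothetical label encoding: 0 = background, 1 = cortex, 2 = medulla.
    """
    bone = [i for i, label in enumerate(profile) if label in (1, 2)]
    total = bone[-1] - bone[0] + 1 if bone else 0
    medulla = sum(1 for label in profile if label == 2)
    return total, medulla


def dmcp(mc2_profile, mc3_profile):
    """Assumed definition: mean MCP of the second and third metacarpals."""
    values = [cortical_percentage(*widths_from_profile(p)) for p in (mc2_profile, mc3_profile)]
    return sum(values) / len(values)


mc2 = [0, 1, 1, 2, 2, 2, 2, 1, 1, 0]  # D = 8 px, d = 4 px -> MCP 50.0
mc3 = [0, 1, 1, 1, 2, 2, 1, 1, 1, 0]  # D = 8 px, d = 2 px -> MCP 75.0
print(dmcp(mc2, mc3))  # 62.5
```

Because MCP is a ratio of two widths measured on the same image, the pixel spacing cancels out, so no calibration to millimetres is needed.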

4. Results

4.1. Assessing the Proposed Segmentation Model’s Performance in Comparison to Other Models

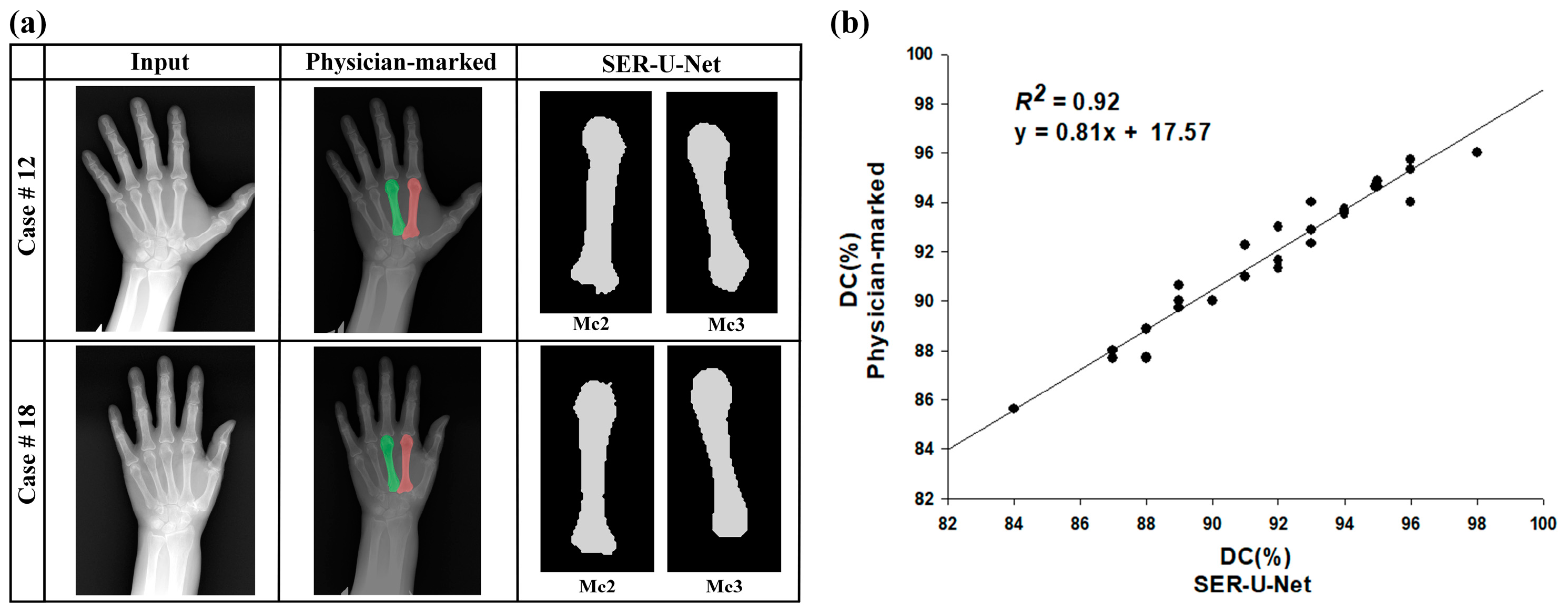

4.2. The SER-U-Net Segmentation Model’s Performance

4.3. Automatic BMD Classification Results for Clinical Renal Dialysis Patients

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Image Size | Time/s | MAE/m |
|---|---|---|
| 128 × 128 | 281 | 12.9 |
| 256 × 256 | 373 | 11.2 |
| 512 × 512 | 764 | 10.2 |
| 1024 × 1024 | 2344 | 9.6 |

| Variable | Men | Women | Total |
|---|---|---|---|
| Age (mean ± SD) | 63.2 ± 8.1 | 67.2 ± 12.9 | 64.2 ± 9.3 |
| Sex (n; %) | 23; 76.7 | 7; 23.3 | 30; 100 |
| Time on dialysis (years) | | | |
| Less than 1 year | 2; 50 | 2; 50 | 4; 13.3 |
| 1–4 years | 11; 78.6 | 3; 21.4 | 14; 46.7 |
| 5 years or more | 10; 83.3 | 2; 16.7 | 12; 40 |
| DXA BMD (T-score) result | | | |
| Normal bone density | 9; 90 | 1; 10 | 10; 33.3 |
| Osteopenia | 12; 92.3 | 1; 7.7 | 13; 43.3 |
| Osteoporosis | 2; 28.6 | 5; 71.4 | 7; 23.3 |

Exclusion criteria were:


| Model | TPR (%) | FPR (%) | FNR (%) | DC (%) | SI (%) |
|---|---|---|---|---|---|
| SER-U-Net | 97.82 | 5.66 | 2.37 | 96.62 | 94.48 |
| U-Net | 98.16 | 15.28 | 0.62 | 88.41 | 92.75 |
| SegNet | 84.25 | 22.16 | 14.33 | 72.28 | 84.39 |
| FCN-8 | 91.75 | 4.31 | 7.50 | 90.37 | 93.06 |
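The pixel-wise indicators in this comparison can be computed from a predicted mask and a physician-marked reference mask. Below is a minimal sketch using the common definitions TPR = TP/(TP + FN), FNR = FN/(TP + FN), FPR = FP/(FP + TN), and DC = 2TP/(2TP + FP + FN). The excerpt does not give the paper's formulas: some segmentation papers normalize false positives by the reference area rather than by FP + TN, and SI is omitted because it is not defined here.

```python
import numpy as np


def _pct(num, den):
    # Return a percentage, or NaN when the denominator is empty.
    return 100.0 * num / den if den else float("nan")


def segmentation_metrics(pred, ref):
    """Pixel-wise metrics for binary masks `pred` (model) and `ref` (physician-marked)."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    tp = int(np.sum(pred & ref))    # foreground pixels found by the model
    fp = int(np.sum(pred & ~ref))   # background pixels labeled as foreground
    fn = int(np.sum(~pred & ref))   # foreground pixels missed by the model
    tn = int(np.sum(~pred & ~ref))  # background pixels correctly left out
    return {
        "TPR": _pct(tp, tp + fn),
        "FNR": _pct(fn, tp + fn),
        "FPR": _pct(fp, fp + tn),
        "DC": _pct(2 * tp, 2 * tp + fp + fn),
    }


pred = [[1, 1, 0, 0], [1, 0, 0, 0]]
ref = [[1, 1, 1, 0], [0, 0, 0, 0]]
print(segmentation_metrics(pred, ref))
```

Under these definitions TPR and FNR always sum to 100% for a given image, which is a quick sanity check when recomputing such tables.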

| Bone | Manual (Physician-Marked) SI (%) | Manual (Physician-Marked) DC (%) | Automatic (SER-U-Net) SI (%) | Automatic (SER-U-Net) DC (%) | p-Value (DC) | p-Value (SI) |
|---|---|---|---|---|---|---|
| 2MC | 95.82 | 96.02 | 96.71 | 97.92 | 0.389 | 0.320 |
| 3MC | 96.03 | 97.71 | 95.91 | 96.83 | 0.304 | 0.249 |

| Method | Mean | SD | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|
| SER-U-Net | 0.91 | 0.033 | 0.90 | 0.93 |
| Physician-marked | 0.92 | 0.027 | 0.91 | 0.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, M.-J.; Tseng, S.-C.; Gau, Y.-C.; Ciou, W.-S. An Automated Assessment Method for Chronic Kidney Disease–Mineral and Bone Disorder (CKD-MBD) Utilizing Metacarpal Cortical Percentage. Electronics 2024, 13, 2389. https://doi.org/10.3390/electronics13122389

Wu M-J, Tseng S-C, Gau Y-C, Ciou W-S. An Automated Assessment Method for Chronic Kidney Disease–Mineral and Bone Disorder (CKD-MBD) Utilizing Metacarpal Cortical Percentage. Electronics. 2024; 13(12):2389. https://doi.org/10.3390/electronics13122389

Chicago/Turabian Style: Wu, Ming-Jui, Shao-Chun Tseng, Yan-Chin Gau, and Wei-Siang Ciou. 2024. "An Automated Assessment Method for Chronic Kidney Disease–Mineral and Bone Disorder (CKD-MBD) Utilizing Metacarpal Cortical Percentage" Electronics 13, no. 12: 2389. https://doi.org/10.3390/electronics13122389

APA Style: Wu, M.-J., Tseng, S.-C., Gau, Y.-C., & Ciou, W.-S. (2024). An Automated Assessment Method for Chronic Kidney Disease–Mineral and Bone Disorder (CKD-MBD) Utilizing Metacarpal Cortical Percentage. Electronics, 13(12), 2389. https://doi.org/10.3390/electronics13122389