Abstract

A robust AR-DSNet (Augmented Reality method based on DSST and SiamFC networks) tracking registration method for complex scenarios is proposed. It aims to improve the ability of AR (Augmented Reality) tracking registration to distinguish the target foreground from semantically similar interference in the background, and to address registration failure caused by drift toward similar targets when scale information is obtained from the predicted target position. First, the pre-trained SiamFC (Siamese Fully-Convolutional) network is used to obtain the response map of a larger search area, and a threshold is set to filter out the initial candidate positions of the target. Then, exploiting the ability of the DSST (Discriminative Scale Space Tracking) filter tracker to update its template online, a new scale filter is trained on multi-scale images collected at each candidate position to infer the change in target scale. Linear interpolation is used to update the correlation coefficients, and the final target position is determined from the difference between two frames. Finally, ORB (Oriented FAST and Rotated BRIEF) feature detection and matching are performed on the image at the accurate target position, and the registration matrix is calculated from the matching relationships to overlay the virtual model onto the real scene, thereby augmenting the real world. Simulation experiments show that in complex scenarios such as similar interference, target occlusion, and local deformation, the proposed AR-DSNet method can complete target registration in AR 3D tracking, maintaining real-time performance while improving the robustness of the AR tracking registration algorithm.

1. Introduction

AR (Augmented Reality) is a core technology for constructing the metaverse. By using computer graphics and computer vision to overlay virtual information onto real scenes, AR achieves virtual–real fusion and human–computer interaction that enhance users' perception of the real world [1]. AR has been applied in many fields, such as healthcare, education, and navigation. It integrates three key technologies: tracking and registration, which combine the virtual and the real; virtual object generation; and real-time interaction. To overlay virtual information on complex real scenes, AR applications must use tracking registration in 3D space to register the virtual information into the scene. Existing robust 6D pose estimation methods for complex scenes can realize AR registration by estimating the pose of the target to be registered [2]. However, these methods often do not fully integrate geometric, orientation, and other multivariate features, which easily leads to registration failure in complex motion scenes with similar interference, target occlusion, and local deformation. AR tracking registration algorithms, by contrast, superimpose virtual information through staged tracking and feature extraction of the target to be registered. The real-time performance and accuracy of the tracking algorithm, together with the robustness of feature extraction on the target, determine the performance of the AR system [3,4]. The main challenge of AR virtual–real fusion is therefore to improve the accuracy of 3D tracking registration while ensuring real-time performance.

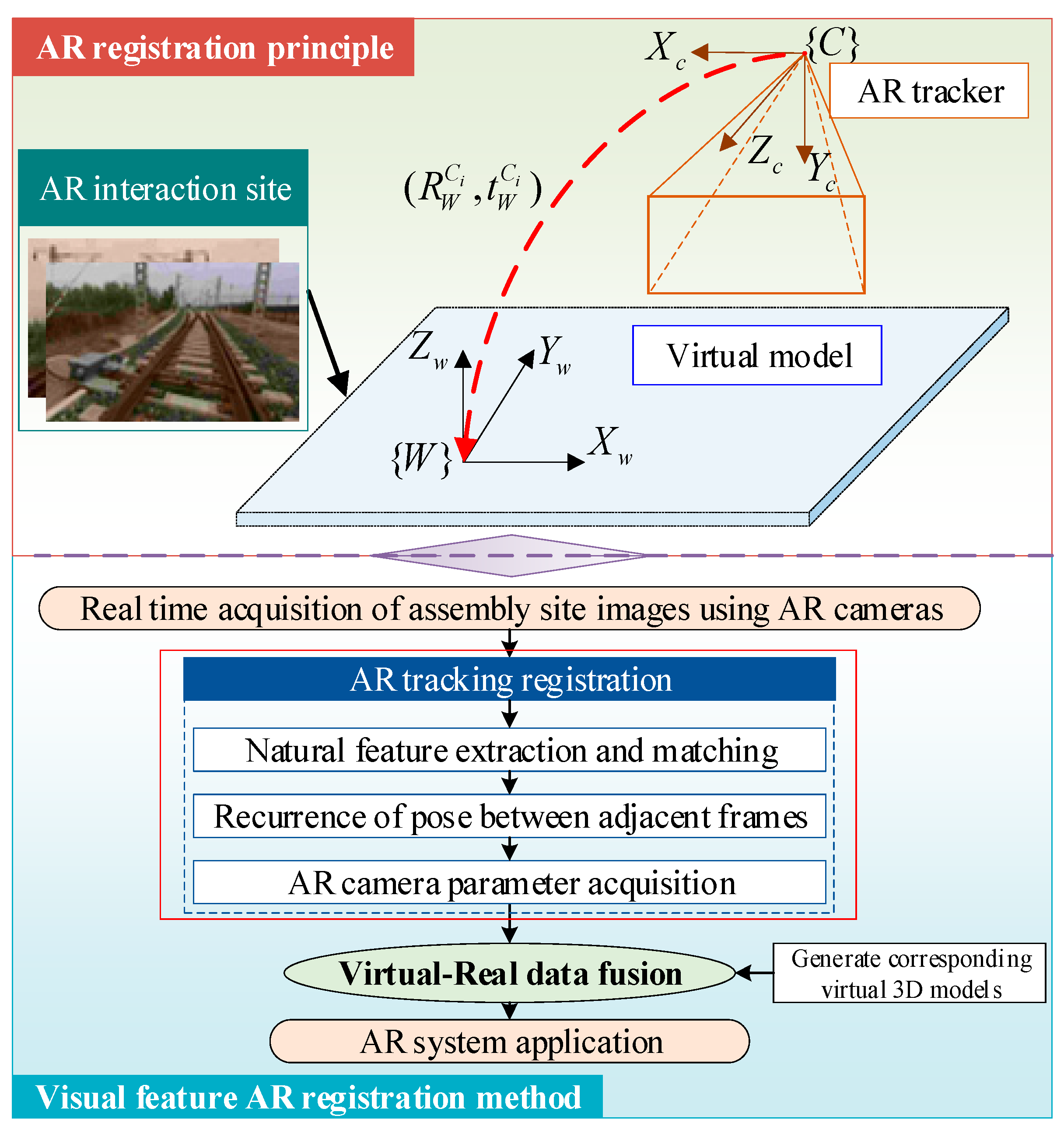

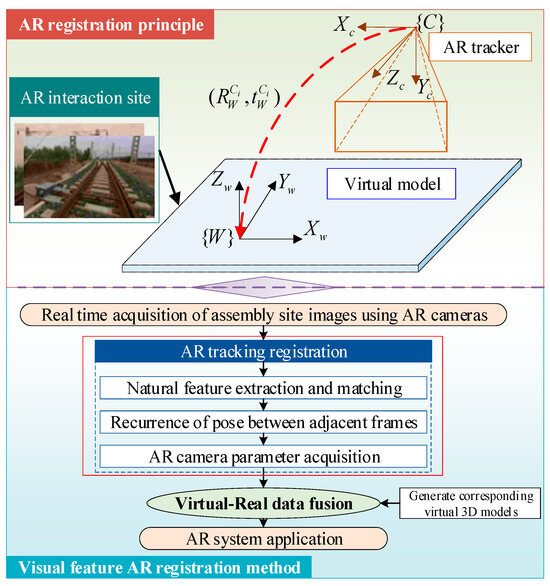

Although the 2D spatial error in AR systems is usually small, it can produce significant errors when mapped into the 3D registration space [5]. A stable and robust 3D tracking registration method is therefore the focus of AR object perception and understanding [6]. Figure 1 shows the principle of the visual-feature-based registration methods commonly used in existing AR systems. The AR tracker extracts visual features from the captured image of the real scene, constructs a mapping between these image features and the corresponding real-scene features, and solves the 2D–3D registration relationship of the AR camera relative to the real scene, thereby overlaying and integrating virtual information into the real scene.

Figure 1.

AR registration principle.
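As a minimal sketch of the 2D–3D relationship in Figure 1, the NumPy code below projects the vertices of a virtual cube into the image with the standard pinhole model x ∼ K[R | t]X. The intrinsic matrix K, the pose (R, t), and the cube size are hypothetical values chosen for illustration; in an AR system the pose would come from the registration step rather than being fixed by hand.

```python
import numpy as np

def project_points(K, R, t, X_world):
    """Project N x 3 world points to N x 2 pixel coordinates using x ~ K [R | t] X."""
    X_cam = X_world @ R.T + t              # world frame -> camera frame
    x_hom = X_cam @ K.T                    # camera frame -> homogeneous pixel coordinates
    return x_hom[:, :2] / x_hom[:, 2:3]    # perspective division

# Hypothetical camera intrinsics: 800 px focal length, principal point (320, 240).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                      # hypothetical pose: camera axes aligned with the world axes
t = np.array([0.0, 0.0, 5.0])      # target placed 5 units in front of the camera

# Eight vertices of a unit virtual cube centred at the world origin.
cube = np.array([[x, y, z] for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)])
pixels = project_points(K, R, t, cube)
print(pixels.round(1))
```

Rendering the virtual model then amounts to drawing the cube edges between these projected vertices, which is why an error in the estimated pose shows up directly as an offset of the overlay.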

The 2D–3D registration method in AR space determines the performance of an AR system. By solving for the orientation of assembly objects in AR space, the system can perceive the tracked target positions in real time, and various virtual auxiliary information (3D models, instructions, etc.) can be accurately overlaid on, integrated into, and rendered in the real scene [7]. In this study, DSST (Discriminative Scale Space Tracking) filters are integrated to overcome the effect of similar interference and scale change on the tracking accuracy of SiamFC (Siamese Fully-Convolutional) networks. On this basis, a robust AR-DSNet (Augmented Reality method based on DSST and SiamFC networks) tracking registration method for complex scenarios is proposed. It improves the accuracy of AR tracking registration and addresses the target tracking failures that current AR 3D tracking registration algorithms suffer in complex moving scenes with similar interference, target occlusion, and local deformation of the target. The proposed AR-DSNet method achieves better real-time performance and stability in 3D tracking registration. The main contributions of this study are as follows:

- Based on the distribution of local maxima on the SiamFC tracking response map, a threshold is set to filter potential target positions in advance, so that better initial target positions are passed to the DSST filter, which helps to reduce boundary effects in the presence of similar targets.

- In the stage of predicting the scale of the AR target to be registered, multi-scale images are collected at the target location to form independent samples for training the scale filter. The target scale is then inferred from the response values of the scale filter on these samples, so that scale changes of the target to be registered are tracked adaptively.

- The correlation filter coefficients are updated by linear interpolation, and the target is relocalized to obtain its accurate position. The ORB (Oriented FAST and Rotated BRIEF) algorithm is then applied to the accurately tracked target for feature detection and matching, yielding the registration matrix used to overlay virtual information and augment the real world.

2. Literature Review

In recent years, research on AR 3D tracking registration methods has progressed rapidly. Some existing algorithms first track the target to be registered, predict predefined key points on the image, and then use RANSAC (Random Sample Consensus) and PnP (Perspective-n-Point) to establish the 2D–3D pose association between the image and the assembly object, achieving AR virtual–real fusion and registration [8,9]. 3D tracking registration is the process of obtaining position information of tracked target objects in 3D space and locating virtual objects in the real world accordingly [10]. Yuan et al. proposed a markerless AR registration method based on projective reconstruction and the KLT (Kanade–Lucas–Tomasi) tracker [11]. Although the KLT tracker is a useful natural-feature tracking method, all tracked features may be lost if the camera suddenly moves rapidly, causing the system to fail. The method also does not select robust feature points for tracking and is susceptible to mismatches, so registration becomes ineffective under significant changes in lighting and viewpoint. To address the accuracy and real-time performance of AR tracking registration in complex scenarios, Bang et al. combined the ORB algorithm with optical flow to achieve frame-to-frame pose tracking [12]. Their method tracks the target well under occlusion and lighting changes, but the target model must be provided in advance, and reflective objects or objects with unclear textures cannot be modeled. Existing AR systems therefore mainly adopt registration methods based on visual features [13], which track and determine the position of the augmented objects in the real scene by aligning 2D–3D coordinates in AR space and then display the scene after virtual–real fusion.

At present, the mainstream tracking algorithms in AR 3D tracking registration are based mainly on correlation filtering or deep learning. Among filtering-based algorithms, kernelized correlation filter tracking [14] uses multi-channel features in a CF (correlation filter)-based tracker and achieves good speed and accuracy, but its ability to adapt to target scale changes needs improvement. DSST [15] introduces a scale filter to respond to target scale changes and is highly portable. However, because its scale pool is limited, it cannot track accurately when the target moves rapidly. The LCT (Long-term Correlation Tracking) algorithm, also based on correlation filtering, combines target context with scale transformation, but its accuracy is unsatisfactory in complex situations where the target is occluded or out of view, which reduces the stability and robustness of the AR system. Among deep learning algorithms, HCF (Hierarchical Convolutional Features) integrates deep features into correlation filters to improve tracking performance, but it also struggles with scale changes and is less robust when the target undergoes large scale changes. SiamFC, a tracker based on Siamese networks, uses a fully convolutional structure for similarity prediction, allowing a CNN to act as an end-to-end tracker. At the same time, tracking methods that integrate CF with Siamese networks began to emerge. CFnet [16] and DCFnet [17] are two representative algorithms; both interpret correlation filters as differentiable layers in a deep neural network and train the network to learn the features best suited to the CF, achieving good tracking performance. However, boundary effects limit the performance improvement.
The above methods based on correlation filtering and Siamese networks thus perform well in target tracking, but each has different strengths in accuracy and efficiency. Trackers based on Siamese networks are highly accurate, but because they rely on semantic feature representations and lack model updates, they tend to drift toward regions containing similar targets. Trackers based on the correlation filtering framework are fast, but because they use a single type of feature (HOG, CN, etc.) and do not handle target occlusion, they lack robustness to strong background edges and target deformation [18]. To give AR systems sustained stability and robust 3D tracking and registration capabilities, constructing an AR tracking and registration model for complex scenes is therefore a practical requirement for deploying AR systems in fields such as intelligent manufacturing, robotics, and autonomous driving.

3. Methodology

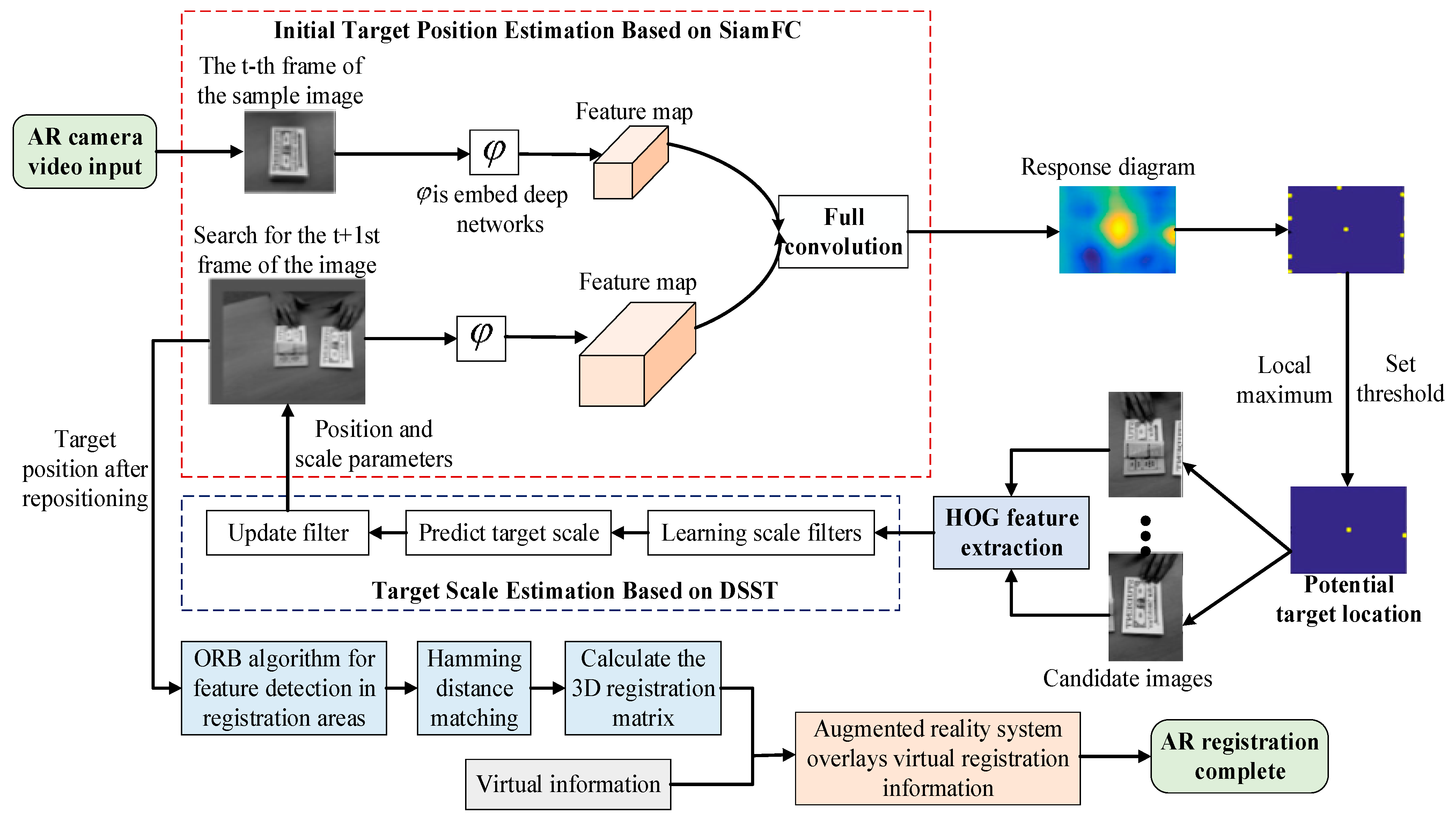

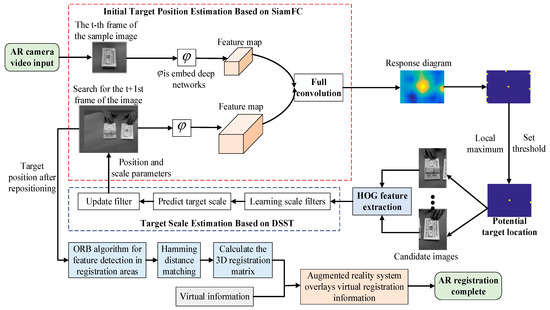

The AR tracking registration method collects data from complex real-world scenes, performs real-time tracking and target-area feature detection on the AR object to be registered, and then solves for the 2D–3D spatial transformation matrix between the AR device and the target. The virtual information is accurately superimposed on the target area in the real scene, finally realizing an interactive AR virtual–real fusion application system. Following this tracking registration workflow, this article proposes a robust AR-DSNet tracking registration method for complex scenarios that consists of four modules: initial target position inference based on SiamFC, target scale inference based on DSST, target relocalization, and ORB feature matching and registration. Interference-aware tracking is achieved by combining the end-to-end target tracking of SiamFC with the online model updating of the DSST filter tracker, and HOG features are used to compensate for the shortcomings of the deep features in SiamFC, yielding accurate target tracking. The registration matrix is calculated from ORB feature matches, and AR 3D tracking registration is completed by rendering a virtual model generated with OpenGL. The overall framework of the proposed AR-DSNet is shown in Figure 2.

Figure 2.

Overall structure of AR-DSNet.

3.1. AR-DSNet Initial Target Position Inference Based on SiamFC

3.1.1. SiamFC Network Tracking

SiamFC treats tracking as similarity learning and trains a deep Siamese network offline. During online tracking, the offline-trained Siamese network matches the features of the search image against those of the exemplar, and the candidate region most similar to the target marked in the first frame is found among many candidates. The two feature maps are convolved to generate a correlation response score map, and the target is located at the position with the highest response value. Because its implementation principle and network structure are relatively simple and of low complexity, SiamFC has good real-time performance.

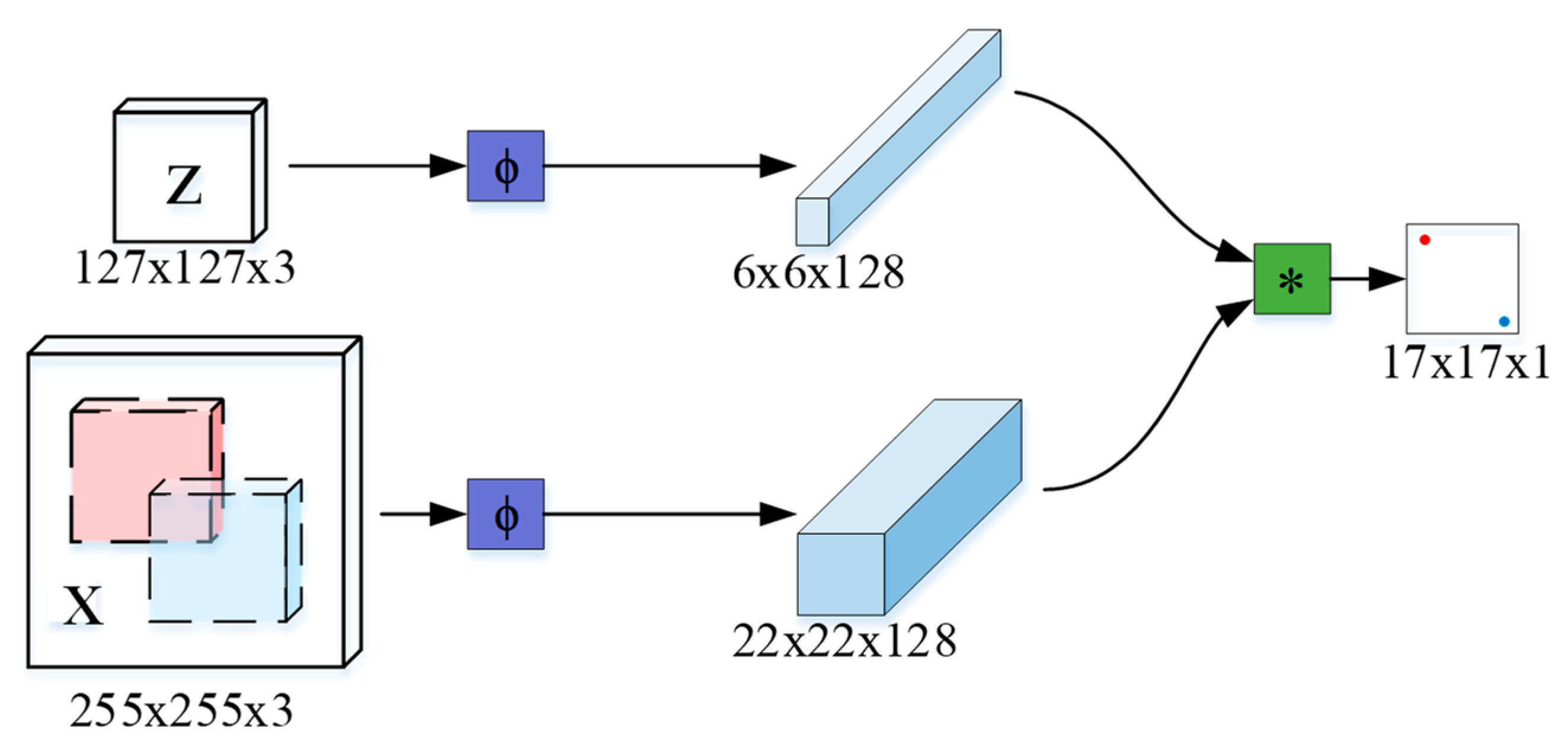

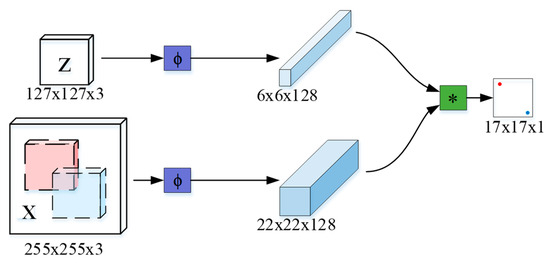

The SiamFC network structure is shown in Figure 3. It contains two CNN branches that share the same parameters and extract convolutional features from the exemplar image and the search image. The exemplar image used as the target template is typically the ground-truth region in the first frame, and the search image is cropped from the next frame to be tracked. The key to the SiamFC algorithm is to calculate the similarity between the features of the two branches using the similarity function in Formula (1) and to classify the similarity score at each position as high or low. The position with the highest score gives the target location.

$$f(z, x) = \varphi(z) \ast \varphi(x) + b\mathbb{1} \tag{1}$$

where $z$ is the template (exemplar) image; $x$ is the search image; $\varphi$ denotes feature extraction by the embedding deep network; $\ast$ denotes the convolution (cross-correlation) operation, which finds the part of $\varphi(x)$ closest to $\varphi(z)$; $b\mathbb{1}$ denotes the bias value added at each position of the score map; and $f(z, x)$ is the similarity score between $z$ and $x$. During tracking, the response score of the search image is calculated around the center of the target position in the previous frame, and the target is most likely located at the position with the highest response value.

Figure 3.

SiamFC network structure.
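Formula (1) can be illustrated with a small NumPy sketch that slides the exemplar embedding over the search embedding and sums the channel-wise products at every offset, which is the dense cross-correlation SiamFC relies on. The random arrays stand in for the outputs of the trained embedding network φ, and the 6×6 exemplar and 22×22 search sizes (giving a 17×17 score map) are illustrative assumptions; a practical implementation would run this as a convolution layer on the GPU.

```python
import numpy as np

def siamfc_score_map(phi_z, phi_x, b=0.0):
    """Dense cross-correlation f(z, x) = phi(z) * phi(x) + b.

    phi_z: exemplar embedding, shape (C, h, w)
    phi_x: search embedding,   shape (C, H, W) with H >= h and W >= w
    Returns a score map of shape (H - h + 1, W - w + 1).
    """
    _, h, w = phi_z.shape
    _, H, W = phi_x.shape
    scores = np.empty((H - h + 1, W - w + 1))
    for i in range(H - h + 1):
        for j in range(W - w + 1):
            # Inner product between the exemplar and one search window, summed over channels.
            scores[i, j] = np.sum(phi_z * phi_x[:, i:i + h, j:j + w])
    return scores + b  # the same bias b is added at every position

rng = np.random.default_rng(0)
phi_x = rng.standard_normal((8, 22, 22))   # stand-in for the search-image embedding
phi_z = phi_x[:, 5:11, 9:15].copy()        # exemplar equal to one window of the search features
score = siamfc_score_map(phi_z, phi_x)
peak = np.unravel_index(np.argmax(score), score.shape)
print(score.shape, peak)
```

Because the exemplar is copied from the window at offset (5, 9), that offset gives the largest inner product, mirroring how the highest score marks the target.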

During offline training, SiamFC uses a logistic loss and labels each position of the response score map as a positive or negative sample according to its distance from the target center, using Formula (2):

$$y[u] = \begin{cases} +1, & k\lVert u - c \rVert \le R \\ -1, & \text{otherwise} \end{cases} \tag{2}$$

where $k$ is the total stride of the network, $c$ is the center of the target, $u$ ranges over all positions in the response score map, and $R$ is the radius within which positions are labeled positive. In the score map generated by $f(z, x)$ in Figure 3, the positive samples (red dots) and negative samples (blue dots) correspond to the respective rectangular areas in the search image $x$.

After sample extraction and labeling, SiamFC trains the network on positive and negative image pairs [19], and the logistic loss of a single point is defined as follows:

$$\ell(y, v) = \log\left(1 + \exp(-yv)\right) \tag{3}$$

where $y \in \{+1, -1\}$ is the ground-truth label of the sample and $v$ is the response score at each search location $u \in D$. The loss over the whole response score map is the mean logistic loss of all points:

$$L(y, v) = \frac{1}{\lvert D \rvert} \sum_{u \in D} \ell\left(y[u], v[u]\right) \tag{4}$$

where $D$ is the final response score map and $\lvert D \rvert$ is the number of values in it. The trained convolution parameters $\theta$ are obtained by minimizing Formula (4) with stochastic gradient descent (SGD):

$$\arg\min_{\theta} \; \mathbb{E}_{(z, x, y)} \, L\left(y, f(z, x; \theta)\right) \tag{5}$$
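The labeling rule of Formula (2) and the mean logistic loss of Formulas (3) and (4) can be sketched in NumPy as follows. The 17×17 map size, stride k = 8, and radius R = 16 are hypothetical values for illustration, and the gradient step of Formula (5) is left to a deep learning framework.

```python
import numpy as np

def siamfc_labels(size, center, radius, stride):
    """Formula (2): +1 where stride * ||u - c|| <= radius, otherwise -1."""
    rows, cols = np.indices((size, size))
    dist = np.hypot(rows - center[0], cols - center[1])   # distance on the score-map grid
    return np.where(stride * dist <= radius, 1.0, -1.0)

def mean_logistic_loss(labels, scores):
    """Formulas (3)-(4): mean over the map of log(1 + exp(-y * v))."""
    # np.logaddexp(0, a) = log(1 + exp(a)), computed without overflow for large |a|.
    return float(np.mean(np.logaddexp(0.0, -labels * scores)))

labels = siamfc_labels(size=17, center=(8, 8), radius=16, stride=8)
print(int((labels > 0).sum()), "positive positions")
print(mean_logistic_loss(labels, np.zeros_like(labels)))   # uninformative scores
print(mean_logistic_loss(labels, 5.0 * labels))             # scores agreeing with the labels
```

With these settings only positions within two grid cells of the center are positive, so the labels are heavily imbalanced; this is why SiamFC-style training typically reweights the positive and negative terms, a refinement omitted here.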

3.1.2. Filtering Potential Target Locations

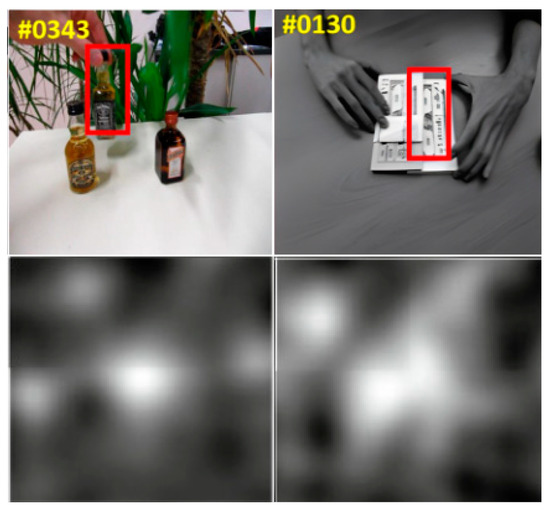

Using a larger search area in SiamFC improves tracking accuracy but also makes it easier to introduce similar interference. Experimental observations show that when other objects of the same category appear in the search area, the SiamFC score map responds strongly to all of them, and the deep features of the sequence provide no effective information for distinguishing the interfering targets, which leads to tracking failure, as shown in Figure 4.

Figure 4.

Response diagram corresponding to SiamFC tracking results.

In this article, local maxima that are clearly not the target position are suppressed in advance to improve tracking speed. All local maxima on the response map are first identified as initial potential target locations, and a threshold function then keeps only those whose response values exceed the threshold. Owing to temporal consistency, a local maximum closer to the center of the response map is more likely to be a potential target location. Following this criterion, a threshold is designed for each position of the response map as an inverted Gaussian: the underlying Gaussian peaks at the center of the map, so the threshold is lowest there and rises with distance from the center. With the response score map of each frame represented as a matrix and its central position taken as the reference, the threshold at each position is designed as follows:

where limiting the number of candidate objects reduces the computational burden while still reflecting how often interference occurs in the image. Experience shows that the parameter settings affect tracking performance. The maximum number of candidates is set to 3 for each response map, and the kernel width of the Gaussian function, as used for generating Gaussian labels in KCF (Kernel Correlation Filter), is set to 0.1. If fewer positions than this limit pass the threshold, all selected positions are returned as final candidates; otherwise, the positions with the highest response values are kept as candidates, thereby filtering out the potential target positions.
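Because the threshold formula is not reproduced here, the following NumPy sketch assumes one possible form of the inverted-Gaussian threshold: t_min + (t_max − t_min)(1 − exp(−d²/(2σ²))), where d is the distance from the map center normalized by the map size. The values t_min = 0.3 and t_max = 0.9 are hypothetical; σ = 0.1 mirrors the kernel width quoted above and the cap of 3 mirrors the candidate limit. The sketch keeps local maxima that exceed the threshold and returns at most three of them in descending order of response.

```python
import numpy as np

def local_maxima(score):
    """Boolean mask of positions not smaller than any of their 8 neighbours."""
    padded = np.pad(score, 1, mode="constant", constant_values=-np.inf)
    H, W = score.shape
    neighbours = [padded[1 + di:1 + di + H, 1 + dj:1 + dj + W]
                  for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
    return np.all(score >= np.stack(neighbours), axis=0)

def inverted_gaussian_threshold(shape, t_min, t_max, sigma):
    """Hypothetical threshold: t_min at the map centre, rising towards t_max far from it."""
    H, W = shape
    rows, cols = np.indices(shape)
    # Squared distance to the centre, normalised by the map size so sigma is size-independent.
    d2 = ((rows - (H - 1) / 2) / H) ** 2 + ((cols - (W - 1) / 2) / W) ** 2
    return t_min + (t_max - t_min) * (1.0 - np.exp(-d2 / (2.0 * sigma ** 2)))

def filter_candidates(score, max_candidates=3, t_min=0.3, t_max=0.9, sigma=0.1):
    """Return up to max_candidates (row, col) local maxima whose score exceeds the threshold."""
    threshold = inverted_gaussian_threshold(score.shape, t_min, t_max, sigma)
    rows, cols = np.nonzero(local_maxima(score) & (score > threshold))
    order = np.argsort(-score[rows, cols])[:max_candidates]   # strongest responses first
    return [(int(rows[i]), int(cols[i])) for i in order]

score = np.zeros((17, 17))
score[8, 8] = 0.8     # peak at the centre: threshold 0.3, kept
score[8, 10] = 0.7    # nearby distractor: threshold about 0.60, kept
score[3, 3] = 0.85    # strong response far from the centre: threshold about 0.90, rejected
print(filter_candidates(score))
```

The far-away response at (3, 3) is discarded even though it is the largest value on the map, which is the intended effect of a center-favoring threshold under temporal consistency.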

3.2. AR-DSNet Target Scale Inference Based on DSST

The relatively accurate candidate target positions filtered by SiamFC are passed to the DSST tracker for scale inference, which greatly reduces the boundary effect of filter-based trackers. In the scale prediction stage, multi-scale images are collected at the target location to form samples for independently training the scale filter, and the target scale is inferred from the responses of the scale filter to these samples [20]. Because the tracking speed of the proposed method depends heavily on the chosen correlation filter tracker, an efficient correlation filter tracker is required. This article focuses on two basic filter trackers, KCF and DSST. KCF, however, infers only the target position and ignores the target size, so DSST is chosen as the baseline tracker and its HOG feature description is adopted.

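DSST is an accurate scale inference method in visual tracking. It first extracts multi-dimensional features of the image block containing the target and then constructs the optimal filter by minimizing

$$\varepsilon = \left\lVert \sum_{l=1}^{d} h^l \ast f^l - g \right\rVert^2 + \lambda \sum_{l=1}^{d} \left\lVert h^l \right\rVert^2 \tag{7}$$

where $h^l$, $f^l$, and $g$ are matrices (the filter, the extracted feature, and the desired Gaussian-shaped output, respectively), $l \in \{1, \dots, d\}$ denotes a feature dimension, the regularization coefficient $\lambda$ avoids the influence of zero-frequency components on the result, and $\ast$ denotes the convolution operation. A filter is then trained according to Formula (8), where $F$ and $G$ are the discrete Fourier transforms (DFT) of $f$ and $g$, and $\bar{F}$ and $\bar{G}$ are their complex conjugates:

$$H^l = \frac{\bar{G} F^l}{\sum_{k=1}^{d} \bar{F}^k F^k + \lambda} \tag{8}$$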

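where $k \in \{1, \dots, d\}$ also indexes the feature dimensions. $H^l$ is split into a numerator $A^l$ and a denominator $B$, which are updated iteratively as shown in Formula (9), where $\eta$ is the learning rate, set to 0.025:

$$A_t^l = (1 - \eta) A_{t-1}^l + \eta \bar{G}_t F_t^l, \qquad B_t = (1 - \eta) B_{t-1} + \eta \sum_{k=1}^{d} \bar{F}_t^k F_t^k \tag{9}$$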

For a new frame, the extracted image feature is $z$, and $Z$ is obtained by applying the 2D DFT to each feature dimension. The response score $y$ of $z$ is calculated according to Formula (10), and the new target position corresponds to its maximum value:

$$y = \mathcal{F}^{-1}\left\{ \frac{\sum_{l=1}^{d} \bar{A}^l Z^l}{B + \lambda} \right\} \tag{10}$$

where $\mathcal{F}^{-1}$ denotes the inverse DFT.
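A minimal NumPy sketch of Formulas (8) to (10) for a one-dimensional scale filter is given below: each row of the feature matrix is one feature dimension sampled across S scales, the numerator and denominator are interpolated with a learning rate of 0.025, and the response is read back through the inverse FFT. The regularization value 0.01, the Gaussian width, and the random features are assumptions for illustration, and the windowing used in practical DSST implementations is omitted.

```python
import numpy as np

class ScaleFilter:
    """Minimal 1D DSST-style correlation filter over S scale samples."""

    def __init__(self, num_scales, lam=1e-2, eta=0.025, sigma=1.0):
        self.lam, self.eta = lam, eta
        n = np.arange(num_scales)
        g = np.exp(-0.5 * ((n - num_scales // 2) / sigma) ** 2)
        self.G = np.fft.fft(g)   # DFT of the desired output, peaking at the current (middle) scale
        self.A = None            # numerator, one row per feature dimension
        self.B = None            # denominator, shared by all feature dimensions

    def update(self, f):
        """f: (d, S) features, each row a 1D signal over the S scale samples."""
        F = np.fft.fft(f, axis=1)
        A_new = np.conj(self.G)[None, :] * F               # conj(G) * F^l
        B_new = np.sum(np.conj(F) * F, axis=0).real        # sum_k conj(F^k) * F^k
        if self.A is None:       # first frame: initialise the model
            self.A, self.B = A_new, B_new
        else:                    # Formula (9): linear interpolation with learning rate eta
            self.A = (1 - self.eta) * self.A + self.eta * A_new
            self.B = (1 - self.eta) * self.B + self.eta * B_new

    def response(self, z):
        """Formula (10): inverse DFT of sum_l conj(A^l) Z^l / (B + lambda)."""
        Z = np.fft.fft(z, axis=1)
        return np.fft.ifft(np.sum(np.conj(self.A) * Z, axis=0) / (self.B + self.lam)).real

rng = np.random.default_rng(1)
f = rng.standard_normal((16, 33))        # 16 feature dimensions x 33 scale samples
sf = ScaleFilter(num_scales=33)
sf.update(f)
print(int(np.argmax(sf.response(f))))                        # peak at the middle (current) scale
print(int(np.argmax(sf.response(np.roll(f, 2, axis=1)))))    # features shifted by two scale steps
```

Shifting the features along the scale axis shifts the response peak by the same amount, which is how the filter signals that the target has grown or shrunk.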

In summary, this article obtains a set of candidate positions by local-maximum selection. For each position, the correlation filter coefficients are updated according to Formula (9) with the image block centered on the new target position and its Gaussian label. Once the target position is identified, the DSST tracker infers the target scale. The target position and scale inferred by DSST are passed back to the SiamFC tracker to guide tracking in the next frame.
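The scale step can be sketched as below: candidate scales form a geometric series a^n around the current size, and the target size is rescaled by the factor whose scale-filter response is highest. The 33 scales and step a = 1.02 are hypothetical settings (similar values are common in DSST-style trackers), and the response vector is a hand-made stand-in for the output of the scale filter.

```python
import numpy as np

def scale_factors(num_scales=33, step=1.02):
    """Geometric scale factors step**n for n = -(S-1)/2, ..., (S-1)/2 (S assumed odd)."""
    n = np.arange(num_scales) - (num_scales - 1) // 2
    return step ** n

def infer_size(prev_size, responses, factors):
    """Rescale the previous (width, height) by the factor with the highest scale response."""
    best = int(np.argmax(responses))
    return prev_size[0] * factors[best], prev_size[1] * factors[best]

factors = scale_factors()
# Stand-in scale response peaking one step above the current scale (index 16 is the current scale).
responses = np.exp(-0.5 * (np.arange(33) - 17.0) ** 2)
print(infer_size((100.0, 50.0), responses, factors))
```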

3.3. AR-DSNet Target Relocalization

After the target position and scale are passed back to the SiamFC network, linear interpolation is used to update the correlation filter coefficients for target localization. The response map score from Siamese network tracking and the response map score from DSST tracking are fused by a linear weighted sum according to Formula (11):

where the weight of the fused interference-aware model is preset to 0.3 after extensive parameter tuning. The position with the highest score in the final fused response score map is taken as the final target location.
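Formula (11) is not reproduced here, so the NumPy sketch below assumes it is a convex combination (1 − alpha)·S_siam + alpha·S_dsst of two response maps that have been min-max normalized to [0, 1] and resampled to the same grid, with alpha = 0.3 as stated above. Which map receives the weight alpha, the normalization, and the common grid are all assumptions of this sketch.

```python
import numpy as np

def normalise(score):
    """Min-max normalise a response map to [0, 1] (a constant map becomes all zeros)."""
    shifted = score - score.min()
    peak = shifted.max()
    return shifted / peak if peak > 0 else shifted

def fuse_and_locate(score_siam, score_dsst, alpha=0.3):
    """Assumed form of Formula (11) followed by the arg-max localisation step."""
    fused = (1.0 - alpha) * normalise(score_siam) + alpha * normalise(score_dsst)
    row, col = np.unravel_index(np.argmax(fused), fused.shape)
    return (int(row), int(col)), fused

siam = np.zeros((17, 17))
siam[3, 3] = siam[12, 12] = 1.0    # SiamFC responds equally to the target and a similar distractor
dsst = np.zeros((17, 17))
dsst[12, 12] = 0.5                 # the HOG-based DSST response favours only the true target
position, fused = fuse_and_locate(siam, dsst)
print(position)
```

In this toy case the DSST term breaks the tie that the Siamese response alone cannot resolve, which is the role the fusion plays against similar interference.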

3.4. AR-DSNet Feature Matching and Registration

After the tracking method in this article yields a more accurate target area to be registered, the ORB algorithm is used for feature detection and matching so that the AR system can better superimpose virtual information at the registration location. ORB detects keypoints with the fast FAST (Features from Accelerated Segment Test) corner detector and adds orientation information to each FAST keypoint. It describes the keypoints with BRIEF (Binary Robust Independent Elementary Features) binary descriptors, which ORB makes rotation- and scale-invariant and which are insensitive to image noise. The algorithm therefore runs in real time and is well suited to AR systems [21]. After the descriptors are matched by Hamming distance, mismatched pairs are eliminated with RANSAC, and the parameters of the registration matrix are calculated from the feature matches between adjacent frames. Finally, a virtual cube model generated with OpenGL is rendered onto the tracked video sequence to complete the registration of virtual information.
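In OpenCV these steps are usually handled by `cv2.ORB_create`, `cv2.BFMatcher` with `cv2.NORM_HAMMING`, and `cv2.findHomography` with `cv2.RANSAC`. To make the two steps after detection explicit, the NumPy sketch below matches packed binary descriptors by Hamming distance with a cross-check and then estimates a 3×3 homography with a basic RANSAC loop around the direct linear transform. It assumes a planar target, so that the registration matrix is a homography (the text above does not state the model), and it omits the Hartley coordinate normalization a production implementation would add. The descriptors and point sets are synthetic.

```python
import numpy as np

def hamming_matches(desc_a, desc_b):
    """Cross-checked nearest-neighbour matching of packed binary descriptors (uint8 rows)."""
    bits_a = np.unpackbits(desc_a, axis=1)
    bits_b = np.unpackbits(desc_b, axis=1)
    # Pairwise Hamming distances: number of differing bits between every pair of descriptors.
    dist = (bits_a[:, None, :] != bits_b[None, :, :]).sum(axis=2)
    best_b = dist.argmin(axis=1)
    best_a = dist.argmin(axis=0)
    # Keep only mutual best matches, as a brute-force matcher with cross-checking would.
    return [(i, int(j)) for i, j in enumerate(best_b) if best_a[j] == i]

def homography_dlt(src, dst):
    """Direct linear transform: 3x3 homography from >= 4 point pairs (no normalisation)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)             # right singular vector of the smallest singular value
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map N x 2 points through H and dehomogenise."""
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return p[:, :2] / p[:, 2:3]

def ransac_homography(src, dst, iters=500, tol=1.0, seed=0):
    """Fit homographies to random 4-point samples and keep the one with the most inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        H = homography_dlt(src[idx], dst[idx])
        err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    return homography_dlt(src[best], dst[best]), best    # refit on all inliers

rng = np.random.default_rng(2)
desc_a = rng.integers(0, 255, size=(5, 32), endpoint=True, dtype=np.uint8)  # five 256-bit descriptors
desc_b = desc_a[[2, 0, 4, 1, 3]]                                             # same descriptors, shuffled
print(hamming_matches(desc_a, desc_b))

H_true = np.array([[1.1, 0.05, 5.0], [-0.03, 0.95, -3.0], [1e-4, 2e-4, 1.0]])
src = rng.uniform(0, 100, size=(40, 2))
dst = apply_homography(H_true, src)
dst[:10] += rng.uniform(20, 40, size=(10, 2))    # ten corrupted matches
H_est, inliers = ransac_homography(src, dst)
print(np.round(H_est, 4), int(inliers.sum()))
```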

4. Experimental Results and Analysis

The experiments are conducted on a machine running Windows 11 (64-bit) with an Intel(R) Core(TM) i7-8750H @ 3.40 GHz and 32 GB of memory. The experimental platforms are MATLAB R2019b and Visual Studio 2020, using the computer vision library OpenCV, the graphics library OpenGL, and the deep learning toolbox MatConvNet, with a Logitech C270 camera. The target position is marked manually in the first frame of each sequence of the visual tracking benchmark dataset OTB2015. A qualitative analysis is conducted on video sequences with substantial changes, and the proposed AR-DSNet is quantitatively compared with SiamFC and DSST in terms of tracking accuracy and efficiency. The ORB algorithm is then used to detect features at the registered target location. Finally, a 3D registration matrix is calculated from the matches between feature points, and a virtual model rendered with OpenGL is overlaid to achieve AR system registration [22]. To better demonstrate the robustness of AR-DSNet in AR applications, four types of interference are added to verify the AR tracking registration effect: frontal registration, registration after a 180° rotation, registration from a changed viewpoint, and registration under partial occlusion.

4.1. Results and Analysis of Moving Target Tracking

OTB2015 is a benchmark containing 100 fully annotated video sequences with substantial changes. The sequences are annotated with 11 attributes, including scale variation (SV), occlusion (OCC), deformation (DEF), fast motion (FM), in-plane rotation (IPR), out of view (OV), and background clutter (BC). The proposed AR-DSNet is compared on OTB2015 with deep learning algorithms (CFnet, DCFnet, and SiamFC) and correlation filter algorithms (Staple, DSST, and KCF) to evaluate the performance of the different trackers.

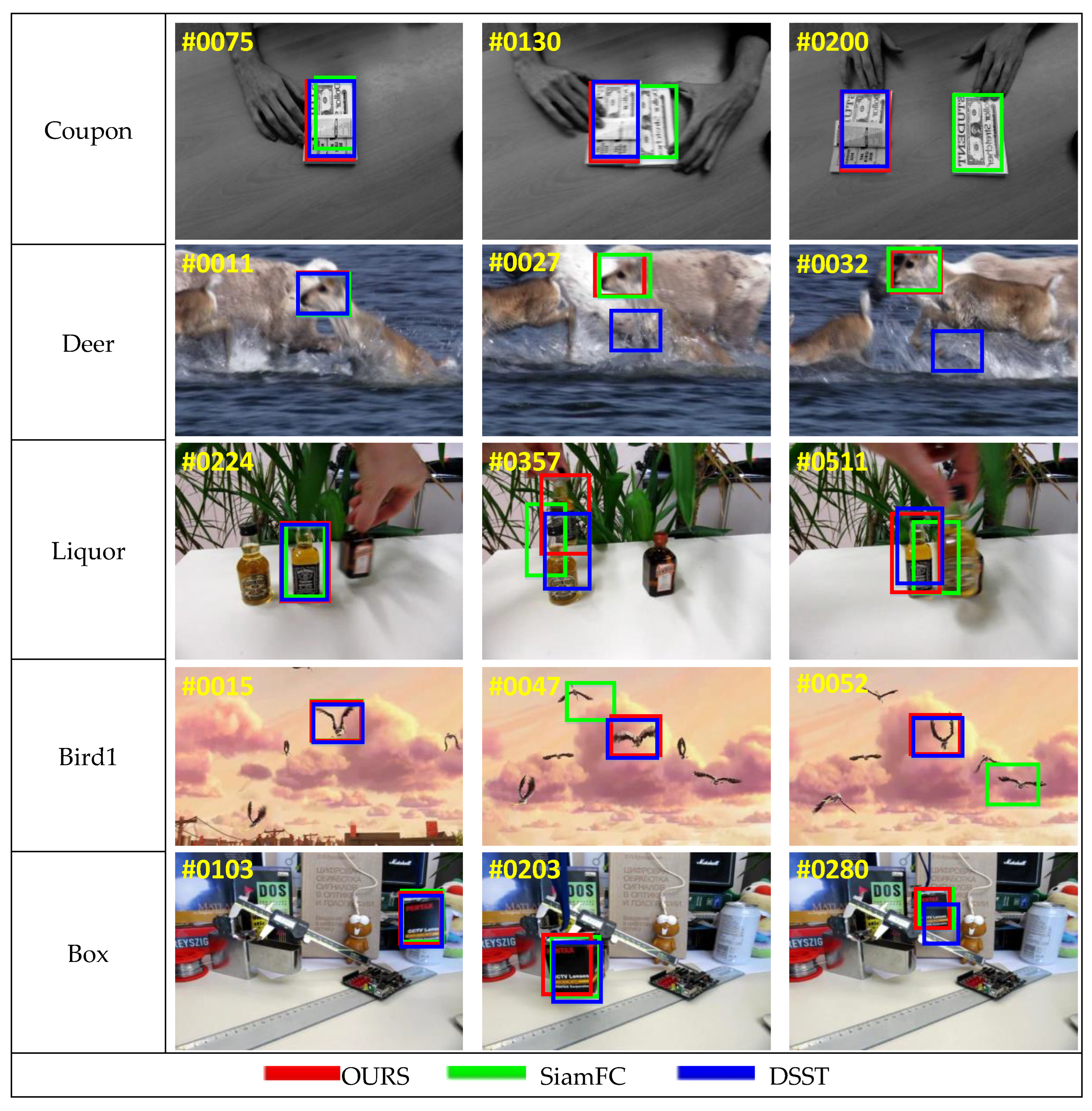

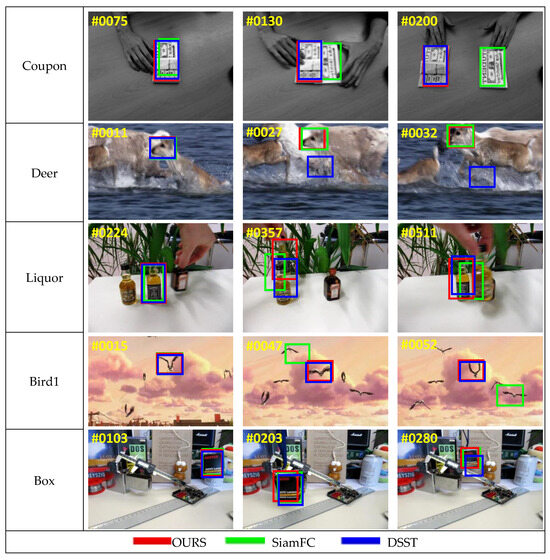

The tracking results are shown in Figure 5. SiamFC performs well on sequences with occlusion (Box), fast motion (Box, Deer), and motion blur (Deer), but fails when similar interference appears (Coupon, Liquor, Bird1) because it relies on semantic feature representations and lacks online model updates. The DSST tracker learns its correlation filter on HOG features; it performs well under partial deformation and similar interference (Coupon, Bird1) but drifts when the target is severely occluded (Box, Deer) or moves fast (Liquor). Because SiamFC and the DSST filter are complementary, introducing DSST into the SiamFC tracking process allows the proposed AR-DSNet method to overcome the limitations of both trackers. The experimental results show that AR-DSNet tracks targets effectively in complex scenarios such as similar-target interference and partial occlusion.

Figure 5.

Tracking results.

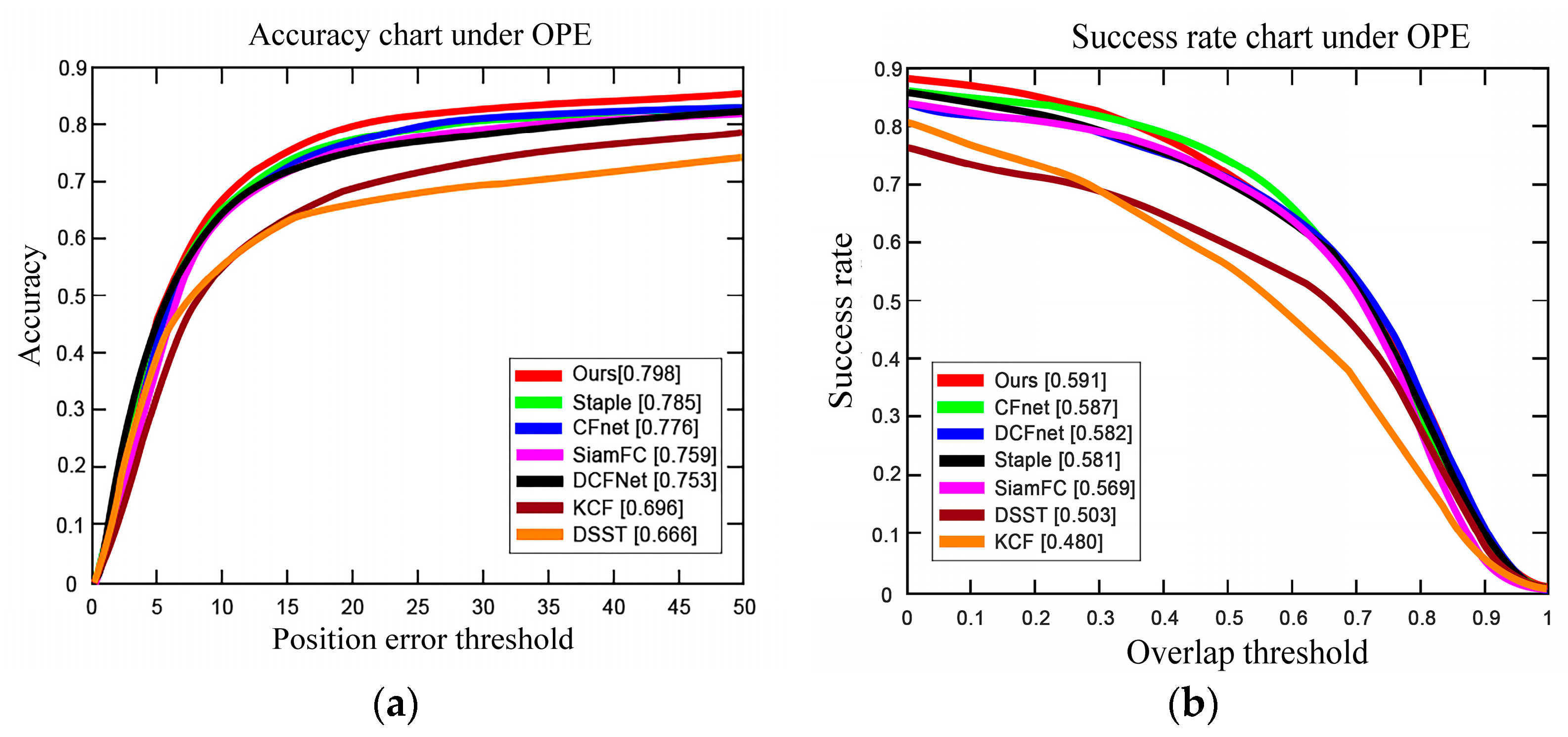

4.2. Tracking Results and Analysis of Classic Algorithms

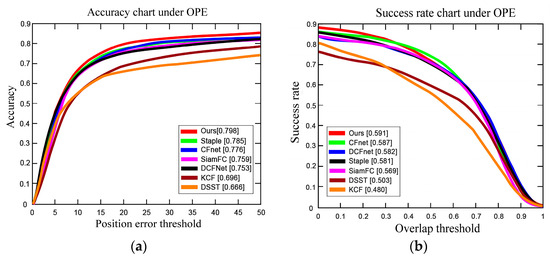

To represent the tracking performance of each algorithm more clearly, two indicators are used. Accuracy (precision) is the proportion of frames whose center location error is within a given pixel threshold; the accuracy plots in this article cover thresholds up to 50 pixels. Success rate is the proportion of frames in which the overlap between the predicted bounding box and the ground-truth bounding box exceeds a given threshold. One-Pass Evaluation (OPE) results are obtained by initializing each tracker with the ground-truth position in the first frame, and AR-DSNet is then compared with other well-performing tracking algorithms. Figure 6 shows the accuracy and success rate plots on the OTB2015 benchmark. The numbers in the legend are the accuracy at the representative 20-pixel threshold and the area under the curve (AUC) of the success rate plot.

Figure 6.

Accuracy and success rate on OTB2015 benchmark. (a) Position error threshold. (b) Overlap threshold.
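The two indicators can be computed from per-frame bounding boxes as in the NumPy sketch below. It assumes boxes in (x, y, width, height) format and follows the usual OTB convention of a 20-pixel precision threshold and 21 overlap thresholds from 0 to 1, with the AUC taken as the mean success rate over those thresholds; the boxes are toy values, not results from this article.

```python
import numpy as np

def center_errors(pred, gt):
    """Euclidean distance between predicted and ground-truth box centres (boxes are x, y, w, h)."""
    pred_c = pred[:, :2] + pred[:, 2:] / 2.0
    gt_c = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_c - gt_c, axis=1)

def overlaps(pred, gt):
    """Intersection over union of each pair of boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union

def precision(errors, threshold=20.0):
    """Fraction of frames whose centre error is within the pixel threshold."""
    return float(np.mean(errors <= threshold))

def success_auc(ious, thresholds=None):
    """Success rate at each overlap threshold and its mean, used as the AUC score."""
    if thresholds is None:
        thresholds = np.linspace(0.0, 1.0, 21)
    rates = np.array([np.mean(ious > t) for t in thresholds])
    return rates, float(rates.mean())

gt = np.array([[0.0, 0.0, 10.0, 10.0]] * 3)
pred = np.array([[0.0, 0.0, 10.0, 10.0], [5.0, 0.0, 10.0, 10.0], [40.0, 0.0, 10.0, 10.0]])
errs = center_errors(pred, gt)
ious = overlaps(pred, gt)
print(errs, ious, precision(errs), success_auc(ious)[1])
```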

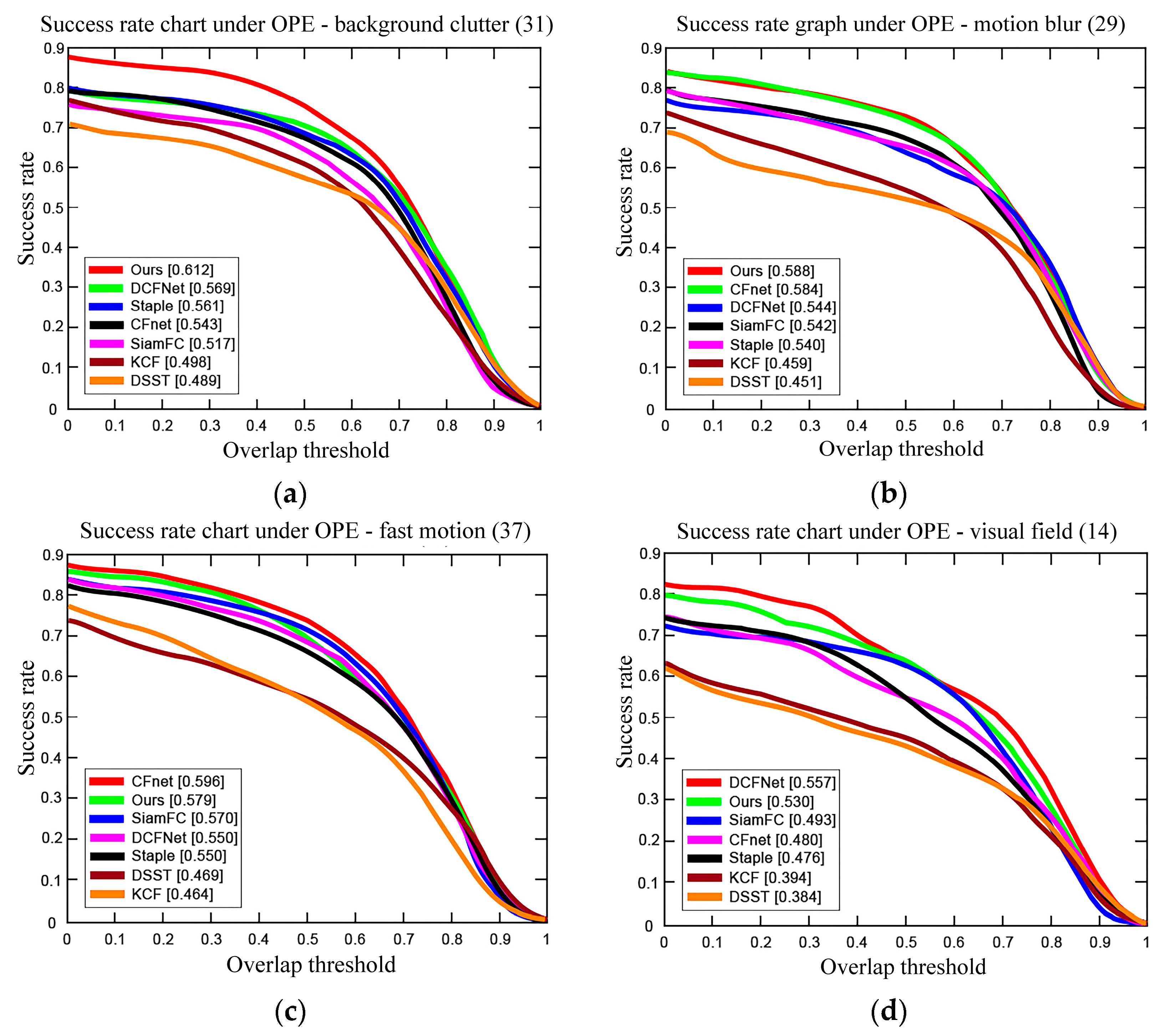

Figure 7 compares the success rate plots under four different attributes. The KCF and DSST correlation filter trackers typically perform poorly under fast motion, motion blur, and out of view, because boundary effects limit their search area. Staple [23] combines HOG features with color histograms and performs better. Except in background clutter with similar interference, SiamFC generally outperforms the correlation filter trackers, which can be explained by deep features being more discriminative than hand-crafted features. AR-DSNet combines the deep features of SiamFC with the HOG features of DSST and handles all the challenging scenarios more effectively. In particular, AR-DSNet achieves an absolute gain of nearly 10% under background clutter, which further demonstrates the effectiveness of the proposed algorithm in mitigating similar interference.

Figure 7.

Comparison of success rates with four different attributes. (a) Overlap threshold (31), (b) overlap threshold (29), (c) overlap threshold (37), (d) overlap threshold (14).

Table 1 gives a quantitative comparison of the overlap success rate at a 0.5 overlap threshold and the accuracy at a 20-pixel threshold. Overall, AR-DSNet performs best in terms of accuracy and overlap rate scores. Specifically, compared with SiamFC, AR-DSNet improves accuracy (DP) by 3.9% and the overlap rate score (OS) by 1.4%, and it achieves absolute gains of 13.2% in accuracy and 13.1% in overlap rate over DSST. AR-DSNet also outperforms DCFnet and CFnet, which combine a correlation filter tracker with a Siamese network. Although the overlap rate score (OS) of AR-DSNet is somewhat lower than that of CFnet, its accuracy (DP) is significantly higher, and the proposed method runs at 30 frames per second, meeting the requirements of AR systems. The experimental results show that AR-DSNet performs well while maintaining real-time speed.

Table 1.

Quantitative comparison results of accuracy and success rate (unit: %).

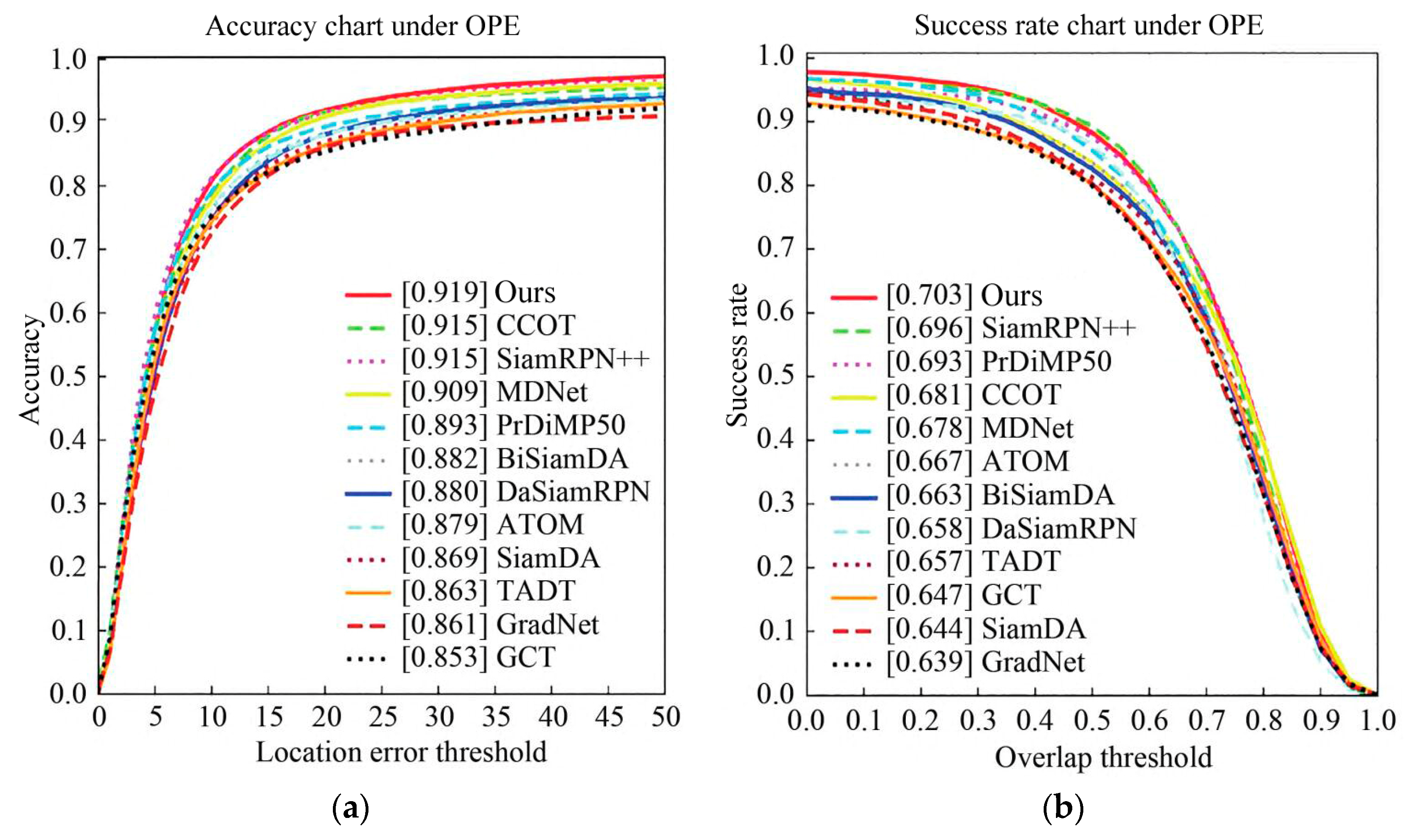

4.3. Siamese Network Tracking Results and Analysis

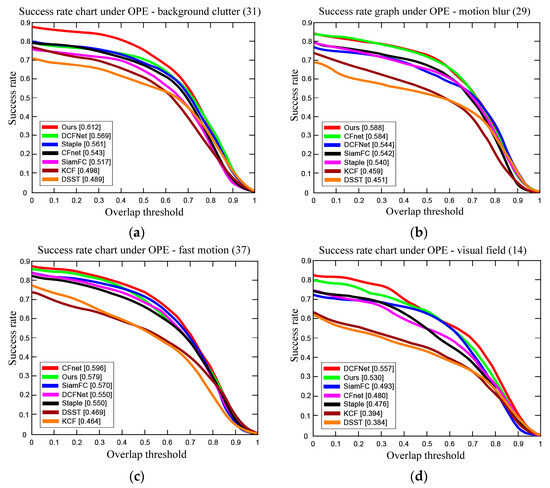

For further testing, representative algorithms are selected from three categories of Siamese-network trackers (those based on attention mechanisms, on multi-scale inference, and on template updating), together with other tracking algorithms. The algorithms include SiamRPN++ [24], BiSiamDA [25], DaSiamRPN [26], SiamDA [25], GradNet [27], MDNet [28], PrDiMP50 [29], CCOT, ATOM [30], TADT [31], and UCT. The attention-based algorithms include SiamDA and BiSiamDA; the hyperparameter inference-based algorithms include SiamRPN++ and DaSiamRPN; and the template update-based algorithms include the proposed AR-DSNet, UreadNet, and PrDiMP50.

Figure 8 shows the accuracy and success rate plots of the 11 algorithms above and AR-DSNet on OTB-2015. In the accuracy plot, the horizontal axis is the location error threshold and the vertical axis is the accuracy; each curve gives the percentage of frames in which the distance between the center of the target position inferred by the tracker and the ground truth is less than the threshold. In the success rate plot, the horizontal axis is the overlap threshold and the vertical axis is the success rate; each curve gives the percentage of successful frames, a frame being considered successful when its overlap rate is greater than the threshold. Different colors represent different algorithms, the values in the legend boxes are the corresponding metrics, and larger values indicate better performance. As Figure 8 shows, AR-DSNet achieves the best performance, with an accuracy of 0.919 and an OPE success score (AUC) of 0.703, both higher than Siamese trackers such as SiamRPN++, SiamDA, and DaSiamRPN. SiamRPN++, based on hyperparameter inference, ranks second with an AUC of 0.696, ahead of the attention-based SiamDA by 0.052. PrDiMP50, which combines connected structures with online template updates, ranks third with an OPE score of 0.693. The OPE score of the attention-based SiamDA is 0.644. Although the methods based on attention mechanisms and hyperparameter inference perform well, the template-updating method performs better, suggesting that future Siamese-network-based single-target tracking algorithms are likely to incorporate template updates.
Tracking algorithms based on attention mechanisms and hyperparameter inference extract features without updating templates and can track stably and persistently in a given environment, whereas tracking algorithms based on template updates can respond to changes in the external environment, which improves tracker performance.

Figure 8.

Siamese network tracking results and analysis. (a) Accuracy chart. (b) Success rate chart.

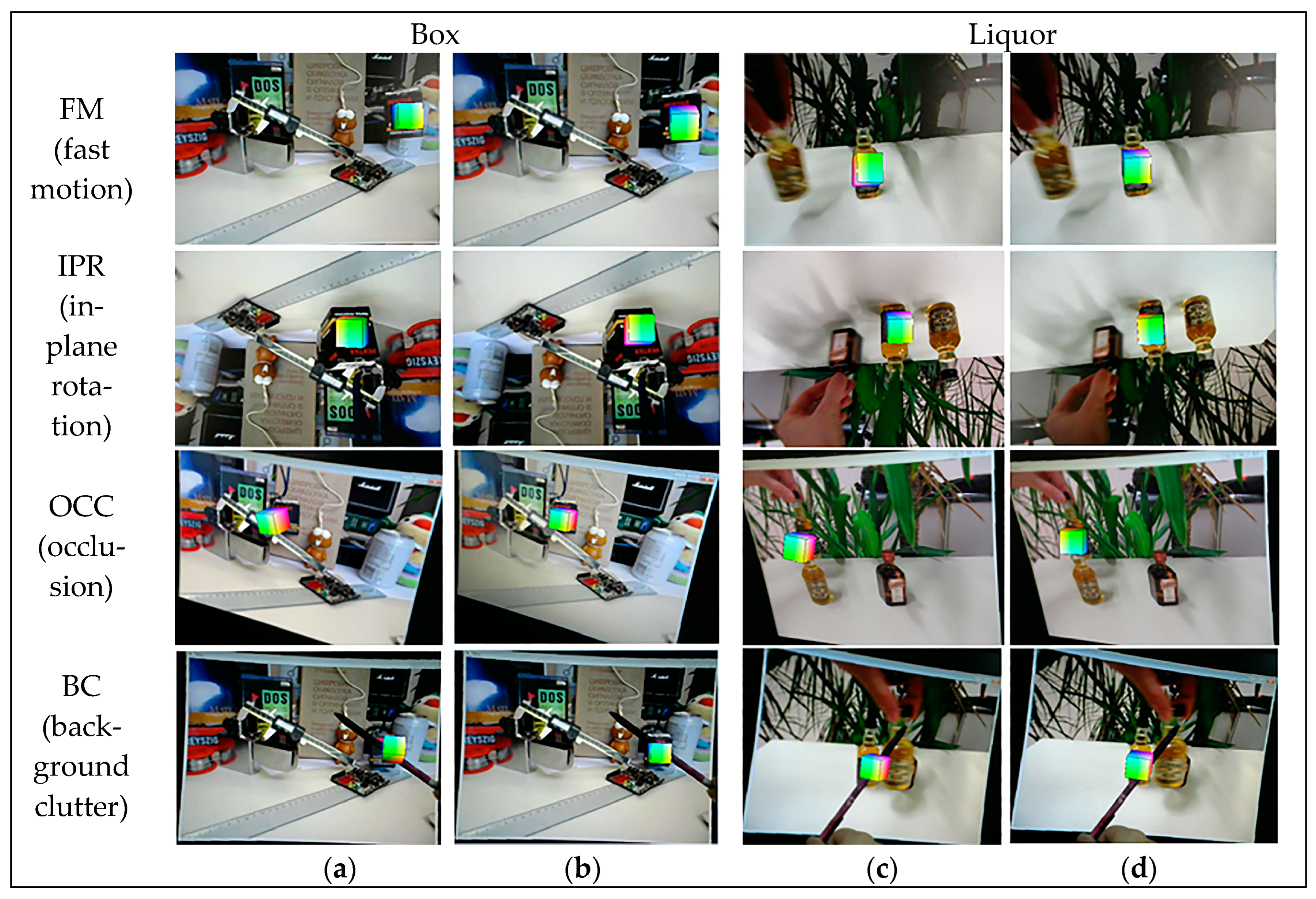

4.4. Analysis of Registration Results for Moving Targets

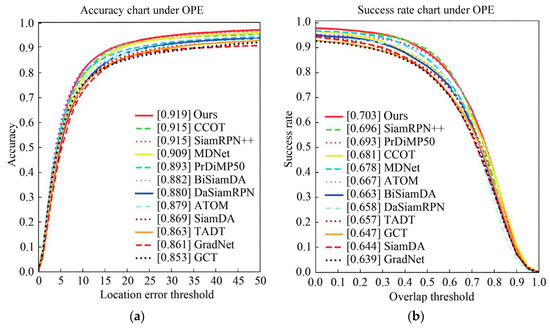

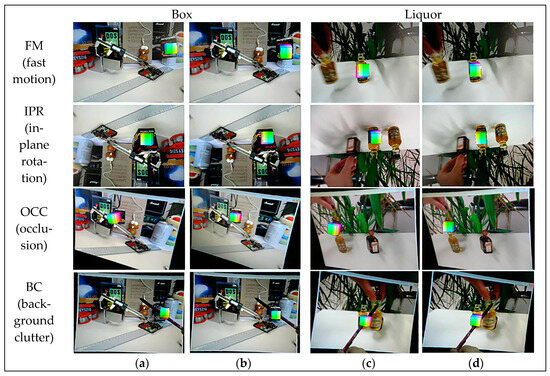

The registration analysis uses the Box and Liquor video sequences from OTB-2015. These sequences carry the attributes IV (illumination variation), SV (scale variation), OCC (occlusion), MB (motion blur), IPR (in-plane rotation), and OPR (out-of-plane rotation). The tracker is combined with ORB feature detection and matching for hybrid tracking registration, and a rendered virtual cube is overlaid in front of the box and the bottle, as shown in Figure 9. In each panel, the frame index increases from top to bottom. Registration results are compared in four situations: frontal registration, registration after a 180° rotation, registration from a changed viewpoint, and registration with the target partially occluded by a pencil. Figure 9a,c show the results of ORB features combined with the optical flow method, an approach commonly used in AR systems. Figure 9b,d show the results of the proposed AR-DSNet method. During target motion, the proposed method tracks the target accurately under all four interference conditions and overlays the virtual colored cube precisely on the target area to be registered.

In Figure 9a, feature point tracking errors accumulate as optical flow tracking proceeds through more frames. These errors cause mismatches, which shift the rendered colored cube and produce significant registration errors. In Figure 9b, AR-DSNet tracks the target accurately under the different interferences and overlays the virtual colored cube on the registered target area. In Figure 9c, the target in the Liquor sequence is partially occluded at frame 357. The ORB features change, and the colored cube rendered by the optical flow method shifts with them. The error accumulates as the frame count increases, and by frame 511 tracking has failed, so the colored cube can no longer be rendered correctly. In Figure 9d, the interference also disturbs the rendering of the colored cube by AR-DSNet, but in subsequent frames the cube again fuses accurately with the registered target area.
Overall, the proposed AR tracking registration method shows good stability and robustness on these sequences.
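For a planar target, the step of computing a registration matrix from ORB match correspondences can be illustrated with a homography. The sketch below is not the authors' implementation and rests on three assumptions: the ORB keypoints have already been detected and matched (for example with OpenCV), the matches contain no outliers, and the homography is estimated with the normalized direct linear transform (DLT). `estimate_homography` and `project_points` are hypothetical names.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move the centroid to the origin and scale the mean distance to sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.linalg.norm(pts - c, axis=1).mean()
    T = np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1]])
    ph = np.column_stack([pts, np.ones(len(pts))]) @ T.T
    return ph, T


def estimate_homography(src, dst):
    """Normalized DLT estimate of H with dst ~ H @ src, from >= 4 matched (N, 2) point pairs."""
    s, Ts = _normalize(src)
    d, Td = _normalize(dst)
    rows = []
    for (x, y, w), (u, v, z) in zip(s, d):
        # Two independent rows of the cross-product constraint dst x (H src) = 0.
        rows.append([0, 0, 0, -z * x, -z * y, -z * w, v * x, v * y, v * w])
        rows.append([z * x, z * y, z * w, 0, 0, 0, -u * x, -u * y, -u * w])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    Hn = Vt[-1].reshape(3, 3)
    H = np.linalg.inv(Td) @ Hn @ Ts  # undo the normalization
    return H / H[2, 2]


def project_points(H, pts):
    """Apply homography H to (N, 2) points and return (N, 2) image points."""
    ph = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]
```

Real ORB matches contain outliers, so in practice a robust estimator such as RANSAC (OpenCV's `cv2.findHomography` with the `cv2.RANSAC` flag) would wrap this step. Placing a 3D cube also requires recovering the camera pose from the homography and the camera intrinsics, which is not shown here.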

Figure 9.

AR tracking registration results. (a) Registration results of ORB features combined with the optical flow method using Box. (b) Registration results of AR-DSNet method using Box. (c) Registration results of ORB features combined with the optical flow method using Liquor. (d) Registration results of AR-DSNet method using Liquor.

5. Discussion

AR integrates virtual objects into the real environment to enhance people's perception of the real world. However, existing SiamFC-based trackers cannot reliably separate the target foreground from semantically similar distractors in the background. As a result, the tracked target is prone to drift, and the tracker cannot infer scale. To address this, we propose AR-DSNet, a robust tracking registration method for complex scenarios. It achieves distractor-aware tracking by combining the end-to-end tracking of SiamFC with the online model updating of the DSST filter tracker. HOG features are also used to compensate for the shortcomings of the deep features in SiamFC. Together, these allow the method to maintain real-time performance while improving the robustness of traditional AR tracking registration algorithms.
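The online model updating that AR-DSNet borrows from DSST can be illustrated with a one-dimensional scale filter. This sketch follows the single-sample DSST formulation, a multichannel correlation filter whose numerator and denominator are updated by linear interpolation with learning rate η. It is not the authors' implementation. The per-scale feature vectors are assumed to be given; in DSST they are HOG features of patches sampled at S scales. The defaults (33 scales, scale step 1.02, λ = 0.01, η = 0.025) are assumed values commonly used with DSST, and the Gaussian width `sigma` is an assumption. The class and method names are hypothetical.

```python
import numpy as np


class ScaleFilter:
    """Sketch of a DSST-style 1-D scale correlation filter.

    Each sample is an array of shape (d, S): one d-dimensional feature vector
    per scale, ordered from the smallest to the largest scale factor, with the
    current scale in the middle.
    """

    def __init__(self, num_scales=33, scale_step=1.02, sigma=1.5, lam=0.01, eta=0.025):
        self.S = num_scales
        self.scale_step = scale_step
        self.lam = lam  # regularization weight lambda
        self.eta = eta  # learning rate of the linear-interpolation update
        offsets = np.arange(num_scales) - num_scales // 2
        # Desired output: a Gaussian peaked at the current (middle) scale.
        self.G = np.fft.fft(np.exp(-0.5 * (offsets / sigma) ** 2))
        self.A = None  # filter numerator, shape (d, S)
        self.B = None  # filter denominator, shape (S,)

    def update(self, feats):
        """Train on the first sample, then blend in new samples by linear interpolation."""
        F = np.fft.fft(feats, axis=1)
        A_new = np.conj(self.G)[None, :] * F
        B_new = np.sum(np.abs(F) ** 2, axis=0)
        if self.A is None:
            self.A, self.B = A_new, B_new
        else:
            self.A = (1 - self.eta) * self.A + self.eta * A_new
            self.B = (1 - self.eta) * self.B + self.eta * B_new

    def detect(self, feats, current_scale=1.0):
        """Return (offset in scale steps, new scale estimate, response over scales)."""
        Z = np.fft.fft(feats, axis=1)
        resp = np.real(np.fft.ifft(np.sum(np.conj(self.A) * Z, axis=0) / (self.B + self.lam)))
        shift = int(np.argmax(resp)) - self.S // 2
        return shift, current_scale * self.scale_step ** shift, resp
```

In AR-DSNet as described, such a filter would be applied to multi-scale samples collected at each candidate position screened from the SiamFC response map. In the accompanying checks, `np.roll` along the scale axis only simulates a scale change.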

As mobile device performance has improved rapidly in recent years, mobile AR systems have become a research hotspot and are now widely applied in many fields. AR mainly involves three key technologies: target tracking registration, virtual–real fusion, and human–computer interaction. Mobile AR systems use these technologies to achieve virtual–real fusion, which makes mobile AR applications more diverse and the user experience more realistic. Because the real environment changes in complex ways, problems remain. Examples include background clutter during tracking registration and inaccurate position information for the object to be registered when the target is occluded. These problems can make AR systems unstable in complex scenarios. Based on the analysis in this study, we offer the following considerations for future AR tracking and registration algorithms:

- (1) Improving the performance of AR registration networks. As researchers further explore variants of AR tracking registration networks and optimize their architectures, performance on higher-dimensional tasks can be expected to improve. This may involve changing how tracking and registration networks share information for higher-dimensional inference, or combining the outputs of two or more networks in more sophisticated ways.

- (2) Improving model generalization. The feature extraction networks currently used for tracking in AR registration are generally deep and must be pre-trained on the ImageNet dataset, which makes the training cycle relatively long. Future work could use unsupervised training or few-shot (small-sample) augmentation training to improve the generalization of AR tracking registration methods and extend them to other fields. An example is the AR CenterNet network [4], which trains models for AR-assisted industrial assembly.

- (3) Integration with other network architectures. AR tracking registration networks can be combined with other neural network architectures or with attention mechanisms to create more expressive models. For example, the CoS-PVNet network uses a global attention mechanism to handle feature extraction in complex scenes, including scenes with few or no features [2], which can improve performance on certain tasks.

- (4) Optimizing the AR registration backbone network. The AR tracking registration model can be made lightweight with techniques such as pruning and quantization, which remove redundant network computation and so improve the real-time performance of tracking registration. Neural architecture search can also be used to find backbone networks specialized for AR registration and target tracking, based on the task characteristics.
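The two compression techniques named in item (4) can be sketched on a single weight tensor. This is a generic illustration and not part of AR-DSNet. It assumes unstructured magnitude pruning and symmetric per-tensor int8 quantization, and the function names are hypothetical. Real deployments would typically rely on a deep learning framework's own pruning and quantization tooling, often followed by fine-tuning.

```python
import numpy as np


def magnitude_prune(w, sparsity):
    """Unstructured pruning: zero the weights whose magnitude is at most the
    k-th smallest magnitude, k = floor(sparsity * w.size). Ties may remove a few more."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w)


def quantize_int8(w):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to floating point."""
    return q.astype(np.float64) * scale
```

Pruning reduces the number of nonzero weights that must be computed. Quantization stores each weight in 8 bits with a rounding error of at most half a quantization step.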

In summary, the proposed AR-DSNet 3D registration method can accurately track and register AR targets in complex moving scenarios. These include similar interference, target occlusion, and local deformation caused by motion and the external environment. The method improves the accuracy of both AR tracking registration and virtual–real fusion. This makes it relevant to mobile AR systems, and it may help advance the digital transformation and upgrading of AR applications in related industries.

6. Conclusions

In this article, we propose AR-DSNet, a robust tracking registration method for complex scenarios. DSST filters are introduced into the Siamese network tracking process, so that HOG features compensate for the deep features of the Siamese network and suppress drift toward similar targets. Passing the candidate targets screened by SiamFC to DSST alleviates the boundary effect of DSST. This improves the ability of Siamese network-based tracking registration to separate the target foreground from semantically similar background distractors. It also addresses two problems: target drift, and the inability to obtain scale information from predicted target positions alone. In addition, the ORB algorithm detects and matches features of the registered target, which improves the accuracy and robustness of traditional AR tracking and registration algorithms. The experimental results indicate that AR-DSNet completes real-time registration accurately, with better stability and robustness, in complex moving scenarios such as similar interference, target occlusion, and partial deformation. The proposed method therefore achieves stable and robust AR virtual–real fusion registration in complex scenarios. This suggests that it may be applicable to complex AR scenarios in fields such as industrial manufacturing, robot navigation, autonomous driving, and traffic monitoring. In future work, we plan to study AR 3D tracking registration for multiple moving targets and to implement multi-target AR tracking registration, which would further expand the application range of AR systems.
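The screening step, in which SiamFC passes candidate targets to DSST, can be sketched as thresholding the response map. This is a minimal illustration under assumptions, not the authors' code. The response map is assumed to be non-negative, and a candidate is taken to be a local maximum over its 8-neighborhood whose score is at least `ratio` times the global maximum. The values of `ratio` and `max_candidates` are hypothetical, as is the response-to-image `stride` used to convert peak positions into pixel displacements. SiamFC's backbone has a total stride of 8, but upsampling the response map changes the effective value.

```python
import numpy as np


def candidate_positions(response, ratio=0.7, max_candidates=5):
    """Return up to `max_candidates` (row, col, score) local maxima with
    score >= ratio * global maximum, sorted by descending score."""
    r = np.asarray(response, dtype=float)
    h, w = r.shape
    padded = np.pad(r, 1, mode="constant", constant_values=-np.inf)
    # Stack the 8 shifted neighbor maps and take their element-wise maximum.
    neighbors = np.stack([padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
                          for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                          if (dy, dx) != (0, 0)])
    is_peak = r >= neighbors.max(axis=0)
    keep = is_peak & (r >= ratio * r.max())
    rows, cols = np.nonzero(keep)
    order = np.argsort(-r[rows, cols], kind="stable")[:max_candidates]
    return [(int(rows[i]), int(cols[i]), float(r[rows[i], cols[i]])) for i in order]


def to_displacement(row, col, shape, stride=8):
    """Convert a response-map peak into a pixel offset from the previous target center."""
    cy, cx = (shape[0] - 1) / 2.0, (shape[1] - 1) / 2.0
    return (row - cy) * stride, (col - cx) * stride
```

Each returned candidate would then go to the DSST stage, which selects the final position and infers the scale.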

Author Contributions

Conceptualization, X.L.; methodology, X.L.; software, X.L. and J.Y.; validation, X.L.; formal analysis, W.L. and J.W.; data curation, J.Y.; writing—original draft preparation, X.L.; writing—review and editing, X.L., W.L., J.W. and J.Y.; visualization, X.L. and J.Y.; supervision, W.L. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China, grant numbers 62367005 and 62067006; in part by the Research Projects of the Humanities and Social Sciences Foundation of the Ministry of Education of China, grant number 21YJC880085; in part by the Natural Science Foundation of Gansu Province, grant number 23JRRA845; and in part by the Youth Science and Technology Talent Innovation Project of Lanzhou, grant number 2023-QN-117.

Data Availability Statement

The data are contained within this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Baroroh, D.K.; Chu, C.H.; Wang, L. Systematic literature review on augmented reality in smart manufacturing: Collaboration between human and computational intelligence. J. Manuf. Syst. 2021, 61, 696–711.

- Yong, J.; Lei, X.; Dang, J.; Wang, Y. A Robust CoS-PVNet Pose Estimation Network in Complex Scenarios. Electronics 2024, 13, 2089.

- Egger, J.; Masood, T. Augmented reality in support of intelligent manufacturing–a systematic literature review. Comput. Ind. Eng. 2020, 140, 106195.

- Li, W.; Wang, J.; Liu, M.; Zhao, S.; Ding, X. Integrated registration and occlusion handling based on deep learning for augmented reality assisted assembly instruction. IEEE Trans. Ind. Inform. 2022, 19, 6825–6835.

- Danielsson, O.; Holm, M.; Syberfeldt, A. Augmented reality smart glasses in industrial assembly: Current status and future challenges. J. Ind. Inf. Integr. 2020, 20, 100175.

- Sizintsev, M.; Mithun, N.C.; Chiu, H.-P.; Samarasekera, S.; Kumar, R. Long-Range Augmented Reality with Dynamic Occlusion Rendering. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4236–4244.

- Wang, L.; Wu, X.; Zhang, Y.; Zhang, X.; Xu, L.; Wu, Z.; Fei, A. DeepAdaIn-Net: Deep Adaptive Device-Edge Collaborative Inference for Augmented Reality. IEEE J. Sel. Top. Signal Process. 2023, 17, 1052–1063.

- Thiel, K.K.; Naumann, F.; Jundt, E.; Guennemann, S.; Klinker, G.C. DOT-convolutional deep object tracker for augmented reality based purely on synthetic data. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4434–4451.

- Wei, H.; Liu, Y.; Xing, G.; Zhang, Y.; Huang, W. Simulating shadow interactions for outdoor augmented reality with RGB data. IEEE Access 2019, 7, 75292–75304.

- Li, J.; Laganiere, R.; Roth, G. Online estimation of trifocal tensors for augmenting live video. In Proceedings of the Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 182–190.

- Yuan, M.L.; Ong, S.K.; Nee, A.Y. Registration using natural features for augmented reality systems. IEEE Trans. Vis. Comput. Graph. 2006, 12, 569–580.

- Bang, J.; Lee, D.; Kim, Y.; Lee, H. Camera pose estimation using optical flow and ORB descriptor in SLAM-based mobile AR game. In Proceedings of the 2017 International Conference on Platform Technology and Service (PlatCon), Busan, Republic of Korea, 13–15 February 2017; pp. 1–4.

- Jiang, J.; He, Z.; Zhao, X.; Zhang, S.; Wu, C.; Wang, Y. REG-Net: Improving 6DoF object pose estimation with 2D keypoint long-short-range-aware registration. IEEE Trans. Ind. Inform. 2023, 19, 328–338.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Huang, D.; Luo, L.; Chen, Z.Y. Applying detection proposals to visual tracking for scale and aspect ratio adaptability. Int. J. Comput. Vis. 2017, 122, 524–541.

- Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. End-to-end representation learning for Correlation Filter based tracking. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

- Wang, Q.; Gao, J.; Xing, J.; Zhang, M.; Hu, W. DCFNet: Discriminant Correlation Filters Network for Visual Tracking. arXiv 2017, arXiv:1704.04057.

- Yang, T.; Jia, S.; Yang, B.; Kan, C. Research on tracking and registration algorithm based on natural feature point. Intell. Autom. Soft Comput. 2021, 28, 683–692.

- Kuai, Y.L.; Wen, G.J.; Li, D.D. When correlation filters meet fully-convolutional Siamese networks for distractor-aware tracking. Signal Process. Image Commun. 2018, 64, 107–117.

- Wang, Y.; Zhang, S.; Yang, S.; He, W.; Bai, X. Mechanical assembly assistance using marker-less augmented reality system. Assem. Autom. 2018, 38, 77–87.

- Xiao, R.; Schwarz, J.; Throm, N.; Wilson, A.D.; Benko, H. MRTouch: Adding touch input to head-mounted mixed reality. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1653–1660.

- Fotouhi, J.; Mehrfard, A.; Song, T.; Johnson, A.; Osgood, G.; Unberath, M.; Armand, M.; Navab, N. Development and Pre-Clinical Analysis of Spatiotemporal-Aware Augmented Reality in Orthopedic Interventions. IEEE Trans. Med. Imaging 2021, 40, 765–778.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 116–124.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese visual tracking with very deep networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

- Pu, L.; Feng, X.; Hou, Z.; Yu, W.; Zha, Y. SiamDA: Dual attention Siamese network for real-time visual tracking. Signal Process. Image Commun. 2021, 95, 116293.

- Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware Siamese networks for visual object tracking. In Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–109.

- Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-guided network for visual object tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6162–6171.

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

- Danelljan, M.; Van Gool, L.; Timofte, R. Probabilistic regression for visual tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7183–7192.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate Tracking by Overlap Maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4655–4664.

- Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.-H. Target-Aware Deep Tracking. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1369–1378.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).