High-Precision Calibration Method and Error Analysis of Infrared Binocular Target Ranging Systems

Abstract

1. Introduction

2. Principles and Methods

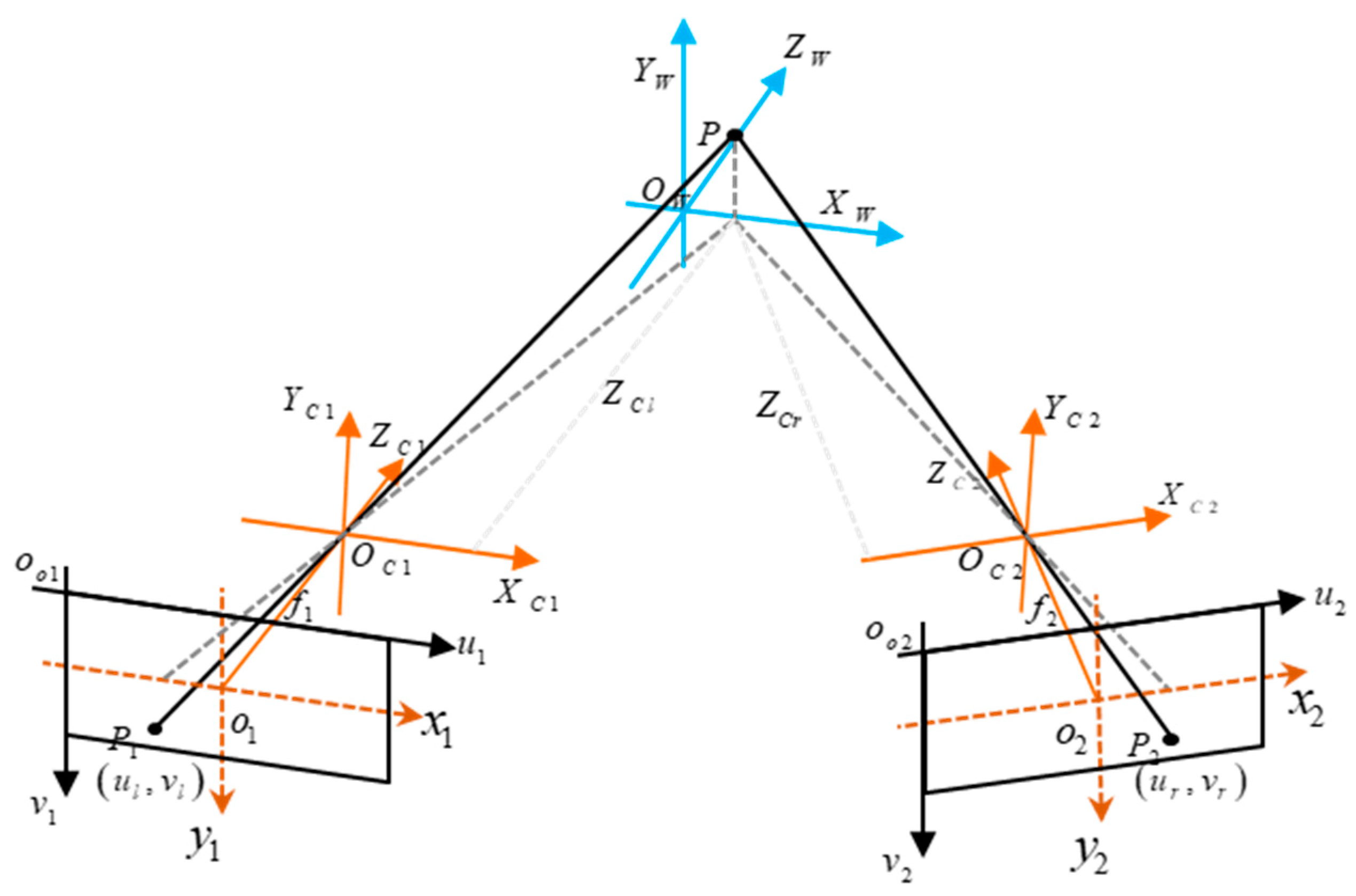

2.1. Infrared Binocular Camera Imaging Model

2.2. Principles and Steps for Camera Calibration and Optimization

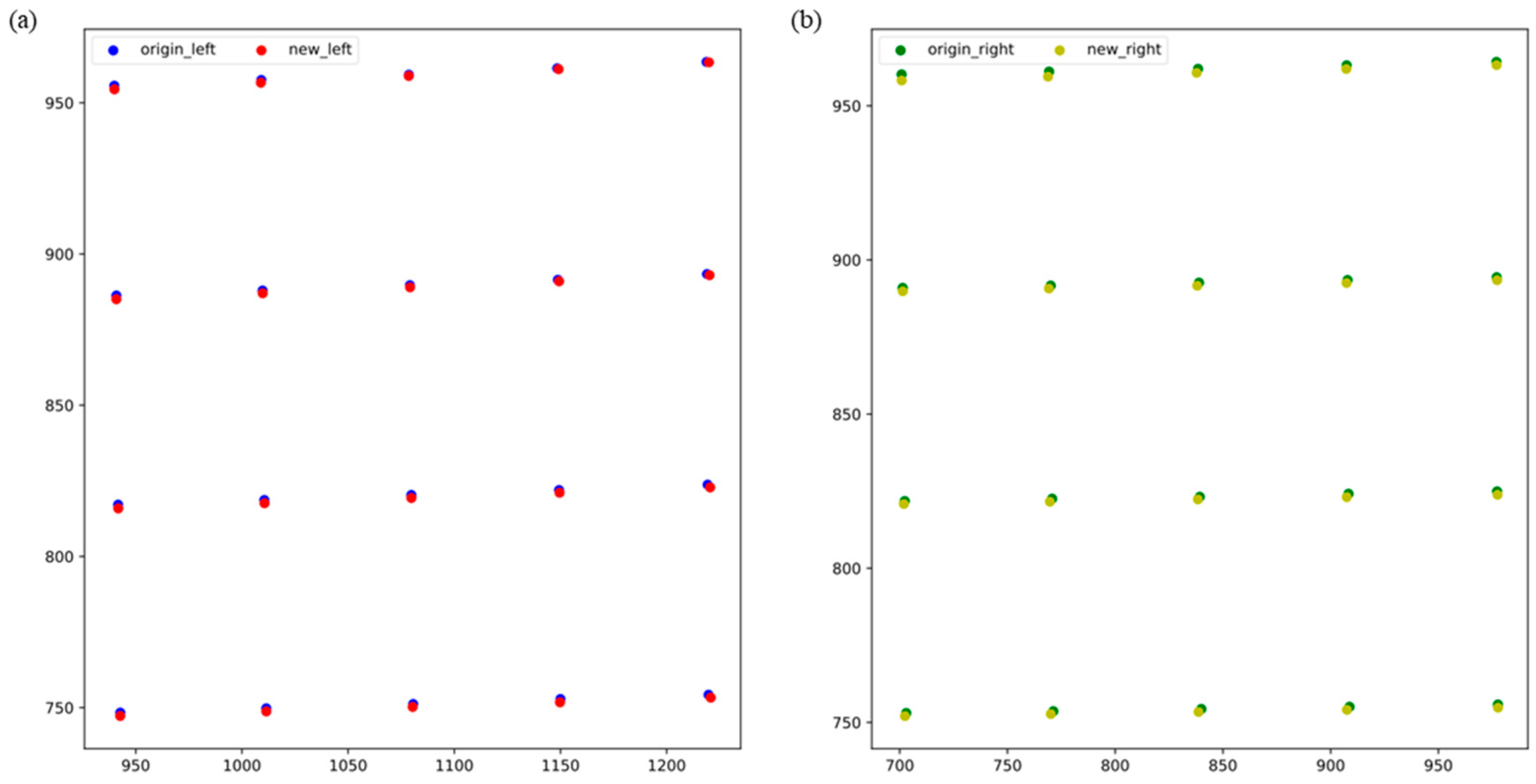

2.2.1. Optimization of Feature Point Pixel Coordinates

- 1.

- Sub-pixel Edge Detection of Ellipse

- 2.

- Ellipse Fitting Optimization

- 3.

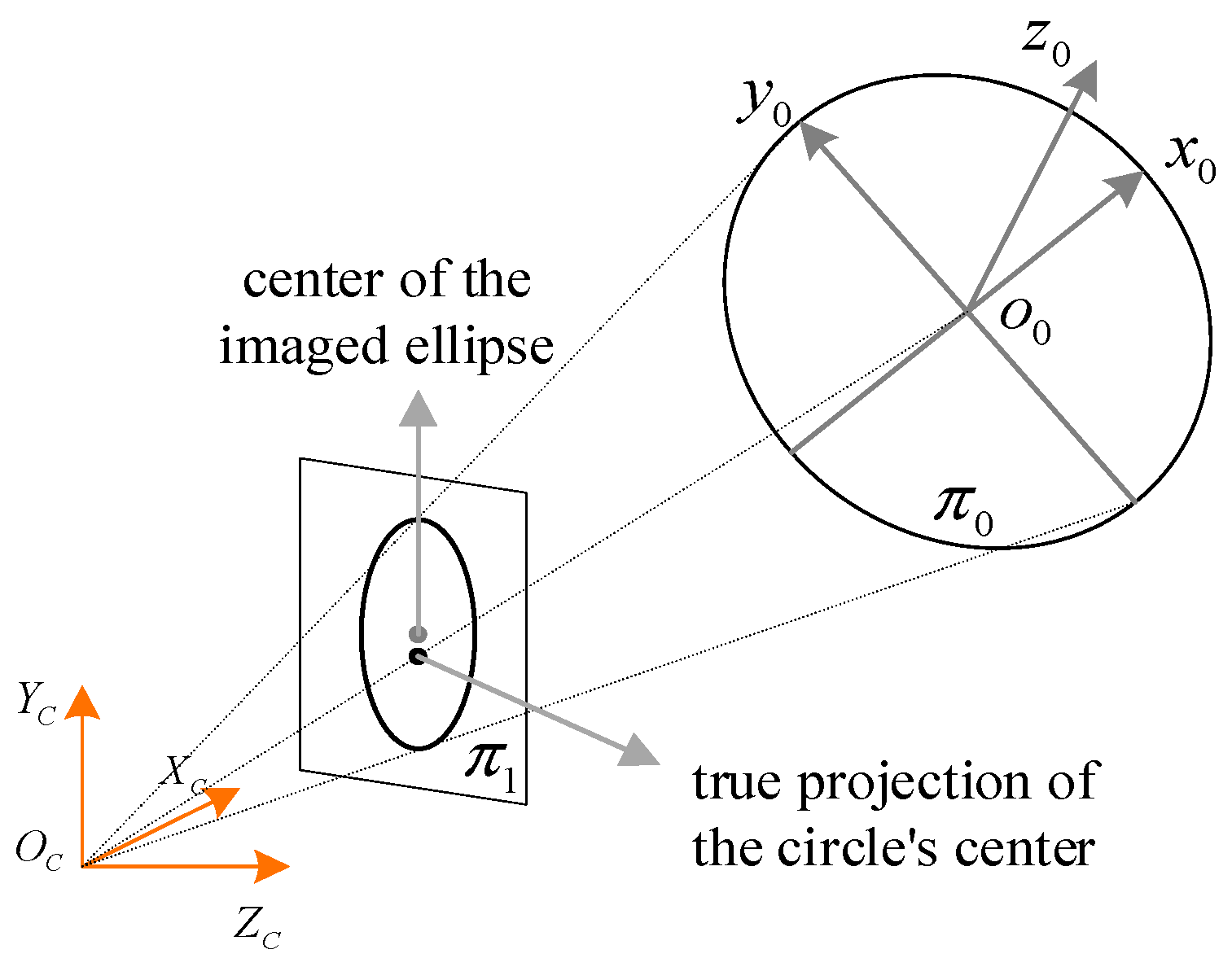

- True Projection of the Target’s Actual Center

2.2.2. Optimization of Feature Point World Coordinates

2.2.3. Steps for Optimizing Camera Calibration

- To improve calibration accuracy, we follow reference [32] and vary the calibration board’s orientation by rotating it around the $X$ and $Y$ axes, rather than translating the board or rotating it around the $Z$ axis. The infrared binocular camera rig is also rotated while the calibration images are captured, so that the target appears at many positions across the combined field of view of both cameras and a large set of valid calibration images is collected. For each image, a sub-pixel edge detection technique extracts the sub-pixel coordinates of the elliptical edges, which are then refined by ellipse fitting to obtain the center coordinates of the ellipses. The initial intrinsic and extrinsic parameters of each camera, together with the preliminary rotation and translation matrices relating the two camera coordinate systems, are then calibrated with the planar calibration method;

- Using the preliminary values from the previous step, we compute, in the camera coordinate system, the three-dimensional points obtained by inversely projecting the ellipse centers into space, as per Equation (4). Ideally, these inverse projections lie in the plane of the actual target. Because the initial errors of the planar calibration method are within acceptable limits, the back-projected points approximate the true plane well. To reduce the effect of noise, we use the RANSAC algorithm to remove outlier points whose inverse projections deviate significantly, and then fit the equation of the virtual plane in the camera coordinate system by the least squares method;

- Because the imaged position of the true target center differs from the center of the extracted ellipse, the ellipse edges are inversely projected onto the virtual target plane. An ellipse is then fitted to the features on this virtual plane, and a linear least squares optimization is applied to the fitted ellipse centers. This yields feature points that are consistent with their world coordinates;

- The refined feature points are reprojected onto the image plane, and the pixel coordinates of these projections, together with their corresponding world coordinates, are used to repeat the planar calibration and obtain the final calibration parameters of each infrared camera. The transformation parameters between the two infrared cameras are then obtained with a stereo calibration algorithm.
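The second and third steps above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an ideal pinhole camera with no lens distortion, uses a plain three-point RANSAC followed by an SVD-based (orthogonal) least squares plane fit, and all function names, the threshold, and the iteration count are hypothetical choices made for illustration.

```python
import numpy as np

def fit_plane_least_squares(points):
    """Orthogonal least squares plane fit: returns (normal, d) with normal.X + d = 0."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, -normal @ centroid

def ransac_plane(points, threshold=1.0, iterations=200, seed=0):
    """Reject outliers with a basic RANSAC loop, then refit the plane on the inliers."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # degenerate (collinear) sample
            continue
        normal = normal / norm
        distances = np.abs((points - sample[0]) @ normal)
        inliers = distances < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    normal, d = fit_plane_least_squares(points[best_inliers])
    return normal, d, best_inliers

def backproject_to_plane(pixels, K, normal, d):
    """Intersect the camera rays through the given pixels with the plane normal.X + d = 0."""
    pixels = np.asarray(pixels, dtype=float)
    homogeneous = np.hstack([pixels, np.ones((pixels.shape[0], 1))])
    rays = (np.linalg.inv(K) @ homogeneous.T).T  # ray directions in the camera frame
    scale = -d / (rays @ normal)                 # ray parameter at the intersection
    return rays * scale[:, None]
```

In this sketch, `ransac_plane` would receive the back-projected ellipse centers, and `backproject_to_plane` would then map the sub-pixel ellipse edge points onto the fitted virtual plane before the ellipse is refitted there.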

2.3. Error Analysis

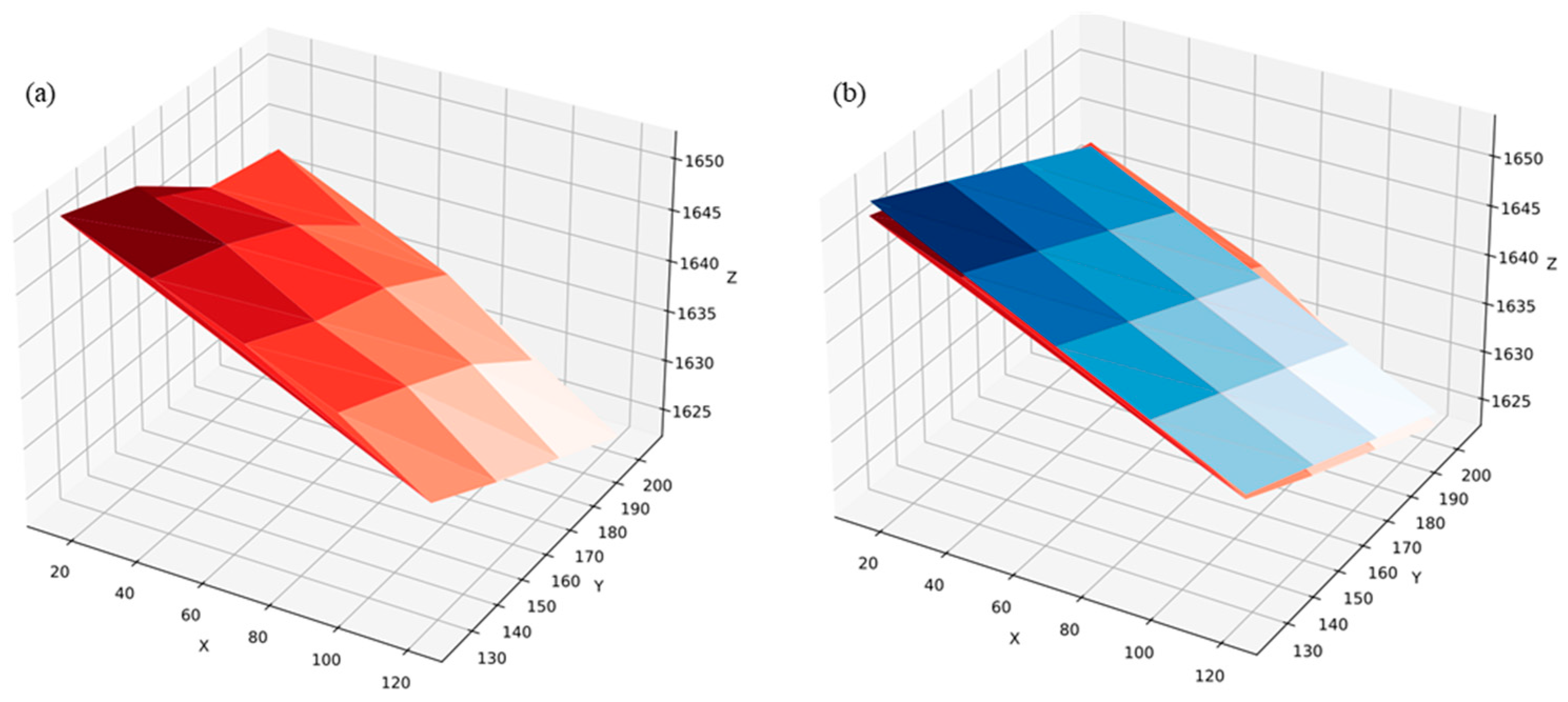

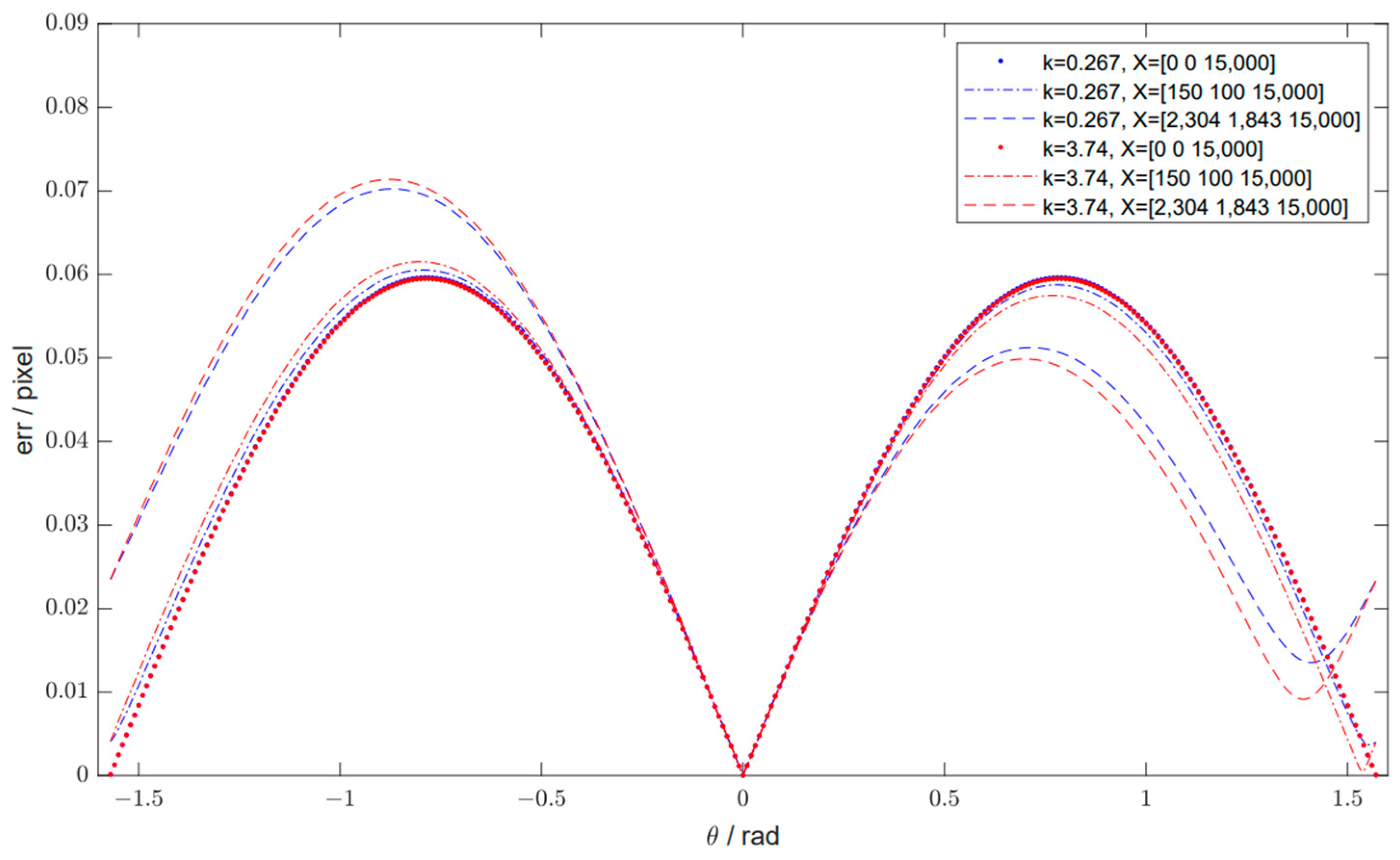

2.3.1. Feature Point World Coordinate Error

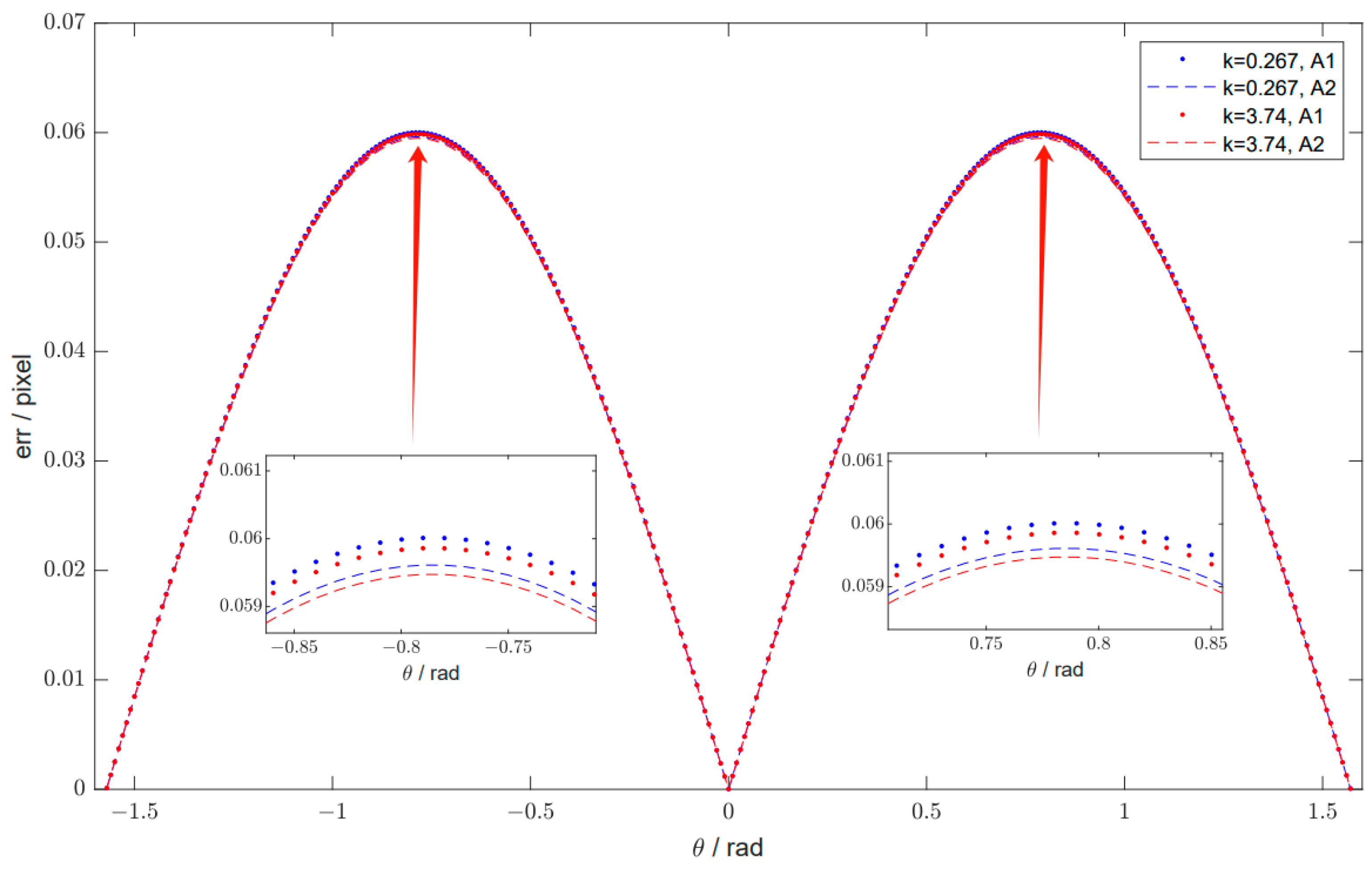

2.3.2. Feature Point Pixel Coordinate Error

2.3.3. Calibration Error

2.4. Infrared Binocular Ranging

3. Experiments and Results

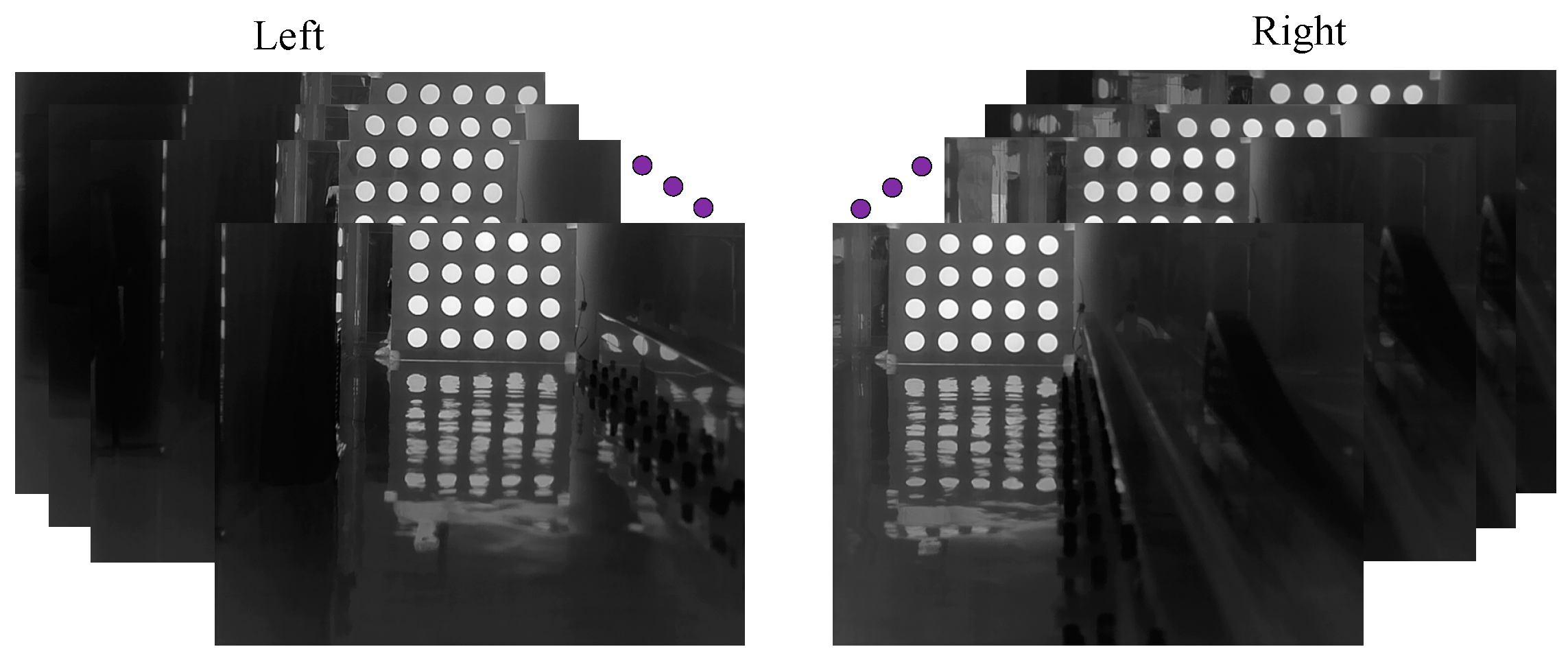

3.1. Preparations for Infrared Binocular Camera Calibration

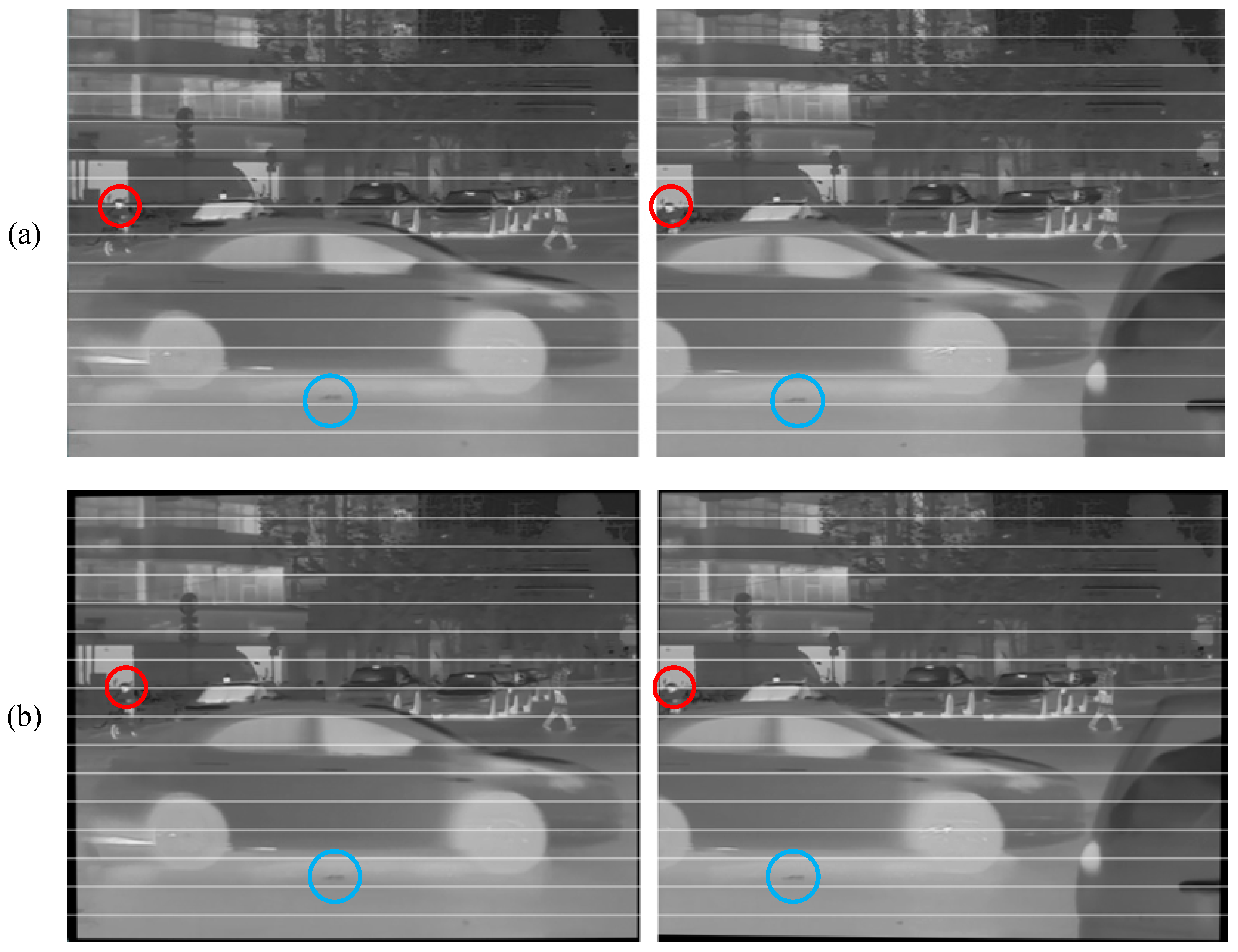

3.2. Infrared Binocular Camera Calibration Experiment

3.3. Results of Error Analysis

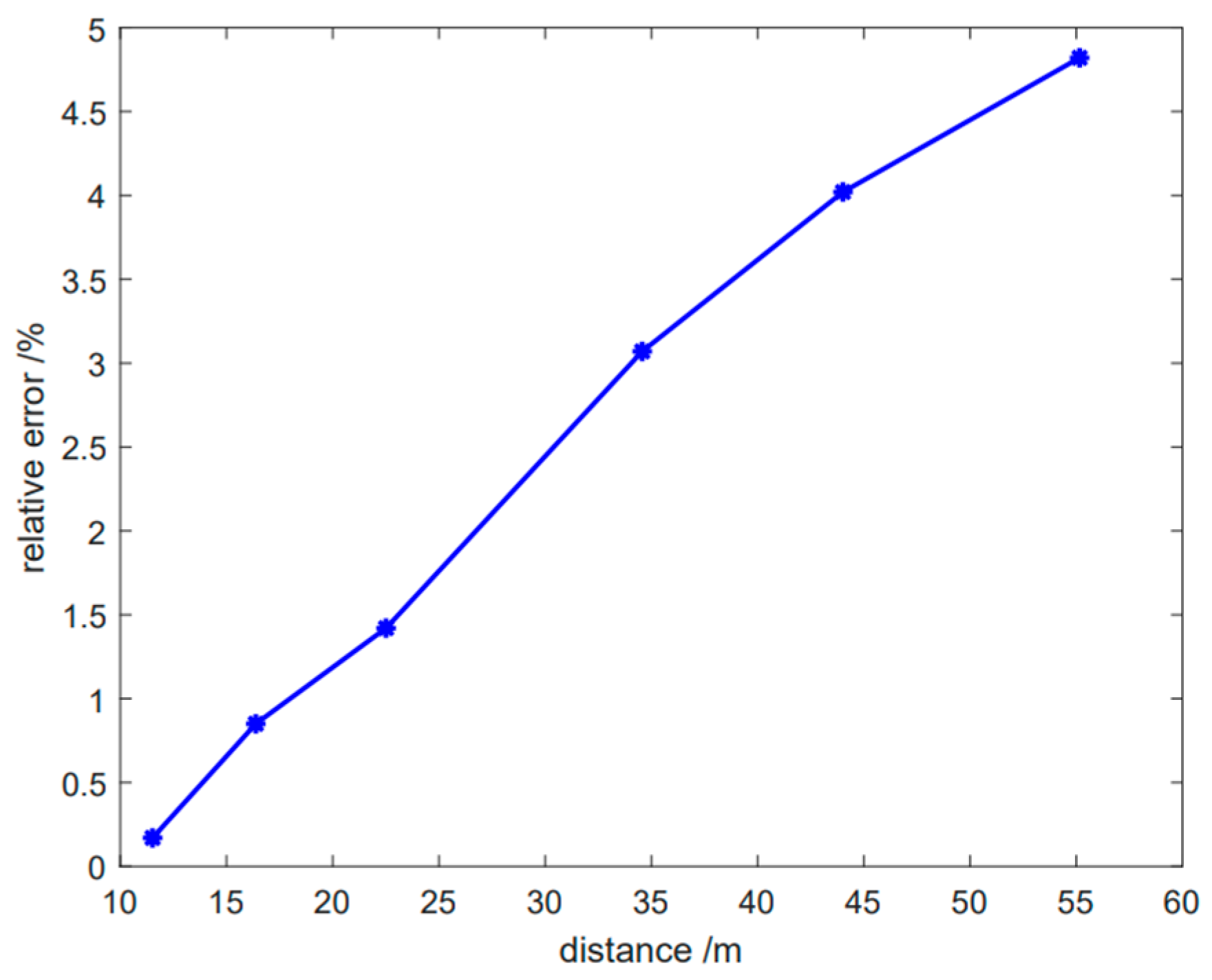

3.4. Infrared Binocular Camera Ranging Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Bao, D.; Wang, P. Vehicle distance detection based on monocular vision. In Proceedings of the 2016 International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 23–25 December 2016; pp. 187–191.
2. Chwa, D.; Dani, A.P.; Dixon, W.E. Range and motion estimation of a monocular camera using static and moving objects. IEEE Trans. Control Syst. Technol. 2015, 24, 1174–1183.
3. Ferrara, P.; Piva, A.; Argenti, F.; Kusuno, J.; Niccolini, M.; Ragaglia, M.; Uccheddu, F. Wide-angle and long-range real time pose estimation: A comparison between monocular and stereo vision systems. J. Vis. Commun. Image Represent. 2017, 48, 159–168.
4. Huang, L.; Zhe, T.; Wu, J.; Wu, Q.; Pei, C.; Chen, D. Robust inter-vehicle distance estimation method based on monocular vision. IEEE Access 2019, 7, 46059–46070.
5. Li, W.; Shan, S.; Liu, H. High-precision method of binocular camera calibration with a distortion model. Appl. Opt. 2017, 56, 2368–2377.
6. Xicai, L.; Qinqin, W.; Yuanqing, W. Binocular vision calibration method for a long-wavelength infrared camera and a visible spectrum camera with different resolutions. Opt. Express 2021, 29, 3855–3872.
7. Liu, F.; Lu, Z.; Lin, X. Vision-based environmental perception for autonomous driving. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2023, 0, 09544070231203059.
8. Wang, J.; Geng, K.; Yin, G.; Cheng, X.; Sun, Y.; Ding, P. Binocular Infrared Depth Estimation Based On Generative Adversarial Network. In Proceedings of the 2022 6th CAA International Conference on Vehicular Control and Intelligence (CVCI), Nanjing, China, 28–30 October 2022; pp. 1–6.
9. Li, H.; Wang, S.; Bai, Z.; Wang, H.; Li, S.; Wen, S. Research on 3D reconstruction of binocular vision based on thermal infrared. Sensors 2023, 23, 7372.
10. Su, B.; Gong, Y.; Yu, S.; Li, H.; Wang, Z.; Liu, W.; Wang, J.; Kuang, S.; Yao, W.; Tang, J. 3D spatial positioning by binocular infrared cameras. In Proceedings of the 2021 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 11 September 2021; pp. 67–72.
11. Wang, Z.; Liu, B.; Huang, F.; Chen, Y.; Zhang, S.; Cheng, Y. Corners positioning for binocular ultra-wide angle long-wave infrared camera calibration. Optik 2020, 206, 163441.
12. Zhu, Y.; Li, H.; Li, L.; Jin, W.; Song, J.; Zhou, Y. A stereo vision depth estimation method of binocular wide-field infrared camera. In Proceedings of the Third International Computing Imaging Conference (CITA 2023), Sydney, Australia, 1–3 June 2023; pp. 252–264.
13. Wu, Y.; Zhao, Q.; Jiang, J. Driver’s Head Behavior Detection Using Binocular Infrared Camera. In Proceedings of the 2018 IEEE International Conference on Information and Automation (ICIA), Wuyishan, China, 11–13 August 2018; pp. 565–569.
14. Zhang, Y.-J. Camera calibration. In 3-D Computer Vision: Principles, Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 37–65.
15. Li, X.; Yang, Y.; Ye, Y.; Ma, S.; Hu, T. An online visual measurement method for workpiece dimension based on deep learning. Measurement 2021, 185, 110032.
16. Yang, X.; Jiang, G. A practical 3D reconstruction method for weak texture scenes. Remote Sens. 2021, 13, 3103.
17. Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 666–673.
18. Vidas, S.; Lakemond, R.; Denman, S.; Fookes, C.; Sridharan, S.; Wark, T. A mask-based approach for the geometric calibration of thermal-infrared cameras. IEEE Trans. Instrum. Meas. 2012, 61, 1625–1635.
19. Ursine, W.; Calado, F.; Teixeira, G.; Diniz, H.; Silvino, S.; De Andrade, R. Thermal/visible autonomous stereo visio system calibration methodology for non-controlled environments. In Proceedings of the 11th International Conference on Quantitative Infrared Thermography, Naples, Italy, 11–14 June 2012; pp. 1–10.
20. St-Laurent, L.; Mikhnevich, M.; Bubel, A.; Prévost, D. Passive calibration board for alignment of VIS-NIR, SWIR and LWIR images. Quant. InfraRed Thermogr. J. 2017, 14, 193–205.
21. Swamidoss, I.N.; Amro, A.B.; Sayadi, S. Systematic approach for thermal imaging camera calibration for machine vision applications. Optik 2021, 247, 168039.
22. Prakash, S.; Lee, P.Y.; Caelli, T.; Raupach, T. Robust thermal camera calibration and 3D mapping of object surface temperatures. In Proceedings of the Thermosense XXVIII, Kissimmee, FL, USA, 17–20 April 2006; pp. 182–189.
23. Saponaro, P.; Sorensen, S.; Rhein, S.; Kambhamettu, C. Improving calibration of thermal stereo cameras using heated calibration board. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4718–4722.
24. Herrmann, T.; Migniot, C.; Aubreton, O. Thermal camera calibration with cooled down chessboard. In Proceedings of the Quantitative InfraRed Thermography Conference, Tokyo, Japan, 1–5 July 2019.
25. ElSheikh, A.; Abu-Nabah, B.A.; Hamdan, M.O.; Tian, G.-Y. Infrared camera geometric calibration: A review and a precise thermal radiation checkerboard target. Sensors 2023, 23, 3479.
26. Mallon, J.; Whelan, P.F. Which pattern? Biasing aspects of planar calibration patterns and detection methods. Pattern Recognit. Lett. 2007, 28, 921–930.
27. Lou, Q.; Lü, J.; Wen, L.; Xiao, J.; Zhang, G.; Hou, X. High-precision camera calibration method based on sub-pixel edge detection. Acta Opt. Sin. 2022, 42, 2012002.
28. Barath, D.; Cavalli, L.; Pollefeys, M. Learning to find good models in RANSAC. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15744–15753.
29. Huang, L.; Wu, G.; Liu, J.; Yang, S.; Cao, Q.; Ding, W.; Tang, W. Obstacle distance measurement based on binocular vision for high-voltage transmission lines using a cable inspection robot. Sci. Prog. 2020, 103, 0036850420936910.
30. Dawson-Howe, K.M.; Vernon, D. Simple pinhole camera calibration. Int. J. Imaging Syst. Technol. 1994, 5, 1–6.
31. He, H.; Li, H.; Huang, Y.; Huang, J.; Li, P. A novel efficient camera calibration approach based on K-SVD sparse dictionary learning. Measurement 2020, 159, 107798.
32. Xie, Z.; Lu, W.; Wang, X.; Liu, J. Analysis of pose selection on binocular stereo calibration. Chin. J. Lasers 2015, 42, 208001–208003.
33. Fehér, A.; Lukács, N.; Somlai, L.; Fodor, T.; Szücs, M.; Fülöp, T.; Ván, P.; Kovács, R. Size effects and beyond-Fourier heat conduction in room-temperature experiments. J. Non-Equilib. Thermodyn. 2021, 46, 403–411.
34. Han, J.; Yang, H. Analysis method for the projection error of circle center in 3D vision measurement. Comput. Sci. 2010, 37, 247–249.
35. Hong, C.; Daiqiang, W.; Yuqing, C. Research on the Influence of Calibration Image on Reprojection Error. In Proceedings of the 2021 International Conference on Big Data Engineering and Education (BDEE), Guiyang, China, 23–25 July 2021; pp. 60–66.
36. Zhai, G.; Zhang, W.; Hu, W.; Ji, Z. Coal mine rescue robots based on binocular vision: A review of the state of the art. IEEE Access 2020, 8, 130561–130575.
37. Yuan, P.; Cai, D.; Cao, W.; Chen, C. Train Target Recognition and Ranging Technology Based on Binocular Stereoscopic Vision. J. Northeast. Univ. (Nat. Sci.) 2022, 43, 335.

| Parameters | Values |
|---|---|
| Effective focal length | 25 mm |
| Field of view (640 × 512) | 17.6° (H) × 14.1° (V) |
| Wavelength band | 8–12 μm |
| F-number | 1.0 |
| Pixel size | 12 μm |
| Aperture diameter (D) | 25 mm |
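As a quick consistency check on the lens parameters above, the field of view can be estimated from the focal length, the pixel count, and the pixel size. The sketch below assumes that the pixel size is given in micrometres (the table does not state the unit) and that the lens behaves as an ideal pinhole; the function name is hypothetical.

```python
import math

def field_of_view_deg(n_pixels, pixel_pitch_um, focal_length_mm):
    """Full field of view (degrees) of an ideal pinhole camera along one axis."""
    sensor_mm = n_pixels * pixel_pitch_um * 1e-3  # sensor extent along this axis
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_length_mm)))

# Values from the table; the micrometre unit of the pixel size is an assumption.
h_fov = field_of_view_deg(640, 12.0, 25.0)
v_fov = field_of_view_deg(512, 12.0, 25.0)
print(f"H: {h_fov:.2f} deg, V: {v_fov:.2f} deg")
```

Under these assumptions the result is roughly 17.5° by 14.0°, close to the listed 17.6° (H) × 14.1° (V). The aperture diameter is likewise consistent with the focal length divided by the F-number (25 mm / 1.0 = 25 mm).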

| Approach | fx | fy | u0 | v0 | Reprojection Error |
|---|---|---|---|---|---|
| T.(l) | 4208.272 | 4220.292 | 638.959 | 444.632 | 0.025472 |
| T.(r) | 4191.947 | 4204.317 | 665.182 | 439.508 | 0.025529 |
| P.(l) | 4170.279 | 4180.970 | 631.355 | 489.283 | 0.019233 (−24.5%) |
| P.(r) | 4166.903 | 4177.129 | 675.926 | 491.014 | 0.019351 (−24.2%) |

| Approach | k1 | k2 | k3 | p1 | p2 |
|---|---|---|---|---|---|
| T.(l) | 0.10974 | 0.66015 | 12.30672 | −0.00747 | 0.00045 |
| T.(r) | 0.12118 | 0.04299 | 6.32299 | −0.01042 | 0.00115 |
| P.(l) | 0.08885 | 1.24429 | −7.72630 | −0.00178 | −0.00016 |
| P.(r) | 0.10674 | −0.31974 | 15.73494 | −0.00413 | 0.00219 |

| Approach | RC | TC | res |
|---|---|---|---|
| T. | [[0.9999396 0.0106574 −0.0026880] [−0.0106500 0.9999396 0.0027297] [0.0027169 −0.0027009 0.9999927]] | [[−102.1816259] [0.49134853] [−6.83686545]] | 0.450877 |
| P. | [[0.999922 0.0101889 −0.0071329] [−0.0101816 0.9999476 0.0010583] [0.0071433 −0.0009856 0.9999740]] | [[−102.00134939] [0.57938219] [−2.972963]] | 0.438399 (−2.8%) |

| Parameters | Values |
|---|---|
| A1′ | [[4.08556674 × 10³ 0.00000000 5.34163437 × 10² 0.00000000] [0.00000000 4.08556674 × 10³ 4.89555637 × 10² 0.00000000] [0.00000000 0.00000000 1.00000000 0.00000000]] |
| A2′ | [[4.08556674 × 10³ 0.00000000 5.34163437 × 10² 4.23220829 × 10⁵] [0.00000000 4.08556674 × 10³ 4.89555637 × 10² 0.00000000] [0.00000000 0.00000000 1.00000000 0.00000000]] |
| RC′ | [[1.00000000 9.22250370 × 10⁻¹² 1.44598916 × 10⁻¹²] [9.23272358 × 10⁻¹² 1.00000000 1.75414967 × 10⁻¹²] [1.43979273 × 10⁻¹² 1.75546004 × 10⁻¹² 1.00000000]] |
| TC′ | [[−1.03589259 × 10²] [−8.54871729 × 10⁻¹⁵] [3.63530766 × 10⁻¹³]] |
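The rectified parameters above are enough to illustrate how a disparity maps to a range. The sketch below uses the textbook relation $Z = fB/d$ for a rectified stereo pair, not necessarily the exact formulation of Section 2.4. It assumes that the translation is expressed in millimetres (the table gives no unit) and that the fourth-column entry of A2′ equals the focal length times the baseline in magnitude; the function names are hypothetical.

```python
import numpy as np

F_PX = 4.08556674e3    # rectified focal length in pixels (A1', A2')
P2_TX = 4.23220829e5   # fourth-column entry of A2' (focal length x baseline)
BASELINE_MM = abs(P2_TX) / F_PX  # about 103.59, matching |TC'| along x

def depth_from_disparity(disparity_px, focal_px=F_PX, baseline_mm=BASELINE_MM):
    """Range in metres for a rectified pair: Z = f * B / d (millimetre baseline assumed)."""
    disparity_px = np.asarray(disparity_px, dtype=float)
    return focal_px * baseline_mm / disparity_px / 1000.0

def depth_change_per_pixel(depth_m, focal_px=F_PX, baseline_mm=BASELINE_MM):
    """Magnitude of dZ/dd in metres per pixel of disparity error: Z^2 / (f * B)."""
    depth_m = np.asarray(depth_m, dtype=float)
    return depth_m ** 2 * 1000.0 / (focal_px * baseline_mm)

print(depth_from_disparity([42.32, 10.0]))
print(depth_change_per_pixel([11.52, 55.16]))
```

Because the sensitivity grows with the square of the range, a fixed disparity error costs far more at long distances than at short ones, which is worth keeping in mind when reading the ranging results.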

| Error Source | World Coordinate Error: Machining Inaccuracies | World Coordinate Error: Thermal Deformation | Pixel Coordinate Error: Projection Distortion | Pixel Coordinate Error: Center Extraction Error | Calibration Error |
|---|---|---|---|---|---|
| Error/pixel | <0.14 | ~0 | <0.07 | <1.4 | 0.019 |

| Actual Distance/m | Direct Ranging/m (Relative Error/%) | Ranging after Rectification/m (Relative Error/%) |
|---|---|---|
| 11.52 | 11.50 (−0.17) | 11.55 (+0.26) |
| 16.38 | 16.24 (−0.85) | 16.26 (−0.73) |
| 22.51 | 22.19 (−1.42) | 21.90 (−2.71) |
| 34.57 | 35.63 (+3.07) | 40.55 (+17.30) |
| 44.02 | 45.79 (+4.02) | 49.63 (+12.74) |
| 55.16 | 52.50 (−4.82) | 55.65 (+0.89) |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zeng, C.; Wei, R.; Gu, M.; Zhang, N.; Dai, Z. High-Precision Calibration Method and Error Analysis of Infrared Binocular Target Ranging Systems. Electronics 2024, 13, 3188. https://doi.org/10.3390/electronics13163188
