Abstract

Traditional road testing of autonomous vehicles faces significant limitations, including long testing cycles, high costs, and substantial risks. Consequently, autonomous driving simulators and dataset-based testing methods have gained attention for their efficiency, low cost, and reduced risk. Simulators can efficiently test extreme scenarios and provide quick feedback, while datasets offer valuable real-world driving data for algorithm training and optimization. However, existing research often provides only brief and limited overviews of simulators and datasets. Moreover, although virtual autonomous driving competitions are recognized as a driver of autonomous driving technology, comprehensive surveys of these competitions are scarce. This survey addresses these gaps by presenting an in-depth analysis of 22 mainstream autonomous driving simulators, focusing on their accessibility, physics engines, and rendering engines. It also compiles 35 open-source datasets, detailing their key scene features and data-collection sensors. Furthermore, the paper surveys 10 notable virtual competitions, highlighting essential information on the simulators, datasets, and test scenarios involved. Finally, this review analyzes the challenges in developing autonomous driving simulators, datasets, and virtual competitions. The aim is to provide researchers with a comprehensive perspective that aids in selecting suitable tools and resources to advance autonomous driving technology and its commercial implementation.

1. Introduction

Autonomous vehicles integrate environmental perception, decision-making [1], and motion control technologies, offering advantages such as enhanced driving safety, alleviated traffic congestion, and reduced traffic accidents [2]. They hold substantial potential in future intelligent transportation systems [3]. However, in real-world driving scenarios, rare but high-risk events, such as sudden roadblocks, unexpected pedestrian or animal crossings, and emergency maneuvers under complex conditions, can lead to severe traffic accidents [4]. Therefore, before autonomous vehicles can be commercialized, they must undergo rigorous and comprehensive testing to ensure reliability across various scenarios [5].

Validating the reliability of autonomous vehicles requires billions of miles of testing on public roads [6]. Traditional road-testing methods present several limitations. Firstly, the testing cycle is prolonged due to the need to evaluate performance under diverse weather, road, and traffic conditions, requiring substantial time and resources [7]. Secondly, the costs are high, encompassing vehicle and equipment investments, personnel wages, insurance, and potential accident liabilities [8]. Lastly, testing carries significant risks, as accidents can occur even under strict testing conditions, potentially harming test personnel and damaging the reputation of autonomous vehicle suppliers [9]. Thus, relying solely on road tests is insufficient for efficiently validating autonomous vehicle performance [10].
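A back-of-the-envelope calculation shows why the required mileage grows so quickly. The sketch below assumes that failures follow a Poisson process and asks how many failure-free miles are needed to claim, at a chosen statistical confidence, that the true failure rate is below a benchmark. This zero-failure bound is a standard textbook argument, not necessarily the method used in [6], and the benchmark of one failure per 100 million miles is an illustrative assumption.

```python
import math


def miles_without_failure(rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to claim, at the given confidence, that the
    true failure rate is below `rate_per_mile`.

    With failures modeled as a Poisson process, the probability of seeing zero
    failures over n miles at rate r is exp(-r * n). Requiring that probability
    to be at most 1 - confidence gives n = -ln(1 - confidence) / r.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie in (0, 1)")
    if rate_per_mile <= 0.0:
        raise ValueError("rate_per_mile must be positive")
    return -math.log(1.0 - confidence) / rate_per_mile


# Assumed benchmark: 1 failure per 100 million miles (illustrative only).
benchmark_rate = 1.0 / 100_000_000
needed = miles_without_failure(benchmark_rate, confidence=0.95)
print(f"{needed:,.0f} failure-free miles")  # about 300 million miles
```

Under these assumptions, roughly 300 million failure-free miles are needed for a single benchmark at 95% confidence; a stricter benchmark, a higher confidence level, or the need to demonstrate a margin of improvement over human drivers pushes the requirement higher still.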

New testing methods and strategies are being explored to more effectively assess the reliability of autonomous vehicles [11]. Currently, companies widely use autonomous driving simulators and datasets to evaluate the performance of autonomous driving modules [12]. These simulators are capable of offering high-fidelity virtual environments, accurate vehicle dynamics and sensor models, and realistic simulations of weather conditions, lighting variations, and traffic scenarios [13]. Furthermore, simulators can rapidly generate and repeatedly test extreme scenarios that are difficult and risky to replicate in reality, significantly enhancing testing efficiency and addressing the lengthy, resource-intensive, and less reproducible nature of road tests [14]. Therefore, simulators can provide a safe and cost-effective environment for performance evaluation [15].

Simulators serve as efficient virtual testing platforms, but they require specific data to accurately reproduce the real environment surrounding automated vehicles. Datasets of sensor data collected in real driving scenarios are therefore essential for reconstructing the diverse situations a vehicle may encounter. This approach enables the validation and optimization of autonomous driving hardware and software in realistic simulated environments [16]. Simulations built on such datasets improve the real-world performance of autonomous driving systems, helping ensure that algorithms operate reliably under complex and variable driving conditions [17]. They also help identify potential defects and limitations in algorithms, providing researchers with valuable insights for improvement [18].

Simulators and datasets significantly enhance the efficiency of research and development in autonomous driving systems, particularly within enterprises. Road testing typically serves as the final validation stage for these systems. However, research groups in academic institutions often lack the personnel and resources to perform extensive road tests. Consequently, virtual autonomous driving competitions have emerged, allowing academic research groups to pit their research outputs, particularly autonomous driving algorithms, against those of enterprises [19]. These competitions provide a practical platform for technology development and foster technical exchange and progress; they rely on virtual testing platforms and realistic data from high-quality datasets [20]. Because they are built on real, safety-critical driving and traffic accident scenarios, virtual competitions allow participants to demonstrate their algorithms in a controlled environment. Furthermore, unlike real-vehicle competitions, virtual competitions do not require significant investment in, or maintenance of, physical vehicles, nor do they incur the related operational costs [21].

1.1. Related Works

This subsection surveys previous reviews related to autonomous driving simulators, datasets, and competitions.

In terms of simulators, Rosique et al. [22] focused on the simulation of perception systems in autonomous vehicles, highlighting key elements and briefly comparing 11 simulators based on open-source status, physics/graphics engines, scripting languages, and sensor simulation. However, their study lacks detailed introductions to the functional features of each simulator and a comprehensive analysis of these dimensions. Yang et al. [23] categorized common autonomous driving simulators into point cloud-based and 3D engine-based types, providing a brief functional overview. Unfortunately, their survey was incomplete and did not thoroughly cover the basic functions or highlight distinctive features of the simulators, limiting readers’ understanding of their specific characteristics and advantages. Kaur et al. [24] provided detailed descriptions of six common simulators: Matlab/Simulink, CarSim, PreScan, CARLA, Gazebo, and LGSVL. They compared CARLA and LGSVL across simulation environment, sensor simulation, map generation, and software architecture. Similarly, Zhou et al. [25] summarized the features of seven common simulators and compared them across several dimensions, such as environment and scenario generation, sensor setup, and traffic participant control. Despite their detailed functional descriptions and comparative analyses, these studies surveyed a limited number of simulators and provided only practical functional comparisons without deeply exploring the differences in simulation principles.

In the domain of autonomous driving datasets, Janai et al. [26] surveyed datasets related to computer vision applications in autonomous driving. Yin et al. [27] summarized several open-source datasets collected primarily on public roads. Kang et al. [28] expanded this work, including more datasets and simulators, and offered comparative summaries based on key influencing factors. Guo et al. [29] introduced the concept of drivability in autonomous driving and categorized an even greater number of open-source datasets. Liu et al. [30] provided an overview of over a hundred datasets, classifying them by sensing domains and use cases, and proposed an innovative evaluation metric to assess their impact. While these studies have made significant progress in collecting, categorizing, and summarizing datasets, they often present key information in a simplified form. Although such summaries are useful for quick reference, they often omit essential details about data collection methods, labeling, and usage restrictions.

Regarding autonomous driving competitions, most existing surveys focus on physical autonomous racing. For instance, Li et al. [31] reviewed governance rules of autonomous racing competitions to enhance their educational value and proposed recommendations for rule-making. Betz et al. [32] conducted a comprehensive survey of algorithms and methods used in perception, planning, control, and end-to-end learning in autonomous racing, and they summarized various autonomous racing competitions and related vehicles. These studies primarily concentrate on the field of autonomous racing, while virtual autonomous driving competitions using simulators and datasets are scarcely addressed.

1.2. Motivations and Contributions

The effective evaluation and selection of autonomous driving simulators require a comprehensive understanding of their functional modules and underlying technologies. However, existing studies often lack a thorough explanation of these functional modules and overlook in-depth discussions of core components such as physics engines and rendering engines. To address these gaps, this study expands on the content of simulator surveys, providing a detailed exploration of their physics and rendering engines. Furthermore, a deep understanding of key functions is crucial for the practical application of simulators. Existing research frequently falls short in its discussion of these functions. Thus, this study focuses on key functionalities such as scenario simulation, sensor simulation, and the implementation of vehicle dynamics simulation. Additionally, we highlight simulators that excel in these functional domains, showcasing their specific applications.

Existing surveys on autonomous driving datasets either cover a limited number of datasets or omit essential information. This study provides a comprehensive introduction to datasets, encompassing various aspects such as dataset types, collection periods, covered scenarios, usage restrictions, and data scales.

Finally, while virtual autonomous driving competitions play a significant role in advancing simulators and datasets, reviews in this area are scarce. This study investigates and summarizes existing virtual autonomous driving competitions.

Our work presents the following contributions:

- This survey is the first to systematically investigate autonomous driving simulators by providing a deep analysis of their physics and rendering engines to support informed simulator selection.

- This survey provides an in-depth analysis of three key functions in autonomous driving simulators: scenario simulation, sensor simulation, and the implementation of vehicle dynamics simulation.

- This survey is the first to systematically review virtual autonomous driving competitions that are valuable for virtually testing autonomous driving systems.

1.3. Organization

In the rest of the article, Section 2 introduces the main functions of 22 mainstream autonomous driving simulators, providing key information on their physics engines, rendering engines, and key functions. Section 3 presents a timeline-based summary of 35 datasets, categorizing them according to specific task requirements and analyzing their construction methods, key features, and applicable scenarios. Section 4 reviews 10 virtual autonomous driving competitions. Section 5 discusses challenges and perspectives in the development of autonomous driving simulators, datasets, and virtual competitions. Finally, Section 6 concludes the survey.

2. Autonomous Driving Simulators

This section provides an overview of 22 autonomous driving simulators, categorized into two groups: open-source and proprietary, which are presented in Section 2.1 and Section 2.2. Additionally, Section 2.3 discusses four critical aspects: accessibility, physics engines, rendering engines, and the essential capabilities required for comprehensive autonomous driving simulators.

2.1. Open Source Simulators

- AirSim

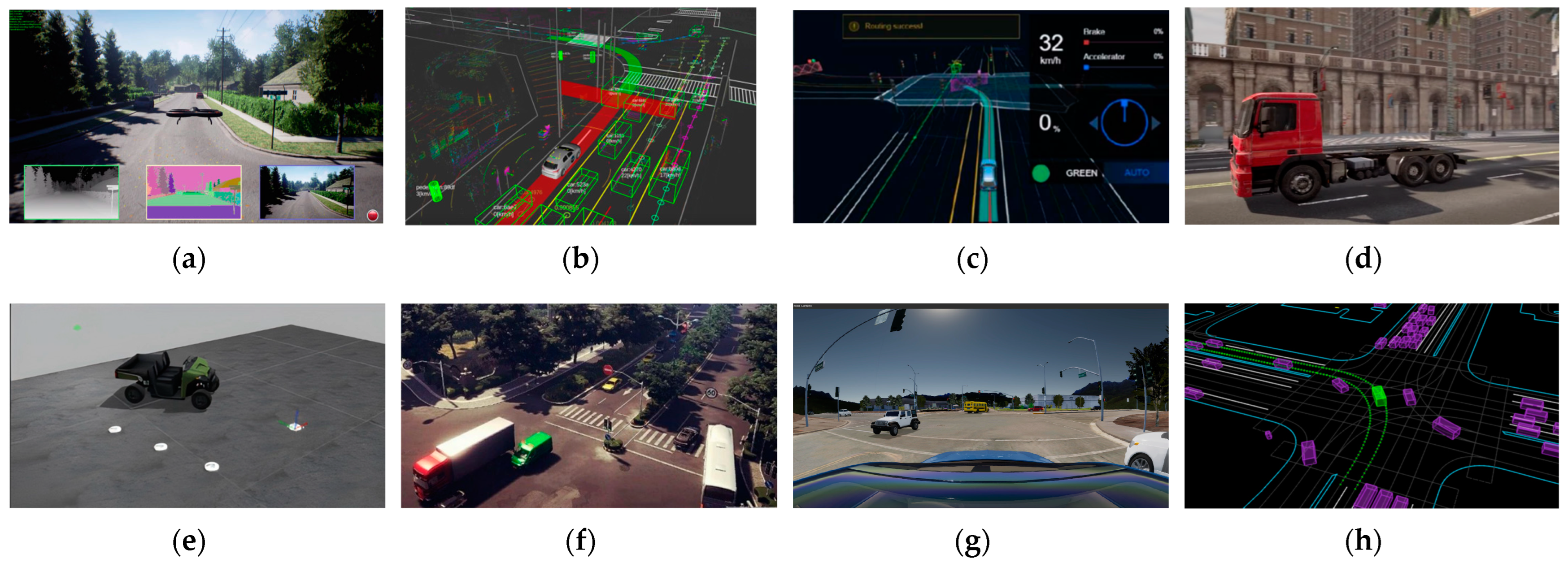

AirSim (v 1.8.1, Figure 1a) [33], developed by Microsoft (Redmond, WA, USA) on Unreal Engine, is tailored for the development and testing of autonomous driving algorithms. AirSim is distinguished by its modular architecture, which encompasses comprehensive environment, vehicle, and sensor models.

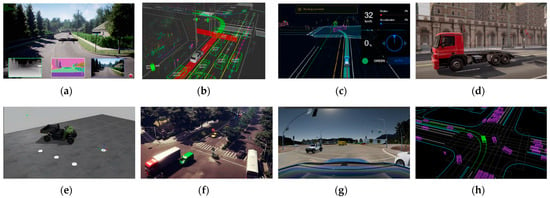

Figure 1.

User interfaces of the open-source simulators: (a) AirSim [33]; (b) Autoware [34]; (c) Baidu Apollo [35]; (d) CARLA [36]; (e) Gazebo [37]; (f) 51Sim-One [38]; (g) LGSVL [39]; (h) Waymax [40].

The simulator is designed for high extensibility, featuring dedicated interfaces for rendering, a universal API layer, and interfaces for vehicle firmware. This facilitates seamless adaptation to a diverse range of vehicles, hardware configurations, and software protocols. Additionally, AirSim’s plugin-based structure allows for straightforward integration into any project utilizing Unreal Engine 4. This platform further enhances the simulation experience by offering hundreds of test scenarios, predominantly crafted through photogrammetry to achieve a high-fidelity replication of real-world environments.

- Autoware

Autoware (v 1.0, Figure 1b) [41], developed on the Robot Operating System (ROS), was created by a research team at Nagoya University. It is designed for scalable deployment across a variety of autonomous applications, with a strong emphasis on best practices and standards to ensure quality and safety in real-world implementations. The platform is highly modular, featuring dedicated modules for perception, localization, planning, and control, each with clearly defined interfaces and APIs. Autoware supports direct integration with vehicle hardware and focuses on both the deployment and the testing of autonomous systems on actual vehicles.

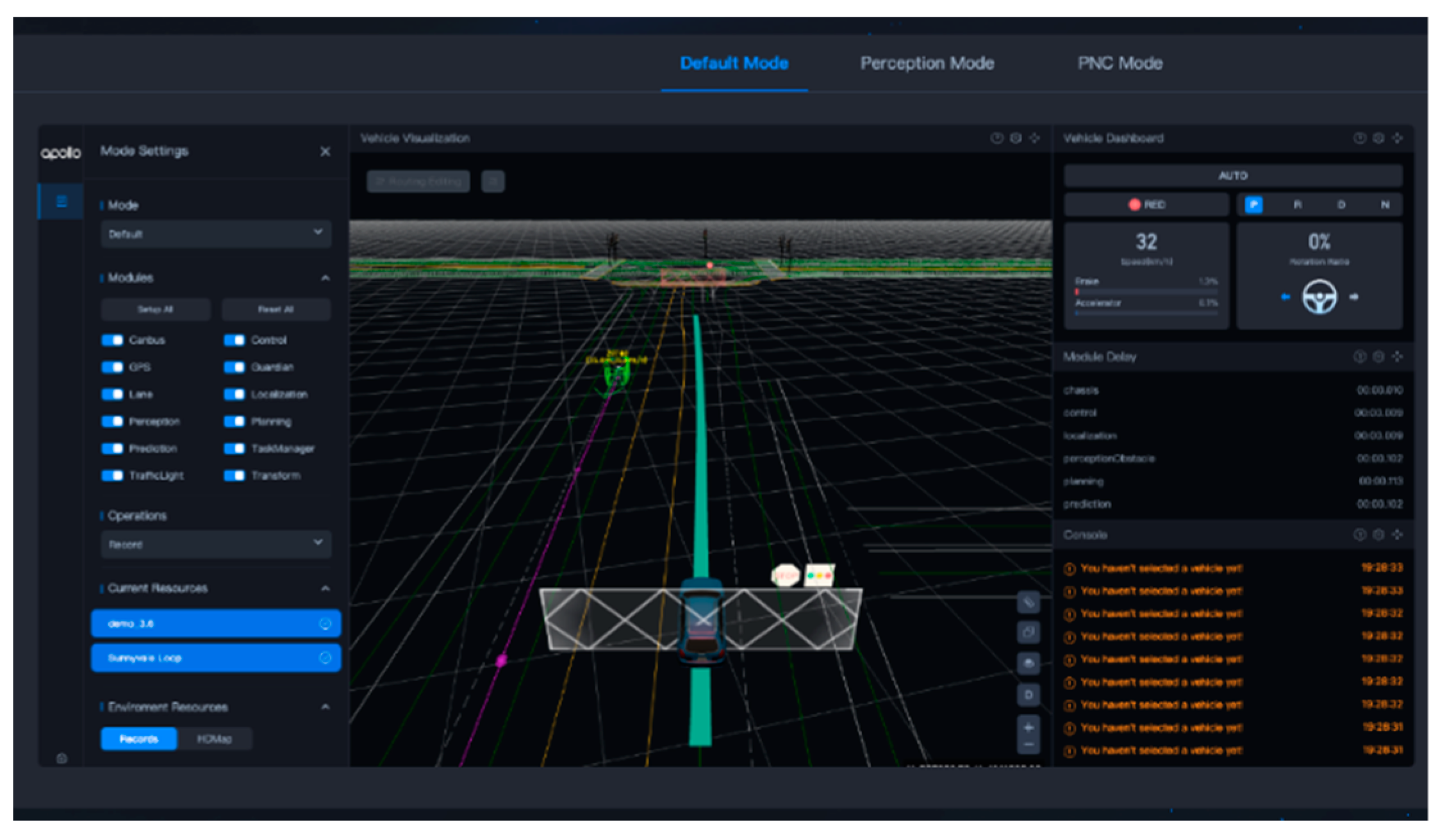

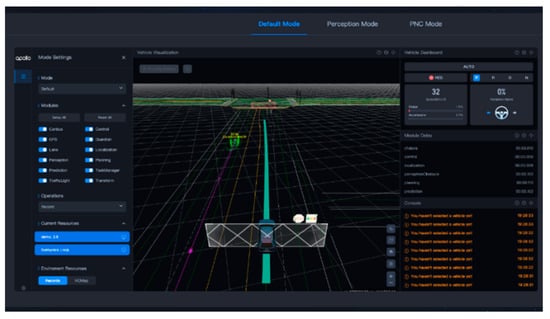

- Baidu Apollo

Baidu Apollo (v 9.0, Figure 1c) [42] was designed for autonomous driving simulation and testing. It defines standards for drive-by-wire vehicles and open interfaces to vehicle hardware, specifying requirements such as the chassis response time to control signal inputs. It also includes specifications for sensors and computing units, with functional, performance, and safety indicators, to ensure that they meet the performance and reliability requirements of autonomous driving. The software suite includes modules for environmental perception, decision-making, planning, and vehicle control, which can be integrated selectively according to project needs. Apollo also provides high-precision mapping, simulation, and a data pipeline for autonomous driving cloud services. These capabilities enable large-scale training of models and vehicle configurations in the cloud, which can then be deployed directly to vehicles after simulation.

- CARLA

CARLA (v 0.9.12, Figure 1d) [43] is designed for the development, training, and validation of autonomous driving systems. It was developed jointly by Intel Labs (Santa Clara, CA, USA) and the Computer Vision Center (Barcelona, Spain). Built on Unreal Engine 4, CARLA lets users create diverse simulation environments from 3D models of buildings, vegetation, traffic signs, infrastructure, vehicles, and pedestrians. Pedestrians and vehicles in CARLA exhibit realistic behavior patterns, and autonomous driving systems can be tested under various weather and lighting conditions to assess their performance across diverse environments.

CARLA also offers a variety of sensor models matched to real sensors for generating machine learning training data, which users can configure flexibly according to their needs. Furthermore, CARLA supports integration with ROS and provides a robust API that lets users control the simulation environment through scripting.

- Gazebo

Gazebo (v 11.0, Figure 1e) [44], initially developed by Andrew Howard and Nate Koenig at the University of Southern California, provides a 3D virtual environment for simulating and testing robotic systems. Users can easily create and import various robot models in Gazebo. It supports multiple physics engines, such as DART [45], ODE [46], and Bullet [47], to simulate the motion and behavior of robots in the real world. Additionally, Gazebo supports the simulation of various sensors, such as cameras and LiDAR.

Gazebo integrates seamlessly with ROS, offering a robust simulation platform for developers who use ROS for robotics development. Gazebo also provides rich plugin interfaces and APIs.

- 51Sim-One

51Sim-One (v 2.2.3, Figure 1f) [38], developed by 51WORLD (Beijing, China), features a rich library of scenarios and supports the import of both static and dynamic data. Furthermore, scenario generation in 51Sim-One follows the ASAM OpenX standards (e.g., OpenDRIVE and OpenSCENARIO).

For perception simulation, the platform supports physics-based simulation of sensors, including cameras, LiDAR, and mmWave radar, and can generate virtual datasets. For motion planning and tracking control simulation, 51Sim-One provides data interfaces, traffic flow simulation, vehicle dynamics simulation, driver models, and an extensive library of evaluation metrics for user configuration. Traffic flow simulation supports integration with software such as SUMO and PTV Vissim, while the vehicle dynamics simulation module includes a 26-degree-of-freedom model for fuel and electric vehicles and supports co-simulation with software such as CarSim, CarMaker, and VI-grade. Moreover, 51Sim-One supports large-scale cloud simulation testing, with accumulated test mileage reaching up to one hundred thousand kilometers per day.

- LGSVL

LGSVL (v 2021.03, Figure 1g) [39] was developed by LG Silicon Valley Lab (Santa Clara, CA, USA). Leveraging Unity physics engine technology, LGSVL supports scenario, sensor, vehicle dynamics, and tracking control simulation, enabling the creation of high-fidelity simulation testing scenarios. These generated testing scenarios include various factors, such as time, weather, road conditions, as well as the distribution and movement of pedestrians and vehicles. Additionally, LGSVL provides a Python API, allowing users to modify scene parameters according to their specific requirements. Furthermore, LGSVL allows users to customize the platform’s built-in 3D HD maps, vehicle dynamics models, and sensor models. LGSVL also offers interfaces with open-source autonomous driving system platforms, such as Apollo and Autoware.
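Parameterized scenario generation of this kind is usually driven programmatically. The sketch below does not use the LGSVL Python API; it only illustrates, with hypothetical parameter names and values, how a few scenario axes (time of day, weather, traffic, and pedestrians) combine into a test matrix that a simulator's scripting interface could then apply one scenario at a time.

```python
from itertools import product

# Hypothetical scenario axes; names, units, and values are illustrative only.
AXES = {
    "time_of_day_h": [8.0, 18.0, 23.0],
    "rain": [0.0, 0.5, 1.0],   # 0 = dry, 1 = heavy rain
    "fog": [0.0, 0.7],         # 0 = clear, 1 = dense fog
    "npc_vehicles": [5, 20],
    "pedestrians": [0, 10],
}


def scenario_grid(axes: dict):
    """Yield one parameter dict per combination of axis values (full factorial)."""
    names = list(axes)
    for values in product(*(axes[name] for name in names)):
        yield dict(zip(names, values))


scenarios = list(scenario_grid(AXES))
print(len(scenarios))  # 3 * 3 * 2 * 2 * 2 = 72
print(scenarios[0])
```

The grid grows multiplicatively with every added axis, which is one reason why scripted, parallel execution of scenarios matters in practice.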

- Waymax

Waymax (v 0.1.0, Figure 1h) [40], developed by Waymo (Mountain View, CA, USA), focuses on data-driven simulation of multi-agent autonomous driving. It concentrates primarily on large-scale simulation and testing of the decision-making and planning modules. Rather than generating high-fidelity scenes, Waymax represents scenes simply, mainly as straight lines, curves, and blocks; its realism and complexity lie primarily in the interactions among multiple agents.

Waymax is implemented in JAX, a machine learning framework, which allows simulations to fully exploit hardware accelerators such as GPUs and TPUs and thereby speeds up simulation. Waymax uses Waymo's real-world driving dataset, the Waymo Open Motion Dataset (WOMD), to construct simulation scenarios, and it provides data loading and processing capabilities for other datasets. Additionally, Waymax offers general benchmarks and simulated agents that help users evaluate the effectiveness of planning methods. Finally, Waymax can train the behavior of interactive agents in different scenarios using methods such as imitation learning and reinforcement learning.
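Because Waymax's realism comes from agent interaction, it helps to see what a reactive agent looks like. A common choice for reactive simulated traffic is a car-following model such as the Intelligent Driver Model (IDM). The sketch below is not Waymax code or API: it is plain Python, the parameter values are illustrative assumptions, and the "logged" leader is simply a stalled vehicle that never moves while two followers react to it and to each other. In JAX code, per-agent updates like this are typically vectorized with `jax.vmap` and compiled with `jax.jit`, which is what lets accelerators step many agents at once.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class IDMParams:
    v0: float = 15.0     # desired free-road speed [m/s]
    T: float = 1.5       # desired time headway [s]
    s0: float = 2.0      # minimum standstill gap [m]
    a_max: float = 1.0   # maximum acceleration [m/s^2]
    b: float = 1.5       # comfortable deceleration [m/s^2]
    delta: float = 4.0   # free-road acceleration exponent


VEHICLE_LENGTH = 5.0  # [m], assumed identical for every agent


def idm_accel(v: float, v_lead: float, gap: float, p: IDMParams) -> float:
    """IDM acceleration of a follower at speed v behind a leader at speed v_lead."""
    closing = v - v_lead
    s_star = p.s0 + max(0.0, v * p.T + v * closing / (2.0 * math.sqrt(p.a_max * p.b)))
    return p.a_max * (1.0 - (v / p.v0) ** p.delta - (s_star / max(gap, 1e-3)) ** 2)


def step(xs, vs, lead_x, lead_v, p, dt=0.1):
    """Advance all followers (ordered front to back) by one tick.

    Every acceleration is computed from the same snapshot before any state
    changes, so agents react to each other synchronously.
    """
    fronts = [(lead_x, lead_v)] + list(zip(xs[:-1], vs[:-1]))
    accels = [idm_accel(v, fv, fx - x - VEHICLE_LENGTH, p)
              for (x, v), (fx, fv) in zip(zip(xs, vs), fronts)]
    new_vs = [max(0.0, v + a * dt) for v, a in zip(vs, accels)]
    new_xs = [x + v * dt for x, v in zip(xs, new_vs)]
    return new_xs, new_vs


# A stalled vehicle replayed from a "log" (it never moves) and two reactive followers.
params = IDMParams()
lead_x, lead_v = 200.0, 0.0
xs, vs = [100.0, 75.0], [10.0, 10.0]
min_gap = math.inf
for _ in range(1000):  # 100 s of simulated time at dt = 0.1 s
    xs, vs = step(xs, vs, lead_x, lead_v, params)
    fronts_x = [lead_x] + xs[:-1]
    min_gap = min(min_gap, *(fx - x - VEHICLE_LENGTH for fx, x in zip(fronts_x, xs)))
print(f"final speeds {vs}, smallest gap {min_gap:.2f} m")
```

Both followers come to rest behind the stalled vehicle without any scripted braking; the stopping behavior emerges from each agent reacting to the one ahead.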

2.2. Non-Open-Source Simulators

- Ansys Autonomy

Ansys Autonomy (v 2024R2, Figure 2a) [48] can perform real-time closed-loop simulations with multiple sensors, traffic objects, scenarios, and environments. It features a rich library of scene resources and uses physically accurate 3D scene modeling. Users can customize and generate realistic simulation scenes through the scenario editor module. Moreover, Ansys Autonomy supports the import of map data such as OpenStreetMap and automatically generates road models that match the map.
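The first step of turning OpenStreetMap data into road models is extracting drivable roads from the map's ways. The sketch below is a hypothetical illustration of that step, not Ansys's import pipeline: it parses a tiny hand-written excerpt in the OSM XML format (`node`, `way`, `nd`, and `tag` elements) and keeps only ways tagged `highway`, producing centerline polylines that a simulator could later convert into road geometry.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written OSM XML excerpt (illustrative, not real map data).
OSM_SNIPPET = """<osm version="0.6">
  <node id="1" lat="48.1000" lon="11.5000"/>
  <node id="2" lat="48.1010" lon="11.5000"/>
  <node id="3" lat="48.1010" lon="11.5015"/>
  <node id="4" lat="48.1020" lon="11.5020"/>
  <way id="10">
    <nd ref="1"/><nd ref="2"/><nd ref="3"/>
    <tag k="highway" v="residential"/>
    <tag k="lanes" v="2"/>
  </way>
  <way id="11">
    <nd ref="3"/><nd ref="4"/>
    <tag k="barrier" v="fence"/>
  </way>
</osm>"""


def extract_roads(osm_xml: str) -> list:
    """Turn OSM ways tagged as highways into simple road records."""
    root = ET.fromstring(osm_xml)
    coords = {n.get("id"): (float(n.get("lat")), float(n.get("lon")))
              for n in root.iter("node")}
    roads = []
    for way in root.iter("way"):
        tags = {t.get("k"): t.get("v") for t in way.iter("tag")}
        if "highway" not in tags:
            continue  # fences, buildings, land use, etc. are not drivable roads
        roads.append({
            "id": way.get("id"),
            "type": tags["highway"],
            "lanes": int(tags.get("lanes", "1")),  # assume one lane if untagged
            "centerline": [coords[nd.get("ref")] for nd in way.iter("nd")],
        })
    return roads


for road in extract_roads(OSM_SNIPPET):
    print(road["id"], road["type"], road["lanes"], len(road["centerline"]))
```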

For traffic scenario simulation, Ansys Autonomy provides semantic-based road traffic flow design, enabling simulation of pedestrian, vehicle, and traffic sign behaviors, as well as interaction behaviors between pedestrians and vehicles, and pedestrians and the environment [49]. Ansys Autonomy also features a built-in script language interface, supporting interface control with languages such as Python and C++ to define traffic behaviors. Furthermore, various vehicle dynamics models and sensor models are pre-installed in Ansys Autonomy, allowing users to customize parameters and import external vehicle dynamics models (such as CarSim) and user-developed models.

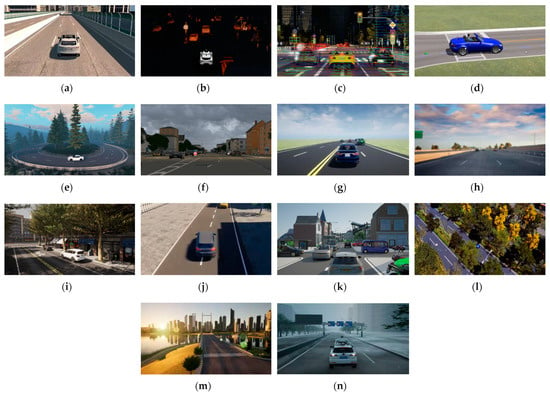

Figure 2.

User interfaces of the non-open-source simulators: (a) Ansys Autonomy [50]; (b) CarCraft [51]; (c) Cognata [52]; (d) CarSim [53]; (e) CarMaker [54]; (f) HUAWEI Octopus [55]; (g) Matlab Automated Driving Toolbox [56]; (h) NVIDIA DRIVE Constellation [57]; (i) Oasis Sim [58]; (j) PanoSim [59]; (k) PreScan [60]; (l) PDGaiA [61]; (m) SCANeR Studio [62]; (n) TAD Sim 2.0 [63].

- CarCraft

Developed by Waymo's autonomous driving team (Mountain View, CA, USA), CarCraft (Figure 2b) is a simulation platform used exclusively by Waymo to train its autonomous vehicles [64]. First revealed in 2017, it has been neither open-sourced nor commercialized. CarCraft is used primarily for large-scale training: it constructs traffic scenes within the platform and continuously varies the motion states of the pedestrians and vehicles in them, improving the vehicles' ability to handle various scenarios. Up to 25,000 virtual autonomous vehicles can run continuously on CarCraft, accumulating over eight million miles of driving daily [65].
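As a quick consistency check on these figures, assuming exactly 25,000 vehicles running around the clock, the daily mileage works out per vehicle as:

$$
\frac{8 \times 10^{6}\ \text{mi/day}}{25{,}000\ \text{vehicles}} = 320\ \text{mi per vehicle per day},
\qquad
\frac{320\ \text{mi}}{24\ \text{h}} \approx 13.3\ \text{mph}.
$$

An average of roughly 13 mph per continuously running virtual vehicle is plausible for urban traffic scenes, so the reported fleet size and daily mileage are mutually consistent.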

- Cognata

Cognata (Figure 2c) [52], developed by the Israeli company Cognata, enables large-scale cloud simulation covering a vast array of driving scenarios to accelerate the commercialization of autonomous vehicles. The platform employs digital twin technology and deep neural networks to generate high-fidelity simulation scenarios and includes a customizable scene library that users can modify or extend with their own scenarios. Additionally, Cognata provides extensive traffic models and a database for AI interactions with scenarios, allowing accurate simulation of drivers, cyclists, and pedestrians and their behavioral habits within these settings. Moreover, the platform offers a variety of sensor and sensor fusion models, including RGB HD cameras, fisheye cameras, lens distortion correction, mmWave radar, LiDAR, long-wave infrared cameras, and ultrasonic sensors, along with a deep neural network-based radar sensor model. It also provides a toolkit for rapid sensor creation. Cognata features robust data analytics and visualization capabilities, offers ready-to-use autonomous driving verification standards, and allows users to write custom rules, for example, rules based on specific data ranges, thresholds, or other parameters that filter, classify, or analyze data. Integration with model interfaces (e.g., Simulink, ROS) and tool interfaces (e.g., SUMO), together with support for the OpenDrive and OpenScenario standards, facilitates interaction across different platforms.
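Threshold-based rules of the kind described above can be expressed compactly. The sketch below is a hypothetical illustration, not Cognata's rule language: the `ThresholdRule` class, the signal names (`ttc` for time-to-collision, `accel` for longitudinal acceleration), the thresholds, and the logged frames are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ThresholdRule:
    name: str
    signal: str
    lower: Optional[float] = None  # violated if value < lower
    upper: Optional[float] = None  # violated if value > upper

    def violated(self, frame: dict) -> bool:
        value = frame[self.signal]
        too_low = self.lower is not None and value < self.lower
        too_high = self.upper is not None and value > self.upper
        return too_low or too_high


def evaluate(frames: list, rules: list) -> dict:
    """Map each rule name to the timestamps of the frames that violate it."""
    return {r.name: [f["t"] for f in frames if r.violated(f)] for r in rules}


# Illustrative logged frames from one simulated run.
frames = [
    {"t": 0.0, "ttc": 5.0, "accel": -1.0},
    {"t": 0.1, "ttc": 1.2, "accel": -3.0},
    {"t": 0.2, "ttc": 0.9, "accel": -5.5},
]
rules = [
    ThresholdRule("min_time_to_collision", "ttc", lower=1.5),
    ThresholdRule("harsh_braking", "accel", lower=-4.0),
]
print(evaluate(frames, rules))
# {'min_time_to_collision': [0.1, 0.2], 'harsh_braking': [0.2]}
```

Keeping rules as data rather than code makes it easy to filter or classify large volumes of simulation logs with the same evaluation routine.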

- CarSim

CarSim (v 2024.2, Figure 2d) [66], developed by Mechanical Simulation Corporation (Ann Arbor, USA), features a solver developed based on extensive real vehicle data. It supports various testing environments, including software-in-the-loop (SIL), model-in-the-loop (MIL), and hardware-in-the-loop (HIL). CarSim features a set of vehicle dynamics models, and users can modify component parameters according to specific requirements. The software also boasts significant extensibility; it is configured with specific data interfaces for real-time data exchange with external software, facilitating multifunctional simulation tests, such as joint simulations with PreScan and Matlab/Simulink.
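To make concrete what a vehicle dynamics model computes, the sketch below steps a kinematic bicycle model, a far simpler model than those in CarSim: it ignores tire slip, suspension, and load transfer, which high-fidelity packages do model. The wheelbase of 2.7 m, the time step, and the steering input are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    x: float = 0.0    # rear-axle position [m]
    y: float = 0.0
    yaw: float = 0.0  # heading [rad]
    v: float = 0.0    # speed [m/s]


def bicycle_step(s: State, accel: float, steer: float,
                 wheelbase: float = 2.7, dt: float = 0.01) -> State:
    """One explicit-Euler step of the kinematic bicycle model (rear-axle reference)."""
    return State(
        x=s.x + s.v * math.cos(s.yaw) * dt,
        y=s.y + s.v * math.sin(s.yaw) * dt,
        yaw=s.yaw + s.v / wheelbase * math.tan(steer) * dt,
        v=s.v + accel * dt,
    )


# Drive for 1 s at 10 m/s with a constant 0.1 rad front-wheel angle.
s = State(v=10.0)
for _ in range(100):
    s = bicycle_step(s, accel=0.0, steer=0.1)
print(f"x={s.x:.2f} m, y={s.y:.2f} m, yaw={s.yaw:.3f} rad")
```

Changing a parameter such as `wheelbase` directly changes the yaw response, which is the simplest analogue of the component-parameter edits that dedicated dynamics tools expose.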

- CarMaker

CarMaker (v 13.0, Figure 2e) [67], developed by IPG Automotive (Karlsruhe, Germany), focuses on vehicle dynamics, Advanced Driver Assistance Systems (ADAS), and autonomous driving simulation. The platform provides vehicle dynamics models with extensive customization options, allowing users to replace components with specific models or hardware as required. It includes two specialized modules for traffic scenario construction: IPG Road and IPG Traffic. The IPG Road module enables the editing of various road types and conditions, while IPG Traffic offers a selection of traffic elements. Additionally, CarMaker is equipped with MovieNX, a visualization tool capable of rendering realistic environmental changes such as time-of-day, weather, and lighting variations. The platform's extensibility supports integration with Matlab/Simulink, Unreal Engine, and dSPACE, making it suitable for MIL, SIL, HIL, and Vehicle-in-the-Loop (VIL) configurations. CarMaker also excels in data analytics: it not only stores vast amounts of data but also enables real-time viewing, comparison, and analysis through the integrated IPG Control tool.

- HUAWEI Octopus

HUAWEI Octopus (Figure 2f) [68] was developed by Huawei (Shenzhen, China) to address challenges in data collection, processing, and model training in the autonomous driving development process. The platform offers three main services: data services, training services, and simulation services. The data service processes data collected from autonomous vehicles and refines new training datasets through a labeling platform. The training service provides online algorithm management and model training, continually iterating labeling algorithms to produce datasets and improve model accuracy; it also accelerates training through both software and hardware enhancements. The simulation service includes a preset library of 20,000 simulation scenarios, offers online simulation testing of decision-making and planning-and-control algorithms, and runs large-scale parallel simulations in the cloud to accelerate iteration.

- Matlab

The Matlab (v R2024a, Figure 2g) [69] Automated Driving Toolbox [70] and Deep Learning Toolbox [71] were developed by MathWorks (Natick, MA, USA). The primary functionalities of the Automated Driving Toolbox include designing and testing perception systems based on computer vision, LiDAR, and radar; providing object tracking and multi-sensor fusion algorithms; accessing high-definition map data; and offering reference applications for common ADAS and autonomous driving functions. The Deep Learning Toolbox, on the other hand, focuses on the design, training, analysis, and simulation of deep learning networks. It features a deep network designer, support for importing various pre-trained models, flexible API configuration and model training, multi-GPU accelerated training, automatic generation of optimized code, visualization tools, and methods for interpreting network results.

- NVIDIA DRIVE Constellation

NVIDIA DRIVE Constellation (Figure 2h) [72] is a real-time HIL simulation testing platform built on two separate servers and designed for rapid, efficient, large-scale testing and validation. The first server runs NVIDIA DRIVE Sim, which simulates real-world scenarios and the autonomous vehicle's sensors, generating sensor data streams for test scenarios that can include various weather conditions, changes in ambient lighting, complex road and traffic scenarios, and sudden or extreme driving events. The second server hosts the NVIDIA DRIVE Pegasus AI automotive computing platform, which runs a complete autonomous vehicle software stack on the target hardware and processes the sensor data produced by DRIVE Sim. After processing the data, DRIVE Pegasus sends its decision-making and planning results back to DRIVE Sim, closing the loop and thereby validating the developed autonomous driving software and hardware.
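The closed loop described above (simulator produces sensor data, the stack under test returns commands, and the commands feed back into the simulation) can be sketched in a few lines. The classes below are hypothetical stand-ins, not NVIDIA APIs: in the real platform the two sides run on separate servers and exchange sensor streams, whereas here they run in one process, the "sensor" is a single range reading, and the "stack" is a simple stopping-distance braking rule with assumed parameters.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    t: float                  # simulation time [s]
    obstacle_distance: float  # range to the obstacle ahead [m]
    ego_speed: float          # [m/s]


@dataclass
class Command:
    accel: float  # requested longitudinal acceleration [m/s^2]


class SimServer:
    """Stand-in for the scenario and sensor simulation side (hypothetical)."""

    def __init__(self, obstacle_x=50.0, speed=15.0, dt=0.05):
        self.t, self.x, self.v, self.dt = 0.0, 0.0, speed, dt
        self.obstacle_x = obstacle_x

    def render_sensors(self) -> SensorFrame:
        return SensorFrame(self.t, self.obstacle_x - self.x, self.v)

    def apply(self, cmd: Command) -> None:
        # Integrate the ego vehicle with the command returned by the stack.
        self.v = max(0.0, self.v + cmd.accel * self.dt)
        self.x += self.v * self.dt
        self.t += self.dt


class DrivingStack:
    """Stand-in for the software stack running on the target hardware (hypothetical)."""

    def __init__(self, decel=6.0, margin=5.0):
        self.decel, self.margin = decel, margin

    def process(self, frame: SensorFrame) -> Command:
        # Brake once the remaining distance (minus a safety margin) no longer
        # exceeds the stopping distance v^2 / (2 * decel).
        stopping = frame.ego_speed ** 2 / (2.0 * self.decel)
        brake = frame.obstacle_distance - self.margin <= stopping
        return Command(-self.decel if brake else 0.0)


def run_closed_loop(sim: SimServer, stack: DrivingStack, steps: int = 400) -> SimServer:
    for _ in range(steps):
        frame = sim.render_sensors()  # simulator -> sensor data stream
        cmd = stack.process(frame)    # stack -> decision and control output
        sim.apply(cmd)                # output fed back into the simulation
    return sim


sim = run_closed_loop(SimServer(), DrivingStack())
print(f"stopped {sim.obstacle_x - sim.x:.2f} m before the obstacle")
```

Because the stack only sees `SensorFrame` objects and only emits `Command` objects, the same loop structure holds whether the stack runs in-process, as here, or on separate hardware.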

- Oasis Sim

Oasis Sim (v 3.0, Figure 2i) [58] is an autonomous driving simulation platform developed by SYNKROTRON (Xi’an, China) based on CARLA. This platform offers digital twin environments that can simulate changes in lighting and weather conditions. Oasis Sim features a multi-degree-of-freedom vehicle dynamics model and supports co-simulation with vehicle dynamics software such as CarSim and CarMaker.

Additionally, Oasis Sim provides various AI-enhanced, physics-based sensor models, including cameras and LiDAR, driven by real-world data. For scenario construction, Oasis Sim includes a rich library of scenarios, such as annotated regulatory scenarios, natural driving scenarios, and hazardous condition scenarios. It also features intelligent traffic flow and AI driver models.

Oasis Sim supports AI-driven automated generation of edge-case scenarios and includes a graphical scenario editing tool. For large-scale simulation testing, Oasis Sim supports cloud-based large-scale concurrency, enabling automated testing of massive scenarios. The platform is equipped with extensive interfaces, supporting ROS, C++, Simulink, and Python, and is compatible with OpenDrive and OpenScenario formats.

- PanoSim

PanoSim (v 5.0, Figure 2j) [73], developed by the company PanoSim (Jiaxing, China), offers a variety of vehicle dynamics models, driving scenarios, traffic models, and sensor models. PanoSim can also co-simulate with Matlab/Simulink, providing an integrated solution that covers offline simulation, MIL, SIL, HIL, and VIL.

- PreScan

Originally developed by TASS International (Helmond, The Netherlands), PreScan (v 2407, Figure 2k) [74] offers a wide range of sensor models, such as radar, LiDAR, cameras, and ultrasonic sensors, which users can customize in terms of parameters and installation locations. PreScan also features an extensive array of scenario configuration resources, including environmental elements like weather and lighting, as well as static objects such as buildings, vegetation, roads, traffic signs, and infrastructure, plus dynamic objects like pedestrians, motor vehicles, and bicycles.

Additionally, PreScan provides various vehicle models and dynamic simulation capabilities and supports integration with Matlab/Simulink and CarSim for interactive simulations. The platform is also compatible with MIL, SIL, and HIL.

- PDGaiA

Developed by Peidai Automotive (Shanghai, China), PDGaiA (v 7.0, Figure 2l) [61] provides cloud-based simulation and computing services: users can either access cloud data through standalone tools and run tests locally, or use cloud computing centers for parallel processing and algorithm validation.

PDGaiA includes a suite of full-physics sensor models, including mmWave radar, ground-truth sensors for algorithm validation, cameras, LiDAR, and GPS. These models support the simulation of various visual effects and dynamic sensor performance.

Additionally, PDGaiA enables the creation of static and dynamic environments and weather conditions and offers a diverse scenario library. The platform also includes tool modules for event logging and playback during tests, as well as functions for importing real-world data.

- SCANeR Studio

SCANeR Studio (v 2024.1, Figure 2m) [62], developed by AVSimulation (Boulogne-Billancourt, France), is organized into five modules: TERRAIN, VEHICLE, SCENARIO, SIMULATION, and ANALYSIS. The TERRAIN module enables road creation, allowing users to adjust road segment parameters and combine segments into different road networks. The VEHICLE module supports the construction of vehicle dynamics models. The SCENARIO module, dedicated to scenario creation, offers a resource library for setting up road facilities, weather conditions, pedestrians, vehicles, and interactions among dynamic objects. The SIMULATION module executes simulations. The ANALYSIS module saves 3D animations of the simulation process, supports playback, and plots how various parameters change during the simulation for result analysis. Additionally, SCANeR Studio includes script control, enabling users to drive scene changes through scripts, such as starting rain at a specific time or turning off streetlights. The platform also integrates interfaces for closed-loop simulation with external software such as Matlab/Simulink.
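To illustrate what time-triggered scene scripting involves, the following Python sketch schedules events such as starting rain or switching off streetlights at given simulation times. It is a hypothetical, simulator-agnostic example: `TimedEvent`, `run_script`, and the world-state keys are illustrative names and do not reflect SCANeR Studio's actual scripting language or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

World = Dict[str, object]

@dataclass(order=True)
class TimedEvent:
    """A scripted scene change that fires once when simulation time reaches time_s."""
    time_s: float
    name: str = field(compare=False)
    action: Callable[[World], None] = field(compare=False)

def run_script(events: List[TimedEvent], duration_s: float, dt: float) -> Tuple[List[str], World]:
    """Advance a toy world state in fixed steps and apply each event at its trigger time."""
    world: World = {"rain_intensity": 0.0, "streetlights_on": True}
    pending = sorted(events)  # earliest trigger time first
    log: List[str] = []
    n_steps = int(round(duration_s / dt))
    for k in range(n_steps + 1):
        t = k * dt
        # Fire every event whose trigger time has been reached at this step.
        while pending and pending[0].time_s <= t + 1e-9:
            event = pending.pop(0)
            event.action(world)
            log.append(f"{t:.1f}s {event.name}")
    return log, world

def start_rain(world: World) -> None:
    world["rain_intensity"] = 0.8  # hypothetical 0..1 intensity scale

def lights_off(world: World) -> None:
    world["streetlights_on"] = False

if __name__ == "__main__":
    script = [TimedEvent(12.0, "streetlights_off", lights_off),
              TimedEvent(5.0, "start_rain", start_rain)]
    print(run_script(script, duration_s=20.0, dt=0.5))
```

Because the world is advanced in fixed steps, an event scheduled between two steps (for example at 5.2 s with `dt = 0.5`) fires at the first step at or after its trigger time, here 5.5 s.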

- TAD Sim 2.0

TAD Sim 2.0 (v 2.0, Figure 2n) [23], developed by Tencent (Shenzhen, China), is built on Unreal Engine 4 together with virtual reality and cloud gaming technologies. The platform employs a data-driven approach to support closed-loop simulation testing of autonomous driving modules, including perception, decision-making, planning, and control. TAD Sim 2.0 incorporates various industrial-grade vehicle dynamics models, supports sensor models such as LiDAR, mmWave radar, and cameras, and offers detailed test scenarios that reproduce real-world conditions such as weather and sunlight. In addition to conventional scene editing and traffic flow simulation, TAD Sim 2.0 uses Agent AI technology to train traffic flow AI (including pedestrians and vehicles) on collected road data. The platform also provides the TAD Viz visualization component and supports international standard interface formats (e.g., OpenDRIVE, OpenCRG, OpenSCENARIO).

2.3. Discussions of Autonomous Driving Simulators

Table 1 summarizes key information on the autonomous driving simulators discussed above in six aspects: accessibility, operating system, programming language, physics engine, rendering engine, and sensor models. These simulators are discussed below in terms of accessibility, simulation engines, and critical functions.

Table 1.

Features of autonomous driving simulators.

2.3.1. Accessibility

Accessibility is a prime consideration when selecting an autonomous driving simulator. Open-source simulators provide a freely accessible environment and, because they are free of charge, benefit from extensive community support. This community can continuously improve and optimize the simulators, allowing them to adapt rapidly to new technologies and market demands. However, open-source simulators may lack professional technical support, training services, and customized solutions, which can make it difficult for users to obtain timely assistance with complex issues [28]. Moreover, open-source simulators often prioritize generality and flexibility and may therefore fall short in providing customized solutions for specific application scenarios.

In contrast, proprietary simulators often excel in stability and customization. They are typically developed by professional teams and come with comprehensive technical support, training, and consulting services, making them more advantageous for addressing complex problems and meeting specific needs [13]. For example, CarCraft was developed by the Waymo and Google teams specifically for their private testing activities [64]. However, proprietary simulators also have clear disadvantages. They are often expensive, which can be a significant burden for academic and research projects. Additionally, as proprietary software, they may not adapt as easily to new research or development needs as open-source simulators.

When choosing between these types of simulators, it is essential to carefully consider the specific requirements and goals of the project [75]. For academic and research projects, open-source simulators may be more appealing due to their cost-effectiveness and flexibility. For instance, CARLA is a popular open-source simulator that offers a wide range of tools and community support, allowing researchers to build and test autonomous driving systems more easily [76]. For enterprises, on the other hand, proprietary simulators may be more attractive because they offer higher stability and customization services [77]. For example, PanoSim provides Nezha Automobile with an integrated simulation system for vehicle-in-the-loop development and testing [59], and ADASTEC has built its Level 4 automation platform for full-size commercial vehicles on Autoware [78].

2.3.2. Physics Engines

The physics engine is a core component of a simulator. It simulates physical phenomena in the virtual environment, such as gravity, collisions, and rigid body dynamics, so that the motion and behavior of objects conform to real-world physical laws. Several common physics engines used by the simulators above are outlined below.

- ODE

The Open Dynamics Engine (ODE, v 0.5) [46] is an open-source library for simulating rigid body dynamics, developed by Russell Smith with contributions from several other developers. It is known for its performance, flexibility, and robustness, which have made it a prominent tool in game development, virtual reality, robotics, and other simulation applications [79]. ODE simulates rigid body dynamics and provides collision detection for a set of geometric primitives. It uses constraint equations to model joint and contact constraints and determines the new position and orientation of each rigid body at every simulation step [80]. Each step proceeds as follows: external control forces or torques are applied to each rigid body; collision detection identifies contacts between rigid bodies; a contact joint is created for each detected contact to model the contact force; the Jacobian matrices of the constraints are assembled; a linear complementarity problem (LCP) [81] is solved for contact forces that satisfy all constraints; and the resulting linear and angular velocities are integrated numerically to obtain the next positions and orientations. ODE provides two LCP solvers: an iterative Projected Gauss–Seidel solver [82] and a Lemke algorithm-based solver [83].
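The LCP step can be made concrete with a minimal Projected Gauss–Seidel sketch in Python. It solves the generic problem of finding z >= 0 such that w = Mz + q >= 0 and z_i · w_i = 0 for every row; in a contact solver, z would hold the constraint impulses and M the effective-mass matrix assembled from the Jacobians. This is a textbook PGS sweep written for illustration, not ODE's source code, and `pgs_lcp` is a hypothetical name.

```python
from typing import List

def pgs_lcp(M: List[List[float]], q: List[float], iterations: int = 200) -> List[float]:
    """Projected Gauss-Seidel for the LCP:
    find z >= 0 such that w = M z + q >= 0 and z_i * w_i = 0 for every i.
    Convergence is guaranteed for symmetric positive definite M."""
    n = len(q)
    z = [0.0] * n
    for _ in range(iterations):
        for i in range(n):
            # Row i of M z + q, leaving out the diagonal term M[i][i] * z[i].
            r = q[i] + sum(M[i][j] * z[j] for j in range(n) if j != i)
            # Gauss-Seidel update (solve w_i = 0 for z_i), then project onto z_i >= 0.
            z[i] = max(0.0, -r / M[i][i])
    return z

if __name__ == "__main__":
    M = [[2.0, 1.0], [1.0, 2.0]]
    print(pgs_lcp(M, [-1.0, 1.0]))  # → [0.5, 0.0]
```

For q = [-1, 1] the second constraint is inactive, so its value is clamped to zero while the first row solves w_0 = 0. Each sweep here costs O(n²) because M is stored densely; practical contact solvers exploit the sparsity of M.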

- Bullet

Bullet (v 3.25) [47] is an open-source physics simulation engine developed by Erwin Coumans in 2005. Bullet supports GPU acceleration, which improves the performance and efficiency of physical simulation through parallel computation and makes it especially suitable for large-scale scenes and complex objects. It offers rigid body dynamics simulation, collision detection, constraint solving, and soft body simulation. Bullet solves rigid body systems with a time-stepping scheme [84]; its simulation process is similar to ODE’s, but it provides a broader range of LCP solvers, including Sequential Impulse [85], Lemke, Dantzig [86], and Projected Gauss–Seidel solvers.
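Sequential impulse can be read as Projected Gauss–Seidel applied directly in velocity space: each contact is visited in turn, and its accumulated normal impulse is updated and clamped to stay non-negative. The Python sketch below assumes a hypothetical 1D scene of two boxes stacked on the ground, with no friction, restitution, or position correction; it illustrates the technique and is not Bullet's implementation.

```python
from typing import List, Tuple

def sequential_impulse_stack(m_a: float, m_b: float, g: float = 9.81,
                             dt: float = 1.0 / 60.0,
                             iterations: int = 50) -> Tuple[float, float, List[float]]:
    """Resolve two 1D resting contacts (ground-A and A-B) with sequential impulses.

    Velocities are positive upward. Gravity is applied first; each contact's
    impulse is then updated in turn until both relative normal velocities are >= 0."""
    inv_a, inv_b = 1.0 / m_a, 1.0 / m_b
    v_a = v_b = -g * dt      # velocities after the gravity update
    lam = [0.0, 0.0]         # accumulated normal impulses: ground-A, A-B
    for _ in range(iterations):
        # Contact 0: static ground (infinite mass) vs body A.
        vn = v_a
        old = lam[0]
        lam[0] = max(0.0, old - vn / inv_a)  # clamp the accumulated impulse, not the increment
        v_a += (lam[0] - old) * inv_a
        # Contact 1: body A vs body B, with the normal pointing from A up to B.
        vn = v_b - v_a
        old = lam[1]
        lam[1] = max(0.0, old - vn / (inv_a + inv_b))  # effective mass 1 / (1/m_a + 1/m_b)
        d = lam[1] - old
        v_b += d * inv_b
        v_a -= d * inv_a
    return v_a, v_b, lam

if __name__ == "__main__":
    print(sequential_impulse_stack(1.0, 1.0))
```

The accumulated impulses converge to (m_a + m_b)·g·dt for the ground contact and m_b·g·dt for the upper contact, which are exactly the impulses needed to cancel one step of gravity. Clamping the accumulated value rather than each increment lets a later iteration reduce an impulse that an earlier one overestimated.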

- DART

The Dynamic Animation and Robotics Toolkit (DART, v 6.14.5) [45] is an open-source C++ library for 3D physics simulation and robotics. Developed by the Graphics Lab and Humanoid Robotics Lab at the Georgia Institute of Technology, it supports Ubuntu, Arch Linux, FreeBSD, macOS, and Windows, and it integrates with Gazebo. DART supports the URDF and SDF model formats and offers various extensible APIs, including numerical integration methods (semi-implicit Euler by default, with RK4 as an alternative), 3D visualization APIs based on OpenGL and OpenSceneGraph with ImGui support, nonlinear programming and multi-objective optimization APIs, and collision detection using the FCL, Bullet, and ODE collision detectors. DART also provides constraint solvers based on the Lemke, Dantzig, and PGS methods.
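The difference between the two integration schemes can be seen on an undamped spring-mass system. The sketch below is plain Python written for illustration and does not use DART's API; the test system (unit mass, ω = 1 rad/s) and the step size are assumptions.

```python
import math
from typing import Tuple

OMEGA = 1.0  # natural frequency of the test spring-mass system (rad/s)

def accel(x: float) -> float:
    """Acceleration of an undamped unit-mass spring: a = -omega^2 * x."""
    return -OMEGA * OMEGA * x

def semi_implicit_euler(x: float, v: float, dt: float, steps: int) -> Tuple[float, float]:
    for _ in range(steps):
        v += accel(x) * dt  # update velocity from the current position...
        x += v * dt         # ...then advance position with the new velocity
    return x, v

def rk4(x: float, v: float, dt: float, steps: int) -> Tuple[float, float]:
    def deriv(px: float, pv: float) -> Tuple[float, float]:
        return pv, accel(px)  # d/dt (x, v) = (v, a(x))
    for _ in range(steps):
        k1x, k1v = deriv(x, v)
        k2x, k2v = deriv(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = deriv(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = deriv(x + dt * k3x, v + dt * k3v)
        x += dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
        v += dt / 6.0 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return x, v

if __name__ == "__main__":
    dt, steps = 0.01, 628  # roughly one period (2*pi s)
    exact = math.cos(steps * dt)
    for name, method in (("semi-implicit Euler", semi_implicit_euler), ("RK4", rk4)):
        x, _ = method(1.0, 0.0, dt, steps)
        print(f"{name}: position error {abs(x - exact):.2e}")
```

Semi-implicit (symplectic) Euler needs one force evaluation per step and keeps the oscillation energy bounded over long runs, while RK4 needs four evaluations per step but is fourth-order accurate, so its position error over one period is several orders of magnitude smaller at the same step size.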

- PhysX

The PhysX engine (v 5.4.1) [87], initially developed by AGEIA and later acquired by NVIDIA (Santa Clara, USA), is now in its fifth major version. PhysX can simulate various physical phenomena in real time, including rigid body dynamics, soft body dynamics, fluid dynamics, particle systems, joint dynamics, and collision detection and response. It leverages GPU processing power to accelerate physical simulation and computation [88]. To solve the LCP, PhysX uses the sequential impulse method. The Unity Engine [89] uses PhysX for its 3D physics simulation, and Unreal Engine used PhysX as its physics engine before version 5.

- Unigine Engine

The Unigine Engine [90], developed by UNIGINE (Clemency, Luxembourg), supports high-performance physical simulation and is suitable for many game development genres; its current version is 2.18.1. Unigine Engine performs physical simulation with its built-in physics module, which excels at collision detection, elastic collision simulation, and basic physical phenomena. For high-precision physical simulation, flight dynamics simulation, and gravitational field simulation, it relies on external physics engines or alternative methods [91].

- Chaos Physics

Chaos Physics [92] was introduced in Unreal Engine 4.23 and became the default physics engine in Unreal Engine 5, replacing PhysX. Developed by Epic Games (Cary, NC, USA), Chaos Physics can model complex physical phenomena such as collisions, gravity, friction, and fluid dynamics. It also offers powerful particle simulation capabilities for creating realistic fire, smoke, water flow, and other effects, and it supports multi-threaded computation and GPU acceleration.

- Selection of Physics Engines

In autonomous driving simulation, selecting an appropriate physics engine requires weighing simulation accuracy, computational resources, real-time performance, and the physical phenomena that must be simulated. For early development stages or simpler scenarios, ODE offers advantages due to its stability and ease of use. However, ODE uses a maximal coordinate formulation, in which every body keeps all six degrees of freedom and joints are enforced as explicit constraints; this can become inefficient for highly complex systems or when high precision is required, particularly in large-scale scenarios or with complex vehicle dynamics [93]. Additionally, its lack of GPU acceleration may make ODE less suitable for modern high-performance simulations. Nevertheless, its open-source nature makes it attractive for small, resource-limited projects.

Bullet’s GPU acceleration and soft body simulation capabilities make it well suited to systems with many interacting objects. However, although Bullet supports soft body physics, its effectiveness and precision may be limited when simulating complex deformations or high-precision soft body interactions [94]. Additionally, Bullet’s documentation is not always intuitive, so developers may need extra time to become familiar with its principles and API in the initial stages.

DART offers significant advantages in robotics and complex dynamics simulations, making it suitable for autonomous driving simulations related to robotics technology [95]. However, DART’s high computational overhead may impact real-time simulation performance, limiting its application in scenarios where efficient real-time response is critical for autonomous driving simulation [96].

The Unigine engine comes with a powerful built-in physics module capable of high-performance simulation. However, achieving high precision in certain scenarios often requires external engines or alternative methods, which adds complexity to development, may increase development time and cost, and can demand more hardware resources [91].

The PhysX and Chaos Physics engines perform exceptionally well in complex environments and high-precision physical simulations, particularly in applications with demanding real-time and performance requirements. PhysX uses GPUs to accelerate physical simulation and computation, which makes it effective for large-scale scenarios [97]. Additionally, PhysX’s collision detection algorithms and constraint solvers perform well in complex scenarios involving vehicle dynamics, collision response, and multi-body interactions [98].

Chaos Physics excels in simulating destruction effects, fracturing, and particle systems. By utilizing multi-threading and GPU acceleration technologies, Chaos Physics also enhances the performance in handling large-scale concurrent computations [92]. This makes Chaos Physics suitable for generating realistic scenarios.

2.3.3. Rendering Engines

Rendering engines focus on the display and rendering of 3D graphics. Through a series of complex calculations and processes, they transform 3D models into realistic images displayed on screens. In this section, we introduce the rendering features of Unigine Engine, Unreal Engine, and Unity Engine, as well as two rendering engines supported by Gazebo: OGRE and OptiX.

- Unigine Engine

Unigine Engine [90] is a powerful cross-platform real-time 3D engine known for its photo-realistic graphics and rich rendering features. It supports physically based rendering (PBR) and provides advanced lighting technologies, including screen-space ray-traced global illumination (SSRTGI) and voxel-based global illumination (GI), as well as detailed vegetation effects and cinematic post-processing effects.

CarMaker integrated the Unigine Engine in version 10.0 to take advantage of Unigine’s PBR. CarMaker’s visualization tool, MovieNX, renders scenes using real-world camera parameters such as exposure and ISO [99].
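How real-world camera settings can drive rendering is sketched below with the photographic exposure-value convention common in physically based rendering: EV100 = log2(N²/t) − log2(S/100) for f-number N, shutter time t, and ISO S, with the saturation-based maximum luminance 1.2 · 2^EV100. This is an assumed, generic camera model in Python, not MovieNX's actual pipeline, and it ignores tone mapping, noise, vignetting, and lens effects.

```python
import math

def ev100(aperture_f: float, shutter_s: float, iso: float) -> float:
    """Exposure value referenced to ISO 100: log2(N^2 / t) - log2(S / 100)."""
    return math.log2(aperture_f ** 2 / shutter_s) - math.log2(iso / 100.0)

def exposure_scale(ev: float) -> float:
    """Scale from scene luminance (cd/m^2) to a normalized sensor value.

    Uses the saturation-based convention: the luminance that just saturates
    the sensor is 1.2 * 2**EV100."""
    return 1.0 / (1.2 * 2.0 ** ev)

def pixel_value(luminance: float, aperture_f: float, shutter_s: float, iso: float) -> float:
    """Linear pixel value clipped to [0, 1]; no tone mapping, noise, or lens effects."""
    return min(1.0, luminance * exposure_scale(ev100(aperture_f, shutter_s, iso)))

if __name__ == "__main__":
    # A 1000 cd/m^2 surface seen at f/8 and 1/125 s with two ISO settings.
    for iso in (100.0, 200.0):
        print(iso, pixel_value(1000.0, 8.0, 1.0 / 125.0, iso))
```

Doubling the ISO lowers EV100 by one stop and therefore doubles the linear pixel value until it clips at 1.0.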

- Unreal Engine

Unreal Engine [100] provides rendering capabilities, animation systems, and a comprehensive development toolchain; its current major version is Unreal Engine 5 [101]. Its rendering capabilities integrate PBR, real-time ray tracing, Nanite virtualized micro-polygon geometry, and Lumen real-time global illumination [102], together with optimization strategies such as multi-threaded rendering, level-of-detail (LOD) control, and occlusion culling.

Several simulators adopt Unreal Engine. For example, TAD Sim 2.0 employs Unreal Engine to simulate lighting conditions, weather changes, and real-world physical laws [63].

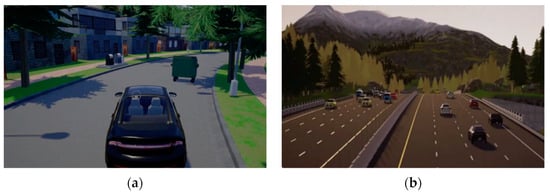

- Unity Engine

The Unity Engine (v 6000.0.17) [103] is known for the flexibility and efficiency of its rendering. It supports multiple rendering pipelines: the Built-in Render Pipeline, the Universal Render Pipeline (URP), and the High Definition Render Pipeline (HDRP). Unity’s rendering features include high-quality 2D and 3D graphics, real-time lighting, shadows, particle systems, and PBR, and its rendering performance is improved through multi-threaded rendering and dynamic batching. Additionally, Unity has a rich asset store and plugin ecosystem that provides developers with extensive resources.

- OGRE

OGRE (Object-Oriented Graphics Rendering Engine, v 14.2.6) [104], developed by the OGRE team, is an open-source, cross-platform real-time 3D graphics rendering engine that runs on multiple operating systems, including Windows, Linux, and macOS. By abstracting underlying graphics APIs such as OpenGL, Direct3D, and Vulkan, OGRE achieves efficient 3D rendering. It provides scene and resource management systems and supports a plugin architecture and scripting. OGRE has a stable, reliable codebase and a large developer community that offers extensive tutorials, sample code, and third-party plugins.

- OptiX

OptiX (v 8.0) [105] was developed by NVIDIA (Santa Clara, USA) to achieve high ray tracing performance on GPUs. The OptiX engine can handle complex scenes and light interactions, and it provides a programmable, GPU-accelerated ray tracing pipeline. It scales transparently across multiple GPUs and supports rendering of large scenes by combining GPU memory over NVLink. OptiX is optimized for NVIDIA GPU architectures. Since OptiX 5.0, it has included an AI-based denoiser that uses GPU-accelerated artificial intelligence to reduce the number of rendering iterations required.
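The value of a denoiser follows from how Monte Carlo ray tracing converges: each pixel is an average of random light samples, and its noise falls only as 1/√N with the number of samples N. The Python sketch below estimates a simple hemisphere integral (the integral of cos θ over the hemisphere, whose exact value is π) to show this scaling; it is an illustrative assumption and does not use OptiX.

```python
import math
import random

def estimate_cosine_integral(n_samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere (exact value: pi).

    With uniform hemisphere sampling the pdf is 1 / (2*pi) per steradian and cos(theta)
    is uniformly distributed on [0, 1], so each sample contributes cos(theta) / pdf."""
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n_samples):
        cos_theta = rng.random()
        total += cos_theta / pdf
    return total / n_samples

def rms_error(n_samples: int, trials: int = 200, seed: int = 0) -> float:
    """Root-mean-square error of the estimator over repeated independent trials."""
    rng = random.Random(seed)
    squared = 0.0
    for _ in range(trials):
        err = estimate_cosine_integral(n_samples, rng) - math.pi
        squared += err * err
    return math.sqrt(squared / trials)

if __name__ == "__main__":
    for n in (16, 64, 256, 1024):
        print(f"{n:5d} samples: RMS error {rms_error(n):.4f}")
```

Going from 16 to 1024 samples per estimate (64 times the work) reduces the RMS error only about eightfold, which is why removing noise after a small number of iterations can be cheaper than tracing many more rays.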

- Selection of Rendering Engines

In autonomous driving simulators, Unigine and Unreal Engine excel in scenarios requiring high realism and complex lighting simulation. For instance, when simulating autonomous driving in urban environments, these engines can reproduce light reflections, shadow variations, and environmental effects under various weather conditions. The Unigine engine is known for its global illumination technology and physically based rendering capabilities [106], while Unreal Engine is notable for its Nanite virtualized geometry and Lumen real-time global illumination technology [107].

In contrast, Unity Engine is better suited for medium-scale autonomous driving simulation projects, especially when multi-platform support or high development efficiency is required. While Unity’s high-definition rendering pipeline can deliver reasonably good visual quality, it may not match the realism of Unigine or Unreal Engine in simulation scenarios with high precision requirements, such as complex urban traffic simulations. This makes Unity more suitable for autonomous driving simulation applications that prioritize flexibility and quick development cycles [108].

The OGRE engine, with its open-source and straightforward architecture, is well-suited for small-scale or highly customized simulation projects. In small simulation systems that require specific functionality customization, OGRE’s flexibility and extensibility allow developers to quickly implement specific rendering needs [104]. However, OGRE’s limitations in rendering capabilities make it challenging to tackle large-scale autonomous driving simulation tasks.

OptiX stands out in autonomous driving simulation scenarios that require precise ray tracing and high-performance computing. When simulating light reflections during nighttime driving or under extreme weather conditions, OptiX’s ray tracing capabilities can deliver exceptionally realistic visual effects [105]. However, due to its complexity and high computational resource requirements, OptiX is more suitable for advanced autonomous driving simulation applications rather than general simulation tasks. In these specialized applications, OptiX’s high-precision ray tracing can enhance the realism of simulations.

2.3.4. Critical Functions

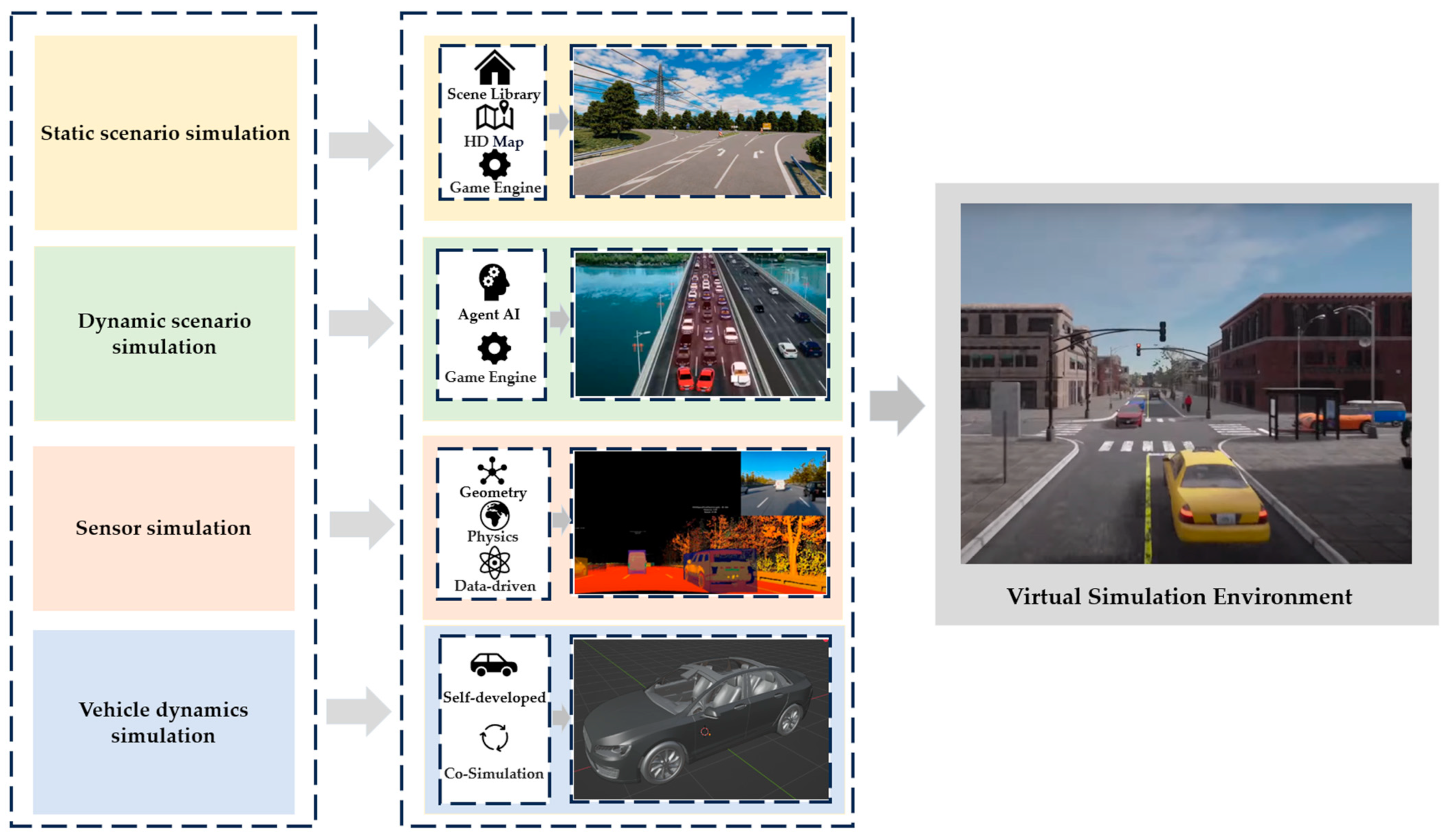

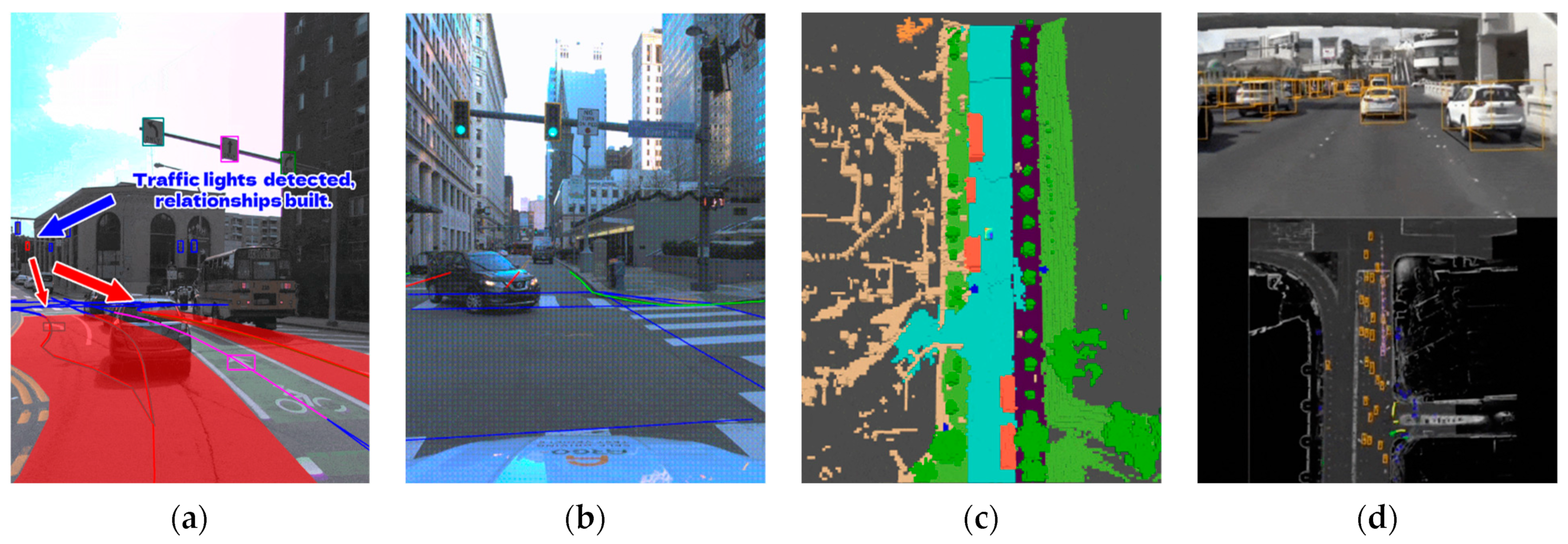

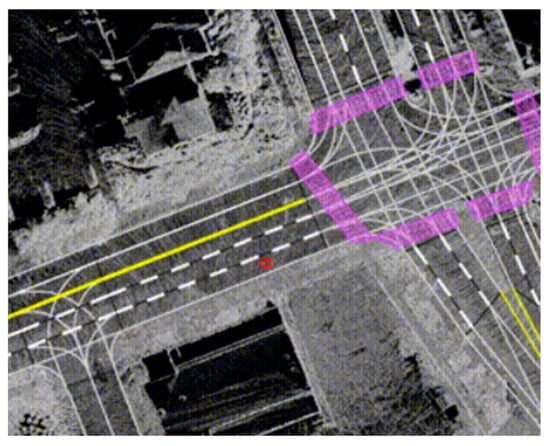

This subsection reviews the critical functions of a complete autonomous driving simulator (as shown in Figure 3): scenario simulation, sensor simulation, and the implementation of vehicle dynamics simulation.

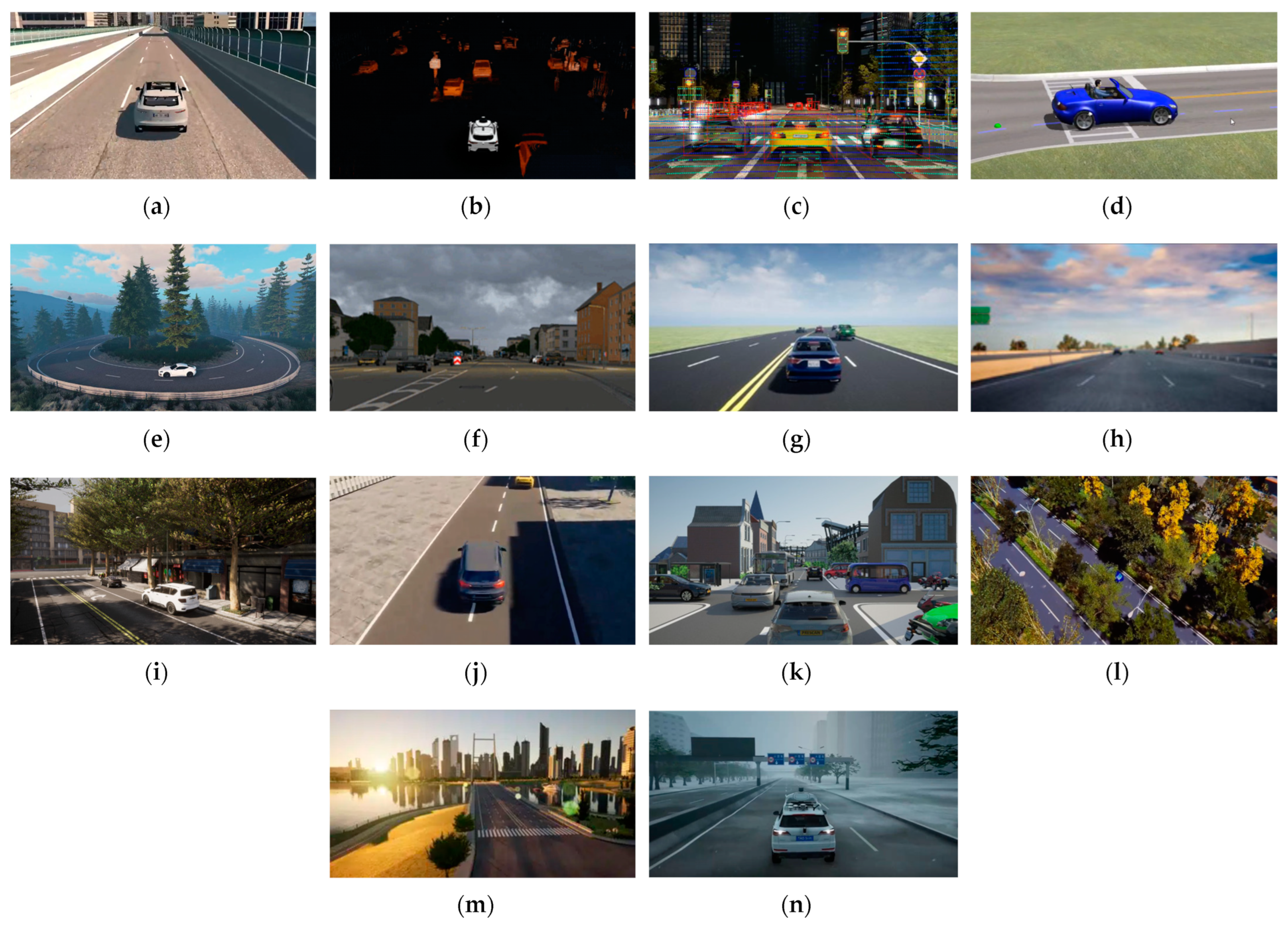

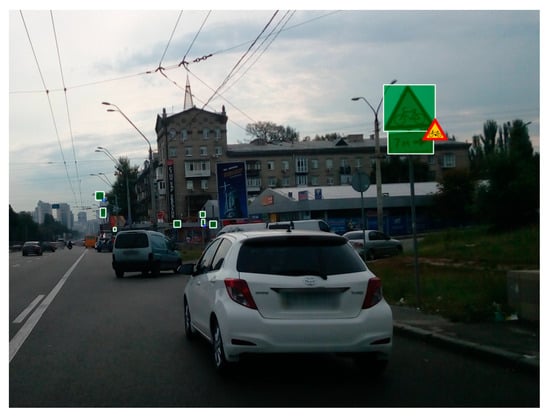

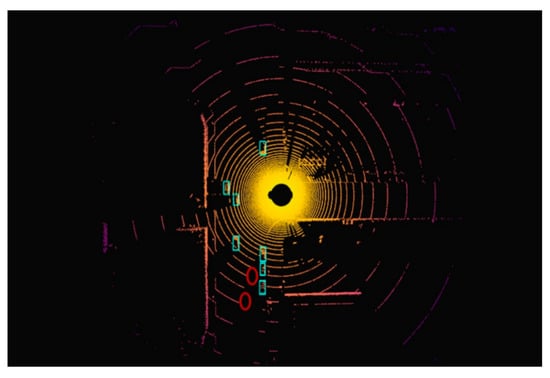

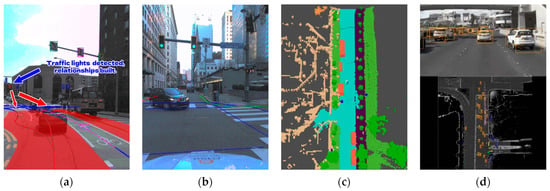

Figure 3.

Critical functions of an autonomous driving simulator [36,52,63,99].

- Scenario Simulation

The scenario simulation is divided into two parts: static and dynamic scenario simulations.

The static scenario simulation involves constructing and simulating the static elements within different road environments, such as road networks, traffic signs, buildings, streetlights, and vegetation [109]. The realism of these static scenes is critical because it directly affects the quality of sensor data during simulation: if the scene modeling is not detailed or realistic enough, simulated sensor data can diverge from real-world data, degrading the training and testing of perception algorithms. The primary method currently used to build static scenarios is to create a library of scene assets with professional 3D modeling software, reconstruct road elements from vectorized high-definition maps, and then import and optimize these static elements in physics and rendering engines such as Unity or Unreal Engine to produce visually realistic and physically accurate scenarios [110]. In addition, Waymo is exploring another approach that uses neural rendering techniques, such as SurfelGAN and Block-NeRF, to transform real-world camera and LiDAR data into 3D scenes [111].
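One step in reconstructing road elements from vectorized maps is turning a lane's center polyline into boundary geometry. The Python sketch below offsets each vertex along the local normal by half the lane width, with the width given as a cubic polynomial a + b·s + c·s² + d·s³ in distance along the lane, in the style of OpenDRIVE's lane width records. It is a simplified assumption: OpenDRIVE actually offsets lanes from a road reference line, and this naive per-vertex offset does not handle sharp corners or self-intersections.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def lane_width(s: float, a: float, b: float = 0.0, c: float = 0.0, d: float = 0.0) -> float:
    """Cubic width polynomial in distance s along the lane (OpenDRIVE-style coefficients)."""
    return a + b * s + c * s * s + d * s ** 3

def lane_boundaries(centerline: Sequence[Point],
                    coeffs: Sequence[float]) -> Tuple[List[Point], List[Point]]:
    """Offset a lane center polyline (at least two distinct vertices) to its left and right boundaries."""
    n = len(centerline)
    left: List[Point] = []
    right: List[Point] = []
    s = 0.0  # accumulated arc length along the centerline
    for i, (x, y) in enumerate(centerline):
        if i > 0:
            px, py = centerline[i - 1]
            s += math.hypot(x - px, y - py)
        # Tangent from the neighbouring vertices (one-sided at the two ends).
        x0, y0 = centerline[max(i - 1, 0)]
        x1, y1 = centerline[min(i + 1, n - 1)]
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        nx, ny = -ty / length, tx / length  # unit normal pointing to the left of travel
        half = 0.5 * lane_width(s, *coeffs)
        left.append((x + half * nx, y + half * ny))
        right.append((x - half * nx, y - half * ny))
    return left, right

if __name__ == "__main__":
    center = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
    print(lane_boundaries(center, (3.5, 0.1)))  # lane widening by 0.1 m per metre
```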

The dynamic scenario simulation, on the other hand, covers varying environmental conditions (e.g., weather changes, lighting variations) and dynamic objects (e.g., pedestrians, vehicles, non-motorized vehicles, animals), ensuring that the actions and effects of these elements strictly adhere to real-world physical laws and behavioral logic [112]. This type of simulation depends on high-precision physics engines and Agent AI technology [113]. Physics engines assign realistic physical properties to each object in the scene so that weather, lighting changes, and the movement, collision, and deformation of vehicles and pedestrians are consistent with real-world behavior. While many simulators support behaviors that are predefined before the simulation begins, some have already integrated Agent AI technology, which simulates the decision-making [114] and behavior of traffic participants such as vehicles and pedestrians [115]. For example, when the autonomous vehicle under test attempts to overtake, vehicles controlled by Agent AI can respond with realistic avoidance or other strategic behaviors. TAD Sim 2.0, for instance, uses Unreal Engine to simulate realistic weather and lighting changes and leverages Agent AI technology to train pedestrians, vehicles, and other scene elements on real-world data [116].
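As a point of reference for predefined, reactive behavior (as opposed to learned Agent AI), the classic Intelligent Driver Model (IDM) computes a following vehicle's acceleration from its speed, the leader's speed, and the gap between them. The Python sketch below uses commonly quoted default parameters as assumptions; it is not the behavior model of TAD Sim 2.0 or of any other simulator surveyed here.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class IDMParams:
    v0: float = 30.0    # desired speed (m/s)
    T: float = 1.5      # desired time headway (s)
    s0: float = 2.0     # minimum gap at standstill (m)
    a: float = 1.0      # maximum acceleration (m/s^2)
    b: float = 1.5      # comfortable deceleration (m/s^2)
    delta: float = 4.0  # acceleration exponent

def idm_acceleration(v: float, v_lead: float, gap: float,
                     params: Optional[IDMParams] = None) -> float:
    """Intelligent Driver Model acceleration of a follower (m/s^2).

    v: follower speed, v_lead: leader speed (m/s), gap: bumper-to-bumper distance (m)."""
    p = params or IDMParams()
    dv = v - v_lead  # closing speed, positive when approaching the leader
    desired_gap = p.s0 + max(0.0, v * p.T + v * dv / (2.0 * math.sqrt(p.a * p.b)))
    return p.a * (1.0 - (v / p.v0) ** p.delta - (desired_gap / gap) ** 2)

if __name__ == "__main__":
    # Open road vs. a vehicle cutting in 5 m ahead while 10 m/s slower.
    print(idm_acceleration(20.0, 20.0, 200.0))
    print(idm_acceleration(20.0, 10.0, 5.0))
```

If the vehicle under test cuts in closely ahead of an IDM-controlled follower, the gap term dominates and the follower brakes hard. IDM itself places no upper bound on this deceleration, so an implementation may need to clamp it to a physically plausible value.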

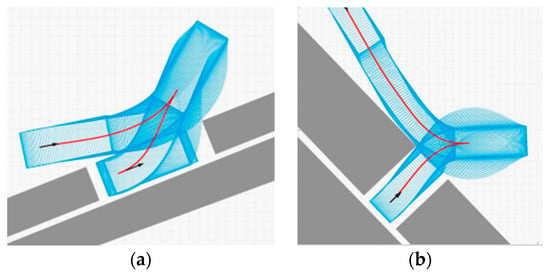

- Sensor Simulation

Sensor simulation involves the virtual replication of various sensors (e.g., LiDAR, radar, cameras) equipped on vehicles. The goal is to closely replicate real-world sensor performance to ensure the reliability and safety of autonomous systems in actual environments. Sensor simulation can be categorized into three types: geometric-level simulation, physics-based simulation, and data-driven simulation.

Geometric-level simulation is the most basic approach, primarily using simple geometric models to simulate sensor field of view, detection range, and coverage area [117]. While geometric-level simulation is fast, it often overlooks complex physical phenomena, limiting its ability to accurately simulate sensor performance in complex environments.
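A geometric-level LiDAR can be as simple as casting rays within the field of view and reporting the first hit within the maximum range. The 2D Python sketch below assumes obstacles are circles and ignores reflectivity, noise, beam divergence, and weather, which are the effects that physics-based models add; `scan` and `ray_circle` are hypothetical names.

```python
import math
from typing import List, Optional, Sequence, Tuple

Circle = Tuple[float, float, float]  # (center x, center y, radius) in metres

def ray_circle(ox: float, oy: float, dx: float, dy: float, circle: Circle) -> Optional[float]:
    """Distance along a unit-direction ray to its first intersection with a circle, or None."""
    cx, cy, r = circle
    fx, fy = ox - cx, oy - cy
    b = dx * fx + dy * fy              # half of the linear coefficient of the quadratic in t
    c = fx * fx + fy * fy - r * r
    disc = b * b - c
    if disc < 0.0:
        return None                    # the ray's line misses the circle
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):   # nearer root first
        if t >= 0.0:
            return t
    return None                        # the circle lies behind the sensor

def scan(pose: Tuple[float, float, float], obstacles: Sequence[Circle],
         fov_deg: float, n_beams: int, max_range: float) -> List[Optional[float]]:
    """Geometric LiDAR: one range per beam, None when nothing is hit within max_range."""
    x, y, yaw = pose
    ranges: List[Optional[float]] = []
    for k in range(n_beams):
        frac = k / (n_beams - 1) if n_beams > 1 else 0.5
        angle = yaw + math.radians(-fov_deg / 2.0 + frac * fov_deg)
        dx, dy = math.cos(angle), math.sin(angle)
        hits = [t for t in (ray_circle(x, y, dx, dy, ob) for ob in obstacles)
                if t is not None and t <= max_range]
        ranges.append(min(hits) if hits else None)
    return ranges

if __name__ == "__main__":
    print(scan((0.0, 0.0, 0.0), [(10.0, 0.0, 1.0)], fov_deg=90.0, n_beams=3, max_range=100.0))
    # → [None, 9.0, None]
```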

Physics-based simulation draws on physical principles from optics, electronics, and electromagnetics to model sensors in more detail [118]. It can simulate phenomena such as light reflection, refraction, and scattering, multipath propagation of electromagnetic waves, and the impact of adverse weather (e.g., rain, fog, snow) on sensors [119], yielding more realistic sensor behavior across environments. For example, the PDGaiA simulator uses its proprietary PlenRay technology for full physics-based sensor modeling, with a simulation fidelity of 95% [120]. Ansys Autonomy also provides physics-based sensor simulation, including LiDAR, radar, and camera sensors [121]; it generally relies on Ansys’ multiphysics solutions to replicate sensor signals at the physics level [122]. Similarly, 51Sim-One provides physics-based models for cameras, LiDAR, and mmWave radar, calibrated using real sensor data [38].
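The weather effects mentioned above can be illustrated with a simplified LiDAR link budget: the power returned by an extended diffuse target falls as ρ·exp(−2αR)/R², where ρ is the target reflectivity, R the range, and α the atmospheric extinction coefficient, which can be estimated from meteorological visibility V through the Koschmieder relation α ≈ 3.912/V. The Python sketch below uses an assumed unit system constant, reflectivity, and detection threshold; it is not PlenRay's, Ansys', or 51Sim-One's model.

```python
import math
from typing import Optional

def extinction_from_visibility(visibility_m: float) -> float:
    """Koschmieder relation with a 2% contrast threshold: alpha = ln(50) / V ~= 3.912 / V (1/m)."""
    return math.log(50.0) / visibility_m

def received_power(range_m: float, reflectivity: float, alpha: float,
                   system_const: float = 1.0) -> float:
    """Simplified LiDAR equation for a diffuse target filling the beam:
    P_r ~ C * rho * exp(-2 * alpha * R) / R^2 (two-way atmospheric attenuation)."""
    return system_const * reflectivity * math.exp(-2.0 * alpha * range_m) / range_m ** 2

def max_detection_range(reflectivity: float, alpha: float, threshold: float,
                        step: float = 0.1, limit: float = 500.0) -> Optional[float]:
    """Largest range on a step grid whose return still reaches the detection threshold."""
    best: Optional[float] = None
    for k in range(1, int(round(limit / step)) + 1):
        r = k * step
        if received_power(r, reflectivity, alpha) < threshold:
            break  # received power decreases monotonically with range
        best = r
    return best

if __name__ == "__main__":
    rho, threshold = 0.1, 1e-5  # assumed target reflectivity and detector threshold
    print("clear:", max_detection_range(rho, 0.0, threshold))
    print("fog (V = 50 m):", max_detection_range(rho, extinction_from_visibility(50.0), threshold))
```

With these assumed values the detection range is about 100 m in idealized clear air (α = 0) but drops to roughly 20 m in fog with 50 m visibility.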

Data-driven simulation leverages actual sensor data for modeling and simulation, combining real-world sensor data with virtual scenarios to generate realistic simulation results [123]. For example, TAD Sim’s sensor simulation combines collected real data with 3D reconstruction technology to build models, which are dynamically loaded into test scenarios through Unreal Engine.

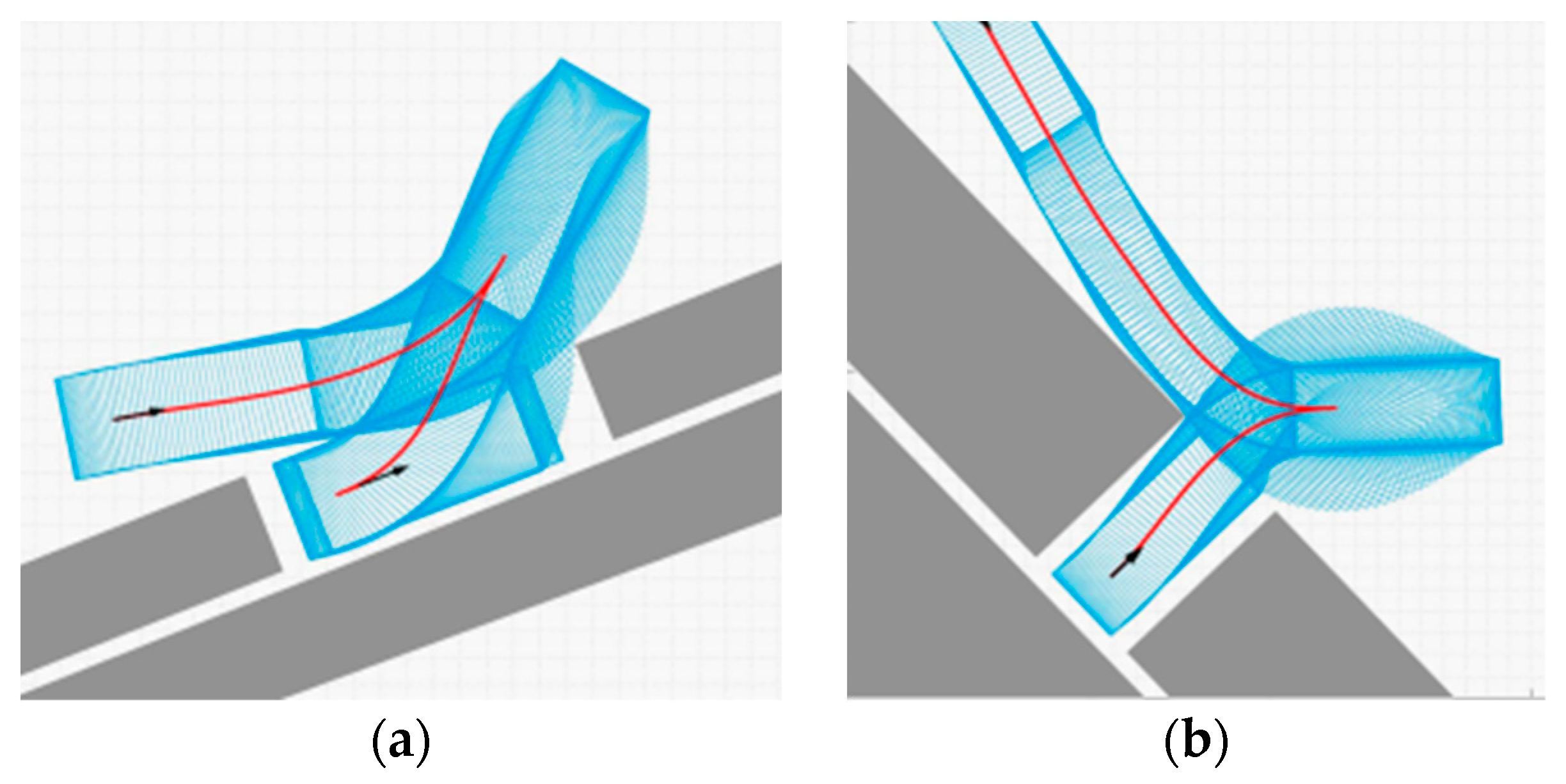

- Implementation of Vehicle Dynamics Simulation

Vehicle dynamics simulation is a crucial part of autonomous driving simulators because the fidelity of the vehicle dynamics model directly determines how accurately the simulator predicts vehicle behavior under various driving conditions. High-precision vehicle dynamics models are therefore essential for an accurate and reliable simulator. Since vehicle dynamics modeling itself has been studied extensively, only its implementation in simulators is discussed here. Autonomous driving simulators follow two main approaches: developing models in-house or relying on third-party software.

Developing vehicle dynamics models in-house still presents a high barrier to entry because it relies on experimental data accumulated over long-term engineering practice. Traditional simulation providers therefore continue to supply precise and stable vehicle dynamics models [124]. A typical example is CarSim, whose solver was developed from real vehicle data and is highly reliable [125]. Users can select the vehicle model they need and modify critical parameters accordingly. Many autonomous driving simulators provide interfaces for joint simulation with CarSim, and importing CarSim’s vehicle dynamics models into a simulator for joint simulation is common practice. In addition, the PanoSim simulator offers a high-precision 27-degree-of-freedom 3D nonlinear vehicle dynamics model [59], and the 51Sim-One simulator features an in-house vehicle dynamics simulation engine while also supporting joint simulation with third-party dynamics modules [38]. However, some of the surveyed simulators neither provide vehicle dynamics models nor reserve dedicated joint-simulation interfaces. For example, CARLA and AirSim have no built-in vehicle dynamics models, but CarSim’s vehicle dynamics models can still be integrated into them through an Unreal Engine plugin [126,127].
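To show what a vehicle dynamics module computes at each step, the Python sketch below implements the kinematic bicycle model, the simplest common vehicle model. It is far cruder than CarSim's solver or PanoSim's 27-degree-of-freedom model: it ignores tire slip, load transfer, and suspension, and the wheelbase, speed, and time step used here are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class VehicleState:
    x: float = 0.0    # rear-axle position (m)
    y: float = 0.0
    yaw: float = 0.0  # heading (rad)
    v: float = 0.0    # speed (m/s)

def bicycle_step(s: VehicleState, accel: float, steer: float,
                 wheelbase: float, dt: float) -> VehicleState:
    """One forward-Euler step of the kinematic bicycle model (rear-axle reference point)."""
    return VehicleState(
        x=s.x + s.v * math.cos(s.yaw) * dt,
        y=s.y + s.v * math.sin(s.yaw) * dt,
        yaw=s.yaw + s.v / wheelbase * math.tan(steer) * dt,  # yaw rate = v * tan(delta) / L
        v=s.v + accel * dt,
    )

if __name__ == "__main__":
    wheelbase = 2.7                         # assumed wheelbase (m)
    steer = math.atan(wheelbase / 10.0)     # steering angle for a 10 m turn radius
    state = VehicleState(v=5.0)
    for _ in range(int(math.pi * 10.0 / 5.0 / 0.001)):  # drive half a circle
        state = bicycle_step(state, 0.0, steer, wheelbase, 0.001)
    print(f"x={state.x:.3f} m, y={state.y:.3f} m, yaw={state.yaw:.3f} rad")
```

With a constant steering angle δ the rear axle follows a circle of radius L / tan δ; high-fidelity models are needed precisely where this no-slip assumption breaks down, such as at high lateral acceleration.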

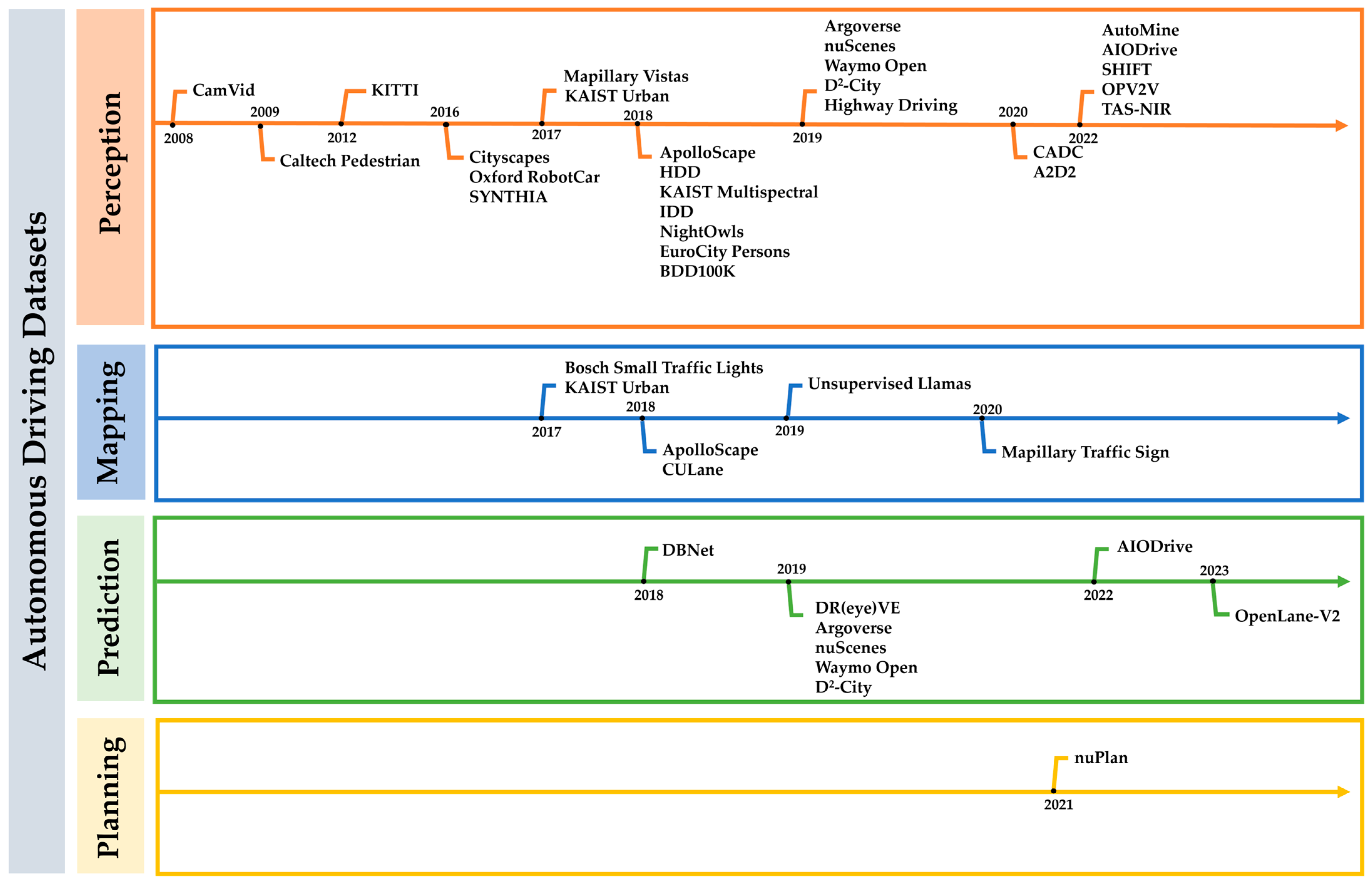

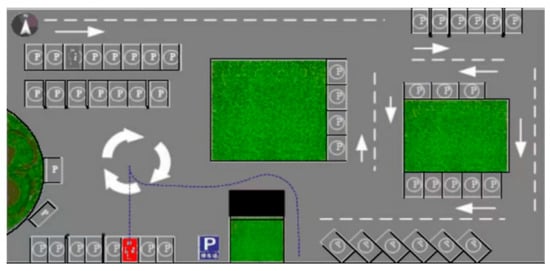

3. Autonomous Driving Datasets

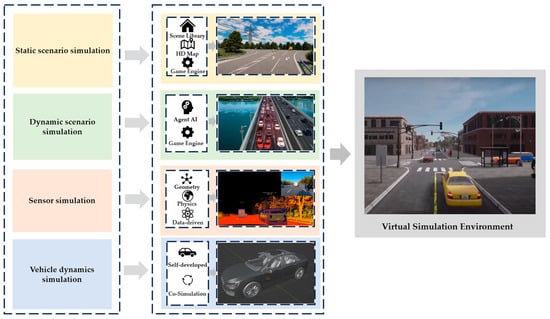

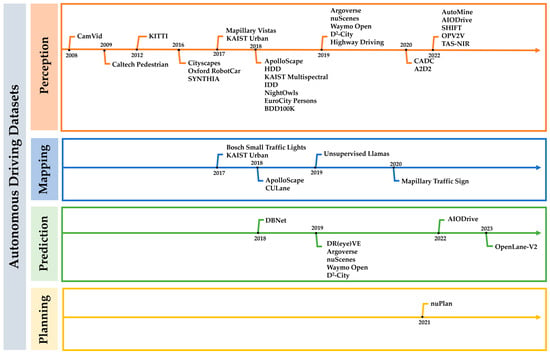

The datasets used in autonomous driving are categorized by task, including perception, mapping, prediction, and planning (as shown in Figure 4). Section 3.1 summarizes them in terms of their sources, scale, functions, and characteristics, and Section 3.2 discusses their applications.

Figure 4.

Datasets categorized based on tasks [128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162].

3.1. Datasets

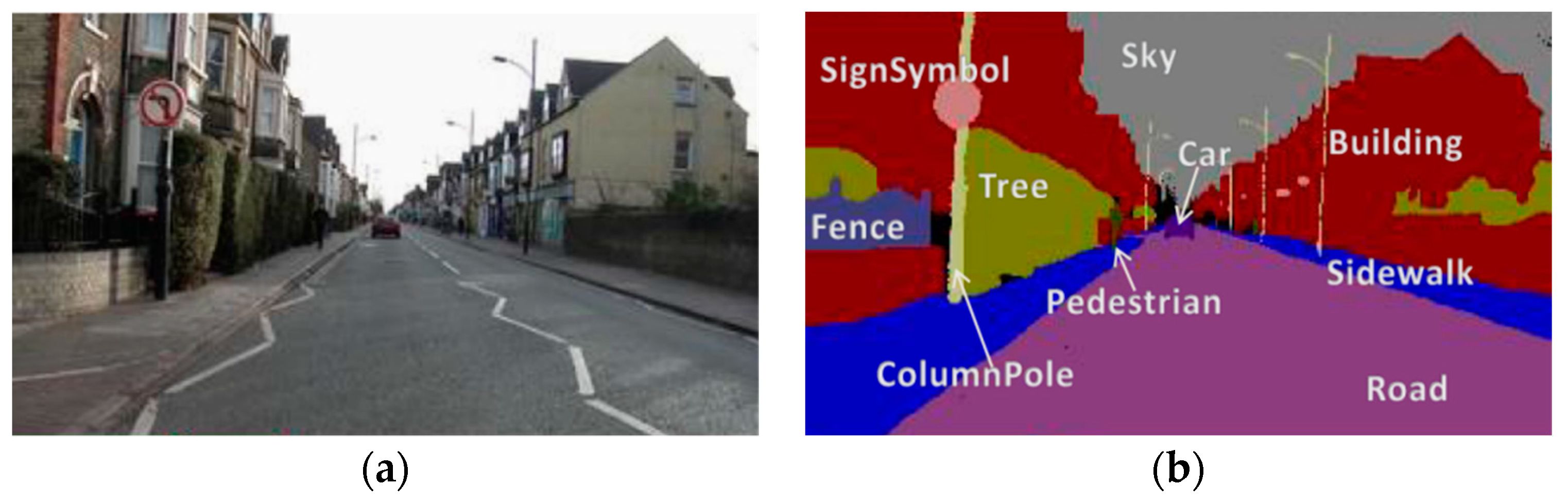

- CamVid Dataset

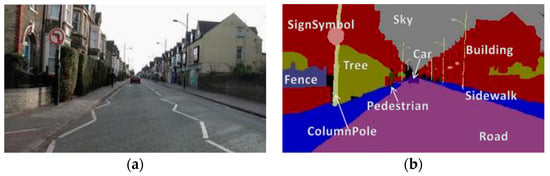

The CamVid dataset (Figure 5) [128], collected and publicly released by the University of Cambridge, is the first video collection to include semantic labels for object classes. It provides 32 ground truth semantic labels, associating each pixel with one of these categories. This dataset addresses the need for experimental data to quantitatively evaluate emerging algorithms. The footage was captured from the driver’s perspective, comprising approximately 86 min of video, which includes 55 min recorded during daylight and 31 min during dusk.

Figure 5.

CamVid dataset sample segmentation results [128]: (a) Test image; (b) Ground truth.

- Caltech Pedestrian Dataset

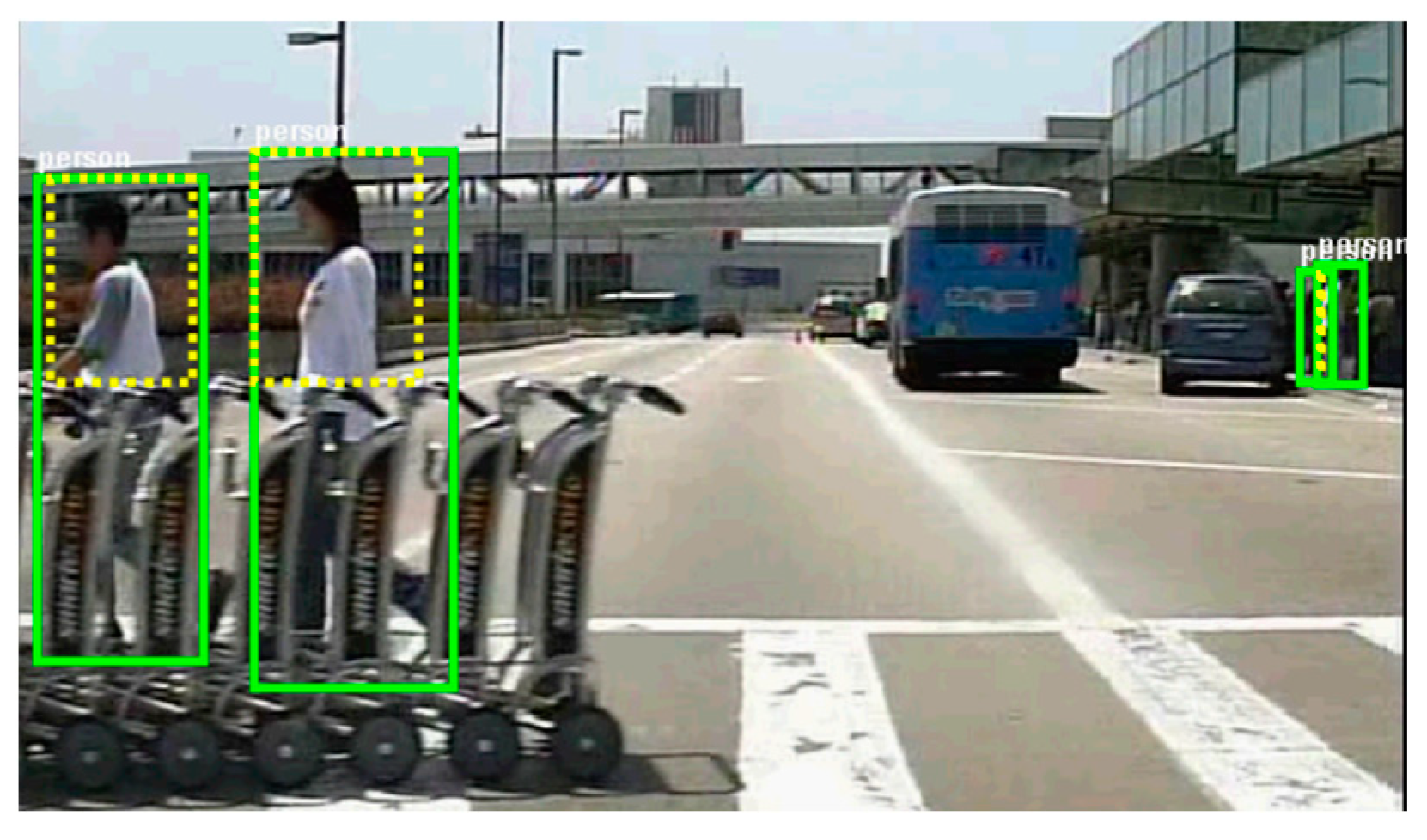

The Caltech Pedestrian dataset (Figure 6) [129], collected and publicly released by the California Institute of Technology, was designed for pedestrian detection research. It comprises approximately 10 h of 30 Hz video captured from vehicles driving through urban environments. Because the videos are recorded from moving vehicles, pedestrians often appear at low resolution and are frequently occluded, which makes detection challenging. Annotations are provided for 250,000 frames in 137 segments of approximately one minute each, totaling 350,000 labeled bounding boxes and 2300 unique pedestrians.

Figure 6.

Caltech Pedestrian dataset example image and corresponding annotations [129].

- KITTI Dataset

The KITTI dataset [130], developed jointly by the Karlsruhe Institute of Technology in Germany and the Toyota Technological Institute at Chicago in the US, includes extensive data from car-mounted sensors such as LiDAR, cameras, and GPS/IMU. It is used to evaluate tasks such as stereo vision, 3D object detection, and 3D tracking.

- Cityscapes Dataset

The Cityscapes dataset [131], collected by researchers from the Mercedes-Benz Research Center and the Technical University of Darmstadt, provides a large-scale benchmark for training and evaluating methods for pixel-level and instance-level semantic labeling. It captures complex urban traffic scenarios from 50 different cities, with 5000 images having high-quality annotations and 20,000 with coarse annotations.

- Oxford RobotCar Dataset

The Oxford RobotCar dataset [132], released by the Oxford Mobile Robotics Group, contains data collected over a year by repeatedly driving a consistent route in Oxford, UK. This extensive dataset comprises 23 TB of multimodal sensor data, including nearly 20 million images, LiDAR scans, and GPS readings. It covers a diverse range of weather and lighting conditions, such as rain, snow, direct sunlight, overcast skies, and nighttime, providing detailed environmental data for research.

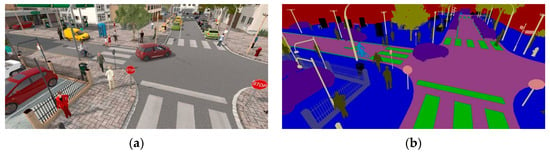

- SYNTHIA Dataset

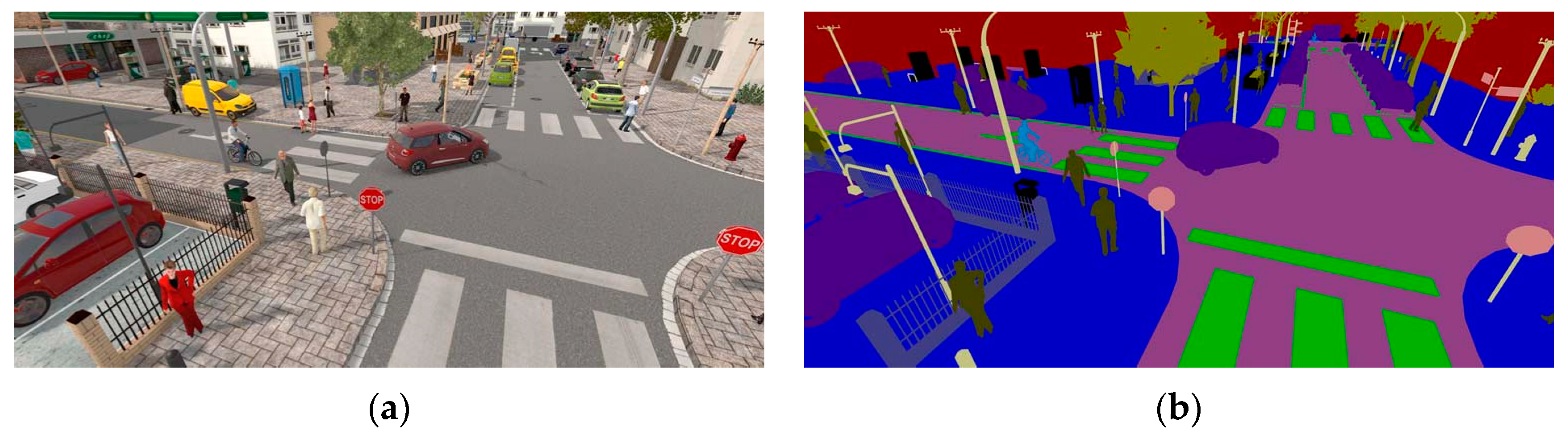

The SYNTHIA dataset (Figure 7) [133], collected by researchers from the Autonomous University of Barcelona and the University of Vienna, consists of frames rendered from a virtual city to facilitate semantic segmentation research in driving scenes. It provides pixel-accurate semantic annotations for 13 categories including sky, buildings, roads, sidewalks, fences, vegetation, lane markings, poles, cars, traffic signs, pedestrians, cyclists, and others. Frames are captured from multiple viewpoints at each location, with up to eight different views available. Each frame also includes a corresponding depth map. This dataset comprises over 213,400 synthetic images, featuring a mix of random snapshots and video sequences from virtual cityscapes. The images were generated to simulate various seasons, weather conditions, and lighting scenarios, enhancing the dataset’s utility for testing and developing computer vision algorithms under diverse conditions.

Figure 7.

Example images from SYNTHIA dataset [133]: (a) Sample frame; (b) Semantic labels.

- Mapillary Vistas Dataset

The Mapillary Vistas dataset (Figure 8) [134] is a large and diverse collection of street-level images aimed at advancing the research and training of computer vision models across varying urban, suburban, and rural scenes. This dataset contains 25,000 high-resolution images, split into 18,000 for training, 2000 for validation, and 5000 for testing. The dataset covers a wide range of environmental settings and weather conditions such as sunny, rainy, and foggy days. It includes extensive annotations for semantic segmentation, enhancing the understanding of visual road scenes.

Figure 8.

Qualitative labeling examples from the Mapillary Vistas dataset [134]: (a) Original image; (b) Labeled image.
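For reference, the Mapillary Vistas split sizes stated above correspond to a 72/8/20 train/validation/test ratio:

$$
\frac{18{,}000}{25{,}000} = 72\%, \qquad \frac{2{,}000}{25{,}000} = 8\%, \qquad \frac{5{,}000}{25{,}000} = 20\%, \qquad 18{,}000 + 2{,}000 + 5{,}000 = 25{,}000.
$$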

- Bosch Small Traffic Lights Dataset

The Bosch Small Traffic Lights dataset [135], collected by researchers at Bosch, focuses on the detection, classification, and tracking of traffic lights. It consists of 5000 training images, annotated with 10,756 traffic lights, and a test video sequence of 8334 frames, all at a resolution of 1280 × 720. The 13,493 traffic lights in the test set are each annotated with one of four states: red, green, yellow, or off.

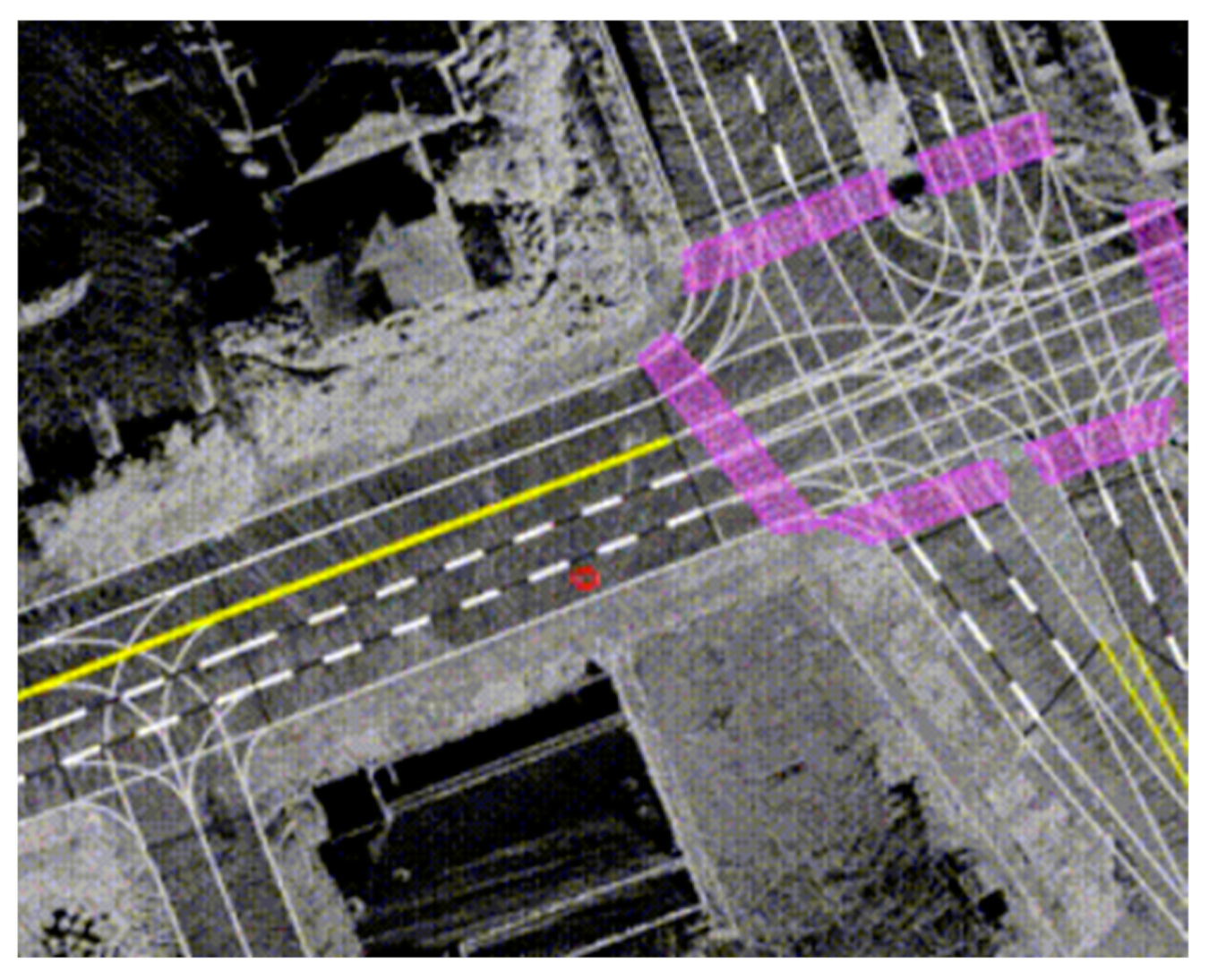

- KAIST Urban Dataset

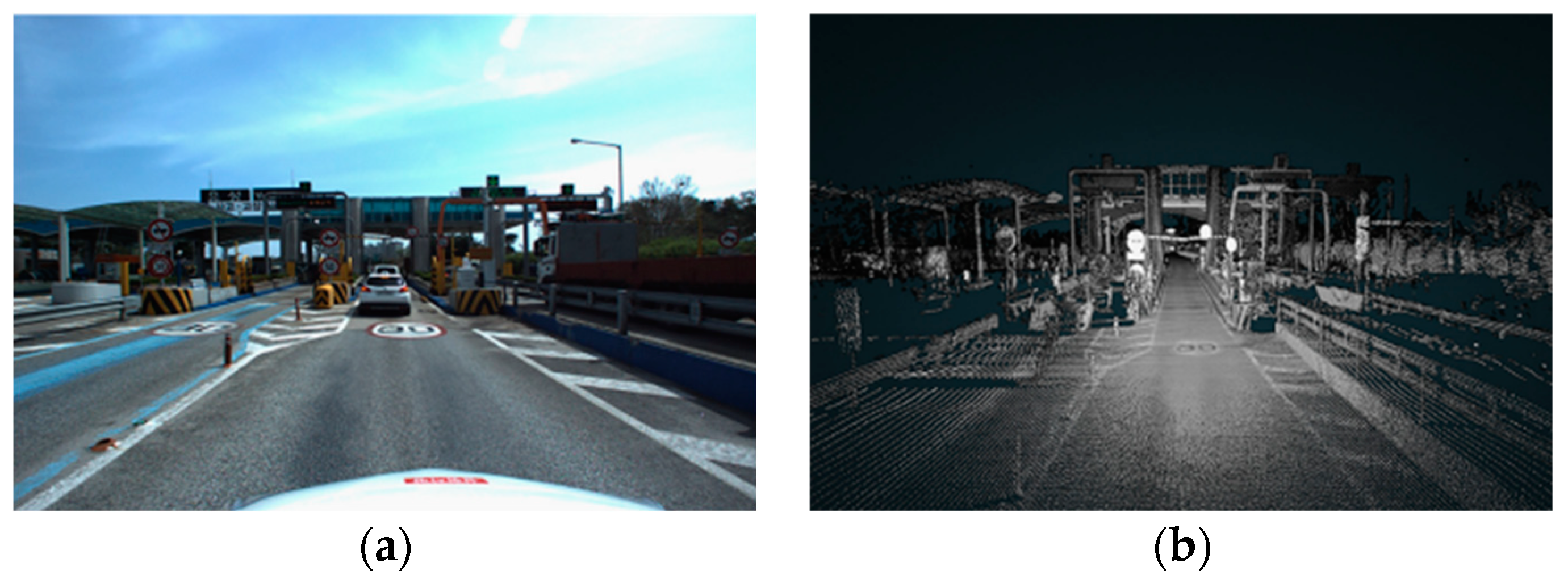

The KAIST Urban dataset (Figure 9) [136] targets tasks such as simultaneous localization and mapping (SLAM) in complex urban environments. It includes LiDAR and image data captured in city centers, residential complexes, and underground parking lots, and it provides not only raw sensor data but also reconstructed 3D points and vehicle positions estimated with SLAM techniques.

Figure 9.

Sample data of a highway toll station from the KAIST Urban dataset [136]: (a) Stereo image; (b) 3D point clouds.

- ApolloScape Dataset

The ApolloScape dataset [137], collected by Baidu, stands out for its extensive and detailed annotations, which include dense semantic point clouds, stereo imagery, per-pixel semantic labels, lane marking annotations, instance segmentation, and 3D car instances. These annotations are provided for every frame of daytime driving videos recorded at a variety of urban sites, together with high-precision localization data. Through continued development, ApolloScape has grown into a multi-sensor fusion dataset with annotations covering diverse scenes and weather conditions.

- CULane Dataset

The CULane dataset (Figure 10) [138], created by the Multimedia Laboratory at the Chinese University of Hong Kong, is a large-scale dataset for road lane detection. Cameras mounted on six vehicles, each driven by a different driver, recorded more than 55 h of video at different times of day in Beijing, one of the world's largest and busiest cities, yielding 133,235 frames. The annotation scheme is distinctive: in each frame, annotators manually traced the four most critical lane markings with cubic splines and left all other lane markings unlabeled. The dataset is split into 88,880 frames for training, 9675 for validation, and 34,680 for testing, and covers a wide range of traffic scenes, including urban areas, rural roads, and highways.

Figure 10.

Example images from the CULane dataset [138]: (a) Normal; (b) Crowded.
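To illustrate how a spline-traced lane marking can be turned into a dense polyline, the following is a minimal sketch that fits a natural cubic spline x(y) through a few control points and samples it at fixed image rows. Several choices here are assumptions for illustration, not CULane's documented procedure: the natural boundary condition (zero curvature at both ends), treating the image row y as the independent variable (valid only for lanes that do not curve back on themselves in the image), the 10-row sampling step, and the function names `natural_cubic_spline` and `densify_lane`, which are hypothetical.

```python
from bisect import bisect_right
from typing import Callable, List, Sequence, Tuple


def natural_cubic_spline(ts: Sequence[float], vs: Sequence[float]) -> Callable[[float], float]:
    """Interpolate (ts[i], vs[i]) with a natural cubic spline (zero second derivative at both ends)."""
    n = len(ts) - 1
    if n < 1 or len(vs) != len(ts):
        raise ValueError("need at least two knots and one value per knot")
    h = [ts[i + 1] - ts[i] for i in range(n)]
    if any(step <= 0 for step in h):
        raise ValueError("knots must be strictly increasing")

    m = [0.0] * (n + 1)  # second derivatives at the knots; m[0] = m[n] = 0
    size = n - 1  # number of interior knots
    if size > 0:
        # Tridiagonal system for the interior second derivatives m[1..n-1].
        sub = [h[r] for r in range(size)]
        diag = [2.0 * (h[r] + h[r + 1]) for r in range(size)]
        sup = [h[r + 1] for r in range(size)]
        rhs = [6.0 * ((vs[r + 2] - vs[r + 1]) / h[r + 1] - (vs[r + 1] - vs[r]) / h[r])
               for r in range(size)]
        # Thomas algorithm: forward elimination ...
        for r in range(1, size):
            w = sub[r] / diag[r - 1]
            diag[r] -= w * sup[r - 1]
            rhs[r] -= w * rhs[r - 1]
        # ... then back substitution.
        m[size] = rhs[size - 1] / diag[size - 1]
        for r in range(size - 2, -1, -1):
            m[r + 1] = (rhs[r] - sup[r] * m[r + 2]) / diag[r]

    def spline(t: float) -> float:
        # Locate segment [ts[i], ts[i+1]]; values outside the knots extend the end segments.
        i = min(max(bisect_right(ts, t) - 1, 0), n - 1)
        a, b, step = ts[i + 1] - t, t - ts[i], h[i]
        return ((m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * step)
                + (vs[i] / step - m[i] * step / 6.0) * a
                + (vs[i + 1] / step - m[i + 1] * step / 6.0) * b)

    return spline


def densify_lane(control_points: Sequence[Tuple[float, float]],
                 row_step: int = 10) -> List[Tuple[float, float]]:
    """Sample a lane given as (x, y) pixel control points at every `row_step` image rows."""
    pts = sorted(control_points, key=lambda p: p[1])  # order by image row y
    ys = [p[1] for p in pts]
    xs = [p[0] for p in pts]
    x_of_y = natural_cubic_spline(ys, xs)
    rows = range(int(ys[0]), int(ys[-1]) + 1, row_step)
    return [(x_of_y(y), float(y)) for y in rows]


# Hypothetical control points (x, y) along one lane marking.
lane = densify_lane([(820.0, 300.0), (700.0, 400.0), (560.0, 500.0), (430.0, 590.0)])
print(len(lane), lane[0], lane[-1])
```

A natural spline is used here because it needs no derivative information at the endpoints, which a human annotator clicking points would not supply; SciPy's `CubicSpline` with `bc_type="natural"` would be a drop-in alternative where that dependency is acceptable.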

- DBNet Dataset

The DBNet dataset [139], released by Shanghai Jiao Tong University and Xiamen University, provides a large collection of LiDAR point clouds and video recorded from vehicles driven by experienced drivers. It is designed for learning driving behavior policies and for evaluating how closely model predictions match expert driving behavior.

- HDD Dataset

The Honda Research Institute Driving Dataset (HDD) (Figure 11) [140], collected by Honda Research Institute USA, contains 104 h of video recorded by sensor-equipped vehicles in the San Francisco Bay Area, with the aim of studying human driver behavior and drivers' interactions with other traffic participants. The data are organized into 137 sessions, each averaging 45 min and corresponding to a different navigation task. The dataset also introduces a novel annotation scheme that decomposes driver behavior into four layers: goal-oriented, stimulus-driven, causal, and attentional.

Figure 11.

Example video frames from the HDD dataset [140]: (a) Cyclists; (b) Pedestrians crossing the street.

- KAIST Multispectral Dataset

The KAIST Multispectral dataset (Figure 12) [141], collected by the RCV Lab at KAIST, provides large-scale, varied data of drivable regions, capturing urban, campus, and residential scenes under both well-lit and poorly lit conditions. Alongside the imagery, it includes GPS measurements, IMU accelerations, and object annotations covering type, size, location, and occlusion, addressing the lack of multispectral data suited to diverse lighting conditions.

Figure 12.

Example images in different scenes from the KAIST Multispectral dataset [141]: (a) Campus; (b) Residential.

- IDD Dataset

The IDD dataset (Figure 13) [142] was collected by researchers from the International Institute of Information Technology, Hyderabad, Intel Bangalore, and the University of California, San Diego. It targets autonomous navigation in the unstructured driving conditions common on Indian roads and contains 10,004 images from 182 driving sequences, carefully annotated across 34 categories. Compared with datasets focused on structured road environments, IDD offers a broader range of labels, organized in a four-level hierarchy. The first level consists of seven broad categories: drivable, non-drivable, living things, vehicles, roadside objects, far objects, and sky. The second level refines these into 16 more specific labels; for example, “living things” is split into person, animal, and rider. The third level contains 26 labels, and the fourth and most detailed level contains 30 labels. Labels that are ambiguous or hard to distinguish at a finer level are grouped together at the coarser levels above it. IDD also supports research on domain adaptation, few-shot learning, and behavior prediction in road scenes.

Figure 13.

Example image of an unstructured scene from the IDD dataset [142].
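Because each IDD level refines the one above it, the same annotation can be evaluated at any granularity by mapping every fine label to its coarser parent. A minimal sketch of that collapsing step follows. Only the seven level-1 categories, the per-level label counts, and the person/animal/rider split of “living things” come from the description above; the partial parent map and the names `LEVEL2_TO_LEVEL1` and `collapse` are hypothetical, and a real map would cover all 16 level-2 labels.

```python
from typing import Dict, List

# Level-1 categories as listed for IDD.
LEVEL1 = ["drivable", "non-drivable", "living things", "vehicles",
          "roadside objects", "far objects", "sky"]

# Number of labels at levels 1-4, as reported in the text.
LABELS_PER_LEVEL = [7, 16, 26, 30]

# Partial, illustrative level-2 -> level-1 parent map (hypothetical beyond the
# "living things" split given in the text).
LEVEL2_TO_LEVEL1: Dict[str, str] = {
    "person": "living things",
    "animal": "living things",
    "rider": "living things",
}


def collapse(labels: List[str], parent: Dict[str, str]) -> List[str]:
    """Replace each label by its coarser parent; labels without an entry are kept unchanged."""
    return [parent.get(label, label) for label in labels]


# Finer levels refine coarser ones, so label counts grow with depth.
assert all(a < b for a, b in zip(LABELS_PER_LEVEL, LABELS_PER_LEVEL[1:]))
assert len(LEVEL1) == LABELS_PER_LEVEL[0]

# Collapse a hypothetical row of per-pixel level-2 labels to level 1.
row = ["rider", "person", "sky", "animal"]
print(collapse(row, LEVEL2_TO_LEVEL1))
```

Applying one parent map per level in sequence would collapse level-4 labels all the way to level 1.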

- NightOwls Dataset

The NightOwls dataset [143], collected by researchers from the University of Oxford, Nanjing University of Science and Technology, and the Max Planck Institute for Informatics, addresses the scarcity of pedestrian detection data for nighttime conditions, which are underrepresented compared with daytime conditions. Captured with automotive industry-standard cameras at a resolution of 1024 × 640, it includes 279,000 frames, each fully annotated with occlusion, pose, difficulty level, and tracking information. The recordings cover diverse scenarios in three European countries (the UK, Germany, and the Netherlands) under lighting conditions ranging from dawn to night, span all four seasons, and include adverse weather such as rain and snow.

- EuroCity Persons Dataset

The EuroCity Persons dataset [144], developed through a collaboration between the Intelligent Vehicles Research Group at Delft University of Technology and the Environment Perception Research Group at Daimler AG, is a diverse pedestrian detection dataset gathered in 31 cities across 12 European countries, with 238,200 annotated person instances in over 47,300 images captured under varied lighting and weather conditions.

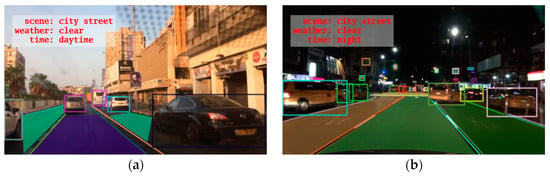

- BDD100K Dataset

The BDD100K dataset (Figure 14) [145], collected by the Berkeley Artificial Intelligence Research lab at the University of California, Berkeley, is a large-scale driving video dataset comprising 100,000 videos annotated for 10 different tasks. Its extensive annotations support heterogeneous multi-task learning and the study of how domain differences affect object detection. The data are grouped by time of day and scene type, with daytime urban street scenes used for validation. The data are crowd-sourced exclusively from drivers, and each annotated image is linked to its source video sequence.

Figure 14.

Example driving videos in different scenes from the BDD100K dataset [145]: (a) Urban street in the day; (b) Urban street at night.
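The grouping by time of day and scene type described above can be sketched as a simple partition over per-image attributes, with one domain held out for validation. This is a minimal illustration, not BDD100K's official tooling: the record layout (a `name` field plus an `attributes` dictionary with `timeofday` and `scene` keys) is modeled on BDD100K's label files but should be checked against the official format, and the function names and example records are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Tuple

Domain = Tuple[str, str]  # (time of day, scene type)


def partition_by_domain(records: Iterable[Dict[str, Any]]) -> Dict[Domain, List[str]]:
    """Group image names by (time of day, scene type) read from each record's attributes."""
    groups: Dict[Domain, List[str]] = defaultdict(list)
    for rec in records:
        attrs = rec.get("attributes", {})
        key = (attrs.get("timeofday", "undefined"), attrs.get("scene", "undefined"))
        groups[key].append(rec["name"])
    return dict(groups)


def split_for_domain_shift(
    records: Iterable[Dict[str, Any]],
    val_domain: Domain = ("daytime", "city street"),
) -> Tuple[List[str], Dict[Domain, List[str]]]:
    """Hold out one domain (daytime city streets by default); return the rest as shifted domains."""
    groups = partition_by_domain(records)
    validation = groups.get(val_domain, [])
    shifted = {domain: names for domain, names in groups.items() if domain != val_domain}
    return validation, shifted


# Hypothetical records shaped like BDD100K frame labels (attribute names assumed).
frames = [
    {"name": "a.jpg", "attributes": {"timeofday": "daytime", "scene": "city street"}},
    {"name": "b.jpg", "attributes": {"timeofday": "night", "scene": "city street"}},
    {"name": "c.jpg", "attributes": {"timeofday": "daytime", "scene": "highway"}},
]
validation, shifted = split_for_domain_shift(frames)
print(validation, sorted(shifted))
```

Keeping the shifted domains separate, rather than pooling them, lets a detector's accuracy be compared per domain against the held-out daytime city-street baseline.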

- DR(eye)VE Dataset

The DR(eye)VE dataset [146], collected by the ImageLab group at the University of Modena and Reggio Emilia, was the first publicly released dataset focused on predicting driver attention. It comprises 555,000 frames in which the driver's gaze, recorded with precise eye-tracking devices, is aligned with the external views captured by rooftop cameras. The recordings cover diverse environments such as city centers, rural areas, and highways under varying weather conditions (sunny, rainy, cloudy) and times of day (day and night).

- Argoverse Dataset

The Argoverse dataset [147], jointly collected by Argo AI, Carnegie Mellon University, and the Georgia Institute of Technology, offers detailed maps, including lane centerlines, ground elevation, and drivable areas, together with 3D object tracking annotations. Its sensor data were captured with LiDAR, 360-degree surround cameras, and stereo cameras. The dataset also establishes a large-scale trajectory prediction benchmark covering complex driving scenarios such as turns at intersections, interactions with nearby traffic, and lane changes. It was the first large-scale dataset to provide full-panoramic, high-frame-rate imagery from an outdoor vehicle, enabling new approaches to photometric urban reconstruction with direct methods.

- nuScenes Dataset

The nuScenes dataset (Figure 15) [148], provided by Motional (Boston, MA, USA), is an all-weather, all-lighting dataset. It was the first dataset collected from autonomous vehicles approved for testing on public roads and the first to offer 360-degree coverage from a complete sensor suite of LiDAR, cameras, and radar. The data were gathered in Singapore and Boston, two cities known for dense urban traffic, and comprise 1000 driving scenes of approximately 20 s each, with nearly 1.4 million RGB images. nuScenes thus marked a significant step up in dataset size and complexity. It was also the first multimodal dataset to include nighttime and rainy conditions, with annotations of object types, locations, attributes, and scene descriptions.

Figure 15.

Example images from the nuScenes dataset [148]: (a) Daylight; (b) Night.

- Waymo Open Dataset

The Waymo Open dataset [149], released in 2019 by Waymo (Mountain View, CA, USA), is a large-scale multimodal camera-LiDAR dataset that, at the time of its release, surpassed all comparable datasets in size, quality, and geographic diversity. It includes data collected by Waymo vehicles over millions of miles in diverse environments such as San Francisco, Phoenix, Mountain View, and Kirkland, and it covers a wide range of conditions: day and night, dawn and dusk, and sunny and rainy weather, across both urban and suburban settings.

- Unsupervised Llamas Dataset

The Unsupervised Llamas dataset [150], collected by Bosch N.A. Research, is a large, high-quality lane marking dataset containing 100,042 labeled images from approximately 350 km of highway driving. Instead of relying on manual labeling, it annotates lane markings automatically using high-precision maps, with additional optimization steps to improve annotation accuracy. The annotations include 2D and 3D lines, individual dashed-line segments, pixel-level segmentation, and lane associations.
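Map-based automatic labeling of this kind rests on projecting 3D lane-marking geometry from the map into each camera image. The sketch below shows only that core step under a plain pinhole camera model and is not the Llamas pipeline itself, which adds the optimization steps mentioned above. The camera convention (z forward, x right, y down), the pose (`rotation`, `translation`), the intrinsics (`fx`, `fy`, `cx`, `cy`), the image size, and the function names are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project_point(p_world: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3,
                  fx: float, fy: float, cx: float, cy: float) -> Optional[Pixel]:
    """Project a 3D map point to pixel coordinates with a pinhole model.

    The point is moved into the camera frame as p_cam = R * p_world + t
    (camera z forward, x right, y down). Points behind the camera return None.
    """
    x, y, z = (sum(rotation[r][c] * p_world[c] for c in range(3)) + translation[r]
               for r in range(3))
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def project_lane(points: Sequence[Vec3], rotation: Sequence[Sequence[float]], translation: Vec3,
                 fx: float, fy: float, cx: float, cy: float,
                 width: int, height: int) -> List[Pixel]:
    """Project a lane-marking polyline and keep only the points that land inside the image."""
    pixels: List[Pixel] = []
    for p in points:
        uv = project_point(p, rotation, translation, fx, fy, cx, cy)
        if uv is not None and 0.0 <= uv[0] < width and 0.0 <= uv[1] < height:
            pixels.append(uv)
    return pixels


# Hypothetical setup: camera at the map origin, 1.5 m above a flat road,
# lane marking 1.8 m to the right, sampled at several distances ahead.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
marking = [(1.8, 1.5, z) for z in (1.0, 10.0, 20.0, 40.0)] + [(1.8, 1.5, -1.0)]
print(project_lane(marking, IDENTITY, (0.0, 0.0, 0.0), 1000.0, 1000.0, 640.0, 360.0, 1280, 720))
```

In practice, the projected points would then be rasterized into per-pixel lane labels; errors in the estimated pose shift every projected point, which is why refining the alignment matters for annotation accuracy.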

- D2-City Dataset

The D2-City dataset [151] is a large collection of dashcam video footage recorded by vehicles on the DiDi platform, comprising over 10,000 video segments. About 1000 of these videos have frames annotated with bounding boxes and tracking labels for 12 object classes, while the key frames of the remaining videos carry detection annotations. Recorded in more than ten Chinese cities under various weather conditions and traffic scenarios, the dataset captures the diversity and complexity of real-world traffic in China.

- Highway Driving Dataset

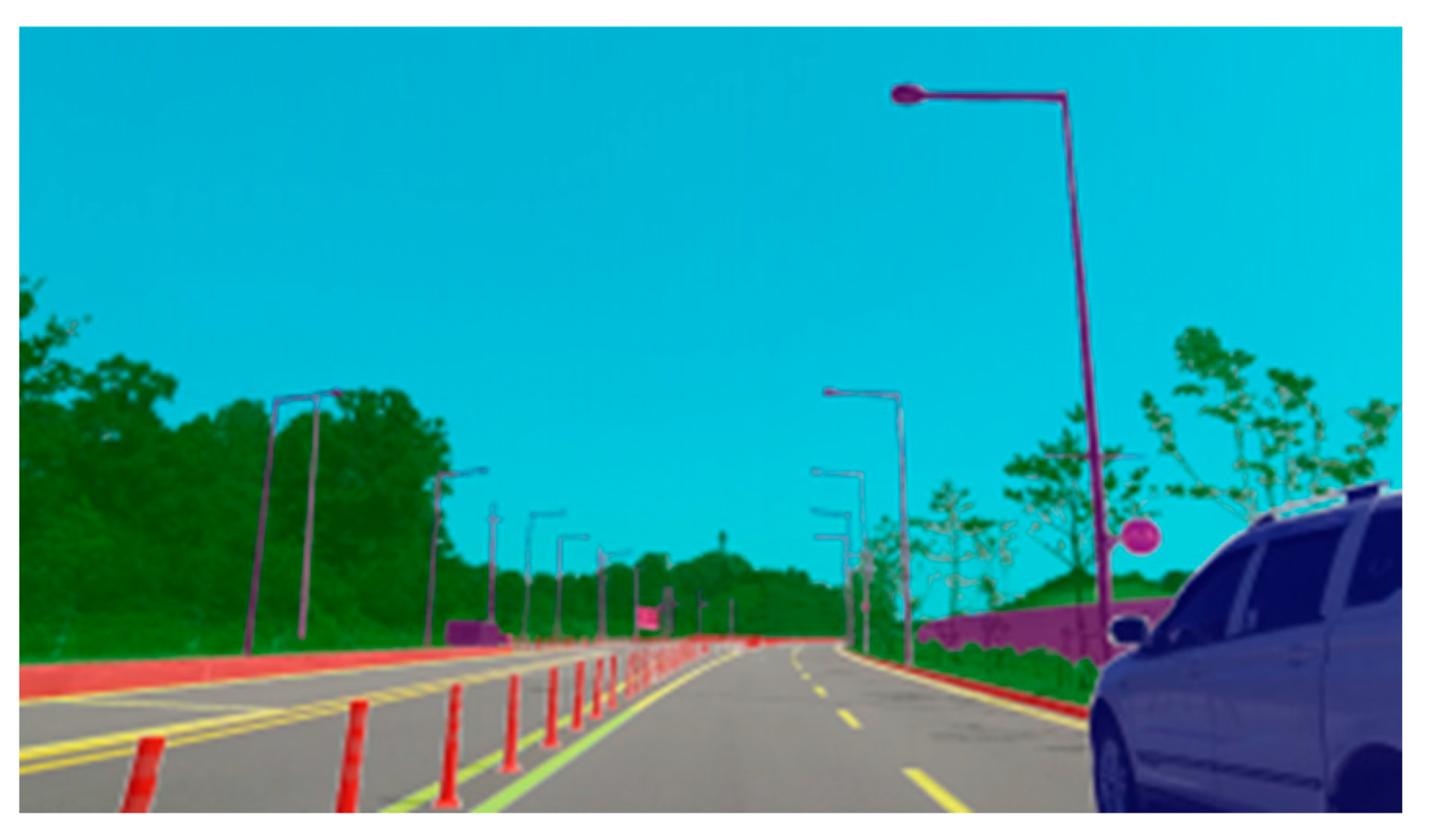

The Highway Driving dataset (Figure 16) [152], collected by KAIST (Daejeon, Republic of Korea), is a semantic video dataset of 20 video sequences captured in highway settings at 30 Hz. It provides annotations for ten categories: roads, lanes, sky, barriers, buildings, traffic signs, cars, trucks, vegetation, and unspecified objects. Every frame is densely annotated in both space and time, with care taken to keep labels consistent between consecutive frames. The dataset fills a gap in semantic segmentation research, which had previously focused predominantly on still images rather than videos.

Figure 16.

Sample image from the Highway Driving dataset [152].

- CADC Dataset

The CADC (Canadian Adverse Driving Conditions) dataset [153], jointly collected by the Toronto-Waterloo AI Institute and the Waterloo Intelligent Systems Engineering Lab at the University of Waterloo, comprises 7000 annotated frames recorded in various winter weather conditions, spanning 75 driving sequences and over 20 km of driving in traffic and snow. It is designed specifically for studying autonomous driving under adverse conditions, allowing researchers to test object detection, localization, and mapping methods in challenging winter weather.

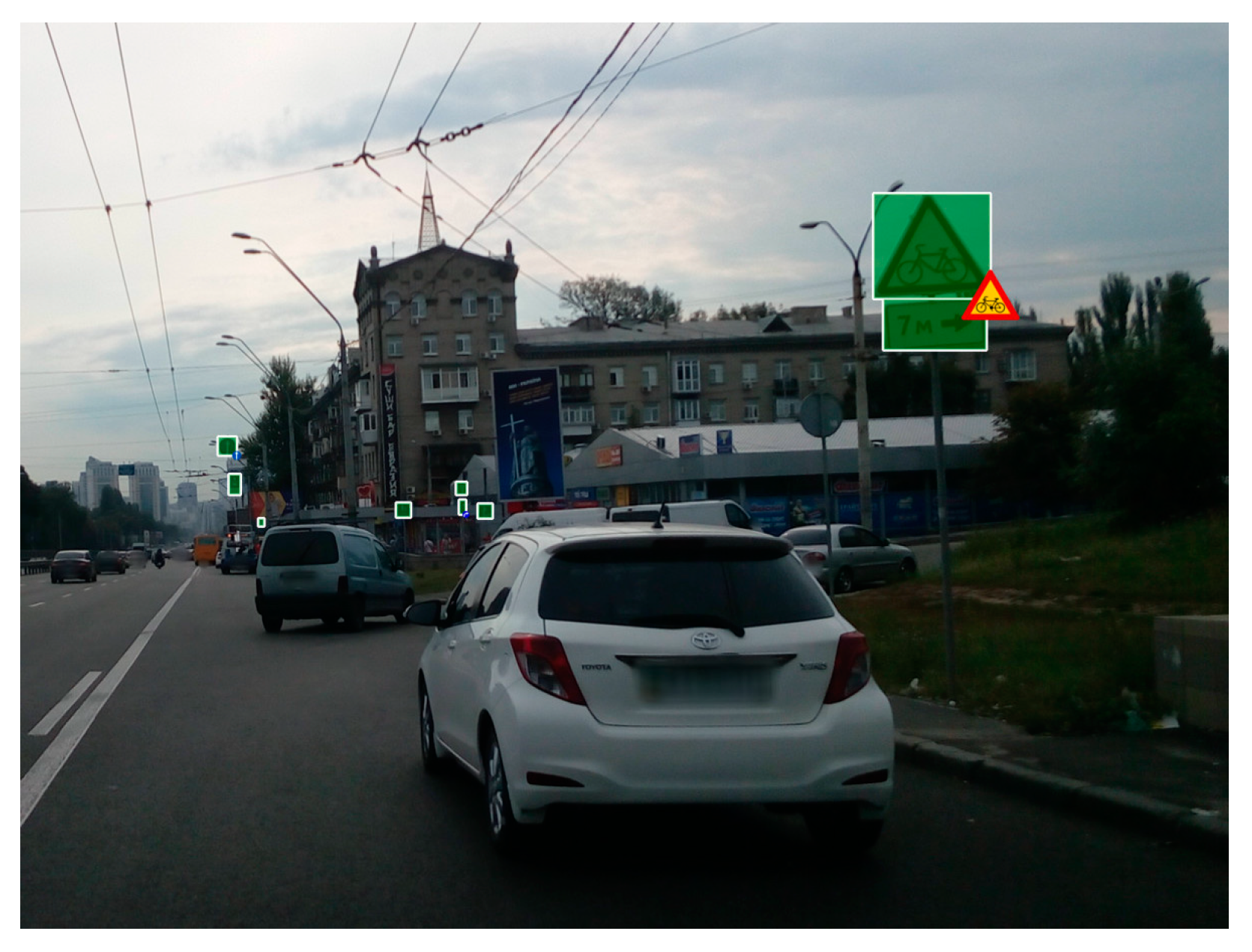

- Mapillary Traffic Sign Dataset

The Mapillary Traffic Sign dataset (Figure 17) [154], collected by Mapillary AB (Malmö, Sweden), is a large-scale, diverse benchmark dataset for traffic signs. It contains 100,000 high-resolution images, of which over 52,000 are fully annotated and about 48,000 are partially annotated, covering 313 traffic sign categories from around the world. The images span all inhabited continents: 20% from North America, 20% from Europe, 20% from Asia, 15% from South America, 15% from Oceania, and 10% from Africa. They include urban and rural roads across different weather conditions, seasons, and times of day.

Figure 17.

Sample image from the Mapillary Traffic Sign dataset [154].

- A2D2 Dataset

The A2D2 dataset [155], collected by Audi (Ingolstadt, Germany), contains camera images, 3D point clouds, 3D bounding boxes, semantic and instance segmentation labels, and data extracted from the vehicle bus, totaling 2.3 TB across highway, rural, and urban scenes. It includes 41,227 annotated non-sequential frames with semantic segmentation labels and point cloud annotations; 12,497 of these frames also carry 3D bounding boxes for objects within the front camera's field of view. In addition, the dataset provides 392,556 frames of continuous unannotated sensor data. This combination of rich annotations and raw sequences makes it valuable for research on multiple visual tasks.

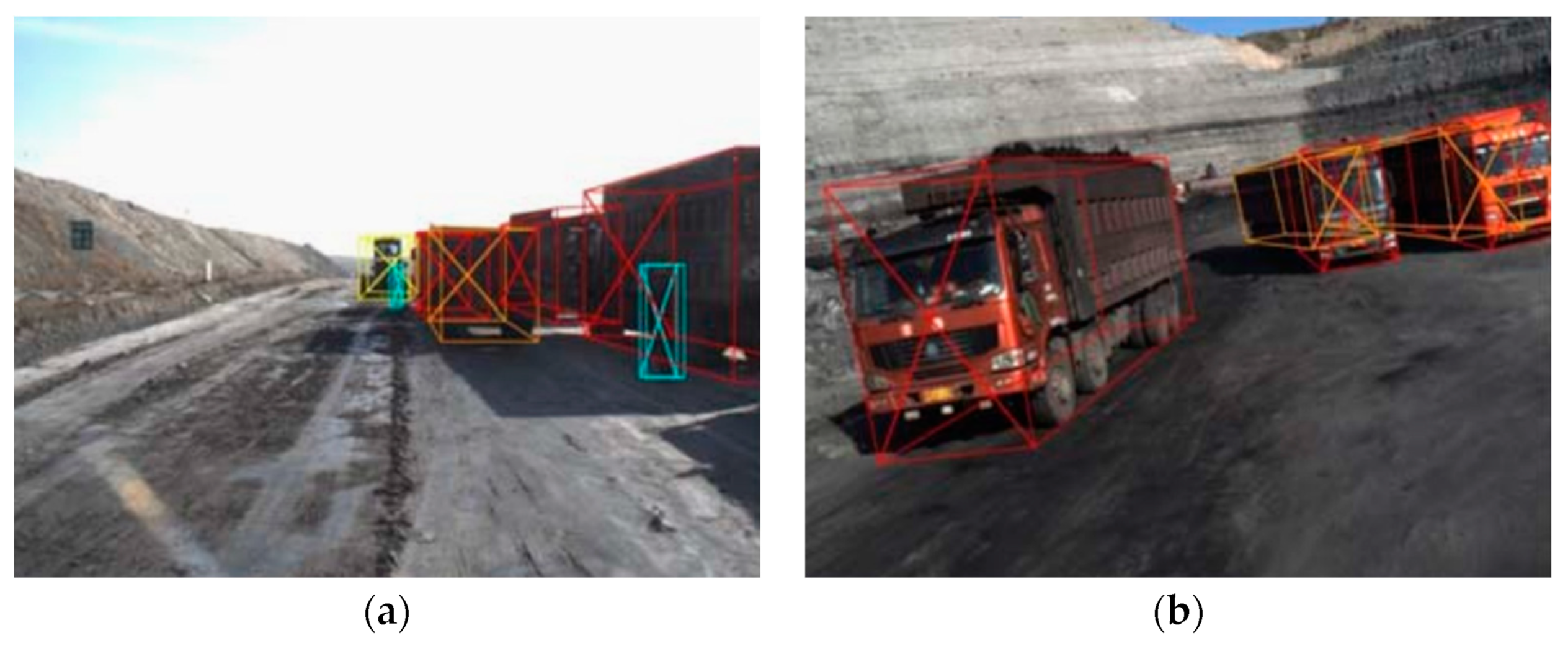

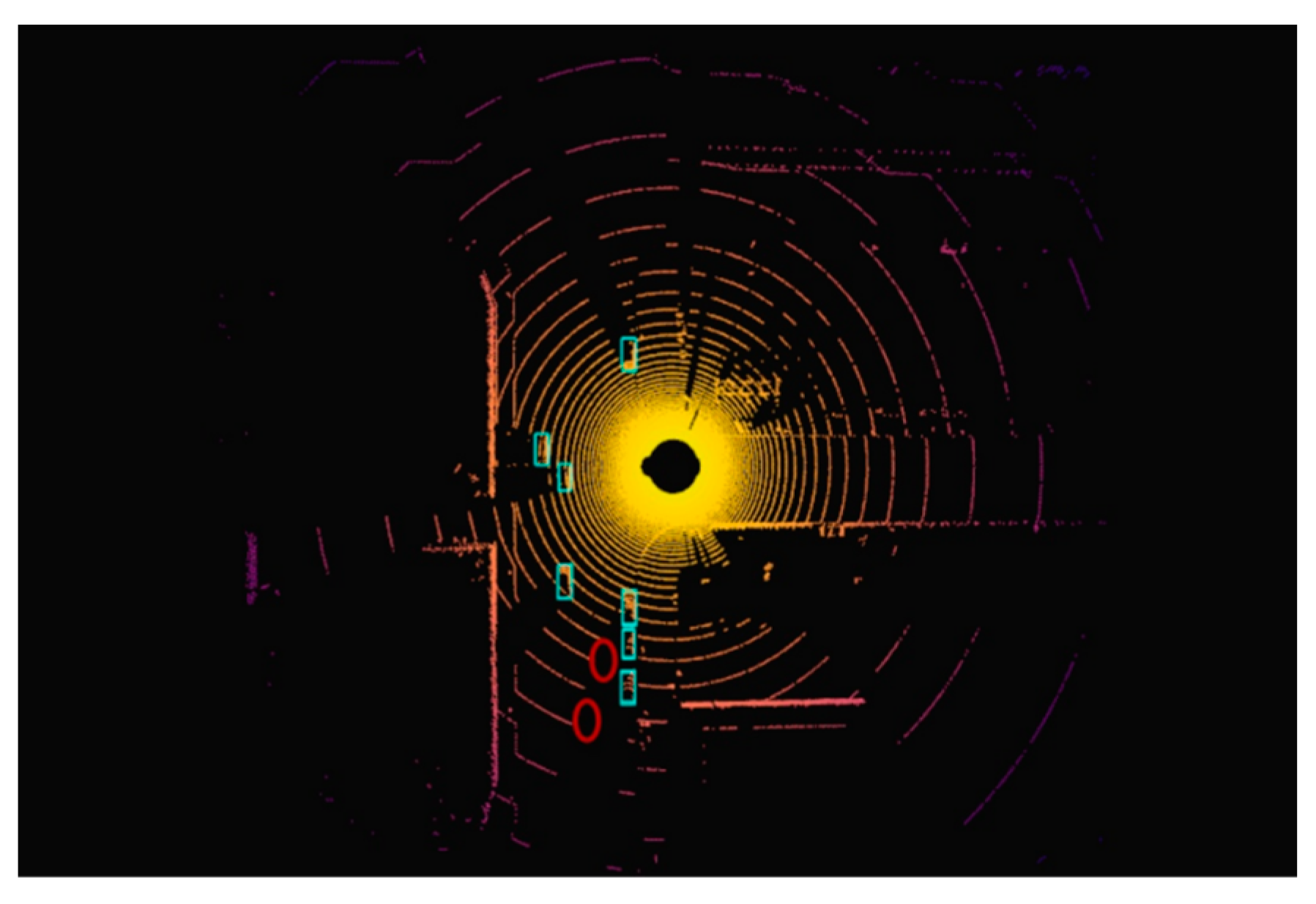

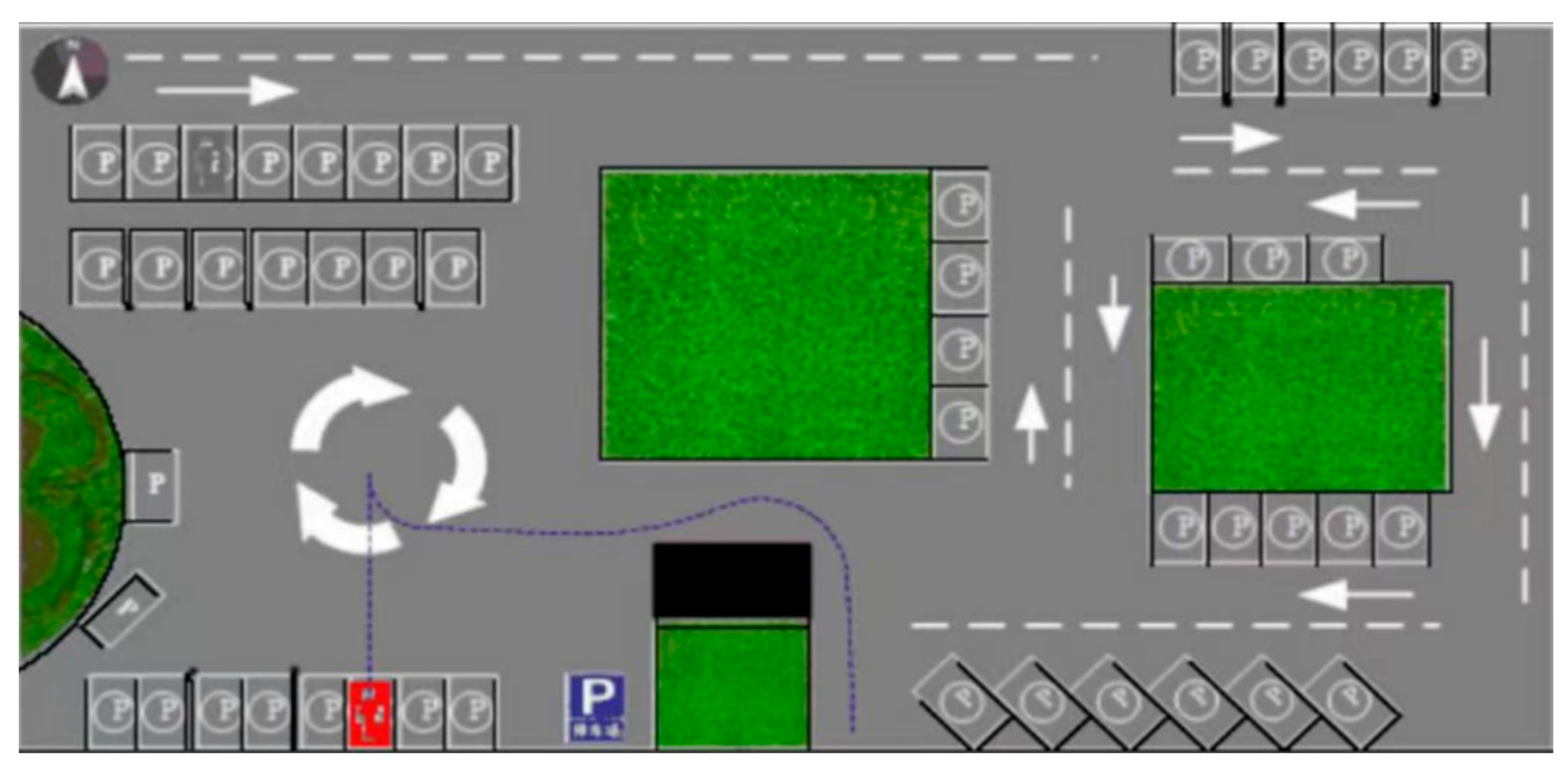

- nuPlan Dataset