Maritime Object Detection by Exploiting Electro-Optical and Near-Infrared Sensors Using Ensemble Learning

Abstract

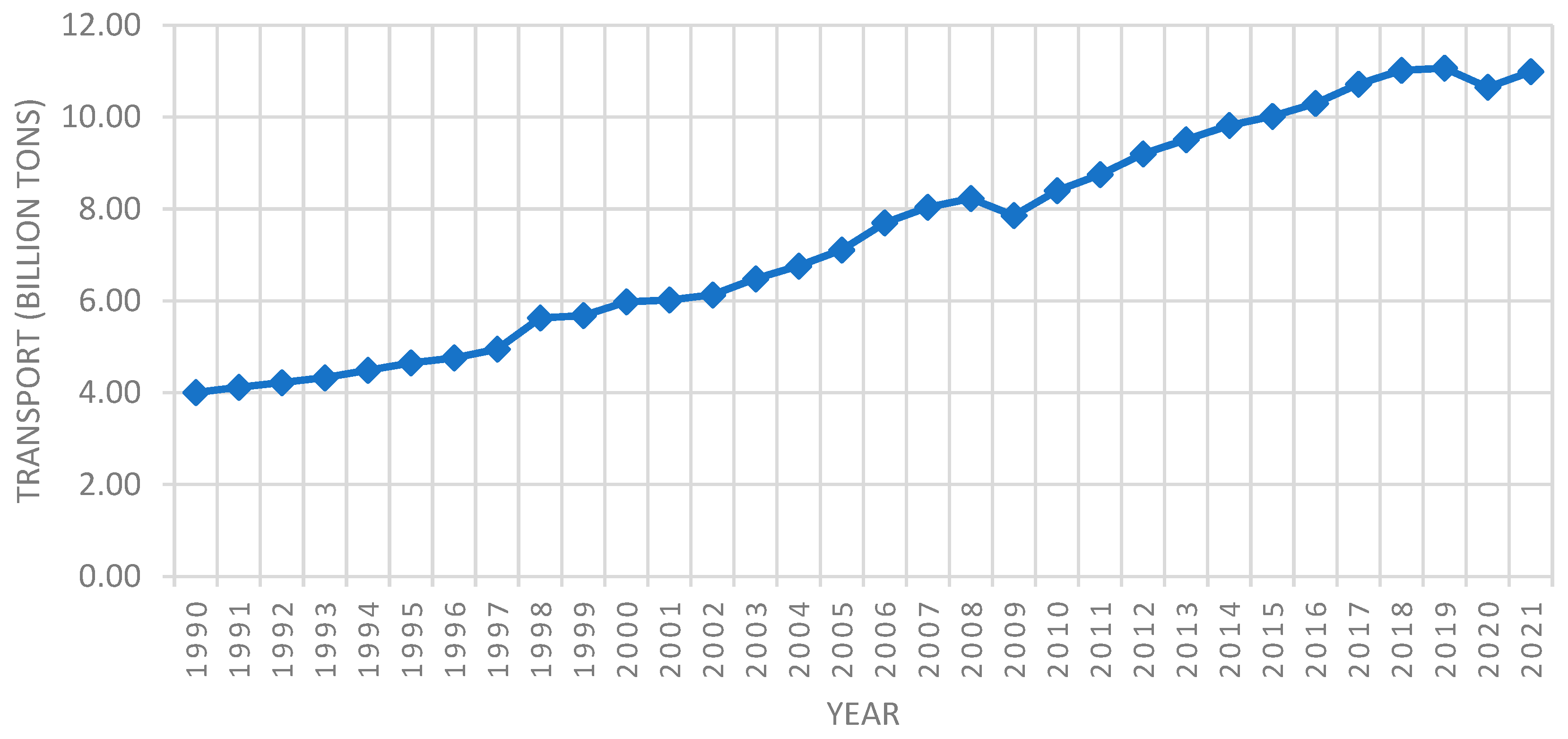

1. Introduction

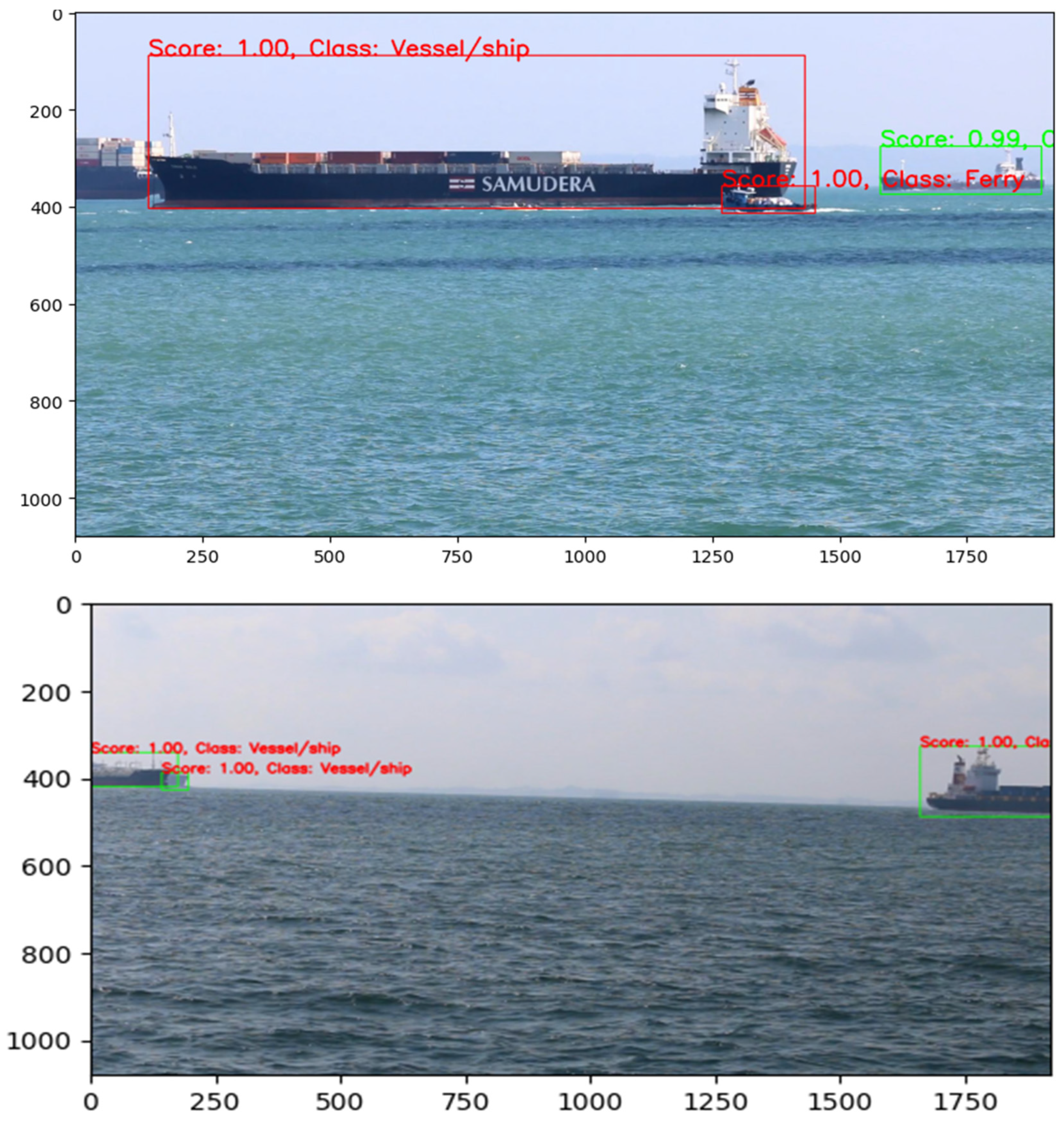

- Accurate detection and classification of relatively small objects.

- Classification of maritime objects in different weather conditions.

2. Related Work

2.1. Traditional Computer Vision Approaches

2.2. Deep Learning Advancements

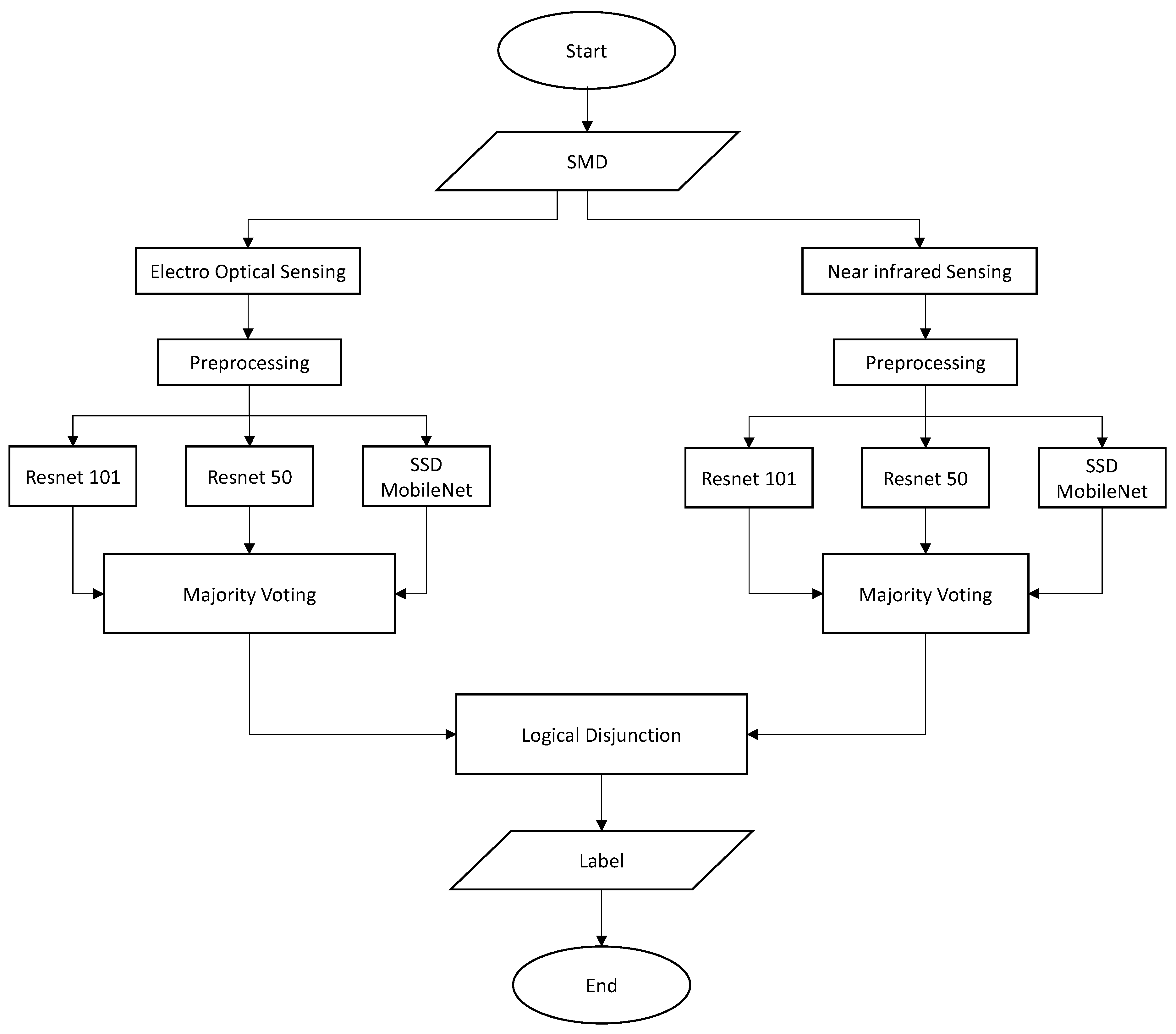

3. The Proposed Methodology

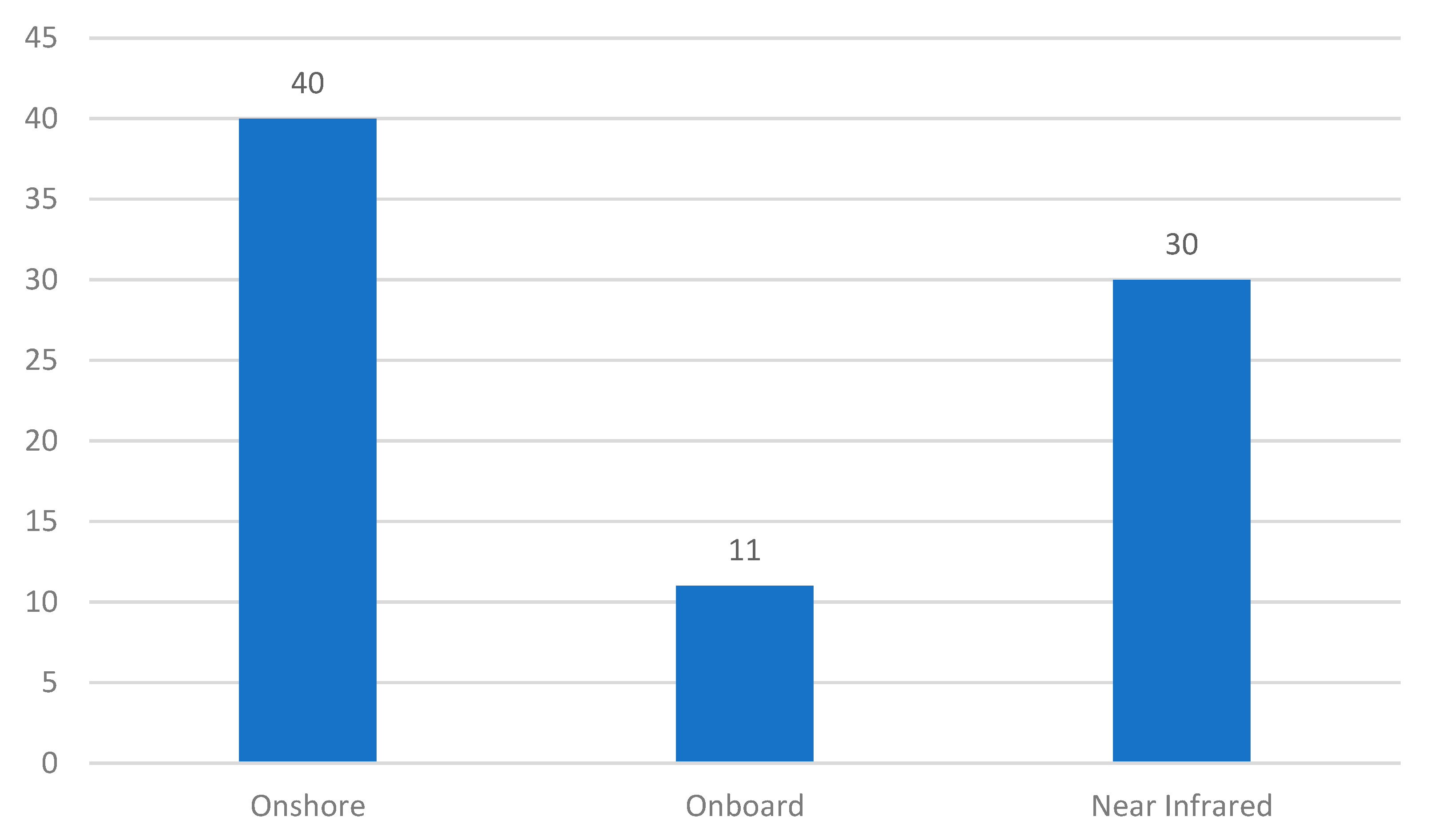

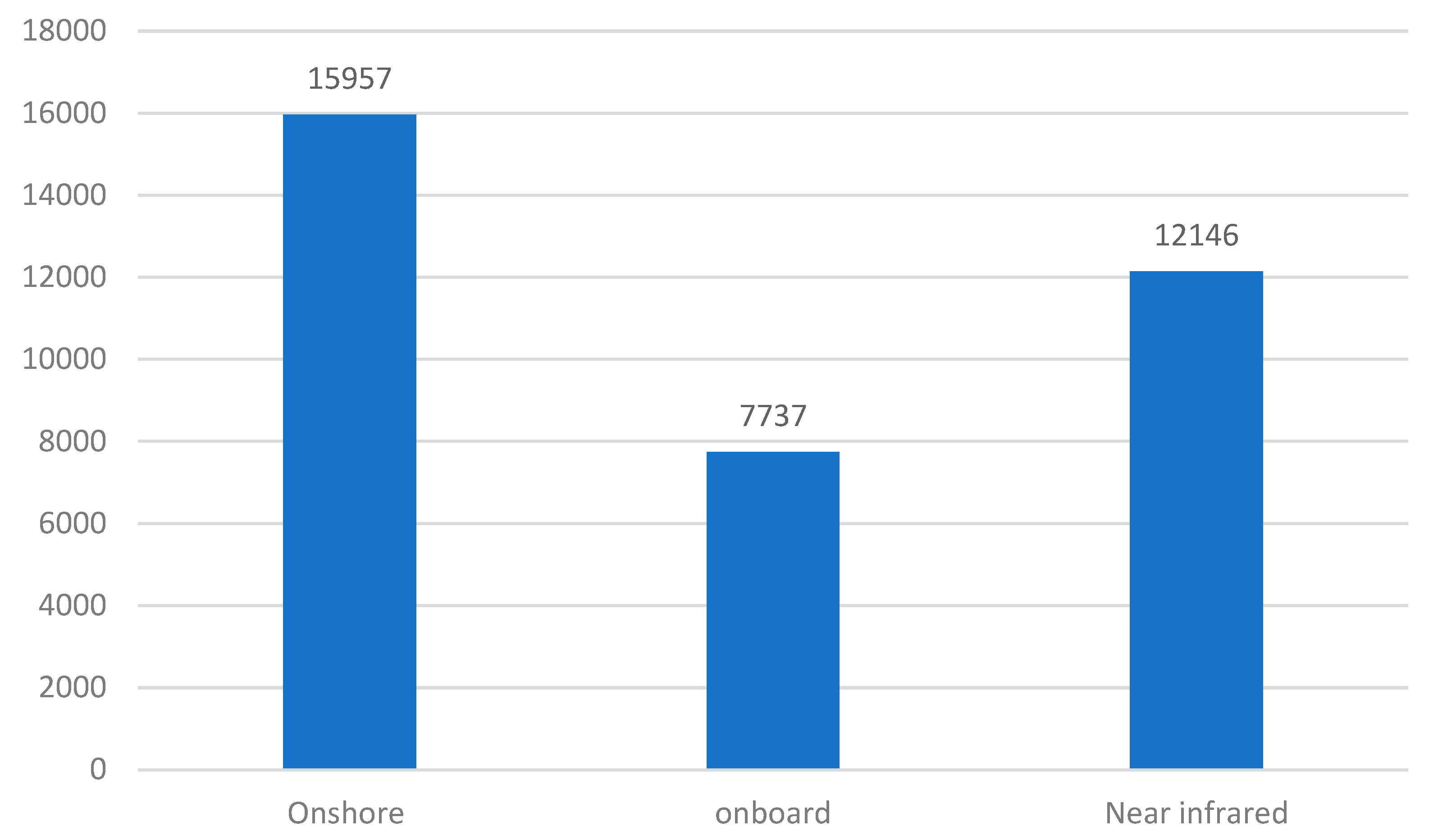

3.1. Dataset Description

3.2. SSD MobileNet (Single-Shot MultiBox Detector with MobileNet Backbone)

3.3. Faster R-CNN (ResNet 50)

3.4. Faster R-CNN (ResNet 101)

3.5. Model Hyperparameter Tuning

3.6. Computational Complexity Reduction

3.7. Ensemble Methodology

3.7.1. Dedicated Majority Voting for Each Sensor
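This subsection's title names a dedicated majority vote over the three base learners of each sensor (SSD MobileNet, Faster R-CNN with ResNet-50, and Faster R-CNN with ResNet-101). The sketch below is one possible reading, not the authors' implementation. It assumes the vote is taken per class at the image level: each learner reports which classes it detected in a frame, and a class is kept when a strict majority of learners report it. This is consistent with the per-class TP/FN/FP/TN counts in the results. Box-level fusion, for example IoU-based matching of individual detections, is not shown. The function names and label strings are hypothetical.

```python
from collections.abc import Sequence

# Hypothetical label strings, taken from the class names in the per-class results tables.
CLASSES = ["ferry", "buoy", "vessel/ship", "speed boat", "boat", "kayak", "sail boat", "flying plane"]


def majority_vote(votes: Sequence[bool]) -> bool:
    """True when strictly more than half of the base learners vote 'present'."""
    if not votes:
        raise ValueError("at least one base-learner vote is required")
    return sum(votes) > len(votes) / 2


def sensor_ensemble(per_model_labels: Sequence[set[str]], classes: Sequence[str] = CLASSES) -> set[str]:
    """Per-class majority vote for ONE frame from ONE sensor (EO or NIR).

    per_model_labels holds, for each base learner, the set of class names it
    detected in the frame after its own confidence threshold (an assumption).
    """
    return {c for c in classes if majority_vote([c in labels for labels in per_model_labels])}


# Toy example: three base learners on one EO frame (invented labels, not paper data).
ssd = {"ferry", "buoy"}
frcnn_r50 = {"ferry"}
frcnn_r101 = {"ferry", "kayak"}
print(sensor_ensemble([ssd, frcnn_r50, frcnn_r101]))  # {'ferry'}
```

With three learners, a strict majority means at least two must agree. With an even number of learners, a tie counts as "absent" under this rule, which is only one of several possible tie-breaking choices.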

3.7.2. Logical Disjunction
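Read literally, a logical disjunction combines the two sensor-level ensembles with an OR: a class is reported for a frame if either the EO ensemble or the NIR ensemble reports it. The sketch below assumes that EO and NIR frames are already paired (time-synchronized) and that each ensemble outputs per-class, image-level presence decisions. These are assumptions, and the function names are hypothetical. It also counts TP/FN/FP/TN for one class so the effect of the OR can be seen on toy data.

```python
def logical_disjunction(eo_labels: set[str], nir_labels: set[str]) -> set[str]:
    """Report a class for a paired EO/NIR frame if EITHER sensor ensemble reports it."""
    return eo_labels | nir_labels


def confusion_counts(predicted: list[bool], actual: list[bool]) -> dict[str, int]:
    """Image-level TP/FN/FP/TN for one class from per-frame presence decisions."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must cover the same frames")
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for p, a in zip(predicted, actual):
        if p and a:
            counts["TP"] += 1
        elif a:  # object present but missed
            counts["FN"] += 1
        elif p:  # object absent but reported
            counts["FP"] += 1
        else:
            counts["TN"] += 1
    return counts


print(sorted(logical_disjunction({"ferry"}, {"ferry", "buoy"})))  # ['buoy', 'ferry']

# Toy presence decisions for one class over four paired frames (invented, not paper data).
eo = [True, False, False, True]
nir = [False, True, False, True]
truth = [True, True, False, False]
fused = [e or n for e, n in zip(eo, nir)]
print(confusion_counts(eo, truth))     # {'TP': 1, 'FN': 1, 'FP': 1, 'TN': 1}
print(confusion_counts(fused, truth))  # {'TP': 2, 'FN': 0, 'FP': 1, 'TN': 1}
```

An OR can only switch a decision from "absent" to "present". Under this image-level reading, fusion therefore cannot raise FN or lower FP relative to either input. The per-class results follow this pattern: for every class, the Logical Disjunction column has the lowest FN and the highest FP of the three methods.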

4. Results and Discussion

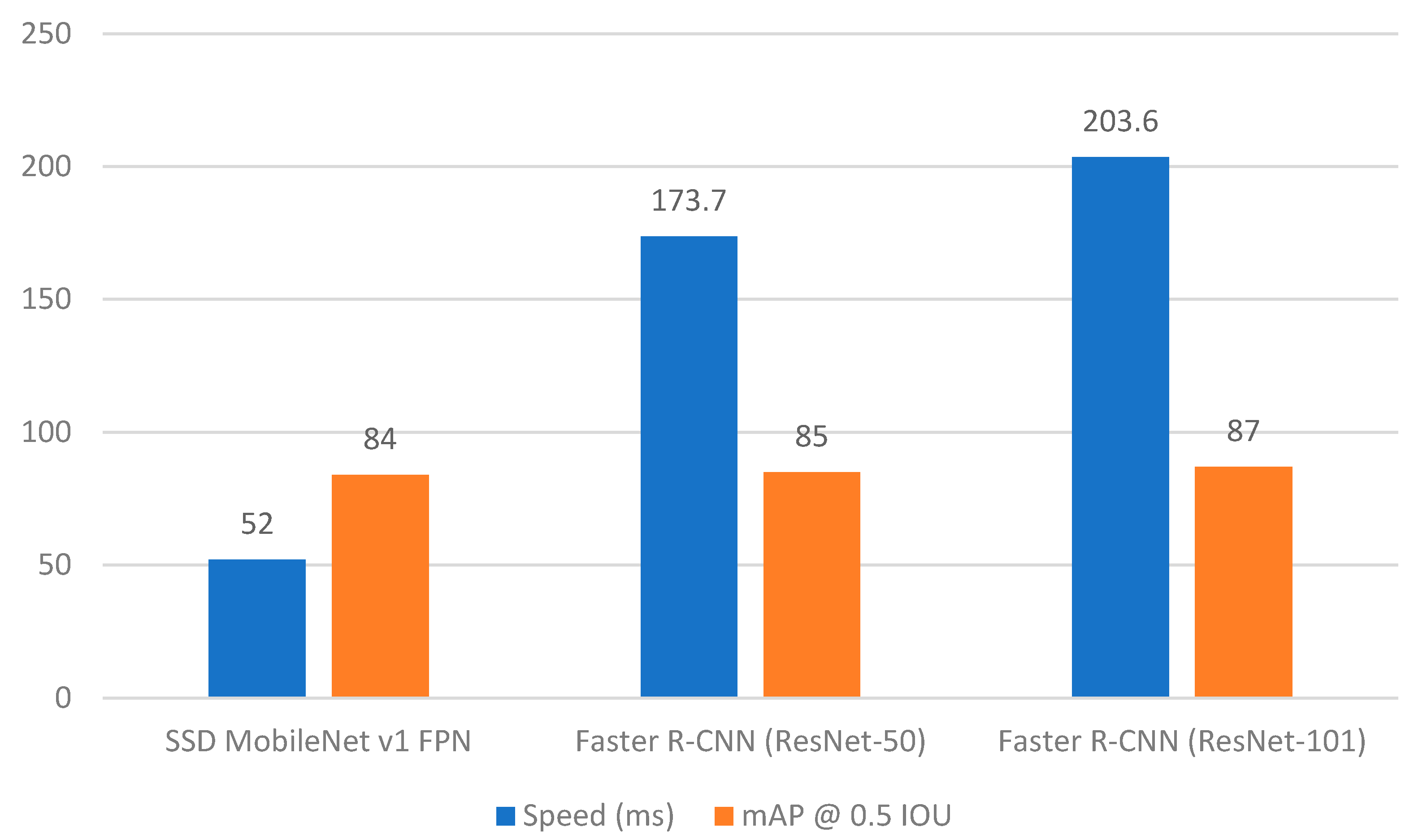

4.1. Base Learner Performance on the NIR Sensor

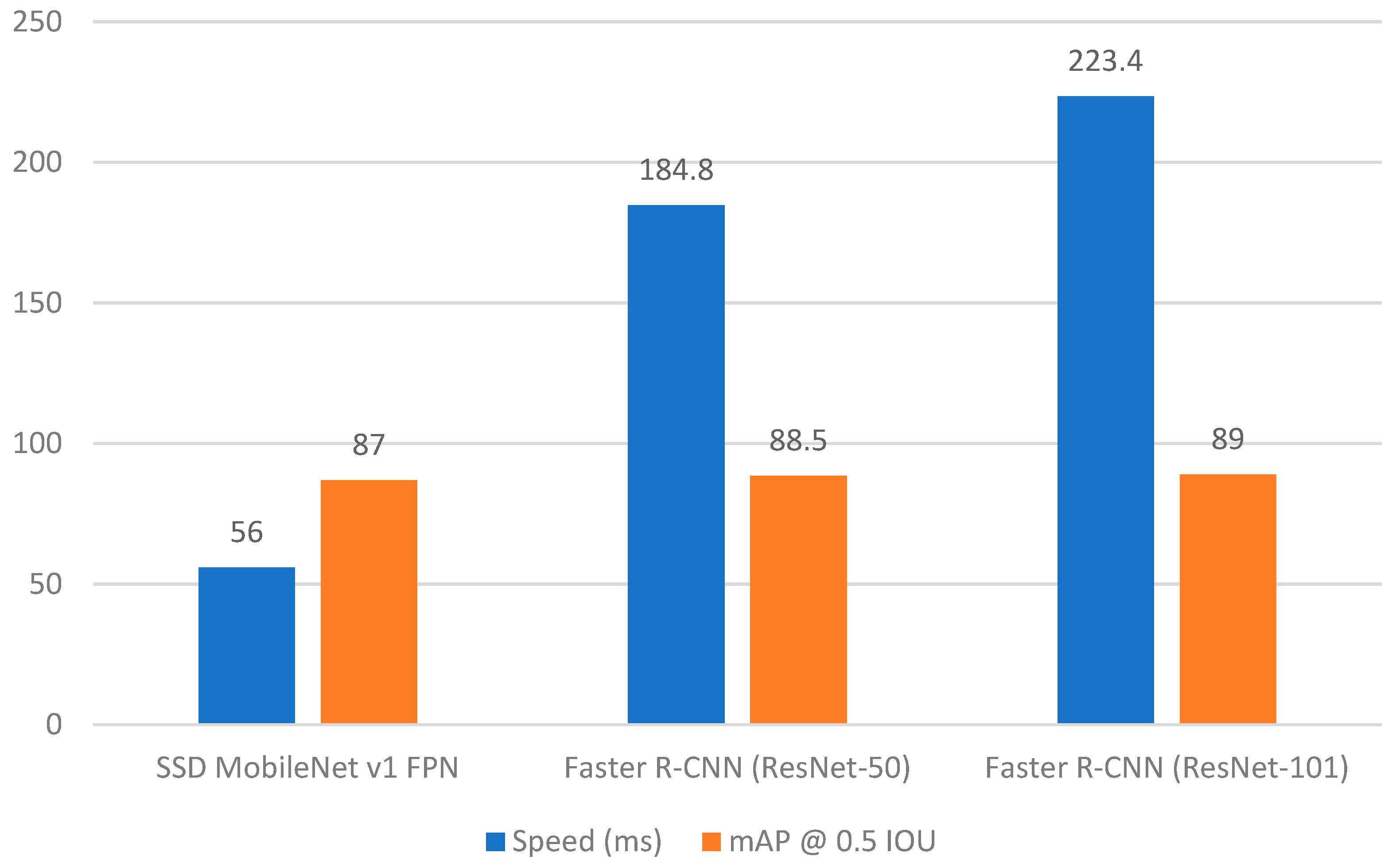

4.2. Base Learner Performance on the Electro-Optical Sensor

4.3. Ensemble Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sr. # | Model | Speed (ms) | mAP @ 0.5 IoU (%) |
|---|---|---|---|
| 01 | SSD MobileNet v1 FPN | 52 | 84 |
| 02 | Faster R-CNN (ResNet-50) | 173.7 | 85 |
| 03 | Faster R-CNN (ResNet-101) | 203.6 | 87 |

| Sr. # | Model | Speed (ms) | mAP @ 0.5 IoU (%) |
|---|---|---|---|
| 01 | SSD MobileNet v1 FPN | 56 | 87 |
| 02 | Faster R-CNN (ResNet-50) | 184.8 | 88.5 |
| 03 | Faster R-CNN (ResNet-101) | 223.4 | 89 |
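The "mAP @ 0.5 IoU" column counts a predicted box as matching a ground-truth box of the same class only when their intersection over union (IoU) reaches 0.5. The following is a minimal sketch of that matching test. It assumes boxes are given as (x_min, y_min, x_max, y_max) in pixels and uses "at or above" the threshold, although some benchmarks use a strict "greater than". The full mAP computation (ranking detections by confidence, averaging precision over recall levels, then over classes) is omitted, and the helper names are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Box, pred_label: str, gt: Box, gt_label: str, threshold: float = 0.5) -> bool:
    """Same class and IoU at or above the threshold."""
    return pred_label == gt_label and iou(pred, gt) >= threshold


gt = (0.0, 0.0, 10.0, 10.0)
print(round(iou(gt, (5.0, 0.0, 15.0, 10.0)), 3))              # 0.333 (overlap 50, union 150)
print(is_match((2.0, 0.0, 12.0, 10.0), "buoy", gt, "buoy"))   # True (overlap 80, union 120)
```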

| Domain | Speed (ms) | mAP @ 0.5 IoU (%) |
|---|---|---|
| NIR images | 243 | 91.5 |
| Electro-optical images | 256 | 92.2 |

(a) Ferry

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 74 | 73 | 6 |
| TP | 651 | 652 | 719 |
| FP | 120 | 72 | 192 |
| TN | 2287 | 2335 | 2215 |
| Precision (%) | 84.44 | 90.06 | 78.92 |
| Recall (%) | 89.79 | 89.93 | 99.17 |
| Accuracy (%) | 93.81 | 95.37 | 93.68 |

(b) Buoy

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 112 | 112 | 35 |
| TP | 261 | 261 | 338 |
| FP | 110 | 165 | 270 |
| TN | 2649 | 2594 | 2489 |
| Precision (%) | 70.35 | 61.27 | 55.59 |
| Recall (%) | 69.97 | 69.97 | 90.62 |
| Accuracy (%) | 92.91 | 91.16 | 90.26 |

(c) Vessel/Ship

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 184 | 340 | 19 |
| TP | 2714 | 2558 | 2879 |
| FP | 218 | 202 | 233 |
| TN | 16 | 32 | 1 |
| Precision (%) | 92.56 | 92.68 | 92.51 |
| Recall (%) | 93.65 | 88.27 | 99.34 |
| Accuracy (%) | 87.16 | 82.69 | 91.95 |

(d) Speed Boat

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 38 | 38 | 0 |
| TP | 340 | 340 | 378 |
| FP | 165 | 110 | 266 |
| TN | 2589 | 2644 | 2488 |
| Precision (%) | 67.33 | 75.56 | 58.70 |
| Recall (%) | 89.95 | 89.95 | 100.00 |
| Accuracy (%) | 93.52 | 95.27 | 91.51 |

(e) Boat

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 173 | 173 | 119 |
| TP | 73 | 73 | 127 |
| FP | 86 | 202 | 284 |
| TN | 2800 | 2684 | 2602 |
| Precision (%) | 45.91 | 26.55 | 30.90 |
| Recall (%) | 29.67 | 29.67 | 51.63 |
| Accuracy (%) | 91.73 | 88.03 | 87.13 |

(f) Kayak

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 66 | 66 | 3 |
| TP | 586 | 586 | 649 |
| FP | 74 | 173 | 242 |
| TN | 2406 | 2307 | 2238 |
| Precision (%) | 88.79 | 77.21 | 72.84 |
| Recall (%) | 89.88 | 89.88 | 99.54 |
| Accuracy (%) | 95.53 | 92.37 | 92.18 |

(g) Sail Boat

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 194 | 194 | 59 |
| TP | 450 | 450 | 585 |
| FP | 124 | 74 | 194 |
| TN | 2364 | 2414 | 2294 |
| Precision (%) | 78.40 | 85.88 | 75.10 |
| Recall (%) | 69.88 | 69.88 | 90.84 |
| Accuracy (%) | 89.85 | 91.44 | 91.92 |

(h) Flying Plane

| Metric | EO Ensemble | NIR Ensemble | Logical Disjunction |
|---|---|---|---|
| FN | 93 | 91 | 52 |
| TP | 66 | 68 | 107 |
| FP | 118 | 144 | 256 |
| TN | 2855 | 2829 | 2717 |
| Precision (%) | 35.87 | 32.08 | 29.48 |
| Recall (%) | 41.51 | 42.77 | 67.30 |
| Accuracy (%) | 93.26 | 92.50 | 90.17 |
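The precision, recall, and accuracy rows in the per-class tables follow directly from the four confusion counts. Precision is TP/(TP + FP), recall is TP/(TP + FN), and accuracy is (TP + TN)/(TP + FN + FP + TN), each expressed as a percentage. The sketch below recomputes them. The class and function names are hypothetical. The example counts are the Ferry / EO Ensemble and Vessel/Ship / Logical Disjunction entries from the tables.

```python
from dataclasses import dataclass


def _pct(numerator: int, denominator: int) -> float:
    """Percentage, returning 0.0 when the denominator is zero."""
    return 100.0 * numerator / denominator if denominator else 0.0


@dataclass(frozen=True)
class Confusion:
    """Confusion counts for one class and one detection method."""
    tp: int
    fn: int
    fp: int
    tn: int

    def precision(self) -> float:
        return _pct(self.tp, self.tp + self.fp)

    def recall(self) -> float:
        return _pct(self.tp, self.tp + self.fn)

    def accuracy(self) -> float:
        return _pct(self.tp + self.tn, self.tp + self.fn + self.fp + self.tn)


ferry_eo = Confusion(tp=651, fn=74, fp=120, tn=2287)
vessel_or = Confusion(tp=2879, fn=19, fp=233, tn=1)
for name, c in [("Ferry, EO Ensemble", ferry_eo), ("Vessel/Ship, Logical Disjunction", vessel_or)]:
    print(f"{name}: P={c.precision():.2f} R={c.recall():.2f} Acc={c.accuracy():.2f}")
# Ferry, EO Ensemble: P=84.44 R=89.79 Acc=93.81
# Vessel/Ship, Logical Disjunction: P=92.51 R=99.34 Acc=91.95
```

The four counts sum to 3132 for every class and method, so accuracy shares one denominator throughout. For the rarer classes, TN dominates that total, so accuracy can stay high while recall is low. For Boat, for example, the EO ensemble reaches 91.73% accuracy with only 29.67% recall.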

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Javed, M.F.; Imam, M.O.; Adnan, M.; Murtza, I.; Kim, J.-Y. Maritime Object Detection by Exploiting Electro-Optical and Near-Infrared Sensors Using Ensemble Learning. Electronics 2024, 13, 3615. https://doi.org/10.3390/electronics13183615

Javed MF, Imam MO, Adnan M, Murtza I, Kim J-Y. Maritime Object Detection by Exploiting Electro-Optical and Near-Infrared Sensors Using Ensemble Learning. Electronics. 2024; 13(18):3615. https://doi.org/10.3390/electronics13183615

Chicago/Turabian Style: Javed, Muhammad Furqan, Muhammad Osama Imam, Muhammad Adnan, Iqbal Murtza, and Jin-Young Kim. 2024. "Maritime Object Detection by Exploiting Electro-Optical and Near-Infrared Sensors Using Ensemble Learning" Electronics 13, no. 18: 3615. https://doi.org/10.3390/electronics13183615

APA Style: Javed, M. F., Imam, M. O., Adnan, M., Murtza, I., & Kim, J.-Y. (2024). Maritime Object Detection by Exploiting Electro-Optical and Near-Infrared Sensors Using Ensemble Learning. Electronics, 13(18), 3615. https://doi.org/10.3390/electronics13183615