Research on Vehicle Detection in Infrared Aerial Images in Complex Urban and Road Backgrounds

Abstract

1. Introduction

- (1) Variability in Vehicle Aspect Ratios: Some vehicle types, such as trucks and freight cars, span a wide range of aspect ratios, which makes it difficult for the model to extract features that generalize across each type. This complicates model training and lowers target detection accuracy.

- (2)

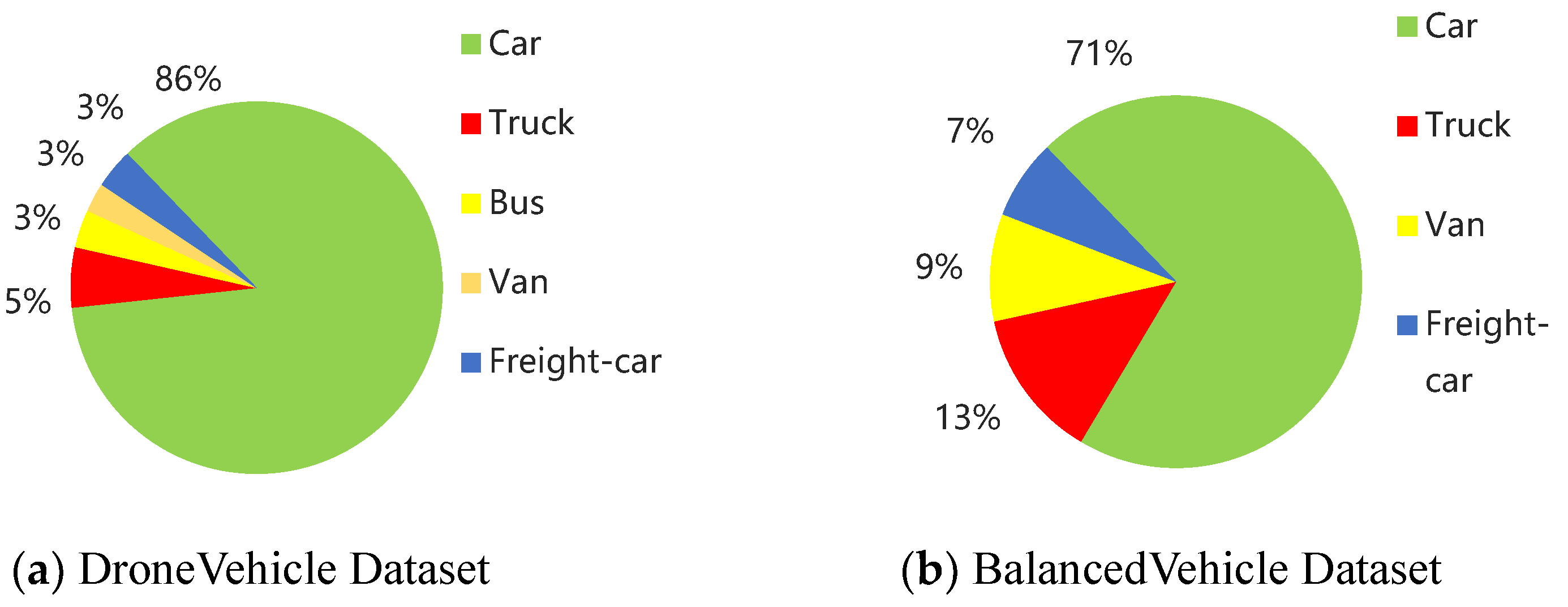

- Imbalanced Distribution of Single Vehicle Types: The data are heavily concentrated towards a single category of vehicles, causing the model training to be biased. This leads to the low detection accuracy of some vehicle categories and a higher likelihood of severe overfitting.

- (3) Complex Aerial Scene Conditions: Infrared remote sensing images of vehicles in aerial scenes feature complex backgrounds, including streets, buildings, trees, roads, crosswalks, and pedestrians. Vehicle targets are relatively small, are susceptible to partial occlusion, and can be confused with the background, all of which pose significant challenges to vehicle detection.

- (4)

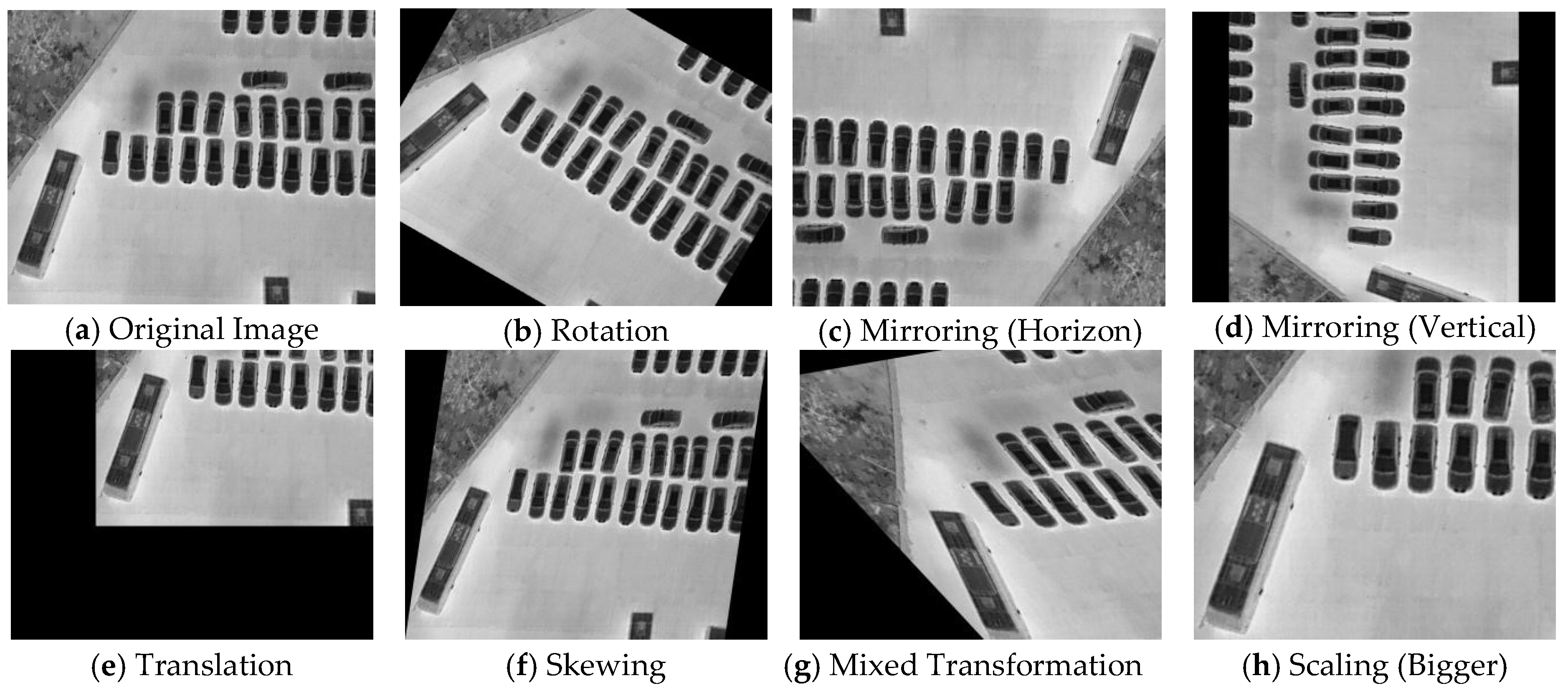

- Diverse Drone Shooting Angles: Drones capture ground areas from different heights and perspectives. Additionally, variations in shooting angles may occur due to wind conditions during drone flights, resulting in slight deviations in the shooting angles. These factors contribute to less-than-ideal shooting conditions, which impact the effectiveness of image capturing.

- Reconstructing the infrared remote sensing vehicle dataset as the BalancedVehicle dataset by balancing the proportion of each vehicle type, in order to tackle the significant imbalance among vehicle types.

- Introducing the automatic adaptive histogram equalization (AAHE) method during the model’s data loading phase. AAHE computes the local maxima and minima of the histogram and uses them as dynamic upper and lower boundaries, highlighting the infrared vehicle targets while suppressing background interference.

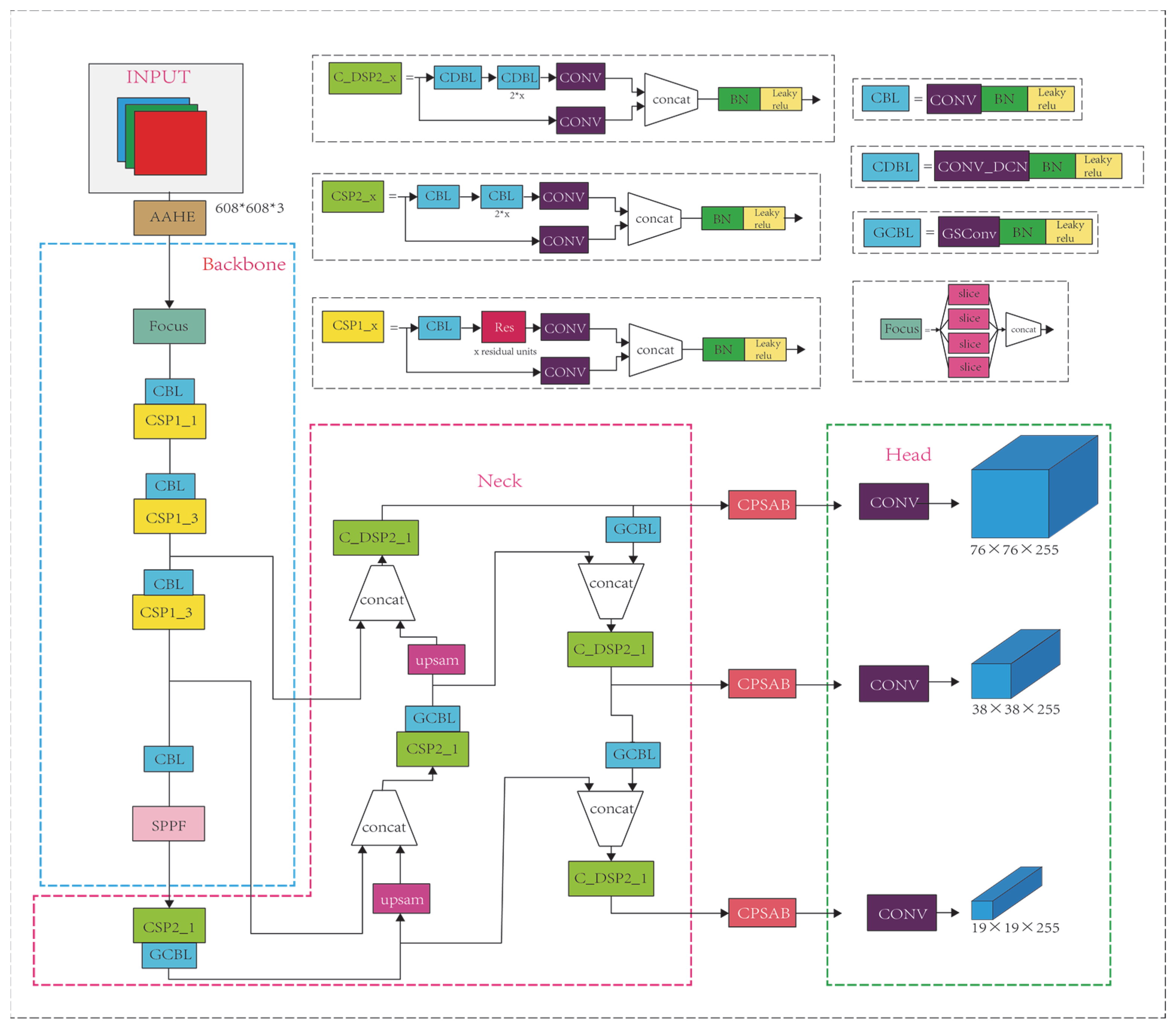

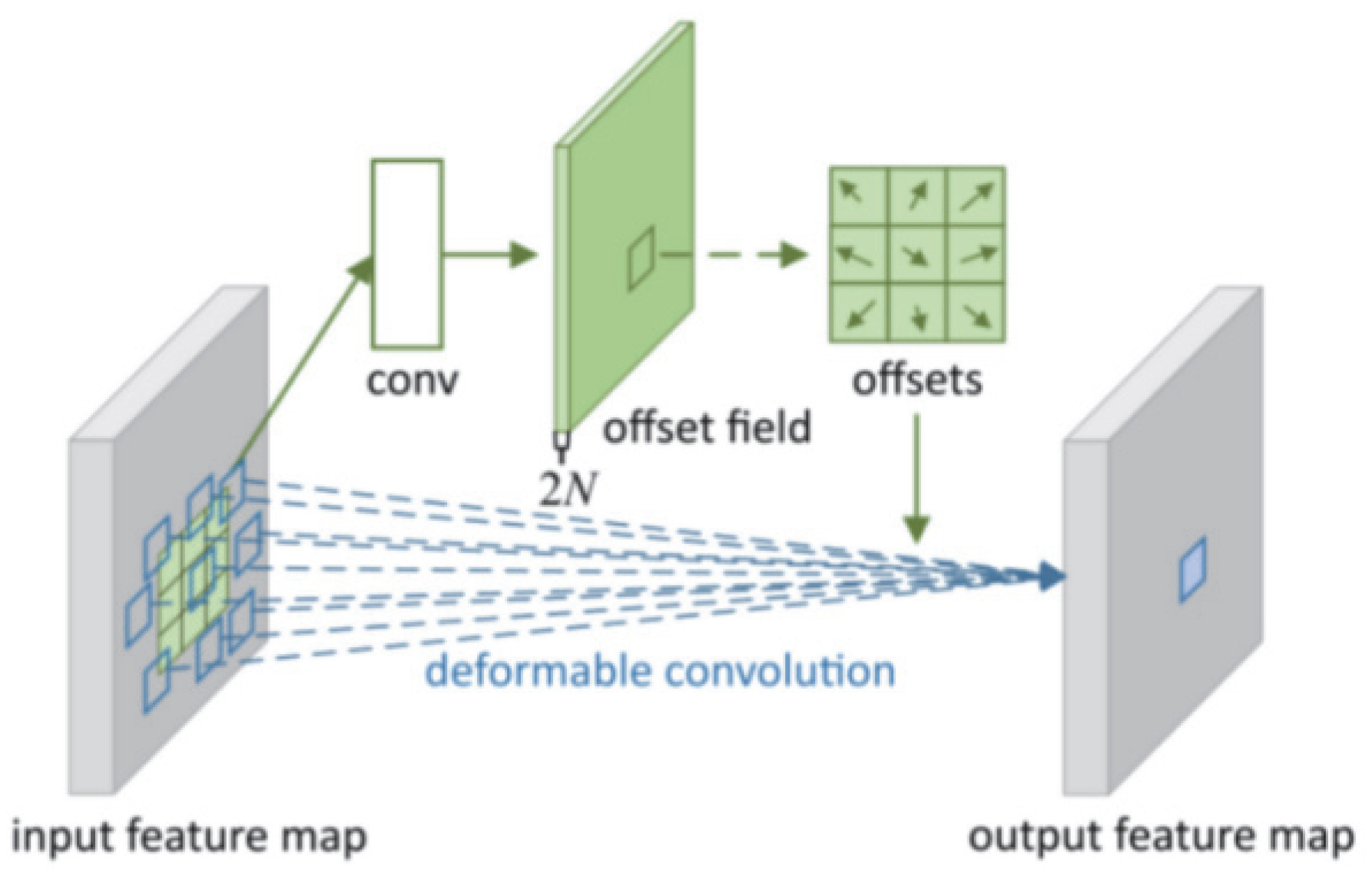

- Incorporating the Deformable Convolutional Network v2 (DCNv2) [13] module to give our model stronger spatial transformation capabilities and enable adaptive adjustment of the convolutional kernel’s scale. This can address the variability in vehicle aspect ratios and reduce the impact of varying UAV shooting angles.

- Proposing a convolutional polarized self-attention block (CPSAB). Unlike the original polarized self-attention (PSA) [14] module, CPSAB adds average and max pooling modules to the PSA so that information is better integrated, which enhances the model’s target detection capabilities in various complex backgrounds.

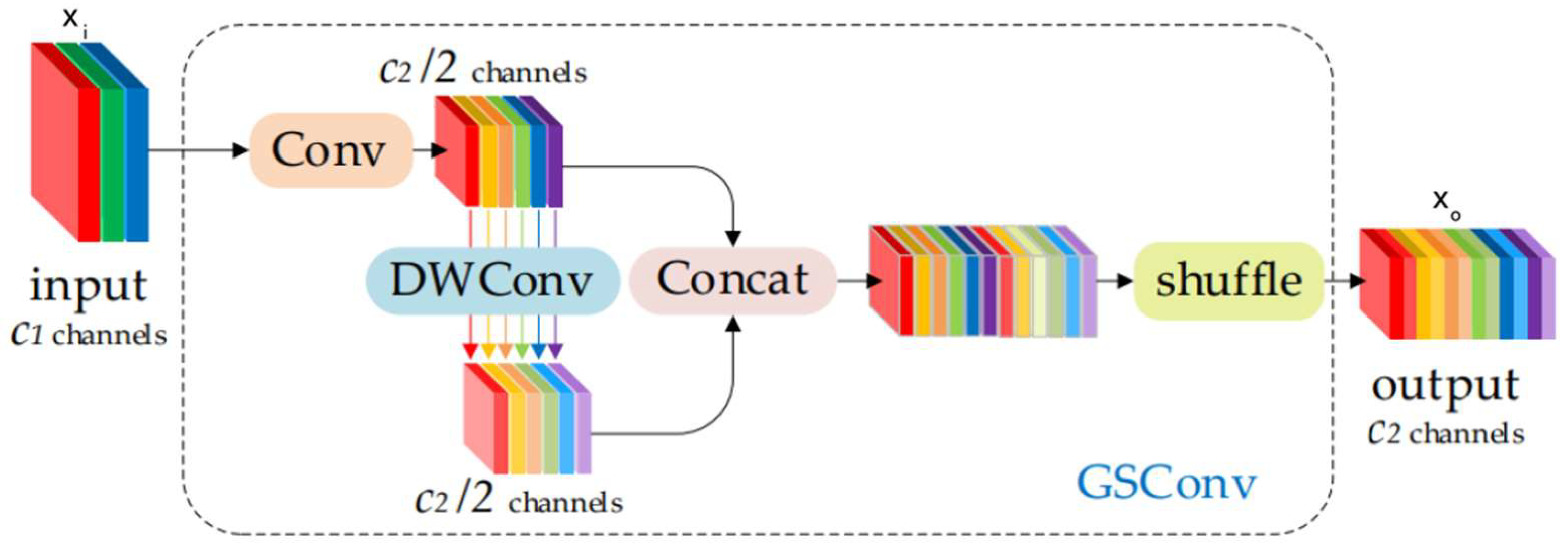

- Utilizing the lightweight GSConv [15] convolution, which combines standard convolution (SC) and depth-wise separable convolution (DWConv), to reduce model parameters while preserving accuracy, facilitating the practical deployment of the model in subsequent stages.
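
The parameter saving behind the last contribution can be illustrated with a rough weight count. The sketch below assumes a GSConv-style block in which a dense convolution produces half of the output channels and a depth-wise convolution (assumed here to use a 5 × 5 kernel) produces the other half from them; the function names and the channel sizes are hypothetical and chosen only for illustration.

```python
def conv_params(k: int, c_in: int, c_out: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution without bias."""
    return k * k * (c_in // groups) * c_out


def gsconv_params(k: int, c_in: int, c_out: int, dw_kernel: int = 5) -> int:
    """Weight count of a GSConv-style block (assumed layout, see lead-in).

    A dense convolution maps c_in -> c_out / 2 channels, then a depth-wise
    convolution runs over those c_out / 2 channels. The two halves are
    concatenated and shuffled, which adds no parameters.
    """
    half = c_out // 2
    dense = conv_params(k, c_in, half)
    depthwise = conv_params(dw_kernel, half, half, groups=half)
    return dense + depthwise


if __name__ == "__main__":
    sc = conv_params(3, 64, 128)    # standard 3 x 3 convolution
    gs = gsconv_params(3, 64, 128)  # GSConv-style replacement
    print(sc, gs, round(gs / sc, 3))
```

Under these assumptions the GSConv-style block needs roughly half the weights of the standard convolution it replaces, because the depth-wise branch is very cheap compared with the dense branch.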

2. Related Works

2.1. Anchor-Free Rotation-Based Detection Methods

2.2. One-Stage Rotation-Based Detection Methods

2.3. Two-Stage Rotation-Based Detection Methods

3. Materials and Methods

3.1. RYOLOv5_D Model

3.1.1. Model Framework

3.1.2. Loss Function

- Angle Loss:

- Bounding Box Loss: Complete IoU (CIoU) is used to calculate the bounding box loss. It accounts for the overlap area, center point distance, and aspect ratio simultaneously, which improves training stability and convergence speed.

- Confidence Loss:

- Classification Loss: Since a target may belong to multiple categories, a binary cross-entropy loss is applied to each category independently.
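
As an illustration of the three CIoU terms named above, the following is a minimal sketch for a single pair of axis-aligned boxes in `(x1, y1, x2, y2)` format. It follows the commonly used CIoU definition; the function name, the box format, and the `eps` stabilizer are assumptions made for this example and are not taken from the RYOLOv5_D implementation.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Overlap term: intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Distance term: squared center distance divided by the squared
    # diagonal of the smallest box enclosing both boxes.
    center_dist2 = (((px1 + px2) - (tx1 + tx2)) ** 2
                    + ((py1 + py2) - (ty1 + ty2)) ** 2) / 4
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    diag2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio term and its trade-off weight.
    v = (4 / math.pi ** 2) * (
        math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))
    ) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - iou + center_dist2 / diag2 + alpha * v


if __name__ == "__main__":
    print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
    print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes
```

For disjoint boxes the IoU term alone gives no gradient signal about how far apart the boxes are; the distance term keeps the loss informative in that case.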

3.2. Data Pre-Processing

3.3. Data Augmentation for Infrared Images

| Algorithm 1. Implementation steps of the AAHE algorithm. | |

| 1: | Input: Original images {(img1), (img2), …, (imgN)}, number of gray levels M, R = Q = ([0., 0., …]) of size M × 1 |

| 2: | Output: Enhanced images {(img1′), (img2′), (img3′), …, (imgN′)} |

| 3: | Begin: |

| 4: | For img(x), x in [1, N] do |

| 5: | Im_h, Im_w ← img(x).shape[0], img(x).shape[1] |

| 6: | For j in [1, Im_h] do |

| 7: | For k in [1, Im_w] do |

| 8: | R[img(j,k)] ← R[img(j,k)] + 1, End for, End for |

| 9: | N ← R[R>0], M ← N.shape, Max_sum = Min_sum = Max = Min ← 0 |

| 10: | For k in [0, M−3] do |

| 11: | Obtain local maxima and count them: If (N[k+1] > N[k+2]) & (N[k+1] > N[k]) then |

| 12: | Max_sum ← Max_sum + N[k+1], Max ← Max + 1 |

| 13: | Obtain local minima and count them: If (N[k+1] < N[k+2]) & (N[k+1] < N[k]) then |

| 14: | Min_sum ← Min_sum + N[k+1], Min ← Min + 1 |

| 15: | End for, up_boundary ← Max_sum/Max, down_boundary ← Min_sum/Min |

| 16: | For m in [1, M] do |

| 17: | If R[m] > up_boundary then Q[m] ← Q[m] + up_boundary |

| 18: | else if down_boundary < R[m] < up_boundary then Q[m] ← Q[m] + R[m] |

| 19: | else if R[m] < down_boundary then Q[m] ← Q[m] + down_boundary, End for |

| 20: | For i in [1, Im_h] do |

| 21: | For j in [1, Im_w] do |

| 22: | Obtain a new histogram of the gray-scale distribution: Q[i, j] ← 255 * R[img(i,j)]/Q[m], End for, End for |

| 23: | End for |
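
One possible reading of Algorithm 1 for a single 8-bit grayscale image is sketched below with NumPy. The boundary computation follows steps 9 to 19: the mean of the local maxima of the non-empty histogram bins is the upper boundary and the mean of the local minima is the lower boundary. The final gray-level mapping is an assumption: step 22 is ambiguous as printed, so this sketch maps each level through the cumulative sum of the clipped histogram, as in plateau-style histogram equalization. The function name `aahe` and the fallbacks for histograms without local extrema are hypothetical.

```python
import numpy as np


def aahe(img: np.ndarray, levels: int = 256) -> np.ndarray:
    """Histogram equalization with data-driven upper/lower clipping bounds.

    `img` is expected to be a 2-D uint8 array. This is a sketch of one
    interpretation of Algorithm 1, not the authors' implementation.
    """
    hist = np.bincount(img.ravel(), minlength=levels).astype(np.float64)
    nonzero = hist[hist > 0]            # N <- R[R > 0]
    if nonzero.size < 3:                # too few bins to have local extrema
        return img.copy()

    # Compare each interior bin with both neighbors (steps 10 to 14).
    mid, left, right = nonzero[1:-1], nonzero[:-2], nonzero[2:]
    maxima = mid[(mid > left) & (mid > right)]
    minima = mid[(mid < left) & (mid < right)]

    # Boundaries are the mean local maximum / minimum (step 15).
    # Assumed fallback: use the global extreme when no local one exists.
    upper = maxima.mean() if maxima.size else nonzero.max()
    lower = minima.mean() if minima.size else nonzero.min()
    lower = min(lower, upper)

    # Clip occupied bins to [lower, upper]; empty bins stay empty.
    clipped = np.where(hist > 0, np.clip(hist, lower, upper), 0.0)

    # Assumed mapping: cumulative clipped histogram scaled to [0, levels - 1].
    cdf = np.cumsum(clipped)
    lut = np.round((levels - 1) * cdf / cdf[-1]).astype(np.uint8)
    return lut[img]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    low_contrast = rng.integers(80, 120, size=(32, 32), dtype=np.uint8)
    enhanced = aahe(low_contrast)
    print(low_contrast.min(), low_contrast.max(), enhanced.min(), enhanced.max())
```

Clipping from above prevents large, uniform background regions from dominating the mapping, while clipping from below keeps sparsely populated gray levels, which often belong to small targets, from being merged away.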

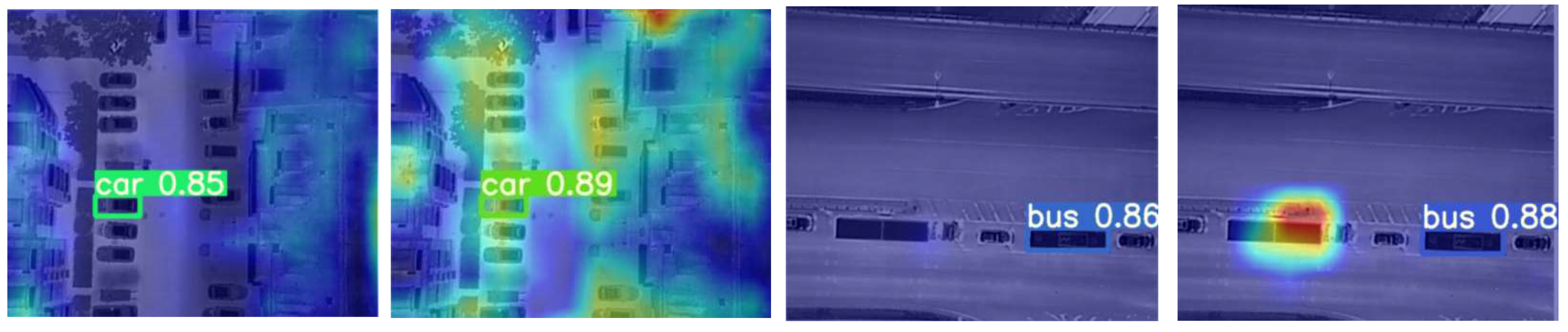

3.4. Deformable Convolution Network (DCN)

3.5. Convolutional Polarized Self-Attention Block (CPSAB)

3.6. Lightweight Module (GSConv)

4. Vehicle Object Detection Evaluation

4.1. Model Size Evaluation Metrics

4.2. Model Size Evaluation Metrics

4.3. Model Real-Time Performance Metrics

5. Experiment

5.1. Experimental Configuration

5.2. Related Dataset

5.2.1. DroneVehicle Dataset

5.2.2. VEDAI Dataset

5.2.3. BalancedVehicle Self-Built Dataset

5.3. Ablation Experiments

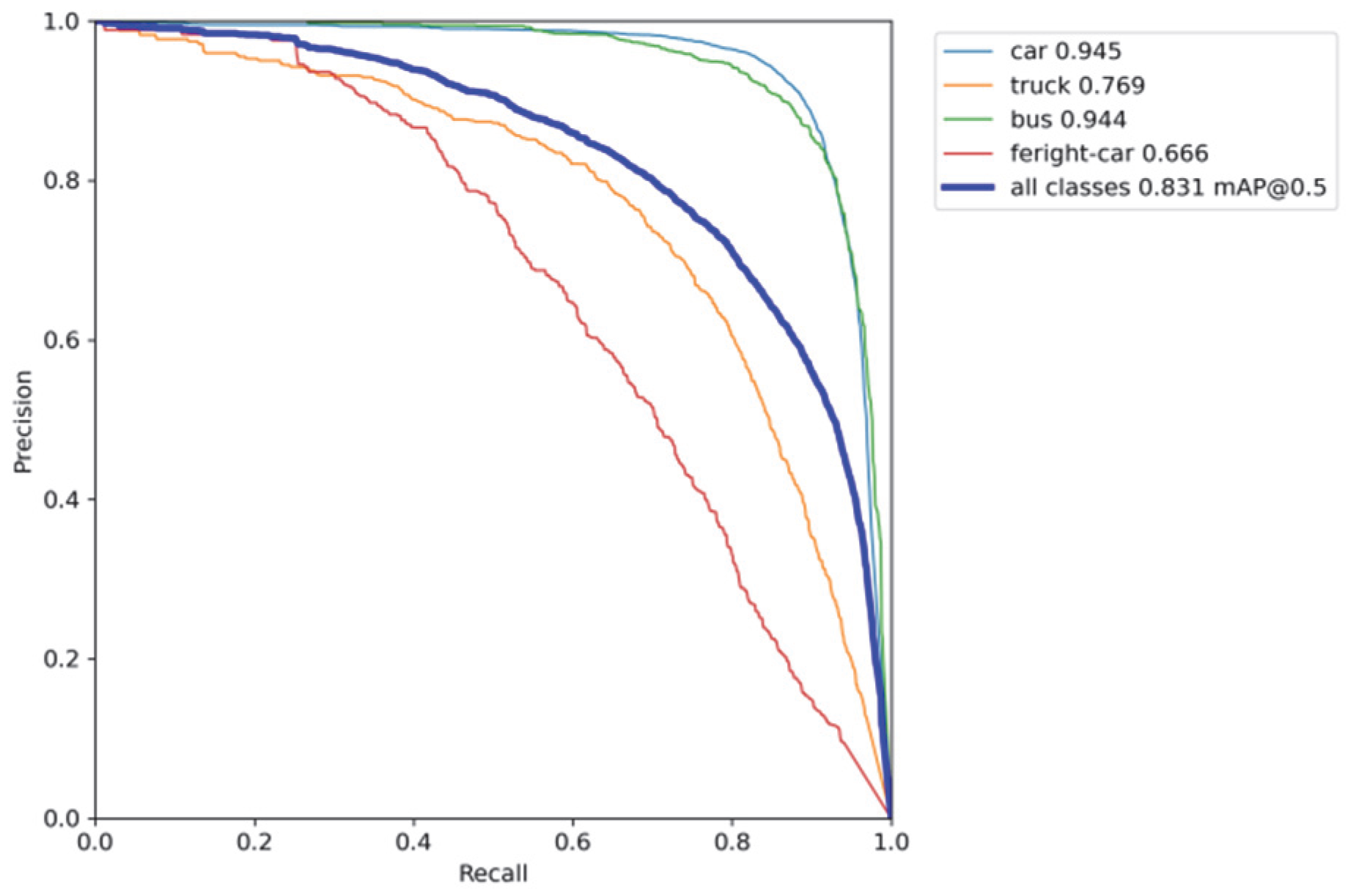

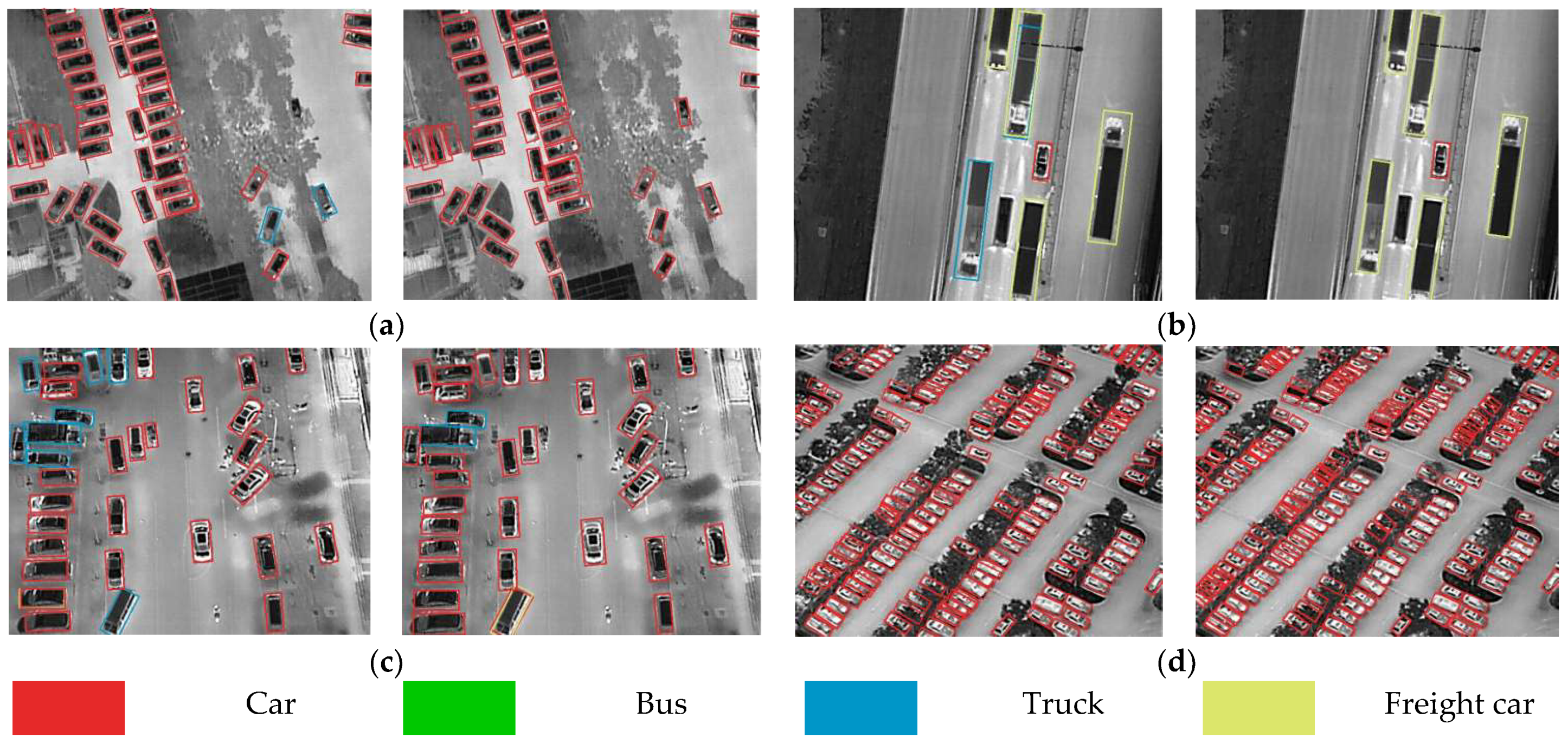

5.4. Comparison of Detection Performance on the BalancedVehicle Dataset

5.5. Experimental Comparison of Different Models on the BalancedVehicle Dataset

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Vollmer, M. Infrared. Eur. J. Phys. 2013, 34, S49.

- Ajakwe, S.O.; Ihekoronye, V.U.; Akter, R.; Kim, D.-S.; Lee, J.M. Adaptive Drone Identification and Neutralization Scheme for Real-Time Military Tactical Operations. In Proceedings of the 2022 International Conference on Information Networking (ICOIN), Jeju-si, Republic of Korea, 12–15 January 2022; pp. 380–384.

- Mo, N.; Yan, L. Improved Faster RCNN Based on Feature Amplification and Oversampling Data Augmentation for Oriented Vehicle Detection in Aerial Images. Remote Sens. 2020, 12, 2558.

- Wang, B.; Gu, Y. An Improved FBPN-Based Detection Network for Vehicles in Aerial Images. Sensors 2020, 20, 4709.

- Deng, Z.; Sun, H.; Zhou, S.; Zhao, J.; Zou, H. Toward Fast and Accurate Vehicle Detection in Aerial Images Using Coupled Region-Based Convolutional Neural Networks. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2017, 10, 3652–3664.

- Wang, H.; Wang, Q.; Yang, F.; Zhang, W.; Zuo, W. Data Augmentation for Object Detection via Progressive and Selective Instance-Switching. arXiv 2019, arXiv:1906.00358.

- Zhong, J.; Lei, T.; Yao, G. Robust Vehicle Detection in Aerial Images Based on Cascaded Convolutional Neural Networks. Sensors 2017, 17, 2720.

- Shen, J.; Liu, N.; Sun, H.; Tao, X.; Li, Q. Vehicle Detection in Aerial Images Based on Hyper Feature Map in Deep Convolutional Network. KSII Trans. Internet Inf. Syst. (TIIS) 2019, 13, 1989–2011.

- Musunuri, Y.R.; Kwon, O.-S.; Kung, S.-Y. SRODNet: Object Detection Network Based on Super Resolution for Autonomous Vehicles. Remote Sens. 2022, 14, 6270.

- Li, J.; Zhang, Z.; Tian, Y.; Xu, Y.; Wen, Y.; Wang, S. Target-Guided Feature Super-Resolution for Vehicle Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Mostofa, M.; Ferdous, S.N.; Riggan, B.S.; Nasrabadi, N.M. Joint-SRVDNet: Joint Super Resolution and Vehicle Detection Network. IEEE Access 2020, 8, 82306–82319.

- Wan, M.; Gu, G.; Qian, W.; Ren, K.; Chen, Q.; Maldague, X. Infrared Image Enhancement Using Adaptive Histogram Partition and Brightness Correction. Remote Sens. 2018, 10, 682.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable Convnets v2: More Deformable, Better Results. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9308–9316.

- Liu, H.; Liu, F.; Fan, X.; Huang, D. Polarized Self-Attention: Towards High-Quality Pixel-Wise Regression. arXiv 2021, arXiv:2107.00782.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-Neck by GSConv: A Better Design Paradigm of Detector Architectures for Autonomous Vehicles. arXiv 2022, arXiv:2206.02424.

- Mateus, B.C.; Mendes, M.; Farinha, J.T.; Cardoso, A.J.M.; Assis, R.; da Costa, L.M. Forecasting Steel Production in the World—Assessments Based on Shallow and Deep Neural Networks. Appl. Sci. 2022, 13, 178.

- Wei, H.; Zhang, Y.; Chang, Z.; Li, H.; Wang, H.; Sun, X. Oriented Objects as Pairs of Middle Lines. ISPRS J. Photogramm. Remote Sens. 2020, 169, 268–279.

- Lin, Y.; Feng, P.; Guan, J.; Wang, W.; Chambers, J. IENet: Interacting Embranchment One Stage Anchor Free Detector for Orientation Aerial Object Detection. arXiv 2019, arXiv:1912.00969.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An Empirical Study of Designing Real-Time Object Detectors. arXiv 2022, arXiv:2212.07784.

- Yang, X.; Yan, J.; Feng, Z.; He, T. R3det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 3163–3171.

- Han, J.; Ding, J.; Li, J.; Xia, G.-S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.

- Liu, Z.; Hu, J.; Weng, L.; Yang, Y. Rotated Region Based CNN for Ship Detection. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 900–904.

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv 2017, arXiv:1706.09579.

- Nabati, R.; Qi, H. Rrpn: Radar Region Proposal Network for Object Detection in Autonomous Vehicles. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3093–3097.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.-S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

- Li, X.; Cai, Z.; Zhao, X. Oriented-YOLOv5: A Real-Time Oriented Detector Based on YOLOv5. In Proceedings of the 2022 7th International Conference on Computer and Communication Systems (ICCCS), Wuhan, China, 22–25 April 2022; pp. 216–222.

- Zhao, X.; Xia, Y.; Zhang, W.; Zheng, C.; Zhang, Z. YOLO-ViT-Based Method for Unmanned Aerial Vehicle Infrared Vehicle Target Detection. Remote Sens. 2023, 15, 3778.

- Mehta, S.; Rastegari, M. Mobilevit: Light-Weight, General-Purpose and Mobile-Friendly Vision Transformer. arXiv 2021, arXiv:2110.02178.

- Bao, C.; Cao, J.; Hao, Q.; Cheng, Y.; Ning, Y.; Zhao, T. Dual-YOLO Architecture from Infrared and Visible Images for Object Detection. Sensors 2023, 23, 2934.

- Yang, X.; Yan, J. Arbitrary-Oriented Object Detection with Circular Smooth Label; Springer: Berlin/Heidelberg, Germany, 2020; pp. 677–694.

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. Drone-Based RGB-Infrared Cross-Modality Vehicle Detection via Uncertainty-Aware Learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6700–6713.

- Razakarivony, S.; Jurie, F. Vehicle Detection in Aerial Imagery: A Small Target Detection Benchmark. J. Visual Commun. Image Represent. 2016, 34, 187–203.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

- Lee, S.; Lee, S.; Song, B.C. Cfa: Coupled-Hypersphere-Based Feature Adaptation for Target-Oriented Anomaly Localization. IEEE Access 2022, 10, 78446–78454.

- Li, Z.; Hou, B.; Wu, Z.; Ren, B.; Yang, C. FCOSR: A Simple Anchor-Free Rotated Detector for Aerial Object Detection. Remote Sens. 2023, 15, 5499.

- Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented Reppoints for Aerial Object Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1829–1838.

- Han, J.; Ding, J.; Xue, N.; Xia, G.-S. Redet: A Rotation-Equivariant Detector for Aerial Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2786–2795.

| Images | Entropy (EN) | Standard Deviation (SD) | Spatial Frequency (SF) |

|---|---|---|---|

| Before | 7.62 | 54.45 | 16.11 |

| After | 7.93 | 69.69 | 20.65 |
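
The three image-quality measures in the table above can be computed as in the following NumPy sketch. It uses the commonly cited definitions (Shannon entropy of the gray-level histogram, standard deviation of pixel intensities, and spatial frequency from row and column differences); these definitions and the function names are assumptions for illustration, since the exact formulas used for the reported values are not given here.

```python
import numpy as np


def entropy(img: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of the gray-level histogram of a uint8 image."""
    hist = np.bincount(img.ravel(), minlength=levels).astype(np.float64)
    p = hist[hist > 0] / img.size
    return float(-(p * np.log2(p)).sum())


def standard_deviation(img: np.ndarray) -> float:
    """Standard deviation of pixel intensities (a contrast measure)."""
    return float(img.astype(np.float64).std())


def spatial_frequency(img: np.ndarray) -> float:
    """sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    x = img.astype(np.float64)
    rf2 = (np.diff(x, axis=1) ** 2).sum() / x.size  # row frequency squared
    cf2 = (np.diff(x, axis=0) ** 2).sum() / x.size  # column frequency squared
    return float(np.sqrt(rf2 + cf2))


if __name__ == "__main__":
    half = np.zeros((8, 8), dtype=np.uint8)
    half[:, 4:] = 255  # left half black, right half white
    print(entropy(half), standard_deviation(half), spatial_frequency(half))
```

Higher values of all three measures generally indicate more gray-level variety, stronger contrast, and more fine detail, which is the direction of change reported between the "Before" and "After" rows.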

| Category | Item | Configuration |

|---|---|---|

| Hardware | CPU | 12th Gen Intel Core i7-12700 |

| Hardware | Memory | 32 GB |

| Hardware | GPU | NVIDIA 3080Ti |

| Hardware | Graphics memory | 16 GB |

| Software | System | Win 10 |

| Software | Deep learning framework | PyTorch 1.10, Python 3.8, PyCharm 2022, C++ (2019), MMRotate 0.3.4 |

| Dataset | BalancedVehicle | Train set: 2132 images |

| Dataset | BalancedVehicle | Val set: 850 images |

| Dataset | BalancedVehicle | Test set: 288 images |

| Training Parameters | Parameter Values |

|---|---|

| Epoch | 300 |

| Batch size | 8 |

| IoU threshold | 0.4 |

| NMS (non-maximum suppression) | 0.5 |

| Learning rate | 0.0025 |

| Data augmentation method | Mosaic |

| Optimizer | SGD |

| Category | Infrared Image Vehicle Count |

|---|---|

| Car | 30,374 |

| Truck | 5584 |

| Bus | 3998 |

| Freight car | 2946 |

| All | 42,902 |
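
The per-class shares implied by the counts above can be checked with a few lines of arithmetic. The snippet below only restates the table; the variable names are hypothetical.

```python
# Instance counts per category, copied from the table above.
counts = {"car": 30374, "truck": 5584, "bus": 3998, "freight car": 2946}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}

# Ratio between the most and least frequent category.
imbalance_ratio = max(counts.values()) / min(counts.values())

if __name__ == "__main__":
    print(total)
    for name, share in shares.items():
        print(f"{name}: {share:.1%}")
    print(f"max/min ratio: {imbalance_ratio:.1f}")
```

The category counts sum to the "All" row of the table, and the share of each category can be read directly from `shares`.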

| Methods | YOLOv5s-obb | YOLOv5s_Im1 | YOLOv5s_Im2 | YOLOv5s_Im3 | RYOLOv5s_D |

|---|---|---|---|---|---|

| AAHE | - | √ | √ | √ | √ |

| CPSAB | - | - | √ | √ | √ |

| DCNv2 | - | - | - | √ | √ |

| GSConv | - | - | - | - | √ |

| Car AP (%) | 93.5 | 93.0 | 93.3 | 93.9 | 94.4 |

| Truck AP (%) | 58.4 | 61.8 | 61.5 | 67.5 | 69.8 |

| Bus AP (%) | 91.5 | 89.8 | 90.8 | 91.3 | 91.8 |

| Freight car AP (%) | 50.8 | 53.2 | 55.7 | 55.1 | 58.0 |

| mAP (%) | 73.6 | 74.4 | 75.3 | 76.9 | 78.5 |

| mAP0.5:0.95 (%) | 48.8 | 49.4 | 50.4 | 52.2 | 54.3 |

| Param (M) | 7.64 | 7.64 | 8.21 | 9.16 | 7.87 |
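
The mAP row can be related to the per-class AP rows with a short check. The sketch below assumes that mAP is the unweighted mean of the four class APs, and uses the RYOLOv5s_D column of the table above; the variable names are hypothetical.

```python
# Per-class AP (%) for RYOLOv5s_D, copied from the ablation table.
ap = {"car": 94.4, "truck": 69.8, "bus": 91.8, "freight car": 58.0}

# Assumption: mAP is the unweighted mean over classes.
mean_ap = sum(ap.values()) / len(ap)

if __name__ == "__main__":
    print(round(mean_ap, 1))
```

Because the mean is unweighted, a gain on a rare class such as freight car moves mAP as much as an equal gain on the dominant car class.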

| Methods | Backbone | Param (M) | GFLOPs | FPS | mAP (%) | F1 |

|---|---|---|---|---|---|---|

| RYOLOv5s_D | CSPDarknet-S | 7.87 | 16.8 | 59.52 | 78.5 | 0.759 |

| RYOLOv5m_D | CSPDarknet-M | 22.48 | 46.1 | 44.64 | 82.6 | 0.806 |

| RYOLOv5l_D | CSPDarknet-L | 48.70 | 98.0 | 29.85 | 84.3 | 0.822 |
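
The FPS column can be converted into an average per-image latency, which is often easier to compare against a real-time budget. The conversion below assumes FPS is the reciprocal of the mean time per image; the variable names are hypothetical.

```python
# Frames per second, copied from the table above.
fps = {"RYOLOv5s_D": 59.52, "RYOLOv5m_D": 44.64, "RYOLOv5l_D": 29.85}

# Assumption: latency in milliseconds is 1000 / FPS.
latency_ms = {name: 1000.0 / value for name, value in fps.items()}

if __name__ == "__main__":
    for name, ms in latency_ms.items():
        print(f"{name}: {ms:.1f} ms per image")
```

Read this way, the three model sizes trade accuracy against latency: each step up in backbone size raises mAP and F1 in the table while increasing the time spent per image.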

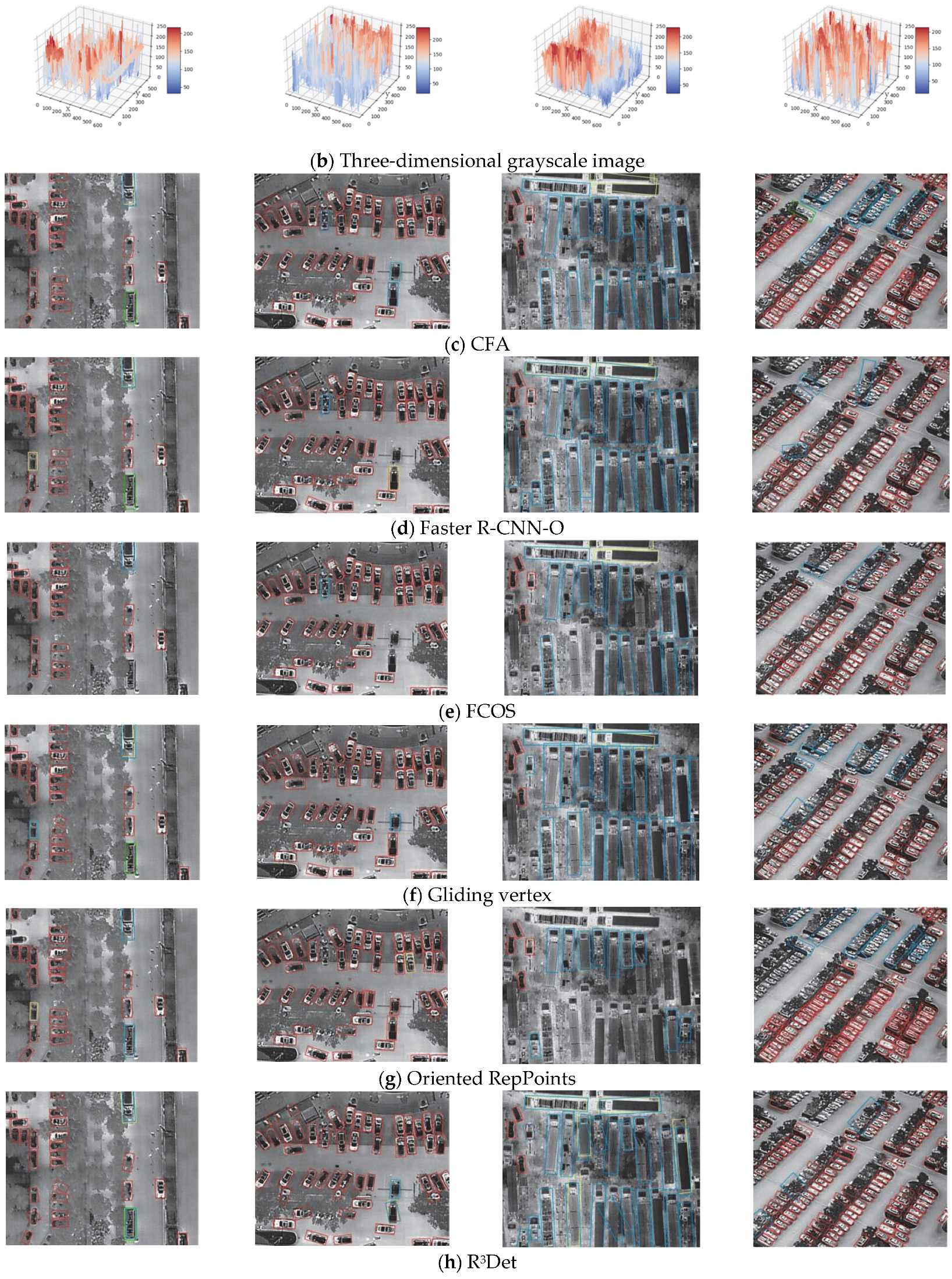

| Methods | Backbone | Car | Bus | Truck | Freight Car | Param (M) | mAP (%) | GFLOPS |

|---|---|---|---|---|---|---|---|---|

| Anchor-free method | | | | | | | | |

| CFA [34] | R101-FPN | 0.890 | 0.864 | 0.604 | 0.614 | 55.60 | 74.3 | 165.2 |

| Rotated-FCOS [35] | R101-FPN | 0.880 | 0.859 | 0.656 | 0.603 | 50.89 | 75.0 | 172.5 |

| Rotated-FCOS+ [35] | R152-FPN | 0.888 | 0.870 | 0.683 | 0.606 | 66.53 | 76.2 | 219.8 |

| Oriented-RepPoints [36] | R101-FPN | 0.854 | 0.501 | 0.340 | 0.315 | 55.60 | 50.2 | 165.2 |

| RTMDet-S [19] | CSPNext-S | 0.899 | 0.900 | 0.810 | 0.701 | 8.86 | 82.7 | 22.1 |

| RTMDet-L [19] | CSPNext-L | 0.904 | 0.902 | 0.832 | 0.729 | 52.26 | 84.4 | 122.9 |

| Anchor-based one-stage method | | | | | | | | |

| R3Det [20] | R101-FPN | 0.868 | 0.820 | 0.581 | 0.517 | 55.92 | 69.6 | 185.1 |

| R3Det+ [20] | R152-FPN | 0.884 | 0.834 | 0.614 | 0.540 | 71.57 | 71.8 | 232.4 |

| S2Anet [21] | R152-FPN | 0.888 | 0.872 | 0.754 | 0.573 | 73.19 | 77.2 | 213.8 |

| Anchor-based two-stage method | | | | | | | | |

| ReDet [37] | R50-FPN | 0.857 | 0.803 | 0.651 | 0.484 | 31.56 | 69.9 | 33.8 |

| ReDet+ [37] | R152-FPN | 0.862 | 0.802 | 0.652 | 0.493 | 35.64 | 70.2 | 34.1 |

| Gliding vertex | R101-FPN | 0.809 | 0.876 | 0.696 | 0.622 | 60.12 | 75.1 | 167.9 |

| RoI Transformer [25] | Swin Tiny-FPN | 0.885 | 0.865 | 0.722 | 0.595 | 58.67 | 76.7 | 123.8 |

| RoI Transformer+ [25] | R152-FPN | 0.812 | 0.872 | 0.788 | 0.646 | 74.04 | 78.0 | 169.0 |

| O-RCNN [23] | R101-FPN | 0.889 | 0.872 | 0.780 | 0.612 | 60.12 | 78.8 | 168.1 |

| O-RCNN+ [23] | R152-FPN | 0.892 | 0.882 | 0.789 | 0.620 | 75.76 | 79.6 | 215.3 |

| Faster R-CNN-O [3] | R101-FPN | 0.810 | 0.852 | 0.756 | 0.602 | 60.12 | 75.5 | 167.9 |

| Faster R-CNN-O+ [3] | R152-FPN | 0.890 | 0.890 | 0.762 | 0.631 | 75.76 | 79.3 | 215.3 |

| YOLOv5s-obb [26] | CSPDarknet53-S | 0.935 | 0.915 | 0.584 | 0.508 | 7.64 | 73.6 | 17.4 |

| RYOLOv5s_D+ (ours) | CSPDarknet53-S | 0.944 | 0.918 | 0.698 | 0.580 | 7.87 | 78.5 | 16.8 |

| YOLOv5m-obb [26] | CSPDarknet53-M | 0.947 | 0.937 | 0.721 | 0.641 | 21.61 | 81.1 | 50.6 |

| RYOLOv5m_D+ (ours) | CSPDarknet53-M | 0.944 | 0.954 | 0.757 | 0.647 | 22.48 | 82.6 | 46.1 |

| YOLOv5l-obb [26] | CSPDarknet53-L | 0.942 | 0.948 | 0.757 | 0.679 | 47.10 | 83.1 | 110.8 |

| RYOLOv5l_D+ (ours) | CSPDarknet53-L | 0.954 | 0.959 | 0.770 | 0.699 | 48.70 | 84.3 | 98.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, C.; Jiang, X.; Wu, F.; Fu, Y.; Zhang, Y.; Li, X.; Fu, T.; Pei, J. Research on Vehicle Detection in Infrared Aerial Images in Complex Urban and Road Backgrounds. Electronics 2024, 13, 319. https://doi.org/10.3390/electronics13020319
