Distributed Multi-Robot SLAM Algorithm with Lightweight Communication and Optimization

Abstract

1. Introduction

1. A 2.5D grid map of the keyframe-based submap is used for inter-robot communication. This reduces bandwidth while balancing communication cost against the robustness of map fusion.

2. Sliding window optimization is used to update the robots' poses, which keeps pose optimization efficient and improves the real-time performance of the algorithm.

3. The system's overall performance is evaluated extensively under different conditions in a real-world experiment.
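The bandwidth argument in the first contribution can be made concrete with a small sketch. The code below collapses a 3D keyframe submap into a sparse 2.5D grid that keeps one height value per occupied cell, then compares the packed size with the raw point cloud. This is a minimal illustration under stated assumptions, not the authors' encoding. The 0.2 m cell size, the max-height cell statistic, the 8-byte wire format (two int16 cell indices plus a float32 height), and the function names `to_height_grid`, `encode_grid` and `encode_points` are all hypothetical.

```python
import math
import struct
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int]


def to_height_grid(points: Iterable[Point], cell_size: float = 0.2) -> Dict[Cell, float]:
    """Collapse a 3D submap into a sparse 2.5D grid.

    Hypothetical cell statistic: each occupied (x, y) cell keeps only the
    maximum z observed in it.
    """
    grid: Dict[Cell, float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z > grid[cell]:
            grid[cell] = z
    return grid


def encode_grid(grid: Dict[Cell, float]) -> bytes:
    """Pack each cell as little-endian (int16 ix, int16 iy, float32 height).

    That is 8 bytes per cell. int16 indices limit the map to about
    +/-6.5 km at a 0.2 m cell size (an assumption of this sketch).
    """
    return b"".join(struct.pack("<hhf", ix, iy, h) for (ix, iy), h in sorted(grid.items()))


def encode_points(points: Iterable[Point]) -> bytes:
    """Baseline: raw points sent as three float32 values (12 bytes per point)."""
    return b"".join(struct.pack("<fff", *p) for p in points)


if __name__ == "__main__":
    # Synthetic submap: a 2 m x 2 m patch sampled every 5 cm at two heights.
    pts: List[Point] = [
        (i * 0.05, j * 0.05, h) for i in range(40) for j in range(40) for h in (0.0, 1.5)
    ]
    grid = to_height_grid(pts, cell_size=0.2)
    print(len(pts), len(grid), len(encode_points(pts)), len(encode_grid(grid)))
    # prints: 3200 100 38400 800
```

On this synthetic patch, 3200 points (38,400 bytes as float32 triples) shrink to 100 cells (800 bytes). Real savings depend on point density, the cell size, and which per-cell statistics the map fusion step needs. Keeping only the maximum height is the crudest option.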
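The second contribution bounds optimization cost by re-estimating only the most recent poses. The sketch below shows the idea on a deliberately simplified problem: 1D positions, linear relative-pose constraints, and a window of the newest poses solved by weighted least squares while older poses stay fixed. Every element is an assumption made for illustration: the `Edge` tuple, `optimize_window`, the window size, and the choice to freeze old poses instead of marginalizing them into a prior. It is not the paper's formulation.

```python
from typing import Dict, List, Tuple

# Hypothetical 1D relative constraint: (i, j, measured x_j - x_i, weight).
Edge = Tuple[int, int, float, float]


def solve(h: List[List[float]], g: List[float]) -> List[float]:
    """Solve h @ d = g by Gaussian elimination with partial pivoting."""
    n = len(g)
    a = [row[:] + [g[r]] for r, row in enumerate(h)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for k in range(c, n + 1):
                a[r][k] -= f * a[c][k]
    d = [0.0] * n
    for r in range(n - 1, -1, -1):
        d[r] = (a[r][n] - sum(a[r][k] * d[k] for k in range(r + 1, n))) / a[r][r]
    return d


def optimize_window(x: List[float], edges: List[Edge], window: int) -> List[float]:
    """Weighted least squares over the newest `window` poses only.

    Poses older than the window, and pose 0 (which fixes the gauge), are held
    constant. A full implementation would marginalize them into a prior instead.
    """
    free = list(range(max(1, len(x) - window), len(x)))
    col: Dict[int, int] = {k: c for c, k in enumerate(free)}
    m = len(free)
    h = [[0.0] * m for _ in range(m)]
    g = [0.0] * m
    for i, j, z, w in edges:
        coeffs = [(j, 1.0), (i, -1.0)]  # residual r = x_j - x_i - z
        # Terms on fixed poses move to the right-hand side of the normal equations.
        rhs = z - sum(s * x[k] for k, s in coeffs if k not in col)
        active = [(col[k], s) for k, s in coeffs if k in col]
        if not active:
            continue  # constraint lies entirely outside the window
        for a, sa in active:
            g[a] += w * sa * rhs
            for b, sb in active:
                h[a][b] += w * sa * sb
    sol = solve(h, g)
    out = x[:]
    for k, c in col.items():
        out[k] = sol[c]
    return out


if __name__ == "__main__":
    # Dead reckoning with a +0.1 bias per step; the true positions are 0, 1, ..., 9.
    odom = [(k, k + 1, 1.1, 1.0) for k in range(9)]
    loop = [(0, 9, 9.0, 10.0)]  # strong, hypothetical loop/inter-robot constraint
    x0 = [1.1 * k for k in range(10)]
    x1 = optimize_window(x0, odom + loop, window=5)
    print(round(x0[9], 3), round(x1[9], 3))  # prints: 9.9 9.018
```

Every constraint here is linear in the positions, so one solve of the normal equations gives the exact window optimum. With real SE(2)/SE(3) poses, the same structure would be iterated as Gauss-Newton steps. Freezing old poses keeps each solve at the window size, however long the trajectory grows, which is the efficiency argument for sliding-window optimization. The trade-off is that frozen poses are never corrected and their uncertainty is ignored; proper marginalization would retain that uncertainty as a prior.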

2. Related Work

3. Approach

3.1. Inter-Robot Place Recognition

3.2. Relative Pose Optimization

3.3. Efficient, Consistent Map

4. Experiments

4.1. Real Environment

4.1.1. Implementation Details

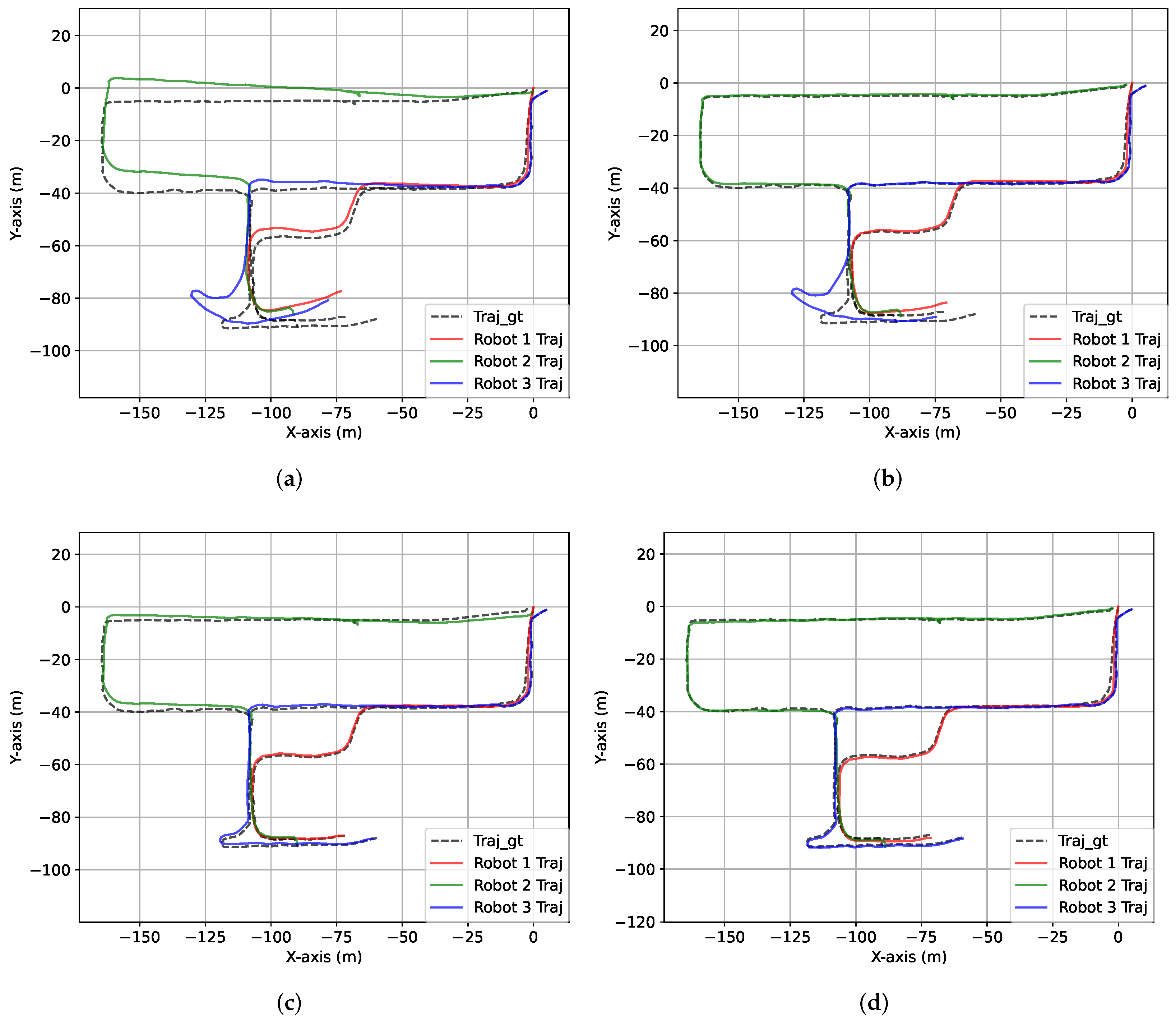

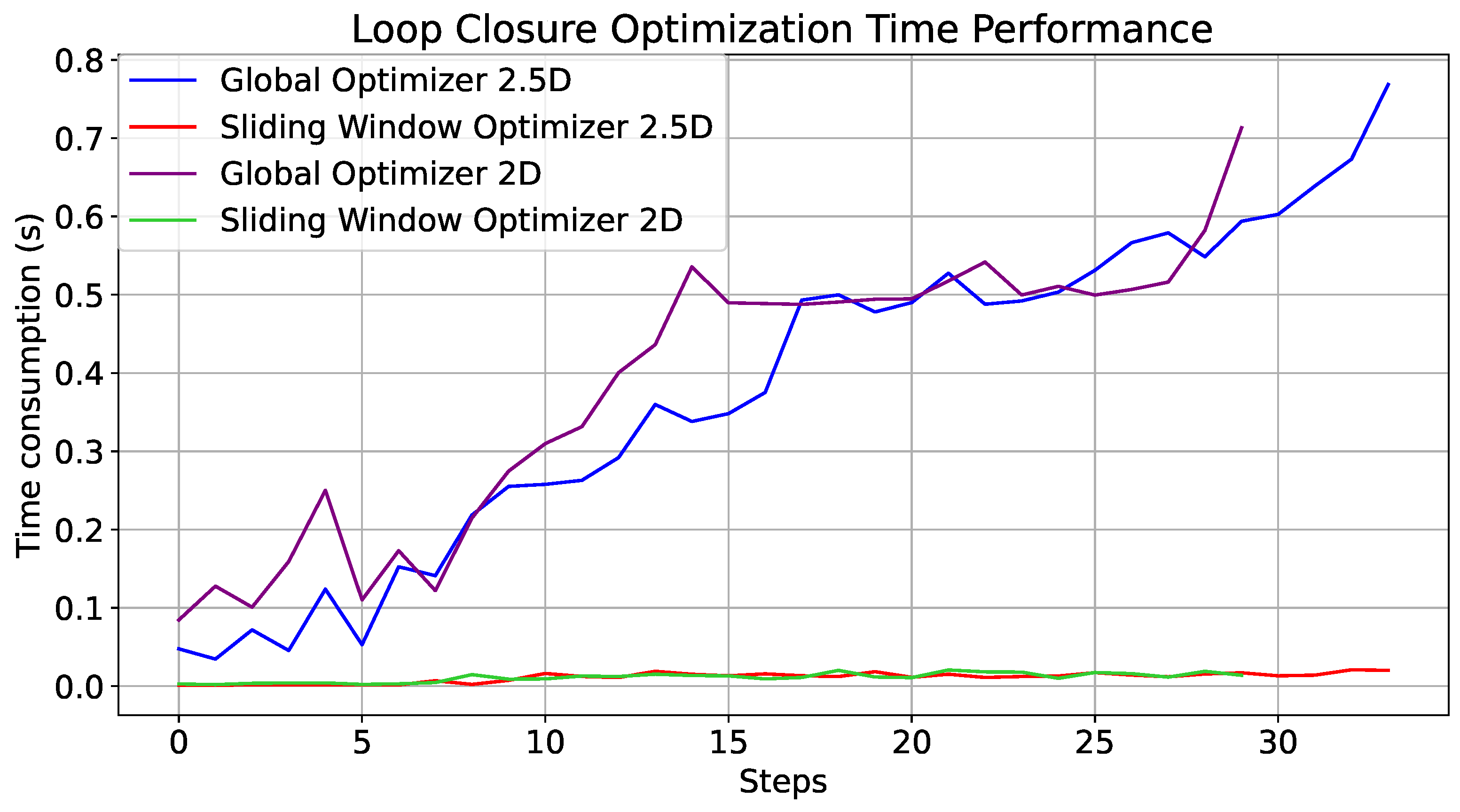

4.1.2. Results and Discussion

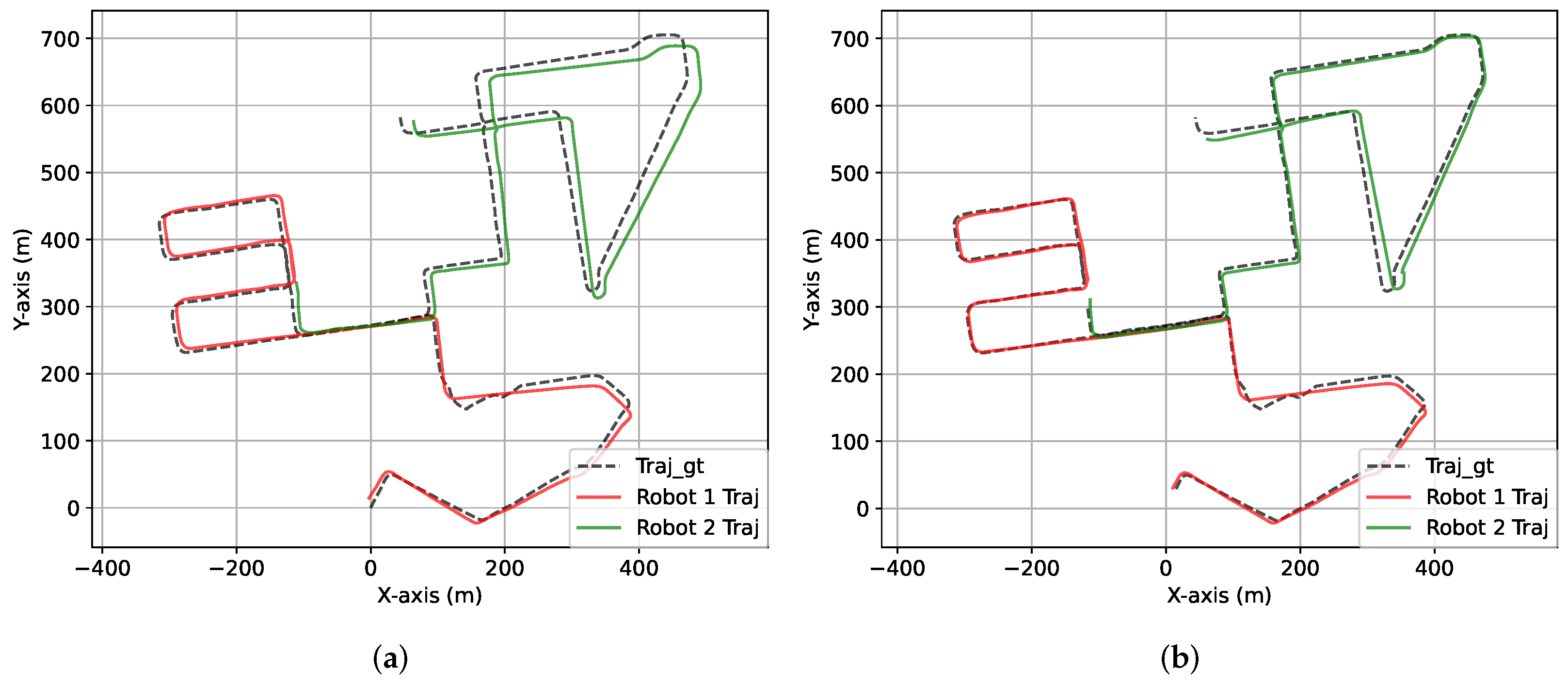

4.2. Public Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| SWO | 2.5D | Mapping error 1–2 [m] | Mapping error 3–4 [m] | Mapping error 5–6 [m] | Mapping error 7–8 [m] | Mapping error 9–10 [m] | Mapping error AE [m] | Robot1 localization error [m] | Robot2 localization error [m] | Robot3 localization error [m] | Localization error AE [m] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ✗ | ✗ | — | 0.34 | — | 0.28 | — | 0.31 | 2.78 | 5.65 | 6.08 | 4.84 |
| ✓ | ✗ | — | 0.16 | — | 0.14 | — | 0.15 | 1.02 | 0.99 | 4.54 | 2.18 |
| ✗ | ✓ | 0.14 | 0.12 | 0.15 | 0.16 | 0.07 | 0.13 | 0.88 | 1.86 | 0.92 | 1.22 |
| ✓ | ✓ | 0.14 | 0.10 | 0.04 | 0.13 | 0.01 | 0.08 | 0.62 | 0.64 | 0.44 | 0.57 |
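The AE columns of the ablation table behave like a plain arithmetic mean of the entries reported in each row, with missing entries (shown as a dash) skipped. Recomputing them that way reproduces every AE value to two decimals. The snippet below does this and also expresses each configuration's localization AE as a reduction relative to the row with neither SWO nor the 2.5D map. Reading AE as "average error" and the names `ROWS`, `average_error` and `reduction` are assumptions of this sketch.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# (SWO enabled, 2.5D map enabled) -> (mapping errors for columns 1-2 ... 9-10,
# localization errors for Robot1-Robot3), copied from the table.
# None marks an entry the table leaves empty.
ROWS: Dict[Tuple[bool, bool], Tuple[List[Optional[float]], List[float]]] = {
    (False, False): ([None, 0.34, None, 0.28, None], [2.78, 5.65, 6.08]),
    (True, False): ([None, 0.16, None, 0.14, None], [1.02, 0.99, 4.54]),
    (False, True): ([0.14, 0.12, 0.15, 0.16, 0.07], [0.88, 1.86, 0.92]),
    (True, True): ([0.14, 0.10, 0.04, 0.13, 0.01], [0.62, 0.64, 0.44]),
}


def average_error(values: Sequence[Optional[float]]) -> float:
    """Arithmetic mean over the entries that were actually reported."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present)


def reduction(baseline: float, value: float) -> float:
    """Relative error reduction in percent."""
    return 100.0 * (baseline - value) / baseline


if __name__ == "__main__":
    base_loc = average_error(ROWS[(False, False)][1])
    for (swo, grid), (mapping, loc) in ROWS.items():
        loc_ae = average_error(loc)
        print(f"SWO={swo!s:<5} 2.5D={grid!s:<5} "
              f"mapping AE={average_error(mapping):.2f} m  "
              f"localization AE={loc_ae:.2f} m  "
              f"(reduction {reduction(base_loc, loc_ae):.0f}%)")
```

With both components enabled, the localization AE falls from 4.84 m to 0.57 m, a reduction of roughly 88%. The mapping AE is less comparable across rows, because the first two rows report only two of the five mapping-error columns.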

| Robot / Trajectory | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | AE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Robot1 | 0.29 | 1.20 | 0.40 | 0.22 | 0.33 | 0.58 | 0.79 | 0.72 | 0.56 | 0.99 | 0.61 |
| Robot2 | 0.53 | 0.44 | 0.68 | 0.41 | 0.59 | 0.75 | 0.65 | 1.16 | 0.72 | 0.46 | 0.64 |
| Robot3 | 0.29 | 0.28 | 0.34 | 0.30 | 0.28 | 0.43 | 0.51 | 0.48 | 0.49 | 0.61 | 0.41 |
| AE | 0.37 | 0.64 | 0.47 | 0.31 | 0.40 | 0.59 | 0.65 | 0.78 | 0.59 | 0.69 | 0.55 |
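The AE row and AE column of the per-trajectory table can be cross-checked by recomputing them as arithmetic means of the listed values. The sketch below does so and returns the largest deviation from the published aggregates. Treating AE as a mean, and the names `ERRORS`, `REPORTED_*` and `max_deviation`, are illustrative assumptions.

```python
from statistics import mean
from typing import Dict, List

# Per-trajectory errors copied from the table (rows: robots, columns: trajectories 1-10).
ERRORS: Dict[str, List[float]] = {
    "Robot1": [0.29, 1.20, 0.40, 0.22, 0.33, 0.58, 0.79, 0.72, 0.56, 0.99],
    "Robot2": [0.53, 0.44, 0.68, 0.41, 0.59, 0.75, 0.65, 1.16, 0.72, 0.46],
    "Robot3": [0.29, 0.28, 0.34, 0.30, 0.28, 0.43, 0.51, 0.48, 0.49, 0.61],
}
REPORTED_ROBOT_AE = {"Robot1": 0.61, "Robot2": 0.64, "Robot3": 0.41}
REPORTED_TRAJ_AE = [0.37, 0.64, 0.47, 0.31, 0.40, 0.59, 0.65, 0.78, 0.59, 0.69]
REPORTED_OVERALL_AE = 0.55


def max_deviation() -> float:
    """Largest gap between a recomputed mean and the published AE value."""
    robot_ae = {r: mean(v) for r, v in ERRORS.items()}
    traj_ae = [mean(col) for col in zip(*ERRORS.values())]
    overall = mean(list(robot_ae.values()))
    gaps = [abs(robot_ae[r] - REPORTED_ROBOT_AE[r]) for r in ERRORS]
    gaps += [abs(a - b) for a, b in zip(traj_ae, REPORTED_TRAJ_AE)]
    gaps.append(abs(overall - REPORTED_OVERALL_AE))
    return max(gaps)


if __name__ == "__main__":
    print(f"largest deviation from published AE: {max_deviation():.3f}")
```

When recomputed from the two-decimal entries, every mean lies within 0.01 of the published aggregate. For example, Robot3 averages 0.401 against a reported 0.41. Deviations this small would be consistent with the aggregates having been computed from unrounded errors.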


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, J.; Ma, C.; Zou, D.; Jiao, S.; Chen, C.; Wang, J. Distributed Multi-Robot SLAM Algorithm with Lightweight Communication and Optimization. Electronics 2024, 13, 4129. https://doi.org/10.3390/electronics13204129
