A Study of Recommendation Methods Based on Graph Hybrid Neural Networks and Deep Crossing

Abstract

1. Introduction

- (1) Traditional recommendation methods focus mainly on modeling interactions between features and capture interactions within sequential information poorly. This limits the performance and effectiveness of the recommender system and leads to poor model performance on sequential data [20].

- (2) Traditional recommendation models learn user-item relationships mainly through feature interactions, usually simple ones. They cannot directly model implicit user-item relationships, such as a user’s interest preferences or the latent attributes of items. As a result, they cannot accurately predict a user’s preference for an item.

- (1) Models the higher-order relationships between users and items and uses a graph convolutional network (GCN) to extract higher-order feature representations of both. These features capture complex user-item interactions and thereby help improve the overall performance of the model.

- (2) Introduces a gated recurrent unit (GRU) module to mine complex sequential information and the intrinsic associations between features. This lets the model capture how user interest preferences change over time, which improves its understanding of user behavior and its matching of items.

- (3) Feeds the higher-order features extracted by the GCN into a Deep Crossing model for multi-level feature interaction and deep learning. This mechanism strengthens the interaction between features and improves the expressive power of the model. It also helps ensure the accuracy and diversity of the recommendation results and avoid local optima.

2. Related Work

2.1. Recommendation Methods Based on Deep Crossing

2.2. Recommendation Methods Based on GCN

3. Recommendation Method Based on Hybrid Neural Network with Deep Crossing

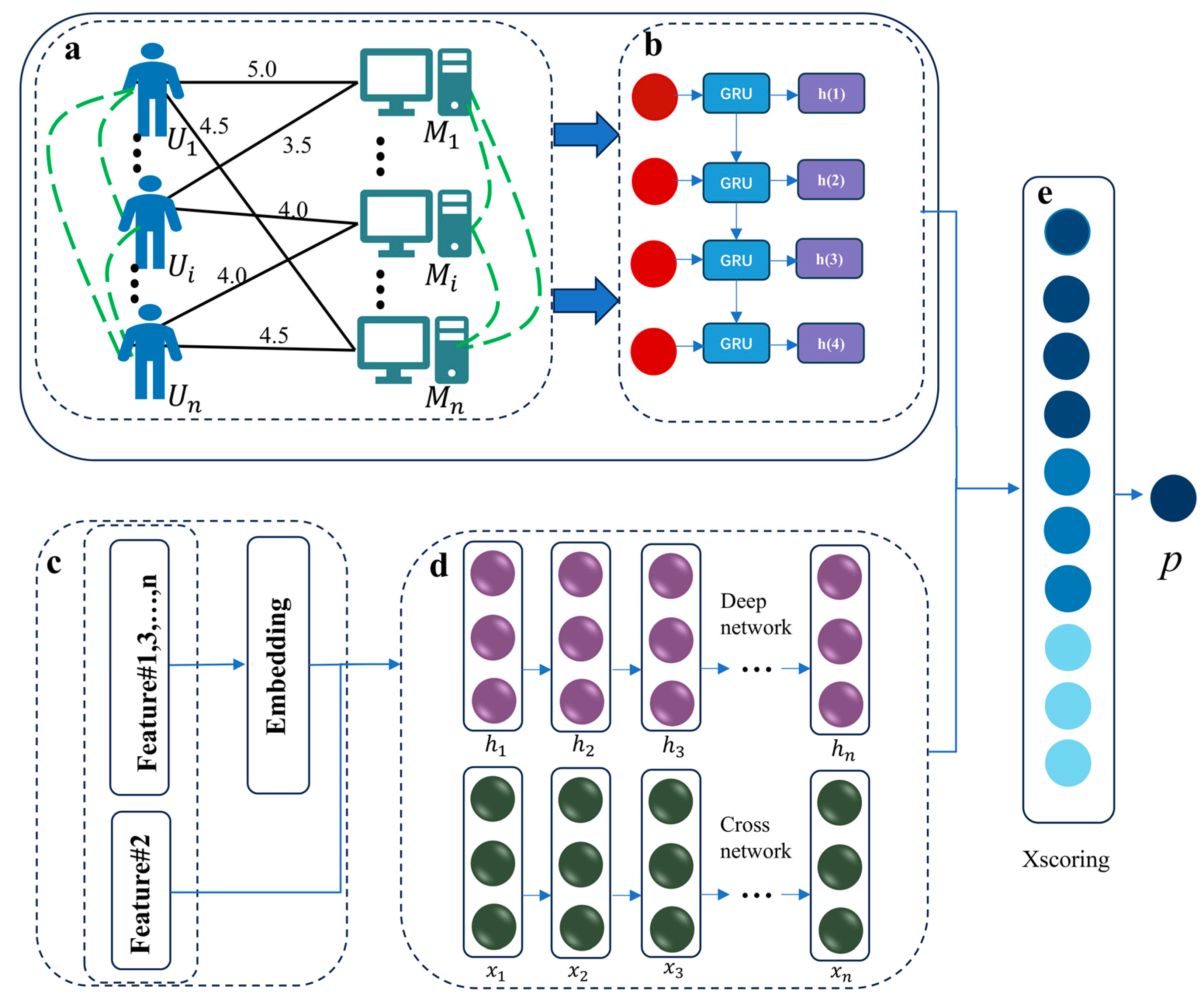

3.1. Overall Framework

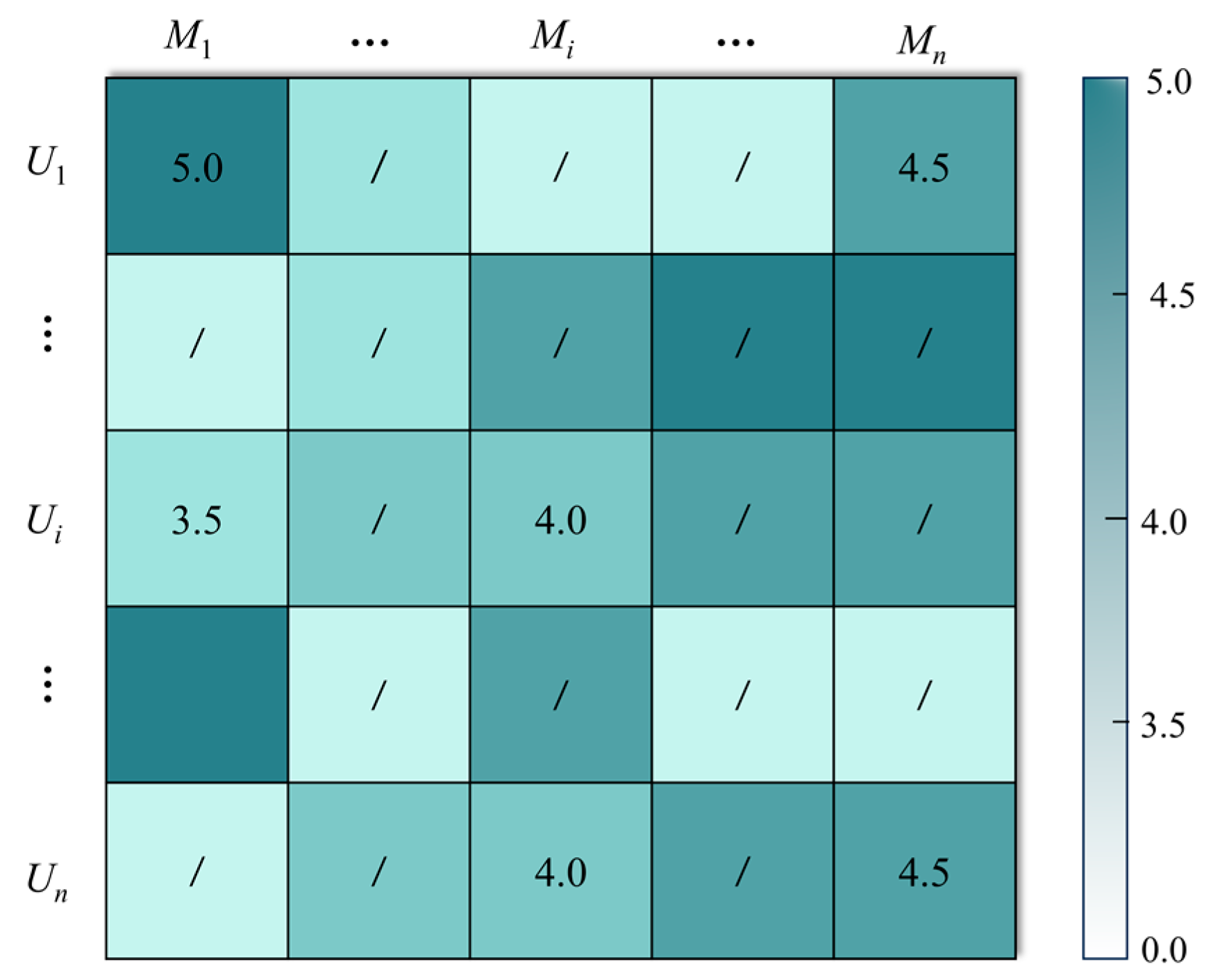

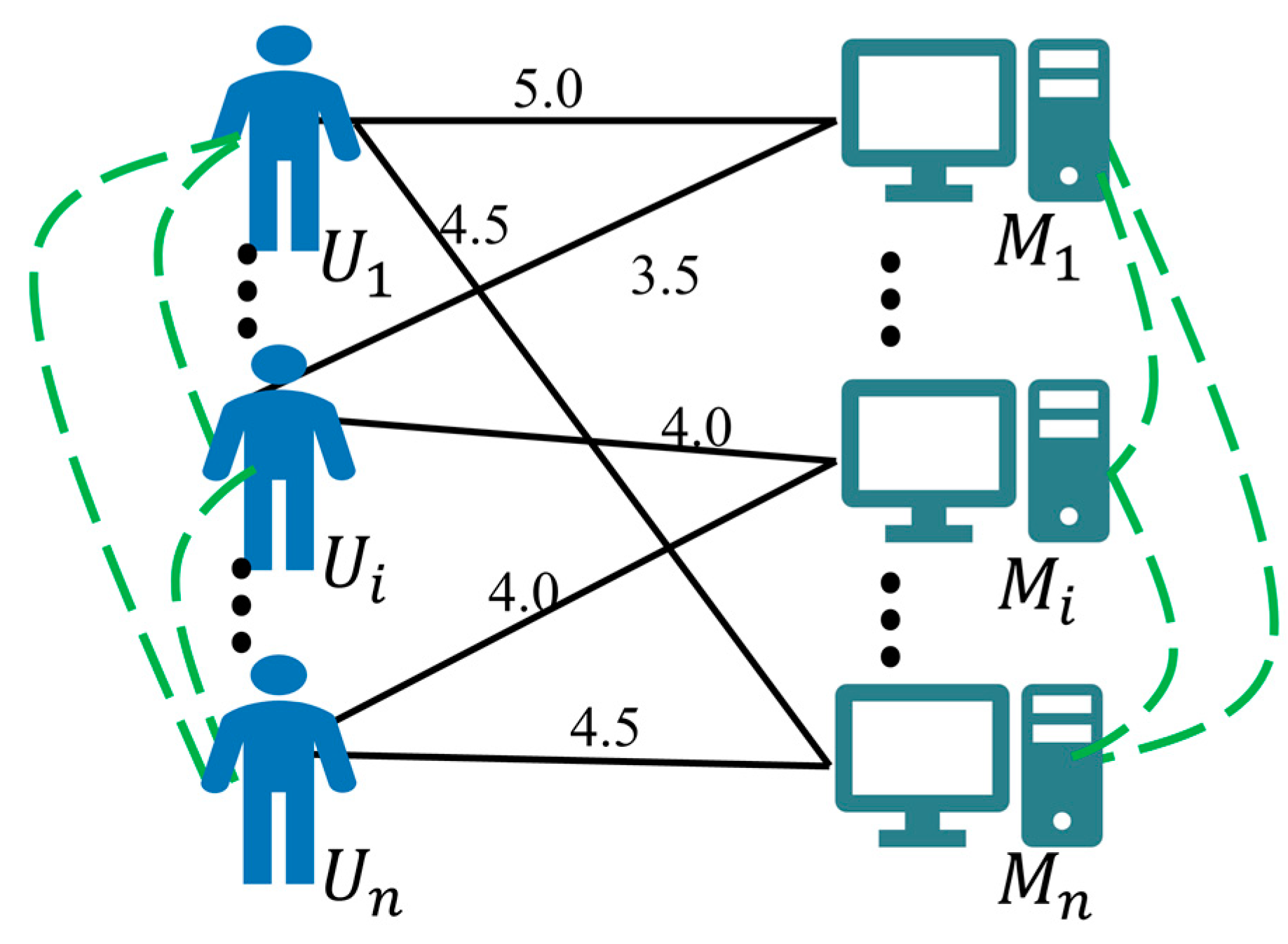

3.2. Constructing the User-Item Interaction Graph
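This section does not reproduce the paper's construction procedure. The sketch below makes two assumptions:

- The graph is the usual undirected bipartite graph, with one edge for every observed (user, item) interaction.
- The graph is normalized symmetrically as D^-1/2 A D^-1/2, as in standard GCNs.

Under those assumptions, the normalized adjacency matrix could be built as shown. The function name `build_normalized_adjacency` and the toy interactions are hypothetical.

```python
import math


def build_normalized_adjacency(interactions, n_users, n_items):
    """Build D^-1/2 A D^-1/2 for an undirected user-item bipartite graph.

    Rows/columns 0..n_users-1 are users; n_users..n_users+n_items-1 are items.
    Returns a dense list-of-lists matrix (fine for toy data only).
    """
    n = n_users + n_items
    adj = [[0.0] * n for _ in range(n)]
    for user, item in interactions:
        node = n_users + item  # item nodes come after all user nodes
        adj[user][node] = 1.0
        adj[node][user] = 1.0  # undirected: add the reverse edge too
    degree = [sum(row) for row in adj]
    a_hat = [[0.0] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            if adj[r][c] > 0.0:  # a nonzero entry implies both degrees are > 0
                a_hat[r][c] = adj[r][c] / math.sqrt(degree[r] * degree[c])
    return a_hat


# Hypothetical toy data: 2 users, 3 items, observed (user, item) pairs.
interactions = [(0, 0), (0, 1), (1, 1), (1, 2)]
a_hat = build_normalized_adjacency(interactions, n_users=2, n_items=3)
for row in a_hat:
    print([round(v, 3) for v in row])
```

The dense matrix keeps the example readable. A real dataset of the size listed in Section 4 would need a sparse representation.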

3.3. GCN-Based User Feature Learning
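Contribution (1) in the Introduction relies on stacked graph-convolution layers to produce higher-order features. The sketch below illustrates this with the standard GCN propagation rule H(l+1) = ReLU(Â H(l) W(l)), where Â is a normalized adjacency matrix of the user-item graph, written out by hand here for a three-node toy graph.

This layer form is an assumption made for illustration. This section does not state the authors' exact layer, for example whether they drop the nonlinearity as LightGCN does. The name `gcn_forward` is hypothetical.

```python
import math


def matmul(a, b):
    """Dense product of two list-of-lists matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))]
            for r in range(len(a))]


def relu(m):
    return [[max(0.0, x) for x in row] for row in m]


def gcn_forward(a_hat, h, weights):
    """Apply H <- ReLU(A_hat @ H @ W) once per weight matrix in `weights`.

    After K layers, each node's row mixes information from nodes up to
    K hops away, i.e. higher-order user-item relations.
    """
    for w in weights:
        h = relu(matmul(matmul(a_hat, h), w))
    return h


# Toy graph: user 0 and user 1 both interacted with item 0 (node 2).
# Degrees are 1, 1, 2, so each edge weight is 1 / sqrt(1 * 2).
s = 1 / math.sqrt(2)
a_hat = [[0.0, 0.0, s],
         [0.0, 0.0, s],
         [s, s, 0.0]]
h0 = [[1.0], [0.0], [0.0]]  # 1-d feature: only user 0 is "on"
identity = [[1.0]]          # hypothetical weights, kept trivial for clarity

one_layer = gcn_forward(a_hat, h0, [identity])
two_layers = gcn_forward(a_hat, h0, [identity, identity])
print(one_layer[1][0], two_layers[1][0])
```

In this toy graph the two users share one item:

- After one layer, user 1's embedding is still zero.
- After two layers (user, item, user), it picks up user 0's signal.

That 2-hop relationship is the kind of higher-order connection the GCN is meant to expose.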

3.4. Introducing GRU for Sequence Modeling
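The GRU in contribution (2) updates a hidden state step by step over a user's interaction sequence. The sketch below implements one GRU cell with the gate equations of Chung et al. (in the reference list) and runs it over a sequence of item embeddings.

Two details are assumptions here, not taken from the paper: how the authors form the input sequence, and that only the final state is kept. The names `gru_step`, `gru_encode` and `zero_params` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def gru_step(x, h, p):
    """One GRU update (gate equations as in Chung et al., 2014):
    z  = sigmoid(Wz x + Uz h + bz)       update gate
    r  = sigmoid(Wr x + Ur h + br)       reset gate
    h~ = tanh(Wh x + Uh (r * h) + bh)    candidate state
    h' = (1 - z) * h + z * h~
    """
    z = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wz"], x), matvec(p["Uz"], h), p["bz"])]
    r = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wr"], x), matvec(p["Ur"], h), p["br"])]
    reset_h = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(a + b + c)
            for a, b, c in zip(matvec(p["Wh"], x), matvec(p["Uh"], reset_h), p["bh"])]
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def gru_encode(sequence, h0, p):
    """Run the GRU over a time-ordered list of input vectors; return the last state."""
    h = h0
    for x in sequence:
        h = gru_step(x, h, p)
    return h


def zero_params(input_dim, hidden_dim):
    """All-zero weights and biases, useful for checking the arithmetic by hand."""
    def zeros(rows, cols):
        return [[0.0] * cols for _ in range(rows)]
    p = {}
    for gate in ("z", "r", "h"):
        p["W" + gate] = zeros(hidden_dim, input_dim)
        p["U" + gate] = zeros(hidden_dim, hidden_dim)
        p["b" + gate] = [0.0] * hidden_dim
    return p


# Hypothetical sequence of three 3-d item embeddings for one user.
sequence = [[0.1, 0.2, 0.3], [0.0, 1.0, 0.0], [0.5, 0.5, 0.5]]
h_last = gru_encode(sequence, [1.0, -1.0], zero_params(input_dim=3, hidden_dim=2))
print(h_last)
```

With all-zero parameters, every gate equals 0.5 and the candidate state is 0, so each step halves the hidden state. This makes the gate arithmetic easy to verify by hand before plugging in trained weights.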

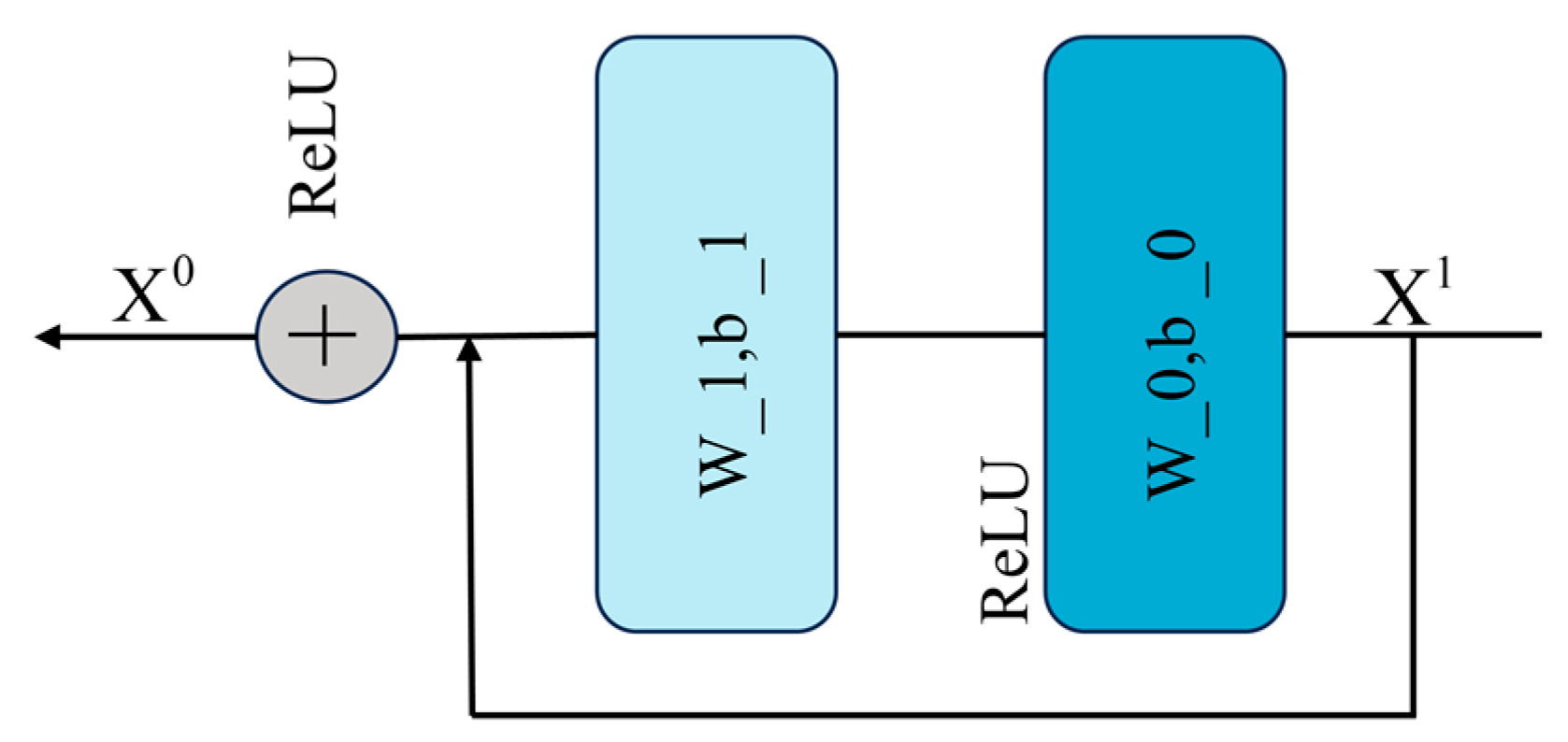

3.5. Feature Combination Based on Deep Crossing Modeling
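Deep Crossing, as described by Shan et al. in the reference list, works in three stages: it concatenates feature embeddings in a stacking layer, passes the result through residual units, and scores it with a sigmoid output layer. The sketch below follows that structure.

It assumes, without confirmation from this section, that the stacked inputs are a GCN user embedding, a GCN item embedding and a GRU state. The names `residual_unit` and `deep_crossing_score` are hypothetical.

```python
import math


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def relu(v):
    return [max(0.0, x) for x in v]


def residual_unit(x, w0, b0, w1, b1):
    """Deep Crossing-style residual unit: ReLU(W1 ReLU(W0 x + b0) + b1 + x).

    W1 must map back to len(x) so the skip connection (+ x) is well defined.
    """
    hidden = relu([a + b for a, b in zip(matvec(w0, x), b0)])
    return relu([a + b + c for a, b, c in zip(matvec(w1, hidden), b1, x)])


def deep_crossing_score(feature_vectors, units, w_out, b_out):
    """Stack features, apply residual units, and score with a sigmoid."""
    x = [v for vec in feature_vectors for v in vec]  # stacking layer (concatenation)
    for w0, b0, w1, b1 in units:
        x = residual_unit(x, w0, b0, w1, b1)
    logit = sum(w * v for w, v in zip(w_out, x)) + b_out
    return 1.0 / (1.0 + math.exp(-logit))


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


# Hypothetical inputs: GCN user embedding, GCN item embedding, GRU state.
user_vec, item_vec, gru_state = [0.5, -0.2], [0.1, 0.4], [0.3]
stacked = user_vec + item_vec + gru_state  # 5-d
dim, hidden_dim = len(stacked), 3
unit = (zeros(hidden_dim, dim), [0.0] * hidden_dim, zeros(dim, hidden_dim), [0.0] * dim)

print(residual_unit(stacked, *unit))  # zero weights: the unit reduces to ReLU(x)
score = deep_crossing_score([user_vec, item_vec, gru_state], [unit], [0.2] * dim, 0.0)
print(score)
```

Each residual unit adds its input back before the final ReLU, so a unit with zero weights reduces to ReLU(x). That makes zero weights a simple way to sanity-check the wiring.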

3.6. DCGCN-GRU Personalized Recommendations

4. Experiments and Analysis of Results

4.1. Experimental Setup and Datasets

4.2. Comparison Models

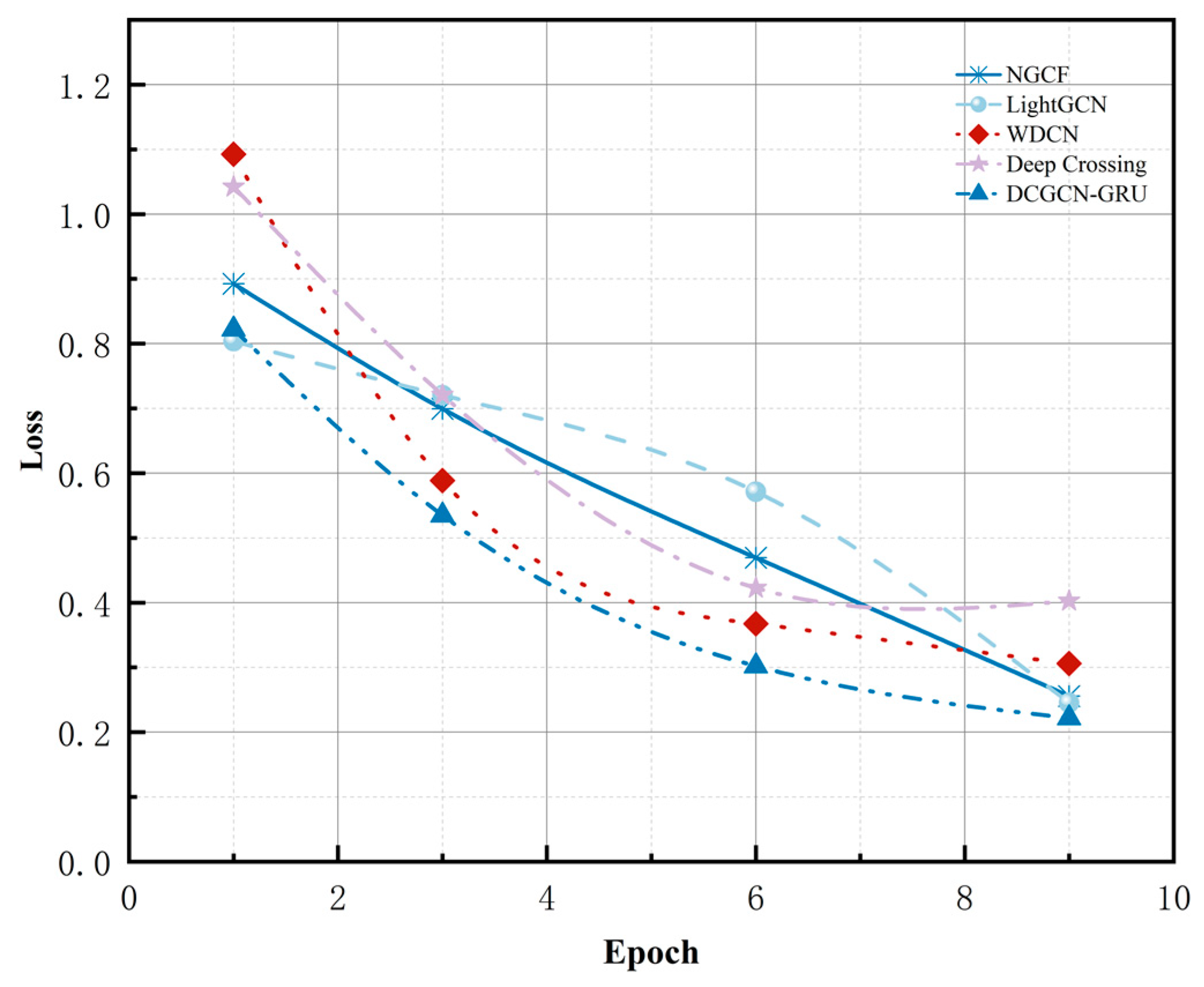

4.3. Performance Comparisons

4.4. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yu, M.; He, W.; Zhou, X.; Cui, X.; Wu, K.; Zhou, W. A review of recommender systems. Comput. Appl. 2022, 42, 1898–1913. [Google Scholar]

- Chai, W.; Zhang, Z. Recommender system based on graph attention convolutional neural network. Comput. Appl. Softw. 2023, 40, 201–206. [Google Scholar]

- Gao, W.; Wang, R.; Zu, J.; Zu, J.; Dong, C.; Hu, G. A service recommendation algorithm based on multimetric feature intersection. Comput. Sci. 2023, 50, 834–840. [Google Scholar]

- Huang, L.; Jiang, B.; Lu, S.; Liu, Y.; Li, D. A research review of deep learning-based recommender systems. J. Comput. 2018, 41, 1619–1647. [Google Scholar]

- Zhu, F.; Wang, Y.; Chen, C.; Liu, G.; Zheng, X. A graphical and attentional framework for dual-target cross-domain recommendation. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI’20), Yokohama, Japan, 7–15 January 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 3001–3008. [Google Scholar]

- Wang, P.; Yu, Q. A bi-objective cross-domain recommendation algorithm incorporating graph convolutional neural networks. J. Tianjin Polytech. Univ. 1–10. Available online: https://cdmd.cnki.com.cn/Article/CDMD-10060-1023752246.htm (accessed on 12 August 2024).

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014. [Google Scholar] [CrossRef]

- Zhang, H.; Qi, Z.; Tan, X.; He, F. A session recommendation model based on Bi-GRU and external attention network. J. Nanjing Univ. Posts Telecommun. (Nat. Sci. Ed.) 2023, 43, 92–101. [Google Scholar] [CrossRef]

- Shan, Y.; Hoens, T.R.; Jiao, J.; Wang, H.; Yu, D.; Mao, J. Deep Crossing: Web-Scale Modeling without Manually Crafted Combinatorial Features. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 255–262. [Google Scholar]

- Zhang, S.; Yao, L.; Sun, A.; Tay, Y. Deep Learning Based Recommender System: A Survey and New Perspectives. ACM Comput. Surv. 2019, 52, 1–38. [Google Scholar] [CrossRef]

- Cheng, H.; Koc, L.; Harmsen, J.; Shaked, T.; Chandra, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; et al. Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15 September 2016; ACM: New York, NY, USA, 2016; pp. 7–10. [Google Scholar]

- Wang, T.; Yu, H.; Wang, K.; Su, H. Fault localization based on wide & deep learning model by mining software behavior. Future Gener. Comput. Syst. 2022, 127, 309–319. [Google Scholar]

- Gao, Y.; Zhang, Z.; Wang, Y. Cross-DeepFM-based military training recommendation model. Comput. Eng. Sci. 2022, 44, 1364–1371. [Google Scholar]

- Liu, M.; Cai, S.; Lai, Z.; Qiu, L.; Hu, Z.; Yi, D. A joint learning model for click-through prediction in display advertising. Neurocomputing 2021, 445, 206–219. [Google Scholar] [CrossRef]

- Liu, T.; Zhou, N. A deep input-aware factorization machine based on Setwise ranking. Comput. Eng. Sci. 2023, 45, 1891–1900. [Google Scholar]

- Wang, R.; Shivanna, R.; Cheng, D.Z.; Jain, S.; Lin, D.; Hong, L.; Chi, E.H. DCN-M: Improved Deep & Cross Network for Feature Cross Learning in Web-scale Learning to Rank Systems. arXiv 2020, arXiv:2008.13535. [Google Scholar]

- Wang, R.; Fu, B.; Fu, G.; Wang, M. Deep & Cross Network for Ad Click Predictions. In Proceedings of the KDD ’17: The 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017. [Google Scholar]

- Pei, Z.; Song, X.; Ji, Y.; Yin, T.; Tian, S.; Li, G. Wide and deep cross network for the rate of penetration prediction. Geoenergy Sci. Eng. 2023, 229, 212066. [Google Scholar] [CrossRef]

- Li, T.; Tang, Y.; Liu, B. Multi-interaction hybrid recommendation model based on deep learning. Comput. Eng. Appl. 2019, 55, 135–141. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Tang, Y.; Wu, Z. A lightweight graph convolution recommendation method based on residual networks. Comput. Eng. Appl. 2024, 60, 205–212. [Google Scholar]

- Gao, X.; Qiu, Y. Research on the design of recommendation algorithm for particle swarm optimization deep cross neural network. J. Xichang Coll. (Nat. Sci. Ed.) 2021, 35, 75–82. [Google Scholar] [CrossRef]

- dos Santos, K.L.; dos Santos Silva, M.P. Deep Cross-Training: An Approach to Improve Deep Neural Network Classification on Mammographic Images. Expert Syst. Appl. 2024, 238, 122142. [Google Scholar] [CrossRef]

- Li, X.; Sun, L.; Ling, M.; Peng, Y. A survey of graph neural network based recommendation in social networks. Neurocomputing 2023, 549, 126441. [Google Scholar] [CrossRef]

- Fan, W.; Ma, Y.; Li, Q.; He, Y.; Zhao, E.; Tang, J.; Yin, D. Graph neural networks for social recommendation. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; ACM Press: New York, NY, USA, 2019; pp. 417–426. [Google Scholar]

- Zhang, Z.; Bu, J.; Ester, M.; Zhang, J.; Yao, C.; Yu, Z.; Wang, C. Hierarchical graph pooling with structure learning. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI: New York, NY, USA, 2020; pp. 545–559. [Google Scholar]

- Wang, X.; Ji, H.; Shi, C.; Wang, B.; Ye, Y.; Cui, P.; Yu, P.S. Heterogeneous graph attention network. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019. [Google Scholar]

- Wu, L.; Sun, P.; Hong, R.; Fu, Y.; Wang, X.; Wang, M. SocialGCN: An efficient graph convolutional network based model for social recommendation. arXiv 2018, arXiv:1811.02815. [Google Scholar]

- Tang, J.; Huang, J.; Qin, J.; Lu, H. Session recommendation based on graph co-occurrence augmented multilayer perceptron. Comput. Appl. 2024, 44, 2357–2364. [Google Scholar]

- Liu, X.; Wang, D. Universal consistency of deep ReLU neural networks. Chin. Sci. Inf. Sci. 2024, 54, 638–652. [Google Scholar] [CrossRef]

- Wang, X.; He, X.; Wang, M.; Feng, F.; Chua, T.-S. Neural graph collaborative filtering. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; ACM Press: New York, NY, USA, 2019; pp. 165–174. [Google Scholar]

- He, X.; Deng, K.; Wang, X.; Li, Y.; Zhang, Y.; Wang, M. LightGCN: Simplifying and powering graph convolution network for recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, China, 25–30 July 2020; ACM Press: New York, NY, USA, 2020; pp. 639–648. [Google Scholar]

| Dataset | Users | Items | Ratings |
|---|---|---|---|
| MovieLens | 162,541 | 60,000 | 25,000,095 |
| Book-Crossing | 278,858 | 271,679 | 1,149,780 |
| Amazon Reviews’23 | 362,000 | 112,600 | 701,500 |

| Model | MovieLens ACC | MovieLens MSE | MovieLens MAE | Book-Crossing ACC | Book-Crossing MSE | Book-Crossing MAE | Amazon Reviews’23 ACC | Amazon Reviews’23 MAE | Amazon Reviews’23 MSE |
|---|---|---|---|---|---|---|---|---|---|
| NGCF | 0.7343 | 0.0358 | 0.1506 | 0.6985 | 0.0483 | 0.1745 | 0.6721 | 0.0525 | 0.1820 |
| LightGCN | 0.7611 | 0.0289 | 0.1336 | 0.7201 | 0.0438 | 0.1620 | 0.6923 | 0.0461 | 0.1704 |
| WDCN | 0.7769 | 0.0417 | 0.1628 | 0.7332 | 0.0512 | 0.1832 | 0.7105 | 0.0553 | 0.1908 |
| Deep Crossing | 0.7659 | 0.0400 | 0.1682 | 0.7265 | 0.0465 | 0.1787 | 0.7017 | 0.0500 | 0.1865 |
| DCGCN-GRU | 0.8001 | 0.0200 | 0.1289 | 0.7450 | 0.0345 | 0.1456 | 0.7359 | 0.0310 | 0.1400 |
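The comparison reports ACC, MSE and MAE, but this section does not define them. MSE and MAE have standard definitions, sketched below. The ACC function is an assumption: it computes binary accuracy after thresholding predictions and targets at 0.5, because the paper's exact accuracy criterion is not given here. The function names and toy values are hypothetical.

```python
def mse_mae(y_true, y_pred):
    """Mean squared error and mean absolute error over paired predictions."""
    n = len(y_true)
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return mse, mae


def threshold_accuracy(y_true, y_pred, threshold=0.5):
    """ASSUMED ACC definition: share of pairs on the same side of `threshold`."""
    hits = sum((p >= threshold) == (t >= threshold) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Hypothetical normalized ratings and model predictions.
y_true = [1.0, 0.0, 1.0, 0.0]
y_pred = [0.9, 0.2, 0.4, 0.1]
mse, mae = mse_mae(y_true, y_pred)
acc = threshold_accuracy(y_true, y_pred)
print(f"ACC={acc:.4f} MSE={mse:.4f} MAE={mae:.4f}")
```

When predictions and targets both lie in [0, 1], every squared error is at most the corresponding absolute error, so MSE cannot exceed MAE. This gives a quick consistency check on reported error columns.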

| Model | MovieLens ACC | MovieLens MSE | MovieLens MAE | Book-Crossing ACC | Book-Crossing MSE | Book-Crossing MAE | Amazon Reviews’23 ACC | Amazon Reviews’23 MAE | Amazon Reviews’23 MSE |
|---|---|---|---|---|---|---|---|---|---|
| DCGCN-GRU-1 | 0.7502 | 0.0355 | 0.2523 | 0.7123 | 0.0415 | 0.1617 | 0.6920 | 0.0398 | 0.1584 |
| DCGCN-GRU-2 | 0.7689 | 0.0200 | 0.1405 | 0.7258 | 0.0340 | 0.1536 | 0.7102 | 0.0364 | 0.1493 |
| DCGCN-LSTM | 0.7803 | 0.0203 | 0.1359 | 0.7400 | 0.0325 | 0.1512 | 0.7256 | 0.0320 | 0.1455 |
| DCGCN-GRU | 0.8001 | 0.0200 | 0.1289 | 0.7450 | 0.0345 | 0.1456 | 0.7359 | 0.0310 | 0.1400 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hai, Y.; Wang, D.; Liu, Z.; Zheng, J.; Ding, C. A Study of Recommendation Methods Based on Graph Hybrid Neural Networks and Deep Crossing. Electronics 2024, 13, 4224. https://doi.org/10.3390/electronics13214224

Hai Y, Wang D, Liu Z, Zheng J, Ding C. A Study of Recommendation Methods Based on Graph Hybrid Neural Networks and Deep Crossing. Electronics. 2024; 13(21):4224. https://doi.org/10.3390/electronics13214224

Chicago/Turabian Style: Hai, Yan, Dongyang Wang, Zhizhong Liu, Jitao Zheng, and Chengrui Ding. 2024. "A Study of Recommendation Methods Based on Graph Hybrid Neural Networks and Deep Crossing" Electronics 13, no. 21: 4224. https://doi.org/10.3390/electronics13214224

APA Style: Hai, Y., Wang, D., Liu, Z., Zheng, J., & Ding, C. (2024). A Study of Recommendation Methods Based on Graph Hybrid Neural Networks and Deep Crossing. Electronics, 13(21), 4224. https://doi.org/10.3390/electronics13214224