Abstract

A key challenge in traffic forecasting is extracting dynamic spatial-temporal features from complex traffic systems. This paper introduces a novel framework for traffic prediction, named Local-Global Spatial-Temporal Graph Convolutional Network (LGSTGCN). The framework consists of three core components. Firstly, a graph attention residual network layer is proposed to capture global spatial dependencies by evaluating traffic mode correlations between different nodes. The context information added in the residual connection can improve the generalization ability of the model. Secondly, a T-GCN module, combining a Graph Convolution Network (GCN) with a Gated Recurrent Unit (GRU), is introduced to capture real-time local spatial-temporal dependencies. Finally, a transformer layer is designed to extract long-term temporal dependence and to identify the sequence characteristics of traffic data through positional encoding. Experiments conducted on four real traffic datasets validate the forecasting performance of the LGSTGCN model. The results demonstrate that LGSTGCN achieves good forecasting performance and is applicable to traffic forecasting tasks.

1. Introduction

The increase in the number of vehicles and changes in travel patterns have imposed great pressure on urban road capacity. Intelligent Transportation Systems (ITSs) [1] present a solution to enhance transportation operational efficiency while preserving environmental resources. An integral component of ITSs is traffic forecasting, which utilizes historical data to predict future traffic flow [2]. Accurate traffic prediction not only serves as a decision-making foundation for travel planning [3] but also contributes to enhancing the efficiency of city road traffic [4].

In the early days, traffic flow prediction relied on statistical methods [5] and machine learning [6,7]. However, their predictive performance was suboptimal because of the intricate nonlinearity of traffic data and its spatial-temporal dependencies. Deep learning techniques [8,9,10] have emerged as effective solutions to these challenges and have achieved significant success in diverse domains, such as target detection [11] and machine translation [12]. Deep learning approaches are now progressively displacing conventional traffic flow forecasting methods [13,14].

Traffic forecasting is primarily influenced by the interplay of traffic flow across time and space. Temporally, historical flow exerts a significant impact on current traffic patterns. Meanwhile, in spatial terms, the interactions among road nodes contribute to fluctuations in traffic flow. Various spatial-temporal prediction models [15,16] have emerged to extract traffic features by considering the inherent dependency in both temporal and spatial embeddings. Effectively capturing the spatial-temporal correlations during the evolution of traffic patterns is a critical factor for obtaining accurate prediction results.

Recently, Graph Neural Networks (GNNs) have gained prominence for capturing spatial features within traffic systems, particularly the interactions among road sensors, owing to their strong performance on graph-structured data. The extraction of traffic spatial-temporal dependencies [17,18] has been further enhanced by integrating Convolutional Neural Networks (CNNs) [19,20], Recurrent Neural Networks (RNNs) [21,22], and attention mechanisms [23,24]. Notable examples include the Diffusion Convolutional Recurrent Neural Network (DCRNN) [25] and the Temporal Graph Convolutional Network (T-GCN) [26], which leverage graph convolution and the Gated Recurrent Unit (GRU) [27] to extract spatial-temporal dependencies. Although these methods have proven effective in traffic prediction, three significant challenges remain.

Firstly, traffic patterns on different roads in the transportation network are interrelated, and even two regions far apart in the city can exhibit correlated traffic patterns [28,29]. For example, during peak commuting hours, people often travel between residential and commercial areas. These areas are usually not adjacent, yet their traffic changes are closely related. However, most traffic forecasting approaches focus only on neighborhood information, ignore global cross-regional spatial dependence, and therefore fail to fully extract spatial features. It is thus necessary to strengthen the modeling of global spatial relationships.

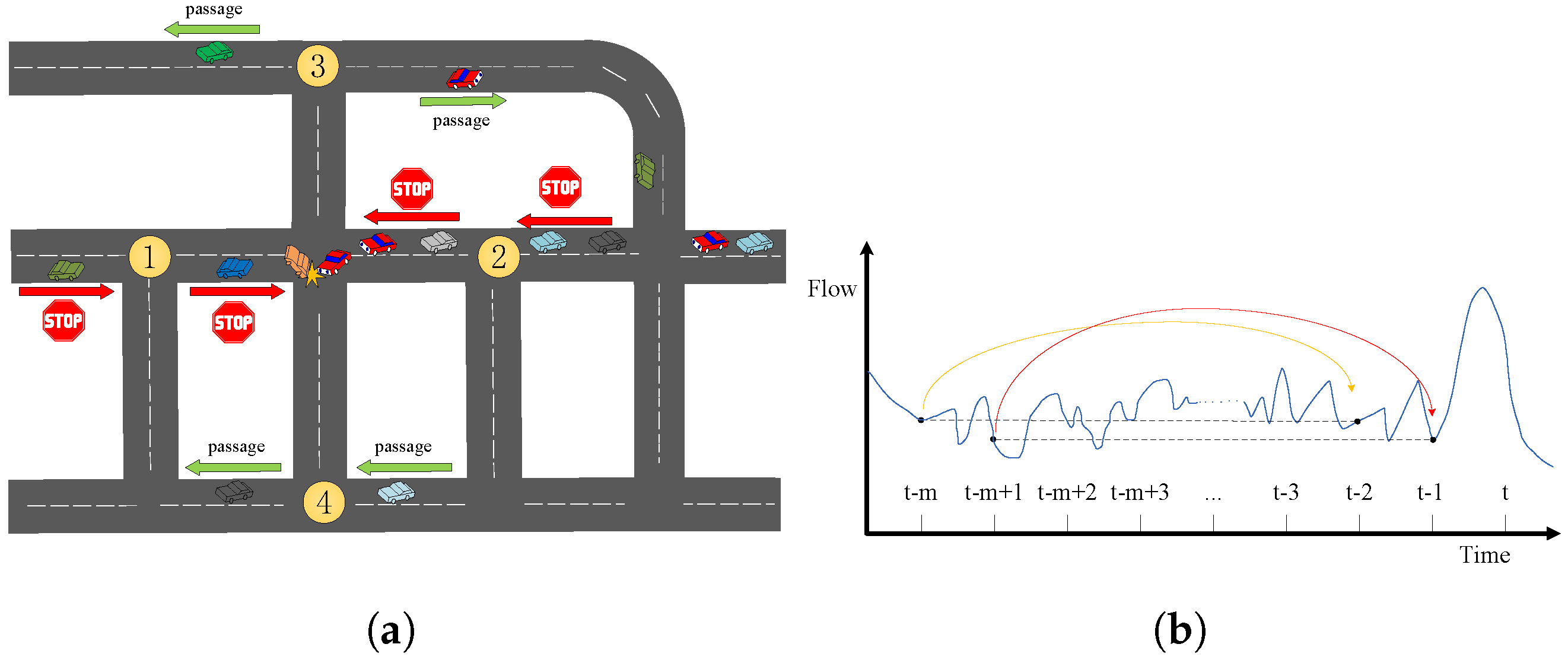

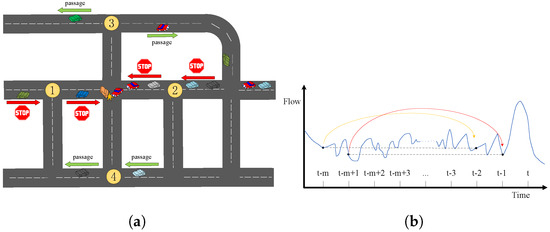

Secondly, when an unexpected event (e.g., a car accident) occurs at a road node, the traffic patterns on adjacent roads may change rapidly within a short period. Neighborhood information therefore shows a stronger real-time dependency than distant regions do; conversely, traffic conditions at two locations far apart should not affect each other within a short period. It is thus essential to extract real-time neighborhood information across the entire region. However, many traffic prediction approaches consider only the static spatial relationships between roads and ignore the dynamic influence between neighbors [30]. As shown in Figure 1a, an accident at the central road node makes the adjacent road nodes 1 and 2 impassable, while vehicles at nodes 3 and 4 still pass normally. Traffic flow is thus dynamically influenced by the spatial relationships between roads, and capturing spatial features based only on the static distance between roads is insufficient.

Figure 1.

Impact of changes in traffic and flow. (a) Different traffic modes on adjacent roads in case of accident. (b) Long-term correlation of traffic flow on roads.

Thirdly, GRU and Long Short-Term Memory (LSTM) [31] networks have been widely adopted for spatial-temporal sequence modeling. However, their recurrent structure creates a long path between distant inputs and outputs, so long-term temporal dependence cannot be extracted effectively because of vanishing gradients [21,30]. As shown in Figure 1b, the flow values at timestamps t-m and t-2 (yellow curve) and those at timestamps t-m+1 and t-1 (red curve) have similar distributions. Although these timestamps are far apart, they may be correlated in hidden ways. Therefore, the global temporal dependence of traffic data is also important in traffic forecasting.

This paper introduces a novel traffic forecasting framework based on Local-Global Spatial-Temporal Graph Convolution Network (LGSTGCN). The LGSTGCN framework encompasses three key components: a Graph Attention Residual Network layer, a T-GCN module, and a Transformer layer. These components are designed to capture the spatial dependence of global regions, local spatial-temporal characteristics, and global temporal dependence, respectively.

The primary contributions of this article can be summarized as follows:

- A graph attention residual network layer is proposed for capturing global spatial dependencies, and richer information about the spatial semantics of different channels can be learned through residual connections.

- A module based on T-GCN is added for extracting real-time spatial-temporal dependence. The graph convolution of the module can learn the dynamic influence of neighboring roads in the current time step.

- A transformer layer is presented for extracting the global temporal dependence of the traffic flow data. The positional encoding scheme used in the layer enables the included self-attention mechanism to identify the position characteristics of the traffic sequence.

- Experiments comparing different baseline methods, evaluating different hyperparameter settings, and ablating model modules are conducted. The experimental results demonstrate the accuracy and effectiveness of the proposed model.

The remaining sections of this article are structured as follows: Section 2 reviews the existing literature on traffic prediction. Section 3 defines the traffic forecasting problem. Section 4 presents the LGSTGCN model in detail. Section 5 compares the LGSTGCN model experimentally with the baseline approaches. Section 6 concludes the paper and discusses future research.

2. Related Work

2.1. Traditional Approaches

Traffic forecasting was initially approached as a statistical regression problem, using classical methods such as the Autoregressive Integrated Moving Average (ARIMA) [5] model and the Historical Average (HA) [32] model. However, these statistical approaches rely on the assumption of stationarity and fail to adequately capture the highly nonlinear nature of traffic data.

Consequently, machine learning approaches such as the K-Nearest Neighbors (KNN) [6] algorithm and Support Vector Regression (SVR) [7] were employed for traffic forecasting. These approaches proved more effective but relied heavily on manually designed features. Overall, traditional methods generally fail to capture the complex spatial-temporal characteristics of traffic systems.

2.2. Deep Learning Approaches

Deep learning methods, particularly Recurrent Neural Networks (RNNs) and variants such as GRU, have shown excellent performance in traffic prediction, owing to their ability to adaptively learn the temporal and spatial features of traffic data. RNNs and their variants are favored for modeling the intricate temporal aspects of traffic patterns because they process sequential information well. For example, Ma et al. [33] introduced an LSTM-based prediction method that effectively captures correlations in traffic sequences. Fu et al. [31] utilized both LSTM and GRU to forecast traffic flow. Besides RNN-based approaches, some CNN methods have been applied to traffic forecasting. Zhang et al. [13] employed LSTM and CNN to capture spatial-temporal characteristics for traffic prediction. In another approach, Zhang et al. [34] transformed the traffic network into an image grid and extracted spatial correlations through residual convolution units. However, CNNs are better suited to two-dimensional grid spaces, whereas real road networks exhibit irregular topologies.

GNNs have garnered increasing attention in traffic prediction. Zhao et al. [26] introduced the T-GCN model, which combines GRU and GCN to extract both temporal and spatial features of traffic data. Li et al. [25] proposed the DCRNN model, which models traffic flow as a diffusion process on a directed graph. Wang et al. [35] utilized bidirectional graph message passing to capture fine-grained positional spatial interactions, employing cyclic aggregation for real-time fusion of spatial-temporal embeddings. Cui et al. [36] defined graph convolutional operators based on traffic network topology and integrated LSTM for spatial-temporal traffic prediction. Liu et al. [37] constructed a physical graph directly from the real road topology, together with a similarity graph and a correlation graph built from virtual topologies. All these complementary graphs are merged into a graph convolution gated recurrent unit for spatial-temporal representation learning. However, these GNN-based methods usually focus on local spatial information and ignore global spatial dependency when extracting spatial features, thereby missing hidden cross-regional spatial correlations.

To address this limitation, certain studies have shifted their focus towards enhancing the representation of spatial features from local to global perspectives. For instance, Zhang et al. [29] devised a multi-scale network that integrated a graph attention network with a graph diffusion mechanism based on convolution, effectively preserving both local and global dependencies of spatial features. Zhao et al. [38] introduced a model that used adaptive correlation and spatial attention to capture local spatial dependencies and global spatial dependencies, respectively. For temporal dependencies, bidirectional gated recurrent layers and temporal attention mechanisms were utilized. Another approach by Zhang et al. [39] involved capturing spatial features from local and global perspectives using attention graph neural networks and convolutional networks. However, these methods overlooked the contextual features of global spatial dependencies, limiting the model’s generalization ability.

2.3. Attention Mechanism

The attention mechanism, which can assess the importance of different parts of the input and redistribute information accordingly, has proven effective in aggregating information across various research domains, including person re-identification [40] and action recognition [41]. Recently, attention mechanisms have been widely used in traffic prediction. For instance, Bai et al. [42] introduced the A3T-GCN model, combining GCN and GRU to capture dynamic spatial-temporal correlations. The model incorporated an attention mechanism to capture long-term temporal dependence. Ye et al. [43] developed a meta graph transformer structure for traffic forecasting by embedding meta-learning into three self-attention mechanisms. Additionally, Xu et al. [44] extracted directed spatial dependency using a self-attention mechanism and used a transformer framework to capture long-term bidirectional temporal dependencies. However, relying solely on a single attention mechanism neglects the sequential features of traffic flow. Moreover, a model based exclusively on the transformer architecture may struggle to capture local information effectively, limiting the model’s overall learning ability.

Based on the analysis and discussions of the current research, the primary challenges in traffic forecasting involve effectively modeling global spatial dependence and capturing local-to-global temporal dependence while preserving the sequential position information of traffic data. Aiming at these challenges, this paper introduces a new traffic prediction framework based on the Local-Global Spatial-Temporal Graph Convolutional Network (LGSTGCN). In the temporal dimension, a combination of GRU and transformer architectures is employed to extract local-to-global temporal dependence. In the spatial dimension, a graph attention residual network layer is specifically designed to capture global spatial dependence, incorporating context information. Additionally, graph convolution is utilized to further extract dynamic local spatial dependence. This comprehensive framework aims to tackle the identified challenges in traffic forecasting by integrating both global and local spatial-temporal dependencies.

3. Problem Definition

Define the real traffic road topology as a graph $G = (V, E)$, where $V = \{v_1, v_2, \dots, v_N\}$ is the set of $N$ road nodes and $E$ is the set of edges corresponding to the connections between nodes. $A \in \mathbb{R}^{N \times N}$ is the adjacency matrix of the graph. Each element $a_{ij}$ of $A$ denotes the connectivity between nodes $v_i$ and $v_j$ ($a_{ij} = 1$ means that $v_i$ and $v_j$ are connected; $a_{ij} = 0$ means that they are not). The traffic flow of the nodes in the graph $G$ is represented as a feature matrix $X \in \mathbb{R}^{N \times F}$, where $F$ denotes the length of the traffic flow sequence on each road.

Traffic forecasting aims to predict future traffic sequences from the road topology graph $G$ and the historical traffic flow sequences $\{X_{t-M+1}, \dots, X_t\}$, where $X_t \in \mathbb{R}^{N}$ is the traffic flow of all nodes at time step $t$, $M$ is the length of the historical traffic flow sequence, and $T$ is the length of the future sequence to be predicted.

The mapping function $f$ from the historical series to the predicted series can be formulated as Equation (1):

$$
[X_{t+1}, \dots, X_{t+T}] = f\left(G;\ (X_{t-M+1}, \dots, X_t)\right) \tag{1}
$$
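As an illustration of the mapping in Equation (1), the following plain-Python sketch shows one common way to turn an N × F traffic matrix into supervised (history, target) pairs with a history length M and a horizon T. It assumes nodes are rows and time steps are columns; the helper `make_windows` and the toy speeds are hypothetical and not taken from the authors' code.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # rows = road nodes, columns = time steps


def make_windows(series: Matrix, m: int, t: int) -> List[Tuple[Matrix, Matrix]]:
    """Slide a window over the time axis of an N x F traffic matrix.

    Each sample pairs m consecutive steps (model input) with the
    t steps that immediately follow them (prediction target).
    """
    n_steps = len(series[0])
    samples = []
    for start in range(n_steps - m - t + 1):
        history = [row[start:start + m] for row in series]
        target = [row[start + m:start + m + t] for row in series]
        samples.append((history, target))
    return samples


# Toy data (hypothetical): 2 road nodes, 6 time steps of speeds.
speeds = [[60, 58, 55, 50, 52, 57],
          [40, 42, 41, 39, 38, 40]]
pairs = make_windows(speeds, m=3, t=2)
print(len(pairs))   # 2
print(pairs[0][1])  # [[50, 52], [39, 38]]
```

Every node shares the same window boundaries, so each sample keeps the spatial layout of the graph intact while moving along time.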

4. Methodology

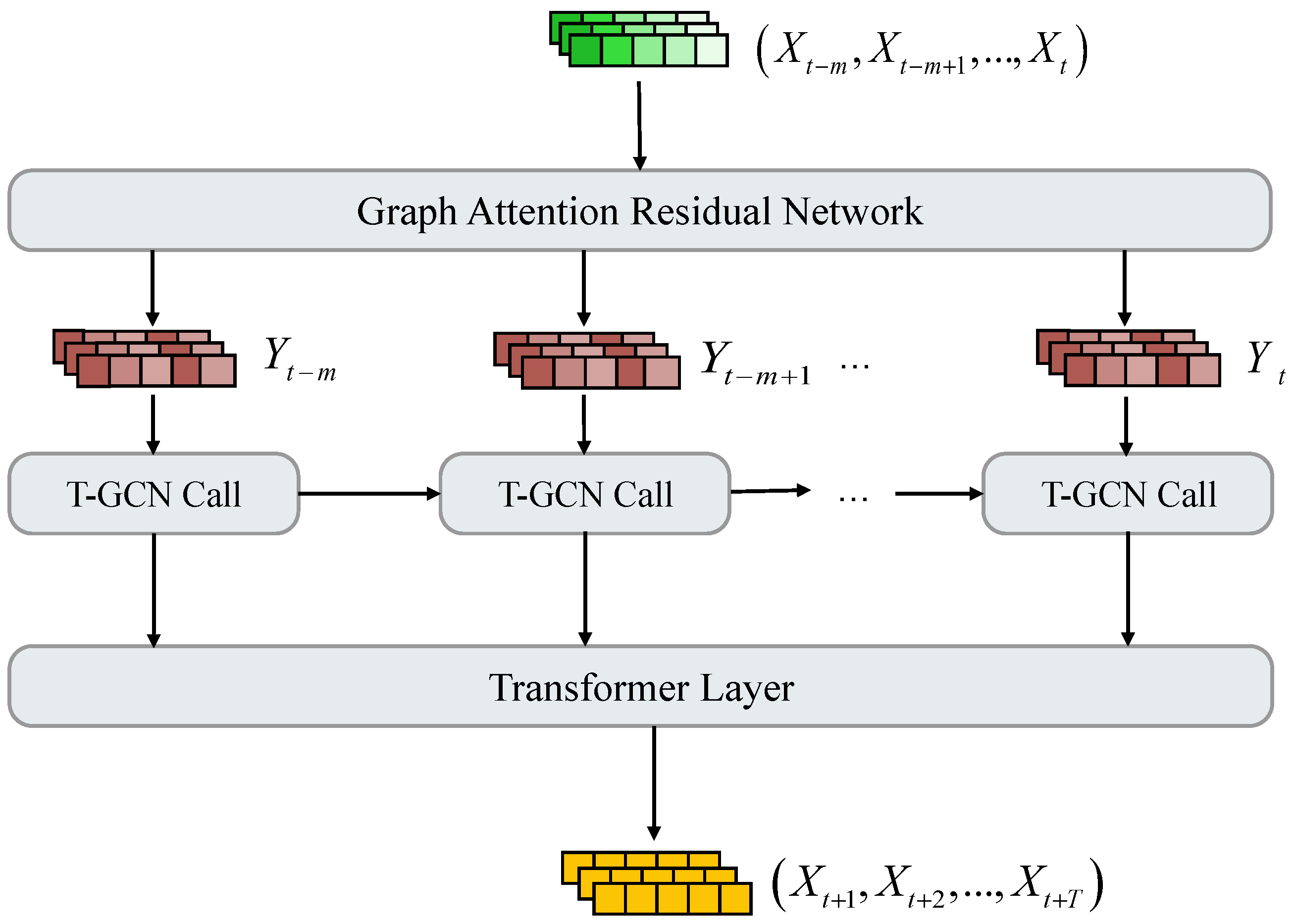

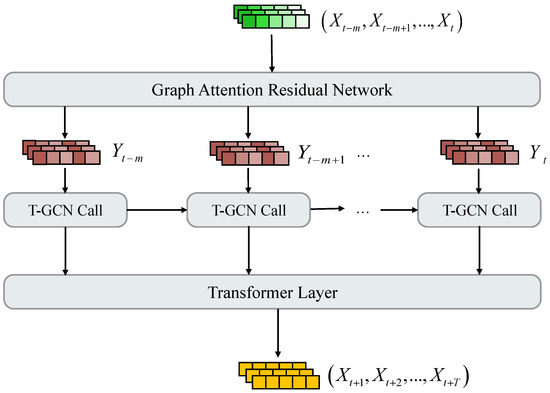

A traffic forecasting model based on the Local-Global Spatial-Temporal Graph Convolutional Network (LGSTGCN) is presented to capture the spatial-temporal dependence of traffic flow data from local to global. The general architecture of the LGSTGCN model is shown in Figure 2. The LGSTGCN model consists of the following three parts.

Figure 2.

The architecture of LGSTGCN.

Global spatial dependency modeling. The graph attention residual network layer is designed to capture global spatial dependencies by calculating feature correlations between different nodes. In addition, spatial context information is added through residual connections to enhance the generalization capability of the LGSTGCN model.

Real-time local spatial-temporal dependency modeling. Because the graph attention residual network layer updates the features of neighboring nodes according to their correlations with the central node, the subsequent graph convolution pays more attention to strongly correlated neighbors when aggregating neighborhood information. Combined with a GRU, it extracts the real-time local spatial-temporal dependence at the current time step.

Global temporal dependency modeling. The transformer layer is designed to capture the global temporal dependence of a traffic sequence. Through positional encoding, different attention weights are assigned in parallel to different positions in the traffic sequence.

4.1. Global Spatial Dependency Modeling

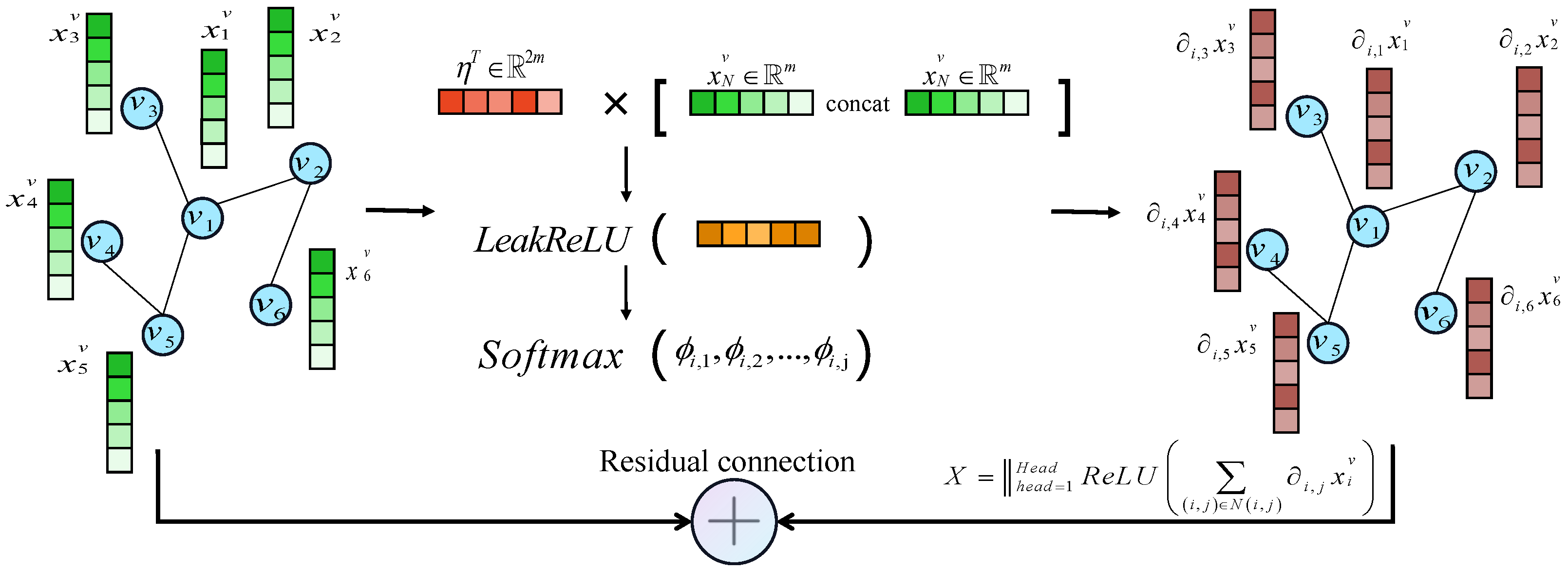

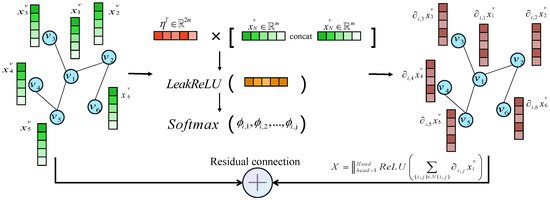

A graph attention residual network layer is introduced to extract the global, cross-regional spatial dependence between nodes in a traffic system, as illustrated in Figure 3. The feature matrix $X$ is the input of the layer.

Figure 3.

The graph attention residual network layer capturing global spatial dependence.

To obtain a sufficiently expressive spatial feature representation, the feature vector $x_i$ of each node $v_i$ is mapped to a higher-level feature space by a linear transformation, as shown in Equation (2):

$$
h_i = W x_i \tag{2}
$$

where $W$ denotes the linear transformation weight matrix.

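The graph attention mechanism assesses the pairwise correlation between every two nodes in the traffic topology graph [45]. Define $e_{ij}$ as the correlation coefficient between nodes $v_i$ and $v_j$, which can be expressed as Equation (3):

$$
e_{ij} = \mathrm{LeakyReLU}\left(a^{\top} \left[ W x_i \,\Vert\, W x_j \right]\right) \tag{3}
$$

where $\Vert$ denotes feature vector concatenation, $W$ is the weight matrix of Equation (2), $a$ is the weight vector, and LeakyReLU is the activation function.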

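Define $\alpha_{ij}$ as the attention weight between nodes $v_i$ and $v_j$, obtained from the correlation coefficient $e_{ij}$ of Equation (3) as shown in Equation (4):

$$
\alpha_{ij} = \mathrm{softmax}_j(e_{ij}) = \frac{\exp(e_{ij})}{\sum_{l=1}^{N} \exp(e_{il})} \tag{4}
$$

where softmax is the normalization function. Equation (4) means that the attention weight between two nodes is determined by the correlation coefficient between them, normalized over all $N$ nodes.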

The attention is applied in a multi-head manner to enrich the model’s representation of spatial features. The node feature matrix $X'$ containing the global spatial dependency is defined row by row in Equation (5):

$$
x_i' = \Big\Vert_{k=1}^{K} \sigma\left( \sum_{j=1}^{N} \alpha_{ij}^{k} W^{k} x_j \right) \tag{5}
$$

where $K$ is the number of attention heads, $\Vert$ denotes the concatenation of the feature matrices of the different head subspaces, $\alpha_{ij}^{k}$ and $W^{k}$ are the attention weight and weight matrix of the $k$-th head, and $\sigma$ denotes the activation function. In Equation (5), the feature vector of each node $v_j$ is first multiplied by the attention weight in each subspace, and the activation function is then applied. Finally, the feature matrix containing the global spatial dependency is obtained by concatenating the feature representations of all subspaces.

In order to enhance the generalization ability of the spatial features of the model, the residual connection is used to add spatial context information to the model. Define Y as the feature matrix containing global spatial context information, as shown in Equation (6):
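A compact plain-Python sketch of one multi-head global attention layer with a residual connection is given below, so that Equations (2) to (5) can be traced step by step without external libraries. It follows the standard graph attention formulation [45] (LeakyReLU scoring with slope 0.2, softmax normalization). The ELU output activation, the scoring of all node pairs rather than only neighbors, and the plain additive residual are assumptions made for illustration, since the exact form of Equation (6) is not stated; names such as `gat_residual_layer` are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]
Matrix = List[Vec]


def matvec(w: Matrix, x: Vec) -> Vec:
    """Multiply an (out x in) weight matrix by an input vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def leaky_relu(z: float, slope: float = 0.2) -> float:
    return z if z > 0 else slope * z


def elu(z: float) -> float:
    return z if z > 0 else math.exp(z) - 1.0


def attention_head(x: Matrix, w: Matrix, a: Vec) -> Matrix:
    """One head: linear map, pairwise scores over ALL nodes, softmax, weighted sum."""
    h = [matvec(w, xi) for xi in x]  # h_i = W x_i
    n, d = len(h), len(h[0])
    out = []
    for i in range(n):
        # e_ij = LeakyReLU(a^T [h_i || h_j]); every node j is scored, not only neighbors
        scores = [leaky_relu(sum(ak * v for ak, v in zip(a, h[i] + h[j]))) for j in range(n)]
        top = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        alpha = [e / total for e in exps]
        agg = [sum(alpha[j] * h[j][k] for j in range(n)) for k in range(d)]
        out.append([elu(v) for v in agg])
    return out


def gat_residual_layer(x: Matrix, heads: List[Tuple[Matrix, Vec]]) -> Matrix:
    """Concatenate all heads, then add the input back as a residual connection."""
    per_head = [attention_head(x, w, a) for w, a in heads]
    concat = [sum((hd[i] for hd in per_head), []) for i in range(len(x))]
    # Assumes (number of heads * head width) == input width so the sum is defined.
    return [[c + xi for c, xi in zip(rc, rx)] for rc, rx in zip(concat, x)]


random.seed(0)
n_nodes, f_in, f_head, n_heads = 3, 4, 2, 2  # 2 heads x 2 features = 4 = f_in
x = [[random.uniform(-1, 1) for _ in range(f_in)] for _ in range(n_nodes)]
heads = [([[random.uniform(-1, 1) for _ in range(f_in)] for _ in range(f_head)],
          [random.uniform(-1, 1) for _ in range(2 * f_head)])
         for _ in range(n_heads)]
y = gat_residual_layer(x, heads)
print(len(y), len(y[0]))  # 3 4
```

With all-zero weights the attention branch outputs zeros, so the layer returns its input unchanged; this is the property that makes a residual connection a safe default.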

4.2. Real-Time Local Spatial-Temporal Dependency Modeling

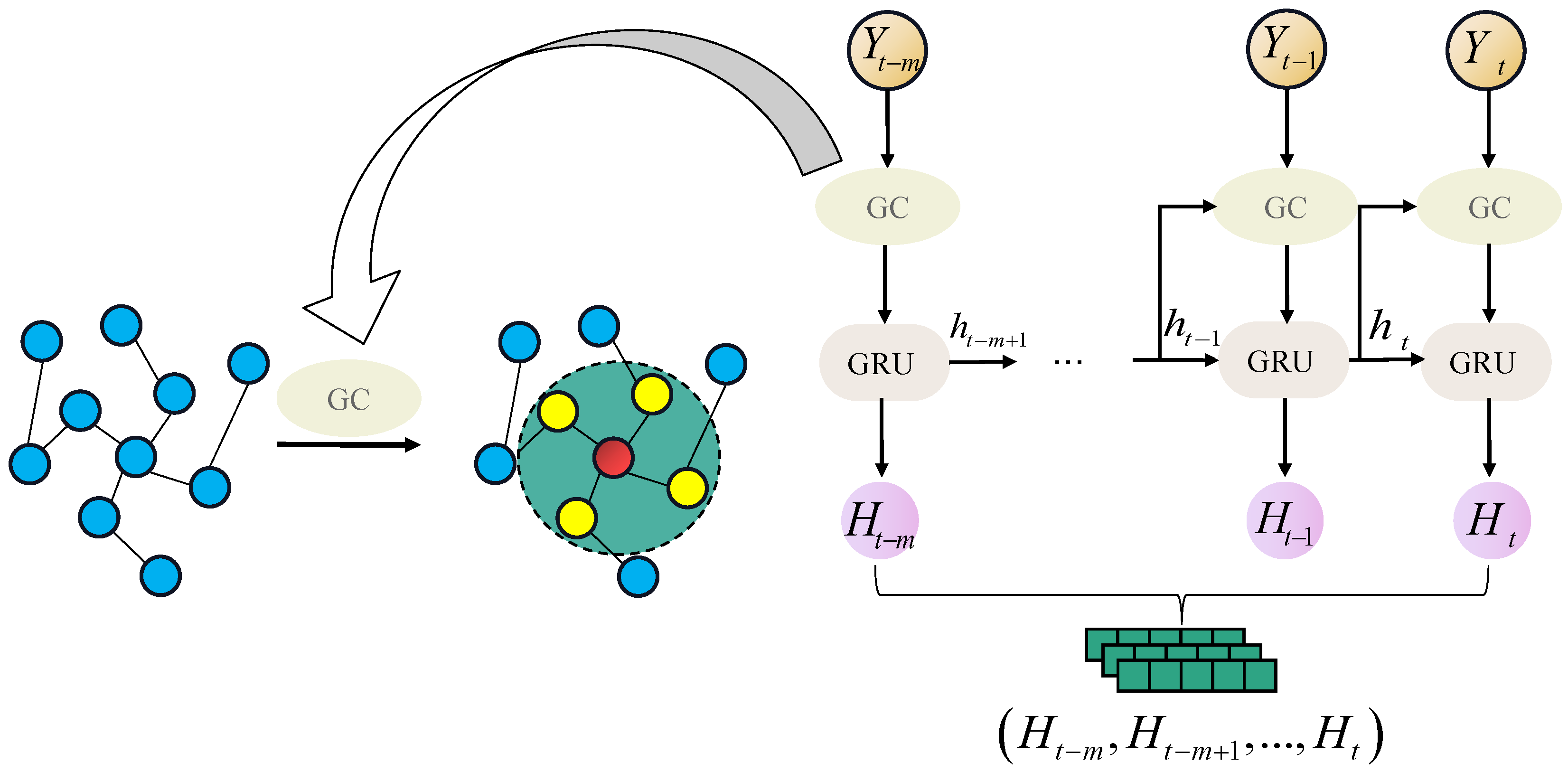

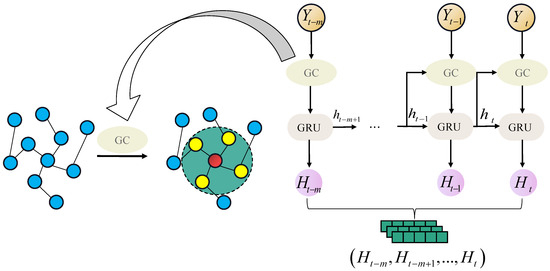

The framework of T-GCN [26] is shown in Figure 4. The T-GCN module is designed to extract the real-time spatial-temporal dependence of local traffic. Graph convolution replaces the linear transformations applied to the input and hidden state of the GRU, so that local spatial-temporal dependence is captured at the current time step. Before graph convolution, the features of strongly correlated neighborhood nodes are updated by the graph attention residual network layer, which allows the graph convolution to learn neighborhood feature information dynamically rather than from static features.

Figure 4.

The framework of the T-GCN module.

4.2.1. Local Spatial Dependency

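The GCN layer aggregates the first-order neighborhood features of each node to capture local spatial dependency. The graph convolution operation $f(X, A)$ is shown in Equation (7):

$$
f(X, A) = \sigma\left(\hat{A} X W\right) \tag{7}
$$

where $W$ is the learnable weight matrix and $\sigma$ is the sigmoid activation function. The normalized adjacency matrix $\hat{A}$ (the renormalized form used by GCN in place of the graph Laplacian) is computed by Equation (8):

$$
\hat{A} = \tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}}, \qquad \tilde{A} = A + I_N \tag{8}
$$

where $I_N$ is the identity matrix and $\tilde{D}$ is the degree matrix of $\tilde{A}$, with $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$.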
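The normalization and the graph convolution of this subsection can be sketched in plain Python as follows. The sketch assumes a dense, unweighted adjacency matrix and a single graph convolution layer with a sigmoid activation, as described above; it is an illustration rather than the authors' implementation, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain (rows x inner) @ (inner x cols) matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """A_hat = D^-1/2 (A + I) D^-1/2, with D the degree matrix of A + I."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]  # self-loops guarantee deg >= 1
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn(x: Matrix, adj: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: sigmoid(A_hat X W)."""
    z = matmul(matmul(normalized_adjacency(adj), x), w)
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in z]


adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # a 3-node path graph (toy example)
a_hat = normalized_adjacency(adj)
print(round(a_hat[0][0], 3), round(a_hat[0][1], 3))  # 0.5 0.408
out = gcn([[1.0], [2.0], [3.0]], adj, [[0.5, -0.5]])
print(len(out), len(out[0]))  # 3 2
```

Adding self-loops before normalizing lets each node keep part of its own feature, and the symmetric scaling prevents high-degree nodes from dominating the aggregation.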

4.2.2. Local Temporal Dependency

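To capture real-time local temporal dependence, sequence information over short time steps is extracted with the GRU, which retains temporal information in its hidden state through a gating mechanism. In the T-GCN module, the linear transformations of the GRU are replaced by graph convolution. Define $r_t$, $u_t$, and $c_t$ as the reset gate, update gate, and candidate hidden state at time step $t$, respectively, which are calculated by Equations (9)–(11):

$$
r_t = \sigma\left(\hat{A}\,[X_t, h_{t-1}]\,W_r + b_r\right) \tag{9}
$$

$$
u_t = \sigma\left(\hat{A}\,[X_t, h_{t-1}]\,W_u + b_u\right) \tag{10}
$$

$$
c_t = \tanh\left(\hat{A}\,[X_t, r_t \odot h_{t-1}]\,W_c + b_c\right) \tag{11}
$$

where $\hat{A}$ is the normalized adjacency matrix of Equation (8), $W_r$, $W_u$, and $W_c$ are the weight matrices, $b_r$, $b_u$, and $b_c$ are the biases, $\sigma$ is the sigmoid function, and tanh is the hyperbolic tangent activation function. The hidden state $h_{t-1}$ of the previous time step and the input $X_t$ are concatenated, as $[X_t, h_{t-1}]$, to form the input of the graph convolution operation.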

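Denote $h_t$ as the hidden state of the GRU at the current time step $t$, computed by Equation (12):

$$
h_t = u_t \odot h_{t-1} + (1 - u_t) \odot c_t \tag{12}
$$

where $u_t$ and $c_t$ are the update gate and candidate hidden state of Equations (10) and (11), and $\odot$ denotes element-wise multiplication.

The final output of this module is a spatial-temporal feature matrix covering all $N$ nodes.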
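Putting Equations (9) to (12) together, one step of the graph-convolutional GRU can be sketched in plain Python as below. Following the description above, graph convolution is applied to the concatenation of the current input and the previous hidden state; the dictionary keys, helper names, and toy sizes are hypothetical. With all weights set to zero, both gates equal 0.5 and the candidate state is 0, so each step simply halves the hidden state, which makes the output easy to check by hand.

```python
import math
from typing import Callable, Dict, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def elementwise(fn: Callable[[float], float], m: Matrix) -> Matrix:
    return [[fn(v) for v in row] for row in m]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def graph_linear(a_hat: Matrix, x: Matrix, h: Matrix, w: Matrix, b: List[float]) -> Matrix:
    """A_hat [X_t, h] W + b: graph convolution over the concatenated input and state."""
    xh = [xr + hr for xr, hr in zip(x, h)]  # per-node feature concatenation
    z = matmul(matmul(a_hat, xh), w)
    return [[v + bj for v, bj in zip(row, b)] for row in z]


def tgcn_cell(a_hat: Matrix, x: Matrix, h: Matrix, p: Dict[str, list]) -> Matrix:
    """One recurrent step: reset gate, update gate, candidate state, new hidden state."""
    r = elementwise(sigmoid, graph_linear(a_hat, x, h, p["w_r"], p["b_r"]))
    u = elementwise(sigmoid, graph_linear(a_hat, x, h, p["w_u"], p["b_u"]))
    rh = [[ri * hi for ri, hi in zip(rr, hr)] for rr, hr in zip(r, h)]
    c = elementwise(math.tanh, graph_linear(a_hat, x, rh, p["w_c"], p["b_c"]))
    return [[ui * hi + (1.0 - ui) * ci for ui, hi, ci in zip(ur, hr, cr)]
            for ur, hr, cr in zip(u, h, c)]


# Toy setting (hypothetical): 2 connected nodes, 1 input feature, 2 hidden units.
a_hat = [[0.5, 0.5], [0.5, 0.5]]  # normalized adjacency of two linked nodes
zeros_w = [[0.0, 0.0] for _ in range(3)]  # (1 input + 2 hidden) x 2 hidden
params = {k: zeros_w for k in ("w_r", "w_u", "w_c")}
params.update({k: [0.0, 0.0] for k in ("b_r", "b_u", "b_c")})
h = [[1.0, 2.0], [3.0, 4.0]]
for x_t in ([[0.3], [0.7]], [[0.4], [0.6]]):  # run the cell over a short sequence
    h = tgcn_cell(a_hat, x_t, h, params)
print(h)  # [[0.25, 0.5], [0.75, 1.0]]
```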

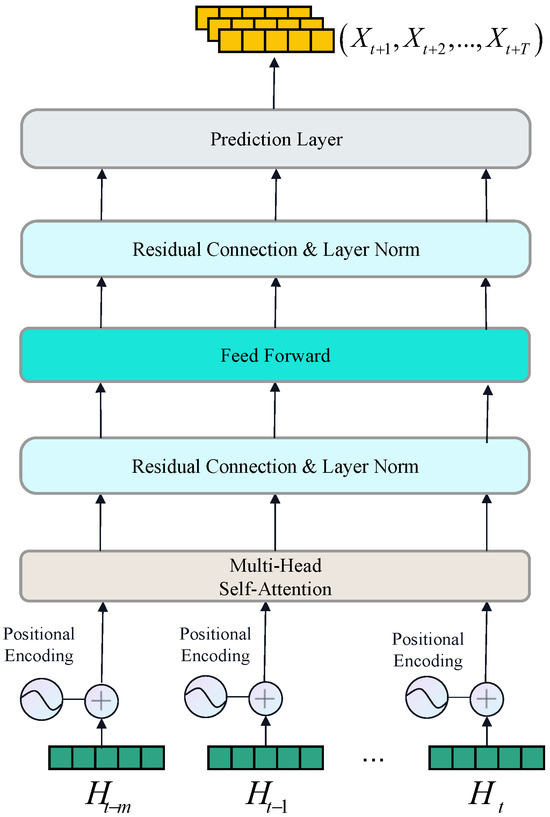

4.3. Global Temporal Dependency Modeling

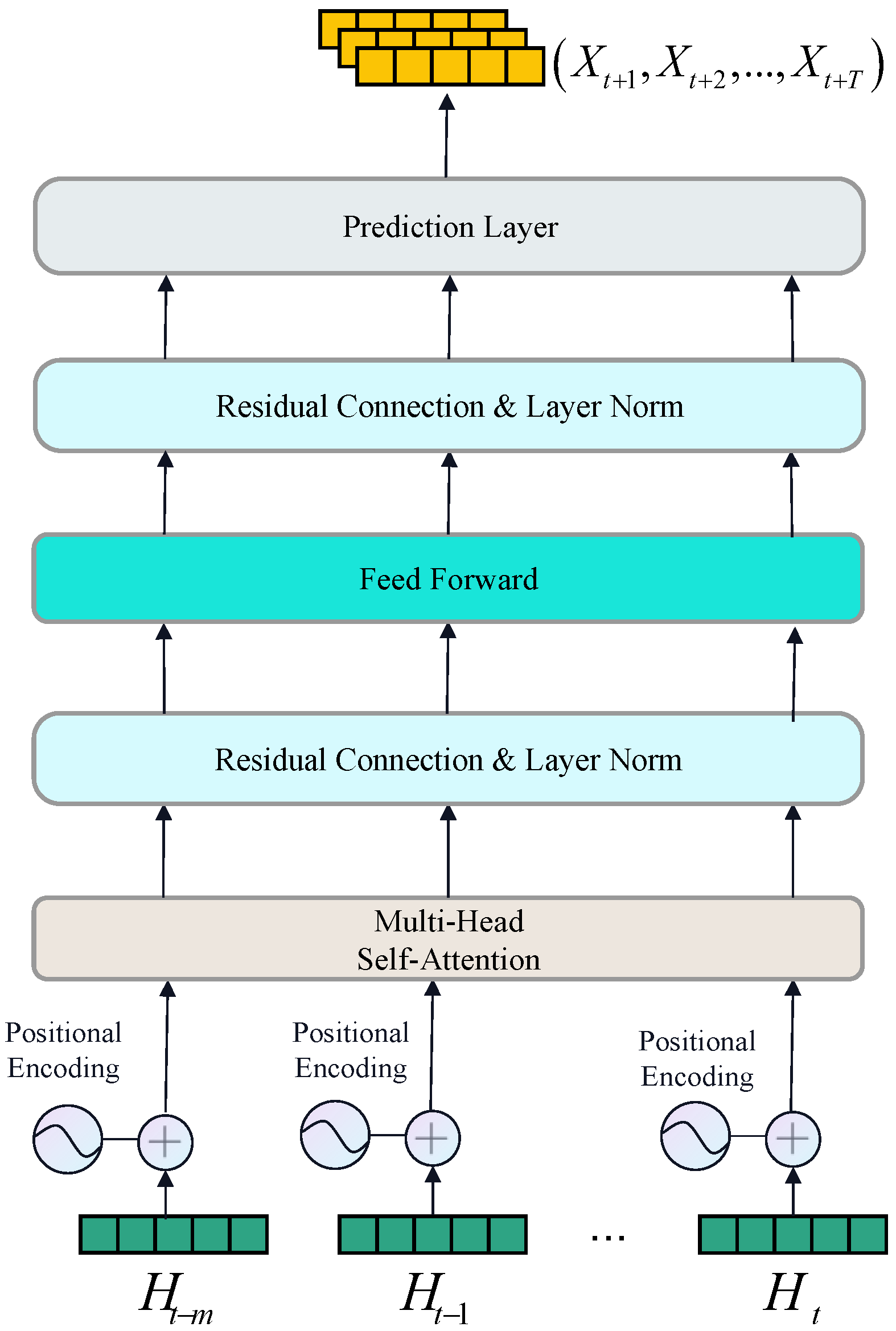

The GRU captures local temporal information sequentially through its recurrent structure. However, it cannot capture long-term dependency well because of the long path between its input and output [46]. In fact, traffic observations may be correlated not only sequentially within a short period [30] but also across long time intervals. Therefore, a transformer layer is proposed to capture global temporal dependency. Because the transformer layer attends to all time positions at once, it can model long sequences and thereby learn long-term temporal dependencies effectively. In addition, the multi-head attention mechanism enables the model to attend to several different subspaces and learn different feature information. The output of the T-GCN module is denoted as the spatial-temporal feature matrix $H$, which is taken as the input of the transformer layer. As illustrated in Figure 5, the transformer layer contains a multi-head self-attention sublayer, two normalization sublayers, a feed-forward sublayer, and a prediction sublayer.

Figure 5.

The transformer layer capturing global temporal dependence.

4.3.1. Positional Encoding

Because attention processes all positions in parallel, it ignores the relative order of the sequence. Therefore, a positional encoding that carries the temporal position information is added to the input.

Define $PE$ as the positional encoding matrix of the T-GCN output $H$; its entries for each position are given by Equation (13):

$$
PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right) \tag{13}
$$

where sin and cos are the trigonometric functions, $pos$ is the position in the input sequence, $d_{model}$ is the feature size of the transformer layer, and $i$ indexes the elements of the feature vector. Define $X_P$ as the spatial-temporal feature matrix containing the positional encoding, as given by Equation (14):

$$
X_P = H + PE \tag{14}
$$

$X_P$ contains the position information of the traffic flow sequence, so attention no longer treats all sequence positions identically.
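The sinusoidal encoding and its addition to the input can be sketched in plain Python as follows, assuming one row per sequence position and the base 10000 of the original Transformer; the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def positional_encoding(seq_len: int, d_model: int) -> Matrix:
    """Sinusoidal encoding: sin on even feature indices, cos on odd ones."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for even in range(0, d_model, 2):  # `even` plays the role of 2i
            angle = pos / (10000 ** (even / d_model))
            pe[pos][even] = math.sin(angle)
            if even + 1 < d_model:
                pe[pos][even + 1] = math.cos(angle)
    return pe


def add_positional_encoding(h: Matrix) -> Matrix:
    """Element-wise sum of the input features and their positional encoding."""
    pe = positional_encoding(len(h), len(h[0]))
    return [[a + b for a, b in zip(rh, rp)] for rh, rp in zip(h, pe)]


pe = positional_encoding(seq_len=4, d_model=6)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Because each feature pair oscillates at a different frequency, every position receives a distinct pattern, which is what lets the otherwise order-blind attention tell time steps apart.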

4.3.2. Multi-Head Self-Attention Mechanism

Scaled dot-product attention is adopted in the multi-head self-attention sublayer. To improve the model’s learning ability, the spatial-temporal feature matrix $X_P$ containing positional encoding is mapped into three new feature spaces, denoted as queries ($Q$), keys ($K$), and values ($V$), as given by Equation (15):

$$
Q = X_P W_Q, \qquad K = X_P W_K, \qquad V = X_P W_V \tag{15}
$$

where $W_Q$, $W_K$, and $W_V$ are the matrices to be learned.

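The self-attention score is defined by Equation (16):

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{16}
$$

where $d_k$ is the dimension of the keys and $\sqrt{d_k}$ is a scaling factor that keeps the training gradients stable.

The temporal information is captured from different subspaces by the multi-head attention mechanism, and the final output is a linear aggregation over all subspaces. Define $X_T$ as the spatial-temporal feature matrix containing global temporal dependencies, obtained by concatenating the outputs of all heads, as shown in Equation (17):

$$
X_T = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W_O, \qquad \mathrm{head}_j = \mathrm{Attention}\left(X_P W_Q^{j}, X_P W_K^{j}, X_P W_V^{j}\right) \tag{17}
$$

where $h$ is the number of heads and $W_O$ is a learnable output projection matrix.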
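The scaled dot-product attention and the head concatenation described above can be sketched in plain Python as below. Matrix layouts (one row per sequence position), the per-head projection tuples, and all helper names are assumptions for illustration. The usage line feeds identical keys, so every query attends uniformly and each output row equals the mean of the value rows.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, one softmax per query row."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_attention(x: Matrix, heads: List[Tuple[Matrix, Matrix, Matrix]],
                         w_o: Matrix) -> Matrix:
    """Project x per head, attend, concatenate the heads, then apply W_O."""
    outs = [scaled_dot_product_attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
            for wq, wk, wv in heads]
    concat = [sum((o[i] for o in outs), []) for i in range(len(x))]
    return matmul(concat, w_o)


# Identical keys give uniform weights, so each output row is the mean of the values.
out = scaled_dot_product_attention(q=[[1.0, 2.0], [0.0, -1.0]],
                                   k=[[1.0, 0.0], [1.0, 0.0]],
                                   v=[[2.0], [4.0]])
print(out)  # [[3.0], [3.0]]
```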

4.3.3. Normalization Layer and Feed Forward Network Layer

Layer normalization is further adopted to stabilize the training of the neural network, and a residual connection lets the features of lower layers interact with those of higher layers [39]. To improve the nonlinear modeling ability of the model, the resulting features are fed into the feed-forward layer. Define $Z$ and $F_{out}$ as the outputs of the layer normalization and the feed-forward layer, respectively, which are obtained by Equations (18) and (19):

$$
Z = \mathrm{LN}(X_P + X_T) \tag{18}
$$

$$
F_{out} = \mathrm{ReLU}(Z W_1 + b_1)\, W_2 + b_2 \tag{19}
$$

where $X_P$ is the position-encoded input, $X_T$ is the output of the multi-head attention, $\mathrm{LN}(\cdot)$ is the layer normalization operation, $W_1$ and $W_2$ are the learnable matrices, and $b_1$ and $b_2$ are the biases.
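A plain-Python sketch of the add-and-normalize and feed-forward sublayers follows. It assumes a ReLU activation inside the feed-forward network, omits the learnable gain and bias of layer normalization for brevity, and applies a second normalization after the feed-forward sublayer to match the two normalization sublayers described here; all names are hypothetical.

```python
import math
from typing import List

Vec = List[float]
Matrix = List[Vec]


def layer_norm(row: Vec, eps: float = 1e-5) -> Vec:
    """Normalize one feature vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]


def vecmat(x: Vec, w: Matrix) -> Vec:
    """Row vector times (in x out) matrix."""
    return [sum(x[k] * w[k][j] for k in range(len(x))) for j in range(len(w[0]))]


def feed_forward(z: Vec, w1: Matrix, b1: Vec, w2: Matrix, b2: Vec) -> Vec:
    """Two linear maps with a ReLU in between."""
    hidden = [max(0.0, v + b) for v, b in zip(vecmat(z, w1), b1)]
    return [v + b for v, b in zip(vecmat(hidden, w2), b2)]


def encoder_sublayers(x_p: Matrix, attn: Matrix, w1: Matrix, b1: Vec,
                      w2: Matrix, b2: Vec) -> Matrix:
    """Add & norm, feed-forward, then a second add & norm, applied row by row."""
    out = []
    for xr, ar in zip(x_p, attn):
        z = layer_norm([a + b for a, b in zip(xr, ar)])  # residual + first normalization
        f = feed_forward(z, w1, b1, w2, b2)
        out.append(layer_norm([a + b for a, b in zip(z, f)]))  # second normalization
    return out


normed = layer_norm([1.0, 2.0, 3.0])
print([round(v, 3) for v in normed])  # [-1.225, 0.0, 1.225]
```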

The structure of the transformer layer is shown in Figure 5. To improve the learning capability of the model, there are two normalization sublayers and one feed forward neural sublayer in this layer.

A fully connected layer is employed as the output layer to generate the final prediction. Define $\hat{Y}$ as the final output of the model, calculated by Equation (20):

$$
\hat{Y} = \mathrm{LN}(Z + F_{out})\, W_o + b_o \tag{20}
$$

where $Z$ and $F_{out}$ are the outputs of Equations (18) and (19), and $W_o$ and $b_o$ are the parameter matrix and the bias, respectively. Through the attention mechanism, the transformer layer attends to different positions in the time series in parallel, so the global temporal dependency can be captured.

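The aim of the model is to minimize the traffic prediction error. The loss function $L$ is defined as Equation (21):

$$
L = \lVert y - \hat{y} \rVert + \lambda L_{reg}, \qquad L_{reg} = \lVert W \rVert_2^2 \tag{21}
$$

where $y$ and $\hat{y}$ represent the ground-truth traffic flow and the predicted value, respectively, $\lambda$ is the penalty coefficient, $L_{reg}$ is the L2 regularization term, and $W$ is the weight parameter matrix.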
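One plausible reading of the loss, sketched in plain Python: a Euclidean prediction error plus an L2 weight penalty scaled by a coefficient `lam`. The exact norm, the flattening of all weights into a single list, and the value of `lam` are assumptions for illustration, not settings reported in the text.

```python
import math
from typing import List


def regularized_loss(y_true: List[float], y_pred: List[float],
                     weights: List[float], lam: float) -> float:
    """Euclidean prediction error plus lam times the squared L2 norm of the weights."""
    error = math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)))
    l2 = sum(w * w for w in weights)
    return error + lam * l2


print(regularized_loss([3.0, 4.0], [0.0, 0.0], weights=[1.0, 2.0], lam=0.1))  # 5.5
```

The penalty term grows with the magnitude of the weights, so a larger `lam` trades a slightly higher fitting error for smaller weights and less overfitting.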

5. Experiments

5.1. Datasets

The four real-world datasets Los-loop, SZ-taxi, METR-LA, and PEMS-BAY are used in this paper to assess the prediction performance of the LGSTGCN model. Because these datasets are widely used in traffic flow prediction, they allow a fair comparison with existing methods. All data were collected by real road sensors and record the speed of vehicles on the traffic roads. The datasets also include the feature matrices and adjacency matrices. Brief descriptions of the four datasets are as follows:

Los-loop. This dataset comprises highway traffic flow from Los Angeles County. The temporal scope spans from 1 March to 7 March 2012 with traffic flow recorded at 5 min intervals. There are 207 road sensors in total. The adjacency matrix is obtained based on the distance between the road sensors.

SZ-taxi. This dataset was collected from Luohu District, Shenzhen, encompassing information from 156 major roads. The traffic vehicle speed is recorded at a 15 min time interval, covering the month from 1 January to 31 January 2015.

METR-LA. This dataset includes traffic information collected at five-minute intervals from 207 loop detectors on Los Angeles highways. The data span from 1 March to 30 June 2012.

PEMS-BAY. This dataset is sourced from the California Transportation Agencies Performance Measurement System and comprises traffic information collected at five-minute intervals with 325 sensors in the Bay Area. The data used for the experiments are from 1 January 2017 to 31 May 2017.

The details of the four datasets are listed in Table 1.

Table 1.

Traffic datasets.

5.2. Baseline Methods and Evaluation Metrics

For the datasets Los-loop and SZ-taxi, we select traditional methods (HA [32] and ARIMA [47]), a machine learning approach (SVR [48]), and deep learning approaches (GRU [27], T-GCN [26], A3T-GCN [42], DCRNN [25]) as the baseline methods.

Several models based on deep learning, including STGCN [19], SLCNN [49], DCRNN [25], Graph WaveNet [50], MRA-BGCN [51], GMAN [52], STGRAT [53], FC-GAGA [54], TSE-SC [55], STGNN [30], and STFGNN [56], are tested on the datasets METR-LA and PEMS-BAY to further verify the performance of the LGSTGCN model.

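Six metrics, namely Root Mean Square Error (RMSE), Mean Absolute Error (MAE), Accuracy, Coefficient of Determination ($R^2$), Explained Variance Score (var), and Mean Absolute Percentage Error (MAPE), are used to assess the prediction performance of each method. The definitions of these metrics are given in Equations (22)–(27):

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2} \tag{22}
$$

$$
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| \tag{23}
$$

$$
\mathrm{Accuracy} = 1 - \frac{\lVert y - \hat{y} \rVert_F}{\lVert y \rVert_F} \tag{24}
$$

$$
R^2 = 1 - \frac{\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^2} \tag{25}
$$

$$
\mathrm{var} = 1 - \frac{\mathrm{Var}\{y - \hat{y}\}}{\mathrm{Var}\{y\}} \tag{26}
$$

$$
\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| \tag{27}
$$

where $n$ is the number of predicted values and $\bar{y}$ is the mean of the ground truth. For RMSE, MAE, and MAPE, which measure prediction error, smaller values indicate better performance. Accuracy and $R^2$ measure prediction precision and the goodness of fit between the predicted and true values, respectively, and var measures the extent to which the model explains the fluctuations in the dataset. For these three metrics, higher values indicate better forecasting performance.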
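The six metrics can be computed as in the following plain-Python sketch over flattened lists of ground-truth and predicted values. It uses the common textbook definitions; skipping zero-valued targets in MAPE and using population variance for var are assumptions of this sketch, and the function name `evaluate` is hypothetical.

```python
import math
import statistics
from typing import Dict, List


def evaluate(y: List[float], y_hat: List[float]) -> Dict[str, float]:
    """RMSE, MAE, Accuracy, R^2, explained variance (var), and MAPE for flat lists."""
    n = len(y)
    diff = [a - b for a, b in zip(y, y_hat)]
    sse = sum(d * d for d in diff)
    mean_y = sum(y) / n
    nonzero = [(d, a) for d, a in zip(diff, y) if a != 0]  # MAPE skips zero targets
    return {
        "RMSE": math.sqrt(sse / n),
        "MAE": sum(abs(d) for d in diff) / n,
        "Accuracy": 1 - math.sqrt(sse) / math.sqrt(sum(a * a for a in y)),
        "R2": 1 - sse / sum((a - mean_y) ** 2 for a in y),  # needs non-constant y
        "var": 1 - statistics.pvariance(diff) / statistics.pvariance(y),
        "MAPE": 100.0 * sum(abs(d / a) for d, a in nonzero) / len(nonzero),
    }


scores = evaluate([2.0, 4.0], [1.0, 5.0])
print(scores["RMSE"], scores["MAE"], scores["MAPE"])  # 1.0 1.0 37.5
```

In this toy case the errors cancel on average, so $R^2$ and var are both 0 even though RMSE is 1, which illustrates why the paper reports several complementary metrics.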

5.3. Experimental Settings

In the experiments, each of the four datasets is partitioned into a training set and a test set at a ratio of 0.8:0.2. The LGSTGCN model is implemented in PyTorch and run on an NVIDIA 1050 GPU. The task is to predict traffic speeds for the next 15, 30, and 60 min. The experimental settings of the LGSTGCN model are as follows:

The number of training epochs is 3000, the learning rate is 0.001, and the number of attention heads is 4. For the Los-loop dataset, the batch size is 64, the number of hidden units is 64, and the feature size of the transformer layer is 32. For the SZ-taxi dataset, these three hyperparameters are set to 64, 96, and 96, respectively. The METR-LA and PEMS-BAY datasets share the same settings: 16 attention heads, 64 hidden units, and a transformer layer feature size of 32. The model is trained with the Adam optimizer, and an early stopping strategy is used.
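The 0.8:0.2 partition and the 15, 30, and 60 min horizons translate into index arithmetic that depends on each dataset's sampling interval (Section 5.1). The sketch below assumes a chronological, unshuffled split, which the text does not state explicitly; the function and constant names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]

# Sampling interval (minutes) of each dataset, as described in Section 5.1.
INTERVAL_MIN = {"Los-loop": 5, "SZ-taxi": 15, "METR-LA": 5, "PEMS-BAY": 5}


def chronological_split(series: Matrix, train_ratio: float = 0.8) -> Tuple[Matrix, Matrix]:
    """Split every node's series at the same time index, keeping temporal order."""
    cut = int(len(series[0]) * train_ratio)
    return [row[:cut] for row in series], [row[cut:] for row in series]


def horizon_steps(minutes: int, dataset: str) -> int:
    """Number of future time steps that corresponds to a forecast horizon in minutes."""
    interval = INTERVAL_MIN[dataset]
    if minutes % interval:
        raise ValueError("horizon is not a multiple of the sampling interval")
    return minutes // interval


train, test = chronological_split([[float(v) for v in range(10)]])
print(len(train[0]), len(test[0]))  # 8 2
print([horizon_steps(m, "Los-loop") for m in (15, 30, 60)])  # [3, 6, 12]
print([horizon_steps(m, "SZ-taxi") for m in (15, 30, 60)])   # [1, 2, 4]
```

Splitting by time rather than at random keeps future observations out of the training set, which matches how a deployed forecaster would be used.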

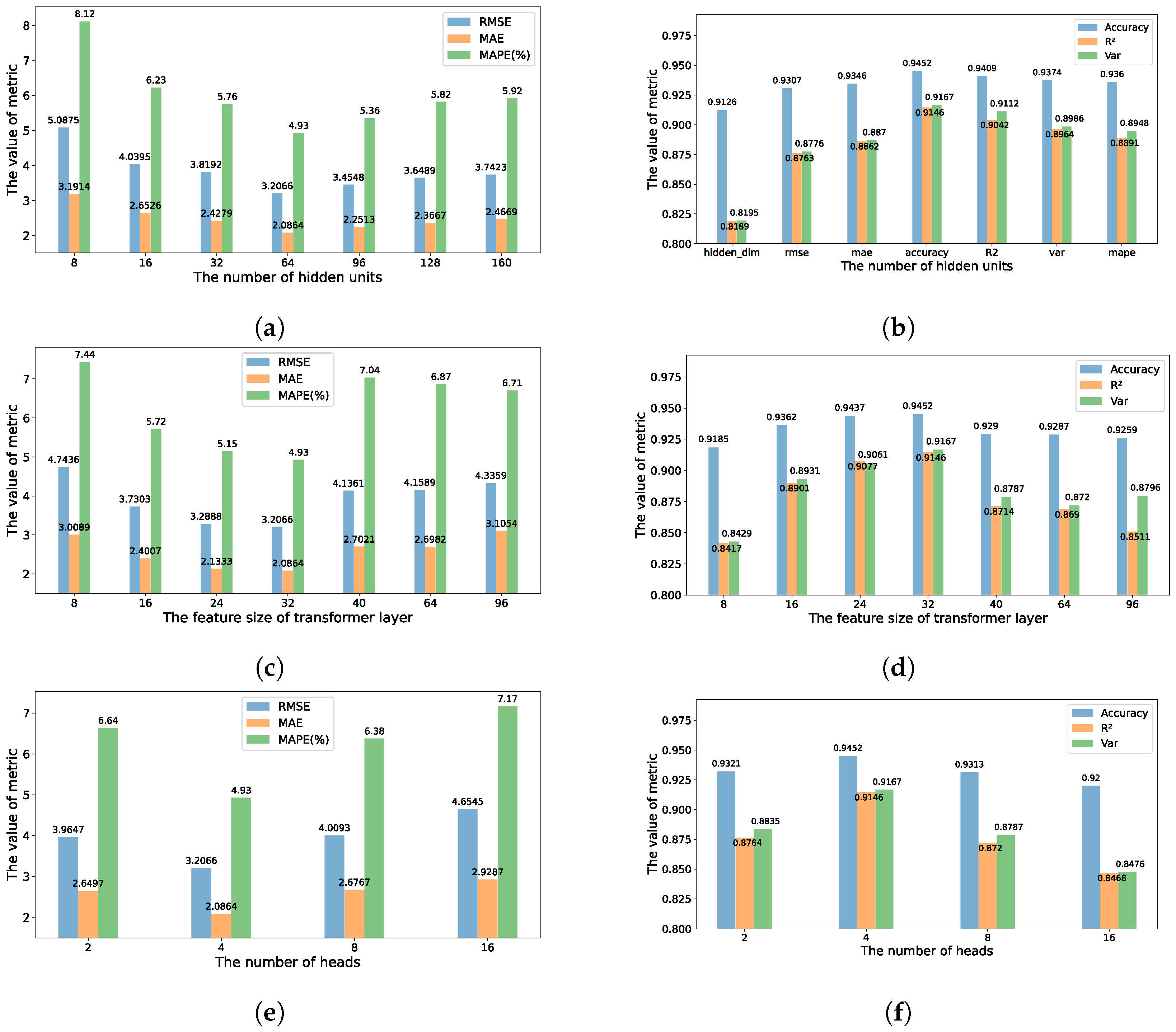

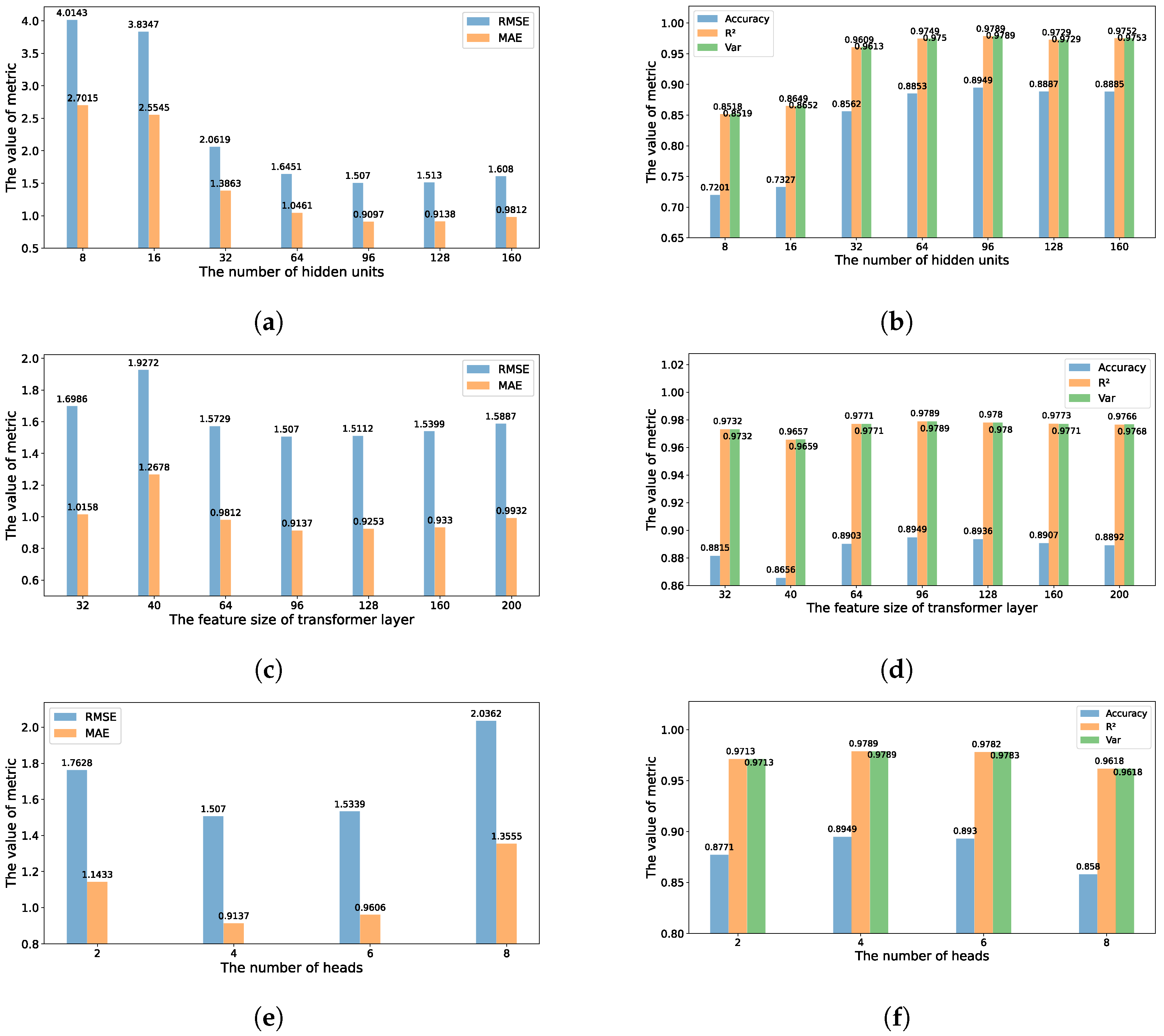

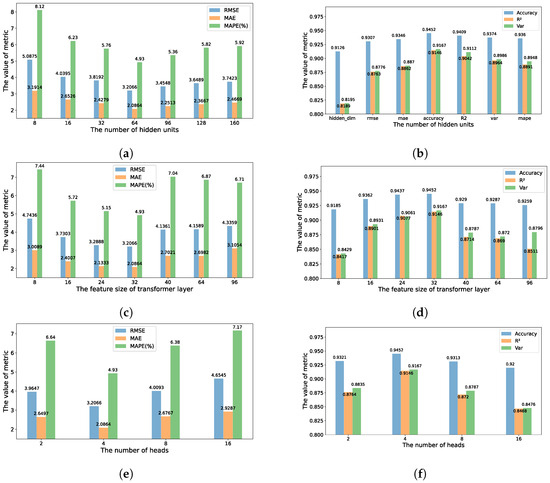

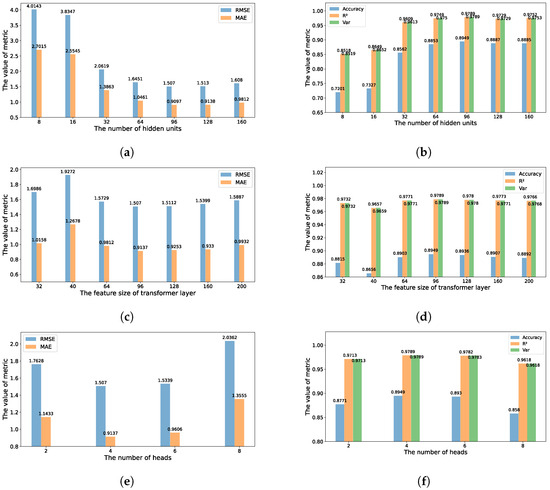

5.4. Hyperparameters Analysis

Three key hyperparameters of the LGSTGCN model, namely the number of hidden units, the feature size of the transformer layer $d_{model}$, and the number of attention heads $h$, are tested on the Los-loop and SZ-taxi datasets to analyze their impact on prediction accuracy and to obtain the optimal settings. Figure 6 and Figure 7 show the prediction performance under different hyperparameter settings on the Los-loop and SZ-taxi datasets, respectively. The horizontal axes represent the hyperparameter values and the vertical axes represent the metric values.

Figure 6.

Evaluation metric values with different hyperparameter settings on the Los-loop dataset. (a) Impacts of different numbers of hidden units on RMSE, MAE, and MAPE. (b) Effects of different numbers of hidden units on Accuracy, $R^2$, and var. (c) Influence of different feature sizes of the transformer layer on RMSE, MAE, and MAPE. (d) Influence of different feature sizes of the transformer layer on Accuracy, $R^2$, and var. (e) Influence of different numbers of heads on RMSE, MAE, and MAPE. (f) Influence of different numbers of heads on Accuracy, $R^2$, and var.

Figure 7.

Evaluation metric values with different hyperparameter settings on the SZ-taxi dataset. (a) Impacts of different numbers of hidden units on RMSE, MAE, and MAPE. (b) Effects of different numbers of hidden units on Accuracy, $R^2$, and var. (c) Influence of different feature sizes of the transformer layer on RMSE, MAE, and MAPE. (d) Influence of different feature sizes of the transformer layer on Accuracy, $R^2$, and var. (e) Influence of different numbers of heads on RMSE, MAE, and MAPE. (f) Influence of different numbers of heads on Accuracy, $R^2$, and var.

- The number of hidden units. The number of hidden units in the GRU is a crucial hyperparameter that affects the model’s susceptibility to overfitting. To examine its impact on prediction performance, the number of hidden units was varied over [8, 16, 32, 64, 96, 128, 160]. Figure 6a,b and Figure 7a,b show the evaluation metrics for different numbers of hidden units on the Los-loop and SZ-taxi datasets, respectively. The model performs best with 64 or 96 hidden units on the two datasets.

- The feature size of the transformer layer. The feature size is the dimensionality of the feature representation in the transformer layer. To analyze how the amount of feature information in the transformer layer affects prediction performance, the feature size is set to values in [8, 16, 24, 32, 40, 64, 96] for the Los-loop dataset and [32, 40, 64, 96, 128, 160, 200] for the SZ-taxi dataset. Figure 6c,d and Figure 7c,d show how the metrics vary with the feature size on the two datasets, respectively. The best prediction performance is obtained with a feature size of 32 on the Los-loop dataset and 96 on the SZ-taxi dataset.

- The number of heads. The number of heads is also the number of subspaces in multi-head attention. To analyze its effect on the model’s performance, it is set to 2, 4, 8, and 16. Figure 6e,f and Figure 7e,f show the metric values for different numbers of heads on the two datasets. The best number of attention heads is four.
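As a rough illustration of the statement that the number of heads equals the number of attention subspaces, the sketch below assumes the standard multi-head design of Vaswani et al., in which a feature vector of size d is split into h equal subspaces of size d/h. The helper names `head_dim` and `split_heads` are hypothetical and are not taken from the LGSTGCN code.

```python
from typing import List


def head_dim(feature_size: int, num_heads: int) -> int:
    """Size of each attention subspace when features are split evenly."""
    if feature_size % num_heads != 0:
        raise ValueError(
            f"feature size {feature_size} is not divisible by {num_heads} heads"
        )
    return feature_size // num_heads


def split_heads(features: List[float], num_heads: int) -> List[List[float]]:
    """Split one feature vector into `num_heads` contiguous subspaces."""
    d = head_dim(len(features), num_heads)
    return [features[h * d:(h + 1) * d] for h in range(num_heads)]


print(head_dim(32, 4))  # 8: best Los-loop setting (feature size 32, 4 heads)
print(head_dim(96, 4))  # 24: best SZ-taxi setting (feature size 96, 4 heads)
print(split_heads([0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0], 4))
# [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
```

The divisibility check mirrors a common constraint in practice; for example, PyTorch’s `nn.MultiheadAttention` requires `embed_dim` to be divisible by `num_heads`.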

The forecasting performance of the LGSTGCN model improves as the key hyperparameter values increase, but only up to a point: excessively large values may cause the model to overfit. Appropriate hyperparameter settings are therefore crucial to prediction performance, and the optimal values should be tuned separately for each dataset.
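The selection procedure in this subsection can be sketched as a one-at-a-time sweep: each hyperparameter is varied over its grid while the others stay at a base setting, and the value with the lowest validation error is kept. This is an assumption about the procedure, which the text does not spell out. The `evaluate` argument stands in for training and validating the model, and `toy_validation_error` is a hypothetical error surface whose minimum is placed at the base setting by construction; it does not reproduce any reported result.

```python
import math
from typing import Callable, Dict, List


def one_at_a_time_sweep(
    base_config: Dict[str, int],
    grid: Dict[str, List[int]],
    evaluate: Callable[[Dict[str, int]], float],
) -> Dict[str, int]:
    """For each hyperparameter, return the grid value with the lowest error
    while all other hyperparameters are held at `base_config`."""
    best = {}
    for name, values in grid.items():
        errors = {}
        for value in values:
            config = dict(base_config)
            config[name] = value
            errors[value] = evaluate(config)
        best[name] = min(errors, key=errors.get)
    return best


# Grids tested on the Los-loop dataset in this subsection.
LOS_LOOP_GRID = {
    "hidden_units": [8, 16, 32, 64, 96, 128, 160],
    "feature_size": [8, 16, 24, 32, 40, 64, 96],
    "num_heads": [2, 4, 8, 16],
}
BASE = {"hidden_units": 64, "feature_size": 32, "num_heads": 4}


def toy_validation_error(config: Dict[str, int]) -> float:
    # Hypothetical stand-in for "train, then measure validation RMSE":
    # the error grows as a value moves away from BASE on a log2 scale.
    return sum(abs(math.log2(config[k] / BASE[k])) for k in BASE)


print(one_at_a_time_sweep(BASE, LOS_LOOP_GRID, toy_validation_error))
# {'hidden_units': 64, 'feature_size': 32, 'num_heads': 4}
```

In a real experiment each call to `evaluate` would be a full training run; a joint grid search is the usual alternative when hyperparameters interact strongly.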

5.5. Experimental Results

Table 2 and Table 3 present the forecasting results of the LGSTGCN model and the baseline models at horizons of 15, 30, and 60 min on the Los-loop and SZ-taxi datasets. The HA and ARIMA methods predict traffic flow under the assumption of a stationary series, which limits their ability to model nonlinear traffic data. The machine learning method SVR uses a linear kernel function to learn statistical patterns from data automatically and therefore predicts better than these traditional methods. Deep learning approaches perform better still, and LGSTGCN achieves the best performance owing to the strong generalizability and feature extraction capability of neural networks. For 15 min prediction, the RMSEs of LGSTGCN are 52.14% and 63.81% lower than those of SVR on the Los-loop and SZ-taxi datasets, respectively, and its MAEs are 40.98% and 66.23% lower. Compared with GRU, LGSTGCN achieves a lower RMSE and a higher accuracy in 15 min prediction on both datasets, since it accounts for the spatial dependence of traffic roads as well as their temporal dependence. GNN-based deep learning models consider the spatial dependence of traffic roads and in turn achieve better prediction performance; for example, T-GCN and DCRNN combine a GNN with an RNN to extract local spatial-temporal dependence. However, LGSTGCN outperforms T-GCN and DCRNN owing to its capacity to capture both global and local spatial-temporal dependence. Taking the 30 min prediction results in Table 2 and Table 3 as an example, compared with T-GCN, LGSTGCN reduces RMSE and MAE by 31.36% and 50.92% on the Los-loop dataset and by 30.90% and 53.73% on the SZ-taxi dataset. Compared with DCRNN, it reduces RMSE and MAE by 34.12% and 51.23% on the Los-loop dataset and by 19.54% and 50.81% on the SZ-taxi dataset.
Although the A3T-GCN model uses an attention mechanism to adjust the importance of different time steps and thereby capture global temporal dependence, this attention mechanism treats all time steps symmetrically, without regard to their order, and therefore cannot identify the sequential characteristics of traffic flow data. As shown in Table 2 and Table 3, compared with A3T-GCN, LGSTGCN reduces RMSE and MAE in 60 min prediction by 24.25% and 21.96% on the Los-loop dataset and by 6.89% and 5.51% on the SZ-taxi dataset, while improving accuracy by 3.41% and 1.83%, respectively. These results indicate that capturing global spatial-temporal features enhances the spatial-temporal forecasting capability of the model.
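For reference, percentage reductions like those quoted above follow the usual relative-change formula, (baseline - LGSTGCN) / baseline × 100. The sketch below computes it alongside RMSE, MAE, and Accuracy. The Accuracy formula, 1 - ‖Y - Ŷ‖_F / ‖Y‖_F, is the definition used by T-GCN and A3T-GCN and is assumed, not confirmed by this section, to match the one used here. Arrays are flattened to plain lists for simplicity, and the example values are illustrative, not taken from Tables 2 and 3.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # 1 - ||Y - Y_hat||_F / ||Y||_F, computed on flattened values.
    error_norm = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)))
    true_norm = math.sqrt(sum(t ** 2 for t in y_true))
    return 1.0 - error_norm / true_norm


def relative_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is lower than `baseline`."""
    return (baseline - ours) / baseline * 100.0


y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 5.0]
print(rmse(y_true, y_pred))          # sqrt(4/3), about 1.1547
print(mae(y_true, y_pred))           # 2/3, about 0.6667
print(accuracy(y_true, y_pred))      # 1 - 2/sqrt(14), about 0.4655
print(relative_reduction(8.0, 6.0))  # 25.0
```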

Table 2.

The prediction performance of different models on the Los-loop dataset.

Table 3.

The prediction performance of different models on the SZ-taxi dataset.

MAPE values are not reported for any model on the SZ-taxi dataset because the dataset contains many zero values (missing records) and some noisy readings, which make MAPE undefined or unstable. The comparison on the other evaluation metrics is nevertheless sufficient to analyze the performance of the different models.
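The problem with zeros follows directly from the MAPE formula, which divides each absolute error by the ground-truth value. The sketch below contrasts a plain MAPE, which fails on a zero target, with a masked variant that skips zero targets. Masking is a common workaround in traffic forecasting code but is not what this paper does (it omits MAPE on SZ-taxi), and a masked MAPE remains sensitive to near-zero noisy readings. The function names and data are illustrative.

```python
from typing import Sequence


def plain_mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # Divides by each ground-truth value, so a single zero raises ZeroDivisionError.
    return 100.0 * sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def masked_mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MAPE (%) over entries whose ground truth is non-zero; NaN if none remain."""
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    if not pairs:
        return float("nan")
    return 100.0 * sum(abs(t - p) / abs(t) for t, p in pairs) / len(pairs)


speeds = [0.0, 2.0, 4.0]     # the zero mimics a missing record stored as 0
predicted = [1.0, 1.0, 5.0]

try:
    plain_mape(speeds, predicted)
except ZeroDivisionError:
    print("plain MAPE is undefined with zero targets")

print(masked_mape(speeds, predicted))  # 37.5
```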

Table 4 shows the results of the LGSTGCN model and the baseline methods on the METR-LA and PEMS-BAY datasets. STGCN captures traffic features by stacking multiple convolutional layers, but it focuses on the features of neighboring roads and ignores global spatial dependence. GMAN captures global spatial-temporal correlation with a multi-head attention mechanism; however, because its attention always spans all time steps, it cannot capture important local spatial-temporal dependence. The LGSTGCN model captures both local and global spatial-temporal dependence. Compared with STGCN and GMAN, LGSTGCN reduces the RMSE of 15 min prediction on the METR-LA dataset by 42.68% and 39.96%, respectively. STFGNN can capture global information by introducing dilated convolution. However, because the dilation is limited, the global information of two regions that are far apart may still not be fully captured. LGSTGCN captures global dependence more completely by computing region correlations over all nodes. Compared with STFGNN, LGSTGCN reduces the RMSE of 30 min prediction on the PEMS-BAY dataset by about 28.8%.
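The argument about dilated convolution can be made concrete with the receptive-field formula for stacked one-dimensional dilated convolutions with stride 1: a stack with kernel size k and dilations d_1, ..., d_L covers 1 + Σ (k - 1) d_i positions, so any fixed stack has a bounded reach, whereas one attention layer computed over all N nodes relates every pair directly. The sketch below is a generic illustration under these assumptions, not a reproduction of STFGNN’s actual configuration; the doubling dilation schedule is an assumed example.

```python
from typing import Sequence


def dilated_receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Receptive field of stacked 1-D dilated convolutions with stride 1."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def layers_to_cover(span: int, kernel_size: int = 2) -> int:
    """Layers with dilations 1, 2, 4, ... needed for the receptive field to
    reach `span` positions."""
    dilations = []
    while dilated_receptive_field(kernel_size, dilations) < span:
        dilations.append(2 ** len(dilations))
    return len(dilations)


print(dilated_receptive_field(2, [1, 2, 4]))  # 8
print(layers_to_cover(200))                    # 8, since 2**7 = 128 < 200 <= 256
# By contrast, a single attention layer over N nodes relates all N * N node pairs.
```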

Table 4.

The prediction performance of different models on the METR-LA and PEMS-BAY datasets.

LGSTGCN performs strongly in shorter-term prediction compared with recent spatial-temporal traffic prediction models (the only exception is the MAE of the 30 min prediction on the PEMS-BAY dataset, which is slightly larger than the best baseline). For example, in 15 min prediction on the METR-LA dataset, LGSTGCN reduces RMSE, MAE, and MAPE by 30.44%, 28.02%, and 44.55% relative to the best baseline results, and in 30 min prediction by 14.1%, 9.89%, and 1.74%, respectively.

The forecasting performance of LGSTGCN is weaker in 60 min prediction on both datasets. The main reason is that the temporal and spatial correlations of road traffic flow become more complex as the prediction horizon increases. Since LGSTGCN captures both local and global features in time and space, global noise in long-term prediction leads to a larger overall error. In 15 min short-term prediction there is less global noise, and the comprehensive feature-capturing capacity of LGSTGCN gives it better prediction performance than all baseline models.

5.6. Ablation Study

Ablation experiments are conducted on the Los-loop and SZ-taxi datasets to evaluate the effectiveness of the key components of LGSTGCN. Five variants of the LGSTGCN model are designed as follows:

- NO_GS: The graph attention residual network layer, which captures global spatial dependency, is removed from LGSTGCN.

- NO_LST: The T-GCN module, which captures real-time local spatial-temporal dependency, is removed.

- NO_GT: The transformer layer, which captures global temporal dependency, is removed.

- NO_PE: The positional encoding of the transformer layer, which recognizes the positional characteristics of the sequence, is removed.

- NO_NC: The order of the graph attention residual network layer and the T-GCN layer is exchanged. As a result, the graph convolution aggregates neighborhood information only from the static initial neighborhood features and ignores the dynamic neighborhood features.
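To make the NO_NC description concrete: T-GCN’s graph convolution aggregates node features through the symmetrically normalized adjacency Â = D̃^(-1/2) (A + I) D̃^(-1/2), where D̃ is the degree matrix of A + I. The weights in Â are fixed by the road graph, so the aggregated output depends entirely on the features fed into it; in NO_NC those are the initial features rather than features already refined by the graph attention residual layer. The minimal sketch below shows one propagation step ÂX and omits the learnable weight matrix and activation; the toy graph and feature values are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """D^-1/2 (A + I) D^-1/2, the GCN propagation matrix used by T-GCN."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    degree = [sum(row) for row in a_tilde]
    return [
        [a_tilde[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]


def propagate(a_hat: Matrix, features: Matrix) -> Matrix:
    """One aggregation step A_hat @ X (weights and activation omitted)."""
    n, f = len(features), len(features[0])
    return [
        [sum(a_hat[i][k] * features[k][j] for k in range(n)) for j in range(f)]
        for i in range(n)
    ]


# Three road segments in a line, 0 - 1 - 2 (hypothetical toy graph).
adj = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
a_hat = normalized_adjacency(adj)
print(a_hat[0][2])  # 0.0: non-adjacent segments exchange nothing in one step
print(propagate(normalized_adjacency([[0.0, 1.0], [1.0, 0.0]]), [[2.0], [4.0]]))
# [[3.0], [3.0]]
```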

Table 5 and Table 6 compare LGSTGCN with its five variants. The conclusions are as follows:

Table 5.

The results of ablation study on the Los-loop dataset.

Table 6.

The results of ablation study on the SZ-taxi dataset.

- Cross-regional spatial dependency is effective. The LGSTGCN model has a smaller prediction error than the NO_GS variant on both datasets, indicating that modeling cross-regional spatial dependence improves prediction performance.

- Real-time local spatial-temporal dependency is significant. As shown in Table 5, the forecasting performance of NO_LST on the Los-loop dataset is worse than that of LGSTGCN, which means that the lack of local spatial-temporal information degrades prediction performance. On the SZ-taxi dataset, LGSTGCN performs best in both 15 min and 30 min prediction, but NO_LST outperforms LGSTGCN in 60 min prediction. This may be because, on the one hand, the correlations in the traffic flow data weaken as the prediction horizon increases and, on the other hand, erroneous information at the current time step may be learned from the consecutive missing values and noisy readings in the dataset.

- Global temporal dependency is necessary. The forecasting performance of NO_GT degrades significantly on both datasets, which shows that the global temporal dependence module of LGSTGCN greatly improves forecasting performance.

- Positional information is effective. Without positional encoding, the NO_PE variant cannot identify the order of the elements in a sequence when processing global temporal information. Thus, it is necessary to consider sequence position information.

- Dynamic neighborhood correlation is important. The NO_NC variant aggregates information from neighborhood nodes based only on static spatial distance and cannot capture dynamic features, so its prediction results are poor. This indicates that it is important to take the dynamic influence of neighborhood nodes into account.
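The role of positional encoding can be checked on a toy example. Self-attention without positional information is permutation-equivariant: reordering the input time steps merely reorders the outputs, so the layer cannot tell which step came first. Adding a positional encoding breaks this symmetry. The sketch assumes the sinusoidal encoding of Vaswani et al. and a single head with identity query, key, and value projections; the text does not state which positional encoding LGSTGCN uses, and the input values are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def sinusoidal_pe(seq_len: int, d_model: int) -> Matrix:
    """Sinusoidal positional encoding (Vaswani et al., 2017)."""
    return [
        [
            math.sin(pos / 10000 ** (2 * (i // 2) / d_model)) if i % 2 == 0
            else math.cos(pos / 10000 ** (2 * (i // 2) / d_model))
            for i in range(d_model)
        ]
        for pos in range(seq_len)
    ]


def self_attention(x: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with Q = K = V = x."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, x)) / total for j in range(d)])
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def allclose(a: Matrix, b: Matrix, tol: float = 1e-9) -> bool:
    return all(abs(u - v) <= tol for ra, rb in zip(a, b) for u, v in zip(ra, rb))


x = [[0.1, 0.5, -0.2, 0.3], [0.4, -0.1, 0.2, 0.0], [-0.3, 0.2, 0.1, 0.6]]
perm = [2, 0, 1]
x_perm = [x[p] for p in perm]

# No positional encoding: permuting the inputs only permutes the outputs.
out, out_perm = self_attention(x), self_attention(x_perm)
print(allclose(out_perm, [out[p] for p in perm]))  # True

# With positional encoding added, the outputs now depend on the order.
pe = sinusoidal_pe(len(x), len(x[0]))
out_pe, out_perm_pe = self_attention(add(x, pe)), self_attention(add(x_perm, pe))
print(allclose(out_perm_pe, [out_pe[p] for p in perm]))  # False
```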

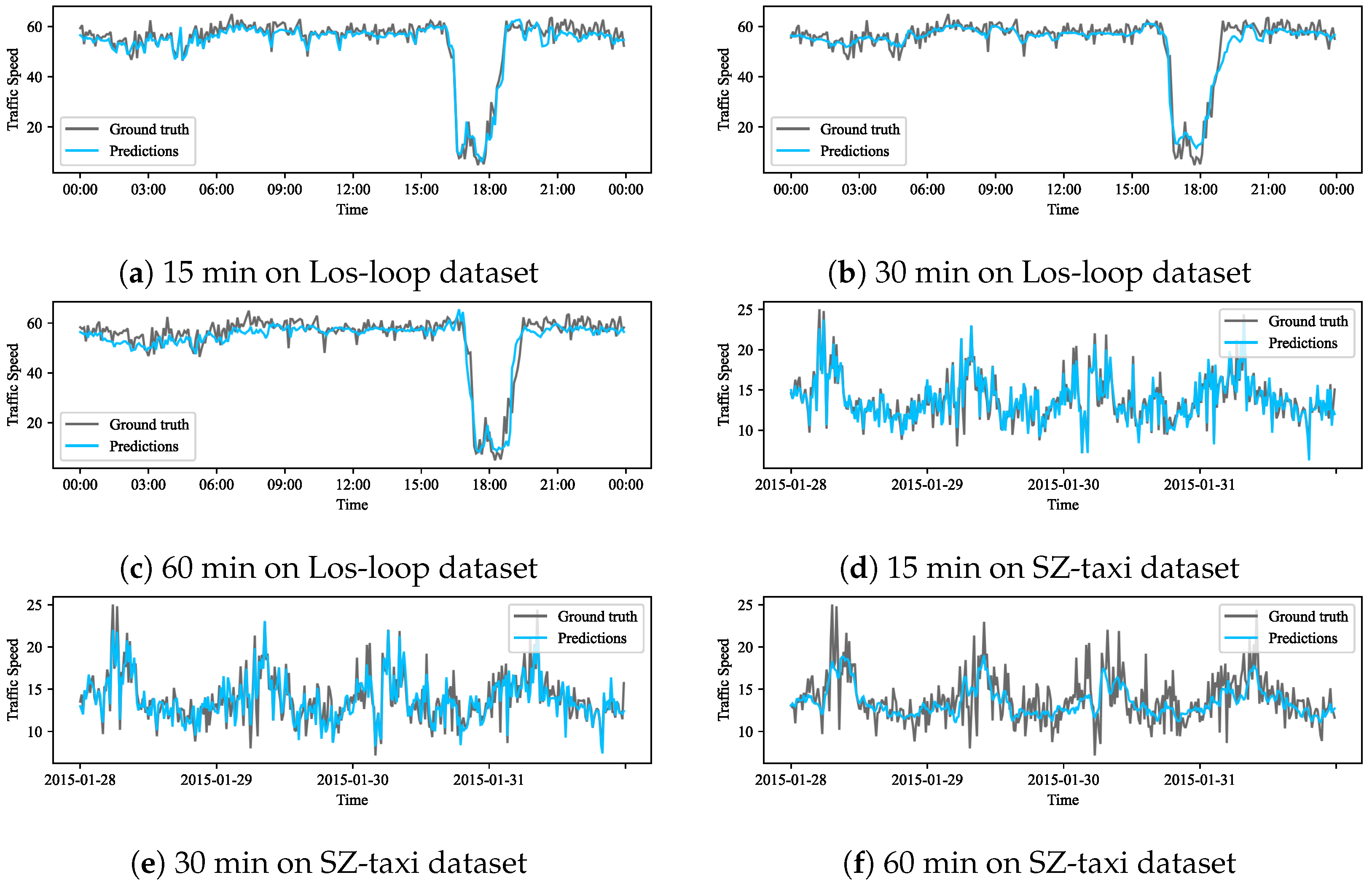

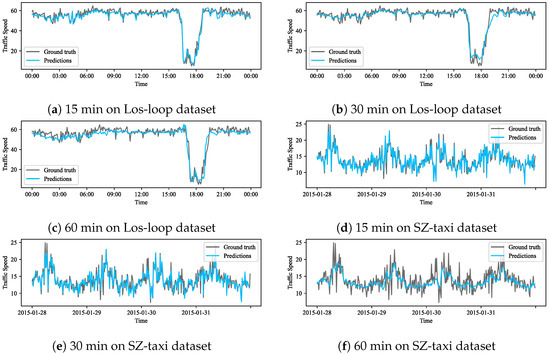

5.7. Analysis of Visualization

To better illustrate the LGSTGCN model, we choose two roads from each dataset and visualize the prediction results at various horizons. We use the full-day data of 7 March 2012 from the Los-loop dataset and the traffic data from 28 January 2015 to 31 January 2015 from the SZ-taxi dataset. The visualizations for the 15 min, 30 min, and 60 min horizons are presented in Figure 8. The LGSTGCN model predicts nonlinear traffic speed well across the different horizons. Notably, the 15 min predictions exhibit non-smooth patterns, indicating that the model accurately captures short-term traffic variations. As the prediction horizon extends, the predictions gradually become smoother while still following the trend of the actual traffic. Furthermore, the visualization results on the Los-loop dataset show that the model adapts to sudden drops in traffic speed, which makes it valuable for predicting traffic congestion.

Figure 8.

Visualization results with different prediction horizons on the Los-loop and SZ-taxi datasets.

6. Conclusions

This paper presents a novel framework for traffic prediction called the Local-Global Spatial-Temporal Graph Convolutional Network (LGSTGCN). In the model, a graph attention residual network layer is proposed to capture global spatial dependency, a T-GCN module is used to extract local spatial-temporal dependency adaptively, and a transformer layer is introduced to capture the global temporal features of all traffic road nodes. The LGSTGCN model can therefore extract local and global traffic flow information in both time and space. The experimental results on all four datasets show that LGSTGCN outperforms the baseline methods in most settings, especially in short-term prediction. It can not only adapt to short-term changes in traffic speed but also capture long-term temporal dependence. Furthermore, it maintains cross-regional global spatial dependence while capturing real-time local spatial information. The ablation study shows that both local and global spatial-temporal dependence are necessary for traffic prediction, and the hyperparameter experiments show that different datasets require different hyperparameter settings to achieve optimal performance.

Future research will focus on traffic prediction modeling that incorporates external information, such as weather and holidays. These external factors may allow the model to learn more realistic and richer traffic features. Developing prediction methods that further improve prediction performance also remains an important challenge.

Author Contributions

Conceptualization, X.Z. and Z.C.; methodology, X.Z. and Z.C.; software, F.Y.; validation, X.Z., Z.C. and F.Y.; formal analysis, X.Z.; investigation, Z.C. and F.Y.; resources, Z.C.; data curation, F.Y.; writing—original draft preparation, X.Z. and Z.C.; writing—review and editing, X.Z. and S.W.; visualization, Z.C.; supervision, X.Z. and S.W.; project administration, X.Z. and S.W.; funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under Grants 62376089 and 62202147 and in part by the Hubei Provincial Science and Technology Plan Project under Grant 2023BCB04100.

Data Availability Statement

Los-loop, SZ-taxi, METR-LA, and PEMS-BAY datasets can be obtained at https://github.com/lrhan321/LGSTGCN-2023.git (accessed on 29 December 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kaffash, S.; Nguyen, A.T.; Zhu, J. Big data algorithms and applications in intelligent transportation system: A review and bibliometric analysis. Int. J. Prod. Econ. 2021, 231, 107868.

- Shit, R.C. Crowd intelligence for sustainable futuristic intelligent transportation system: A review. IET Intel. Transp. Syst. 2020, 14, 480–494.

- Kong, X.; Wang, K.; Hou, M.; Xia, F.; Karmakar, G.; Li, J. Exploring Human Mobility for Multi-Pattern Passenger Prediction: A Graph Learning Framework. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16148–16160.

- Lv, Z.; Lou, R.; Singh, A.K. AI Empowered Communication Systems for Intelligent Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4579–4587.

- Hamed, M.M.; Al-Masaeid, H.R.; Said, Z.M.B. Short-Term Prediction of Traffic Volume in Urban Arterials. J. Transp. Eng. 1995, 121, 249–254.

- Xiaoyu, H.; Yisheng, W.; Siyu, H. Short-term Traffic Flow Forecasting based on Two-tier K-nearest Neighbor Algorithm. Proc. Soc. Behav. Sci. 2013, 96, 2529–2536.

- Wu, C.H.; Ho, J.M.; Lee, D. Travel-time prediction with support vector regression. IEEE Trans. Intell. Transp. Syst. 2004, 5, 276–281.

- Yao, H.; Tang, X.; Wei, H.; Zheng, G.; Li, Z. Revisiting Spatial-Temporal Similarity: A Deep Learning Framework for Traffic Prediction. Proc. AAAI Conf. Artif. Intell. 2019, 33, 5668–5675.

- Chen, C.; Liu, Z.; Wan, S.; Luan, J.; Pei, Q. Traffic Flow Prediction Based on Deep Learning in Internet of Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3776–3789.

- Gu, Y.; Lu, W.; Xu, X.; Qin, L.; Shao, Z.; Zhang, H. An Improved Bayesian Combination Model for Short-Term Traffic Prediction With Deep Learning. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1332–1342.

- Apostolopoulos, I.D.; Mpesiana, T.A. COVID-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020, 43, 635–640.

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual Denoising Pre-training for Neural Machine Translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742.

- Zhang, Y.; Wang, S.; Chen, B.; Cao, J.; Huang, Z. TrafficGAN: Network-Scale Deep Traffic Prediction With Generative Adversarial Nets. IEEE Trans. Intell. Transp. Syst. 2021, 22, 219–230.

- Liu, Y.; Yu, J.J.Q.; Kang, J.; Niyato, D.; Zhang, S. Privacy-Preserving Traffic Flow Prediction: A Federated Learning Approach. IEEE Internet Things J. 2020, 7, 7751–7763.

- Zhang, K.; Liu, Z.; Zheng, L. Short-Term Prediction of Passenger Demand in Multi-Zone Level: Temporal Convolutional Neural Network With Multi-Task Learning. IEEE Trans. Intell. Transp. Syst. 2020, 21, 1480–1490.

- Cui, Z.; Ke, R.; Pu, Z.; Wang, Y. Stacked bidirectional and unidirectional LSTM recurrent neural network for forecasting network-wide traffic state with missing values. Transp. Res. Part C Emerg. Technol. 2020, 118, 102674.

- Liu, C.; Xiao, Z.; Wang, D.; Wang, L.; Jiang, H.; Chen, H.; Yu, J. Exploiting Spatiotemporal Correlations of Arrive-Stay-Leave Behaviors for Private Car Flow Prediction. IEEE Trans. Netw. Sci. Eng. 2022, 9, 834–847.

- Guo, S.; Lin, Y.; Wan, H.; Li, X.; Cong, G. Learning Dynamics and Heterogeneity of Spatial-Temporal Graph Data for Traffic Forecasting. IEEE Trans. Knowl. Data Eng. 2022, 34, 5415–5428.

- Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv 2017, arXiv:1709.04875.

- Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Chang, X.; Zhang, C. Connecting the Dots: Multivariate Time Series Forecasting with Graph Neural Networks. In Proceedings of the 26th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual, 6–10 July 2020; pp. 753–763.

- Zhang, Z.; Li, M.; Lin, X.; Wang, Y.; He, F. Multistep speed prediction on traffic networks: A graph convolutional sequence-to-sequence learning approach with attention mechanism. arXiv 2018, arXiv:1810.10237.

- Geng, X.; Li, Y.; Wang, L.; Zhang, L.; Yang, Q.; Ye, J.; Liu, Y. Spatiotemporal Multi-Graph Convolution Network for Ride-Hailing Demand Forecasting. Proc. AAAI Conf. Artif. Intell. 2019, 33, 3656–3663.

- Guo, K.; Hu, Y.; Qian, Z.; Sun, Y.; Gao, J.; Yin, B. Dynamic Graph Convolution Network for Traffic Forecasting Based on Latent Network of Laplace Matrix Estimation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 1009–1018.

- Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting. Proc. AAAI Conf. Artif. Intell. 2019, 33, 922–929.

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In Proceedings of the International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858.

- Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259.

- Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; Li, Z. Deep Multi-View Spatial-Temporal Network for Taxi Demand Prediction. Proc. AAAI Conf. Artif. Intell. 2018, 32, 11836.

- Zhang, X.; Huang, C.; Xu, Y.; Xia, L.; Dai, P.; Bo, L.; Zhang, J.; Zheng, Y. Traffic Flow Forecasting with Spatial-Temporal Graph Diffusion Network. Proc. AAAI Conf. Artif. Intell. 2021, 35, 15008–15015.

- Wang, X.; Ma, Y.; Wang, Y.; Jin, W.; Wang, X.; Tang, J.; Jia, C.; Yu, J. Traffic Flow Prediction via Spatial Temporal Graph Neural Network. In Proceedings of the Web Conference, New York, NY, USA, 18 May 2020; pp. 1082–1092.

- Fu, R.; Zhang, Z.; Li, L. Using LSTM and GRU neural network methods for traffic flow prediction. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 324–328.

- Wei, G. A Summary of Traffic Flow Forecasting Methods. J. Highw. Transp. Res. Dev. 2004, 21, 82–85.

- Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C Emerg. Technol. 2015, 54, 187–197.

- Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; Yi, X.; Li, T. Predicting citywide crowd flows using deep spatio-temporal residual networks. Artif. Intell. 2018, 259, 147–166.

- Wang, Y.; Zheng, J.; Du, Y.; Huang, C.; Li, P. Traffic-GGNN: Predicting Traffic Flow via Attentional Spatial-Temporal Gated Graph Neural Networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18423–18432.

- Cui, Z.; Henrickson, K.; Ke, R.; Wang, Y. Traffic Graph Convolutional Recurrent Neural Network: A Deep Learning Framework for Network-Scale Traffic Learning and Forecasting. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4883–4894.

- Liu, L.; Chen, J.; Wu, H.; Zhen, J.; Li, G.; Lin, L. Physical-Virtual Collaboration Modeling for Intra- and Inter-Station Metro Ridership Prediction. IEEE Trans. Intell. Transp. Syst. 2022, 23, 3377–3391.

- Zhao, J.; Chen, C.; Liao, C.; Huang, H.; Ma, J.; Pu, H.; Luo, J.; Zhu, T.; Wang, S. 2F-TP: Learning Flexible Spatiotemporal Dependency for Flexible Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2022, 24, 15379–15391.

- Zhang, X.; Xu, Y.; Shao, Y. Forecasting traffic flow with spatial–temporal convolutional graph attention networks. Neural. Comput. Appl. 2022, 34, 15457–15479.

- Chen, G.; Gu, T.; Lu, J.; Bao, J.A.; Zhou, J. Person Re-Identification via Attention Pyramid. IEEE Trans. Image Process. 2021, 30, 7663–7676.

- Li, J.; Wei, P.; Zheng, N. Nesting spatiotemporal attention networks for action recognition. Neurocomputing 2021, 459, 338–348.

- Bai, J.; Zhu, J.; Song, Y.; Zhao, L.; Hou, Z.; Du, R.; Li, H. A3T-GCN: Attention Temporal Graph Convolutional Network for Traffic Forecasting. ISPRS Int. J. Geoinf. 2021, 10, 485.

- Ye, X.; Fang, S.; Sun, F.; Zhang, C.; Xiang, S. Meta Graph Transformer: A Novel Framework for Spatial–Temporal Traffic Prediction. Neurocomputing 2022, 491, 544–563.

- Xu, M.; Dai, W.; Liu, C.; Gao, X.; Lin, W.; Qi, G.J.; Xiong, H. Spatial-temporal transformer networks for traffic flow forecasting. arXiv 2020, arXiv:2001.02908.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2017, arXiv:1710.10903.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. arXiv 2017, arXiv:1706.03762.

- Ahmed, M.S.; Cook, A.R. Analysis of freeway traffic time-series data by using Box-Jenkins techniques. Transp. Res. Rec. 1979, 1–9.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Statist. Comput. 2004, 14, 199–222.

- Zhang, Q.; Chang, J.; Meng, G.; Xiang, S.; Pan, C. Spatio-Temporal Graph Structure Learning for Traffic Forecasting. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1177–1185.

- Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Zhang, C. Graph WaveNet for Deep Spatial-Temporal Graph Modeling. arXiv 2019, arXiv:1906.00121.

- Chen, W.; Chen, L.; Xie, Y.; Cao, W.; Gao, Y.; Feng, X. Multi-Range Attentive Bicomponent Graph Convolutional Network for Traffic Forecasting. Proc. AAAI Conf. Artif. Intell. 2020, 34, 3529–3536.

- Zheng, C.; Fan, X.; Wang, C.; Qi, J. GMAN: A Graph Multi-Attention Network for Traffic Prediction. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1234–1241.

- Park, C.; Lee, C.; Bahng, H.; Tae, Y.; Jin, S.; Kim, K.; Ko, S.; Choo, J. ST-GRAT: A Novel Spatio-Temporal Graph Attention Networks for Accurately Forecasting Dynamically Changing Road Speed. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual, 19–23 October 2020; pp. 1215–1224.

- Oreshkin, B.N.; Amini, A.; Coyle, L.; Coates, M. FC-GAGA: Fully Connected Gated Graph Architecture for Spatio-Temporal Traffic Forecasting. Proc. AAAI Conf. Artif. Intell. 2021, 35, 9233–9241.

- Cai, L.; Janowicz, K.; Mai, G.; Yan, B.; Zhu, R. Traffic transformer: Capturing the continuity and periodicity of time series for traffic forecasting. Trans. GIS 2020, 24, 736–755.

- Li, M.; Zhu, Z. Spatial-Temporal Fusion Graph Neural Networks for Traffic Flow Forecasting. Proc. AAAI Conf. Artif. Intell. 2021, 35, 4189–4196.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).