Abstract

The analysis of the state of the literature in the field of methods of perception and control of the movement of autonomous vehicles shows the possibilities of improving them by using an artificial neural network to generate domains of prohibited maneuvers of passing objects, contributing to increasing the safety of autonomous driving in various real conditions of the surrounding environment. This article concerns radar perception, which involves receiving information about the movement of many autonomous objects, then identifying and assigning them a collision risk and preparing a maneuvering response. In the identification process, each object is assigned a domain generated by a previously trained neural network. The size of the domain is proportional to the risk of collisions and distance changes during autonomous driving. Then, an optimal trajectory is determined from among the possible safe paths, ensuring control in a minimum of time. The presented solution to the radar perception task was illustrated with a computer simulation of autonomous driving in a situation of passing many objects. The main achievements presented in this article are the synthesis of a radar perception algorithm mapping the neural domains of autonomous objects characterizing their collision risk and the assessment of the degree of radar perception on the example of multi-object autonomous driving simulation.

1. Introduction

One of the most important issues in autonomous driving is the detection and tracking of other objects and the surrounding environment, which together constitute radar perception, in order to ensure the safety of the autonomous vehicle, both in an Advanced Driver Assistance System (ADAS) and in a fully Autonomous Driving System (ADS). To achieve this, it is necessary to faithfully reproduce the subjectivity of the assessment of a multi-object situation subject to the influence of various environmental disturbances. This can be achieved by using a previously trained artificial neural network or a game control model. This article concerns the first of these approaches: the synthesis of a safe autonomous driving algorithm in which radar perception assigns each passing object a forbidden approach domain corresponding to its collision risk value.

1.1. State of Knowledge

The literature review will cover, first, ADAS systems, and then ADS systems, in the field of Radar, Lidar and Video perception and methods of safe autonomous driving.

An analysis of the development of automated vehicles and intelligent driver assistance in the Advanced Driver Assistance System (ADAS) is presented by Aufrere et al. in [1]. The basis of these systems is close-range sensing, ensuring safe autonomous driving. To achieve this, a video sensor, laser rangefinders, a rangefinder with light strips, sensor information processing software, a map-based fusion system and an event probability prediction model are used.

Marcano et al. in [2] propose improving the Advanced Driver Assistance System (ADAS) by using real, complex traffic scenarios to ensure safe autonomous driving in all conditions. For this purpose, simulation platforms were used in three techniques for longitudinal control of an autonomous vehicle at low speed. A classic PID controller, adaptive network fuzzy inference system and model predictive control were used to control an autonomous vehicle.

Manghat, in [3], analyzes the perception of the surroundings of an autonomous vehicle using devices such as radar, lidar, sonar, GPS, odometry and inertial sensors. The following technological solutions were used for driver assistance in the field of safety: adaptive cruise control, forward collision warning and collision mitigation by braking.

Sligar, in [4], presents a radar perception algorithm based on machine learning for detecting objects and then creating high-dimensional virtual datasets used in the training and testing of advanced driver assistance systems (ADAS).

Regarding the various detection and tracking perception techniques in an Autonomous Driving System (ADS), the following works from the last few years can be mentioned.

Manjunath et al. in [5] deal with radar perception in terms of object grouping and tracking in the most common multi-object scenarios and testing typical driving maneuvers.

Hussain et al. in [6] present the application of deep learning algorithms to detect and track objects in perception using video, lidar and radar devices in difficult weather conditions.

Yang et al. in [7], to enhance radar and lidar perception capabilities, used voxel-based early fusion and attention-based late fusion, which learn from the input data.

Kramer, in [8], presents radar perception in the millimeter wave range, which enables the implementation of radar–inertial assessment of the state in smoke and fog, estimation of the relative change in the radar position and detection of moving obstacles along the path of an autonomous vehicle.

Scheiner et al. in [9] paid particular attention to the quality of input data to the radar perception process and the use of deeper and more complex neural network structures. To cope with data sparsity, high-resolution processing or the use of low-level data layers and polarimetric radars are used.

Gupta et al. in [10], provided an up-to-date review of the applications of deep learning in object detection and scene perception in autonomous vehicles. The implemented image perception while driving ensures safe driving without human intervention.

Yao et al. in [11], proposed combining radar perception and signal processing of radar measurements through deep learning. The essence of their considerations is to generate the following five radar representations, ADC signal, radar tensor, point cloud, grid map and a micro-Doppler signature, to which data sets are assigned.

Tu et al. in [12] analyze the concept of multi-sensor detection by placing an adversarial object on the host vehicle. To obtain more reliable multimodal perception, adversarial training with feature denoising is used, which increases resistance to such attacks.

Hoss et al. in [13], to take into account the uncertain perception of the environment, propose the use of verification and validation at the interface of perception and planning from the point of view of test criteria and metrics, test scenarios and reference data.

Gao, in [14], synthesizes various radar perception algorithms using a multi-perspective radar convolutional neural network model that extracts location and class information of an autonomous object from a sequence of range, velocity and angle heatmaps. The developed anti-collision support algorithm for an autonomous vehicle consists of pre-processing of the backscattered received radar signal to generate four-dimensional point clouds, three-dimensional radar ego-motion estimation and a notch filter.

Li et al. in [15] emphasize that radar perception is robust in all weather conditions, but radar signals are characterized by low angular resolution and precision in recognizing surrounding objects. To improve this, the authors use temporal information from successive egocentric bird’s-eye radar image frames to recognize radar objects. Then, the consistency of the object’s existence and attributes (size, orientation, etc.) is exploited and a temporal relational layer is proposed to model the relationships between objects in subsequent radar images.

Zhou et al. in [16], compare three main challenges in realizing deep radar perception, multipath effects, uncertainty problems and adverse weather effects, and then propose methods to solve them.

Huang, in [17], used cooperative perception with vehicle-to-everything technology to take into account physical occlusion and the limited field of view of the sensor.

Zhang et al. [18] presented modern deep learning algorithms and methods for improving the radar perception of autonomous vehicles, taking into account the type and state of weather and the type of remote sensing technique.

Gragnaniello et al. in [19], conducted a comparative evaluation of twenty-two multi-object detection and tracking algorithms using metrics that highlight the contributions and limitations of each of their modules. The result of this analysis is the indication of the combination of ConvNext and QDTrack algorithms as the best perception method.

Pandharipande et al. in [20], studied the efficiency of the perception process and the movement safety of an autonomous vehicle for various types of sensors in the form of camera, radar and lidar and with related data processing techniques.

Barbosa and Osorio, in [21], study radar-based perception and radar-camera fusion, as applied to the navigation of an autonomous vehicle moving in unfavorable lighting and weather conditions.

Sun et al. in [22], developed a frontal collision warning algorithm for an autonomous vehicle, which consists of vision and radar tracking algorithms, which uses a memory index based on the information entropy of the adaptive Kalman filter.

A very important issue in the operation of autonomous vehicles is the synthesis of appropriate algorithms for controlling their safe movement in real conditions of the surrounding environment.

Gebregziabher, in [23], presents a developed algorithm for predictive collision prevention of an autonomous vehicle based on lidar perception and prediction of its speed and future positions. In order to increase the efficiency of the collision prevention process, a modification of the dynamic windowing algorithm was used.

Snider, in [24], performs a comparative analysis of synthesis methods of safe control of an autonomous vehicle in real conditions of the surrounding environment.

Lombard et al. [25] present an algorithm for controlling the steering angle of an autonomous vehicle with adaptation of its speed, in order to ensure operation on straights, sections and curves, using only the GNSS positioning system.

Lee et al. [26] present a developed machine learning model integrating multi-task convolutional neural networks and an algorithm controlling the stable driving of an autonomous vehicle. In particular, programmable logic controller algorithms are proposed to prevent collisions of autonomous vehicles in real time.

Muzahid et al. [27] perform a comparative analysis of various methods of planning the movement of an autonomous vehicle to prevent rear-end collisions, leading to collisions of many vehicles, by taking into account possible strategies of cooperation of many vehicles.

Sana et al. in [28], reviewed methods for accurate and perfect perception as well as methods for planning and implementing safe vehicle movement at the fifth level of its autonomy, based on graphs and machine learning.

Abdallaoui et al. in [29] presented a synthetic analysis of navigation and control methods for autonomous vehicles using maneuvering rules, machine learning, deep learning, probabilistic and hybrid approaches. This takes into account the measurement uncertainty of sensors, modeling of a dynamic environment, real-time response speed and safe interactions with other road users.

He and Liu, in [30], compared four integrated hierarchical satellite and inertial navigation algorithms for motion control and positioning of autonomous vehicles.

Ibrahim et al. in [31], show a technological solution using the integration of satellite and inertial systems in combination with an extended filter, which ensures greater reliability and accuracy of autonomous driving.

Liu et al. in [32], analyze the state of control technology for both autonomous vehicles and connected and automated vehicles in the field of vehicle state estimation and trajectory tracking control.

A method that incorporates scenario understanding into the motion prediction task of an autonomous vehicle to improve adaptability and avoid motion prediction errors is proposed by Karle et al. in [33]. To do this, they use an a priori evaluation of the scenario based on semantic information, and the evaluation adaptively selects the most accurate prediction model, but also recognizes if no model can accurately predict this scenario.

Yang et al. in [34] proposed a dataset in the cooperative perception of ships, in the form of three typical ship navigation scenarios, covering ports, islands and open waters, for typical ship classes such as container ships, bulk carriers and cruise ships. This allows the performance of the main vehicle cooperative perception models to be assessed when transferred to ship cooperative perception scenes.

A method for training an autonomous car in simulated urban traffic scenarios to be able to independently assess road conditions before crossing an unsignalized intersection is presented by Tsai et al. in [35]. To identify traffic behavior at an intersection, an autonomous car simulator was used, which builds the intersection environment and simulates the traffic management process. Observational images from a semantic segmentation camera installed in an autonomous car and the vehicle’s speed are used to train models based on the convolutional neural network architecture.

Cai et al. in [36], describe the use of roadside perception infrastructure to collaboratively perceive vehicles and infrastructure, through edge computing to extract intermediate features in real time and networks to transmit these features to vehicles. Here, a multi-agent reinforcement learning-based service scheduling method is proposed for vehicle-infrastructure cooperative perception migration, using a discrete time-varying graph to model the relationship between service nodes and edge server nodes.

Wang et al. in [37], solve the autonomous vehicle routing problem using a multi-objective vehicle routing optimization algorithm based on preference adaptation. For this purpose, they used a weight adjustment as a sequential decision-making method that is able to adapt to different approximate Pareto fronts and find better quality solutions.

The above analysis of the state of the literature in the field of methods of perception and control of the movement of autonomous vehicles shows the possibilities of improving them by using an artificial neural network to generate domains of prohibited maneuvers of passing objects, contributing to increasing the safety of autonomous driving in various real conditions of the surrounding environment.

1.2. Study Objectives

The main achievements presented in this article are as follows:

- Synthesis of a radar perception algorithm mapping the neural domains of autonomous objects characterizing their collision risk;

- Assessment of the degree of radar perception on the example of multi-object autonomous driving simulation.

1.3. Article Content

First, the process of autonomous driving of multiple objects is characterized and its functional diagram is presented; the diagram consists of the radar perception of detecting and tracking objects, identification of their collision risk using an artificial neural network, and a multi-stage dynamic programming algorithm for making maneuvering decisions. The next section describes the details of radar perception of collision risk based on the three-layer structure of an artificial neural network. Then, the synthesis of the algorithm for safe control of an autonomous vehicle and its pseudocode are described. To confirm the objectives adopted at the beginning of the paper, simulation tests of the safe autonomous driving algorithm were carried out in the situation of passing ten other autonomous vehicles.

2. Autonomous Driving of Multiple Objects

The most advanced technological solution of an autonomous vehicle should, in addition to implementing measurement functions, detecting and tracking objects and obstacles within radar perception, include an appropriate safe control algorithm [33,34,35,36,37].

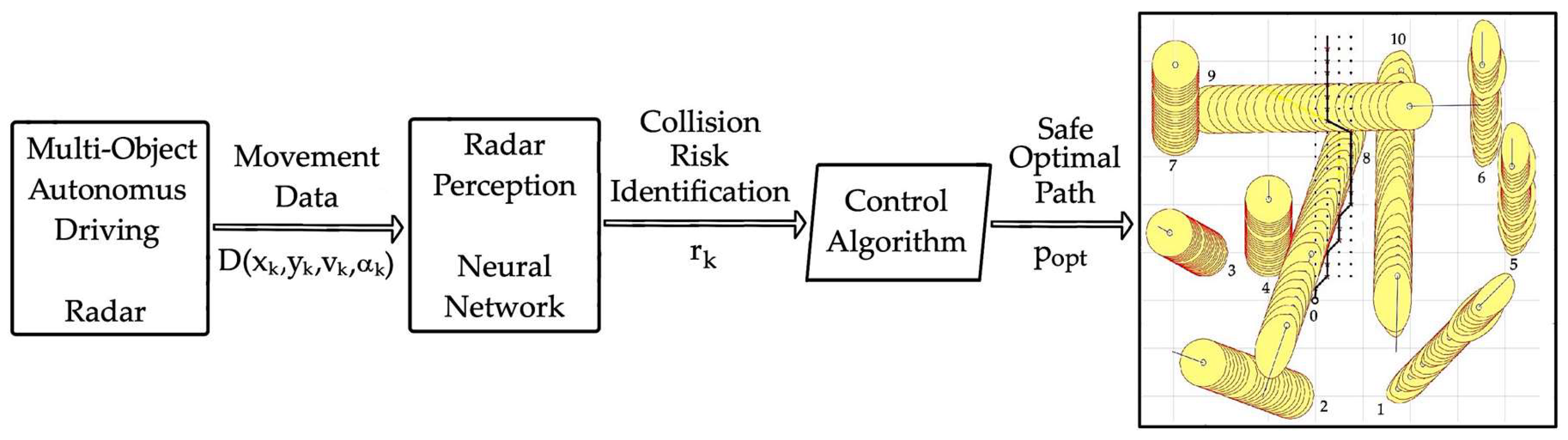

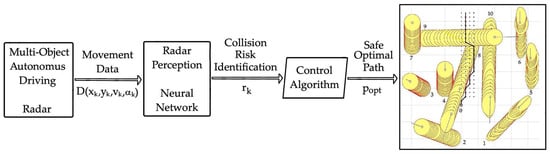

The functional structure of such a multi-object safe autonomous driving system is shown in Figure 1.

Figure 1.

Functional diagram of the autonomous driving system for many objects: D is the movement data vector of the k-th object; vk is the speed of the k-th object; αk is the course of the k-th object; xk and yk are the coordinates of the k-th object's position; rk is the collision risk of the k-th object; and popt is the optimal path of the autonomous vehicle.

The motion of our k = 0 autonomous vehicle as a kinematic object can be described by appropriate equations in discrete form, which are also used in the process of predicting the motion of autonomous objects [38]:
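A minimal sketch of one such discrete kinematic step, assuming constant speed and course over the step and a course angle measured clockwise from the y axis; the function name `predict_position`, the step length `dt` and the angle convention are illustrative assumptions, not taken from the article:

```python
import math

def predict_position(x, y, v, course_deg, dt):
    """Advance a point object by one time step at constant speed and course.

    Assumption: the course is measured clockwise from the y axis,
    so x grows with sin(course) and y grows with cos(course).
    """
    a = math.radians(course_deg)
    return x + v * dt * math.sin(a), y + v * dt * math.cos(a)

# Object moving at 10 m/s on course 90 degrees (along +x) for 2 s.
x1, y1 = predict_position(0.0, 0.0, 10.0, 90.0, 2.0)
```

The same step can be applied both to our vehicle and to each passing object when predicting future positions.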

However, the passing autonomous vehicles k = 1, 2, …, K limit the movement of our autonomous vehicle:

These restrictions take the form of moving prohibited areas assigned to individual passing autonomous vehicles and other environmental obstacles.

Stationary autonomous vehicles and other fixed objects are assigned a circular limitation with a radius equal to the safe passing distance ds in given environmental conditions:

However, moving autonomous vehicles are assigned a limitation in the form of ellipses with a fixed size proportional to the value of the safe passing distance ds or a variable size corresponding to the collision threat from other autonomous objects and the environment, shaped by a previously trained artificial neural network:

where lk and wk are the length and width of the k autonomous vehicle, and fk is the focal distance of the ellipse.
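The two kinds of restriction can be illustrated with a short membership test. This is only a sketch under stated assumptions: the ellipse is centred on the object with its major axis along the object's course, the semi-axes `a` and `b` are passed in directly (how they follow from ds, lk, wk and the collision risk is not specified here), and the course is measured clockwise from the y axis. The only geometric facts used are that the centre-to-focus distance of an ellipse is sqrt(a² − b²) and that a point lies inside the ellipse when the sum of its distances to the two foci is less than 2a.

```python
import math

def in_circular_domain(px, py, xk, yk, ds):
    """True if point (px, py) lies inside the circle of radius ds around object k."""
    return math.hypot(px - xk, py - yk) < ds

def in_elliptical_domain(px, py, xk, yk, course_deg, a, b):
    """True if (px, py) lies inside an ellipse centred on object k.

    a, b: semi-major and semi-minor axes (a >= b); the major axis is
    aligned with the object's course (assumed clockwise from the y axis).
    """
    f = math.sqrt(a * a - b * b)  # centre-to-focus distance
    ang = math.radians(course_deg)
    ux, uy = math.sin(ang), math.cos(ang)  # unit vector along the course
    d1 = math.hypot(px - (xk + f * ux), py - (yk + f * uy))
    d2 = math.hypot(px - (xk - f * ux), py - (yk - f * uy))
    return d1 + d2 < 2.0 * a

# A point 9 m ahead of an object heading along +y is inside a 10 m x 5 m
# ellipse, while a point 6 m abeam of it is outside.
ahead = in_elliptical_domain(0.0, 9.0, 0.0, 0.0, 0.0, 10.0, 5.0)
abeam = in_elliptical_domain(6.0, 0.0, 0.0, 0.0, 0.0, 10.0, 5.0)
```

An elongated domain of this kind blocks a larger area ahead of and behind the object than to its sides, which is the practical difference from the circular restriction.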

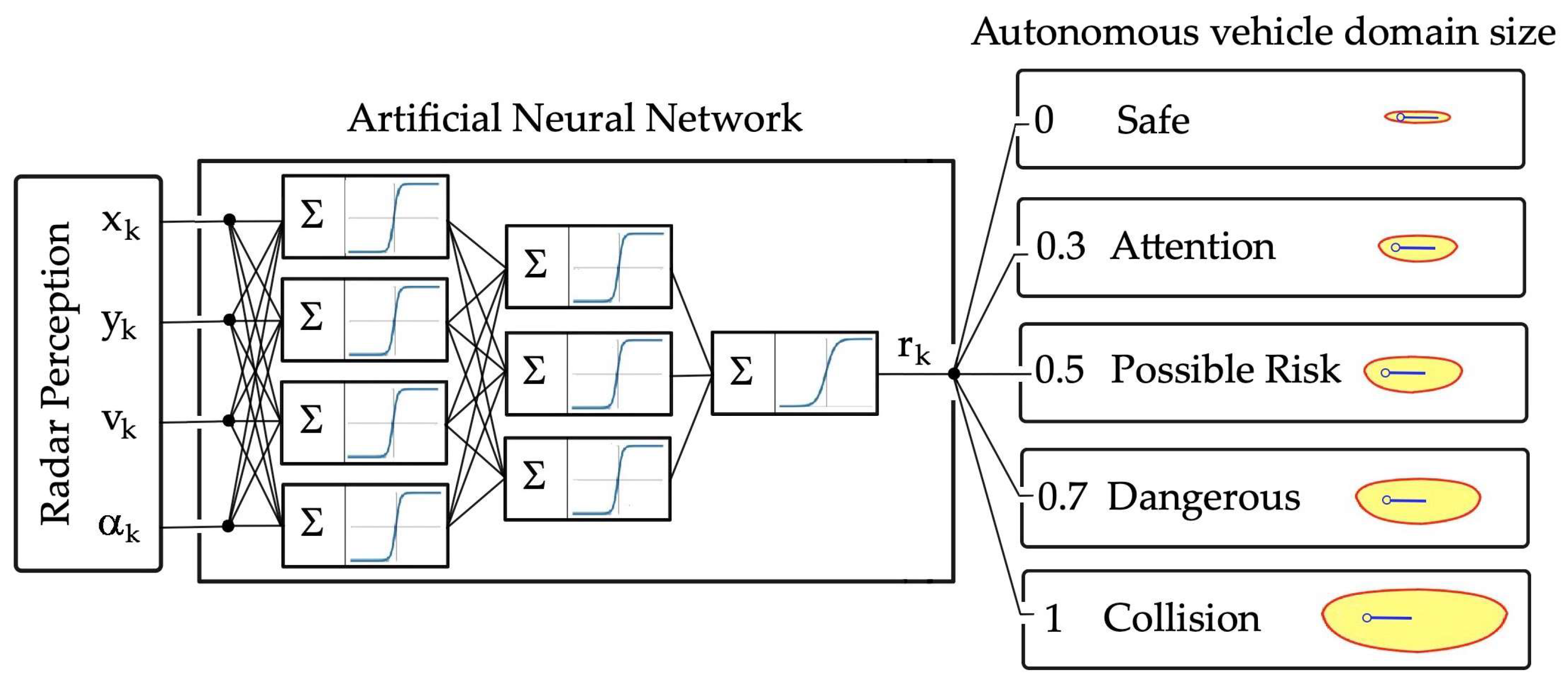

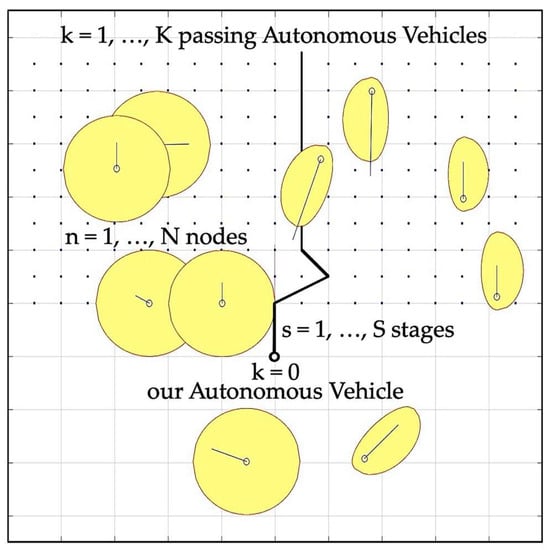

3. Radar Perception of Collision Risk

The task of the artificial neural network, in each subsequent step of predicting the motion of the k-th autonomous object, is to assess the risk of collision rk with it by adequately mapping the size of the domain in the shape of an ellipse (Figure 2).

Figure 2.

Generating the neural domain of the k-th object’s collision risk rk during autonomous driving.

The size of the domains was adjusted to the degree of safety of vehicle movement, assessed by the risk of collision. The following five levels of safety of the passed k vehicle were adopted, to which the collision risk value rk was arbitrarily assigned:

- “Safe” where rk = 0;

- “Attention” where rk = 0.3;

- “Possible Risk” where rk = 0.5;

- “Dangerous” where rk = 0.7;

- “Collision” where rk = 1.

An artificial neural network model was used to assess the safety level of the situation and assign collision risk values. A three-layer neural network model was used, with four input quantities measured by the radar device and one output quantity in the form of collision risk. Nonlinear activation functions were assumed in the first and second layers and a sigmoidal activation function in the third output layer. At each stage and node of the calculation, the algorithm asked the neural network about the collision risk value and generated the appropriate size of the vehicle domain.
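The structure described above can be sketched as a plain forward pass. The hidden-layer sizes, the tanh activation in the first two layers, the random untrained weights and the choice of the four inputs (for example normalized xk, yk, vk, αk) are assumptions made only for illustration; the article states only that there are four radar-measured inputs, three layers and a sigmoidal output.

```python
import math
import random

# Risk values and labels of the five safety levels listed in this section.
RISK_LEVELS = [(0.0, "Safe"), (0.3, "Attention"), (0.5, "Possible Risk"),
               (0.7, "Dangerous"), (1.0, "Collision")]

def init_network(sizes=(4, 6, 6, 1), seed=0):
    """Create untrained layers as (weights, biases); the sizes are hypothetical."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def _affine(weights, biases, x):
    """Compute W x + b for one layer."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def collision_risk(layers, features):
    """Forward pass: tanh in the first two layers, sigmoid at the output."""
    h = list(features)
    for weights, biases in layers[:-1]:
        h = [math.tanh(z) for z in _affine(weights, biases, h)]
    weights, biases = layers[-1]
    z = _affine(weights, biases, h)[0]
    return 1.0 / (1.0 + math.exp(-z))

def nearest_level(r):
    """Return the label of the safety level whose risk value is closest to r."""
    return min(RISK_LEVELS, key=lambda level: abs(level[0] - r))[1]

layers = init_network()
r = collision_risk(layers, [0.2, -0.4, 0.5, 0.1])  # normalized example inputs
label = nearest_level(r)
```

Because the output passes through a sigmoid, the returned risk always lies strictly between 0 and 1, which matches the scale on which the five safety levels are defined.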

The synthesis of this neural network was carried out in the Neural Network Toolbox of MATLAB version 2024a. An error backpropagation algorithm with an adaptive learning rate and momentum was used to train it.

Ten road scenarios were used to train the neural network, taking into account both parallel vehicle traffic and various types of intersections. A total of 300 drivers participated in the entire network training process, which lasted several months. In a given road situation scenario, the driver assessed it subjectively in terms of safety, assessing the risk of collision on a scale from zero to one. The neural network trained in this way represents the average experience of a larger group of drivers.

4. Control Algorithm

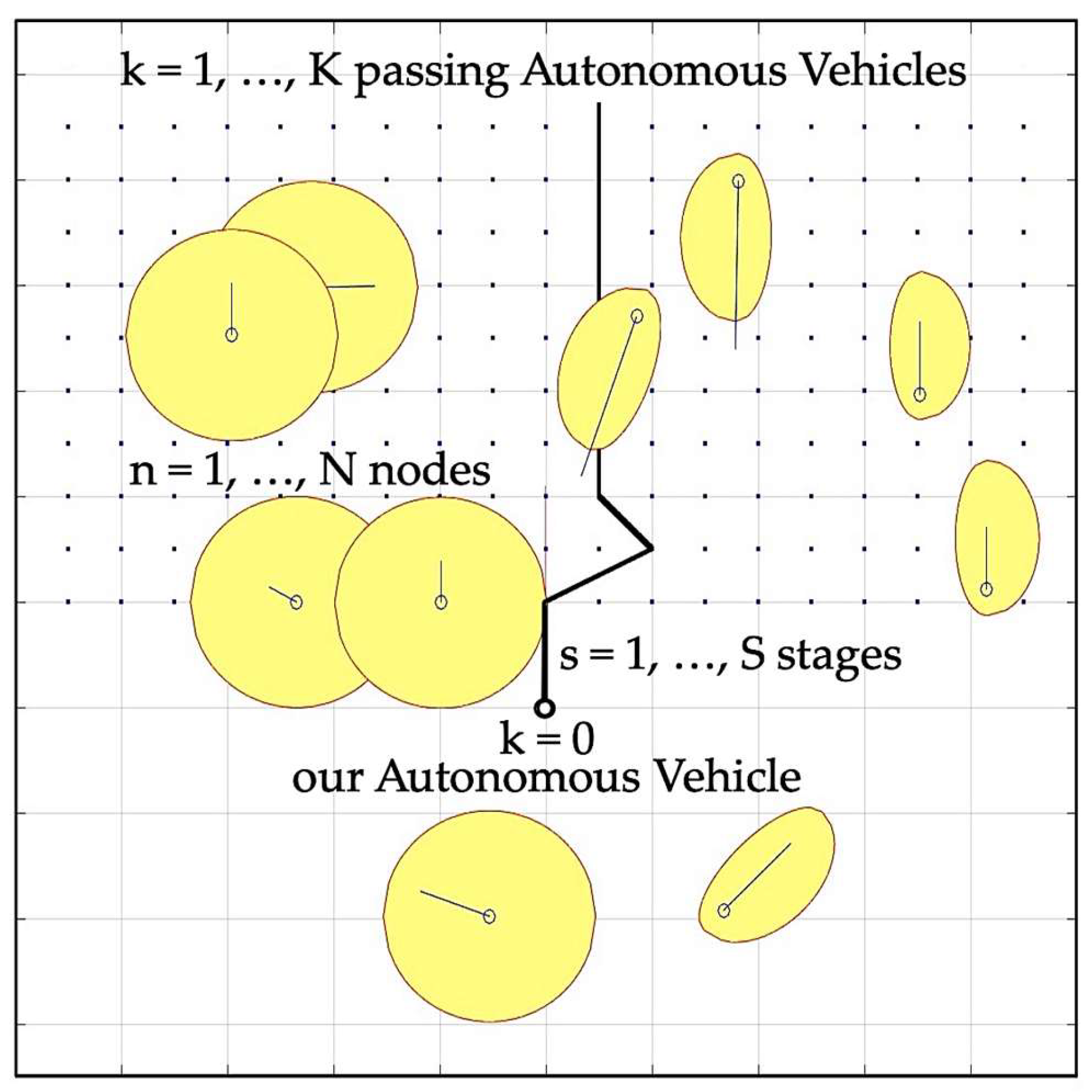

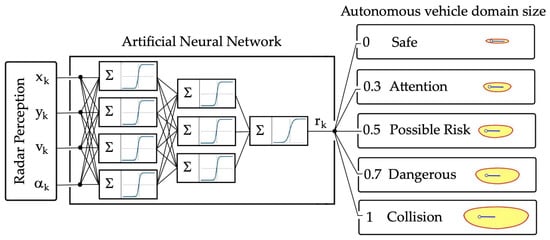

The plane of possible states in the autonomous driving process is treated discretely, dividing it into stages s, and each stage into nodes n (Figure 3). Then, the possible movement paths of the autonomous vehicle are dynamically programmed, eliminating nodes that are in prohibited areas defined by the neural domains of collision risk. Among all safe paths, the optimal path is selected, ensuring the shortest autonomous driving time topt.

Figure 3.

Dynamic programming grid.

The combination of radar perception with the generation of neural domains of collision risk allowed the synthesis of an algorithm for optimal safe autonomous driving when passing many other autonomous vehicles, as presented below (Algorithm 1).

| Algorithm 1: Autonomous driving with neural domains of collision risk |
| --- |
| BEGIN |
| 1. Radar Perception Data: xk, yk, vk, αk |
| 2. Stage: s := 1 |
| 3. Autonomous Vehicle: k := 1 |
| 4. Creating collision risk neural domain of the k-th Autonomous Vehicle |
| IF not k = K THEN (k := k + 1 and GOTO 4) |
| ELSE Node: n := 1 |
| 5. Dynamic programming of our Autonomous Vehicle safe path |
| IF not n = N THEN (n := n + 1 and GOTO 5) |
| ELSE IF not s = S THEN (s := s + 1 and GOTO 3) |
| ELSE Determining optimal path of our Autonomous Vehicle |
| in relation to the K passed Autonomous Vehicles |
| END |

The algorithm consists of the following three calculation procedures:

- Creating the collision risk neural domains of the K other Autonomous Vehicles in relation to our k = 0 Autonomous Vehicle;

- Dynamic programming of the safe path of our k = 0 Autonomous Vehicle;

- Determining the optimal path of our k = 0 Autonomous Vehicle in relation to all K passed objects.
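The three procedures can be sketched as a small forward dynamic programming routine. It is a simplified illustration rather than the implementation used in the article: domains are circles with a fixed `radius` (in the article the size follows the neural collision risk), objects keep a constant speed and course, only the nodes and not the segments between them are checked against the domains, and each node keeps only its earliest arrival time. All names and the grid layout are hypothetical.

```python
import math

def object_position(obj, t):
    """Predicted position of an object at time t (constant speed and course)."""
    a = math.radians(obj["course_deg"])  # assumption: clockwise from the y axis
    return (obj["x"] + obj["v"] * t * math.sin(a),
            obj["y"] + obj["v"] * t * math.cos(a))

def is_forbidden(px, py, t, objects):
    """True if (px, py) lies inside any object's circular domain at time t."""
    for obj in objects:
        ox, oy = object_position(obj, t)
        if math.hypot(px - ox, py - oy) < obj["radius"]:
            return True
    return False

def plan_path(start, stage_ys, node_xs, speed, objects):
    """Forward dynamic programming over stages (rows) and nodes (columns).

    Returns (t_opt, path) for the fastest safe path from start to any node
    of the last stage, or (math.inf, []) if every path is blocked.
    """
    prev = [(0.0, [start])]  # (arrival time, path) per node of previous stage
    prev_pts = [start]
    for y in stage_ys:
        cur, cur_pts = [], []
        for x in node_xs:
            best_t, best_path = math.inf, []
            for (t0, path0), (x0, y0) in zip(prev, prev_pts):
                if t0 == math.inf:
                    continue  # predecessor node is unreachable
                t1 = t0 + math.hypot(x - x0, y - y0) / speed
                if t1 < best_t and not is_forbidden(x, y, t1, objects):
                    best_t, best_path = t1, path0 + [(x, y)]
            cur.append((best_t, best_path))
            cur_pts.append((x, y))
        prev, prev_pts = cur, cur_pts
    return min(prev, key=lambda item: item[0])

start = (0.0, 0.0)
stage_ys = [100.0, 200.0, 300.0]
node_xs = [-100.0, 0.0, 100.0]
obstacle = {"x": 0.0, "y": 200.0, "v": 0.0, "course_deg": 0.0, "radius": 50.0}

t_free, path_free = plan_path(start, stage_ys, node_xs, 10.0, [])
t_opt, path = plan_path(start, stage_ys, node_xs, 10.0, [obstacle])
```

In this toy grid the unobstructed path runs straight ahead, while the stationary object in the middle of the second stage forces a lateral detour and a longer driving time.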

The autonomous driving algorithm with neural domains of collision risk of passing autonomous vehicles was implemented in MATLAB/Simulink software, version 2024a.

5. Computer Simulation

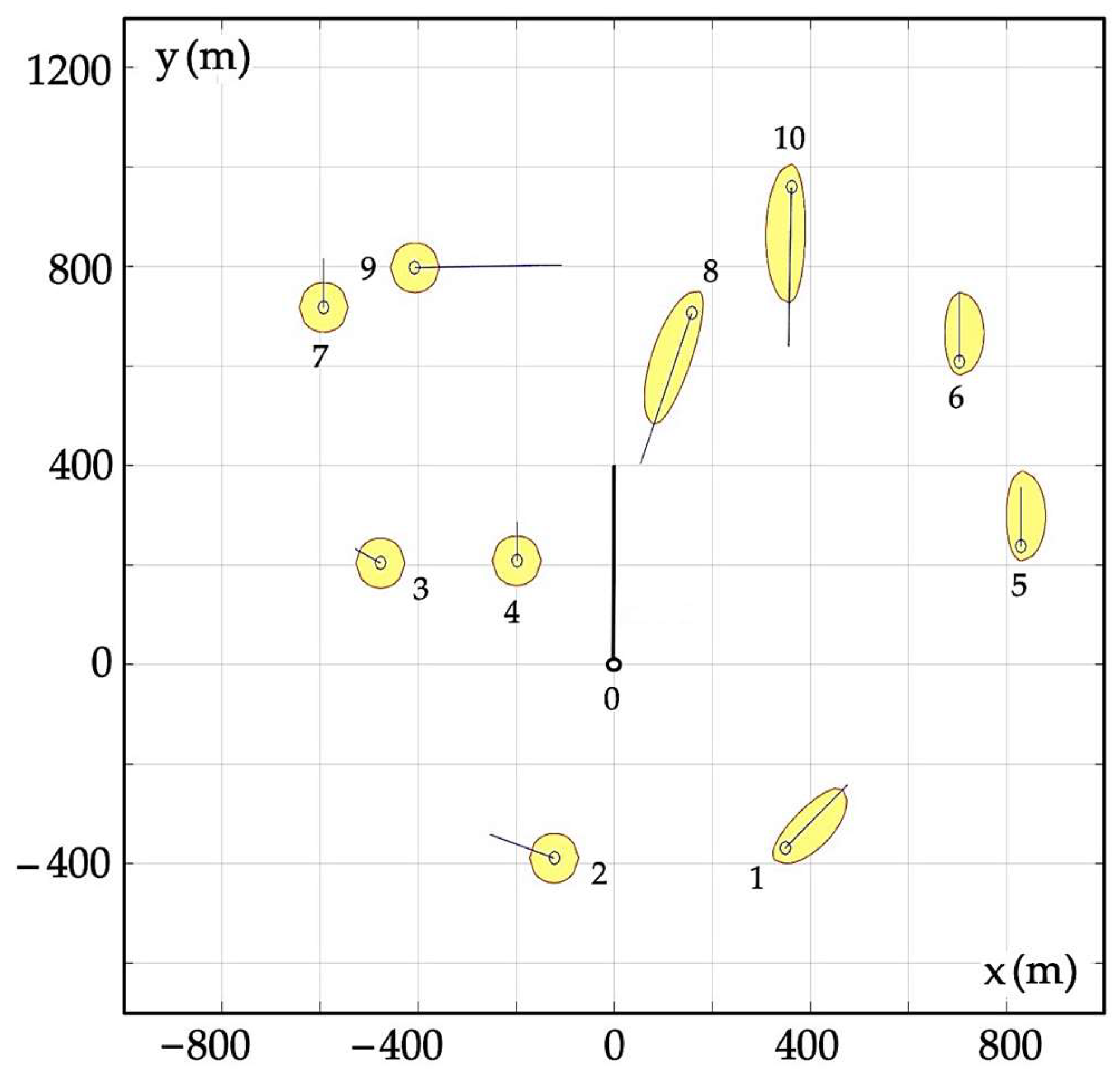

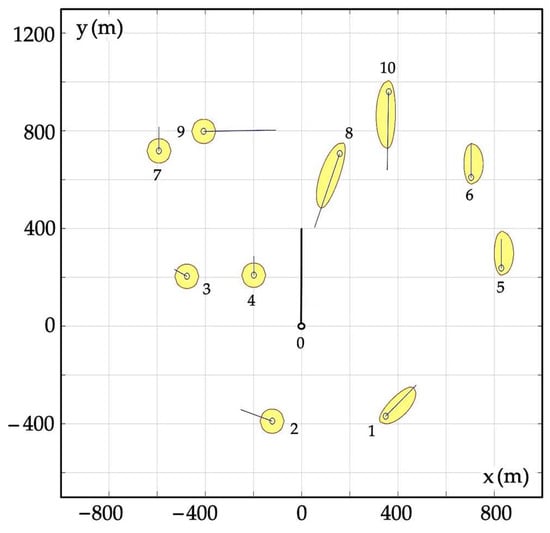

The developed autonomous driving algorithm was checked and assessed through computer simulation on the example of a situation where our autonomous vehicle safely passed ten autonomous objects (Table 1).

Table 1.

Variables of radar perception of autonomous driving of our autonomous vehicle k = 0 in relation to the K = 10 other autonomous objects encountered.

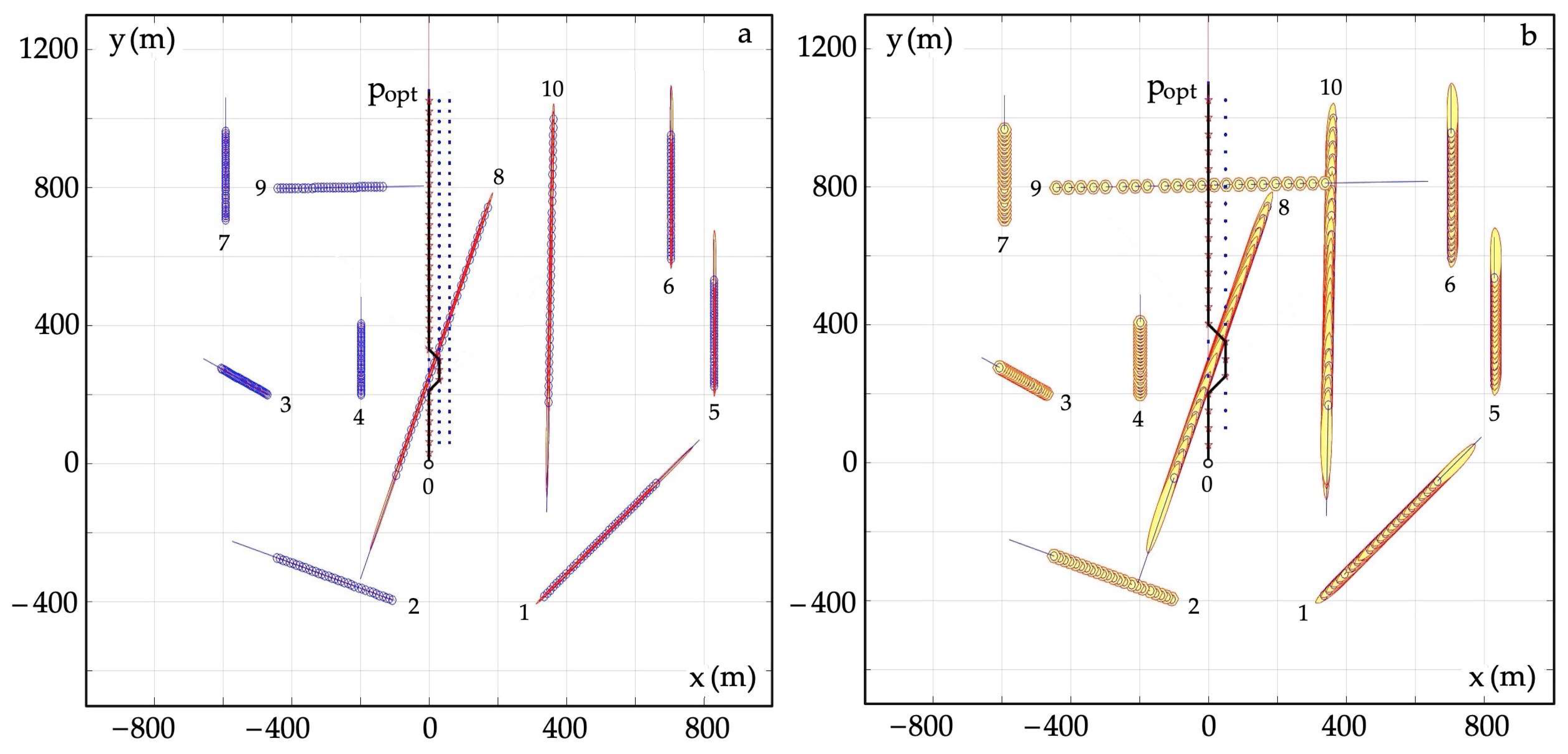

Figure 4 illustrates this situation in the form of object velocity vectors and the collision risk domains assigned to them. In accordance with road traffic law, objects to which we are obliged to give way are assigned elliptical domains, while the others are assigned circular domains.

Figure 4.

Illustration of the examined situation during autonomous driving of many objects.

The elliptical shape of the domain ensures that our autonomous vehicle does not cut across the path of another autonomous vehicle in front of it and therefore gives way to it. The other objects, which are assigned circular restrictions, are obliged to give way to us.

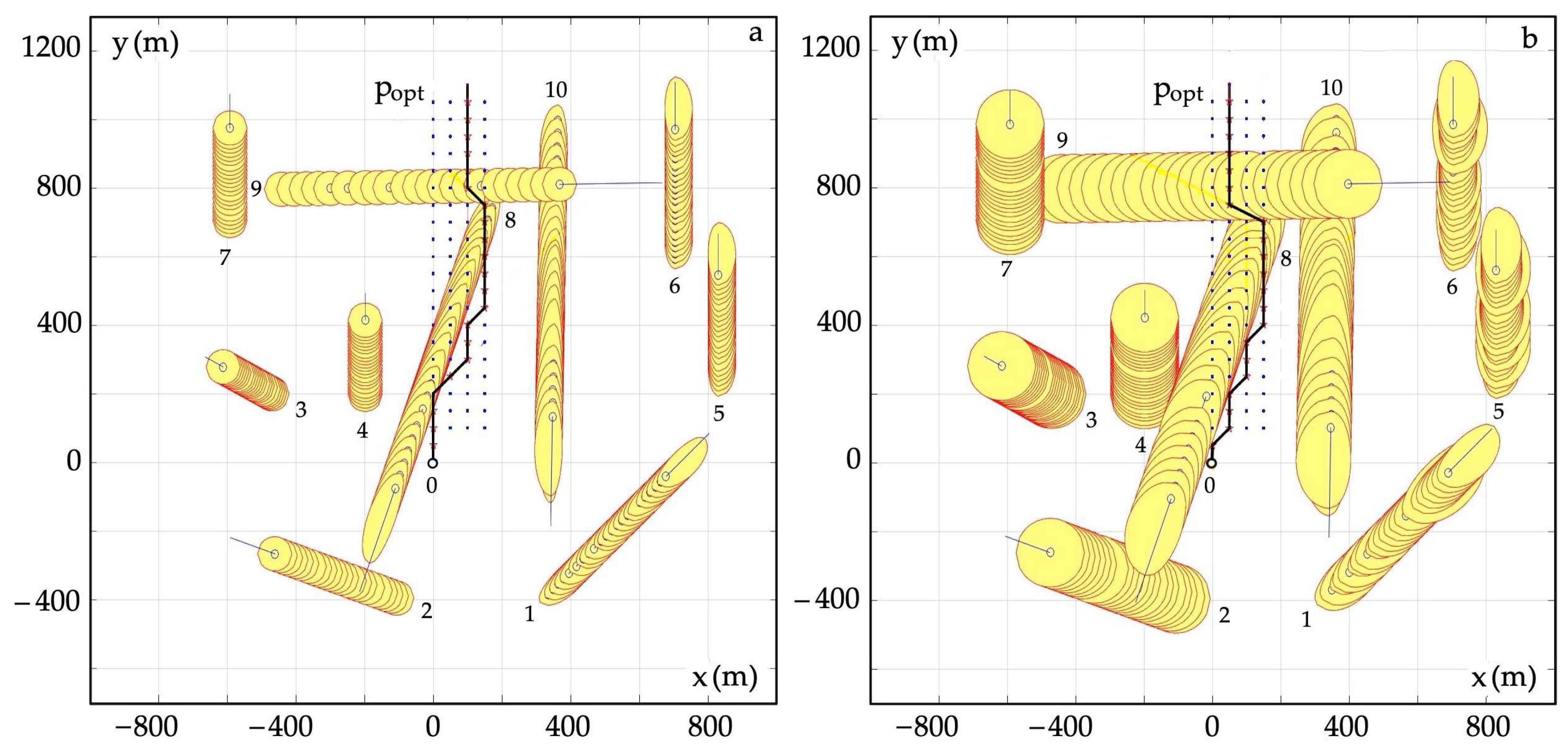

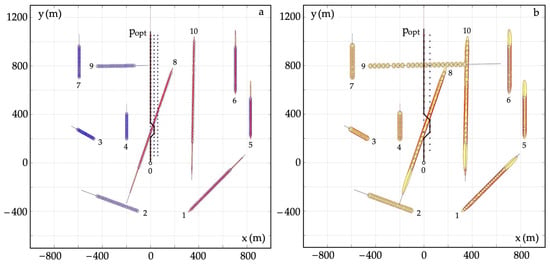

Simulation tests of the safe autonomous vehicle driving algorithm were carried out in various environmental conditions, characterized by the need to maintain a safe passing distance ds. The set value of the safe passing distance ds ranged from 5 m to 200 m, which corresponds to traffic conditions from good, through average, to poor. After entering the data describing the situation according to Table 1, the algorithm was run in the MATLAB/Simulink software. After a dozen or so seconds, the results were obtained in the form of the optimal path popt of the own vehicle and the trajectories of the K passing vehicles with assigned neural domains of variable size corresponding to the level of collision risk rk. Figure 5 shows the optimal safe path of our autonomous vehicle in good object movement conditions, when ds = 5 ÷ 20 m.

Figure 5.

Optimal safe autonomous driving path in good environmental conditions: (a) ds = 5 m; (b) ds = 20 m.

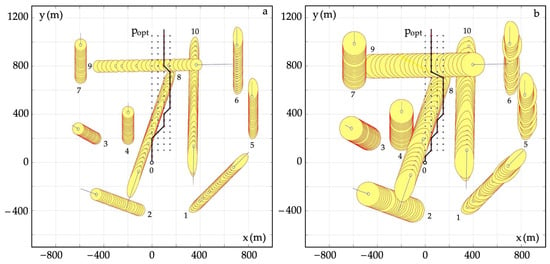

The optimal safe autonomous driving path of our vehicle in average traffic conditions, when ds = 50 ÷ 100 m, is shown in Figure 6.

Figure 6.

Optimal safe autonomous driving path in average environmental conditions: (a) ds = 50 m; (b) ds = 100 m.

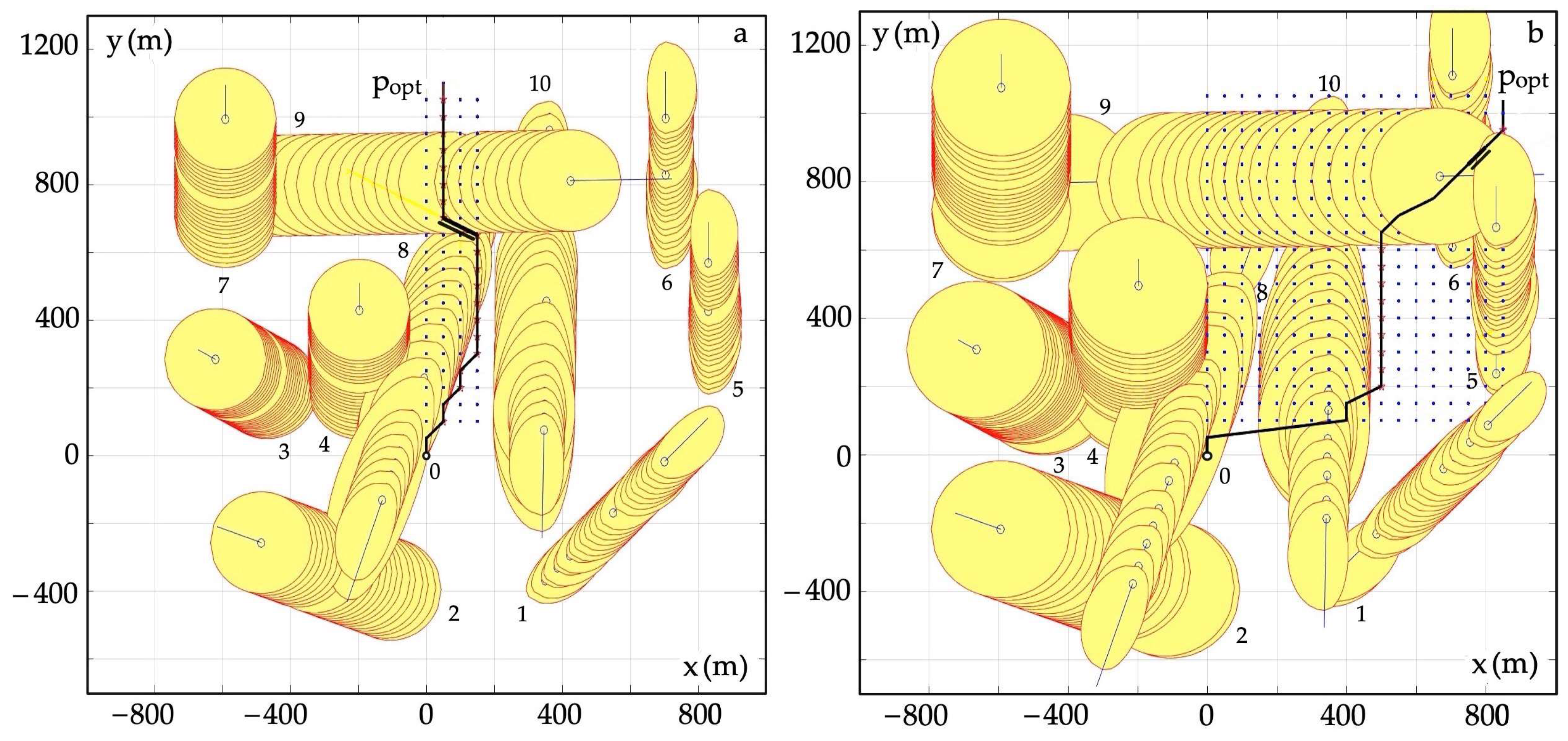

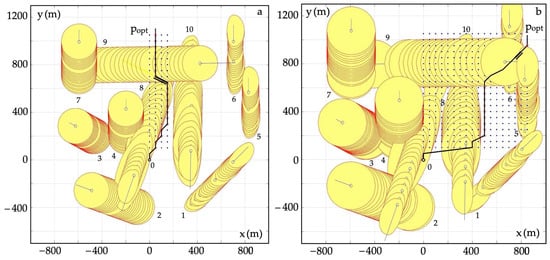

Figure 7 presents the optimal safe path of our autonomous vehicle in poor object movement conditions when ds = 150 ÷ 200 m.

Figure 7.

Optimal safe autonomous driving path in poor environmental conditions: (a) ds = 150 m; (b) ds = 200 m; the === marking denotes a 25% reduction in vehicle speed.

The measure of the optimality of the safe path of an autonomous vehicle is its implementation time topt, which ranged from 1714 s to 2921 s, with changes in the safe passing distance ds from 5 m to 200 m.

In difficult conditions of the vehicle traffic environment, as in Figure 7, the domains of the most dangerous vehicles 8 and 10 were enlarged when approaching our vehicle, illustrating an increased risk of collision. Then, as it moved away, these domains got smaller, presenting a lower collision risk.

The method presented in this article, in comparison to previously developed methods, takes into account the limitations of safe vehicle control resulting from changing environmental conditions.

To assess the functioning of the developed safe autonomous driving algorithms, it is very useful to analyze the sensitivity of the optimal control quality index both to the accuracy of measurement of control process state variables and to the impact of environmental disturbances.

Sensitivity analysis concerns the assessment of the quality of functioning of optimal and safe control systems for autonomous objects. The sensitivity functions sx of the optimal and safe control u of the process described by state variables x can be presented as partial derivatives of the quality control index Q:

where I is the quality index of time-optimal control topt.

The measure of the sensitivity of optimal driving is the relative change in the optimal implementation time of the optimal path of an autonomous object caused by a change in the ith parameter pi of the process model:
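One way to read this measure is as a percentage change of the optimal time between a nominal and a perturbed run. The sketch below assumes that reading; `relative_sensitivity`, `toy_t_opt` and the parameter names are hypothetical, and the toy travel-time function merely stands in for the full path planner.

```python
def relative_sensitivity(t_opt_fn, params, name, delta):
    """Relative change of the optimal time, in percent, when parameter
    `name` is perturbed by the relative error `delta` (0.10 means +10%)."""
    nominal = t_opt_fn(params)
    perturbed = dict(params)
    perturbed[name] = params[name] * (1.0 + delta)
    return 100.0 * (t_opt_fn(perturbed) - nominal) / nominal

def toy_t_opt(p):
    """Toy stand-in for the planner: travel time over a fixed distance."""
    return p["distance"] / p["v0"]

nominal_params = {"distance": 1000.0, "v0": 10.0}
s_plus = relative_sensitivity(toy_t_opt, nominal_params, "v0", 0.10)
s_minus = relative_sensitivity(toy_t_opt, nominal_params, "v0", -0.10)
```

Sweeping `delta` over the range of perception errors of interest and plotting the returned values gives a sensitivity characteristic for the chosen parameter.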

Computer simulations of the developed algorithm for autonomous driving with neural domains of collision risk allowed us to obtain the sensitivity functions with respect to errors in the measurement of the position coordinates δxy0, speed δv0 and course δα0 of our autonomous object 0.

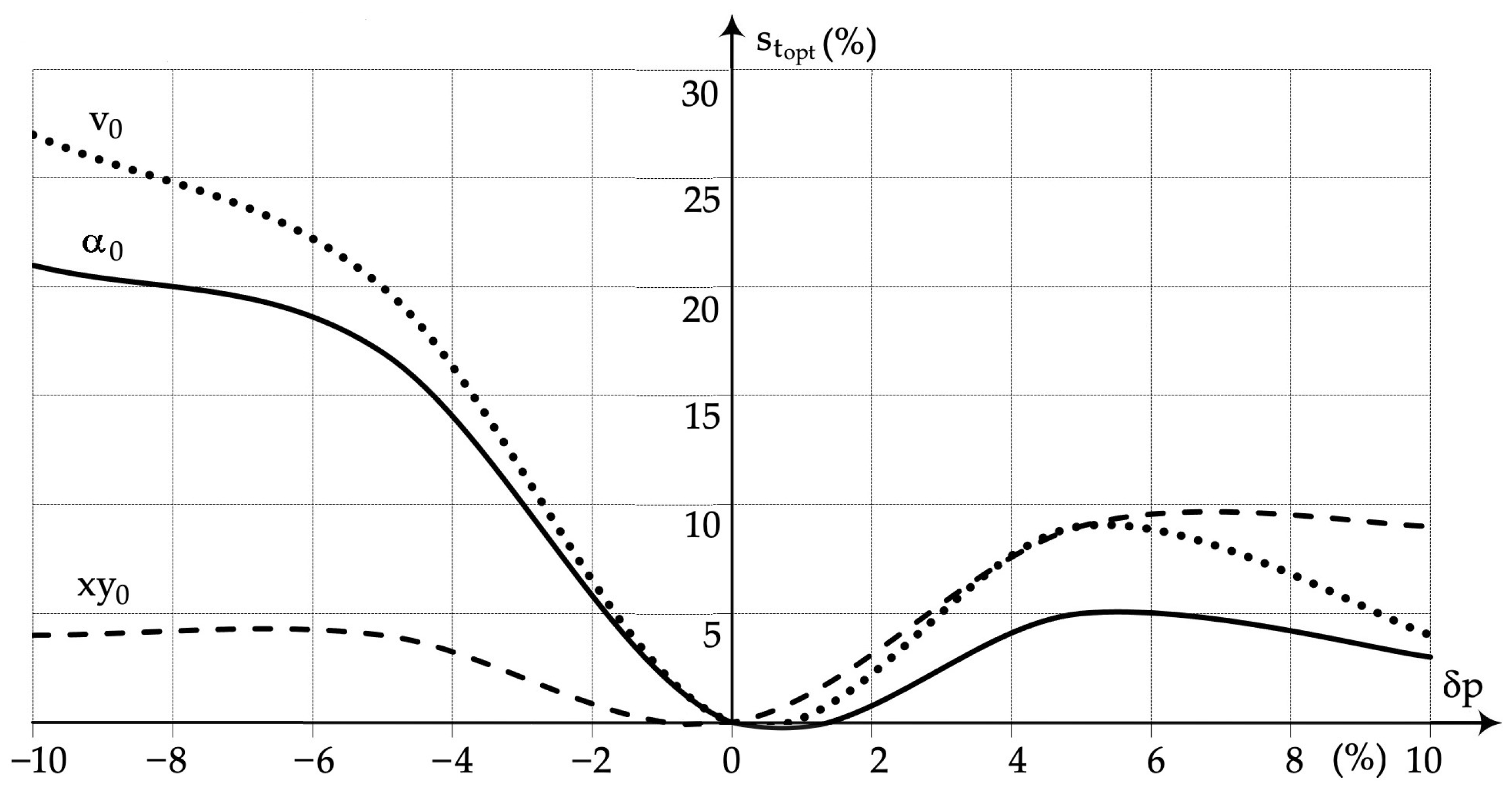

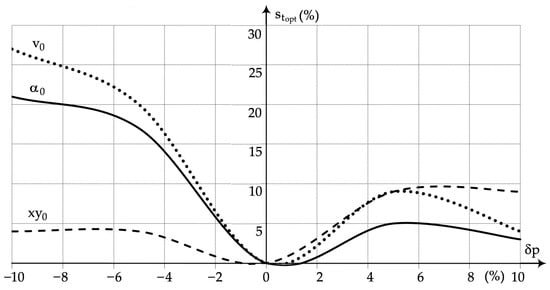

The sensitivity characteristics of optimal safe autonomous driving, presented in Figure 8, were determined at various values of inaccuracy in the perception of motion parameters δp ranging from −10% to 10%.

Figure 8.

Sensitivity characteristics of the time-optimal autonomous driving path to errors of the following: xy0 object position coordinates; v0 object speed; α0 object course.

The study of the sensitivity of time-optimal autonomous driving showed that the greatest changes, from 4% to 27%, occurred for errors in the perception of the speed of an autonomous object ranging from −10% to +10%.

Similarly, large changes in sensitivity from 3% to 22% occurred for errors in the perception of the heading of an autonomous object ranging from −10% to +10%.

However, object position measurement errors from −10% to +10% caused the least sensitivity, ranging from 4% to 9%.

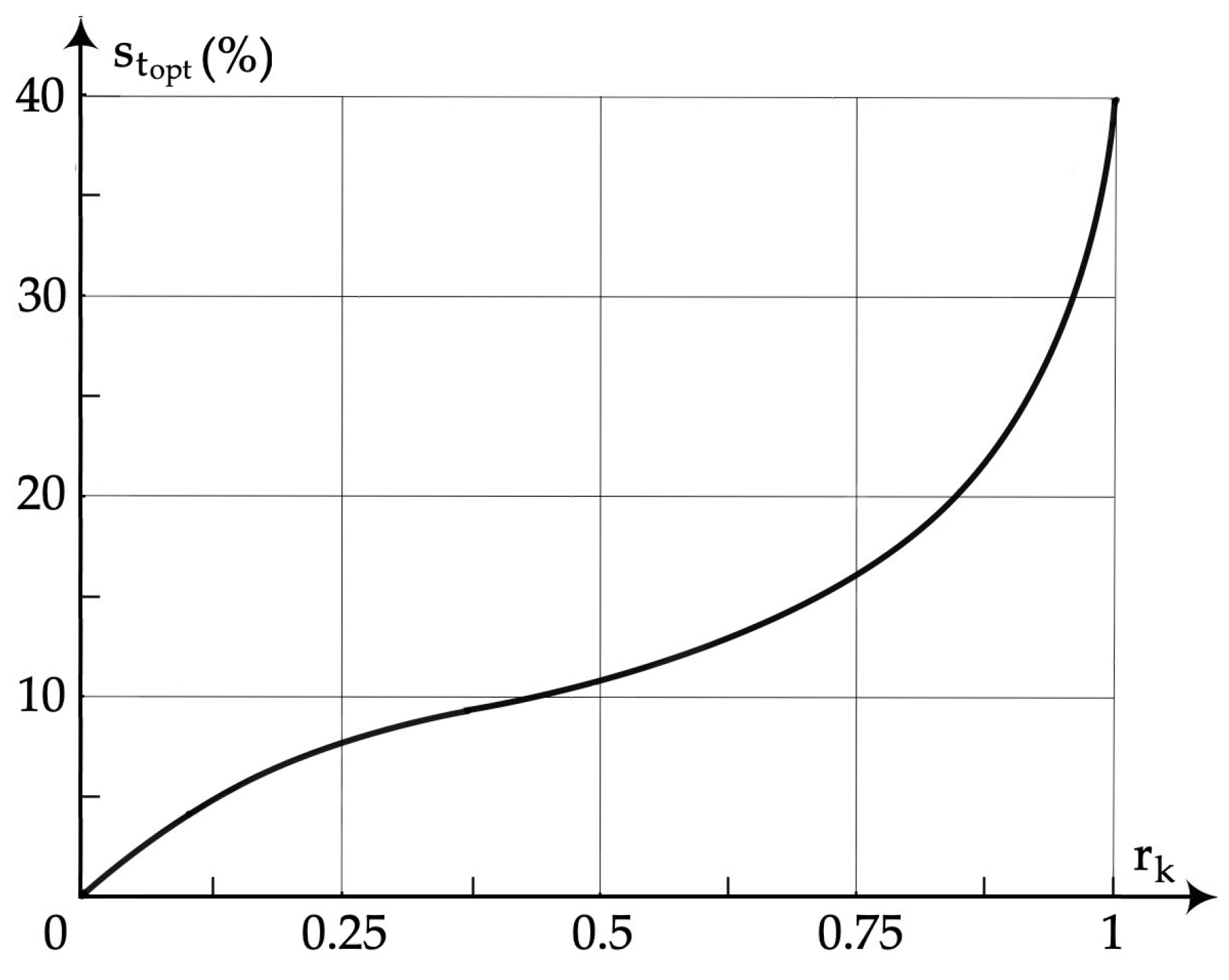

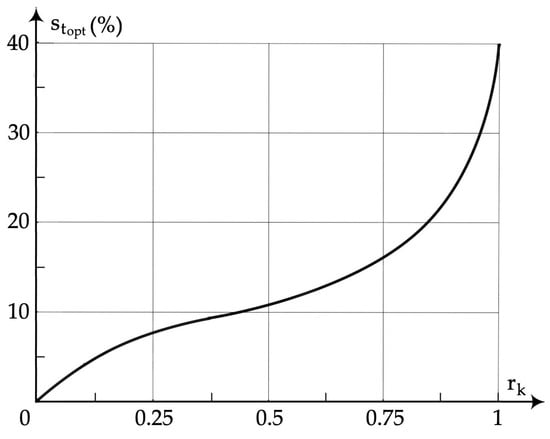

The sensitivity of the time-optimal autonomous driving path to the radar perception of the collision risk rk of objects is shown in Figure 9.

Figure 9.

Sensitivity characteristic of the time-optimal autonomous driving path to radar perception of the degree of collision risk.

A very important factor in time-optimal autonomous driving is its sensitivity to the state of the surrounding environment, which also affects the possible risk of collision.

In good autonomous driving conditions, when the assessed risk is at the “Attention” level (Figure 2), the sensitivity of the estimated time-optimal autonomous driving does not exceed 10%. However, in difficult environmental conditions (poor lighting, heavy rainfall, heavy traffic), rated as “Possible Risk”, “Dangerous” or “Collision”, the sensitivity of time-optimal autonomous driving increased from 15% to 40%.

This increase in the sensitivity of time-optimal autonomous driving was caused by taking into account the risk of collisions from passing autonomous vehicles, which were assigned neural domains of prohibited maneuvering areas of variable size.

6. Conclusions

The synthesis of a radar perception algorithm mapping the neural domains of autonomous objects characterizing their collision risk and the assessment of the degree of radar perception on the example of multi-object autonomous driving simulation presented in the article confirmed the thesis stated at the beginning of the work, that it is possible to improve methods of perception and control of the movement of autonomous vehicles by using an artificial neural network to generate domains of prohibited maneuvers of passing objects, contributing to increasing the safety of autonomous driving in various real conditions of the surrounding environment.

Simulation tests of the developed algorithm for autonomous driving with neural domains of collision risk allow for the assessment of the following factors affecting safe autonomous driving:

- The greatest changes in sensitivity, from 4% to 27%, occur for errors in the perception of the speed of an autonomous object ranging from −10% to +10%;

- Large changes in sensitivity from 3% to 22% occur for errors in the perception of the heading of an autonomous object ranging from −10% to +10%;

- Object position measurement errors from −10% to +10% cause the least sensitivity ranging from 4% to 9%;

- The increase in the sensitivity of time-optimal autonomous driving was caused by taking into account the risk of collisions from passing autonomous vehicles, which were assigned neural domains of prohibited maneuvering areas of variable size;

- The advantage of the presented method for determining the optimal safe autonomous driving path is its low sensitivity to the inaccuracy of the input data from the radar device;

- The limitation of the method is the reaction to changes in the direction and speed of passing vehicles during safe path calculations.

The plan for further research on the perception and optimal safe control of autonomous vehicles should include:

- Taking into account the properties of the autonomous driving process in situations where other autonomous vehicles do not respect the right of way;

- Application of a cooperative and non-cooperative positional game model that takes into account areas of prohibited maneuvers due to the obligation to maintain a safe distance when passing autonomous objects;

- Using the collision risk matrix game model, cooperative and non-cooperative;

- Optimization of the process of safe autonomous driving based on the selected particle swarm model, for example using the ant algorithm;

- Simulation of more complex autonomous driving situations, taking into account both a larger number of objects and various real scenarios of parallel two-way and one-way driving, with an intersection and a roundabout.

Funding

This research was funded by the research project “Methods for optimal and safe control of a larger number of autonomous objects” no. WE/2024/PZ/02, the Electrical Engineering Faculty, Gdynia Maritime University, Poland.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Aufrère, R.; Gowdy, J.; Mertz, C.; Thorpe, C.; Wang, C.C.; Yata, T. Perception for collision avoidance and autonomous driving. Mechatronics 2003, 13, 1149–1161.

- Marcano, M.; Matute, J.A.; Lattarulo, R.; Martí, E.; Pérez, J. Intelligent Control Approaches for Modeling and Control of Complex Systems. Complexity 2018, 2018, 7615123.

- Manghat, S.K. Multi Sensor Multi Object Tracking in Autonomous Vehicles. Master’s Thesis, Purdue University, Indianapolis, IN, USA, 12 December 2019.

- Sligar, P. Machine Learning-Based Radar Perception for Autonomous Vehicles Using Full Physics Simulation. IEEE Access 2020, 8, 51470–51476.

- Manjunath, A.; Liu, Y.; Henriques, B.; Engstle, A. Radar Based Object Detection and Tracking for Autonomous Driving. In Proceedings of the 2018 IEEE MTT-S International Conference on Crowaves for Intelligent Mobility (ICMIM), Munich, Germany, 15–17 April 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Hussain, M.I.; Azam, S.; Munir, F.; Khan, Z.; Jeon, M. Multiple Objects Tracking using Radar for Autonomous Driving. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Yang, B.; Ruo, R.; Liang, M.; Casas, S.; Urtasun, R. RadarNet: Exploiting Radar for Robust Perception of Dynamic Objects. arXiv 2020. [Google Scholar] [CrossRef]

- Kramer, A. Radar-Based Perception for Visually Degraded Environments. Ph.D. Thesis, University of Washington, Washington, DC, USA, 2021. [Google Scholar]

- Scheiner, N.; Weishaupt, F.; Tilly, J.F.; Dickmann, J. New Challenges for Deep Neural Networks in Automotive Radar Perception. In Automatisiertes Fahren; Bertram, T., Ed.; Springer Vieweg: Wiesbaden, Germany, 2021. [Google Scholar] [CrossRef]

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057. [Google Scholar] [CrossRef]

- Yao, S.; Guan, R.; Peng, Z.; Xu, C.; Shi, Y.; Yue, Y.; Lim, E.G.; Seo, H.; Man, K.L.; Zhu, X.; et al. Radar Perception in Autonomous Driving: Exploring Different Data Representations. arXiv 2021. [Google Scholar] [CrossRef]

- Tu, J.; Li, H.; Yan, X.; Ren, M.; Chen, Y.; Liang, M.; Bitar, E.; Yumer, E.; Urtasun, R. Exploring Adversarial Robustness of Multi-sensor Perception Systems in Self Driving. In Proceedings of the 5th Conference on Robot Learning, London, UK, 8–11 November 2021; Available online: https://proceedings.mlr.press/v164/tu22a.html (accessed on 20 February 2024).

- Hoss, M.; Scholtes, M.; Eckstein, L. A Review of Testing Object-Based Environment Perception for Safe Automated Driving. Automot. Innov. 2022, 5, 223–250. [Google Scholar] [CrossRef]

- Gao, X. Efficient and Enhanced Radar Perception for Autonomous Driving Systems. Ph.D. Thesis, University of Washington, Washington, DC, USA, 2023. Available online: https://hdl.handle.net/1773/50783 (accessed on 18 February 2024).

- Li, P.; Wang, P.; Berntorp, K.; Liu, H. Exploiting Temporal Relations on Radar Perception for Autonomous Driving. arXiv 2022. [Google Scholar] [CrossRef]

- Zhou, Y.; Lu, L.; Zhao, H.; López-Benítez, M.; Yu, L.; Yue, Y. Towards Deep Radar Perception for Autonomous Driving: Datasets, Methods, and Challenges. Sensors 2022, 22, 4208. [Google Scholar] [CrossRef]

- Huang, T. V2X Cooperative Perception for Autonomous Driving: Recent Advances and Challenges. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177. [Google Scholar] [CrossRef]

- Gragnaniello, D.; Greco, A.; Saggese, A.; Vento, M.; Vicinanza, A. Benchmarking 2D Multi-Object Detection and Tracking Algorithms in Autonomous Vehicle Driving Scenarios. Sensors 2023, 23, 4024. [Google Scholar] [CrossRef]

- Pandharipande, A.; Cheng, C.-H.; Dauwels, J.; Gurbuz, S.Z.; Guzman, J.I.; Li, G.; Piazzoni, A.; Wang, P.; Santra, A. Sensing and Machine Learning for Automotive Perception: A Review. IEEE Sens. J. 2023, 23, 11097–11115. [Google Scholar] [CrossRef]

- Barbosa, F.M.; Osorio, F.S. Camera-Radar Perception for Autonomous Vehicles and ADAS: Concepts, Datasets and Metrics. arXiv 2023. [Google Scholar] [CrossRef]

- Sun, C.; Li, Y.; Li, H.; Xu, E.; Li, Y.; Li, W. Forward Collision Warning Strategy Based on Millimeter-Wave Radar and Visual Fusion. Sensors 2023, 23, 9295. [Google Scholar] [CrossRef]

- Gebregziabher, B. Multi Object Tracking for Predictive Collision Avoidance. arXiv 2023. [Google Scholar] [CrossRef]

- Snider, J.M. Automatic Steering Methods for Autonomous Automobile Path Tracking; Tech. Rep. CMU-RITR-09-08; Robotics Institute: Pittsburgh, PA, USA, 2009; Available online: https://api.semanticscholar.org/CorpusID:17512121 (accessed on 16 February 2024).

- Lombard, A.; Hao, X.; Abbas-Turki, A.; Moudni, A.E.; Galland, S.; Yasar, A.U.H. Lateral Control of an Unmaned Car Using GNSS Positionning in the Context of Connected Vehicles. Procedia Comput. Sci. 2016, 98, 148–155. [Google Scholar] [CrossRef]

- Lee, D.H.; Chen, K.L.; Liou, K.H.; Liu, C.L.; Liu, J.L. Deep learning and control algorithms of direct perception for autonomous driving. Appl. Intell. 2019, 51, 237–247. Available online: https://api.semanticscholar.org/CorpusID:204907021 (accessed on 1 March 2024). [CrossRef]

- Muzahid, A.J.M.; Kamarulzaman, S.F.; Rahman, M.A.; Murad, S.A.; Kamal, A.S.; Alenezi, A.H. Multiple vehicle cooperation and collision avoidance in automated vehicles: Survey and an AI-enabled conceptual framework. Sci. Rep. 2023, 13, 603. [Google Scholar] [CrossRef]

- Sana, F.; Azad, N.L.; Raahemifar, K. Autonomous Vehicle Decision-Making and Control in Complex and Unconventional Scenarios—A Review. Machines 2023, 11, 676. [Google Scholar] [CrossRef]

- Abdallaoui, S.; Ikaouassen, H.; Kribeche, A.; Chaibet, A.; Aglzim, E. Advancing autonomous vehicle control systems: An in-depth overview of decision-making and manoeuvre execution state of the art. J. Eng. 2023, 2023, e12333. [Google Scholar] [CrossRef]

- He, Y.; Li, J.; Liu, J. Research on GNSS INS & GNSS/INS Integrated Navigation Method for Autonomous Vehicles: A Survey. IEEE Access 2023, 11, 79033–79055. [Google Scholar] [CrossRef]

- Ibrahim, A.; Abosekeen, A.; Azouz, A.; Noureldin, A. Enhanced Autonomous Vehicle Positioning Using a Loosely Coupled INS/GNSS-Based Invariant-EKF Integration. Sensors 2023, 23, 6097. [Google Scholar] [CrossRef]

- Localization, Path Planning, Control, and System Integration. Available online: https://master-engineer.com/2020/11/04/localization-path-planning-control-and-system-integration/ (accessed on 9 January 2024).

- Karle, P.; Furtner, L.; Lienkamp, M. Self-Evaluation of Trajectory Predictors for Autonomous Driving. Electronics 2024, 13, 946. [Google Scholar] [CrossRef]

- Yang, W.; Wang, X.; Luo, X.; Xie, S.; Chen, J. S2S-Sim: A Benchmark Dataset for Ship Cooperative 3D Object Detection. Electronics 2024, 13, 885. [Google Scholar] [CrossRef]

- Tsai, J.; Chang, Y.-T.; Chen, Z.-Y.; You, Z. Autonomous Driving Control for Passing Unsignalized Intersections Using the Segmentation Technique. Electronics 2024, 13, 484. [Google Scholar] [CrossRef]

- Cai, C.; Chen, B.; Qiu, J.; Xu, Y.; Li, M.; Yang, Y. Migratory Perception in Edge-Assisted Internet of Vehicles. Electronics 2023, 12, 3662. [Google Scholar] [CrossRef]

- Wang, L.; Song, C.; Sun, Y.; Lu, C.; Chen, Q. A Neural Multi-Objective Capacitated Vehicle Routing Optimization Algorithm Based on Preference Adjustment. Electronics 2023, 12, 4167. [Google Scholar] [CrossRef]

- Liu, W.; Hua, M.; Deng, Z.; Meng, Z.; Huang, Y.; Hu, C.; Song, S.; Gao, L.; Liu, C.; Shuai, B.; et al. A Systematic Survey of Control Techniques and Applications in Connected and Automated Vehicles. IEEE Internet Things J. 2023, 10, 21892–21916. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).