OpenWeedGUI: An Open-Source Graphical Tool for Weed Imaging and YOLO-Based Weed Detection

Abstract

1. Introduction

2. Materials and Methods

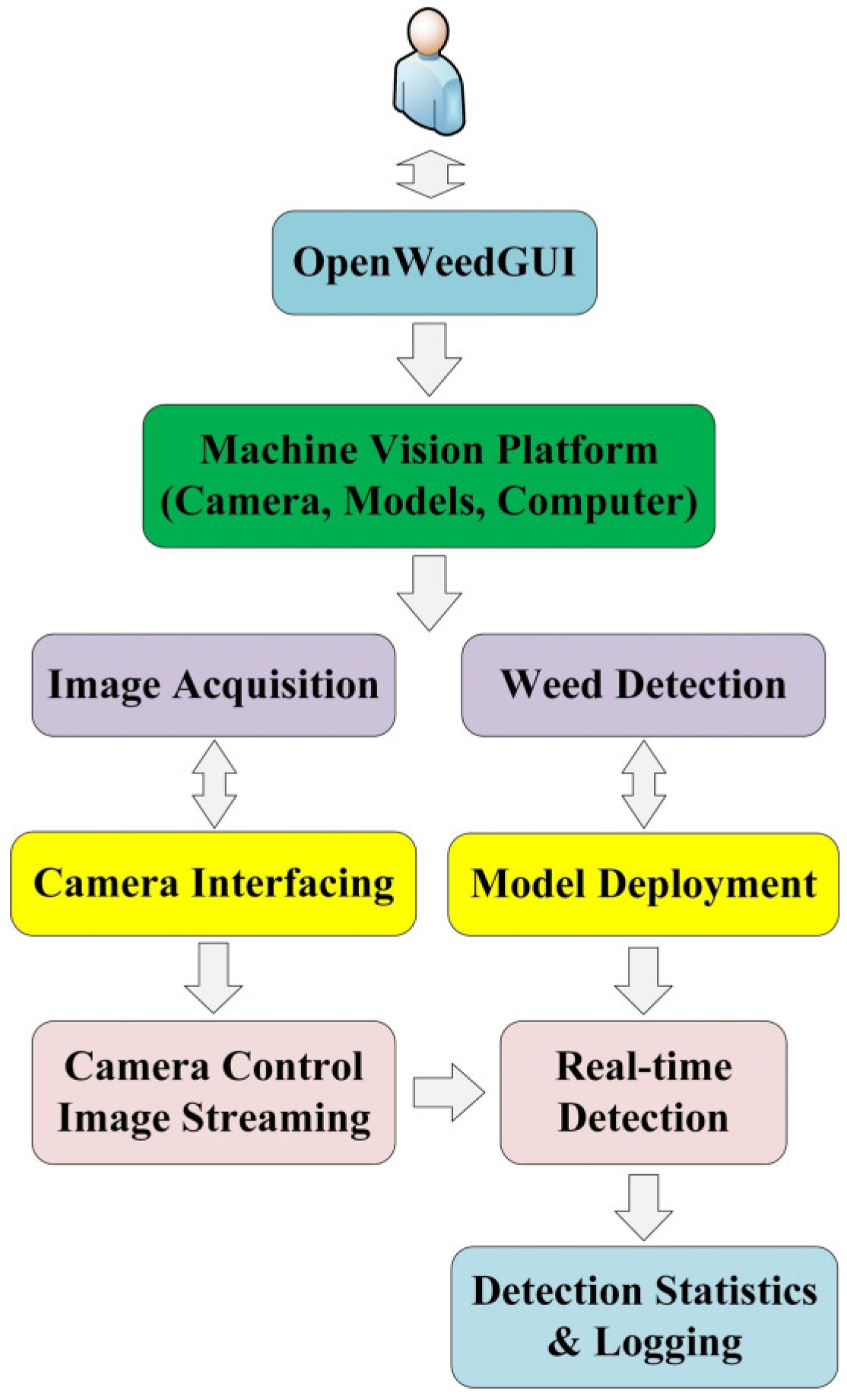

2.1. GUI Design Features

2.1.1. GUI Framework

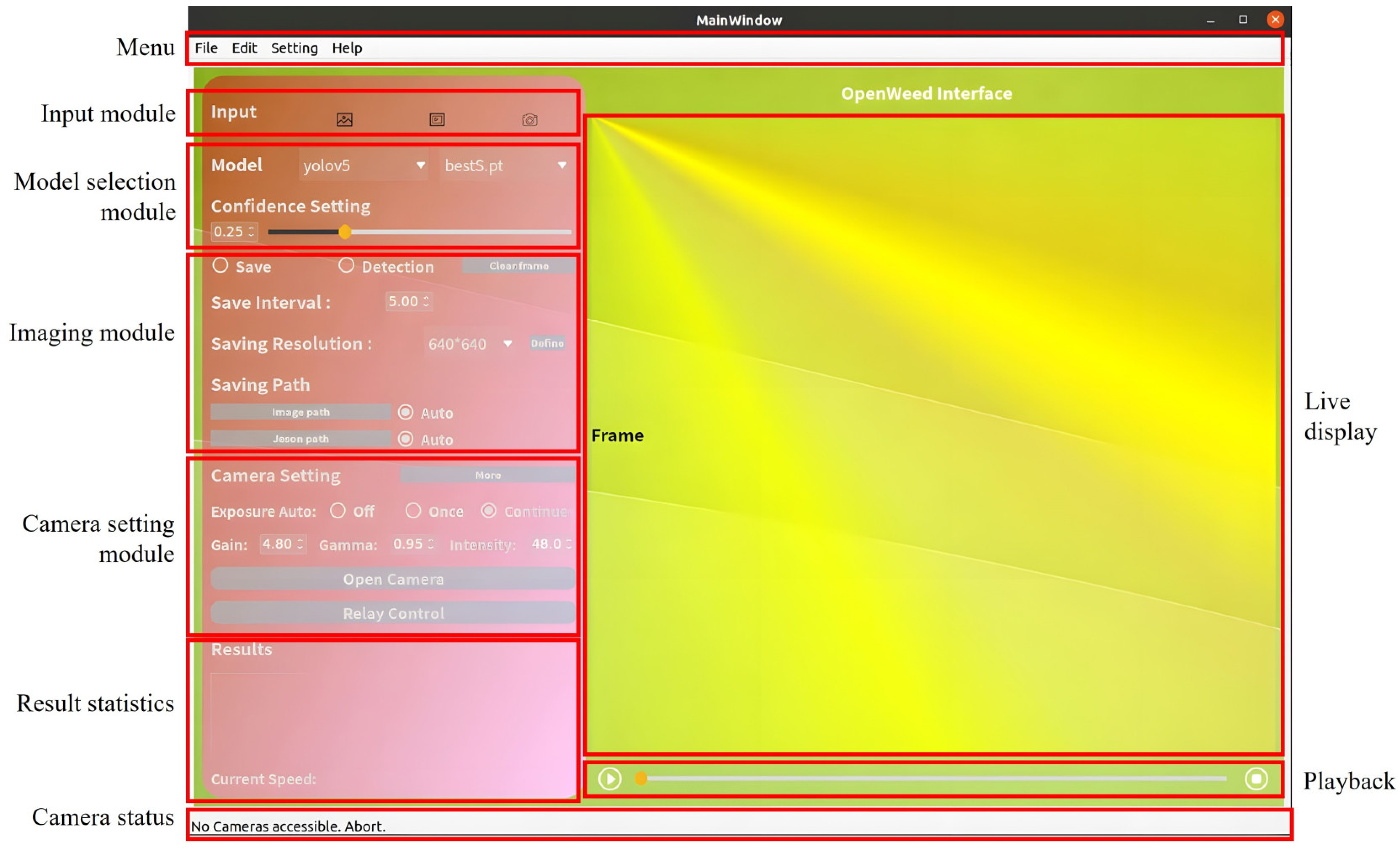

2.1.2. GUI Module Description

- Menu: The menu bar provides navigation of the interface functionalities, including system settings, help documentation, module shortcuts, and advanced camera control features such as setting the region of interest (ROI). The ROI setting restricts processing to a specific rectangular area of input images or live image streams, which can improve weed detection. Through the menu bar, users can switch among different operation tasks.

- Input Module: This module allows users to select the type of input data: images, videos, or real-time camera image streams (the primary target). This flexibility makes the GUI suited to real-time, online, and offline weed detection tasks.

- Model Selection Module: This module enables users to choose and deploy one of a collection of pre-trained YOLO models (Section 2.3) for weed detection. Users can also adjust the confidence threshold to tune the detection results. Models can be switched during the weed detection process, so users can settle on the model that best suits their detection needs.

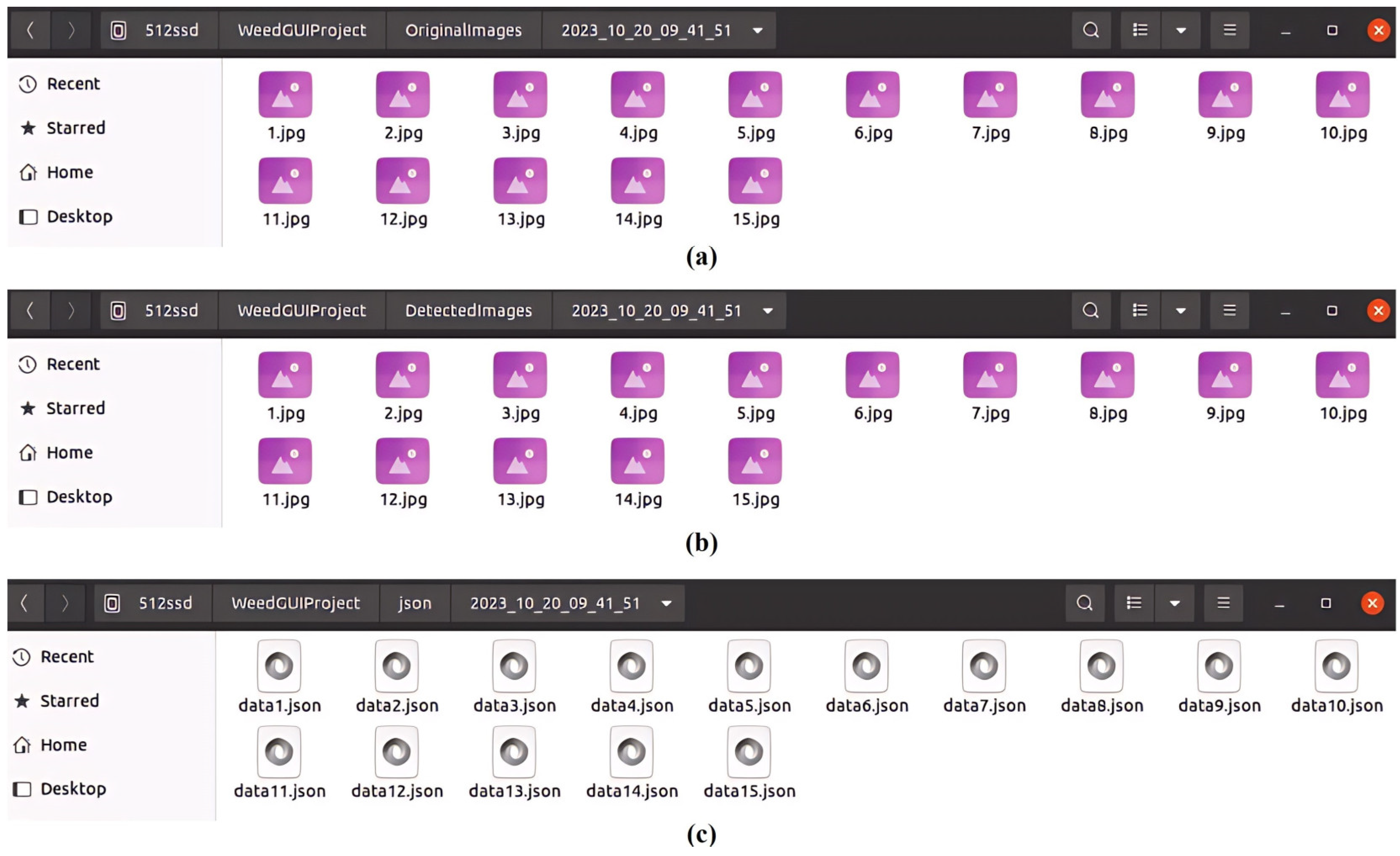

- Imaging Module: This module offers options for capturing and saving detected images. It has two checkboxes, one for saving and one for detection, so image saving can be enabled or disabled independently during the detection process. Users can specify a saving interval (in seconds) that determines how frequently images (in .jpg format) and the associated detection files (in .json format) are saved locally. Images can be saved at either the original or a customized resolution. When the “Save” checkbox is checked, three timestamped folders are created for the raw images, the corresponding images overlaid with detection bounding boxes, and the JSON files, respectively. Checking the “detection” checkbox runs weed detection with the selected model on camera video streams in real time.

- Camera Setting Module: This module allows users to interact with the imaging hardware and adjust camera parameters, including exposure time, gain, gamma, and intensity. Clicking the “More” button reveals additional color space settings: Saturation, Hue, and Value. The permissible adjustment ranges for these camera parameters, which are specific to the imaging hardware described in Section 2.2.1, are given in Table 1.

- Display Module: This module displays the source image data, with weed detection bounding boxes overlaid if the detection option is checked. It supports static images, external videos, and real-time camera video frames. The display is configured as a 640 × 640 pixel square, matching the input size of the YOLO models. The display size can be modified to suit other input requirements.

- Playback Control Module: This module comprises three components, a start button, a progress bar, and a stop button, for weed detection on external videos. With a video loaded, users start playback with the start button. Throughout playback, the checkboxes for detection and image saving remain accessible, and the progress bar lets users choose the range of the video for weed detection by adjusting its position.

- Status Output Module: This module reports real-time operational updates, such as processing status, alerts about potential issues, and confirmations of successful tasks. For instance, it displays connection statuses, indicating whether the camera is successfully connected or has been disconnected. It also specifies the source of the current video stream, whether a local file or a real-time camera. This keeps users informed throughout operation.
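The saving behavior described for the Model Selection and Imaging Modules can be illustrated with a minimal Python sketch. It is not the OpenWeedGUI implementation: the folder names, the JSON layout, and the names `make_session_dirs`, `filter_by_confidence`, and `IntervalSaver` are all hypothetical. Only the JSON record is written here; writing the .jpg images would require an imaging library and is omitted.

```python
import json
import time
from datetime import datetime
from pathlib import Path


def make_session_dirs(root, now=None):
    """Create three timestamped folders (hypothetical names): raw images,
    images overlaid with bounding boxes, and JSON detection files."""
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")
    dirs = {}
    for kind in ("raw", "detected", "json"):
        path = Path(root) / f"{kind}_{stamp}"
        path.mkdir(parents=True, exist_ok=True)
        dirs[kind] = path
    return dirs


def filter_by_confidence(detections, threshold):
    """Keep detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


class IntervalSaver:
    """Write a detection record at most once per `interval_s` seconds."""

    def __init__(self, dirs, interval_s, clock=time.monotonic):
        self.dirs = dirs
        self.interval_s = interval_s
        self.clock = clock  # injectable so the timing can be tested
        self._last_save = None

    def maybe_save(self, frame_name, detections):
        """Save the JSON record if the interval has elapsed; else return None."""
        now = self.clock()
        if self._last_save is not None and now - self._last_save < self.interval_s:
            return None
        self._last_save = now
        record = {"image": f"{frame_name}.jpg", "detections": detections}
        out = self.dirs["json"] / f"{frame_name}.json"
        out.write_text(json.dumps(record, indent=2))
        return out
```

In this sketch, a frame that arrives before the saving interval has elapsed is simply skipped, so the interval sets a lower bound on the time between saved files rather than an exact period.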

2.2. Hardware and Software

2.2.1. Hardware

2.2.2. Software

2.3. YOLO Models

3. Experimentation and Discussion

3.1. Indoor Experimentation

3.2. In-Field Testing

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Table 1. Camera parameters and their permissible adjustment ranges.

| Camera Parameter | Description | Range |
|---|---|---|
| Gain | Control the sensitivity of the camera’s sensor to light | [0, 24] |
| Gamma | Perform gamma correction | [0, 2.4] |
| Intensity | Set a specific target intensity level for the camera to maintain | [0, 100] |
| Saturation | Adjust the intensity of colors, making them more or less vivid | [0, 2] |
| Hue | Modify the overall tint and tone of the image | [0, 300] |
| Value | Adjust the brightness of the image | [0, 1] |
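As an illustration of how the ranges above might be enforced, the following Python sketch clamps a requested setting to its permissible range. The ranges are copied from the table; the clamping policy and the names `CAMERA_RANGES` and `clamp_camera_setting` are assumptions, and the GUI may instead reject out-of-range input or bound it through its widgets.

```python
# Permissible ranges as listed in the camera parameter table.
CAMERA_RANGES = {
    "gain": (0.0, 24.0),
    "gamma": (0.0, 2.4),
    "intensity": (0.0, 100.0),
    "saturation": (0.0, 2.0),
    "hue": (0.0, 300.0),
    "value": (0.0, 1.0),
}


def clamp_camera_setting(name, requested):
    """Return `requested` limited to the permissible range for `name`.

    Clamping is an assumed policy for this sketch, not documented behavior.
    Raises KeyError for a parameter that is not in the table.
    """
    low, high = CAMERA_RANGES[name.lower()]
    return min(max(float(requested), low), high)
```

For example, `clamp_camera_setting("Gain", 30)` returns `24.0`, the upper bound listed for gain.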

| YOLO Variant | Model in GUI | Weight File | Input Size | Number of Parameters (Million) | URL |
|---|---|---|---|---|---|
| YOLOv3 | YOLOv3-tiny | best-tiny.pt | 640 × 640 | 8.6 | https://github.com/ultralytics/YOLOv3 (accessed on 9 July 2023) |
| | YOLOv3 | best3.pt | 640 × 640 | 61.5 | |
| | YOLOv3-SPP | bestSPP.pt | 640 × 640 | 62.6 | |
| YOLOv4 | YOLOv4pacsp-s | bestv4_pacsp-s.pt | 640 × 640 | 8.1 | https://github.com/WongKinYiu/PyTorch_YOLOv4 (accessed on 9 July 2023) |
| | YOLOv4pacsp | bestv4_pacsp.pt | 640 × 640 | 45.4 | |
| | YOLOv4 | bestv4.pt | 640 × 640 | 63.2 | |
| | YOLOv4pacsp-x | bestv4_pacsp-x.pt | 640 × 640 | 97.8 | |
| Scaled-YOLOv4 | YOLOv4-P5 | bestsv4-p5.pt | 640 × 640 | 72.6 | https://github.com/WongKinYiu/ScaledYOLOv4 (accessed on 9 July 2023) |
| | YOLOv4-P6 | bestsv4-p6.pt | 640 × 640 | 128.6 | |
| | YOLOv4-P7 | bestsv4-p7.pt | 640 × 640 | 287.9 | |
| YOLOR | YOLOR-P6 | bestR-P6.pt | 640 × 640 | 37.5 | https://github.com/WongKinYiu/yolor (accessed on 9 July 2023) |
| | YOLOR-CSP | bestR-CSP.pt | 640 × 640 | 53.3 | |
| | YOLOR-CSP-X | bestR-CSP-X.pt | 640 × 640 | 102.4 | |
| YOLOv5 | YOLOv5n | bestN.pt | 640 × 640 | 1.9 | https://github.com/ultralytics/YOLOv5 (accessed on 9 July 2023) |
| | YOLOv5s | bestS.pt | 640 × 640 | 7.2 | |
| | YOLOv5m | bestM.pt | 640 × 640 | 21.2 | |
| | YOLOv5l | bestL.pt | 640 × 640 | 46.5 | |
| | YOLOv5x | bestX.pt | 640 × 640 | 86.7 | |
| YOLOv5 + TensorRT | YOLOv5n | bestN.engine | 640 × 640 | 1.9 | https://github.com/wang-xinyu/tensorrtx/tree/master/yolov5 (accessed on 9 July 2023) |
| | YOLOv5s | bestS.engine | 640 × 640 | 7.2 | |
| | YOLOv5m | bestM.engine | 640 × 640 | 21.2 | |
| | YOLOv5l | bestL.engine | 640 × 640 | 46.5 | |
| | YOLOv5x | bestX.engine | 640 × 640 | 86.7 | |
| YOLOX | YOLOX-s | bests_ckpt.pth | 640 × 640 | 9.0 | https://github.com/Megvii-BaseDetection/YOLOX (accessed on 9 July 2023) |
| | YOLOX-m | bestm_ckpt.pth | 640 × 640 | 25.3 | |
| | YOLOX-l | best_ckpt.pth | 800 × 800 | 54.2 | |
| | YOLOX-x | bestx_ckpt.pth | 800 × 800 | 99.1 | |
| YOLOv8 | YOLOv8s | yolov8bestS.pt | 640 × 1200 | 28.6 | https://github.com/ultralytics/ultralytics (accessed on 9 July 2023) |
| | YOLOv8m | yolov8bestM.pt | 640 × 640 | 78.9 | |
| | YOLOv8l | yolov8bestL.pt | 800 × 800 | 165.2 | |
| | YOLOv8x | yolov8bestX.pt | 640 × 640 | 257.8 | |
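The weight files in the table above use three extensions (.pt, .engine, and .pth), which suggests that a model selector must dispatch on the file type. The Python sketch below shows one way this could look; the backend labels and the names `backend_for` and `largest_model_within` are hypothetical, and only the YOLOv5 rows of the table are reproduced.

```python
from pathlib import Path

# Assumed mapping from weight-file extension to an inference backend label.
BACKEND_BY_SUFFIX = {
    ".pt": "pytorch",
    ".engine": "tensorrt",
    ".pth": "yolox-checkpoint",
}

# (model name, weight file, parameters in millions), from the YOLOv5 rows.
YOLOV5_MODELS = [
    ("YOLOv5n", "bestN.pt", 1.9),
    ("YOLOv5s", "bestS.pt", 7.2),
    ("YOLOv5m", "bestM.pt", 21.2),
    ("YOLOv5l", "bestL.pt", 46.5),
    ("YOLOv5x", "bestX.pt", 86.7),
]


def backend_for(weight_file):
    """Infer the backend label from the weight-file extension."""
    suffix = Path(weight_file).suffix.lower()
    if suffix not in BACKEND_BY_SUFFIX:
        raise ValueError(f"unsupported weight file: {weight_file}")
    return BACKEND_BY_SUFFIX[suffix]


def largest_model_within(budget_millions, models=YOLOV5_MODELS):
    """Return the largest model whose parameter count fits the budget,
    or None if no model fits."""
    fitting = [m for m in models if m[2] <= budget_millions]
    return max(fitting, key=lambda m: m[2]) if fitting else None
```

Choosing by parameter count is only a rough proxy for speed and accuracy; in practice the choice would be guided by measured detection performance on the target hardware.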


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, J.; Lu, Y.; Deng, B. OpenWeedGUI: An Open-Source Graphical Tool for Weed Imaging and YOLO-Based Weed Detection. Electronics 2024, 13, 1699. https://doi.org/10.3390/electronics13091699