Enhancing Security in Software Design Patterns and Antipatterns: A Framework for LLM-Based Detection

Abstract

1. Introduction

2. State of the Art

2.1. LLMs and Their Application in Software Security

2.2. Limitations of Traditional Static Analysis Tools

- Lack of Contextual Awareness: Static analysis tools operate without a complete understanding of the broader context, often evaluating code at the function or file level. This limitation prevents them from detecting complex security patterns that span multiple components or layers. For example, issues related to Feature Envy or God Object require an understanding of relationships across components, which these tools struggle to capture.

- High Rate of False Positives: Due to their rigid rule-based detection, static analysis tools tend to produce many false positives, as they cannot discern the purpose of the code or the context in which it executes. This can lead to tagging secure code as potentially vulnerable, increasing the manual workload for developers who must review each alert.

- Limited Detection of Complex Patterns and Architectural Issues: Static tools focus mainly on identifying isolated vulnerabilities but lack the capacity to detect architectural issues that degrade security over time. This makes static tools ineffective at identifying complex antipatterns like Spaghetti Code, where components are tangled in a chaotic way, making the system difficult to audit and secure.

- Limited Adaptability and Scalability: Static analysis tools are inflexible because they rely on pre-established rules and are not readily adaptable to new software designs or emerging threats. Additionally, integrating these tools into CI/CD pipelines can slow down development on large projects, as they struggle to scale efficiently for the real-time analysis of complex dependencies.
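The false-positive and missing-context limitations above can be illustrated with a toy rule-based scanner. This is a minimal sketch, not any real tool's rule set: the `RULE` pattern, the `rule_based_scan` function, and the sample code are all hypothetical and chosen only to show how a fixed textual rule behaves.

```python
import re

# Hypothetical fixed rule: flag any string assignment to a name containing "password".
RULE = re.compile(r"\b\w*password\w*\s*=\s*[\"'].+[\"']", re.IGNORECASE)


def rule_based_scan(source: str) -> list[int]:
    """Return the 1-based line numbers that match the fixed rule."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if RULE.search(line)
    ]


SAMPLE = '''\
password_prompt = "Enter your password: "
db_password = "hunter2"
secret = "hunter2"
'''

findings = rule_based_scan(SAMPLE)
print(findings)
```

In this sample the rule flags line 1, which is only a user-facing prompt (a false positive), flags line 2 correctly, and misses line 3, where the same credential is stored under a name the rule does not know about. The rule sees text, not the purpose of the code.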

2.3. Key Security Heuristics Guiding LLM Analysis

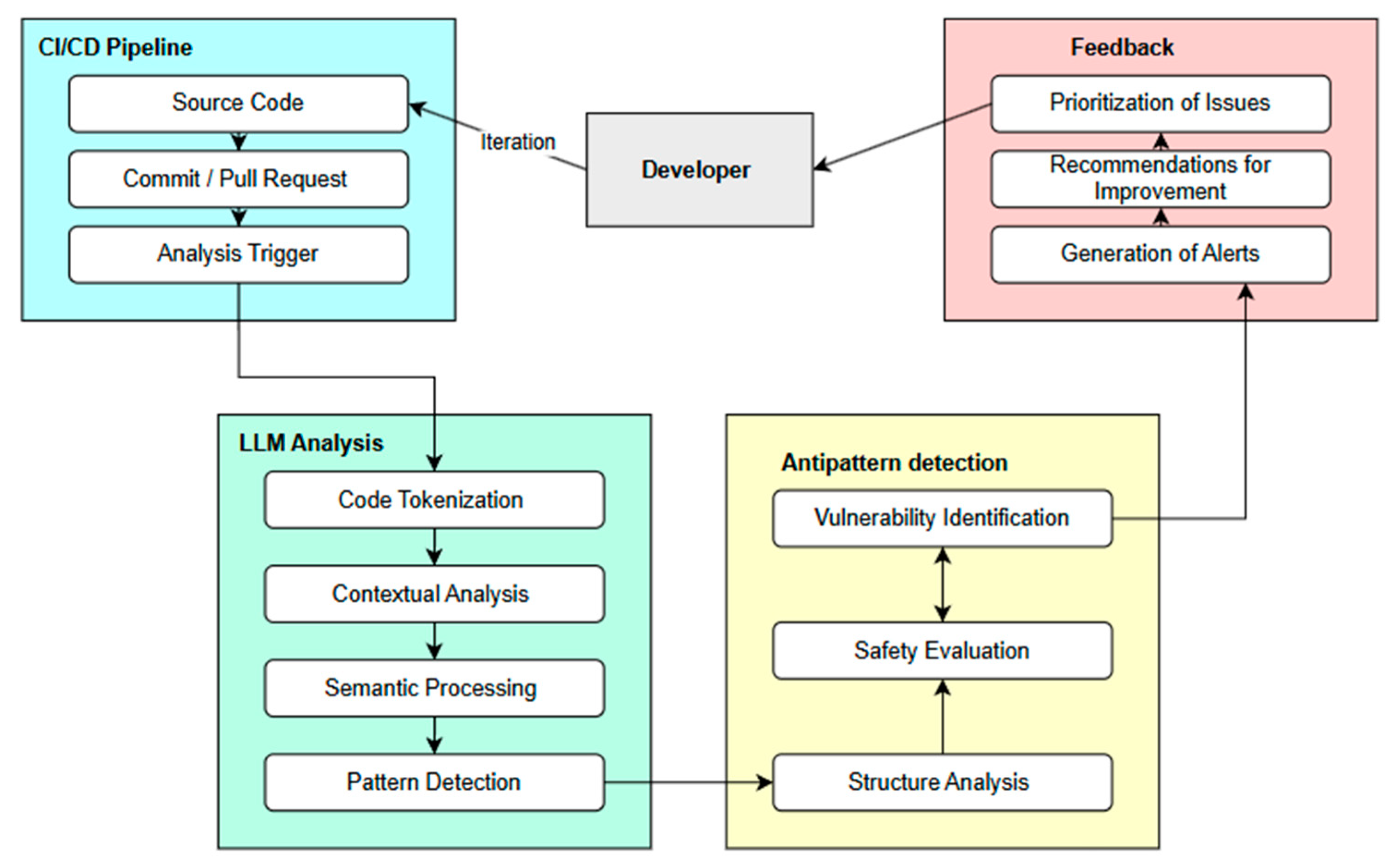

3. Methodology: Theoretical Framework for Detecting Antipatterns in CI/CD Using LLMs

- 1

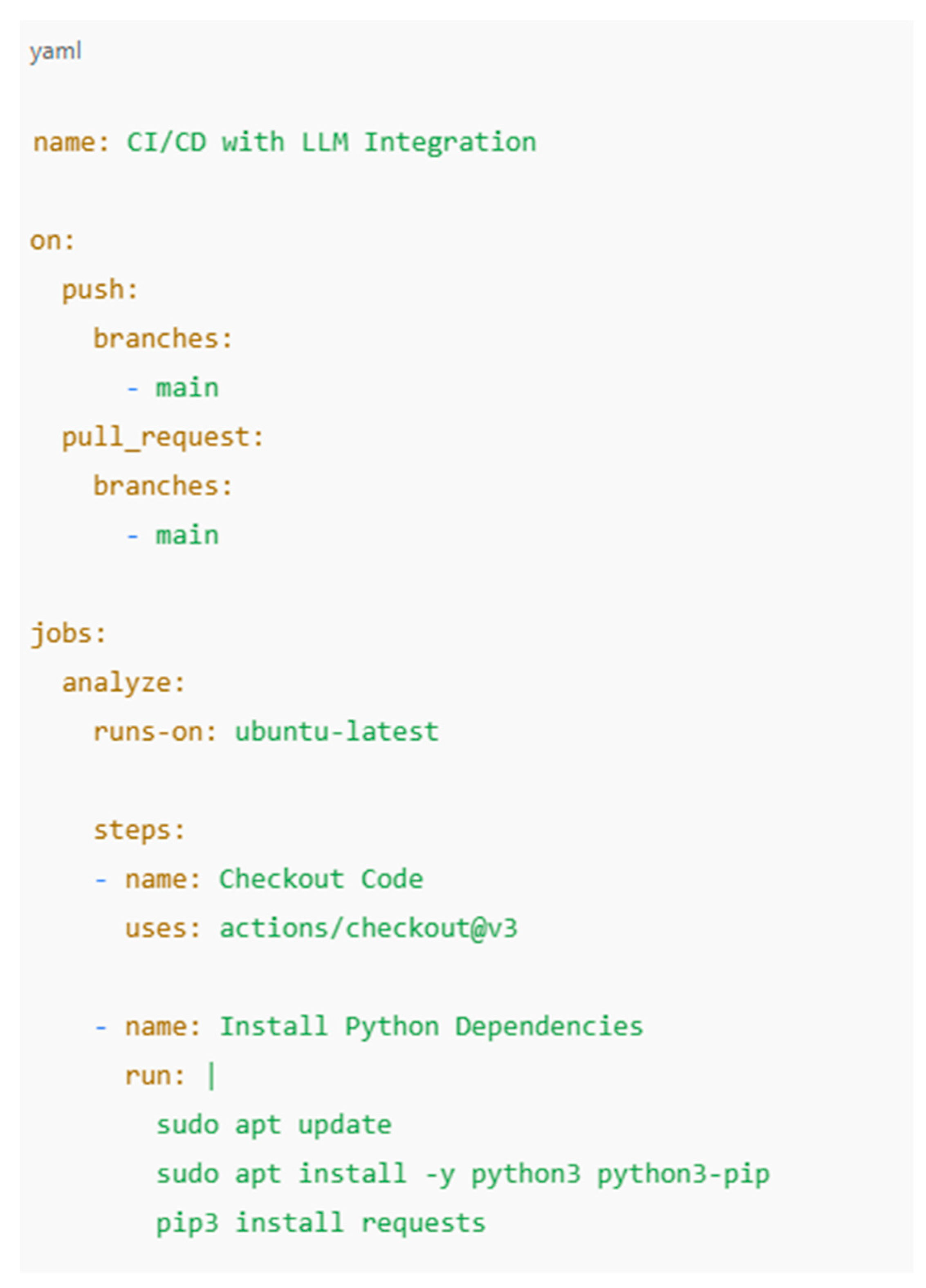

- Integration into CI/CD Pipelines:

- LLMs are integrated into tools such as Jenkins, GitLab CI/CD, or GitHub Actions to monitor code at specific stages.

- For instance, during a code commit, the LLM scans for potential antipatterns and provides immediate feedback to developers

- 2

- Tokenization and Contextual Analysis:

- Each code snippet undergoes tokenization, yielding embeddings for every element. These embeddings are processed using the LLM’s attention mechanism, prioritizing critical structures like control flows and variable dependencies.

- 3

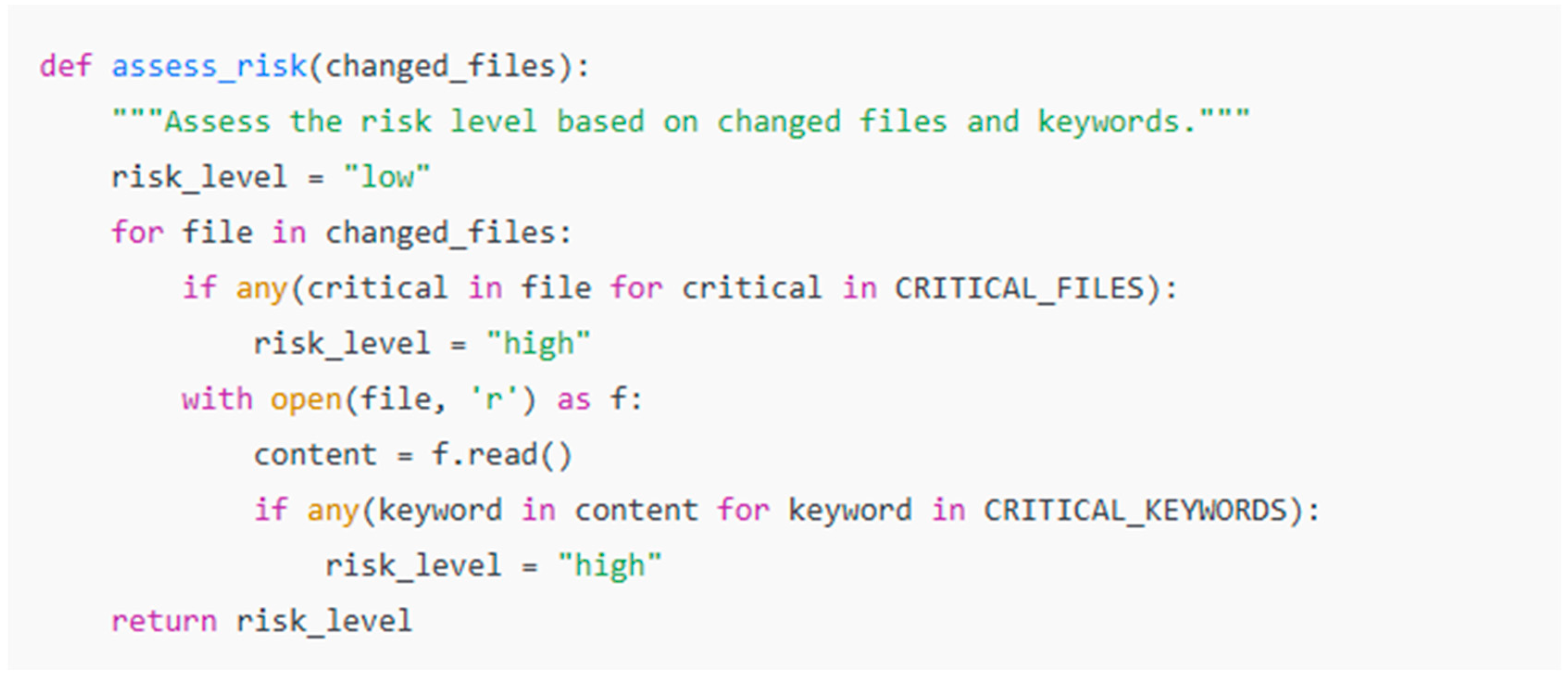

- Security Heuristic Application:

- The LLM employs heuristics, such as the principle of least privilege and input validation, to evaluate code. For instance, it identifies modules with excessive permissions or inadequate data sanitization.

- 4

- Feedback and Remediation:

- The framework generates actionable recommendations, such as refactoring suggestions for God Objects or secure alternatives for deprecated encryption algorithms.
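The heuristic and remediation steps above can be sketched with a small deterministic stand-in. The code below is not the LLM-based analysis itself; it uses Python's `ast` module to mimic two of the checks mentioned (a God Object candidate and a weak hash function) and attaches a recommendation to each finding. The `MAX_METHODS` threshold, the `WEAK_HASHES` table, and the `Finding` and `analyze` names are assumptions made for illustration.

```python
import ast
from dataclasses import dataclass

# Hypothetical threshold and advice table; a real deployment would tune both.
MAX_METHODS = 3
WEAK_HASHES = {"md5": "hashlib.sha256", "sha1": "hashlib.sha256"}


@dataclass
class Finding:
    line: int
    issue: str
    recommendation: str


def analyze(source: str) -> list[Finding]:
    """Walk the syntax tree and collect findings with remediation hints."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ClassDef):
            # Heuristic 1: a class with many methods may be a God Object.
            methods = [n for n in node.body if isinstance(n, ast.FunctionDef)]
            if len(methods) > MAX_METHODS:
                findings.append(Finding(
                    node.lineno,
                    f"possible God Object: {node.name}",
                    "split responsibilities into smaller classes",
                ))
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute):
            # Heuristic 2: any call to an attribute named like a weak hash.
            if node.func.attr in WEAK_HASHES:
                findings.append(Finding(
                    node.lineno,
                    f"weak hash: {node.func.attr}",
                    f"use {WEAK_HASHES[node.func.attr]} instead",
                ))
    return sorted(findings, key=lambda f: f.line)


SAMPLE = '''\
import hashlib

class Manager:
    def load(self): ...
    def save(self): ...
    def render(self): ...
    def bill(self): ...

def digest(data):
    return hashlib.md5(data).hexdigest()
'''

for finding in analyze(SAMPLE):
    print(finding.line, finding.issue, "->", finding.recommendation)
```

A fixed check like this is exactly the kind of rule the framework aims to go beyond; the sketch only shows the shape of a finding paired with a recommendation, which is the output format the feedback step describes.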

Real-World Implementation Suggestions

4. Discussion

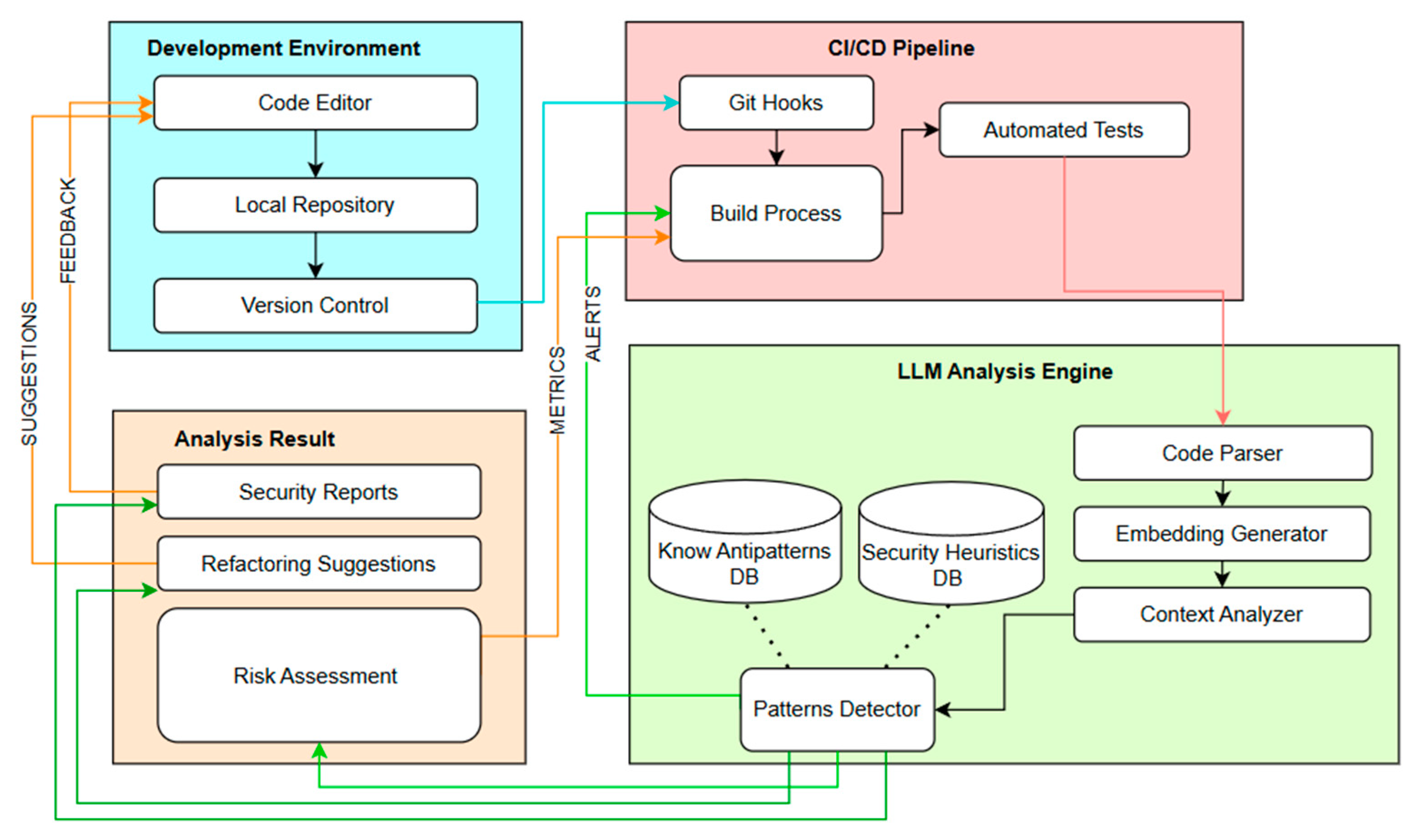

4.1. Conceptual Architecture of the LLM with Security Heuristics

- Development environment:

- Integrates with industry-standard development tools.

- Provides instant feedback to developers.

- Manages version control integration.

- CI/CD Pipeline:

- Manages automated build processes.

- Executes test suites.

- Manages deployment workflows.

- LLM Analysis Engine:

- Processes code with a specialized analyzer.

- Generates code embeddings for analysis.

- Applies security and pattern matching heuristics.

- Maintains databases of known antipatterns and security rules.

- Analysis Results:

- Generates comprehensive security reports.

- Provides practical refactoring recommendations.

- Provides metrics for risk assessment.

- Real-time information for developers.

- Continuous integration into development workflows.

- Automated security assessment.

- Contextual analysis of code changes.
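As a rough illustration of how the Analysis Results component might turn findings into a report with a risk metric, consider the sketch below. The severity weights, the blocking threshold, and the `Finding` and `build_report` names are hypothetical; the source does not specify how risk is scored.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical severity weights and gate threshold; not taken from the paper.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7}
BLOCK_THRESHOLD = 10


@dataclass(frozen=True)
class Finding:
    file: str
    antipattern: str
    severity: str  # one of the SEVERITY_WEIGHT keys


def build_report(findings: list[Finding]) -> dict:
    """Aggregate findings into a small report a pipeline step could publish."""
    score = sum(SEVERITY_WEIGHT[f.severity] for f in findings)
    return {
        "risk_score": score,
        "blocked": score >= BLOCK_THRESHOLD,
        "per_file": dict(Counter(f.file for f in findings)),
    }


report = build_report([
    Finding("billing.py", "God Object", "high"),
    Finding("billing.py", "Spaghetti Code", "medium"),
    Finding("utils.py", "Lava Flow", "low"),
])
print(report)
```

A pipeline step could fail the build when `blocked` is true and attach the per-file counts to the commit as feedback; how the weights are chosen would depend on the project's risk tolerance.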

4.1.1. LLM Architecture in CI/CD Environments

- Behavioral Pattern: This column lists the behavioral design patterns from the Gang of Four (GoF) catalog, which focus on communication between objects and the assignment of responsibilities in a system. It includes well-known patterns such as Chain of Responsibility, Observer, and Visitor, each addressing a specific design problem.

- Security Risks: This column describes the potential security vulnerabilities associated with each behavioral pattern when it is implemented without proper security measures. Examples include unvalidated data flow, unauthorized state changes, sensitive data exposure, and weak algorithm selection. These risks arise when the pattern permits unsafe or unintended behavior in its interactions or data handling.

4.1.2. Predictive Vulnerability Identification

4.1.3. Real-Time Analysis in CI/CD Pipelines

4.1.4. Detecting Complex Vulnerabilities

4.1.5. Faster Remediation Suggestions

4.1.6. Context-Aware Vulnerability Detection

4.2. Comparative Analysis with Traditional Tools

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Minna, F.; Massacci, F.; Tuma, K. Analyzing and mitigating (with LLMs) the security misconfigurations of Helm charts from Artifact Hub. arXiv 2024, arXiv:2403.09537.

2. Charalambous, Y.; Tihanyi, N.; Sun, Y.; Ferrag, M.A.; Cordeiro, L. A New Era in Software Security: Towards Self-Healing Software via Large Language Models and Formal Verification. arXiv 2023, arXiv:2305.14752.

3. Bagai, R.; Masrani, A.; Ranjan, P.; Najana, M. Implementing Continuous Integration and Deployment (CI/CD) for Machine Learning Models on AWS. Int. J. Glob. Innov. Solut. IJGIS 2024.

4. Chen, T. Challenges and Opportunities in Integrating LLMs into Continuous Integration/Continuous Deployment (CI/CD) Pipelines. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 364–367.

5. Vighe, S. Security for Continuous Integration and Continuous Deployment Pipeline. Int. Res. J. Mod. Eng. Technol. Sci. 2024, 6, 2325–2330.

6. Chen, L.; Guo, Q.; Jia, H.; Zeng, Z.; Wang, X.; Xu, Y.; Wu, J.; Wang, Y.; Gao, Q.; Wang, J.; et al. A Survey on Evaluating Large Language Models in Code Generation Tasks. arXiv 2024, arXiv:2408.16498.

7. Di Penta, M. Understanding and Improving Continuous Integration and Delivery Practice using Data from the Wild. In Proceedings of the 13th Innovations in Software Engineering Conference (Formerly Known as India Software Engineering Conference) (ISEC ’20), Jabalpur, India, 27–29 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; p. 1.

8. Hilton, M.; Nelson, N.; Tunnell, T.; Marinov, D.; Dig, D. Trade-offs in continuous integration: Assurance, security, and flexibility. In Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering (ESEC/FSE 2017), Paderborn, Germany, 4–8 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 197–207.

9. Tihanyi, N.; Ferrag, M.A.; Jain, R.; Bisztray, T.; Debbah, M. CyberMetric: A Benchmark Dataset based on Retrieval-Augmented Generation for Evaluating LLMs in Cybersecurity Knowledge. In Proceedings of the 2024 IEEE International Conference on Cyber Security and Resilience (CSR), London, UK, 2–4 September 2024; pp. 296–302.

10. Chen, Y.; Cui, M.; Wang, D.; Cao, Y.; Yang, P.; Jiang, B.; Lu, Z.; Liu, B. A survey of large language models for cyber threat detection. Comput. Secur. 2024, 145, 104016.

11. Paulo; Realpe, C.; Collazos, C.A.; Hurtado, J.; Granollers, A. A Set of Heuristics for Usable Security and User Authentication. In Proceedings of the XVII International Conference on Human Computer Interaction (Interacción ’16), Salamanca, Spain, 13–16 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; p. 21.

12. Jaferian, P.; Hawkey, K.; Sotirakopoulos, A.; Velez-Rojas, M.; Beznosov, K. Heuristics for evaluating IT security management tools. In Proceedings of the Seventh Symposium on Usable Privacy and Security (SOUPS ’11), Pittsburgh, PA, USA, 20–22 July 2011; Association for Computing Machinery: New York, NY, USA, 2011; p. 7.

13. Patil, R.; Gudivada, V. A Review of Current Trends, Techniques, and Challenges in Large Language Models (LLMs). Appl. Sci. 2024, 14, 2074.

14. Szabó, Z.; Bilicki, V. A New Approach to Web Application Security: Utilizing GPT Language Models for Source Code Inspection. Future Internet 2023, 15, 326.

15. Zhang, C.; Wang, L.; Fan, D.; Zhu, J.; Zhou, T.; Zeng, L.; Li, Z. VTT-LLM: Advancing Vulnerability-to-Tactic-and-Technique Mapping through Fine-Tuning of Large Language Model. Mathematics 2024, 12, 1286.

16. Diaba, S.Y.; Shafie-Khah, M.; Elmusrati, M. Cyber Security in Power Systems Using Meta-Heuristic and Deep Learning Algorithms. IEEE Access 2023, 11, 18660–18672.

17. Cabrero-Daniel, B.; Herda, T.; Pichler, V.; Eder, M. Exploring Human-AI Collaboration in Agile: Customised LLM Meeting Assistants. In Agile Processes in Software Engineering and Extreme Programming; Šmite, D., Guerra, E., Wang, X., Marchesi, M., Gregory, P., Eds.; XP 2024. Lecture Notes in Business Information Processing; Springer: Cham, Switzerland, 2024; Volume 512.

18. Raj, R.; Costa, D.E. The role of library versions in Developer-ChatGPT conversations. In Proceedings of the 21st International Conference on Mining Software Repositories (MSR ’24), Lisbon, Portugal, 15–16 April 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 172–176.

19. Fürntratt, H.; Schnabl, P.; Krebs, F.; Unterberger, R.; Zeiner, H. Towards Higher Abstraction Levels in Quantum Computing. In Service-Oriented Computing—ICSOC 2023 Workshops; Monti, F., Plebani, P., Moha, N., Paik, H., Barzen, J., Ramachandran, G., Bianchini, D., Tamburri, D., Mecella, M., Eds.; ICSOC 2023. Lecture Notes in Computer Science; Springer: Singapore, 2024; Volume 14518.

20. Li, G.; Peng, X.; Wang, Q.; Xie, T.; Jin, Z.; Wang, J.; Ma, X.; Li, X. Large Models: Challenges of Natural Interaction-Based Human-Machine Collaborative Tools for Software Development and Evolution. J. Softw. 2023, 34, 4601–4606.

21. Cotroneo, D.; De Luca, R.; Liguori, P. DeVAIC: A tool for security assessment of AI-generated code. Inf. Softw. Technol. 2025, 177, 107572.

22. Ullah, S.; Han, M.; Pujar, S.; Pearce, H.; Coskun, A.; Stringhini, G. LLMs Cannot Reliably Identify and Reason About Security Vulnerabilities (Yet?): A Comprehensive Evaluation, Framework, and Benchmarks. In IEEE Symposium on Security and Privacy; IEEE Xplorer: San Francisco, CA, USA, 2024; pp. 862–880.

23. Al-Hawawreh, M.; Aljuhani, A.; Jararweh, Y. Chatgpt for cybersecurity: Practical applications, challenges, and future directions. Cluster Comput. 2023, 26, 3421–3436.

24. Wu, H.; Yu, Z.; Huang, D.; Zhang, H.; Han, W. Automated Enforcement of the Principle of Least Privilege over Data Source Access. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December–1 January 2020; pp. 510–517.

25. Romano, S.; Zampetti, F.; Baldassarre, M.T.; Di Penta, M.; Scanniello, G. Do Static Analysis Tools Affect Software Quality when Using Test-driven Development? In Proceedings of the ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM) (ESEM ’22), Helsinki, Finland, 19–23 September 2022; ACM: New York, NY, USA, 2022. 12p.

26. Walker, A.; Coffey, M.; Tisnovsky, P.; Cerny, T. On limitations of modern static analysis tools. In Information Science and Applications; Kim, K.J., Kim, H.-Y., Eds.; Springer: Berlin/Heidelberg, Germany; pp. 577–586.

27. Yang, B.; Hu, H. An Efficient Verification Approach to Separation of Duty in Attribute-Based Access Control. IEEE Trans. Knowl. Data Eng. 2024, 36, 4428–4442.

28. Barlas, E.; Du, X.; Davis, J.C. Exploiting input sanitization for regex denial of service. In Proceedings of the 44th International Conference on Software Engineering (ICSE ’22), Pittsburgh, PA, USA, 21–29 May 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 883–895.

| Article | Research Focus |
|---|---|
| Exploring Human-AI Collaboration in Agile: Customized LLM Meeting Assistants [17]. | Develop adaptive LLM tools tailored for Agile methodologies to enhance team collaboration and automate routine meeting tasks. |
| The role of library versions in Developer-ChatGPT conversations [18]. | Create compatibility analysis tools to address issues in developer–LLM interactions caused by mismatched library versions. |
| Towards Higher Abstraction Levels in Quantum Computing [19]. | Investigate new abstraction models and frameworks to simplify quantum algorithm development and improve accessibility for non-expert developers. |
| Challenges from LLMs as a Natural Language Based Human–machine Collaborative Tool for Software Development and Evolution [20]. | Explore strategies to address limitations of LLMs in software development, such as error handling, version control, and maintaining collaboration quality. |
| DeVAIC: A tool for security assessment of AI-generated code [21]. | Development of DeVAIC, a tool for systematic security assessment of AI-generated code, addressing vulnerabilities in automated code. |
| LLMs Cannot Reliably Identify and Reason About Security Vulnerabilities (Yet?): A Comprehensive Evaluation, Framework, and Benchmarks [22]. | Comprehensive evaluation of LLMs’ capabilities in identifying and reasoning about security vulnerabilities, revealing reasoning gaps. |
| ChatGPT for cybersecurity: practical applications, challenges, and future directions [23]. | Exploring ChatGPT’s applications in cybersecurity, identifying limitations (error propagation, lack of tuning) and proposing improvements. |

| Type | Pattern | Antipattern |
|---|---|---|
| Description | Reusable solutions to common design problems in software | Poor design choices that lead to maintainability and security issues |
| Examples | Observer, Singleton | Spaghetti Code, God Class, Lava Flow |
| Advantages | Improved modularity; clear structure; reusability | None; these designs typically create more problems than they solve |
| Disadvantages | Can introduce security vulnerabilities if misused; tightly coupled code; global state (Singleton) | Poor maintainability; hard to refactor; high technical debt |
| Security Impact | Observer: uncontrolled access to data, event flooding, race conditions. Singleton: global state compromise, thread safety issues | Increases risk of vulnerabilities due to code complexity; difficult to apply security patches; unintentionally obfuscates security flaws |
| Common Misuse | Misuse of Observer can lead to data leakage (e.g., sensitive data shared with unintended observers); misuse of Singleton can create single points of failure or global state manipulation | Spaghetti Code often results from rushed development or lack of proper design practices, leading to logic errors, buffer overflows, and other vulnerabilities |
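The Singleton risks listed in the table (thread safety issues and global state manipulation) can be made concrete with a short sketch. The `AppConfig` class and its `set` and `settings` members are hypothetical names; the example assumes a lock-guarded constructor and a read-only view are acceptable mitigations, which is one common approach rather than the only one.

```python
import threading
from types import MappingProxyType


class AppConfig:
    """Singleton whose creation is guarded by a lock and whose state is read-only from outside."""

    _instance = None
    _lock = threading.Lock()

    def __new__(cls):
        if cls._instance is None:
            with cls._lock:
                # Re-check inside the lock so only one thread creates the instance.
                if cls._instance is None:
                    instance = super().__new__(cls)
                    instance._settings = {}
                    # Publish the instance only after it is fully initialized.
                    cls._instance = instance
        return cls._instance

    def set(self, key: str, value: str) -> None:
        """Single, auditable entry point for changing shared state."""
        with self._lock:
            self._settings[key] = value

    @property
    def settings(self) -> MappingProxyType:
        """Read-only view: callers cannot mutate global state through it."""
        return MappingProxyType(self._settings)


config = AppConfig()
config.set("mode", "prod")
print(AppConfig() is config, AppConfig().settings["mode"])
```

Routing every write through `set` does not remove the global state, but it narrows where that state can change, which makes the class easier to audit.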

| Behavioral Pattern | Security Risks | LLM Evaluation |
|---|---|---|
| Chain of Responsibility | Unvalidated data flowing through handlers, potentially reaching insecure handlers. | Check if handlers validate data properly and enforce security policies at each stage. |
| Command | Malicious commands can be injected or executed without proper authorization. | Ensure commands are authenticated, validated, and logged before execution. |
| Interpreter | Code injection or interpretation vulnerabilities due to improper input validation. | Detect injection vulnerabilities and ensure secure input validation. |
| Iterator | Exposing sensitive data or internal states while iterating collections. | Ensure iterators do not expose sensitive data or internal structures. |
| Mediator | Single point of failure or sensitive interactions exposed through unsecured communication. | Evaluate if communication between objects is properly secured, preventing unauthorized access. |
| Memento | Mementos capturing sensitive state data insecurely or exposing them unintentionally. | Verify mementos store sensitive state securely and prevent unauthorized access. |
| Observer | Sensitive data leaks through unauthorized updates or insecure communication. | Ensure only authorized observers receive updates and that communication is authenticated. |
| State | Unauthorized access to object states or changes in behavior due to improper state transitions. | Check state transitions for proper access control and ensure unauthorized users cannot modify states. |
| Strategy | Insecure algorithm selection, especially in cases like weak encryption strategies. | Evaluate if strategy selection is secure, flagging weak or deprecated algorithms. |
| Template Method | Overriding or bypassing critical security steps in subclasses, leading to vulnerabilities. | Ensure that critical security steps are consistently enforced in the template method. |
| Visitor | Unauthorized visitors accessing or modifying sensitive elements in object structures. | Check if visitors are restricted and that access control prevents unauthorized operations. |
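To show what the Template Method row is guarding against, the following sketch enforces an authorization step inside the template method and rejects subclasses that try to replace it. The `SecureHandler` and `UppercaseHandler` classes and the role check are hypothetical, and the `__init_subclass__` guard is one possible enforcement technique assumed here for illustration.

```python
class SecureHandler:
    """Template method: authorization always runs before processing."""

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        # Reject subclasses that redefine the template method itself.
        if "handle" in cls.__dict__:
            raise TypeError(f"{cls.__name__} must not override handle()")

    def handle(self, user: dict, payload: str) -> str:
        # Critical security step, fixed in the base class.
        if not self._authorize(user):
            raise PermissionError("user is not allowed to run this handler")
        return self._process(payload)

    def _authorize(self, user: dict) -> bool:
        # Hypothetical policy: only the "admin" role may proceed.
        return user.get("role") == "admin"

    def _process(self, payload: str) -> str:
        raise NotImplementedError


class UppercaseHandler(SecureHandler):
    def _process(self, payload: str) -> str:
        return payload.upper()


print(UppercaseHandler().handle({"role": "admin"}, "ok"))
```

A subclass can still weaken the policy by overriding `_authorize`, so a reviewer (human or LLM) would still need to check such overrides; the guard only prevents the whole sequence from being bypassed.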

| Feature | Traditional Tools | Proposed LLM Framework |
|---|---|---|
| Contextual Detection | Limited to analysis per file | Full system analysis |
| False Positives | High number | Reduced by context |
| Analysis Time | Fast but superficial | Deeper but optimized |
| Scalability | Limited in large projects | Adaptable to any scale |
| Antipattern Detection | Based on fixed rules | Contextual learning |
| CI/CD Integration | Can slow down the pipeline | Seamless integration |
| Updates | Requires manual update | Continuous self-learning |
| Suggestions for Improvement | Generic | Contextualized to the project |
| Security Coverage | Focused on known vulnerabilities | Proactive detection |
| Maintenance | Requires regular configuration | Self-adaptive |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Andrade, R.; Torres, J.; Ortiz-Garcés, I. Enhancing Security in Software Design Patterns and Antipatterns: A Framework for LLM-Based Detection. Electronics 2025, 14, 586. https://doi.org/10.3390/electronics14030586
