The Effect of Emotional Intelligence on the Accuracy of Facial Expression Recognition in the Valence–Arousal Space

Abstract

1. Introduction

2. Related Works

2.1. Categorical Emotion Recognition Models

2.2. Dimensional Emotion Recognition Models

2.3. Multimodal Emotion Recognition Approaches

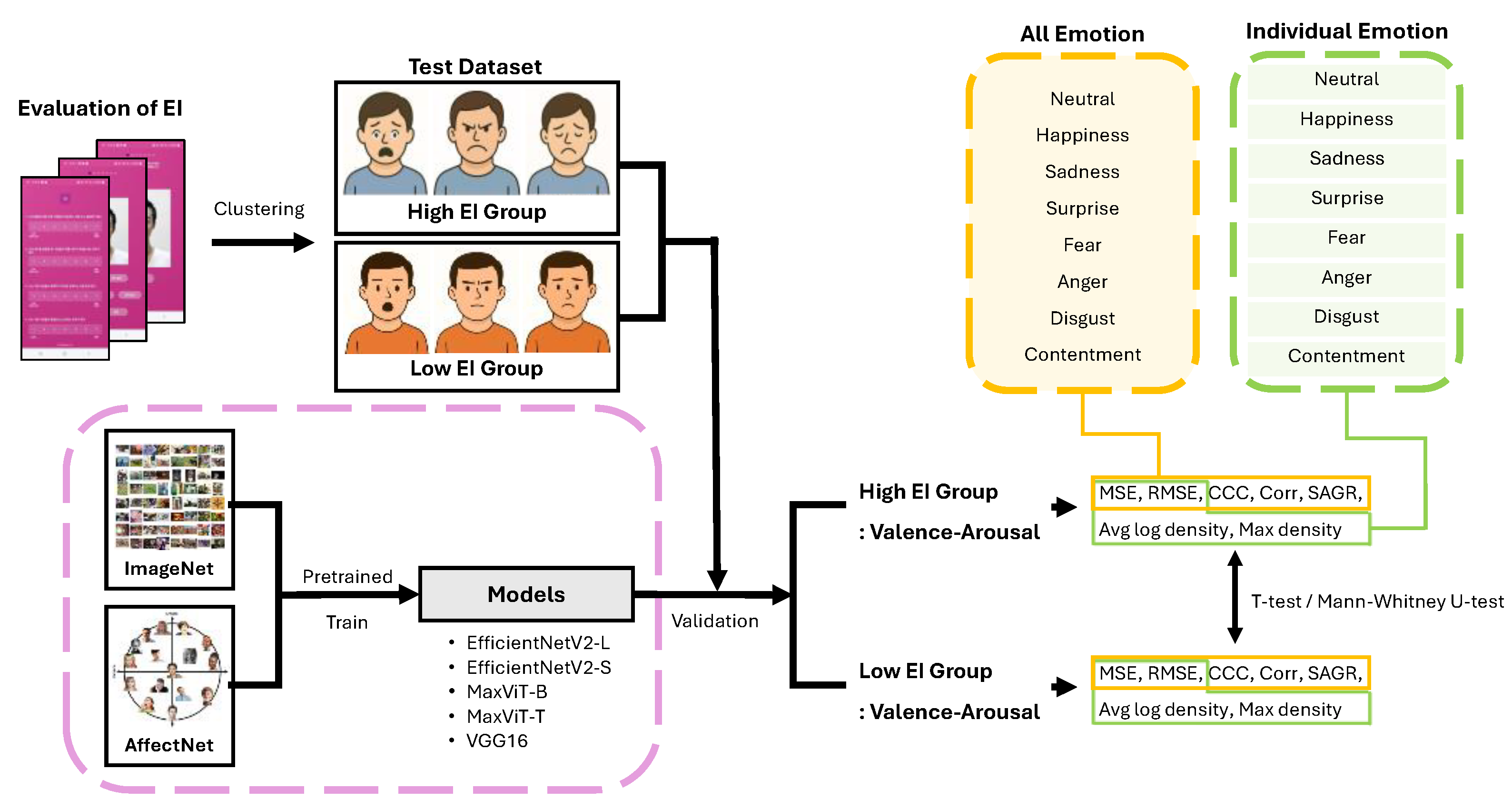

3. Materials and Methods

3.1. Dataset

3.2. Model Architecture

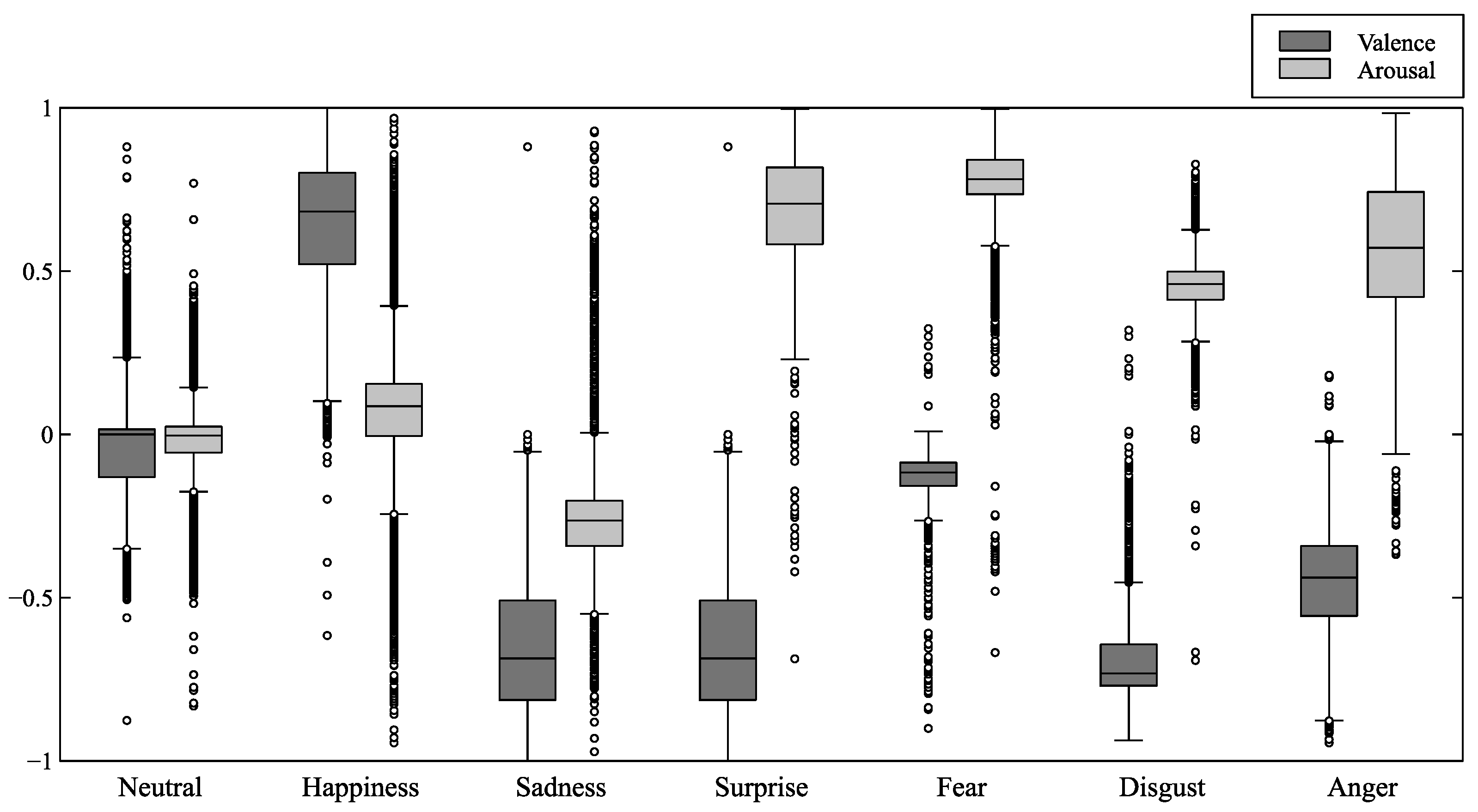

3.3. Reference Framework

3.4. Participants and EI Grouping

3.5. Experimental Task and Procedure

- I am often surprised by the reactions of others to my behavior.

- When I express my thoughts, people often respond with anger or irritation.

- I sometimes realize that people are unpredictable.

- I am often surprised by the behavior of others.

- I inadvertently hurt someone’s feelings.

- I sometimes find it hard to understand the choices others make.

3.6. Evaluation Metrics

4. Analysis

4.1. Deep Learning-Based Emotion Recognition

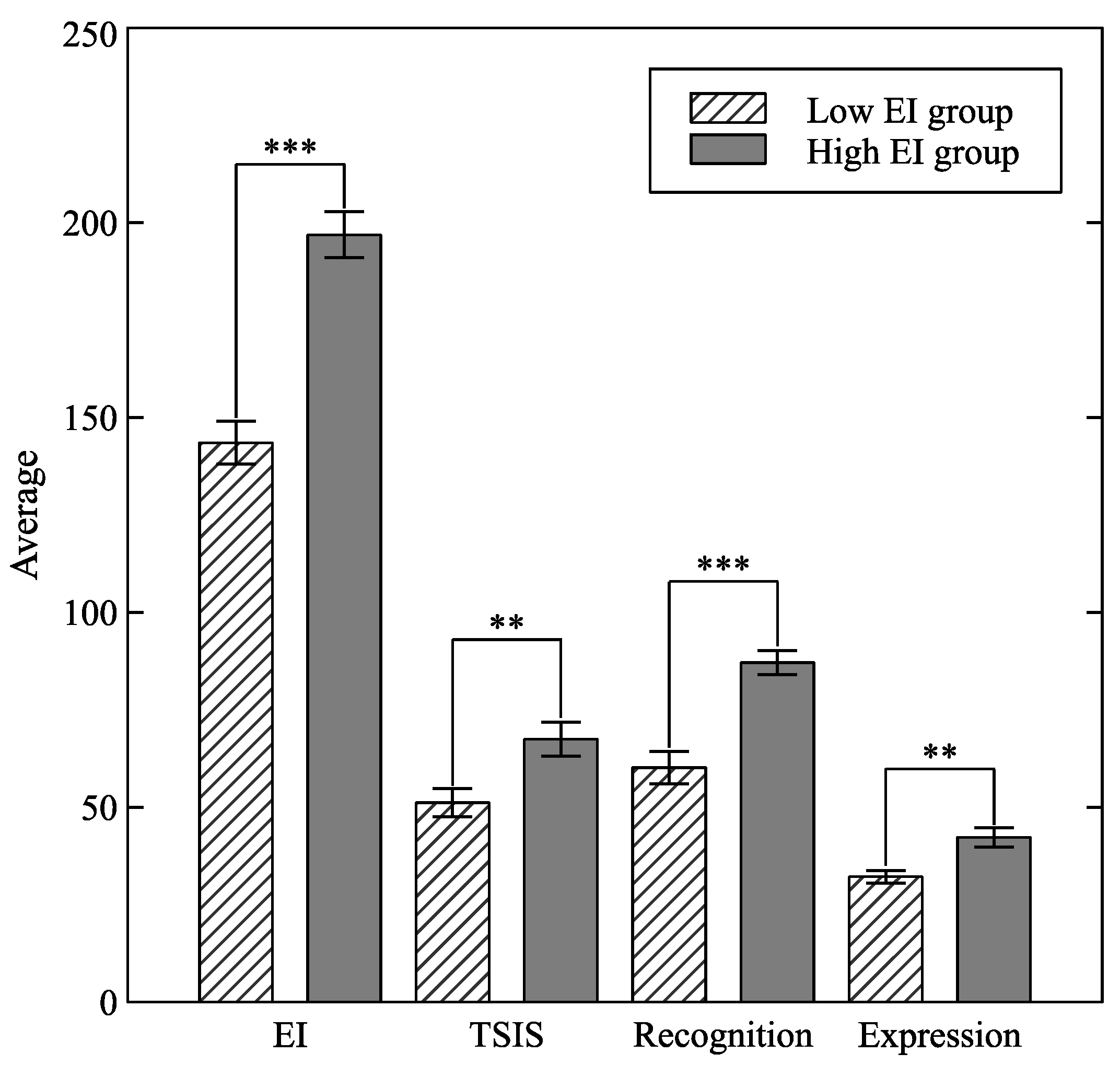

4.2. EI Grouping and Statistical Analysis

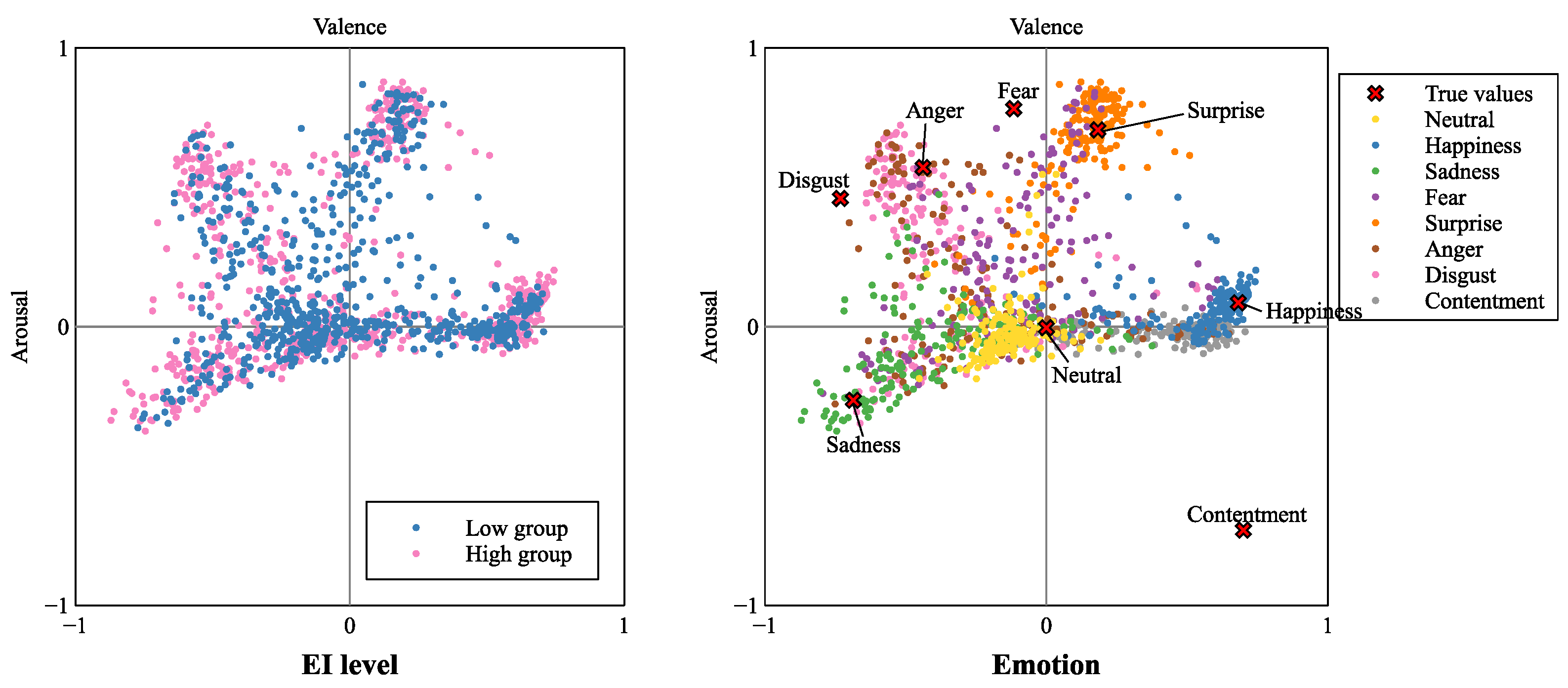

4.3. EI-Based Comparison of Emotion Prediction

5. Results

5.1. Deep Learning Recognition Results

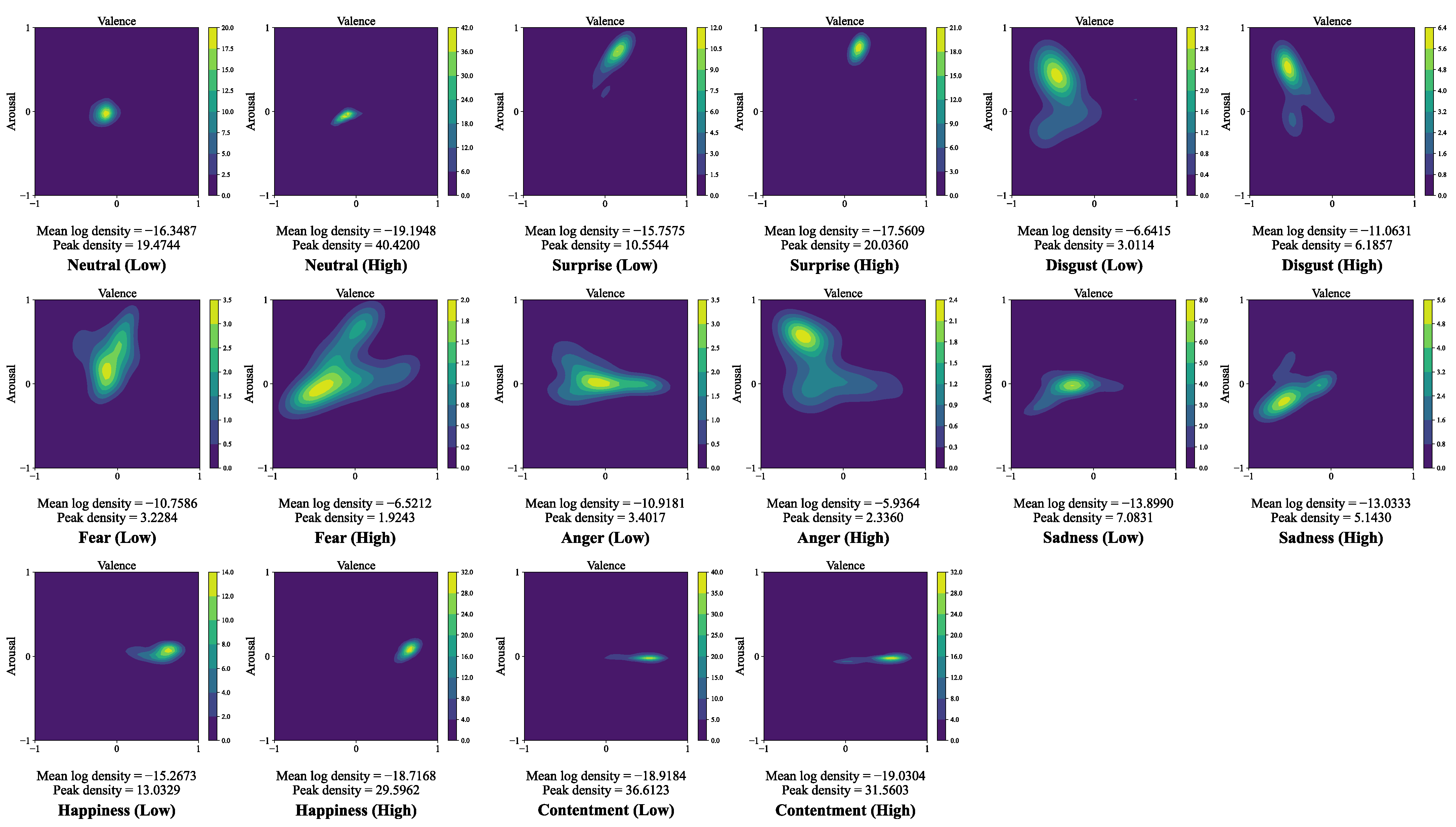

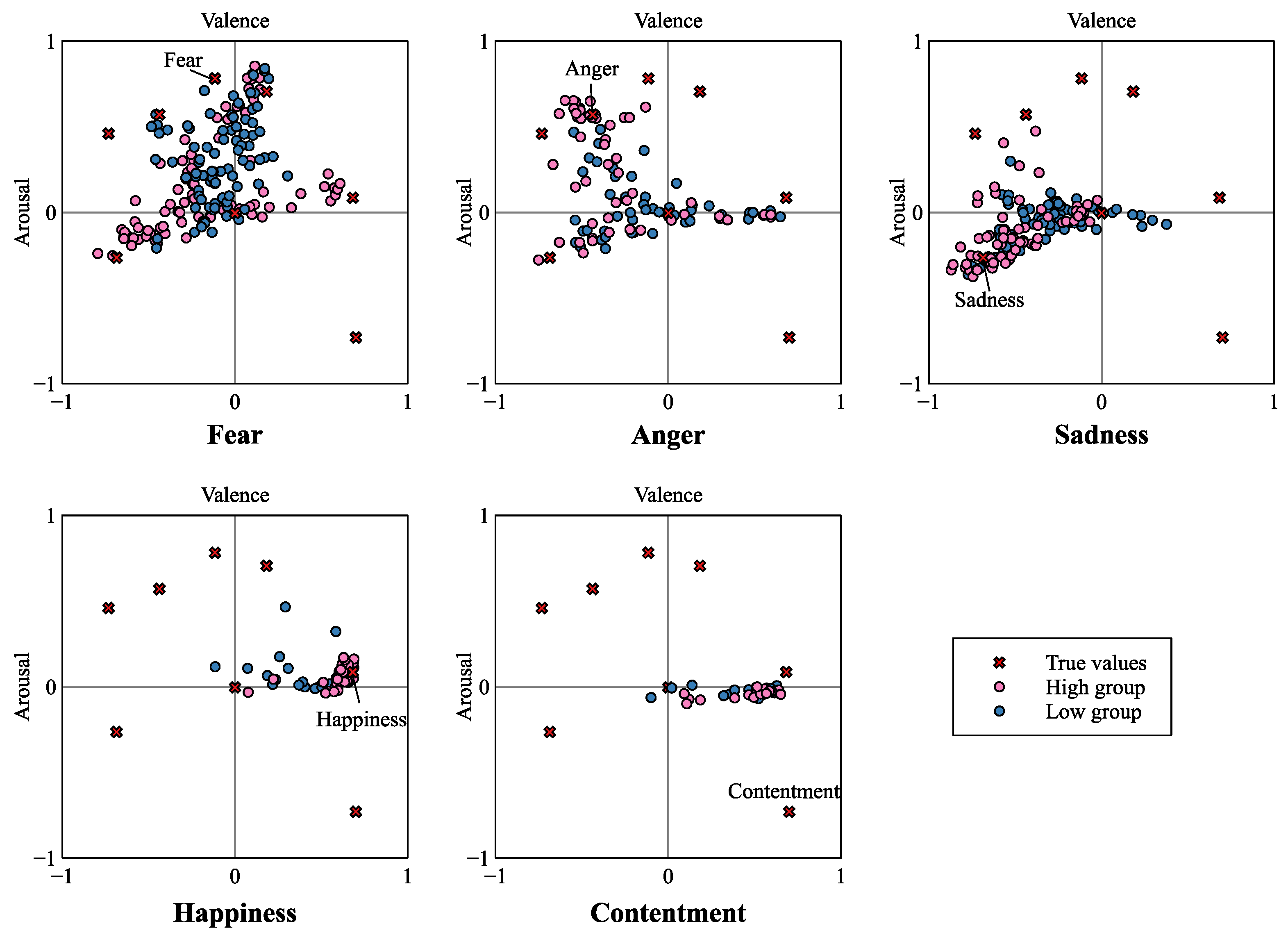

5.2. Group Classification Based on Emotional Intelligence Scores and Determination of Emotion-Specific True Values

5.3. Performance Comparison of Facial Recognition Models Based on Emotional Intelligence Levels

5.3.1. Analysis Results for All Emotions

5.3.2. Analysis Results for Individual Emotions

6. Discussion

6.1. Overall Emotion Recognition

6.2. Neutral, Surprise, Disgust

6.3. Fear, Anger, Sadness

6.4. Happiness, Contentment

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | AffectNet (Valence) | AffectNet (Arousal) | CAGE (Total 1) | This Work (Total 1) | This Work (Valence) | This Work (Arousal) |
|---|---|---|---|---|---|---|
| MAE | - | - | 0.239 | 0.235 | - | - |
| MSE | - | - | 0.103 | 0.102 | 0.097 | 0.171 |
| RMSE | 0.37 | 0.41 | 0.321 | 0.320 | 0.225 | 0.299 |
| CCC | 0.60 | 0.34 | 0.782 | 0.784 | 0.780 | 0.495 |
| Corr | 0.66 | 0.54 | - | - | 0.807 | 0.565 |
| SAGR | 0.74 | 0.65 | - | - | 0.759 | 0.781 |
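The metrics listed in the table above (MSE, RMSE, CCC, Corr, SAGR) can be illustrated with a minimal pure-Python sketch. It uses the common textbook definitions; the function names are hypothetical, and the choices of population variance for CCC and a 0 to 1 fraction for SAGR are assumptions, not a confirmed description of the authors' implementation.

```python
import math

def _mean(xs):
    return sum(xs) / len(xs)

def _cov(xs, ys):
    # Population covariance (divides by N); an assumption of this sketch.
    mx, my = _mean(xs), _mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)

def mse(y_true, y_pred):
    # Mean squared error between labels and predictions.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    # Root mean squared error.
    return math.sqrt(mse(y_true, y_pred))

def pearson_corr(y_true, y_pred):
    # Pearson correlation coefficient (Corr).
    return _cov(y_true, y_pred) / math.sqrt(
        _cov(y_true, y_true) * _cov(y_pred, y_pred)
    )

def ccc(y_true, y_pred):
    # Concordance correlation coefficient: penalizes both low
    # correlation and a shift in mean or scale.
    mean_gap = (_mean(y_true) - _mean(y_pred)) ** 2
    return 2 * _cov(y_true, y_pred) / (
        _cov(y_true, y_true) + _cov(y_pred, y_pred) + mean_gap
    )

def _sign(x):
    return (x > 0) - (x < 0)

def sagr(y_true, y_pred):
    # Sign agreement: share of samples whose predicted sign matches the label.
    hits = sum(_sign(t) == _sign(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)

# Toy valence values in [-1, 1]; illustrative only, not data from the study.
labels = [0.5, -0.2, 0.1, -0.7]
preds = [0.4, 0.1, 0.2, -0.6]
print(mse(labels, preds), rmse(labels, preds))
print(pearson_corr(labels, preds), ccc(labels, preds), sagr(labels, preds))
```

Because CCC adds the squared mean gap to the denominator, it never exceeds the Pearson correlation in magnitude, which fits the pattern of CCC values sitting below Corr values in the table.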

| Dimension | Model 1 | Group | MSE Mean | MSE SD | MSE U | MSE z | MSE p 2 | MSE r | RMSE Mean | RMSE SD | RMSE U | RMSE z | RMSE p 2 | RMSE r | CCC | Corr | SAGR (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Valence + Arousal | L | Low | 0.133 | 0.148 | 10,638 | 2.143 | 0.032 | 0.18 | 0.268 | 0.188 | 10,704 | 2.245 | 0.025 | 0.19 | 0.662 | 0.720 | 75.7 |
| Valence + Arousal | L | High | 0.115 | 0.156 | | | | | 0.229 | 0.207 | | | | | 0.737 | 0.760 | 80.1 |
| Valence + Arousal | S | Low | 0.144 | 0.158 | 10,518 | 1.958 | 0.050 | 0.17 | 0.281 | 0.192 | 10,522 | 1.964 | 0.050 | 0.17 | 0.634 | 0.689 | 74.6 |
| Valence + Arousal | S | High | 0.127 | 0.170 | | | | | 0.246 | 0.214 | | | | | 0.720 | 0.737 | 80.9 |
| Valence + Arousal | B | Low | 0.144 | 0.149 | 10,763 | 2.336 | 0.020 | 0.20 | 0.290 | 0.187 | 10,775 | 2.354 | 0.019 | 0.20 | 0.615 | 0.693 | 73.5 |
| Valence + Arousal | B | High | 0.121 | 0.155 | | | | | 0.243 | 0.208 | | | | | 0.709 | 0.746 | 80.5 |
| Valence + Arousal | T | Low | 0.142 | 0.158 | 10,316 | 1.646 | 0.100 | 0.14 | 0.283 | 0.195 | 10,327 | 1.663 | 0.096 | 0.14 | 0.631 | 0.695 | 74.6 |
| Valence + Arousal | T | High | 0.124 | 0.155 | | | | | 0.248 | 0.206 | | | | | 0.712 | 0.740 | 80.9 |
| Valence + Arousal | V | Low | 0.148 | 0.158 | 10,643 | 2.151 | 0.032 | 0.18 | 0.288 | 0.197 | 10,563 | 0.210 | 0.043 | 0.18 | 0.616 | 0.680 | 70.6 |
| Valence + Arousal | V | High | 0.126 | 0.162 | | | | | 0.247 | 0.210 | | | | | 0.702 | 0.737 | 78.3 |
| Valence | L | Low | 0.102 | 0.198 | 10,751 | 2.317 | 0.021 | 0.20 | 0.232 | 0.221 | 10,751 | 2.317 | 0.021 | 0.20 | 0.759 | 0.804 | 74.3 |
| Valence | L | High | 0.068 | 0.145 | | | | | 0.179 | 0.189 | | | | | 0.862 | 0.869 | 80.1 |
| Valence | S | Low | 0.109 | 0.214 | 10,296 | 1.616 | 0.106 | 0.14 | 0.241 | 0.226 | 10,296 | 1.616 | 0.106 | 0.14 | 0.746 | 0.781 | 73.5 |
| Valence | S | High | 0.076 | 0.142 | | | | | 0.199 | 0.191 | | | | | 0.849 | 0.850 | 80.1 |
| Valence | B | Low | 0.121 | 0.214 | 10,818 | 2.420 | 0.016 | 0.21 | 0.263 | 0.229 | 10,818 | 2.420 | 0.016 | 0.21 | 0.703 | 0.755 | 71.3 |
| Valence | B | High | 0.083 | 0.153 | | | | | 0.206 | 0.202 | | | | | 0.821 | 0.836 | 78.7 |
| Valence | T | Low | 0.120 | 0.224 | 10,528 | 1.973 | 0.048 | 0.17 | 0.256 | 0.234 | 10,528 | 1.973 | 0.048 | 0.17 | 0.708 | 0.756 | 73.5 |
| Valence | T | High | 0.085 | 0.168 | | | | | 0.208 | 0.206 | | | | | 0.817 | 0.831 | 77.9 |
| Valence | V | Low | 0.121 | 0.216 | 10,629 | 2.129 | 0.033 | 0.18 | 0.260 | 0.232 | 10,629 | 2.129 | 0.033 | 0.18 | 0.710 | 0.750 | 70.6 |
| Valence | V | High | 0.082 | 0.156 | | | | | 0.208 | 0.198 | | | | | 0.826 | 0.836 | 78.7 |
| Arousal | L | Low | 0.163 | 0.207 | 10,214 | 1.489 | 0.136 | 0.13 | 0.304 | 0.267 | 10,214 | 1.489 | 0.136 | 0.13 | 0.503 | 0.577 | 77.2 |
| Arousal | L | High | 0.163 | 0.234 | | | | | 0.278 | 0.294 | | | | | 0.535 | 0.577 | 80.1 |
| Arousal | S | Low | 0.179 | 0.219 | 10,127 | 1.355 | 0.175 | 0.12 | 0.320 | 0.320 | 10,127 | 1.355 | 0.175 | 0.12 | 0.459 | 0.534 | 75.7 |
| Arousal | S | High | 0.177 | 0.261 | | | | | 0.294 | 0.294 | | | | | 0.515 | 0.551 | 81.6 |
| Arousal | B | Low | 0.179 | 0.219 | 9991 | 1.145 | 0.252 | 0.10 | 0.316 | 0.259 | 9991 | 1.145 | 0.252 | 0.10 | 0.457 | 0.570 | 75.7 |
| Arousal | B | High | 0.177 | 0.261 | | | | | 0.279 | 0.287 | | | | | 0.518 | 0.586 | 82.4 |
| Arousal | T | Low | 0.164 | 0.203 | 9839 | 0.911 | 0.362 | 0.08 | 0.309 | 0.263 | 9839 | 0.911 | 0.362 | 0.08 | 0.485 | 0.568 | 75.7 |
| Arousal | T | High | 0.162 | 0.223 | | | | | 0.287 | 0.283 | | | | | 0.532 | 0.579 | 83.8 |
| Arousal | V | Low | 0.174 | 0.212 | 9947 | 1.078 | 0.281 | 0.09 | 0.316 | 0.274 | 9947 | 1.078 | 0.281 | 0.09 | 0.447 | 0.540 | 70.6 |
| Arousal | V | High | 0.169 | 0.243 | | | | | 0.285 | 0.298 | | | | | 0.498 | 0.566 | 77.9 |
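The U, z, and r columns in the table above come from Mann–Whitney comparisons of the Low and High groups. As a rough sketch of how such values relate, the snippet below applies the standard normal approximation without tie or continuity correction. The group size of 136 per group is a hypothetical value, not stated in this section; it was picked only because it reproduces the first reported z. The function names and the choice of N in r = |z| / sqrt(N) are likewise assumptions.

```python
import math

def mann_whitney_z(u, n1, n2):
    # Normal approximation of the Mann-Whitney U statistic.
    # No tie or continuity correction is applied (assumption of this sketch).
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u

def effect_size_r(z, n):
    # Common effect size for rank tests: r = |z| / sqrt(N).
    # Which N the authors used is not stated in this section.
    return abs(z) / math.sqrt(n)

# U = 10,638 is the MSE entry for model L (Valence + Arousal).
# n1 = n2 = 136 is a HYPOTHETICAL group size, not taken from the source.
z = mann_whitney_z(10638, 136, 136)
print(round(z, 3))
```

Under that assumed group size the approximation gives z of about 2.14, in line with the 2.143 reported for that row.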

| Title | Emotion | Group | Mean | SD | t | df | p | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Entire model | Neutral | Low | 0.191 | 0.115 | 2.900 | 168 | 0.004 | 0.45 |
| Entire model | Neutral | High | 0.148 | 0.072 | | | | |
| Entire model | Surprise | Low | 0.208 | 0.200 | 3.423 | 168 | 0.001 | 0.53 |
| Entire model | Surprise | High | 0.124 | 0.103 | | | | |
| Entire model | Disgust | Low | 0.498 | 0.281 | 2.589 | 168 | 0.011 | 0.40 |
| Entire model | Disgust | High | 0.397 | 0.225 | | | | |
| Entire model | Fear | Low | 0.524 | 0.208 | −4.850 | 168 | 0.000 | 0.75 |
| Entire model | Fear | High | 0.714 | 0.288 | | | | |
| Entire model | Anger | Low | 0.671 | 0.260 | 4.603 | 168 | 0.000 | 0.71 |
| Entire model | Anger | High | 0.457 | 0.337 | | | | |
| Entire model | Sadness | Low | 0.463 | 0.235 | 4.153 | 168 | 0.000 | 0.64 |
| Entire model | Sadness | High | 0.317 | 0.218 | | | | |
| Entire model | Happiness | Low | 0.215 | 0.202 | 4.303 | 168 | 0.000 | 0.66 |
| Entire model | Happiness | High | 0.104 | 0.120 | | | | |
| Entire model | Contentment | Low | 0.783 | 0.092 | 0.472 | 168 | 0.638 | 0.07 |
| Entire model | Contentment | High | 0.776 | 0.102 | | | | |
| Valid model | Fear | Low | 0.524 | 0.208 | −4.850 | 168 | 0.000 | 0.75 |
| Valid model | Fear | High | 0.712 | 0.288 | | | | |
| Valid model | Anger | Low | 0.685 | 0.264 | 3.718 | 100 | 0.000 | 0.74 |
| Valid model | Anger | High | 0.455 | 0.350 | | | | |
| Valid model | Sadness | Low | 0.460 | 0.235 | 4.002 | 134 | 0.000 | 0.69 |
| Valid model | Sadness | High | 0.304 | 0.213 | | | | |
| Valid model | Happiness | Low | 0.220 | 0.195 | 2.885 | 66 | 0.005 | 0.71 |
| Valid model | Happiness | High | 0.106 | 0.119 | | | | |
| Valid model | Contentment | Low | 0.779 | 0.102 | 0.867 | 32 | 0.392 | 0.31 |
| Valid model | Contentment | High | 0.754 | 0.070 | | | | |
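The Cohen’s d column in the table above can be approximated from the reported group means and standard deviations. The sketch below assumes equal group sizes, so the pooled standard deviation reduces to the root mean of the two variances. That assumption and the function name are illustrative and not confirmed by the source.

```python
import math

def cohens_d_equal_n(mean_low, sd_low, mean_high, sd_high):
    # Cohen's d with a pooled SD, ASSUMING both groups have the same size.
    pooled_sd = math.sqrt((sd_low ** 2 + sd_high ** 2) / 2)
    return (mean_low - mean_high) / pooled_sd

# Summary statistics copied from the "Entire model" rows of the table.
d_neutral = cohens_d_equal_n(0.191, 0.115, 0.148, 0.072)
d_surprise = cohens_d_equal_n(0.208, 0.200, 0.124, 0.103)
print(round(d_neutral, 2), round(d_surprise, 2))
```

With these inputs the sketch yields roughly 0.45 for Neutral and 0.53 for Surprise, matching the tabulated effect sizes to two decimals.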

| Model 1 | Group 2 | Valence + Arousal | Valence | Arousal | |||

|---|---|---|---|---|---|---|---|

| MSE | RMSE | MSE | RMSE | MSE | RMSE | ||

| L | Low | Fear * t(32) = 2.289, p = 0.029, d = 0.78 | Fear * t(32) = 2.334, p = 0.026, d = 0.80 | ||||

| High | Anger * U = 204, z = 2.049, p = 0.041, r = 0.50 | Anger * U = 215, z = 2.428, p = 0.014, r = 0.59 | Sadness * t(32) = −2.107, p = 0.043, d = 0.73 | Anger * U = 202, z = 1.981, p = 0.049, r = 0.48 | Anger * U = 202, z = 1.981, p = 0.049, r = 0.48 | ||

| S | Low | Fear * t(24.202) = 2.759, p = 0.011, d = 0.95 | Fear ** t(24.202) = 2.848, p = 0.009, d = 0.97 | Fear ** U = 66, z = −2.704, p = 0.006, r = 0.66 | Fear ** t(24.011) = 3.098, p = 0.005, d = 1.06 | Fear * t(32) = 2.097, p = 0.044, d = 0.72 | |

| High | Sadness * U = 121, z = 2.325, p = 0.020, r = 0.56 | Sadness * t(32) = −2.464, p = 0.019, d = 0.85 | Sadness * U = 205, z = 2.084, p = 0.038, r = 0.51 | Sadness * U = 205, z = 2.084, p = 0.038, r = 0.51 | Sadness * U = 206, z = 2.118, p = 0.034, r = 0.51 | Sadness * U = 206, z = 2.118, p = 0.034, r = 0.51 | |

| Anger * U = 204, z = 2.049, p = 0.041, r = 0.50 | Contentment * t(32) = −2.207, p = 0.035, d = 0.75 | Contentment * t(32) = −2.195, p = 0.036, d = 0.78 | |||||

| B | Low | Fear * t(24.502) = 2.447, p = 0.022, d = 0.84 | Fear * t(21.880) = 2.366, p = 0.027, d = 0.81 | Fear * U = 86, z = −2.015, p = 0.045, r = 0.49 | Fear * U = 86, z = −2.015, p = 0.045, r = 0.49 | ||

| High | Sadness * U = 206, z = 2.118, p = 0.034, r = 0.51 | Sadness * t(32) = −2.499, p = 0.018, d = 0.85 | Sadness * U = 211, z = 2.290, p = 0.022, r = 0.56 | Sadness * U = 211, z = 2.290, p = 0.022, r = 0.56 | |||

| Happiness * U = 213, z = 2.359, p = 0.018, r = 0.57 | Happiness * U = 213, z = 2.359, p = 0.018, r = 0.57 | ||||||

| T | Low | Fear * t(32) = 2.188, p = 0.036, d = 0.75 | Fear * t(24.776) = 2.160, p = 0.041, d = 0.74 | Fear * U = 77, z = −2.325, p = 0.020, r = 0.564 | Fear * U = 77, z = −2.325, p = 0.020, r = 0.564 | ||

| High | |||||||

| V | Low | Fear * U = 82, z = −2.153, p = 0.031, r = 0.52 | Fear ** t(32) = 2.767, p = 0.009, d = 0.95 | Fear * U = 83, z = −2.118, p = 0.034, r = 0.51 | Fear * t(32) = 2.412, p = 0.022, d = 0.83 | ||

| High | Happiness * U = 202, z = 1.981, p = 0.049, r = 0.48 | Sadness * U = 204, z = 2.049, p = 0.041, r = 0.50 | Happiness * U = 218, z = 2.532, p = 0.011, r = 0.61 | Happiness * U = 218, z = 2.532, p = 0.011, r = 0.61 | Sadness * U = 217, z = 2.497, p = 0.012, r = 0.43 | Sadness * t(32) = −2.678, p = 0.012, d = 0.92 | |

| Anger * t(32) = −2.161, p = 0.038, d = 0.74 | |||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, Y.; Cho, A.; Lee, H.; Whang, M. The Effect of Emotional Intelligence on the Accuracy of Facial Expression Recognition in the Valence–Arousal Space. Electronics 2025, 14, 1525. https://doi.org/10.3390/electronics14081525
