Abstract

For the commercialization of connected vehicles and smart cities, extensive research is being carried out on autonomous driving, Vehicle-to-Everything (V2X) communication, and platooning. However, limitations remain, such as restriction to highway environments, and these topics are often studied separately because reliability and real-time performance are difficult to ensure under external influences. This paper proposes a cooperative autonomous driving system based on a V2X network and implemented in the CARLA simulator, which simulates an urban environment, to optimize vehicle-embedded systems and ensure safety and real-time performance. First, the proposed Throttle–Steer–Brake (TSB) driving technique reduces the computational overhead for following vehicles by utilizing the control commands of a leading vehicle. Second, a V2X network is designed to support object perception, cluster escape, and joining. Third, an urban perception system is developed and validated for safety. Finally, pseudonymized vehicle identifiers, Advanced Encryption Standard (AES), and the Edwards-curve Digital Signature Algorithm (EdDSA) are employed for data reliability and security. The system is validated in terms of processing time and accuracy, confirming its feasibility for real-world application. TSB driving demonstrates a computation speed approximately 466 times faster than conventional waypoints-based driving. Accurate urban perception and V2X communication enable safe cluster escape and joining, establishing a foundation for cooperative autonomous driving with improved safety and real-time capabilities.

1. Introduction

Studies related to cooperative autonomous driving, such as those on autonomous driving control, urban environmental perception, and V2X networks, are often conducted separately. In existing research on platooning and connected vehicles, ultra-low latency and high reliability of V2X networks are essential requirements for real-time vehicle communication [1]. In addition, most research focuses primarily on highway environments. According to a 2020 survey by the International Road Federation, the motorway or highway network measures 105,948 km, whereas the main or national road network extends to 251,957 km [2]. National roads are therefore approximately 2.4 times as long as highways. Consequently, it is necessary to develop a cooperative autonomous driving system tailored to urban environments [3].

This paper integrates cooperative autonomous driving technologies and evaluates them in a CARLA simulator-based urban environment that resembles real-world conditions. The experiment prioritizes real-time performance and safety. If both real-time capability and safety are ensured, the system is expected to enable deployment and commercialization in connected vehicles and smart cities in the near future.

The vehicle is controlled using a Proportional–Integral–Derivative (PID) controller and the Pure Pursuit algorithm [4]. The CARLA server is configured, and cluster information is generated and managed by transmitting and receiving data such as the vehicle ID and x and y coordinates. Based on the established V2X network, the throttle, steer, and brake values from the leading vehicle in cooperative autonomous driving are forwarded to the following vehicle to realize TSB driving. For urban environment perception, traffic light recognition based on Artificial Intelligence (AI) is compared with recognition based on Hue Saturation Value (HSV), and HSV-based perception is selected due to its superior performance [5]. Pedestrian recognition and stop procedures are triggered based on pedestrian location data exchanged with the edge server. If the distance between the vehicle and the pedestrian is less than a predefined threshold, an emergency stop is initiated. The cluster escape and joining algorithms, the most important algorithms in cooperative autonomous driving, operate based on the vehicle’s order on the server and its distance from the leading vehicle. Additionally, to ensure secure communication, a security framework is constructed using pseudonymized vehicle identifiers, AES encryption, and EdDSA digital signatures to protect privacy and maintain data integrity [6].

TSB driving computes control commands approximately 466 times faster than waypoints-based driving, indicating its suitability for real-time vehicle communication and control. HSV-based traffic light perception achieves an accuracy of 99.58% and operates nearly 100 times faster than AI-based perception. Elements of the urban environment, including pedestrians, are successfully recognized, and vehicles perform safe stops within lanes. In this study, vehicles navigate urban environments at safe speeds and successfully perform cluster escape and joining. In conclusion, the integrated safety of cooperative autonomous driving in urban environments is validated, and the security aspect of the V2X network is carefully considered. The proposed system is expected to contribute significantly to foundational research on V2X networks and autonomous driving.

2. Related Works and Contribution

2.1. Platooning

Most preceding studies on platooning and cooperative autonomous driving focused on trucks or heavy-duty vehicles [7].

Platooning is typically based on a distributed architecture, where control operates within each vehicle. However, a method proposed in the existing research adopts a centralized control approach, in which a Multi-access Edge Computing (MEC) server collects vehicle data and manages the cluster [8]. In the experimental setup, the number of vehicles on the highway and in the cluster is set to 20, with an inter-vehicle distance of 10 m. This architecture offers greater scalability and safer management than a simple Vehicle-to-Vehicle (V2V) network. However, in urban environments, vehicle communication is often restricted, and the low-latency benefits of V2V communication are not fully exploited. In this study, we construct a server that manages information for all vehicles, integrating the strengths of both V2V and Vehicle-to-Infrastructure (V2I) communication. This enables safe cooperative autonomous driving in urban environments.

One of the key functions of platooning is perception, including vehicle detection. In the ARGO Project, a 3D vision system is constructed using two cameras to detect obstacles and vehicles. In addition, the system aims to track the preceding vehicle based on its position and speed. A target point is defined near the preceding vehicle, and in this study, the waypoints-based autonomous driving method for the latter vehicle uses the position of the preceding vehicle as a reference [9]. Since additional control strategies are required to enhance the performance of the cooperative autonomous driving system, this study introduces a safe control method based on cluster escape and joining algorithms, as well as TSB driving.

Vehicular Ad hoc Networks (VANETs) are utilized to distribute the fundamental tasks of autonomous driving among agents. VANETs can be implemented using either traditional Internet Protocol (IP)-based networking or Information-Centric Networking (ICN). Constructing efficient VANETs involving thousands of vehicles in urban environments remains a major challenge [10]. In this study, a cluster of three vehicles is implemented in an urban setting, allowing vehicles to dynamically join and escape the cluster. The system’s performance is evaluated in terms of time efficiency and computational overhead.

2.2. Autonomous Driving Control

Geometric and dynamic models are commonly employed in the design of autonomous vehicle control systems. The dynamic bicycle model provides a more accurate representation of vehicle motion, incorporating tire–road interactions. The Model Predictive Controller (MPC) is utilized to perform trajectory tracking by computing optimal control signals based on the vehicle’s current state [11]. In this study, a predictive control model is designed based on the dynamic bicycle model and the MPC structure.

Autonomous driving can be modeled as a two-layer control architecture [12]. The first layer determines the target point of the vehicle using fuzzy logic, while the second layer employs conventional control methods to actuate the vehicle toward the target point defined by the first layer. This approach demonstrates strong performance in real-world autonomous vehicle control. In this study, a principle similar to that of waypoints-based driving is adopted, where the target position of the vehicle is defined accordingly. TSB driving controls the vehicle using the throttle, steer, and brake values to reach the target position, and it is significant in that the target position is updated in real time through the V2X network.

2.3. V2X Network

V2X network technology is a next-generation communication framework that includes V2V, V2I, Vehicle-to-Pedestrian (V2P), and Vehicle-to-Network (V2N) communication. V2X communication technologies include Dedicated Short-Range Communication (DSRC) and Long-Term Evolution (LTE)-V2X, which are currently deployed, and 5th Generation (5G)-V2X, which is under development. The V2X network offers advantages such as enhanced traffic safety, traffic flow optimization, and environmental sustainability. However, its commercialization remains premature, and the verification of its reliability and security is still insufficient. To address this, prior research has proposed a comprehensive testing methodology for V2X networks [13]. Security threats and real-world application scenarios have been systematically analyzed across multiple areas, including protocol conformance testing, functional testing, and performance evaluation. However, the results remain hypothetical due to a lack of real-world experimental validation. In this study, we conduct a test under real-world-like conditions and verify the practical reliability of the V2X network through empirical evaluation.

Numerous studies have conducted comprehensive reviews of the Resource Allocation (RA) mechanisms of Cellular Vehicle-to-Everything (C-V2X). Compared to conventional DSRC-based V2X networks, C-V2X offers advantages such as broader coverage, higher reliability, and Quality of Service (QoS) guarantees. When comparing LTE-V2X with 5th Generation New Radio (5G-NR) V2X, LTE-V2X provides strong reliability guarantees, while 5G-NR V2X enhances QoS and supports AI-based optimization. The advancements in 5G-NR V2X have been summarized, and future research directions have been suggested [14]. In this study, a V2X network is implemented in a simulated real-world environment with consideration for security.

A comprehensive tutorial on 5G-NR V2X communication technology and the corresponding standard, introduced in the 3rd Generation Partnership Project (3GPP) Release 16, is available [15]. 5G-NR V2X complements LTE-V2X by addressing its limitations and supporting V2X networks optimized for Intelligent Transport Systems (ITS). While LTE-V2X lacks a mechanism to verify packet reception, 5G-NR V2X introduces Hybrid Automatic Repeat Request (HARQ) and the Physical Sidelink Feedback Channel (PSFCH) to confirm transmission. In addition, 5G-NR V2X incorporates a QoS management framework that utilizes various V2X applications. This related research proposes using LTE-V2X for basic safety message transmission and 5G-NR V2X for enhanced functionality. The specifications of 5G-NR V2X are systematically organized, and its core technologies are explained in detail to suggest future research directions.

To implement cooperative autonomous driving, a V2X network is a critical component. Table 1 compares the characteristics of the V2X network, the V2V network, and the network in the CARLA simulator utilized in this study [16,17]. The V2X network encompasses various communication entities, including Road Side Units (RSUs), pedestrians, network infrastructure, and government agencies, in addition to vehicles. It provides wide-area communication capabilities (extending several kilometers) by leveraging technologies such as 5G, LTE, Wi-Fi, DSRC, and C-V2X. In contrast, the V2V network is based on direct communication between vehicles and utilizes DSRC and C-V2X technologies, supporting communication within a range of approximately 800 m. In the case of the network in the CARLA simulator, communication occurs between the server and clients, as well as between vehicles, and is theoretically possible over an unlimited range. In terms of communication standards, the V2X network supports both Institute of Electrical and Electronics Engineers (IEEE) 802.11p and IEEE 802.11bd, whereas the V2V network is limited to IEEE 802.11p [18,19]. Additionally, the V2X network serves comprehensive purposes, including traffic congestion mitigation, accident prevention, the enhancement of autonomous driving performance, and environmental impact reduction. Meanwhile, the V2V network primarily focuses on traffic flow optimization and accident reduction. In addition to these functions, the network in the CARLA simulator offers a virtual environment for evaluating and improving algorithmic processing speed.

Table 1. Comparison between V2X network, V2V network, and network in CARLA simulator.

2.4. Urban Environment Perception

A related study aims to improve traffic light perception performance in Advanced Driver Assistance Systems (ADASs) by enhancing You Only Look Once version 8 (YOLOv8) with meta-learning techniques. Existing YOLO and Convolutional Neural Network (CNN) models exhibit low accuracy under varying illumination conditions and are significantly affected by the size of the traffic lights and by surrounding factors such as dataset characteristics. By applying the Model-Agnostic Meta-Learning (MAML) algorithm, an AI model that is robust to new environments is developed, maximizing performance in color differentiation. Unlike conventional methods that detect the entire traffic light as a bounding box, this approach detects only the illuminated section, thereby optimizing the labeling process. Through feature weight optimization, the model further enhances color recognition by assigning higher weights to color features. As a result, accuracy is improved and computational overhead is reduced. This method demonstrates higher accuracy than the baseline YOLOv8 model under various conditions such as daytime, nighttime, and fog, while maintaining real-time performance at 30 Frames Per Second (FPS). However, it remains susceptible to errors when colors are similar, and the model size is relatively large at 25 million parameters, requiring substantial computational resources. AI-based perception methods thus still face limitations related to color confusion and latency [20]. Therefore, this study aims to compare an AI-based approach with a simpler HSV value-based method and to identify a more accurate and faster object perception technique for vehicular environments through cross-validation.

Related research proposes a coarse-to-fine deep learning framework to improve the reliability of existing YOLO-based traffic light perception methods [21]. These existing methods struggle with perception due to the small size and diverse shapes of traffic lights, and they have difficulty distinguishing directional features, such as arrow indicators. To address these challenges, traffic light perception is divided into two stages. In the first stage, YOLOv8-nano is enhanced to detect small traffic lights and to group them into super classes for an initial search. In the second stage, the system accurately classifies traffic light types. It applies depthwise separable convolutions and expansion layers to improve both weight efficiency and accuracy. Super class detection demonstrates better performance than conventional YOLOv5 and YOLOv7, while the second stage achieves higher accuracy with fewer parameters compared to traditional CNN models. As a result, the YOLO-based detection performance is maximized, and the method maintains real-time operation with an average detection speed of 9.9 ms. However, performance still degrades in low-light conditions, and validation remains limited in real-world autonomous driving environments. In this study, a new dataset tailored to the experimental environment is constructed and evaluated in simulated autonomous driving environments.

2.5. Contribution in Integrated System

This study integrates different technological components (autonomous vehicle control, urban environment perception, and the V2X network), distinguishing itself from prior research limited to single domains. By comprehensively addressing the feasibility of cooperative autonomous driving systems in the real world, the study provides meaningful contributions to the field. Vehicle control is implemented using both TSB driving and waypoints-based driving. The proposed TSB driving approach allows the latter vehicle to utilize the throttle, steering, and brake values of the preceding vehicle, thereby reducing the computational overhead and overcoming hardware limitations to satisfy the real-time requirements.

Unlike previous studies focused on highway environments, this method maintains real-time performance and safety even under the increased computational overhead caused by traffic light and pedestrian perception, as well as data transmission via the V2X network in urban settings. This highlights the advantage of the proposed control technique in ensuring lightweight and scalable cooperative autonomous driving. In terms of urban environment perception, this study develops a stop algorithm based on recognizing traffic lights and pedestrians. For traffic light perception, the HSV-based perception method is adopted and compared against a CNN-based YOLO model. By analyzing both approaches in terms of accuracy and computational efficiency, the study selects the method better suited to urban environments, in contrast to prior research that relies on a single perception model.

Furthermore, vehicles in the cluster are defined as clients, and a V2X network is designed to enable the real-time exchange of location data. This allows vehicles to dynamically escape or join the cluster, ensuring stable cooperative autonomous driving even in complex urban environments. Notably, this study proposes an algorithm that enables vehicles to flexibly escape or join the cluster depending on traffic conditions, particularly the stop situations frequently encountered in urban environments, which is an aspect rarely addressed in earlier studies.

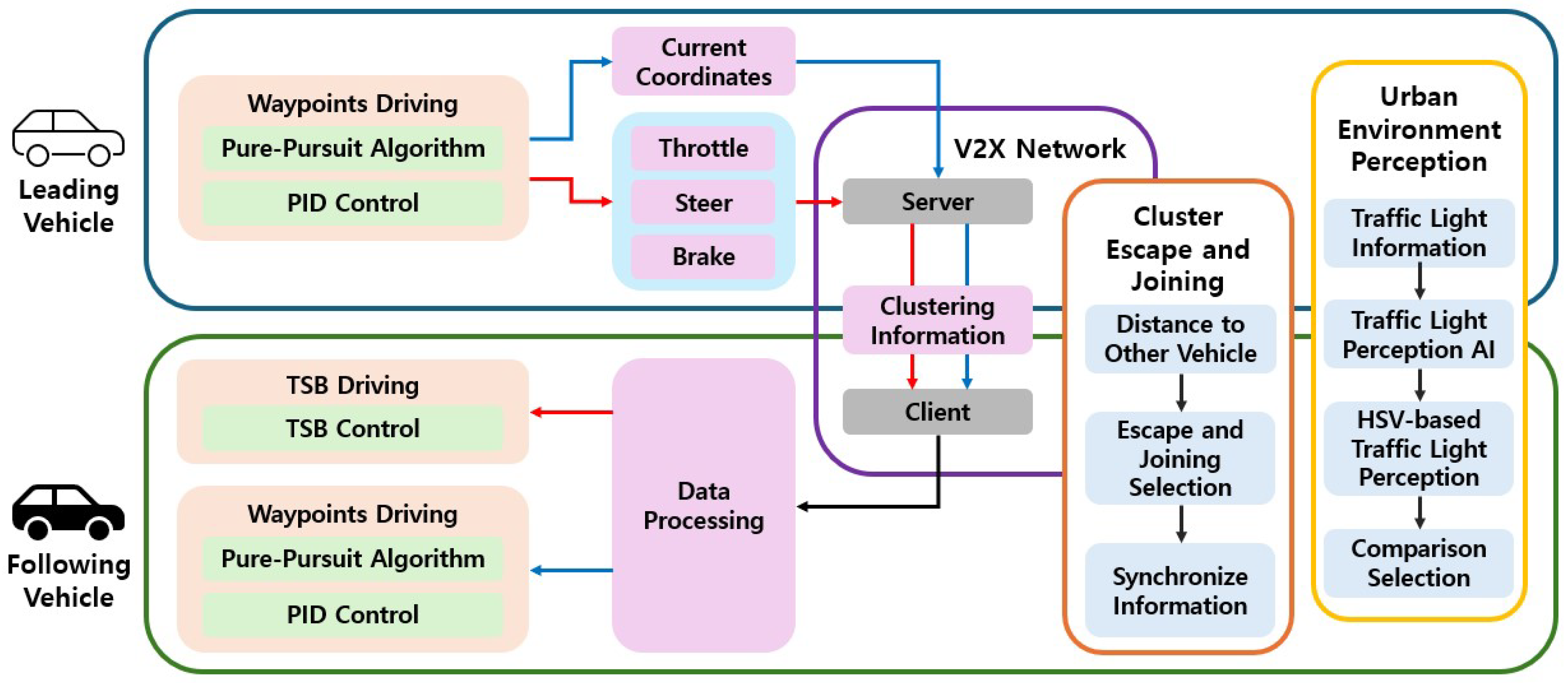

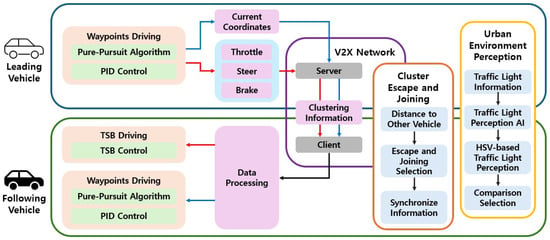

3. Methodology and Control Model Analysis

This paper employs the TSB driving method through a V2X network to realize cooperative autonomous driving in an urban environment. For vehicle control, the Pure Pursuit algorithm and PID control technique are applied. In the urban environment setting, traffic lights are detected using the Open Source Computer Vision Library (OpenCV, 4.9.0) with HSV-based color filtering, and pedestrians are recognized through communication with the CARLA server. A central communication server is established within the V2X network, which interacts with the CARLA server. The CARLA server communicates with vehicles via socket programming. Additionally, a cluster escape and joining algorithm is designed, enabling vehicles to dynamically change cooperative driving states in real time. Five reference terms are used for vehicle roles in this study: leading vehicle, following vehicle, preceding vehicle, latter vehicle, and tail vehicle. The leading vehicle is positioned at the front of the cluster, while the tail vehicle is located at the rear. The preceding vehicle refers to the one ahead during an information exchange, transmitting data to the vehicle behind. The latter vehicle is the one behind, receiving data from the preceding vehicle. Vehicles that follow the preceding vehicle and rely on its data are referred to as following vehicles, which include all vehicles in the cluster except for the leading vehicle. The overall architecture of this study is illustrated in Figure 1, and each method is described in detail in the subsequent sections. The red arrows indicate the TSB driving method, the blue arrows represent the waypoints-based driving method, and the black arrows denote the process applied to all vehicles. The colors of the boxes are used solely for visual distinction.

Figure 1. Structure of cooperative autonomous driving using TSB driving and waypoints driving.

3.1. TSB Driving and Waypoints Driving

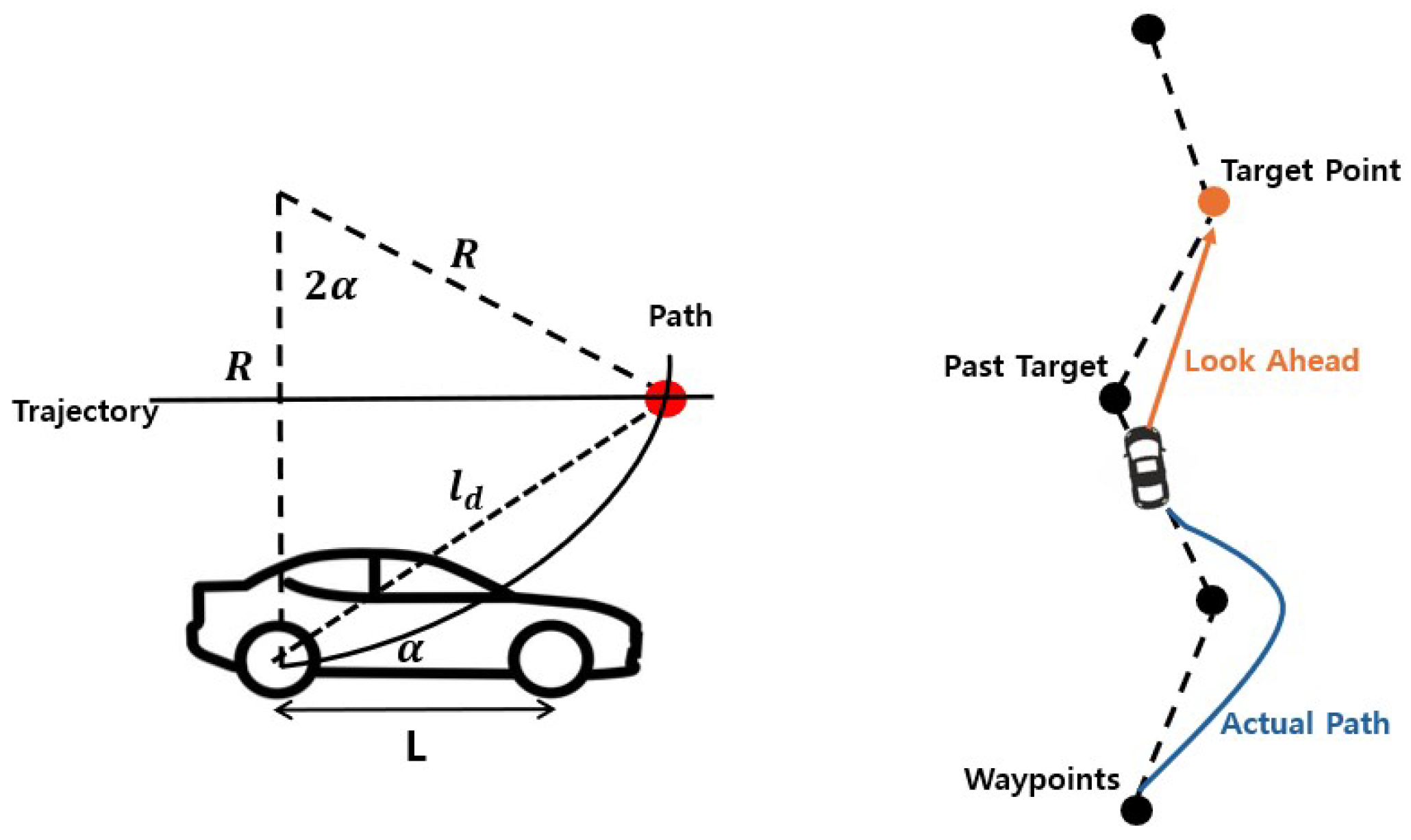

Waypoints-based driving employs the Pure Pursuit algorithm to control the steering angle and uses PID control to regulate the vehicle’s speed [22]. This control strategy is applied to the leading vehicle and to following vehicles when entering curved sections.

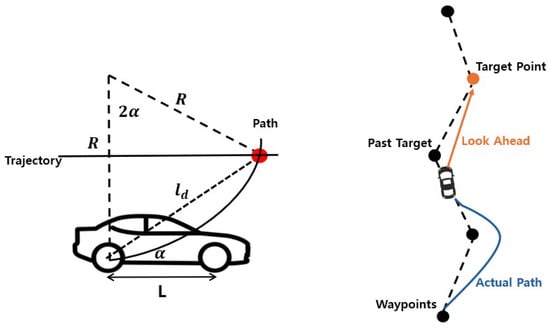

The look ahead path following with the target point and actual vehicle path is illustrated in Figure 2, and the Pure Pursuit algorithm, as expressed in Equation (1), is applied to compute the steering angle [23]. The orange line indicates the look ahead distance between the vehicle and the target point, while the blue line represents the actual driving path of the vehicle. Dashed lines represent reference trajectories based on waypoints, whereas solid lines indicate the actual path traveled by the vehicle. The steering angle is calculated based on the distance to the target point, the wheelbase, and the angle formed with respect to the center of the rear axle. As the vehicle moves, the target point is continuously updated, and the steering angle is adjusted accordingly. In this study, the distance between waypoints is set to 1 m, and to ensure stable trajectory tracking, the next waypoint is selected when the distance between the vehicle and the waypoint is less than 2 m. Therefore, the look ahead distance is set to 2 m. The Tesla Model 3, used in the CARLA simulator, has a wheelbase L = 2.85 m. R represents the turning radius of the vehicle, which is the radius of the circular path that the vehicle follows based on its current steering angle. The angle to the target point is computed by subtracting the current vehicle yaw from the direction angle of the line connecting the vehicle’s current position to the target point. Finally, the derived steering angle is converted into radians and transmitted as the control command.
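The steering computation described above can be sketched as follows. This is a minimal illustration that assumes the commonly used Pure Pursuit relation, in which the steering angle is arctan(2 L sin(α) / l_d) for wheelbase L, look ahead distance l_d, and angle α to the target point; it is not necessarily identical to Equation (1). The function names, the right-handed sign convention, and the use of the rear axle center as the vehicle position are assumptions made for illustration. Only the wheelbase (2.85 m) and the look ahead distance (2 m) are taken from the text.

```python
import math

WHEELBASE_M = 2.85   # wheelbase L stated in the text
LOOKAHEAD_M = 2.0    # look ahead distance stated in the text


def pure_pursuit_steer(x, y, yaw_rad, target_x, target_y,
                       wheelbase=WHEELBASE_M, lookahead=LOOKAHEAD_M):
    """Return a steering angle in radians toward the target point.

    (x, y) is assumed to be the center of the rear axle and yaw_rad the
    vehicle heading, both in a right-handed frame (assumption).
    """
    # Direction to the target point minus the current yaw.
    alpha = math.atan2(target_y - y, target_x - x) - yaw_rad
    # Wrap the angle into [-pi, pi) so left and right turns stay symmetric.
    alpha = (alpha + math.pi) % (2.0 * math.pi) - math.pi
    # Standard Pure Pursuit relation (assumed form of Equation (1)).
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


def turning_radius(steer_rad, wheelbase=WHEELBASE_M):
    """Turning radius R of the bicycle model for a given steering angle."""
    if abs(steer_rad) < 1e-9:
        return math.inf  # driving straight
    return wheelbase / math.tan(steer_rad)
```

With a target point directly ahead, the sketch returns a zero steering angle. With a target point 2 m to the side, the resulting turning radius is 1 m, which matches the geometric relation R = l_d / (2 sin α). Mapping the angle to the simulator's steering command range is outside the scope of this sketch.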

Figure 2. Look ahead path following with target point and actual vehicle path.

For speed control, the PID control technique is employed, and Equation (2) is applied [24]. Here, the calculated output serves as the vehicle’s final throttle value. The error term is defined as the difference between the target throttle value of 0.3, which corresponds to a target speed of 30 km/h, and the current throttle value. This control process uses the proportional, integral, and derivative constants $K_p$, $K_i$, and $K_d$ to avoid sudden acceleration or deceleration. In the CARLA simulator, the throttle value ranges from 0.0 to 1.0, with 1.0 representing full throttle. Based on experimental tuning, the constants are set as $K_p$ = 1.0, $K_i$ = 0.05, and $K_d$ = 0.01. The calculated throttle value is clipped between 0.0 and 1.0 using the np.clip() function and transmitted as the command value.
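The throttle controller described above can be sketched as a discrete PID loop. This is a minimal sketch under stated assumptions: the textbook form of PID (proportional, integral, and derivative terms summed) is assumed for Equation (2), the class name `ThrottlePID` and the time step `dt` are hypothetical, and the clipping is written with `min`/`max` to keep the example dependency-free, which has the same effect as np.clip() for scalars. The gains (1.0, 0.05, 0.01), the target value of 0.3, and the error definition follow the text.

```python
class ThrottlePID:
    """Discrete PID controller producing a throttle value in [0.0, 1.0]."""

    def __init__(self, kp=1.0, ki=0.05, kd=0.01, target=0.3):
        self.kp, self.ki, self.kd = kp, ki, kd   # gains stated in the text
        self.target = target                      # target throttle value from the text
        self._integral = 0.0
        self._prev_error = None

    def step(self, current, dt):
        """Return the clipped throttle command for one control period."""
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        # Error as defined in the text: target value minus current value.
        error = self.target - current
        self._integral += error * dt
        # No derivative contribution on the first call (no previous error yet).
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        # Same effect as np.clip(output, 0.0, 1.0) for a scalar.
        return min(1.0, max(0.0, output))
```

For example, `ThrottlePID().step(current=0.0, dt=0.05)` yields a command slightly above 0.3, since the integral term adds a small contribution to the proportional term on the first step.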

TSB driving is a method in which the latter vehicle receives and applies the throttle, steer, and brake values generated by the preceding vehicle during driving. While conventional waypoints-based driving computes the control commands based on the target and current x, y coordinates, TSB driving directly utilizes the TSB values produced by the preceding vehicle during straight line motion as control inputs for the latter vehicle. This approach allows following vehicles to perform optimized driving without the need for separate calculations.

When the preceding vehicle uses a threshold-based method to distinguish between straight and curved driving, the accuracy degrades in S-shaped or complex curved road segments. To address this limitation, the preceding vehicle employs an AI approach based on the yaw_diff value to classify driving states. The yaw_diff represents the rate of change in the vehicle’s heading direction and is modeled as time-series data using a Recurrent Neural Network (RNN). Based on this classification, the control system switches to transmitting TSB values to the following vehicles during straight line driving, while applying waypoints-based driving in curved segments. Comparative analysis confirms that this hybrid approach enables stable driving while significantly reducing computational overhead compared to conventional waypoints-based methods.
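The switching between the two driving modes can be illustrated with a short sketch. It assumes that the straight or curved label has already been produced by the classifier and is passed in as a string; the RNN itself is not reproduced here. The names `TSB`, `follower_command`, and the label values `"straight"` and `"curve"` are hypothetical. The waypoints-based computation is passed as a callable so that it is evaluated only when it is actually needed, which reflects where the computational saving of TSB driving would come from.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class TSB:
    """Throttle, steer, and brake values exchanged between vehicles."""
    throttle: float
    steer: float
    brake: float


def follower_command(segment: str,
                     received: Optional[TSB],
                     compute_waypoint_command: Callable[[], TSB]) -> TSB:
    """Choose the control command for a following vehicle.

    On straight segments the received TSB values are applied directly.
    On curved segments, or when no TSB values have arrived, the vehicle
    falls back to its own waypoints-based computation.
    """
    if segment == "straight" and received is not None:
        return received
    return compute_waypoint_command()
```

Falling back to the local computation when no TSB values are available is a design choice of this sketch rather than a behavior stated in the text.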

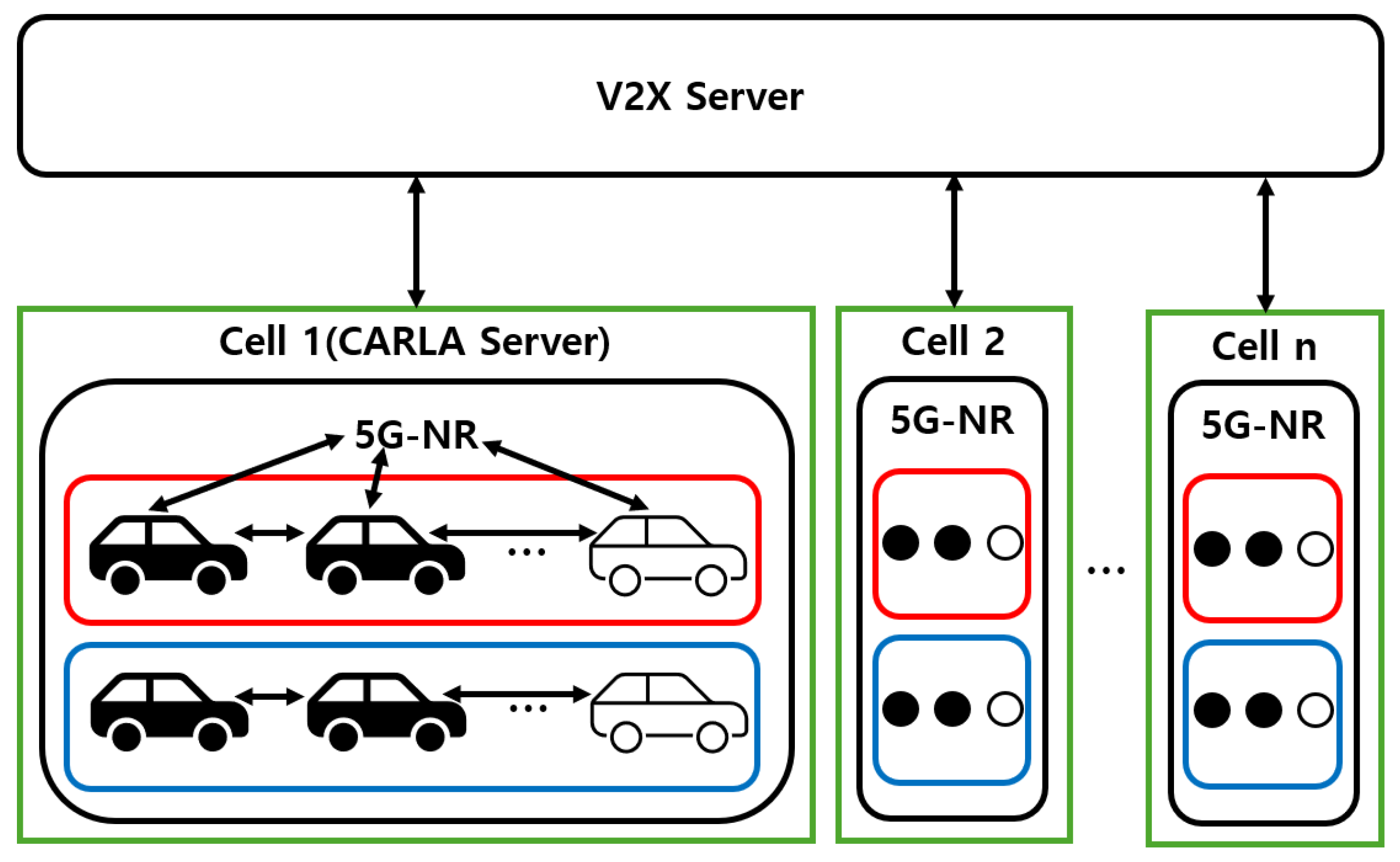

3.2. Communication and Security Between Vehicles in Cooperative Autonomous Driving

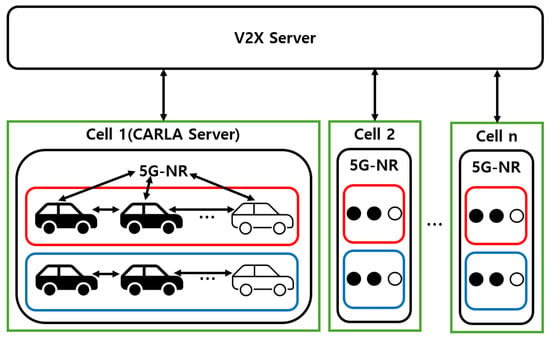

In a cooperative autonomous driving system, the accurate management of cluster information is essential due to continuous inter-vehicle communication and the handling of complex requests [25,26]. In this research, the CARLA simulator is treated as an edge server operating within a single wireless network cell, reflecting the coverage area of a typical 5G base station. This assumption does not imply the need to deploy a server in every cell but rather serves to model communication delays and cluster control. V2V communication is based on the PC5 interface, the direct communication method between vehicles and other devices, while V2I communication conceptually corresponds to the Uu interface, the network-based communication method between vehicles and the infrastructure via the cellular base station. The physical layer is not implemented; instead, communication is modeled abstractly, and message transmission is ensured through Transmission Control Protocol (TCP)-based socket communication. Since the focus of this study lies in evaluating the effectiveness of control strategies rather than precise network implementation, factors such as latency and channel effects are not simulated. The edge server manages the cluster by validating vehicle data and enhances security through pseudonymization, encryption, and digital signatures [27]. The simulation environment is configured under ideal conditions to compare the performance of TSB-based driving and waypoints-based driving. The proposed network architecture is illustrated in Figure 3. The red and blue groups indicate separate vehicle clusters.

Figure 3. Network topology for the proposed cooperative autonomous driving system.

The network structure primarily consists of 5G-NR communication among the V2X server, the CARLA server, and the preceding and latter vehicles. The V2X server stores and utilizes information received from the CARLA server to support efficient cluster management and data processing. To verify the digital signature of a pseudonymized vehicle, the system is linked to a database that maps pseudonymized IDs to actual vehicle IDs. Upon receiving an authentication request from the CARLA server, the public key of the real ID that is mapped from the pseudonym is retrieved and returned for verification. The CARLA server functions as an edge server and provides the V2X server with various data, including actor information, map data, and traffic flow information such as pedestrian locations. It also supports the creation and management of RSUs. Each vehicle transmits pseudonymized information such as its vehicle ID and current location to the CARLA server. The server records these data to determine whether clustering should be performed. Additionally, the preceding vehicle uses the PC5 interface to communicate with the latter vehicle and determine its control behavior [28].

Table 2 presents the message format used for inter-vehicle communication, which is designed to securely transmit vehicle identification and driving information. The vehicle ID, front ID, and rear ID are pseudonymized for vehicle distinction and are managed per session. A session ID is assigned to maintain and track the communication link between each vehicle and the CARLA server.

Table 2. Message packet details for the V2X network.

When the message type is defined as driving data exchange, the Status field is used to distinguish between straight and curved sections. In the case of escape or joining messages, this field indicates the vehicle’s state, such as standby, acceptance, or rejection. Encrypted data include driving information such as the vehicle’s location, speed, and steering angle, and are protected using symmetric key encryption methods such as AES. For escape and joining messages, this data field is padded with zeros. The Checksum field is responsible for detecting message errors and verifying data integrity through the Secure Hash Algorithm-224 (SHA-224). The Signature field ensures the authenticity of the message source, incorporating an EdDSA-based digital signature. This EdDSA-based signature is used only during the “Init” stage to authenticate the pseudonymized ID.
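The message fields and the SHA-224 checksum described above can be sketched as follows. The field sizes, the separator-based serialization, the 32-byte data length, and all names (`V2XMessage`, `zero_padded_message`, `verify`) are hypothetical, since the exact packet layout is defined in Table 2 and is not reproduced here. AES encryption and EdDSA signing are deliberately left out: the encrypted data and the signature are treated as opaque bytes, and the checksum is assumed to cover the header fields and the encrypted data only.

```python
import hashlib
import hmac
from dataclasses import dataclass

DATA_LEN = 32  # hypothetical size of the encrypted data field, in bytes


@dataclass(frozen=True)
class V2XMessage:
    vehicle_id: str        # pseudonymized ID
    front_id: str          # pseudonymized ID of the preceding vehicle
    rear_id: str           # pseudonymized ID of the latter vehicle
    session_id: str
    msg_type: str          # e.g. "Init", "Cluster Joining", "Cluster Escape"
    status: str            # e.g. "standby", "acceptance", "rejection"
    encrypted_data: bytes  # opaque ciphertext (AES is not implemented here)
    signature: bytes = b""  # opaque EdDSA signature, used only at the Init stage

    def checksum(self) -> bytes:
        """SHA-224 digest over the header fields and the encrypted data."""
        header = "|".join((self.vehicle_id, self.front_id, self.rear_id,
                           self.session_id, self.msg_type, self.status))
        return hashlib.sha224(header.encode("utf-8") + b"|" + self.encrypted_data).digest()


def zero_padded_message(vehicle_id, front_id, rear_id, session_id, msg_type, status):
    """Build an escape or joining message whose data field is padded with zeros."""
    return V2XMessage(vehicle_id, front_id, rear_id, session_id,
                      msg_type, status, bytes(DATA_LEN))


def verify(message: V2XMessage, received_checksum: bytes) -> bool:
    """Recompute the checksum and compare it with the received one."""
    return hmac.compare_digest(message.checksum(), received_checksum)
```

A receiver would recompute the digest from the received fields and reject the message if `verify` returns `False`, for instance when the Status field has been altered in transit.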

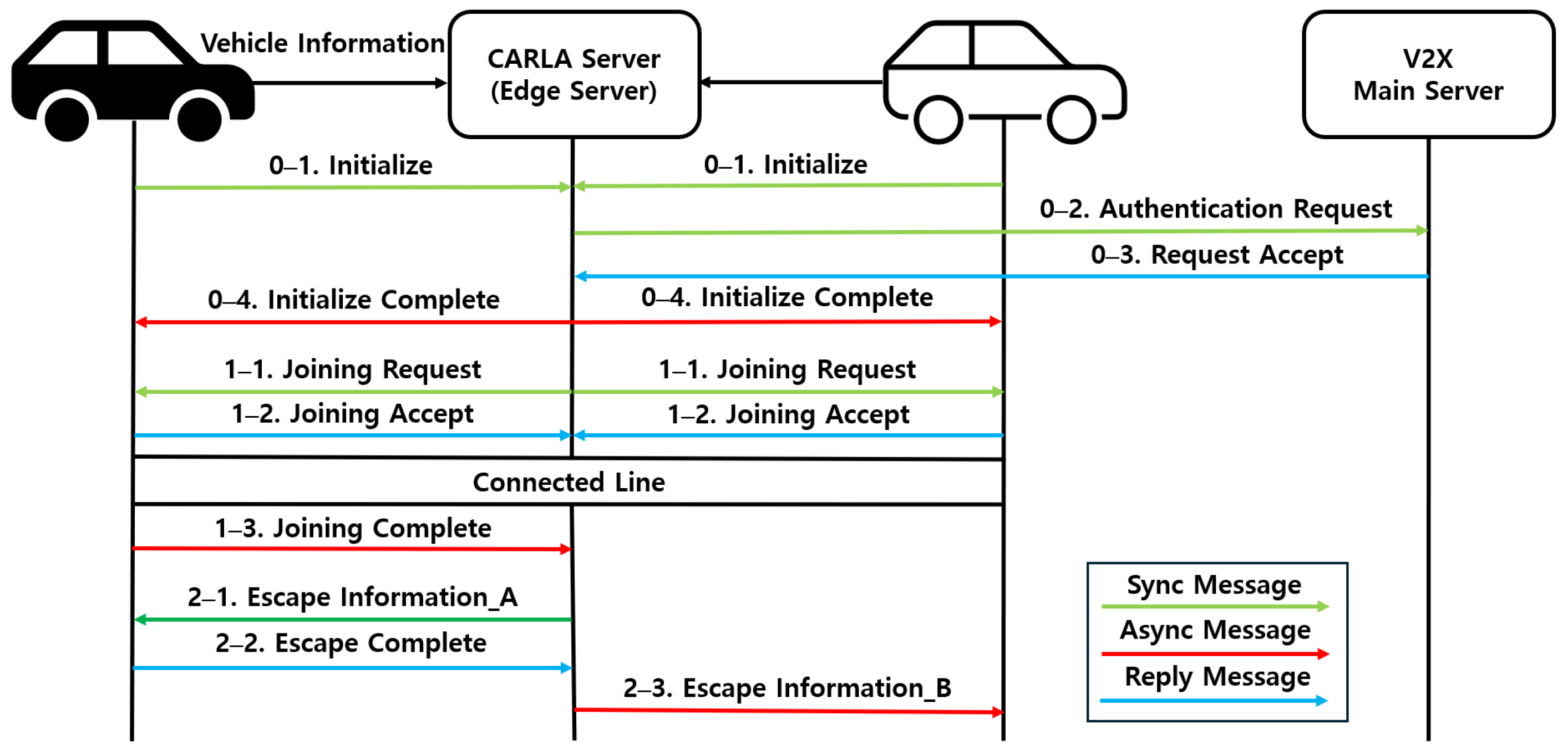

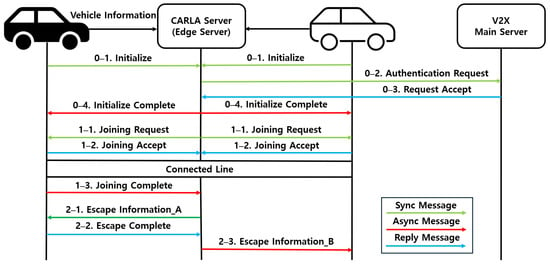

The vehicle information exchange and cluster formation process defines and implements initialization, cluster escape, and joining procedures according to the flow illustrated in Figure 4.

Figure 4. Flow for vehicle information exchange and cluster management.

3.2.1. Init

When a vehicle enters a new region managed by a different CARLA server and first connects to the corresponding edge server, its vehicle ID and initial location information are encrypted and transmitted. At this stage, the pseudonymized vehicle ID is inserted into the vehicle ID, front ID, and rear ID fields. The message type is set to “Init”, and the driving information field is padded with zeros. The CARLA server updates and manages vehicle information based on the received packet. Simultaneously, the V2X server obtains the public key corresponding to the actual vehicle ID that is mapped from the pseudonymized ID. Using this key, the vehicle is authenticated through a digital signature. Once authentication is completed, the CARLA server sends an initialization completion message to the vehicle along with waypoints information. The vehicle then begins autonomous driving based on the assigned waypoints and its destination.

3.2.2. Cluster Joining

When a vehicle approaches within 15 m of another, the CARLA server identifies the preceding and latter vehicles and generates a “Cluster Joining Request” message, which initiates the cluster formation procedure. Upon receiving this request, each vehicle updates its joining status and responds to the CARLA server with a message indicating standby, acceptance, or rejection. If a vehicle is successfully joined to the cluster, its own ID is recorded in the vehicle ID field. The ID of the preceding vehicle is recorded in the front ID field, while that of the latter vehicle is recorded in the rear ID field. During this stage, the encrypted driving information field is padded with zeros, and the message type is set to “Cluster Joining”. Subsequently, the preceding and latter vehicles are connected via the PC5 interface. The preceding vehicle determines the necessary driving information and transmits it to the latter vehicle. Finally, when the latter vehicle sends a confirmation packet indicating successful joining, the CARLA server updates the vehicle information accordingly.

3.2.3. Cluster Escape

When a vehicle moves more than 30 m away, the server sends a “Cluster Escape” message to the latter vehicle. The ID of the corresponding vehicle is recorded in both the vehicle ID and front ID fields. If a latter vehicle exists, its ID is recorded in the rear ID field, and if not, the ID of the corresponding vehicle is used instead. The encrypted driving information field is padded with zeros, and the message type is set to “Cluster Escape”. The vehicle then updates its status and responds to the server. The server subsequently updates the vehicle information and processes the escape request.
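The distance-based triggers for joining and escape can be sketched as a single server-side check. This is a minimal sketch: the 15 m and 30 m thresholds are taken from the text, while the function name `cluster_event` and the choice to measure the distance between a vehicle and the vehicle directly ahead of it are assumptions made for illustration.

```python
import math

JOIN_DISTANCE_M = 15.0    # joining threshold stated in the text
ESCAPE_DISTANCE_M = 30.0  # escape threshold stated in the text


def cluster_event(preceding_xy, latter_xy, in_cluster):
    """Return the message type the server would generate, or None.

    preceding_xy and latter_xy are (x, y) positions in meters.
    in_cluster states whether the latter vehicle currently belongs
    to the cluster of the preceding vehicle.
    """
    gap = math.dist(preceding_xy, latter_xy)
    if not in_cluster and gap <= JOIN_DISTANCE_M:
        return "Cluster Joining Request"
    if in_cluster and gap > ESCAPE_DISTANCE_M:
        return "Cluster Escape"
    return None  # no state change between the two thresholds
```

Because the joining threshold is smaller than the escape threshold, a vehicle whose gap fluctuates between 15 m and 30 m keeps its current state, which avoids repeated joining and escape messages.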

3.3. Urban Environment Perception

Unlike highways, urban environments require the careful consideration of traffic lights and pedestrians, particularly at intersections. At a red traffic light, the vehicle must come to a complete stop. When the leading vehicle stops, multiple following vehicles may join the cluster. Conversely, if only the following vehicles stop, they may escape the cluster. As the proposed cooperative autonomous driving system operates in urban environments, traffic light and pedestrian perception are regarded as critical factors. Pedestrians transmit their real-time location information to the edge server, which identifies the pedestrian closest to the vehicle. The server then determines a safe distance based on the road width. If a pedestrian is detected within this distance, the vehicle stops immediately [29].

3.3.1. Traffic Light Perception

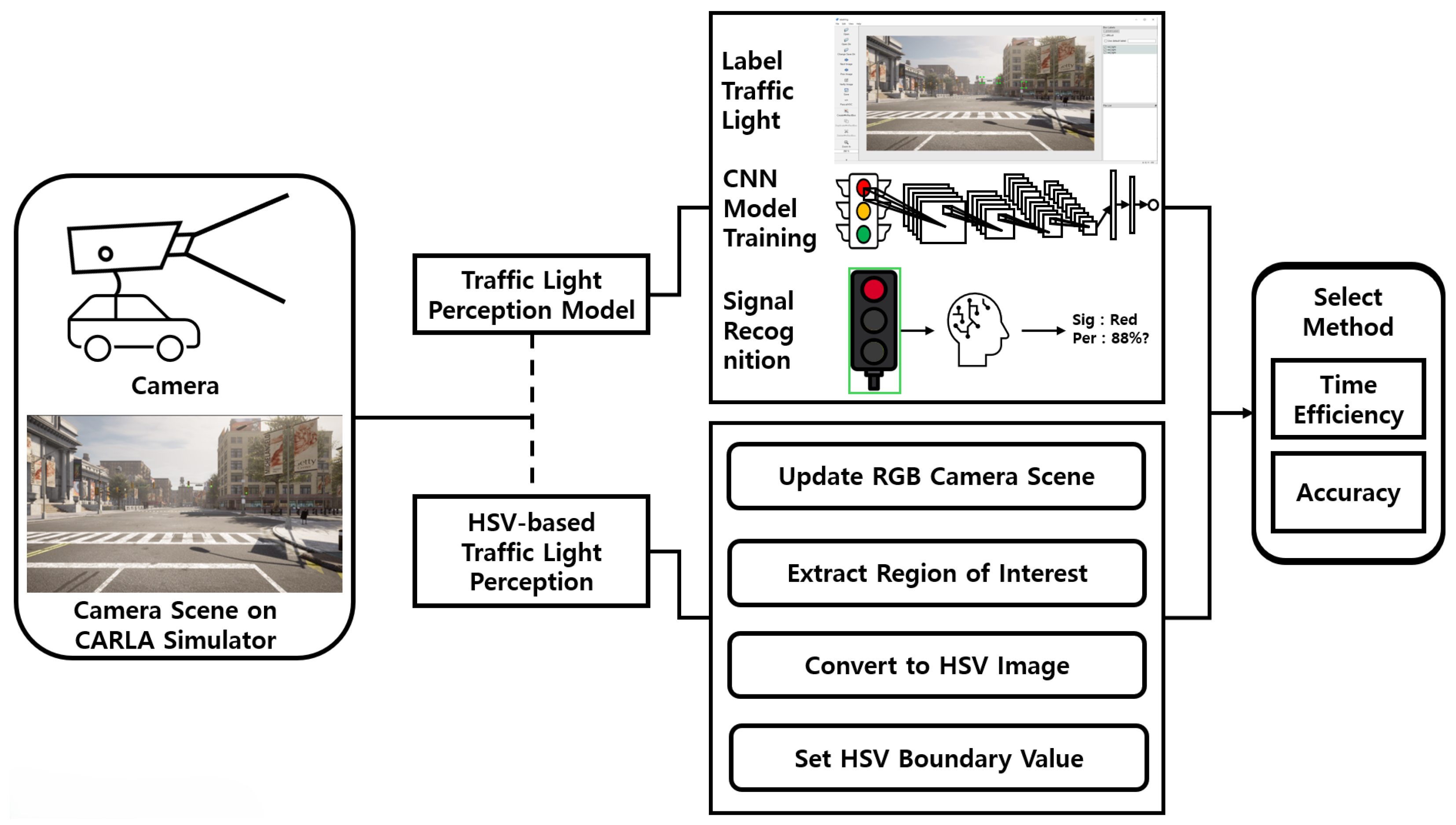

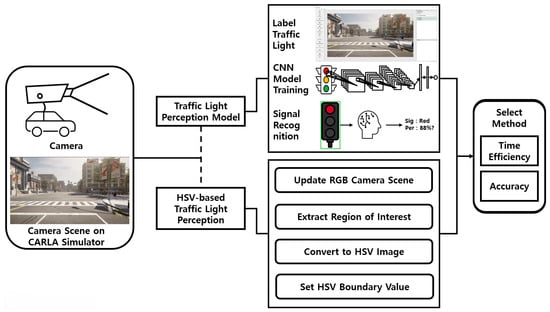

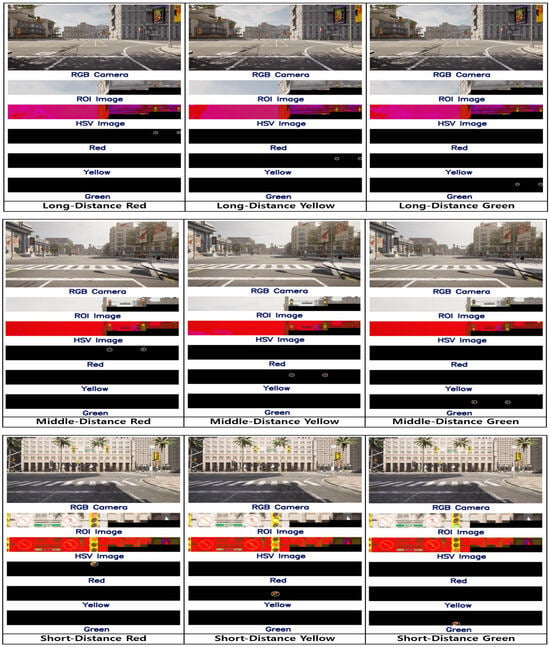

The traffic light perception process is illustrated in Figure 5. This study compares an AI-based method, which utilizes a trained traffic light detection model, with an HSV value-based method and selects the more suitable approach for urban environments.

Figure 5.

Structure of traffic light perception in urban environment.

The AI-based perception method uses a Red–Green–Blue (RGB) camera in the CARLA simulator to capture traffic light scenes at intersections. Red, yellow, and green signals are collected at equal frequencies. The entire traffic light is labeled to preprocess the data for training a CNN model. Based on the training results, a traffic light perception model is generated. This study employs YOLOv12nano, the lightest version of YOLOv12, which is the latest iteration in the YOLO series. YOLO is an object detection model originally proposed by Joseph Redmon in 2015. While earlier object detection models require multiple passes over an image and substantial computational resources, YOLO improves efficiency by detecting objects in a single pass. Because traffic light detection must operate continuously during driving and the vehicle’s embedded system must manage multiple simultaneous tasks, the computational resources allocated for perception are limited. Therefore, YOLOv12nano is selected for its higher accuracy and faster performance compared to YOLOv11, while requiring fewer computational resources [30].

The HSV value-based traffic light perception method is implemented using OpenCV. Traffic light locations at various intersections are defined as Regions of Interest (ROIs) and extracted, enabling focused analysis on the traffic light area. The input image is converted into the HSV color space, and color-based filtering is applied by setting HSV thresholds corresponding to the traffic light colors. If the number of pixels within the threshold in the ROI is eight or more, the traffic light is considered successfully detected. As HSV values may vary due to weather or environmental conditions, repeated experiments are conducted to validate robustness. The results confirm that setting the pixel count threshold to eight yields accurate and reliable perception.
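The thresholding and pixel-count rule described above can be sketched as follows. To keep the example dependency-free, it uses the Python standard library `colorsys` module instead of OpenCV, and it operates on a list of RGB pixels standing in for an extracted ROI. The hue ranges and the saturation and value limits are illustrative assumptions, not the thresholds used in this study; only the pixel count threshold of eight is taken from the text.

```python
import colorsys

PIXEL_COUNT_THRESHOLD = 8  # from the text: eight or more matching pixels

# Assumed hue ranges in degrees; illustrative values only.
COLOR_RANGES = {
    "red": [(0, 10), (350, 360)],
    "yellow": [(40, 70)],
    "green": [(90, 150)],
}
MIN_SATURATION = 0.5  # assumed
MIN_VALUE = 0.5       # assumed


def classify_roi(roi_pixels):
    """Classify a traffic-light ROI given as an iterable of (r, g, b) in 0..255."""
    counts = {color: 0 for color in COLOR_RANGES}
    for r, g, b in roi_pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        if s < MIN_SATURATION or v < MIN_VALUE:
            continue  # dull or dark pixel, not a lit lamp
        hue_deg = h * 360.0
        for color, ranges in COLOR_RANGES.items():
            if any(lo <= hue_deg <= hi for lo, hi in ranges):
                counts[color] += 1
                break
    best = max(counts, key=counts.get)
    # Below the pixel threshold, report that no signal was detected.
    return best if counts[best] >= PIXEL_COUNT_THRESHOLD else "no_signal"


background = [(20, 20, 20)] * 50
red_roi = [(255, 0, 0)] * 9 + background
weak_roi = [(255, 0, 0)] * 7 + background
```

In an OpenCV implementation, the same logic would typically be expressed with a color space conversion followed by a range mask and a non-zero pixel count over the ROI.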

The two methods are compared in terms of time efficiency and accuracy. As this study is conducted in an urban environment, real-time performance is prioritized. Accordingly, if both methods satisfy a predefined accuracy threshold, the method with superior time efficiency is selected. The accuracy threshold is experimentally determined to ensure safe and reliable traffic light perception.

3.3.2. Pedestrian Perception

Pedestrian detection is based on V2P communication within the V2X network [31]. If a pedestrian’s location received via the V2X network is within the vehicle’s predefined safety distance, an emergency response is triggered and the vehicle comes to a stop. The Tesla Model 3, used in this research, has a total length of 4.72 m and a width of 2.09 m. The distance from the vehicle center is approximately 2.36 m in the longitudinal direction and 1.05 m laterally. Pedestrian positions are continuously received through the V2X network. If a pedestrian is detected within 6.5 m of the client vehicle, emergency braking is executed.

In this study, the average driving speed is set to 30 km/h, requiring a minimum braking distance of 1.5 m. The predefined braking distance of 6.5 m satisfies this requirement [32]. On actual roads, assuming an average urban speed of 50 km/h in South Korea, the required braking distance is approximately 20 m. Therefore, applying this system to real vehicles would require communication constraints to limit signal exchange within the roadway. If the vehicle speed exceeds 40 km/h, the braking distance threshold is adjusted to 20 m within the algorithm.
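The speed-dependent braking threshold can be expressed compactly. The sketch below assumes that pedestrian and vehicle positions are available as planar (x, y) coordinates in meters and that the distance is measured from the vehicle center; the function names are hypothetical.

```python
import math


def safety_distance_m(speed_kmh: float) -> float:
    """Return the pedestrian braking threshold for the current speed."""
    # 20 m above 40 km/h, otherwise the default 6.5 m (values from the text).
    return 20.0 if speed_kmh > 40.0 else 6.5


def should_emergency_brake(vehicle_xy, pedestrian_positions, speed_kmh: float) -> bool:
    """True if any pedestrian lies within the safety distance of the vehicle."""
    threshold = safety_distance_m(speed_kmh)
    return any(math.dist(vehicle_xy, p) <= threshold for p in pedestrian_positions)
```

For example, a pedestrian 10 m away does not trigger braking at 30 km/h but does at 45 km/h, because the threshold changes from 6.5 m to 20 m.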

3.4. Cluster Escape and Joining

In urban environments, the cluster escape and joining algorithm plays a crucial role [33,34]. Unlike highways, where vehicles maintain continuous movement, vehicles in urban environments frequently stop. Therefore, safe cooperative autonomous driving is feasible only when this algorithm is properly implemented. In this study, the algorithm is designed using each vehicle’s ID and its distance from the preceding vehicle within the V2X network.

The pseudo-code of the cluster escape and joining algorithm is presented in Algorithm 1. The input includes the vehicle ID and its distance from the preceding vehicle. The output provides the cluster state, as well as the IDs of vehicles that have escaped or joined the cluster.

| Algorithm 1 Algorithm of the cluster escape and joining. |

|

If the ID of the leading vehicle received through the V2X network does not match the ID of the current vehicle, the current vehicle is classified as a following vehicle. The cluster escape and joining algorithm operates based on the distance to the preceding vehicle. In cooperative autonomous driving, the maximum allowable distance between vehicles in a cluster is set to 30 m. If the distance exceeds this threshold, the vehicle is considered to have escaped from the cluster. In such a case, the system sets the cluster state to “escape” and designates the vehicle as a new leading vehicle, transitioning it to waypoints-based driving mode. The optimal inter-vehicle distance within a cluster is set to 15 m. If the distance to the preceding vehicle exceeds 15 m but remains below 30 m, the throttle value is increased to close the gap to 15 m. Conversely, if the distance is between 8 m and 15 m, the throttle is reduced to maintain the target spacing. If the preceding vehicle comes to a stop, adjusting the throttle alone may result in a collision. Therefore, if the distance to the preceding vehicle falls below 8 m, the brake is applied to bring the vehicle to a complete stop. As the following vehicles also stop sequentially, this mechanism prevents collisions even when the current vehicle remains stationary.

A joining event occurs when the ID of the current vehicle matches that of the leading vehicle. If the leading vehicle approaches within 10 m of the tail vehicle in another cluster, a joining is triggered. This event is indicated by setting the cluster state to “join”, and the current vehicle is reclassified as the tail vehicle of the target cluster. Since the tail vehicle functions as a following vehicle within the group, the TSB driving mode may be applied depending on the situation.
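The distance rules described in this section can be sketched in a few lines. This is not a reproduction of Algorithm 1; it is a minimal illustration under stated assumptions. The state name "keep", the action labels, the `Decision` type, and the handling of the exact boundary values (8 m, 15 m, 30 m) are choices made for this example, while the thresholds themselves are taken from the text.

```python
from dataclasses import dataclass
from typing import Optional

MAX_CLUSTER_GAP_M = 30.0  # beyond this, the follower escapes the cluster
TARGET_GAP_M = 15.0       # optimal inter-vehicle distance
BRAKE_GAP_M = 8.0         # below this, the brake is applied
JOIN_GAP_M = 10.0         # a leader this close to another tail joins that cluster


@dataclass(frozen=True)
class Decision:
    state: str   # "keep", "escape", or "join" ("keep" is an assumed label)
    action: str  # "speed_up", "slow_down", "brake", "waypoints", or "tsb"


def follower_step(gap_m: float) -> Decision:
    """Decide for a following vehicle from its gap to the preceding vehicle."""
    if gap_m > MAX_CLUSTER_GAP_M:
        return Decision("escape", "waypoints")  # becomes a new leading vehicle
    if gap_m > TARGET_GAP_M:
        return Decision("keep", "speed_up")     # increase throttle to close the gap
    if gap_m >= BRAKE_GAP_M:
        return Decision("keep", "slow_down")    # reduce throttle to hold spacing
    return Decision("keep", "brake")            # too close: come to a full stop


def leader_step(gap_to_other_tail_m: Optional[float]) -> Decision:
    """Decide for a leading vehicle from its gap to another cluster's tail."""
    if gap_to_other_tail_m is not None and gap_to_other_tail_m <= JOIN_GAP_M:
        return Decision("join", "tsb")          # becomes tail of the target cluster
    return Decision("keep", "waypoints")


def cluster_step(vehicle_id: str, leader_id: str, gap_m: float,
                 gap_to_other_tail_m: Optional[float] = None) -> Decision:
    """Dispatch on whether the current vehicle is the leader of its cluster."""
    if vehicle_id != leader_id:
        return follower_step(gap_m)
    return leader_step(gap_to_other_tail_m)
```

Separating the follower and leader branches mirrors the ID comparison described above: escape is only evaluated for followers, and joining only for leaders.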

4. Results

In this research, cooperative autonomous driving in an urban environment is implemented using the CARLA simulator. The CARLA simulator provides open-source digital assets for autonomous driving system development and urban layout simulation, making it suitable for this research. Moreover, it offers an effective experimental environment by supporting environmental perception through various sensors, such as cameras and Light Detection and Ranging (LiDAR), enabling vehicles to perform autonomous driving accordingly.

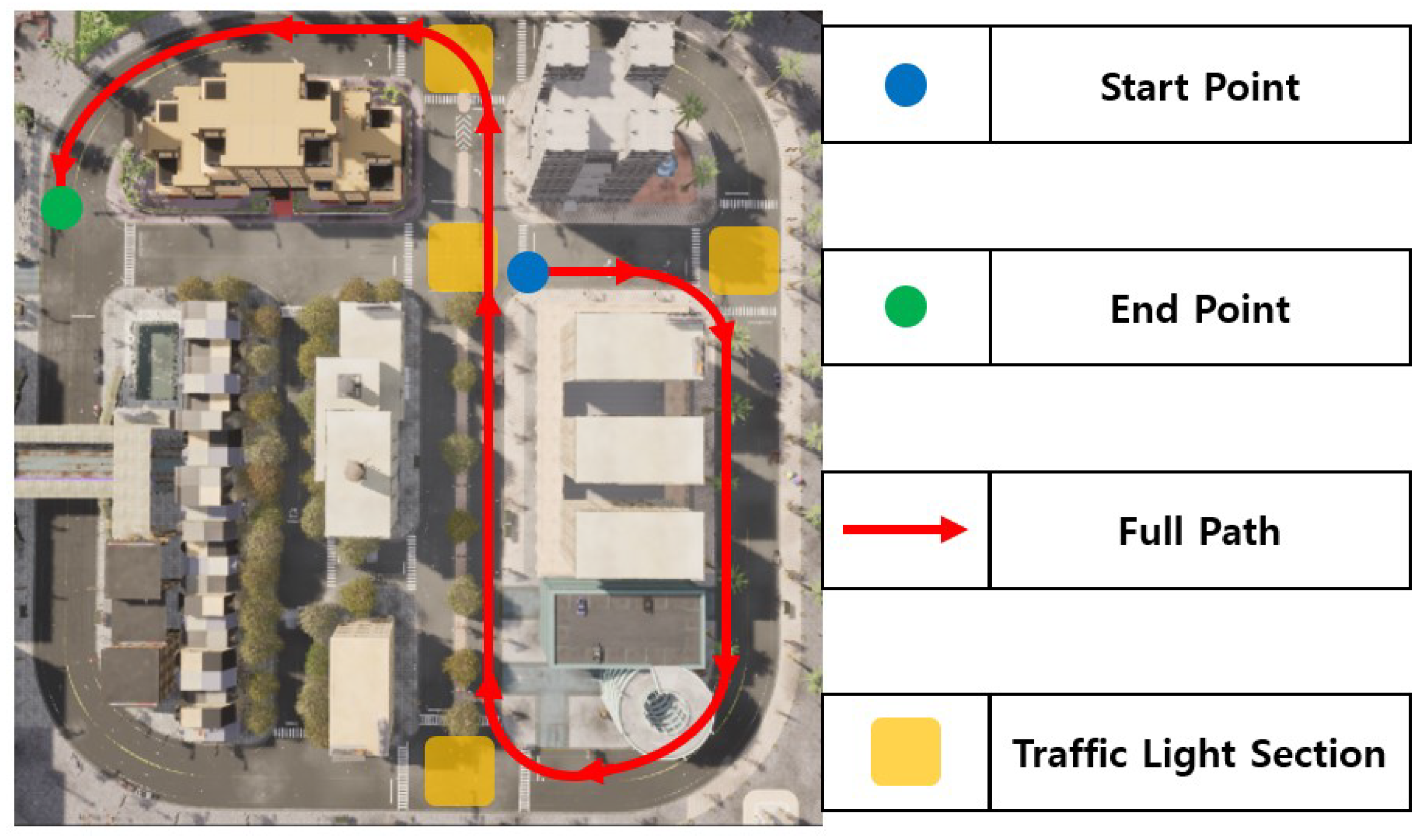

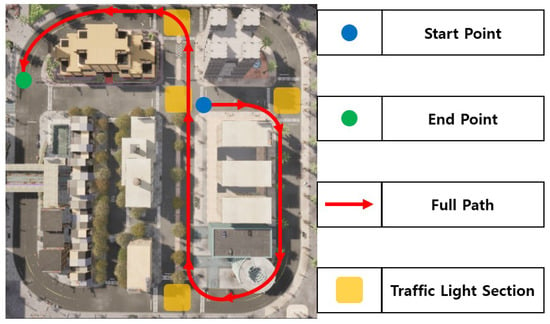

This study is conducted on Town 10 in the CARLA simulator, which features a grid layout with numerous junction types, including a 4-way yellow-box junction, dedicated turning lanes, and central reservations. Traffic lights are installed at all junctions where two or more roads meet, and the driving route is configured to pass through a total of four traffic lights. When the number of pedestrians is set to 10, interactions between vehicles and pedestrians at intersections rarely occur. Conversely, when it is set to 50, pedestrians continuously cross intersections, causing vehicles to remain stopped. Therefore, the number of pedestrians is set to 25 to allow the vehicle to detect pedestrians at intersections, perform a stop, and resume driving. The entire route, including the departure point, arrival point, and traffic light sections, is illustrated in Figure 6.

Figure 6.

Full path and location information in urban environment.

To evaluate the performance of the proposed cooperative autonomous driving system, experiments are conducted using the CARLA simulator version 0.9.12. The experimental environment is configured on a high-performance system equipped with an NVIDIA RTX 3080 Graphics Processing Unit (GPU, emTek, Jakarta, Indonesia) and 64 gigabytes (GB) of RAM.

4.1. TSB Driving and Waypoints Driving

Autonomous driving in this study utilizes both TSB driving and waypoints-based driving. In the TSB driving mode, the following vehicle measures the total travel distance using the Euclidean distance method and divides it by the total execution time to calculate the execution time per meter [35]. The corresponding results are summarized in Table 3.

Table 3.

Performance indicators for TSB driving and waypoints-based driving.

The total travel distance for TSB driving is approximately 189 m, whereas waypoints-based driving covers around 166 m, resulting in a difference of about 23 m. This discrepancy arises from variations in route selection and is considered acceptable for comparative analysis. Despite the longer travel distance, the total execution time for TSB driving is measured at approximately 0.1114 s, which is about 388 times shorter than that of waypoints-based driving, 38.8167 s. Consequently, the execution time per meter for TSB driving is approximately 466 times lower, indicating that TSB driving is significantly more efficient in terms of computational performance and practical applicability.
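The execution time per meter metric used in this section can be sketched as follows. The example assumes that the travel distance is obtained by summing Euclidean distances between consecutive sampled (x, y) positions, which is one plausible reading of the Euclidean distance method; the function names and the sample trajectory are hypothetical and are not measurements from this study.

```python
import math


def path_length_m(points):
    """Sum of Euclidean distances between consecutive (x, y) samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def time_per_meter(total_time_s: float, points) -> float:
    """Execution time divided by travelled distance, in seconds per meter."""
    length = path_length_m(points)
    if length == 0.0:
        raise ValueError("trajectory has zero length")
    return total_time_s / length


# Illustrative trajectory only: 5 m + 6 m = 11 m.
sample_path = [(0.0, 0.0), (3.0, 4.0), (3.0, 10.0)]
sample_metric = time_per_meter(2.2, sample_path)
```

Normalizing by distance allows the two driving modes to be compared even when their routes differ in length.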

4.2. Communication and Security Between Vehicles in Cooperative Autonomous Driving

Efficient communication and security between vehicles are critical for optimizing clustering and ensuring safety in cooperative autonomous driving systems [36]. This research designs a system that enables clustering through real-time V2X communication, allowing vehicles to cooperate for safe and efficient operation. The key considerations for communication and security in this process are outlined as follows.

4.2.1. Assessment of Interoperability

The cooperative autonomous driving system proposed in this study optimizes clustering through real-time information exchange between vehicles and employs V2X communication. Interoperability is a key factor in ensuring seamless data exchange across different vehicle manufacturers and communication technologies. Designed based on 5G-NR V2X, the system supports low latency, high-speed transmission, and high reliability, thereby enabling real-time, delay-free communication among heterogeneous vehicles. In particular, the PC5 interface facilitates direct communication between vehicles, ensuring efficient data exchange regardless of the vehicle brands or protocol differences. The CARLA simulator and the V2X server operate in conjunction to manage vehicle location, speed, and behavior in real time, enhancing both clustering and driving performance. Additionally, vehicle authentication is achieved by mapping pseudonymized IDs to real IDs, and data integrity is maintained through public key-based authentication. Throughout this process, authenticated data exchange ensures secure and interoperable communication between vehicles and infrastructures.

4.2.2. Assessment of Personal Information Protection

Privacy protection is a critical aspect of real-time V2X communication. In this research, various security mechanisms are designed to ensure data confidentiality and identity protection. Specifically, pseudonymized vehicle IDs, AES encryption, and EdDSA digital signatures serve as the core security measures to achieve this objective.

The pseudonymized vehicle ID prevents the external exposure of vehicle information, thereby protecting the driver’s privacy. The vehicle ID, front ID, and rear ID are all pseudonymized and managed in a way that prevents association with the vehicle’s actual identity. These IDs are maintained on a session basis and securely mapped to the real ID through interaction with the V2X server. This approach makes it difficult to track the vehicle’s real-time location and operational data, playing a vital role in minimizing privacy breaches. Moreover, the pseudonymized vehicle ID is updated either per session or periodically when a vehicle connects to a cluster network, preventing the continuous tracking of the vehicle’s location or behavior. Such periodic updates are essential for minimizing vehicle traceability and ensuring both privacy protection and anonymity.
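The session-based mapping between pseudonymized and real IDs can be illustrated with a small in-memory registry. The class `PseudonymRegistry`, its method names, and the 16-character random identifier format are assumptions for this sketch; the storage and rotation policy of the actual V2X server are not detailed in this section.

```python
import secrets


class PseudonymRegistry:
    """Hypothetical server-side mapping between pseudonyms and real vehicle IDs."""

    def __init__(self):
        self._to_real = {}   # pseudonym -> real ID
        self._current = {}   # real ID -> currently valid pseudonym

    def issue(self, real_id: str) -> str:
        """Issue a fresh pseudonym, invalidating the previous one if present."""
        old = self._current.pop(real_id, None)
        if old is not None:
            del self._to_real[old]
        pseudonym = secrets.token_hex(8)      # 16 hex characters, assumed format
        while pseudonym in self._to_real:     # avoid the unlikely collision
            pseudonym = secrets.token_hex(8)
        self._to_real[pseudonym] = real_id
        self._current[real_id] = pseudonym
        return pseudonym

    def resolve(self, pseudonym: str):
        """Return the real ID for a valid pseudonym, or None if unknown."""
        return self._to_real.get(pseudonym)


registry = PseudonymRegistry()
first = registry.issue("vehicle-49")
second = registry.issue("vehicle-49")  # new session or periodic update
```

Calling `issue` again for the same vehicle models a session change or periodic update: the old pseudonym stops resolving, so observations made under it cannot be linked to later traffic through the registry.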

Critical vehicle communication data, such as location, speed, and steering angle, can be encrypted and transmitted using AES, thereby preventing external attackers from eavesdropping or tampering with the messages. EdDSA-based digital signatures enable the verification of message authenticity, ensuring that transmitted data originates from authenticated vehicles. This mechanism prevents man-in-the-middle attacks and guarantees both message origin and integrity. EdDSA is particularly effective in V2X networks, as it provides faster processing and smaller signature sizes compared to Rivest–Shamir–Adleman (RSA), enhancing both performance and security. However, since signature generation still incurs latency, EdDSA is applied only during the “Init” stage to authenticate pseudonymized vehicle IDs. During subsequent V2V communication, digital signatures are omitted to allow fast and efficient packet exchange. This selective use of signatures supports real-time clustering and minimizes potential privacy breaches during continuous V2X communications. Standards such as IEEE 1609.2 define digital signature and encryption methods to secure V2X communications [37]. In this study, EdDSA and AES are adopted to implement these cryptographic mechanisms, along with additional security features such as pseudonymized ID management and session-based identity handling. Consequently, the proposed method builds on the strengths of existing standards while enhancing real-time performance and communication efficiency, contributing to the secure design of cooperative autonomous driving systems.

4.2.3. Countermeasures Against Attack Simulations

V2V communication systems are vulnerable to various attacks, including man-in-the-middle attacks, replay attacks, and denial-of-service (DoS) attacks. The security mechanisms implemented in this study demonstrate strong resistance against such simulated threats.

First, EdDSA digital signatures and AES encryption are employed to ensure data integrity and authenticity. These techniques prevent attackers from intercepting or tampering with transmitted messages. Because each vehicle is authenticated by a digital signature at the “Init” stage and subsequent messages are AES-encrypted, the system can verify whether a message originated from an authenticated vehicle, mitigating man-in-the-middle attacks. Additionally, timestamps and checksums are embedded in each message to distinguish between newly transmitted data and previously sent packets, thereby protecting against replay attacks. Furthermore, pseudonymized vehicle IDs and location-based encryption are utilized to prevent external tracking of vehicle positions. This approach safeguards driver privacy and significantly reduces the risk of traceable data leakage.
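The timestamp and checksum check against replayed packets can be sketched as follows. The use of SHA-224 as the checksum, the JSON message body, the one-second freshness window, and the names `make_message` and `ReplayGuard` are all assumptions for illustration. An unkeyed checksum only detects corruption; in the described system, authenticity and confidentiality rely on EdDSA and AES, which are omitted here.

```python
import hashlib
import json
import time

MAX_AGE_S = 1.0  # assumed freshness window; not specified in the paper


def make_message(payload: dict, now=None) -> dict:
    """Wrap a payload with a timestamp and a SHA-224 checksum of the body."""
    ts = time.time() if now is None else now
    body = json.dumps({"payload": payload, "timestamp": ts}, sort_keys=True)
    return {"body": body, "checksum": hashlib.sha224(body.encode()).hexdigest()}


class ReplayGuard:
    """Reject corrupted, stale, or previously seen messages."""

    def __init__(self, max_age_s: float = MAX_AGE_S):
        self.max_age_s = max_age_s
        self._seen = set()  # checksums already accepted (unbounded in this sketch)

    def accept(self, message: dict, now=None) -> bool:
        now = time.time() if now is None else now
        body = message["body"]
        if hashlib.sha224(body.encode()).hexdigest() != message["checksum"]:
            return False  # body does not match its checksum
        timestamp = json.loads(body)["timestamp"]
        if abs(now - timestamp) > self.max_age_s:
            return False  # too old (or too far in the future)
        if message["checksum"] in self._seen:
            return False  # exact duplicate of an accepted message
        self._seen.add(message["checksum"])
        return True
```

A production design would bound the set of seen checksums, for instance by discarding entries older than the freshness window.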

4.3. Urban Environment Perception

4.3.1. Traffic Light Perception

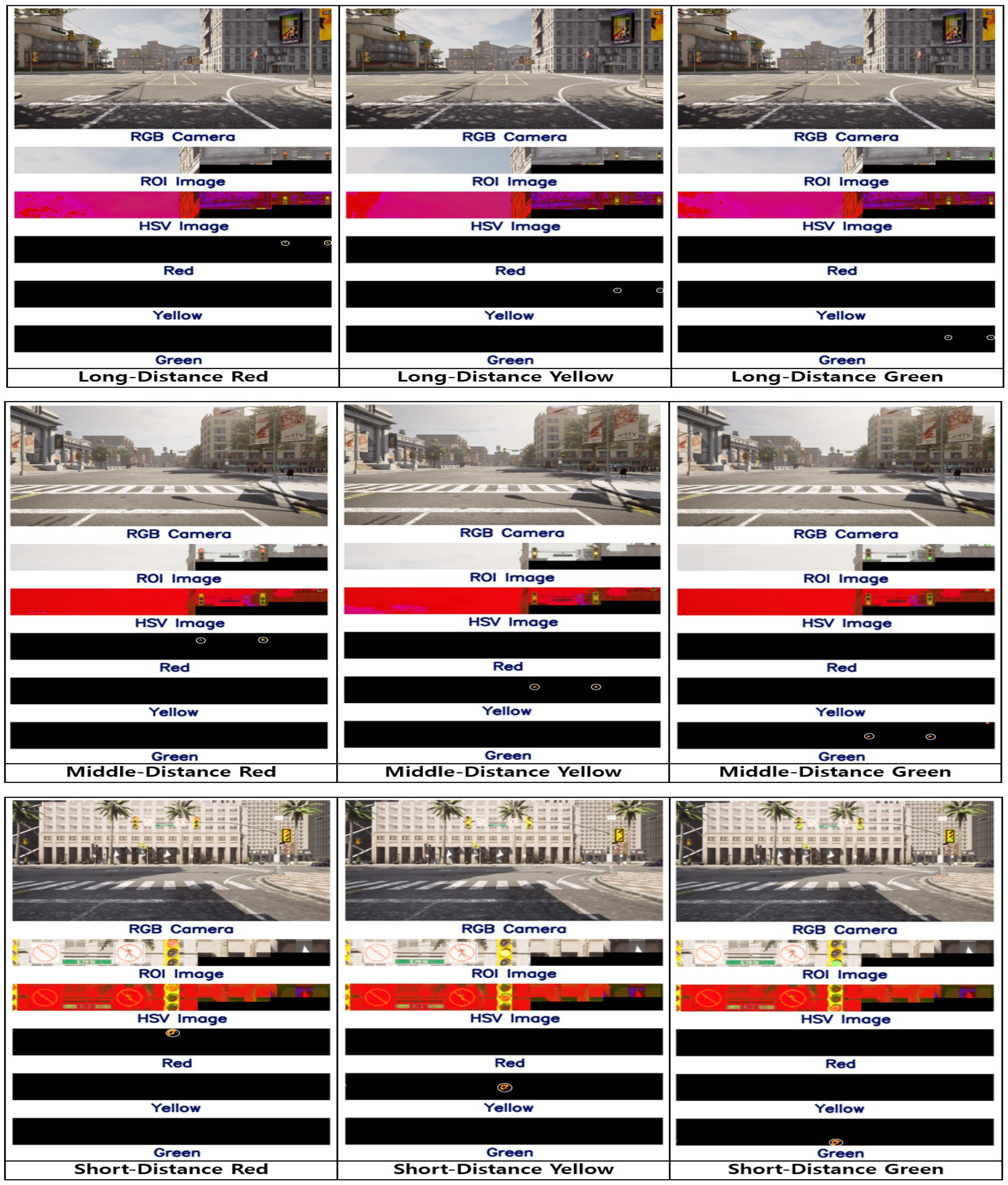

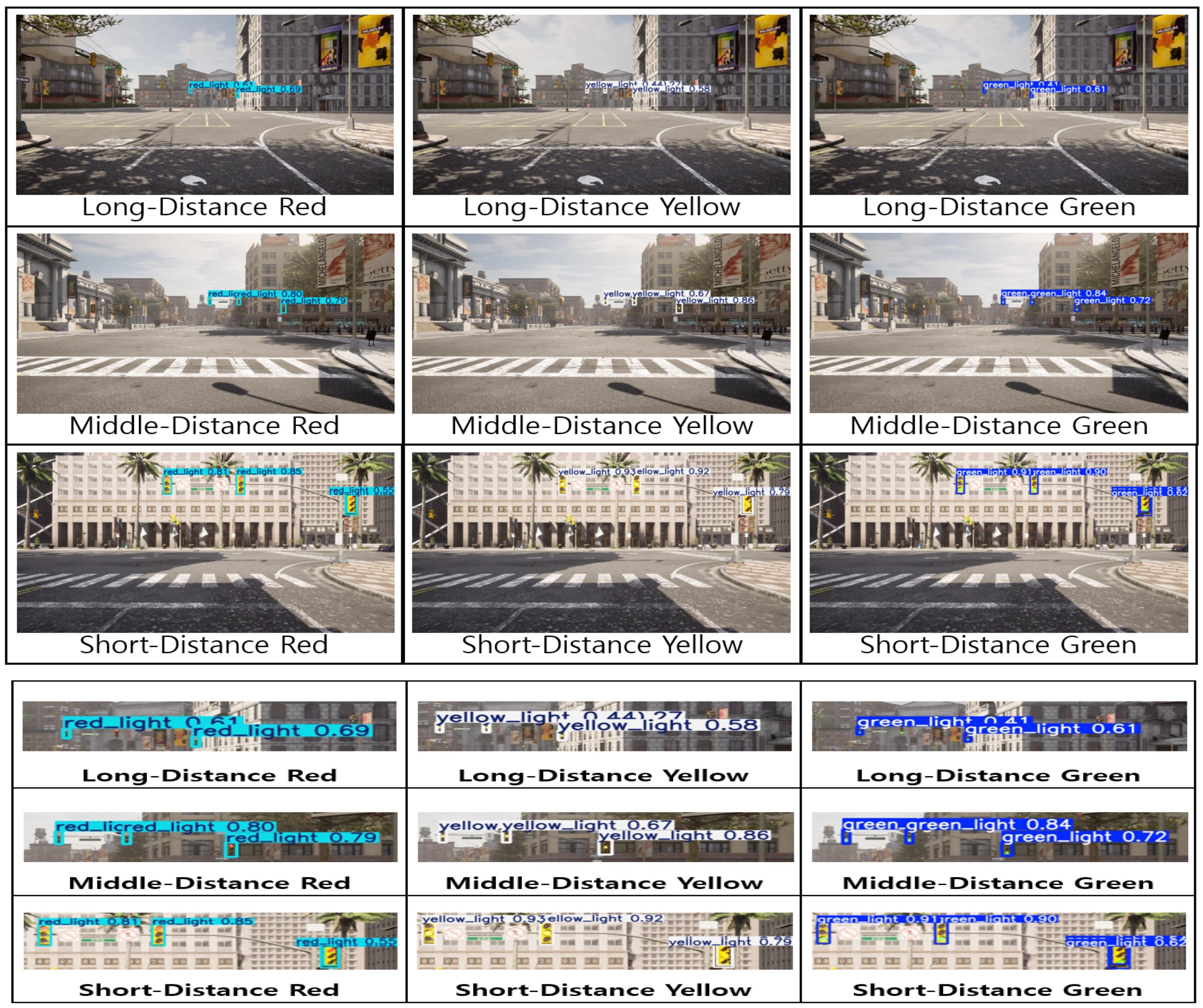

Traffic light perception in urban environments is performed using two methods. In Figures 7 and 8 and Table 4, the HSV value-based perception method is referred to as HSV perception, while the AI-based method, which employs a trained traffic light perception model, is referred to as AI perception. The AI-based model is trained on a balanced dataset of 225 images, with an equal distribution of red, yellow, and green traffic light states. Since the purpose is not to optimize model performance but to establish a baseline for comparison with the HSV-based method, data augmentation is not applied. This design choice is necessary to evaluate the two approaches under controlled experimental conditions. The perception results are compared in terms of accuracy and time efficiency. If no traffic light is detected, the system retains the previous control command to maintain safe autonomous driving in urban environments. Figure 7 shows the results of the HSV-based perception, while Figure 8 presents the AI-based perception results. A quantitative comparison of accuracy and time efficiency is summarized in Table 4.

Figure 7.

Result and process images of HSV perception’s traffic light perception experiment.

Figure 8.

Result images of AI perception’s traffic light perception experiment.

Table 4.

Performance indicators for AI perception and HSV perception.

The AI-based perception method demonstrates relatively lower performance in the experimental evaluation. In contrast, the HSV-based perception method successfully detects all traffic signals after 800 iterations, achieves an accuracy of 99.5833%, and requires only 3.851 ms to recognize a single traffic light. As discussed in Section 3.3.1, real-time performance is prioritized due to the urban driving environment. Given that the HSV-based method is approximately 101.8 times faster, it is adopted for urban environment perception. The high perception accuracy of 99.5833% confirms its reliability. Since it is efficient in both time and accuracy, no explicit accuracy threshold is defined. In most driving situations, no traffic light is in view; these cases are classified as no_signal. When the signal is categorized as no_signal, the vehicle retains its previous control state, allowing it to stop at red lights and proceed through green lights. Through repeated experiments, it is confirmed that HSV-based perception enables reliable urban environment perception and supports safe autonomous driving.
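The no_signal behavior, in which the previous control state is retained, amounts to a small piece of state. In the sketch below, the class name, the initial state of "green", and the rule that only a red signal sets the brake are assumptions; the handling of yellow signals is not specified in this section.

```python
class TrafficLightState:
    """Remember the last detected signal and hold it while nothing is detected."""

    def __init__(self, initial: str = "green"):  # assumed initial state
        self.last_signal = initial

    def update(self, detection: str) -> str:
        # A "no_signal" result leaves the previous state untouched.
        if detection != "no_signal":
            self.last_signal = detection
        return self.last_signal


def brake_for(signal: str) -> float:
    """Brake fully on red; otherwise release (yellow handling is assumed)."""
    return 1.0 if signal == "red" else 0.0


state = TrafficLightState()
detections = ["green", "no_signal", "red", "no_signal", "no_signal", "green"]
brakes = [brake_for(state.update(d)) for d in detections]
```

Holding the last state keeps the vehicle stopped at a red light even when the lamp briefly falls below the pixel threshold.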

4.3.2. Pedestrian Perception

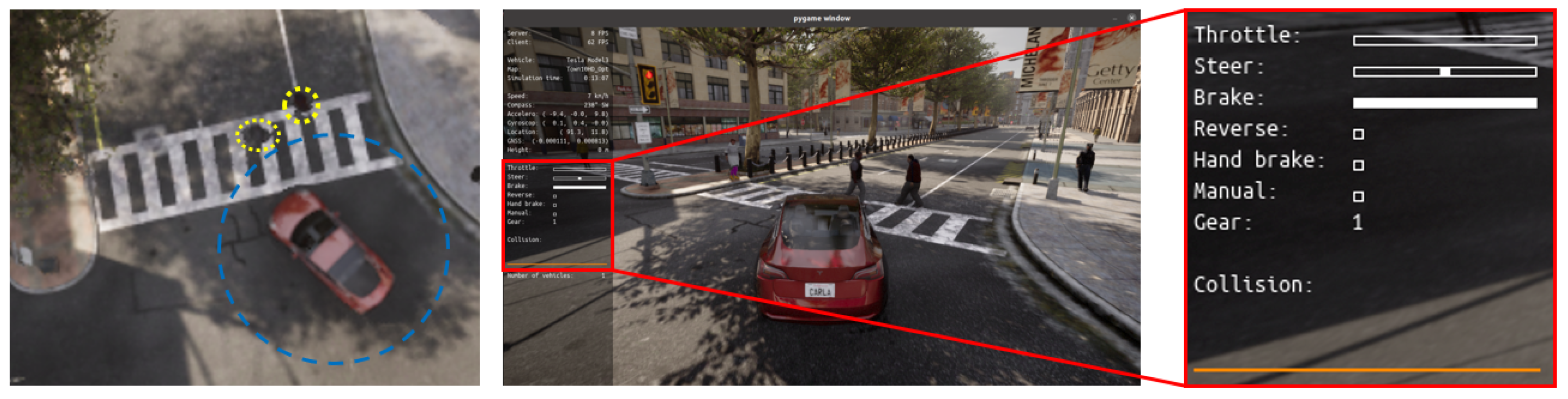

The presence of pedestrians within the maximum distance from the center of the vehicle to the curb is continuously monitored via the CARLA server. As illustrated in Figure 9, the vehicle initiates braking to prevent collisions when a pedestrian is detected. The blue circle indicates the pedestrian detection range of the vehicle, while the yellow circle visualizes the pedestrian’s location. Given the average vehicle speed of 30 km/h, the vehicle stops if a pedestrian is located within 6.5 m of its center. Once the pedestrian moves beyond the safe threshold, the vehicle resumes driving. If the vehicle speed exceeds 40 km/h, the threshold distance is adjusted to 20 m. This behavior is essential for ensuring safety in urban environments. For static obstacles, the vehicle must generate an avoidance path. However, due to the dynamic nature of pedestrian movement, a full stop is required to guarantee safety. With future advancements in V2X networks, it is anticipated that vehicles will not only stop for pedestrians but also dynamically generate alternative routes through areas with lower pedestrian density, thereby improving traffic flow and driver convenience.

Figure 9.

Pedestrian perception in urban environment.

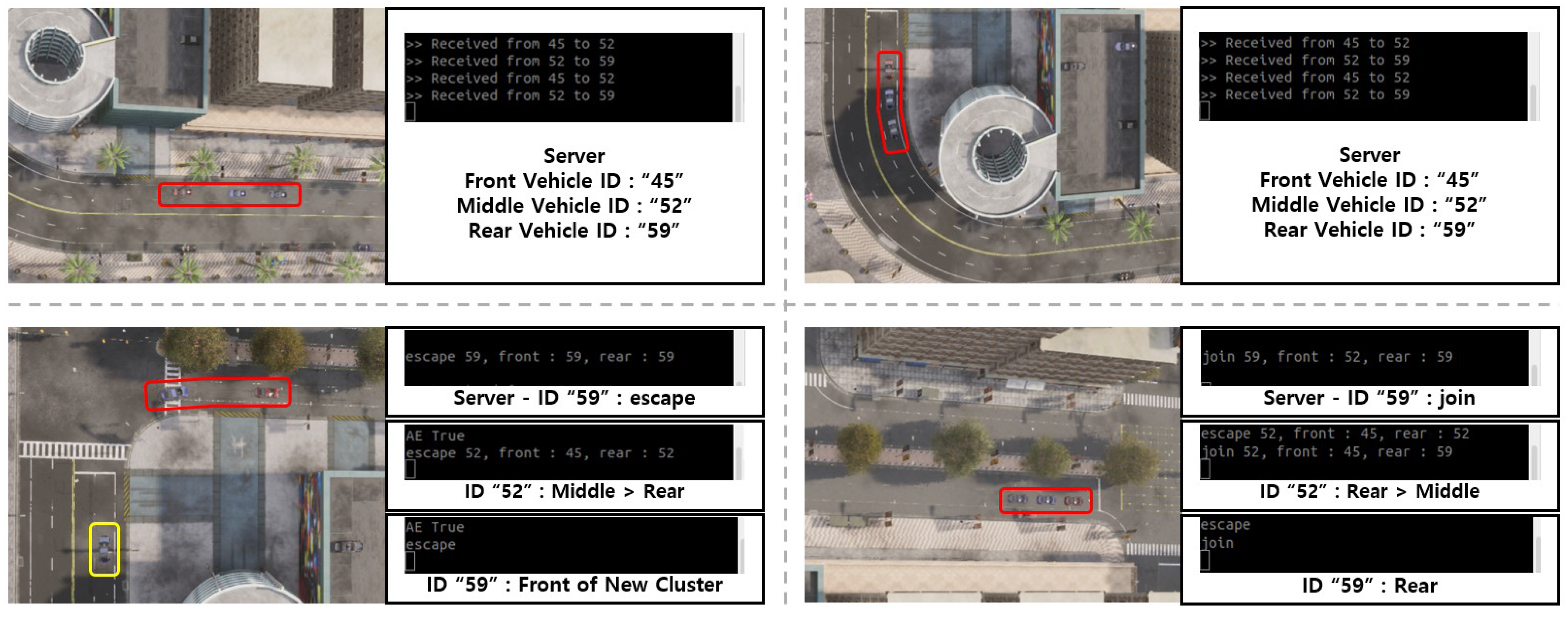

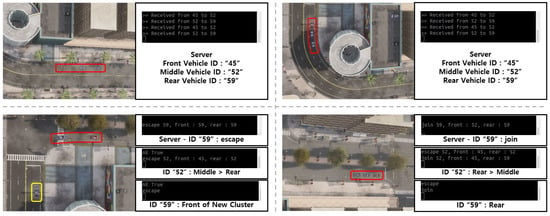

4.4. Cluster Escape and Joining

Figure 10 illustrates the vehicle states during a cluster escape and joining scenario, along with the server’s communication status. The red square indicates the initial cluster, while the yellow square shows a new cluster formed by a vehicle that escaped from the original group. The server facilitates sequential communication between the preceding and latter vehicles. The vehicles involved in this scenario have the vehicle IDs “49”, “52”, and “59”. Cluster escape and joining typically occur when a vehicle stops at an intersection. In this case, the tail vehicle remains at the intersection and subsequently escapes the cluster. The server notifies client 3, with vehicle ID “59”, that it has escaped. The vehicle with ID “59” receives this notification, records the information, and responds to the server. The server then assigns vehicle ID “59” as the leading vehicle of a newly formed cluster, and the vehicle resumes waypoints-based driving. Additionally, the server notifies client 2, with vehicle ID “52”, that its role has changed to the new tail vehicle. Client 2 acknowledges this change and records the update accordingly.

Figure 10.

Experimental results of cluster escape and joining algorithm.

4.5. Integrated System of Cooperative Autonomous Driving

To implement the cooperative autonomous driving system, the experiment is conducted in an integrated environment composed of three independent components: the CARLA server, the V2X server, and the vehicles. Each component is executed in a separate Ubuntu terminal to ensure modularity and process isolation.

4.5.1. CARLA Server

When the CARLA simulator is launched, the CARLA server is initialized to manage various elements such as actors, traffic, and simulation time through the World class. The server continuously monitors the real-time locations of all actors, assuming the use of Global Positioning System (GPS) sensors, and utilizes this information to perform tasks such as accident prevention. To ensure secure communication, the CARLA server exchanges data with the V2X server, receiving information such as vehicle ID, front ID, and rear ID from each vehicle. Based on this information, the server analyzes inter-vehicle relationships and, if the predefined conditions are met, manages them as a single cluster. Upon receiving vehicle data, the server first checks the message type and then executes the corresponding operation. In addition, the cluster and cell information maintained by the V2X server is periodically transmitted to the CARLA server, where it is stored and used for synchronized management.

4.5.2. Vehicles

Vehicles are registered as vehicle objects among the Actor instances in the World class of the CARLA server. To reflect real-world driving conditions, each vehicle undergoes an initialization process via the “Init” message, and the experiment is conducted under the assumption that all vehicles enter a defined cell. Communication between the vehicle and the server is established through the Uu interface, while the PC5 interface is assumed for V2V communication. After completing initialization, each vehicle receives waypoints from the server based on its assigned destination and proceeds accordingly. Safety, particularly accident prevention during driving, is treated as the highest priority. When the CARLA server detects a potential collision with a pedestrian or another vehicle, it issues an emergency signal, and the vehicle immediately sets the brake value to 1 to come to a stop. Similarly, when a red signal is detected during traffic light perception, a control_red signal is generated, and the brake is also set to 1. Each vehicle transmits its x and y coordinates to the server in real time. If the vehicle belongs to a cluster, this positional information is also shared with both the preceding and latter vehicles. A vehicle designated as a latter vehicle receives the throttle, steer, and brake values or the coordinate information from the preceding vehicle and adjusts its movement accordingly. It then transmits its own x and y coordinates to its own latter vehicle via V2V communication, depending on the status value contained in the most recently received message.

The vehicle control process is structured into three stages as illustrated in Algorithm 2. First, to prioritize pedestrian safety, the system determines whether the vehicle should stop based on pedestrian detection. Next, traffic light information is used to control vehicle behavior in accordance with traffic signals. These two steps help prevent potential collisions in advance. In the final stage, the vehicle identifies its position through communication with the server and selects either TSB-based or waypoints-based driving, depending on the presence of a preceding vehicle and the assigned driving mode. The proposed method contributes to both safety and driving efficiency in cooperative autonomous driving within urban environments.

| Algorithm 2 Integrated algorithm for perception and autonomous driving control. |

|
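The three-stage priority described above can be sketched as a single selection function. This is not the content of Algorithm 2; the function name, the return format, and the mode label "TSB" are hypothetical, and only the ordering of the checks and the brake value of 1 follow the text.

```python
def select_control(pedestrian_in_range: bool, signal: str,
                   has_preceding_vehicle: bool, mode: str) -> dict:
    """Pick the control action by priority: pedestrian, traffic light, driving mode."""
    # Stage 1: pedestrian safety overrides everything else.
    if pedestrian_in_range:
        return {"brake": 1.0, "driver": "stop_pedestrian"}
    # Stage 2: obey the traffic signal.
    if signal == "red":
        return {"brake": 1.0, "driver": "stop_red"}
    # Stage 3: follow the preceding vehicle with TSB, or drive by waypoints.
    if has_preceding_vehicle and mode == "TSB":
        return {"brake": 0.0, "driver": "tsb"}
    return {"brake": 0.0, "driver": "waypoints"}
```

Evaluating the safety checks first means that the driving mode is only consulted once no stop condition applies.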

4.5.3. V2X Server

The V2X server is anticipated to be expanded in future work to serve as a central communication server in real-world environments. At the current stage, each CARLA server is assumed to represent a single cell, where all relevant information is collected, recorded, and used for tasks such as ID verification via a connected database. The collected data are stored in JavaScript Object Notation (JSON) format, and specific methods for data storage and processing will be developed in future work.

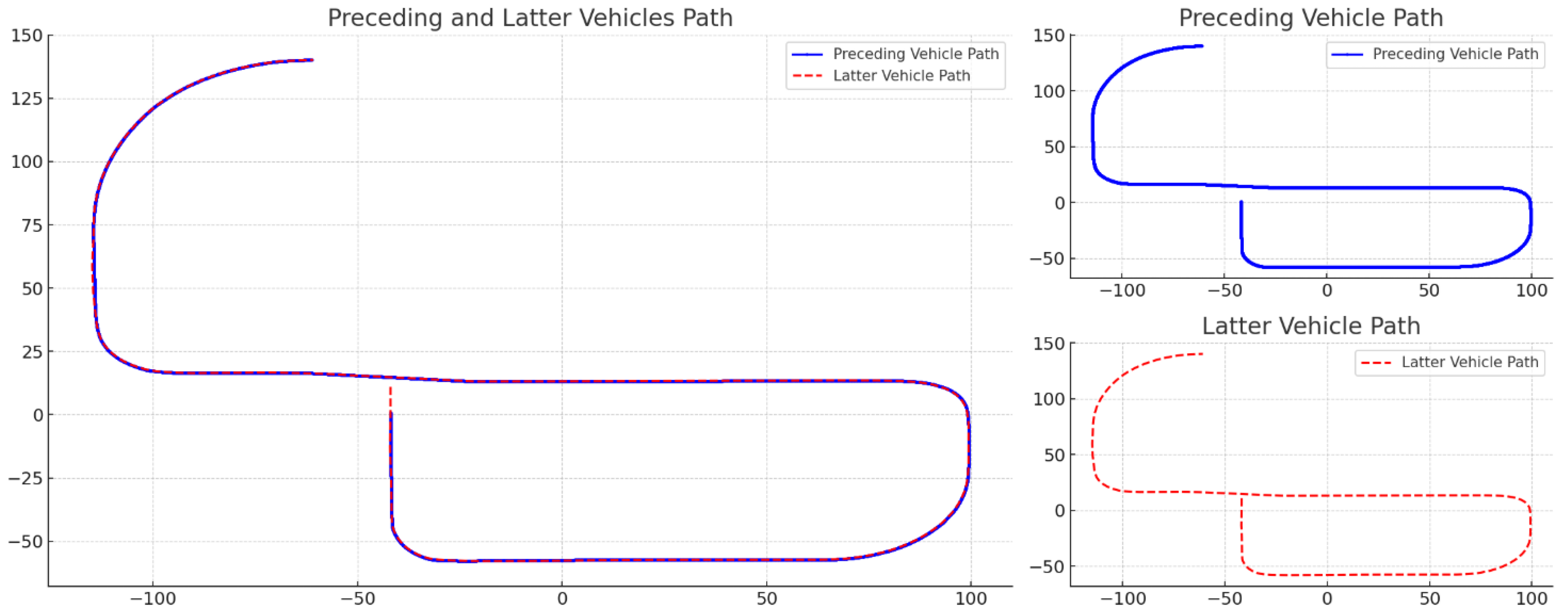

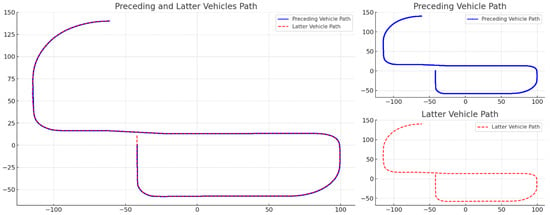

Figure 11 illustrates the cooperative autonomous driving route between the preceding and latter vehicles, as a result of the integrated system proposed in this study. The preceding vehicle follows a waypoints-based driving method, while the latter vehicle alternates between TSB-based and waypoints-based driving. In the region where the x-coordinate is less than –100, the path of the latter vehicle does not exactly align with that of the preceding vehicle. However, since both trajectories remain within the lane boundaries, the situation is considered safe. A detailed analysis reveals a slight delay in the transition between curved and straight driving modes, indicating that the AI model takes some time to switch from curved to straight section control. This issue is attributed to the boundary conditions set by the AI model and does not compromise the safety of the TSB driving approach. As a result, both vehicles successfully demonstrate safe driving behavior, confirming the practical applicability of the proposed system.

Figure 11.

Integrated system result of cooperative autonomous driving.

In this research, both the transmission time of the V2X server and the time required for a vehicle to join or leave a cluster are measured to be within 1 ms. The V2X server’s transmission time is verified 1781 times, yielding an average of 0.152 ms. The time for a vehicle to complete cluster joining or escape is verified 1015 times, with an average time of 0.23 ms. Although the simulation is conducted under ideal conditions without external forces, the proposed system is expected to maintain its safety, accuracy, and efficiency, even when applied in real-world environments where various external influences exist.

5. Conclusions and Future Works

In this paper, cooperative autonomous driving is implemented and verified in an urban environment. A new driving technique, called TSB driving, is proposed to ensure real-time performance in V2X communication by minimizing the computational overhead of the following vehicle in cluster scenarios. Autonomous driving, the V2X network, urban environment perception, and security components are integrated to enable seamless information exchange. Based on this shared information, vehicles are able to perform cooperative autonomous driving safely within the urban environment.

This study verifies whether safe and efficient cooperative autonomous driving is achievable in urban environments by evaluating the computational overhead, speed, and accuracy across each domain. In urban environments, pedestrians, infrastructure, and vehicles are interconnected through a V2X network, enabling real-time communication and coordination across multiple domains. This real-time interoperability is a key factor for realizing cooperative autonomous driving in urban environments. By integrating multiple domains, including autonomous driving, V2X communication, urban environment perception, and security, the proposed system demonstrates improvements in accuracy and time efficiency compared to related studies. Furthermore, a new driving method, TSB driving, is compared with conventional waypoints-based driving to validate its practicality. The adoption of TSB driving contributes to research and development by reducing hardware dependency and enhancing system efficiency.

In future work, the proposed functionality will be expanded by implementing cooperative autonomous driving verified in the CARLA simulator on real roads. For example, by utilizing experimental testbed vehicles, the system can generate traffic information and obtain real-time location data of target vehicles through communication with various RSUs. In addition, autonomous vehicle safety can be further enhanced by diversifying driving routes. Rather than performing complete stops, avoidance routes can be dynamically generated by refining pedestrian detection. The braking distance is adjusted based on the vehicle’s speed, and the pedestrian detection range is modified accordingly. Since pedestrians may occasionally be detected outside of the road area, the communication region is planned to be restricted to valid road boundaries using GPS information. As this study is conducted in a simulated environment, no communication errors have occurred between V2X devices. However, future research will incorporate scenarios involving network latency and connection loss to enhance the system’s robustness for real-world deployment.

From a technical perspective, various studies are planned to optimize autonomous driving control and enhance safety and real-time performance within the V2X network. Although TSB driving offers advantages in real-time responsiveness, it remains limited in terms of safety. To address this, scenarios will be further subdivided, or corrective parameters will be applied to ensure that TSB-based driving follows a trajectory as closely as possible to that of waypoints-based driving. Furthermore, the experimental environment is planned to be extended to include multiple CARLA server cells, which conceptually represent base stations deployed in different regions of a real-world network. The findings of this study are expected to provide a foundational framework for the development of connected vehicles and smart cities by enabling direct application in real-world scenarios.

In conclusion, this paper introduces TSB driving to reduce the computational overhead on vehicles and proposes a cooperative autonomous driving system that integrates urban environment perception and information exchange via the V2X network. A method for enhancing reliability with consideration for network security is also presented, and its applicability is demonstrated through experiments conducted in the CARLA simulator, which closely replicates urban environments. Future work aims to contribute to the advancement of connected vehicles and smart cities by conducting experiments in real-world environments, deploying multiple RSUs, and further expanding and refining the scope of information exchange.

Author Contributions

Conceptualization, M.Y. and D.S.; methodology, M.Y., D.S. and S.K.; software, M.Y. and D.S.; validation, M.Y., D.S. and S.K.; writing—original draft preparation, M.Y., D.S. and S.K.; writing—review and editing, M.Y. and D.S.; visualization, M.Y. and D.S.; supervision, K.K.; project administration, K.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

https://github.com/ggaebi99/Cooperative-autonomous-driving-in-CARLA-Simulator (accessed on 9 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| V2X | Vehicle-to-Everything |
| TSB | Throttle–Steer–Brake |
| AES | Advanced Encryption Standard |
| EdDSA | Edwards-curve Digital Signature Algorithm |
| PID | Proportional–Integral–Derivative |
| AI | Artificial Intelligence |
| HSV | Hue Saturation Value |
| MEC | Multi-access Edge Computing |
| V2V | Vehicle-to-Vehicle |
| V2I | Vehicle-to-Infrastructure |
| VANETs | Vehicular Ad hoc Networks |
| ICN | Information-Centric Networking |
| IP | Internet Protocol |
| MPC | Model Predictive Controller |
| V2P | Vehicle-to-Pedestrian |
| V2N | Vehicle-to-Network |
| DSRC | Dedicated Short-Range Communication |
| LTE | Long-Term Evolution |
| 5G | 5th Generation |
| RA | Resource Allocation |
| C-V2X | Cellular Vehicle-to-Everything |
| QoS | Quality of Service |
| 5G-NR | 5th Generation New Radio |
| 3GPP | 3rd Generation Partnership Project |
| ITS | Intelligent Transport Systems |
| HARQ | Hybrid Automatic Repeat Request |
| PSFCH | Physical Sidelink Feedback Channel |
| RSU | Road Side Unit |
| IEEE | Institute of Electrical and Electronics Engineers |
| ADAS | Advanced Driver Assistance System |
| YOLOv8 | You Only Look Once version 8 |
| CNN | Convolutional Neural Network |
| MAML | Model-Agnostic Meta-Learning |
| FPS | Frames Per Second |
| OpenCV | Open Source Computer Vision Library |
| RNN | Recurrent Neural Network |
| PC5 | The direct communication method between vehicles and other devices |
| Uu | The network-based communication method between vehicles and the infrastructure via the cellular base station |
| TCP | Transmission Control Protocol |
| SHA-224 | Secure Hash Algorithm-224 |
| RGB | Red–Green–Blue |
| ROI | Region of Interest |
| LiDAR | Light Detection and Ranging |
| GPU | Graphics Processing Unit |
| GB | Gigabytes |
| RSA | Rivest–Shamir–Adleman |
| DoS | Denial-of-Service |
| GPS | Global Positioning System |
| JSON | JavaScript Object Notation |

References

- Mande, S.; Ramachandran, N. A Comprehensive Survey on Challenges and Issues in V2X and V2V Communication in 6G Future Generation Communication Models. Ing. Des Syst. D’Information 2024, 6, 951–960.

- IRF World Road Statistics. Total Road Network (by Road Type)—United States. Available online: http://18.134.46.19/dashboard/checksumgraph (accessed on 16 April 2025).

- Louati, A.; Louati, H.; Kariri, E.; Neifar, W.; Hassan, M.K.; Khairi, M.H.H.; Farahat, M.A.; El-Hoseny, H.M. Sustainable Smart Cities through Multi-Agent Reinforcement Learning-Based Cooperative Autonomous Vehicles. Sustainability 2024, 2, 1779.

- Jain, H.; Babel, P. A Comprehensive Survey of PID and Pure Pursuit Control Algorithms for Autonomous Vehicle Navigation. arXiv 2024, arXiv:2409.09848.

- Hema, D.; Kannan, D.S. Interactive color image segmentation using HSV color space. Sci. Technol. 2020, 6, 37–41.

- Muslam, M.M.A. Enhancing security in vehicle-to-vehicle communication: A comprehensive review of protocols and techniques. Vehicles 2024, 2, 450–467.

- Malik, S.; Khan, M.A.; El-Sayed, H.; Khan, M.J. Should Autonomous Vehicles Collaborate in a Complex Urban Environment or Not? Smart Cities 2023, 7, 2447–2483.

- Quadri, C.; Mancuso, V.; Ajmone Marsan, M.; Rossi, G.P. Platooning on the edge. In Proceedings of the 23rd International ACM Conference on Modeling, Analysis and Simulation of Wireless and Mobile Systems, Alicante, Spain, 16–20 November 2020; Volume 11, pp. 1–10.

- Broggi, A.; Bertozzi, M.; Fascioli, A.; Bianco, C.G.L.; Piazzi, A. Visual perception of obstacles and vehicles for platooning. IEEE Trans. Intell. Transp. Syst. 2000, 9, 164–176.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 3, 58443–58469.

- Kong, J.; Pfeiffer, M.; Schildbach, G.; Borrelli, F. Kinematic and dynamic vehicle models for autonomous driving control design. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 28 June–1 July 2015; Volume 8, pp. 1094–1099.

- Naranjo, J.E.; González, C.; García, R.; de Pedro, T.; Haber, R.E. Power-steering control architecture for automatic driving. IEEE Trans. Intell. Transp. Syst. 2005, 12, 406–415.

- Wang, J.; Shao, Y.; Ge, Y.; Yu, R. A survey of vehicle to everything (V2X) testing. Sensors 2019, 1, 334.

- Sehla, K.; Nguyen, T.M.T.; Pujolle, G.; Velloso, P.B. Resource Allocation Modes in C-V2X: From LTE-V2X to 5G-V2X. IEEE Internet Things J. 2022, 3, 8291–8314.

- Garcia, M.H.C.; Molina-Galan, A.; Boban, M.; Gozalvez, J.; Coll-Perales, B.; Şahin, T.; Kousaridas, A. A Tutorial on 5G NR V2X Communications. IEEE Commun. Surv. Tutor. 2021, 2, 1972–2026.

- Mannoni, V.; Berg, V.; Sesia, S.; Perraud, E. A comparison of the V2X communication systems: ITS-G5 and C-V2X. In Proceedings of the 2019 IEEE 89th Vehicular Technology Conference (VTC2019-Spring), Kuala Lumpur, Malaysia, 28 April–1 May 2019; Volume 4, pp. 1–5.

- CARLA Unreal Engine 4 Documentation. Available online: https://carla.readthedocs.io/en/latest (accessed on 3 August 2021).

- IEEE Standard for Information Technology—Local and Metropolitan Area Networks—Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications Amendment 6: Wireless Access in Vehicular Environments. Available online: https://standards.ieee.org/ieee/802.11p/3953/ (accessed on 17 June 2010).

- IEEE Standard for Information Technology–Telecommunications and Information Exchange between Systems—Local and Metropolitan Area Networks–Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications Amendment: Enhancements for Ambient Power Communication (AMP). Available online: https://standards.ieee.org/ieee/802.11bp/11592/ (accessed on 21 March 2024).

- Tammisetti, V.; Stettinger, G.; Cuellar, M.P.; Molina-Solana, M. Meta-YOLOv8: Meta-Learning-Enhanced YOLOv8 for Precise Traffic Light Color Detection in ADAS. Electronics 2025, 14, 468.

- Yao, Z.; Liu, Q.; Fu, J.; Xie, Q.; Li, B.; Ye, Q.; Li, Q. A Coarse-to-Fine Deep Learning Based Framework for Traffic Light Recognition. IEEE Trans. Intell. Transp. Syst. 2024, 4, 13887–13899.

- Huang, Y.; Tian, Z.; Jiang, Q.; Xu, J. Path tracking based on improved pure pursuit model and PID. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology, Weihai, China, 14–16 October 2020; Volume 10, pp. 359–364.

- Coulter, R.C. Implementation of the Pure Pursuit Path Tracking Algorithm; Carnegie Mellon University: Pittsburgh, PA, USA, 1992; Volume 1, pp. 1–15.

- Ang, K.H.; Chong, G.; Li, Y. PID control system analysis, design, and technology. IEEE Trans. Control Syst. Technol. 2005, 7, 559–576.

- Khalid, I.; Maglogiannis, V.; Naudts, D.; Shahid, A.; Moerman, I. Optimizing hybrid V2X communication: An intelligent technology selection algorithm using 5G, C-V2X PC5 and DSRC. Future Internet 2024, 3, 107.

- Hur, D.; Lee, D.; Oh, J.; Won, D.; Song, C.; Cho, S. Survey on challenges and solutions of C-V2X: LTE-V2X communication technology. In Proceedings of the 2023 Fourteenth International Conference on Ubiquitous and Future Networks (ICUFN), Paris, France, 4–7 July 2023; Volume 7, pp. 639–641.

- Sutradhar, K.; Pillai, B.G.; Amin, R.; Narayan, D.L. A survey on privacy-preserving authentication protocols for secure vehicular communication. Comput. Commun. 2024, 3, 1–18.

- Bazzi, A.; Berthet, A.O.; Campolo, C.; Masini, B.M.; Molinaro, A.; Zanella, A. On the design of sidelink for cellular V2X: A literature review and outlook for future. IEEE Access 2021, 7, 97953–97980.

- Verstraete, T.; Muhammad, N. Pedestrian collision avoidance in autonomous vehicles: A review. Computers 2024, 3, 78.

- YOLO12: Attention-Centric Object Detection. Available online: https://docs.ultralytics.com/models/yolo12 (accessed on 22 February 2025).

- Merdrignac, P.; Shagdar, O.; Nashashibi, F. Fusion of perception and V2P communication systems for the safety of vulnerable road users. IEEE Trans. Intell. Transp. Syst. 2016, 12, 1740–1751.

- Fei, R.; Ma, M.; Hei, X.; Yang, L.; Li, A.; Qiu, Y. A new safety distance model based on braking process considering adhesion coefficient. Electronics 2024, 1, 421.

- Kasi, K.; Karuppanan, G. Framework to Identify Vehicle Platoons under Heterogeneous Traffic Conditions on Urban Roads. Sustainability 2024, 1, 78.

- Segata, M.; Bloessl, B.; Joerer, S.; Dressler, F.; Cigno, R.L. Supporting platooning maneuvers through IVC: An initial protocol analysis for the JOIN maneuver. In Proceedings of the 2014 11th Annual Conference on Wireless On-Demand Network Systems and Services (WONS), Obergurgl, Austria, 2–4 April 2014; Volume 4, pp. 130–137.

- Tian, D.; Wang, Y.; Lu, G.; Yu, G. A VANETs routing algorithm based on Euclidean distance clustering. In Proceedings of the 2010 2nd International Conference on Future Computer and Communication, Wuhan, China, 21–24 May 2010; Volume 5, pp. 1–183.

- Nagy, R.; Török, Á.; Pethő, Z. Evaluating V2X-Based Vehicle Control under Unreliable Network Conditions, Focusing on Safety Risk. Appl. Sci. 2024, 6, 5661.

- IEEE Standard for Wireless Access in Vehicular Environments–Security Services for Application and Management Messages. Available online: https://standards.ieee.org/ieee/1609.2/10258/ (accessed on 3 December 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).