Laser-Powered Harvesting Tool for Tabletop Grown Strawberries

Abstract

1. Introduction

- Productive: with average cycle time of 8 s at 50% of maximum robot velocity. As the laser cutting time is averaging s, it is possible to achieve a manipulation time of s at 100% robot velocity and in turn a total cycle time of s.

- Small footprint: a slim tool with an effective interaction width of 35 mm, which greatly improves fruit reachability.

- Robust: precise stem entrapment tolerating strawberry localization error of up to mm, in addition to the near maintenance-free laser cutting.
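The productivity claim above rests on simple arithmetic: the cycle time is split into a manipulation part, which shrinks as the robot moves faster, and a laser cutting part, which does not. The sketch below illustrates that reasoning under two assumptions that are not confirmed by the text: manipulation time scales inversely with the velocity setting, and cutting time is independent of it. The function name and the cutting time used in the example are hypothetical placeholders, not values reported by the authors.

```python
def estimate_cycle_time(cycle_time_ref, cut_time,
                        ref_velocity_frac=0.5, target_velocity_frac=1.0):
    """Estimate the total cycle time at a different robot velocity setting.

    Assumptions (not taken from the paper):
      * cycle time = manipulation time + laser cutting time
      * manipulation time scales inversely with the velocity fraction
      * laser cutting time does not depend on robot velocity
    All times are in seconds; velocity fractions lie in (0, 1].
    """
    if not (0.0 < ref_velocity_frac <= 1.0 and 0.0 < target_velocity_frac <= 1.0):
        raise ValueError("velocity fractions must lie in (0, 1]")
    if not (0.0 <= cut_time <= cycle_time_ref):
        raise ValueError("cut_time must lie between 0 and cycle_time_ref")

    # Time spent moving the arm at the reference velocity setting.
    manipulation_ref = cycle_time_ref - cut_time
    # Rescale the motion time to the target velocity setting.
    manipulation_target = manipulation_ref * ref_velocity_frac / target_velocity_frac
    return manipulation_target + cut_time


# Example with a placeholder cutting time of 2 s (hypothetical value):
# 8 s measured at 50% velocity gives 6 s of motion, i.e. 3 s at 100% velocity.
print(estimate_cycle_time(8.0, 2.0))
```

The estimate is only as good as the inverse-scaling assumption; acceleration limits and settling time would make the real gain smaller.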

2. Materials and Methods

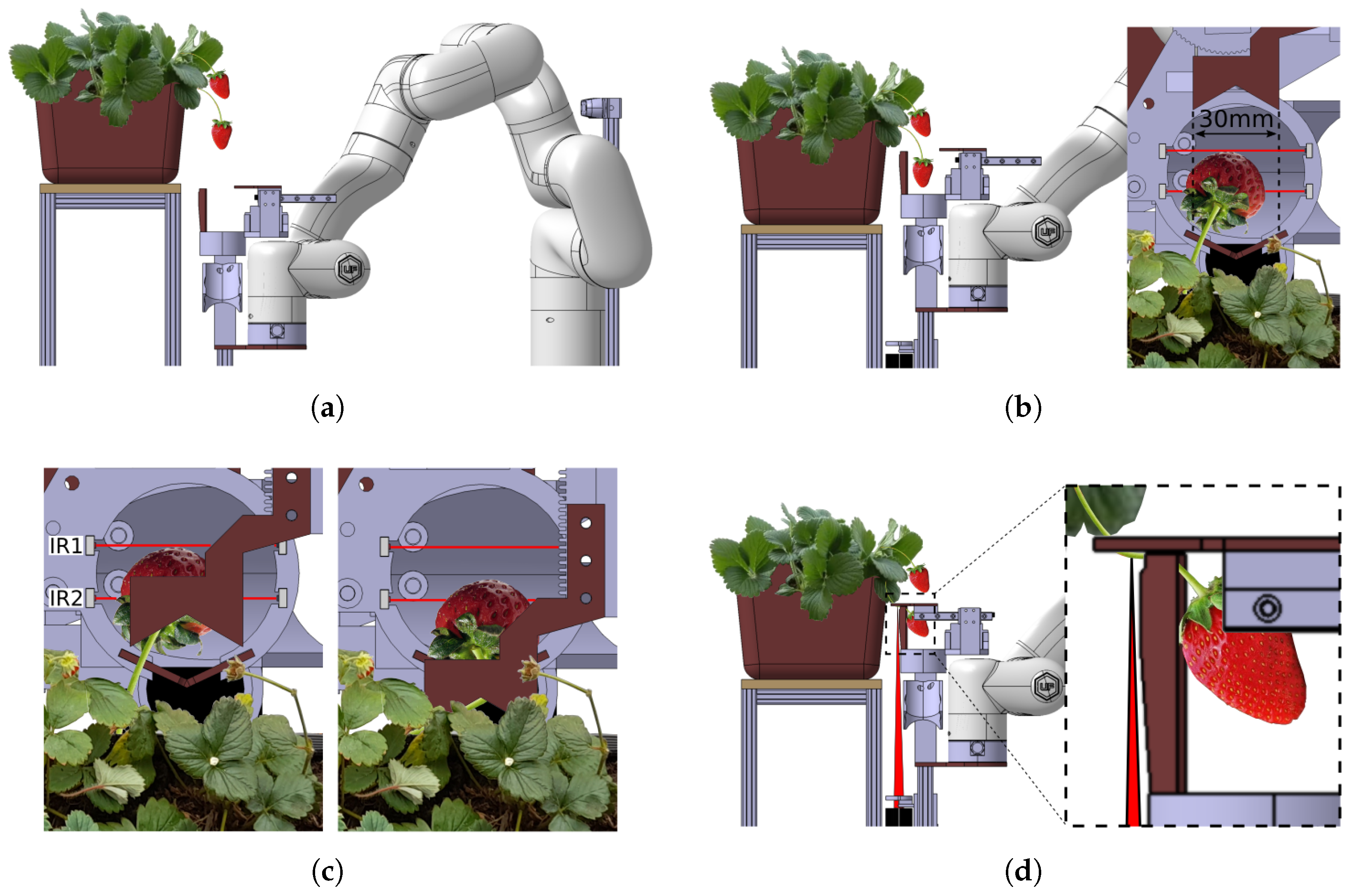

2.1. Minimal Footprint Harvesting Tool

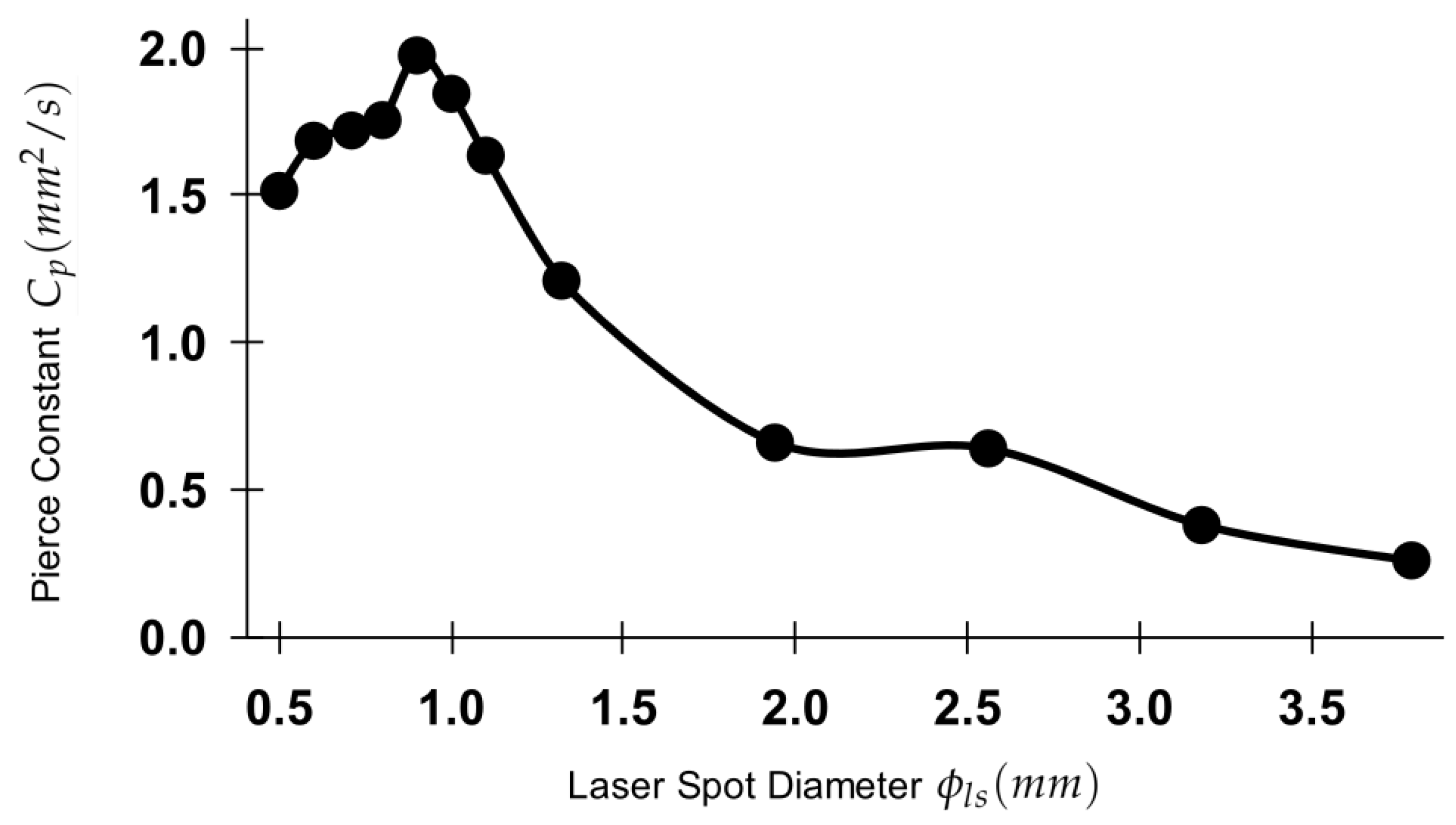

2.2. Laser Spot Dynamics

3. Results

3.1. Optimal Laser Spot Diameter

3.2. Strawberry Localization

Algorithm 1 Strawberry localization algorithm.

Input: colored scene point cloud from RGB-D CAM1, colored scene point cloud from RGB-D CAM2.

Output: vectors defining the bounding box for localized strawberries in arm base frame.
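Only the input and output of the localization algorithm are stated here, so the following is a minimal sketch of one way such a pipeline could be organised, not the authors' method. It assumes each camera has a known 4x4 homogeneous transform into the arm base frame, uses a crude RGB threshold to select ripe fruit points, and returns a single axis-aligned bounding box (it does not separate individual berries). The function names, threshold values, and transforms are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# One coloured point: x, y, z in metres, followed by r, g, b in 0..255.
ColoredPoint = Tuple[float, float, float, int, int, int]
Vec3 = Tuple[float, float, float]


def to_base_frame(points: Sequence[ColoredPoint],
                  T: Sequence[Sequence[float]]) -> List[ColoredPoint]:
    """Apply a 4x4 homogeneous transform (camera -> arm base) to each point."""
    out = []
    for x, y, z, r, g, b in points:
        xb = T[0][0] * x + T[0][1] * y + T[0][2] * z + T[0][3]
        yb = T[1][0] * x + T[1][1] * y + T[1][2] * z + T[1][3]
        zb = T[2][0] * x + T[2][1] * y + T[2][2] * z + T[2][3]
        out.append((xb, yb, zb, r, g, b))
    return out


def looks_ripe(r: int, g: int, b: int) -> bool:
    """Crude red-colour test; the thresholds are illustrative assumptions."""
    return r > 150 and g < 100 and b < 100


def localize_red_region(cloud1: Sequence[ColoredPoint], T1,
                        cloud2: Sequence[ColoredPoint], T2
                        ) -> Optional[Tuple[Vec3, Vec3]]:
    """Merge both clouds in the arm base frame and bound the red points.

    Returns (min_corner, max_corner) of an axis-aligned box, or None when
    no point passes the colour test.
    """
    merged = to_base_frame(cloud1, T1) + to_base_frame(cloud2, T2)
    red = [(x, y, z) for x, y, z, r, g, b in merged if looks_ripe(r, g, b)]
    if not red:
        return None
    min_corner = tuple(min(p[i] for p in red) for i in range(3))
    max_corner = tuple(max(p[i] for p in red) for i in range(3))
    return min_corner, max_corner
```

A fuller implementation would cluster the red points (for example, by Euclidean distance) and emit one bounding box per cluster, matching the plural "localized strawberries" in the stated output.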

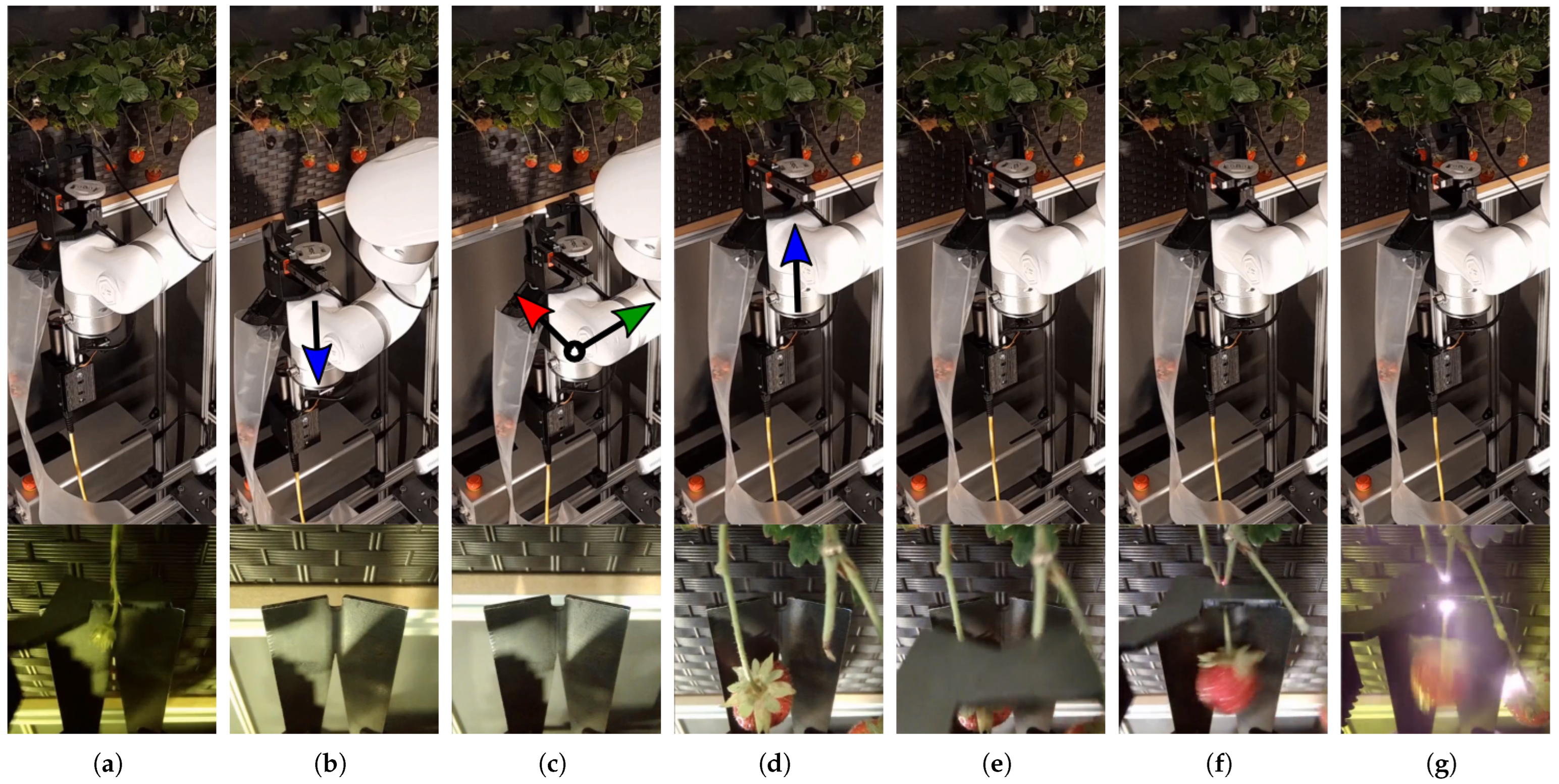

3.3. Harvesting Motion

Algorithm 2 Harvesting algorithm.

Input: .

Output: desired tool tip position in arm base frame.

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| (mm) | (mm) | (s) | (mm/s) | (mm²/s) |
|---|---|---|---|---|

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| 55 1 | 100 | | |
| 25 1 | 70 | | |
| 30 1 | 70 | | |
| 1 | t | 1 | |
| 50 1 | 20 | | |
| 30 1 | 1000 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sorour, M.; From, P. Laser-Powered Harvesting Tool for Tabletop Grown Strawberries. Electronics 2025, 14, 1708. https://doi.org/10.3390/electronics14091708
