Asynchronized Jacobi Solver on Heterogeneous Mobile Devices

Abstract

1. Introduction

- A complete paradigm for a heterogeneous Jacobi solver on a mobile MPSoC, with schemes such as data sharing, load balancing, and asynchronous design that fully exploit the MPSoC's processors while reducing data-sharing overhead.

- A comprehensive evaluation and discussion of the solver on real applications, covering aspects such as time cost, processor utilization, and energy consumption, to inform the future design of other numerical solvers.

2. Related Work

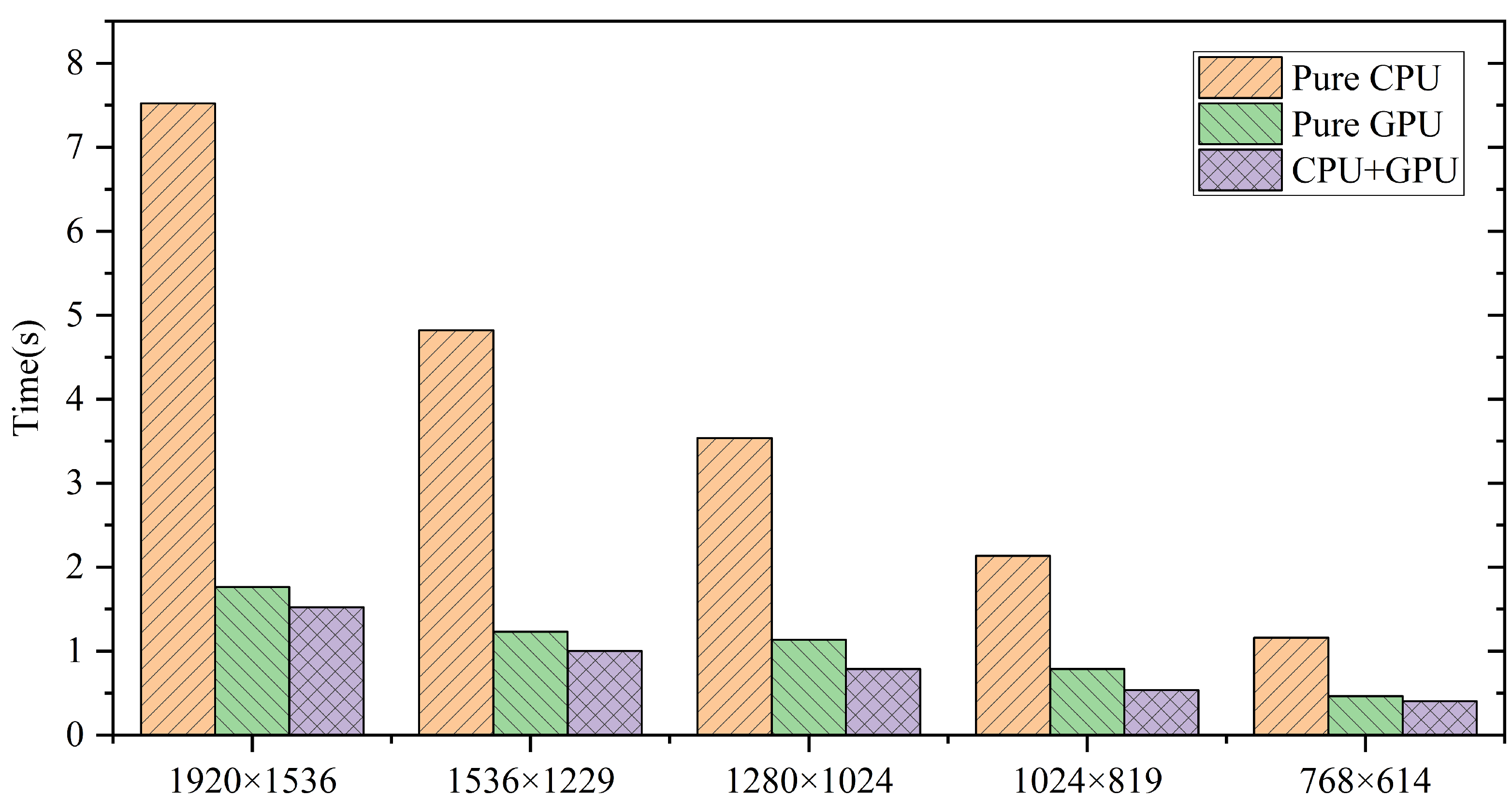

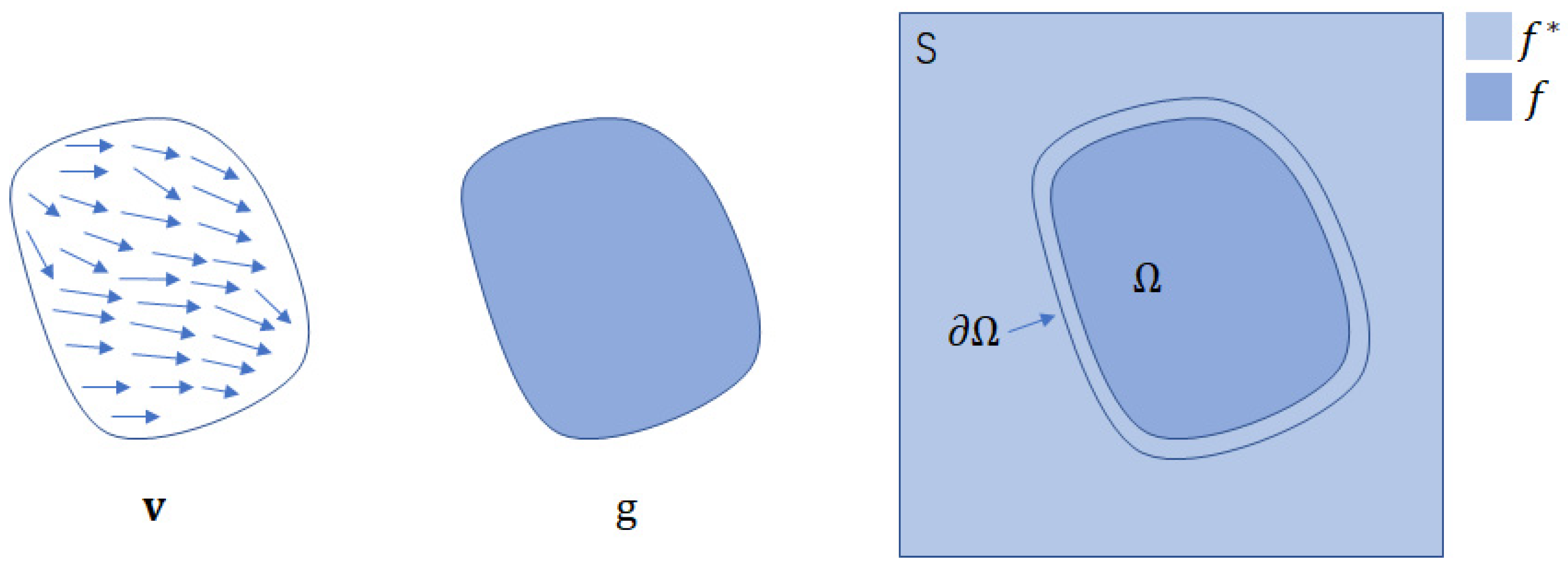

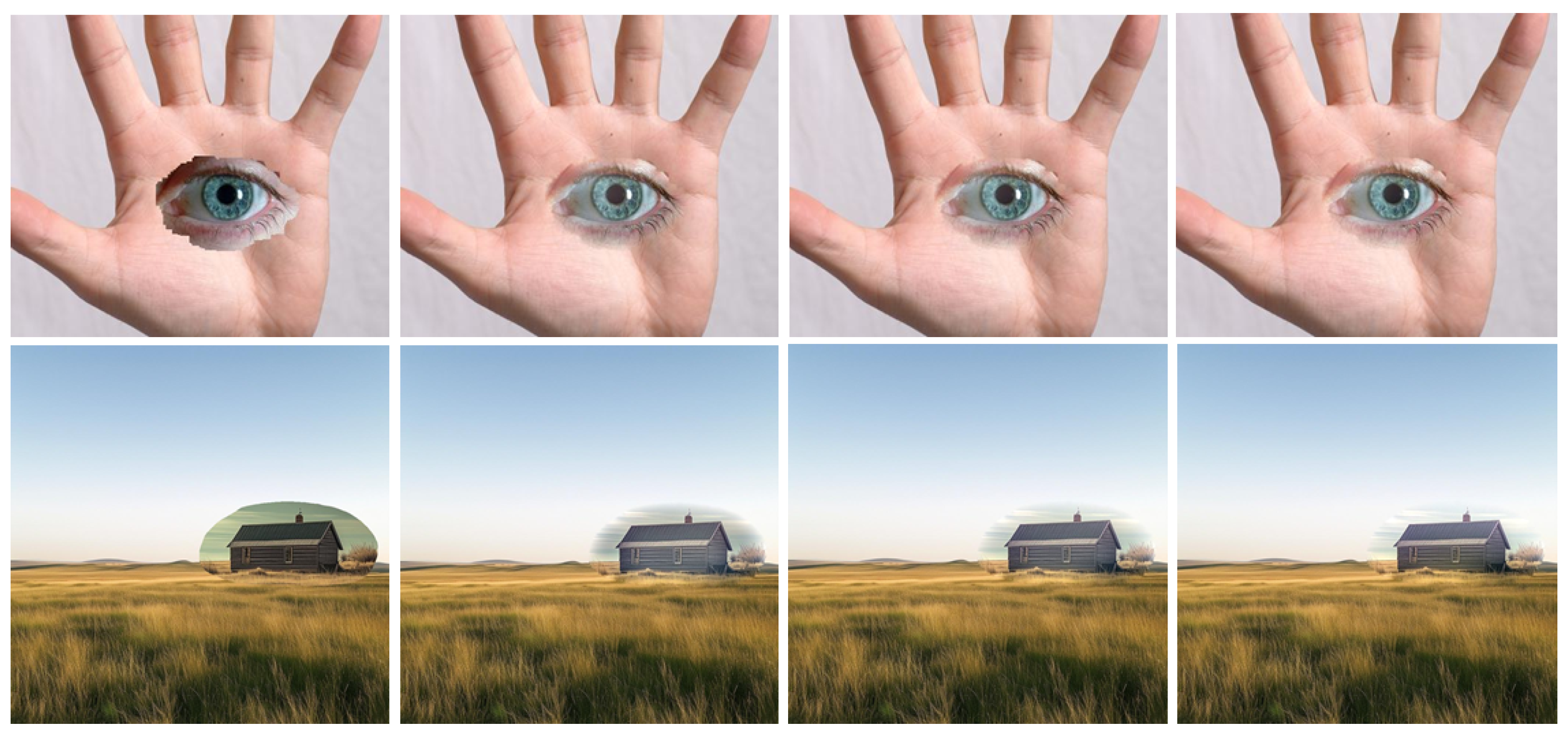

2.1. Numerical Solver for CG

2.2. Mobile Graphics

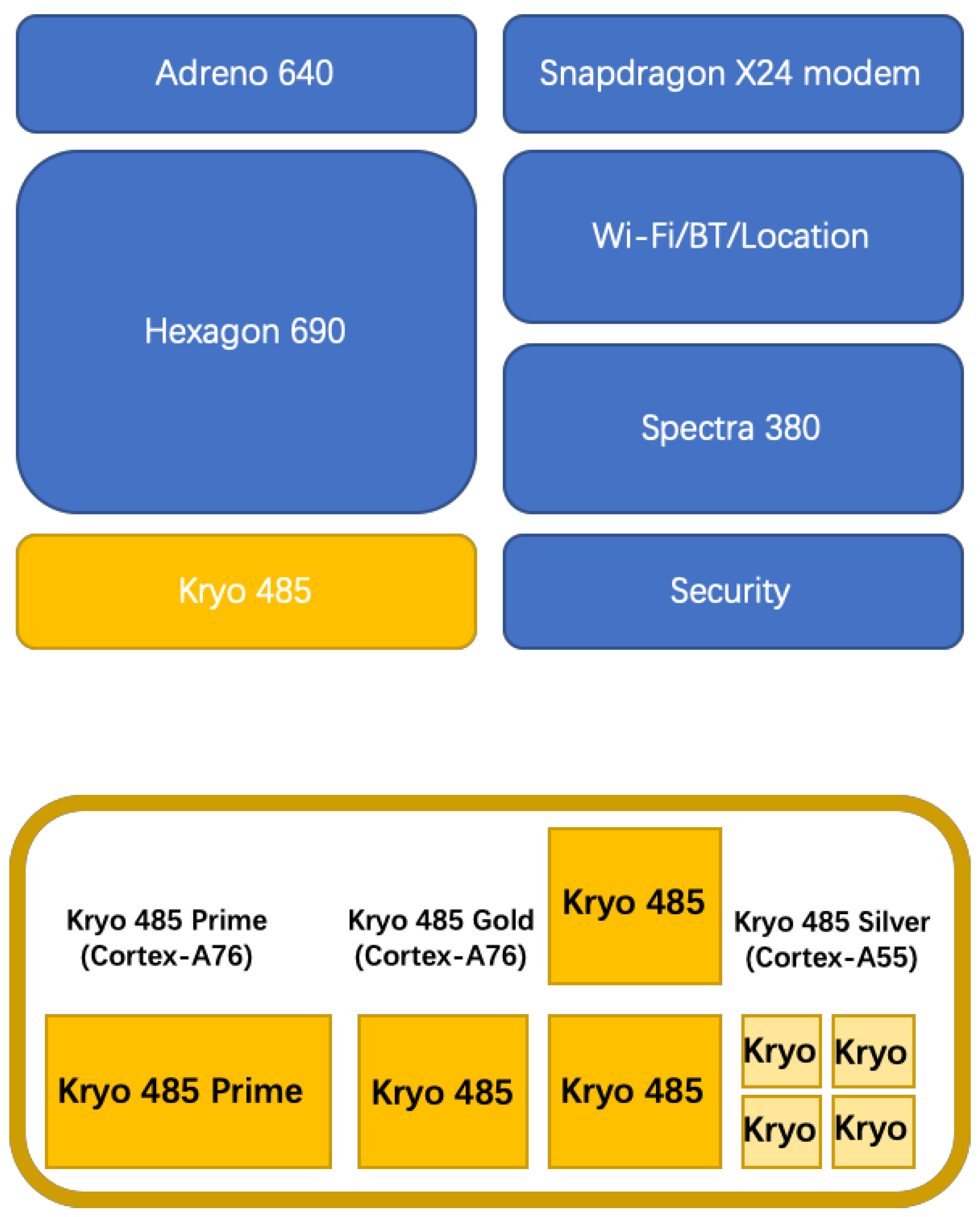

3. Background

- Global memory permits read/write access to all work-items in all work-groups running on any device within a context.

- Constant memory is a region of global memory which remains constant during the execution of a kernel instance.

- Local memory is local to the work-group and can be used to allocate variables that are shared by all work-items in that work-group.

- Private memory is private to a work-item, where variables defined are not visible to another work-item.

- Buffer is stored as a block of contiguous memory and used as a general-purpose object to hold data.

- Image holds one-, two- or three-dimensional images.

- Pipe is an ordered sequence of data items with a write endpoint where data items are inserted and a read endpoint where data items are removed.

- Read/Write/Fill commands explicitly read and write data associated with a memory object between the host and global memory regions.

- Map/Unmap commands map data from the memory object into a contiguous block of memory, which is accessed through a host-accessible pointer.

- Copy commands copy the data associated with a memory object between two buffers.

- Shared virtual memory provides a way for transparent data migration between a host and devices by extending the global memory region into the host memory region.
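The practical difference between explicit Read/Write transfers and Map/Unmap access can be illustrated without an OpenCL runtime. The sketch below is only a host-side analogy in plain Python: a `bytearray` stands in for a memory object, and `read_buffer`, `write_buffer`, and `map_buffer` are hypothetical helper names, not OpenCL API calls. Whether a real mapping avoids a copy depends on the OpenCL implementation and on how the memory object was allocated.

```python
# Host-side analogy only: a bytearray stands in for an OpenCL memory object.
device_buffer = bytearray(8)

def read_buffer(buf):
    """Explicit read: hand back an independent host-side copy."""
    return bytes(buf)

def write_buffer(buf, data, offset=0):
    """Explicit write: copy host data into the buffer."""
    buf[offset:offset + len(data)] = data

def map_buffer(buf):
    """Map: expose the buffer's own storage through a host-accessible view."""
    return memoryview(buf)

# Read/Write path: edits to the host copy are invisible until written back.
snapshot = read_buffer(device_buffer)
staged = bytearray(snapshot)
staged[0] = 7                      # device_buffer[0] is still 0 here
write_buffer(device_buffer, staged)

# Map/Unmap path: writes through the view land in the buffer directly;
# leaving the `with` block releases the view (the "unmap" step).
with map_buffer(device_buffer) as mapped:
    mapped[1] = 9
```

In this analogy the Read/Write path costs two copies per round trip, while the mapped path touches the buffer in place, which is the kind of data-sharing overhead a shared-memory MPSoC design tries to avoid.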

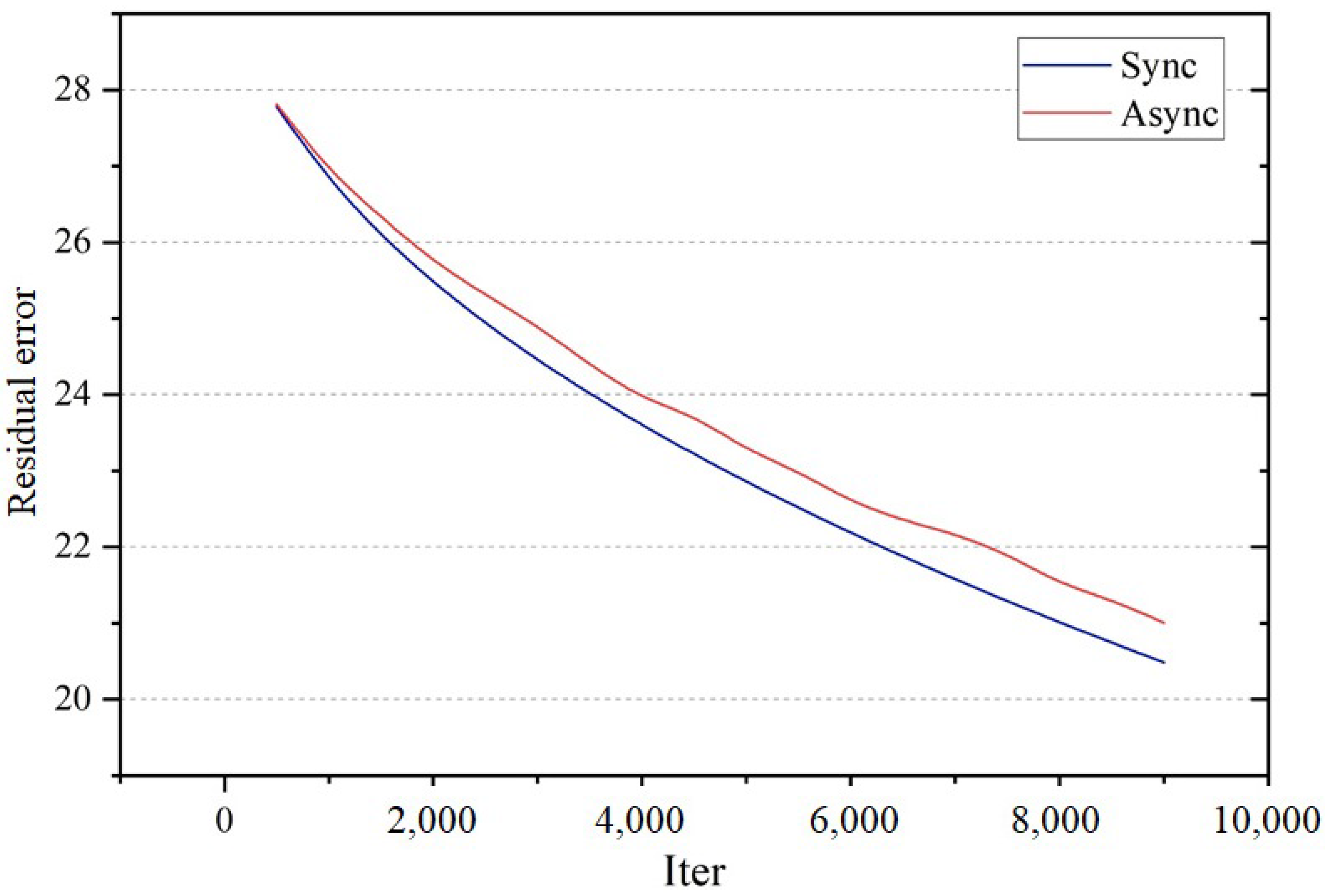

4. Asynchronized Jacobi Iteration

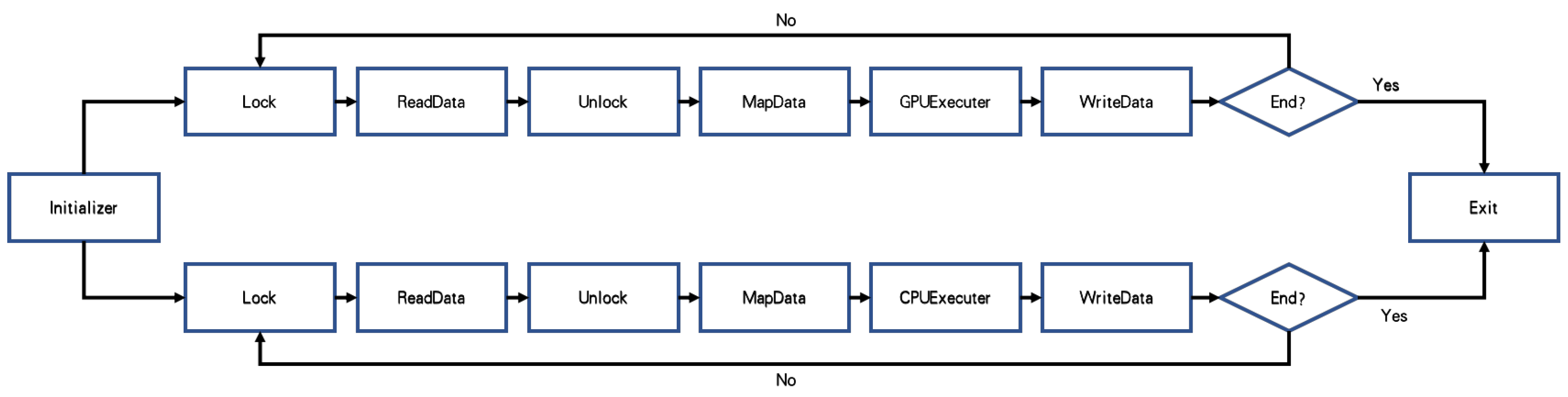
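For reference, the classical Jacobi update for a linear system $Ax = b$ and its asynchronous relaxation can be written as follows. This is the textbook formulation; the delay notation $s_{ij}(k)$ is an assumption of this sketch and not necessarily the notation used in the paper.

$$
x_i^{(k+1)} = \frac{1}{a_{ii}}\Bigl(b_i - \sum_{j \neq i} a_{ij}\, x_j^{(k)}\Bigr), \qquad i = 1, \dots, n
$$

$$
x_i^{(k+1)} = \frac{1}{a_{ii}}\Bigl(b_i - \sum_{j \neq i} a_{ij}\, x_j^{(s_{ij}(k))}\Bigr), \qquad 0 \le s_{ij}(k) \le k
$$

In the second form, $s_{ij}(k)$ is the iteration index of the most recent value of $x_j$ that is available when component $i$ is updated; setting $s_{ij}(k) = k$ recovers the synchronous update. With $D$ the diagonal of $A$ and $M = I - D^{-1}A$ the Jacobi iteration matrix, a standard sufficient condition for convergence of the asynchronous form, given bounded delays and every component being updated repeatedly, is $\rho(|M|) < 1$, which holds in particular when $A$ is strictly diagonally dominant.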

5. Implementation

5.1. Solver

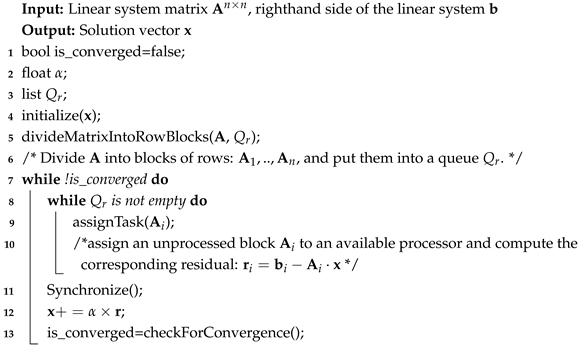

Algorithm 1: Synchronous Jacobi iteration on a Heterogeneous MPSoC
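As a rough illustration of a synchronous scheme, the following sketch splits the rows of the system between two partitions and merges their results at the end of every iteration. It is not the paper's Algorithm 1: the function names, the fixed `split` index, the stopping rule, and the small test system are all assumptions, and the two partition updates run sequentially here where a real MPSoC would run them concurrently on the CPU and GPU.

```python
def jacobi_rows(A, b, x_old, rows):
    """Jacobi update for the given rows, reading only the previous iterate."""
    n = len(b)
    return {
        i: (b[i] - sum(A[i][j] * x_old[j] for j in range(n) if j != i)) / A[i][i]
        for i in rows
    }

def sync_jacobi(A, b, split, tol=1e-10, max_iters=1000):
    """Rows [0, split) form the 'CPU' partition, rows [split, n) the 'GPU' one."""
    n = len(b)
    cpu_rows, gpu_rows = range(0, split), range(split, n)
    x = [0.0] * n
    for k in range(1, max_iters + 1):
        # Both partitions read the same iterate x^(k).
        new = jacobi_rows(A, b, x, cpu_rows)
        new.update(jacobi_rows(A, b, x, gpu_rows))
        # Synchronization point: merge both partial results before continuing.
        x_next = [new[i] for i in range(n)]
        change = max(abs(x_next[i] - x[i]) for i in range(n))
        x = x_next
        if change < tol:
            return x, k
    return x, max_iters

# Hypothetical strictly diagonally dominant system with solution [1, 2, 3, 4].
A = [[4.0, -1.0, 0.0, 0.0],
     [-1.0, 4.0, -1.0, 0.0],
     [0.0, -1.0, 4.0, -1.0],
     [0.0, 0.0, -1.0, 3.0]]
b = [2.0, 4.0, 6.0, 9.0]
x_sync, iters_sync = sync_jacobi(A, b, split=2)
```

The merge step is where the faster processor would sit idle waiting for the slower one, which is the cost an asynchronous design aims to remove.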

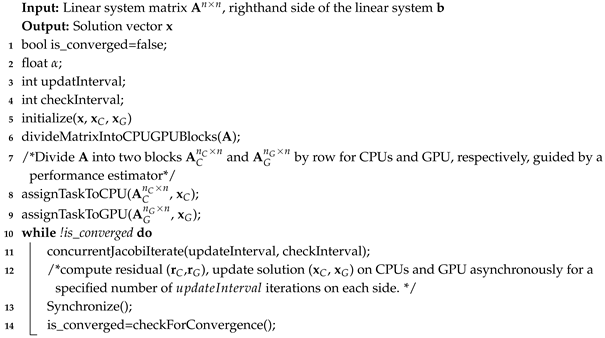

Algorithm 2: Asynchronous Jacobi iteration on a Heterogeneous MPSoC
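The effect of dropping the per-iteration barrier can be sketched with a deterministic simulation. This is not the paper's Algorithm 2: the tick-based scheduler, the `gpu_latency` parameter, the residual-based stopping rule, and the test system are assumptions made for illustration. One partition updates every tick from whatever values are currently in the shared vector, while the other publishes results computed from a snapshot that is `gpu_latency` ticks old.

```python
def update_rows(A, b, x_read, rows):
    """Jacobi update for the given rows using the values in x_read."""
    n = len(b)
    return {
        i: (b[i] - sum(A[i][j] * x_read[j] for j in range(n) if j != i)) / A[i][i]
        for i in rows
    }

def async_jacobi(A, b, split, gpu_latency=3, tol=1e-10, max_ticks=5000):
    """Simulate two partitions sharing x without a per-iteration barrier."""
    n = len(b)
    cpu_rows, gpu_rows = range(0, split), range(split, n)
    x = [0.0] * n        # shared solution vector
    pending = None       # 'GPU' result still in flight
    for tick in range(max_ticks):
        if tick % gpu_latency == 0:
            if pending is not None:
                # Publish a result that was computed from stale values.
                for i, v in pending.items():
                    x[i] = v
            # Start the next sweep from a snapshot of the shared vector.
            pending = update_rows(A, b, list(x), gpu_rows)
        # The 'CPU' partition sweeps every tick and never waits.
        for i, v in update_rows(A, b, x, cpu_rows).items():
            x[i] = v
        residual = max(
            abs(b[i] - sum(A[i][j] * x[j] for j in range(n))) for i in range(n)
        )
        if residual < tol:
            return x, tick + 1
    return x, max_ticks

# Hypothetical strictly diagonally dominant system with solution [1, 2, 3, 4].
A = [[4.0, -1.0, 0.0, 0.0],
     [-1.0, 4.0, -1.0, 0.0],
     [0.0, -1.0, 4.0, -1.0],
     [0.0, 0.0, -1.0, 3.0]]
b = [2.0, 4.0, 6.0, 9.0]
x_async, ticks = async_jacobi(A, b, split=2)
```

Because the test matrix is strictly diagonally dominant, the iteration tolerates the stale reads and still converges; for matrices without that property, convergence of an asynchronous scheme is not guaranteed.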

5.2. Data Storage and Sharing

5.3. Workload Distribution

6. Experimental Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Category | Features | Description |
|---|---|---|
| Matrix size | Row number | |
| Matrix size | Column number | |
| Matrix size | Non-zero entry number | |
| Non-zero skew | | Features measuring the skewness of the non-zero distribution across rows |
| Non-zero skew | | Features measuring the skewness of the non-zero distribution across columns |
| Non-zero locality | | Features measuring the skewness of the non-zero distribution across tiles |
| Non-zero locality | | Features measuring the skewness of the non-zero distribution across row blocks |
| Non-zero locality | | Features measuring the skewness of the non-zero distribution across column blocks |
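As an example of what a skewness feature over the non-zero distribution might look like, the sketch below counts non-zeros per row and per column and summarizes each distribution with a Gini coefficient. The choice of the Gini coefficient, the coordinate-list input format, and the function names are assumptions for illustration; the table's own feature symbols are not reproduced here.

```python
from collections import Counter

def nnz_per_line(coords, n_lines, axis):
    """Count non-zeros per row (axis=0) or per column (axis=1)."""
    counts = Counter(c[axis] for c in coords)
    return [counts.get(i, 0) for i in range(n_lines)]

def gini(values):
    """Gini coefficient: 0 for a perfectly even distribution, larger when skewed."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum((rank + 1) * v for rank, v in enumerate(xs))
    return 2.0 * weighted / (n * total) - (n + 1) / n

# Hypothetical 4x4 pattern: a dense first row plus the remaining diagonal.
coords = [(0, 0), (0, 1), (0, 2), (0, 3), (1, 1), (2, 2), (3, 3)]
row_counts = nnz_per_line(coords, 4, axis=0)   # non-zeros in each row
col_counts = nnz_per_line(coords, 4, axis=1)   # non-zeros in each column
row_skew = gini(row_counts)
col_skew = gini(col_counts)
```

For this pattern the row distribution is more skewed than the column distribution, since one row holds most of the non-zeros. A row-wise split of such a matrix between processors would need to account for that imbalance.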

| Data Type | Matrix Size | | | |
|---|---|---|---|---|
| CPU | 5.8 | 29.4 | 185.4 | 1387.2 |
| Buffer | 8.3 | 78.7 | 647.8 | 4898.3 |
| Image | 1.07 | 5.89 | 47.3 | 335 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liao, Z.; Hong, X.; Cheng, Y.; Chen, L.; Cheng, X.; Lin, J. Asynchronized Jacobi Solver on Heterogeneous Mobile Devices. Electronics 2025, 14, 1768. https://doi.org/10.3390/electronics14091768
