A Framework for Analyzing and Testing Cyber–Physical Interactions for Smart Grid Applications

Abstract

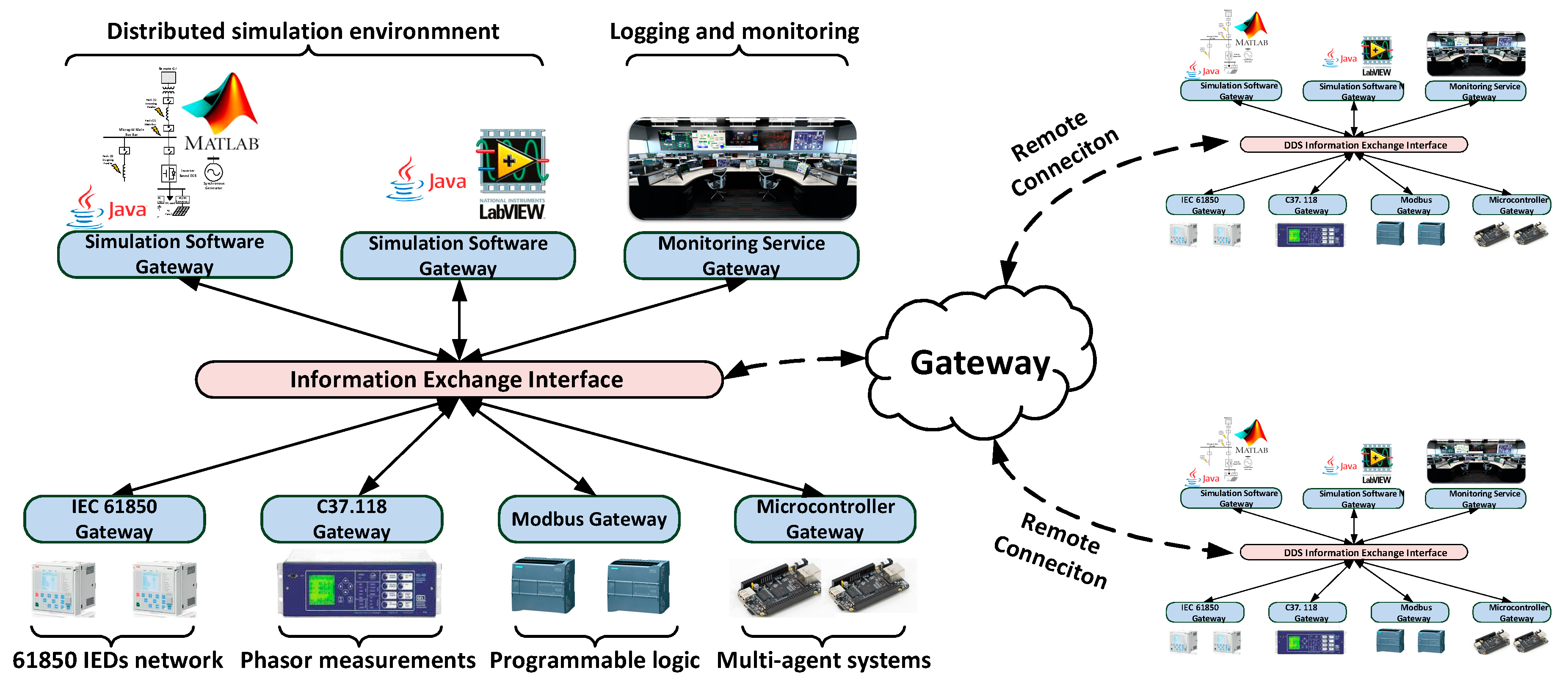

1. Introduction

- (1) A holistic cyber–physical energy systems framework that eases the integration of simulation packages, software, and hardware by using a data-centric communication backbone to manage information exchange and orchestrate the components of the system.

- (2) Protocol emulation and translation, which allows a wide range of multi-protocol and multi-vendor devices to be integrated into the developed framework.

- (3) Scalability to a wide range of smart grid applications, as demonstrated in the case studies.

- (4) Remote connectivity, data monitoring, and logging.

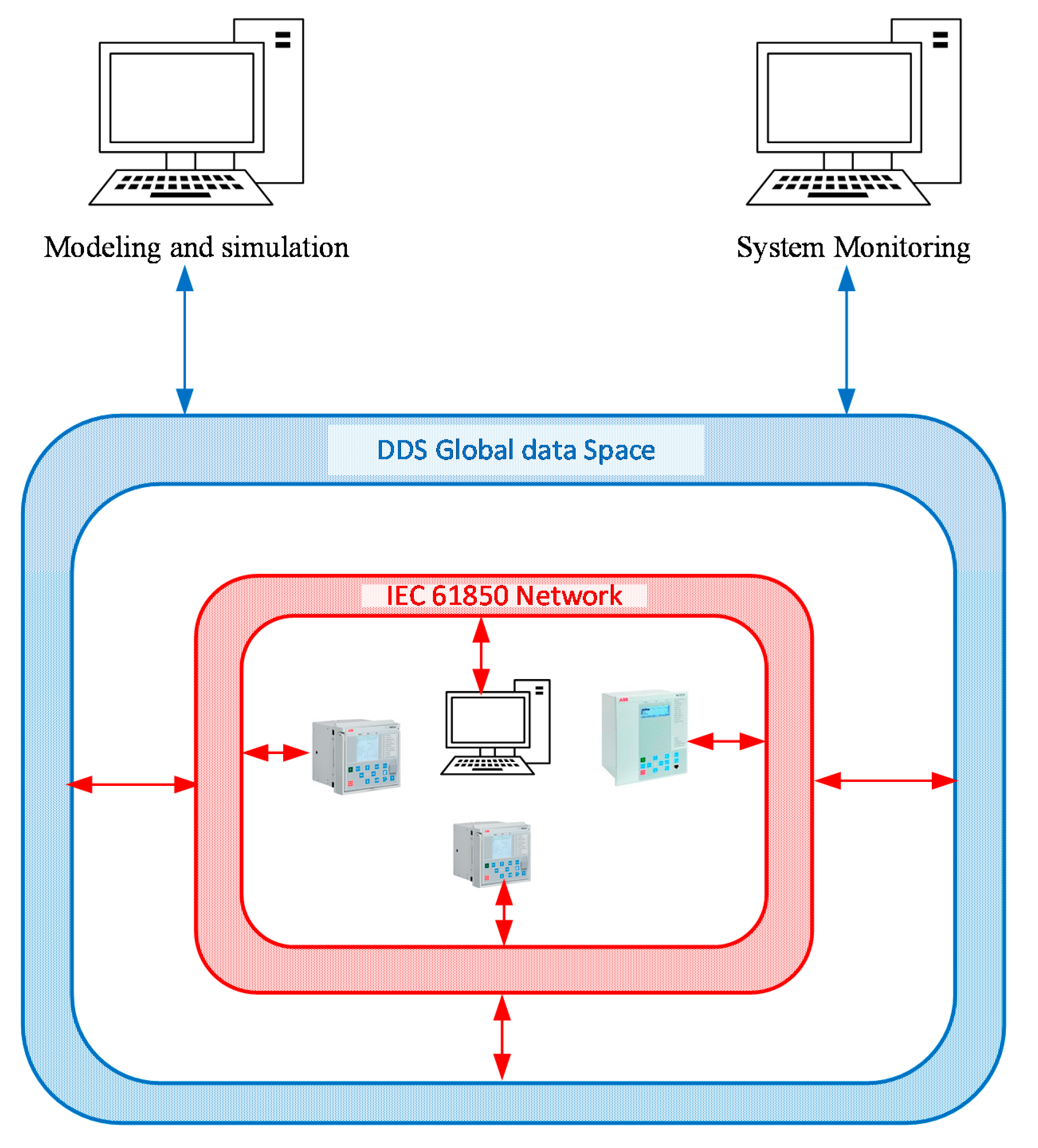

2. Framework Description

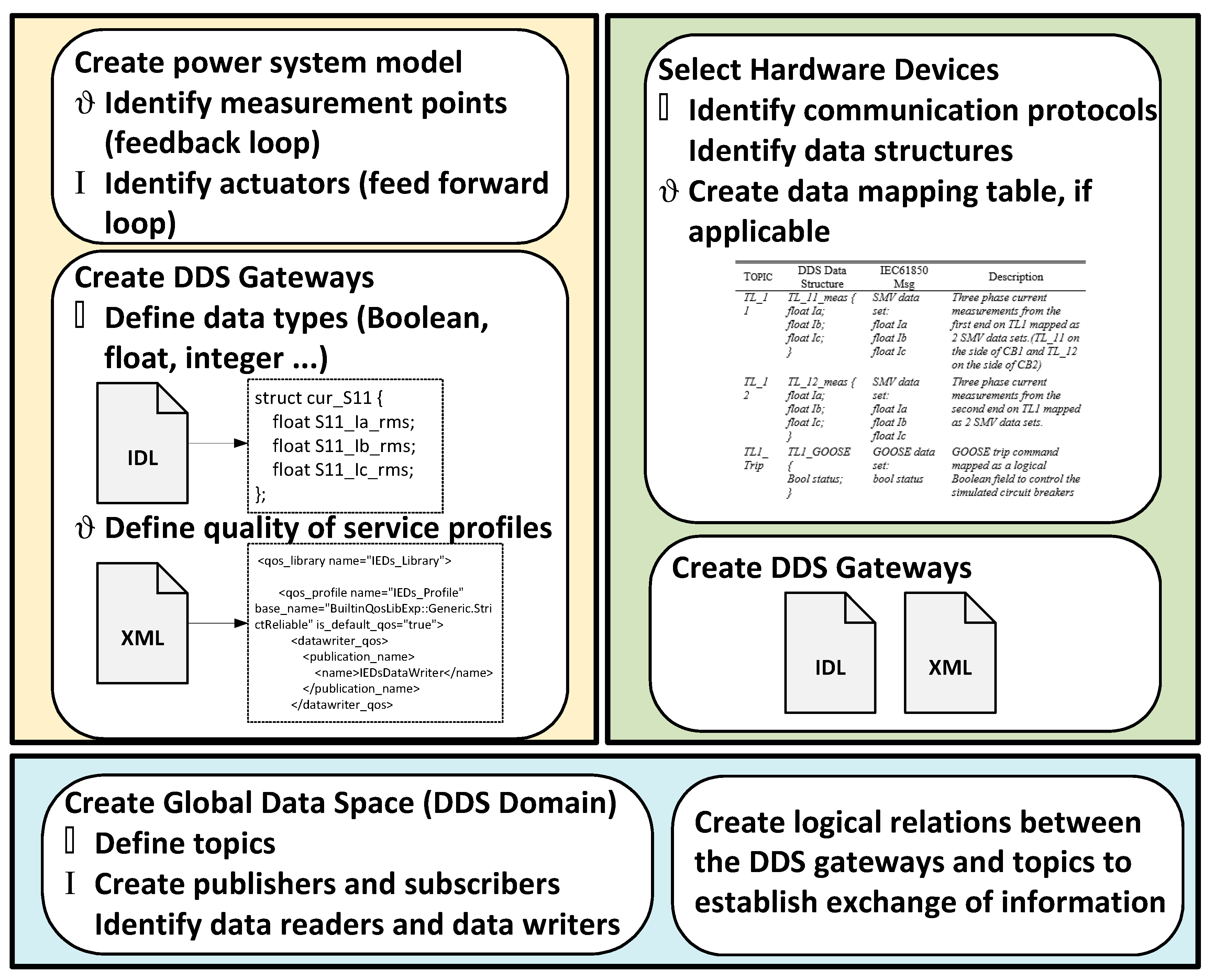

3. Procedure for Developing the Co-Simulation Framework

- Domain participant: participates in the appropriate DDS domain as defined by the user.

- DDS publisher/subscriber: can be configured as a publisher or as a subscriber. The DDS topic name to subscribe to/publish from and the DDS data types to read/update need to be defined by the user.

- DDS reader: reads data per the definitions in the subscriber block.

- DDS writer: updates data per the definition in the publisher block.
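The relationship between these four blocks can be illustrated with a minimal in-memory sketch. It is not the DDS API and not the authors' implementation: the classes `DomainParticipant`, `Endpoint`, `Writer`, and `Reader`, and the data type `PhaseCurrents`, are hypothetical stand-ins, and the sketch assumes samples are delivered only to subscribers that already exist when the writer updates the topic.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class PhaseCurrents:
    """Hypothetical user-defined data type carried on a topic."""
    Ia: float
    Ib: float
    Ic: float


class DomainParticipant:
    """Joins the domain chosen by the user; domains are isolated from each other."""

    _domains = {}  # domain_id -> {topic name -> list of subscriber inboxes}

    def __init__(self, domain_id):
        self.domain_id = domain_id
        self.topics = DomainParticipant._domains.setdefault(domain_id, defaultdict(list))


class Endpoint:
    """Publisher/subscriber block: the user sets the role, topic name, and data type."""

    def __init__(self, participant, role, topic, data_type):
        if role not in ("publisher", "subscriber"):
            raise ValueError("role must be 'publisher' or 'subscriber'")
        self.role = role
        self.data_type = data_type
        self.inboxes = participant.topics[topic]  # shared by all endpoints on this topic
        self.inbox = deque()
        if role == "subscriber":
            self.inboxes.append(self.inbox)


class Writer:
    """Writer block: updates data as defined by its publisher."""

    def __init__(self, publisher):
        if publisher.role != "publisher":
            raise ValueError("a writer needs a publisher endpoint")
        self.publisher = publisher

    def write(self, sample):
        if not isinstance(sample, self.publisher.data_type):
            raise TypeError("sample does not match the topic data type")
        for inbox in self.publisher.inboxes:
            inbox.append(sample)


class Reader:
    """Reader block: reads data as defined by its subscriber."""

    def __init__(self, subscriber):
        if subscriber.role != "subscriber":
            raise ValueError("a reader needs a subscriber endpoint")
        self.subscriber = subscriber

    def take(self):
        samples = list(self.subscriber.inbox)
        self.subscriber.inbox.clear()
        return samples


# A simulator publishes on domain 0; a relay (domain 0) and a logger (domain 1) subscribe.
simulator = DomainParticipant(domain_id=0)
relay = DomainParticipant(domain_id=0)
logger = DomainParticipant(domain_id=1)

writer = Writer(Endpoint(simulator, "publisher", "TL-11", PhaseCurrents))
relay_reader = Reader(Endpoint(relay, "subscriber", "TL-11", PhaseCurrents))
logger_reader = Reader(Endpoint(logger, "subscriber", "TL-11", PhaseCurrents))

writer.write(PhaseCurrents(Ia=101.5, Ib=99.8, Ic=100.2))
relay_samples = relay_reader.take()    # one sample: same domain, same topic
logger_samples = logger_reader.take()  # empty: different domain
```

In this sketch the relay receives the sample because it shares the domain and topic with the simulator, while the logger, placed in a different domain, receives nothing. A real DDS middleware adds discovery, quality-of-service policies, and network transport, none of which are modeled here.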

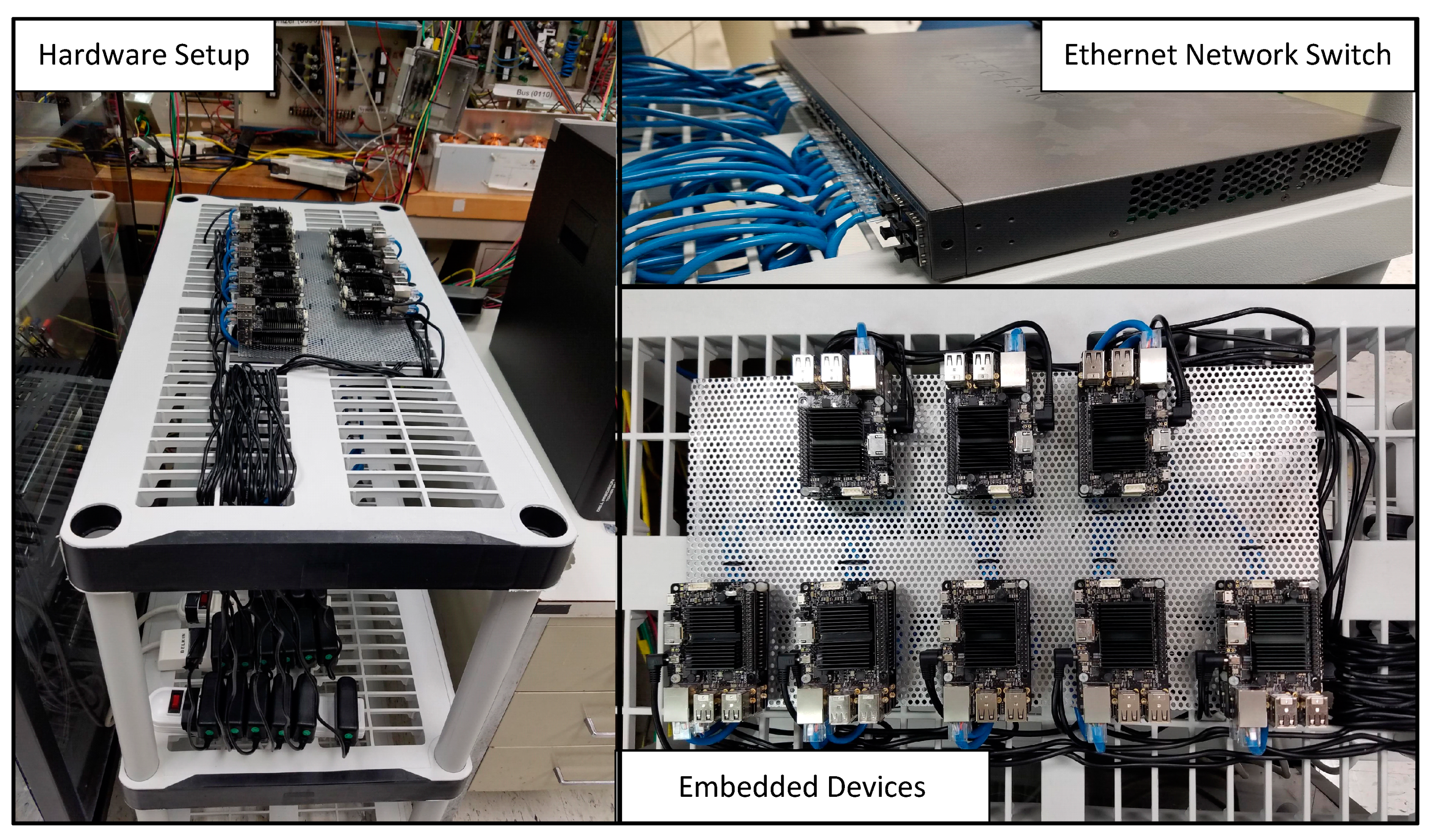

4. Verification Case Studies

4.1. Hierarchical Control of Electric Vehicle Charging

4.2. Protection of an Active Distribution Network

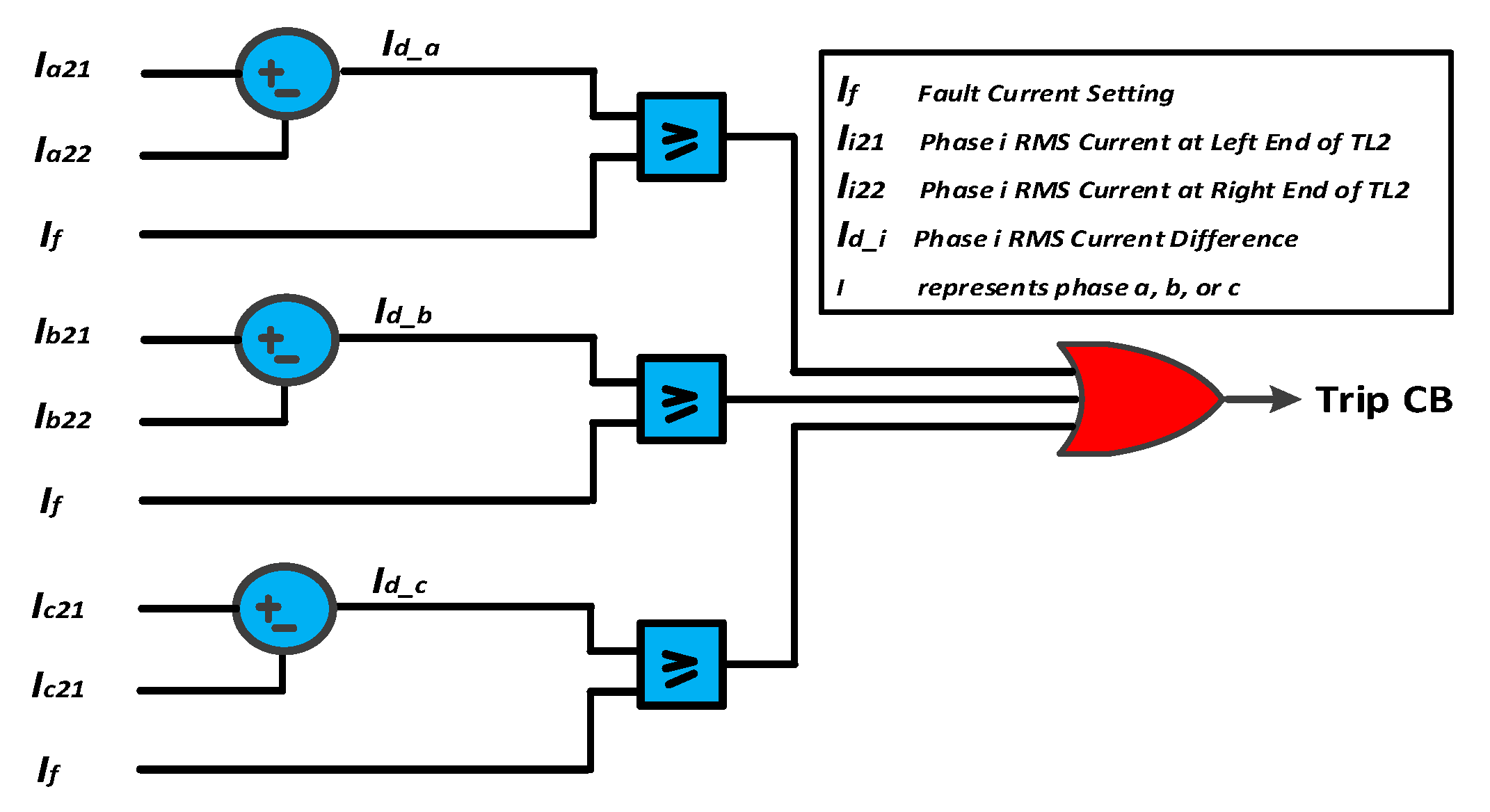

4.2.1. System under Study

4.2.2. Flow of Information within the Co-Simulation Model

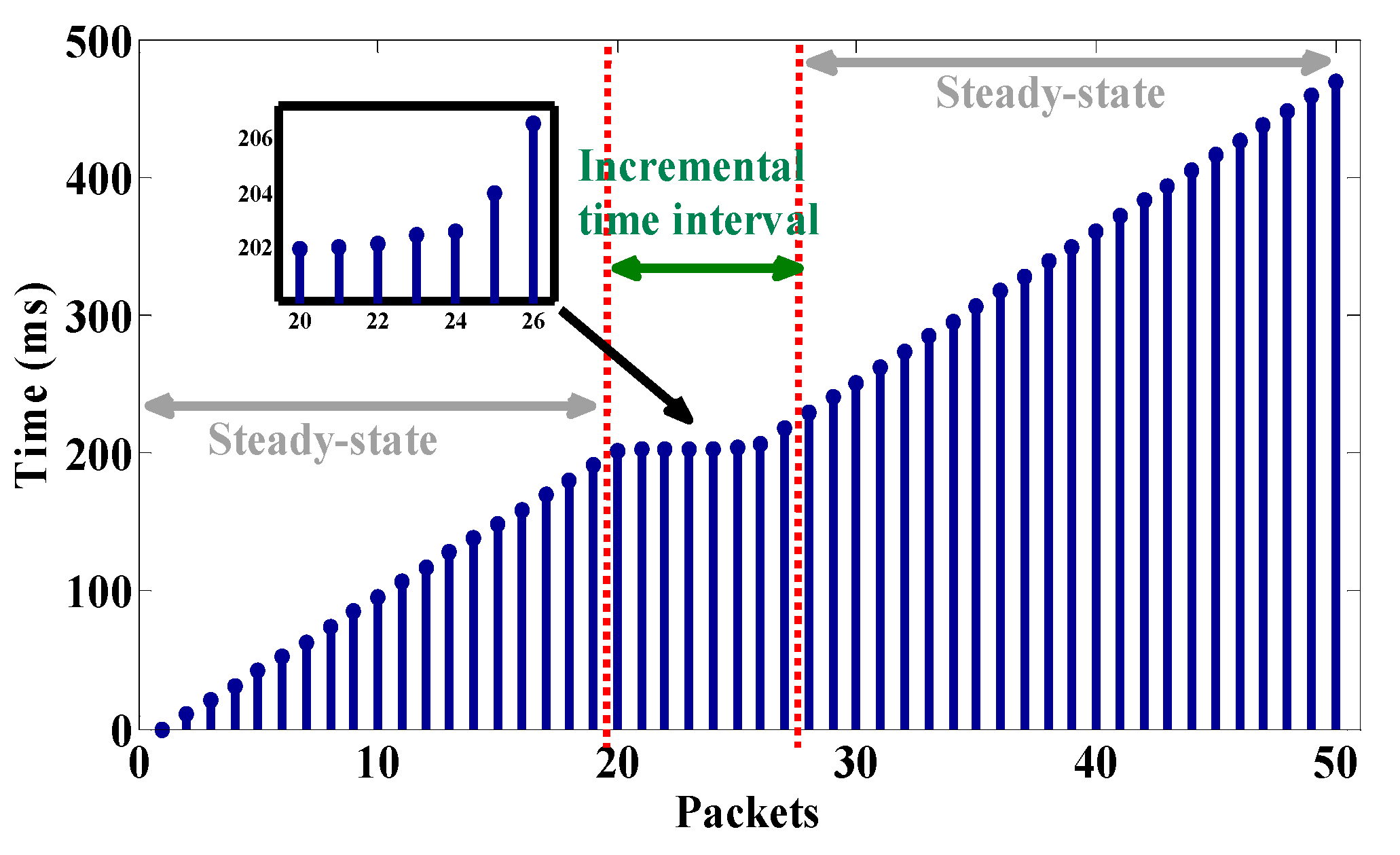

4.2.3. DDS Advantages

4.2.4. Case Study

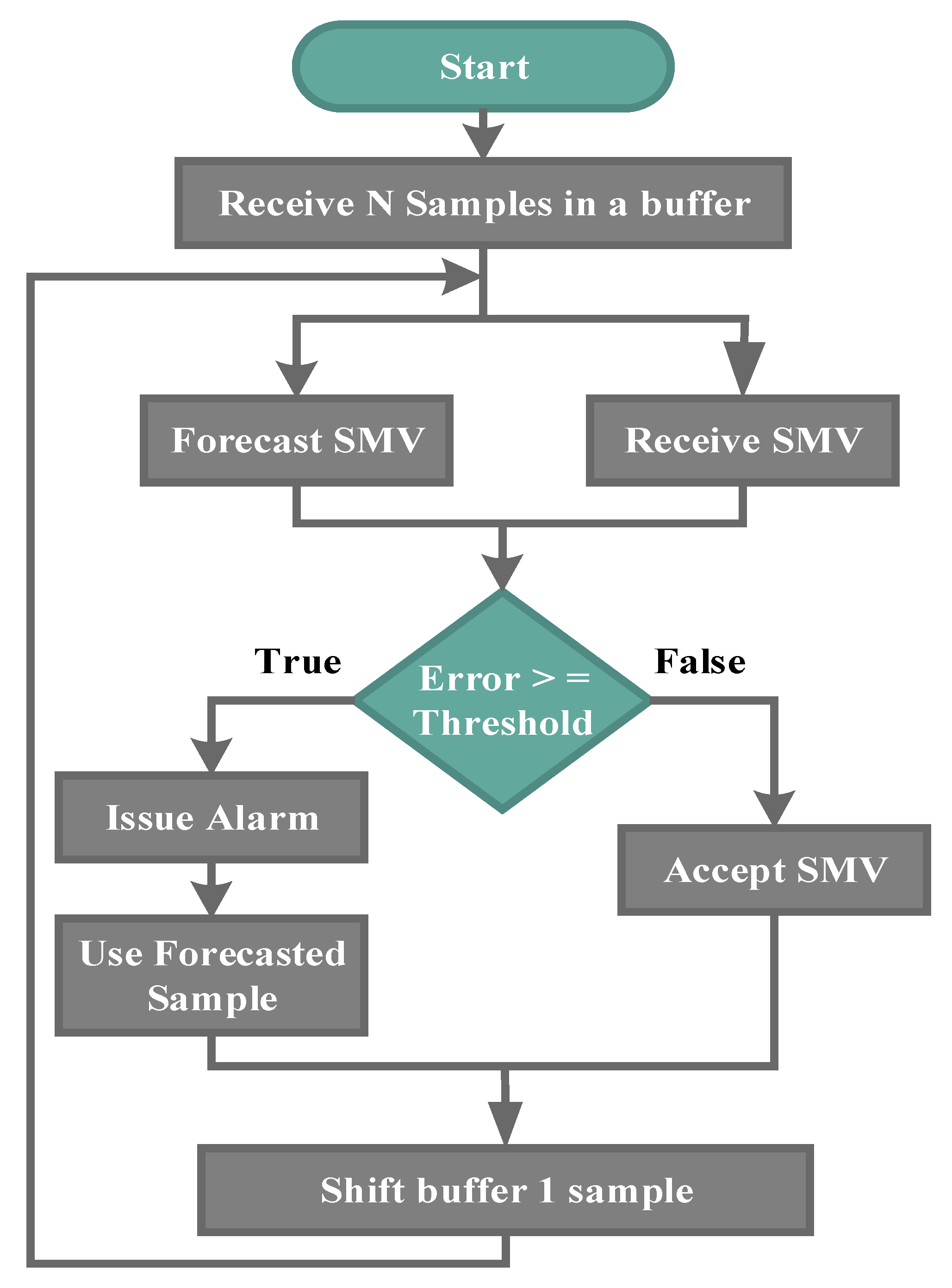

4.3. Detection of Fake Measurement Injection Attack

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Topic | DDS Data Structure | IEC 61850 Message | Description |
|---|---|---|---|
| TL-11 | TL_11_meas { float Ia; float Ib; float Ic; } | SMV data set: float Ia, float Ib, float Ic | Measurements of the 3-phase currents at the left end of TL1, mapped as an SMV data set. |
| TL-12 | TL_12_meas { float Ia; float Ib; float Ic; } | SMV data set: float Ia, float Ib, float Ic | Measurements of the 3-phase currents at the right end of TL1, mapped as an SMV data set. |
| TL1-Trip | TL1_GOOSE { Bool status; } | GOOSE data set: bool status | GOOSE message mapped as a logical Boolean field to trip the simulated CBs. |
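As an illustration of the field-by-field mapping in the table above, the following sketch mirrors two of the DDS data structures as Python dataclasses and flattens them into ordered data sets. The helper names `to_data_set` and `from_data_set` are hypothetical, and the data set is represented as a plain list of name/value pairs; the actual IEC 61850 wire encoding of SMV and GOOSE frames is not modeled.

```python
from dataclasses import dataclass, fields


@dataclass
class TL_11_meas:
    """3-phase currents at the left end of TL1 (field names from the table)."""
    Ia: float
    Ib: float
    Ic: float


@dataclass
class TL1_GOOSE:
    """Trip signal for the simulated circuit breakers."""
    status: bool


def to_data_set(sample):
    """Flatten a DDS sample into an ordered list of (name, value) pairs."""
    return [(f.name, getattr(sample, f.name)) for f in fields(sample)]


def from_data_set(data_type, data_set):
    """Rebuild a DDS sample of the given type from (name, value) pairs."""
    return data_type(**dict(data_set))


# DDS sample -> SMV-style data set (illustrative values).
smv_data_set = to_data_set(TL_11_meas(Ia=101.5, Ib=99.8, Ic=100.2))

# GOOSE-style data set -> DDS sample.
trip = from_data_set(TL1_GOOSE, [("status", True)])
```

Keeping the field order and names identical on both sides, as the table does, is what makes the translation a simple one-to-one copy in this sketch.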

| Message Type | Average Delay Time |
|---|---|
| SMV | 120 μs |
| GOOSE | 11.2 μs |

| Total Samples | False Positives | False Positive Rate (%) |
|---|---|---|
| 48,434 | 273 | 0.56 |
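The percentage in the table follows directly from the two counts. A short check, using only the figures reported above (the variable names are illustrative):

```python
total_samples = 48_434
false_positives = 273

# Share of all samples that were flagged incorrectly, as a percentage.
false_positive_rate = 100 * false_positives / total_samples
print(f"{false_positive_rate:.2f} %")  # prints "0.56 %"
```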

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

El Hariri, M.; Youssef, T.; Saleh, M.; Faddel, S.; Habib, H.; Mohammed, O.A. A Framework for Analyzing and Testing Cyber–Physical Interactions for Smart Grid Applications. Electronics 2019, 8, 1455. https://doi.org/10.3390/electronics8121455
