Towards Human Motion Tracking: Multi-Sensory IMU/TOA Fusion Method and Fundamental Limits

Abstract

1. Introduction

- Requirement for fixed external anchors: external anchors must be deployed in the specific scenario. For example, Zihajehzadeh et al. [23] introduced a magnetometer-free algorithm for human lower-body motion tracking that fuses inertial sensors with a UWB localization system. However, such a system is hard to realize in a fully wearable form and is therefore not suitable for BAN applications.

- State-of-the-art studies seldom address the fundamental limits of IMU/TOA fusion methods, and their error-correction performance is not satisfactory. For example, Nilsson et al. [27] proposed a cooperative localization method that fuses dual foot-mounted inertial sensors with inter-agent UWB ranging, but the experimental results show that there is still considerable room for improvement relative to the performance lower bounds [22].

2. Related Work

2.1. HMT Systems and Applications

2.2. Sensor Fusion and Filtering

2.3. Cramér–Rao Lower Bound

3. Problem Definition

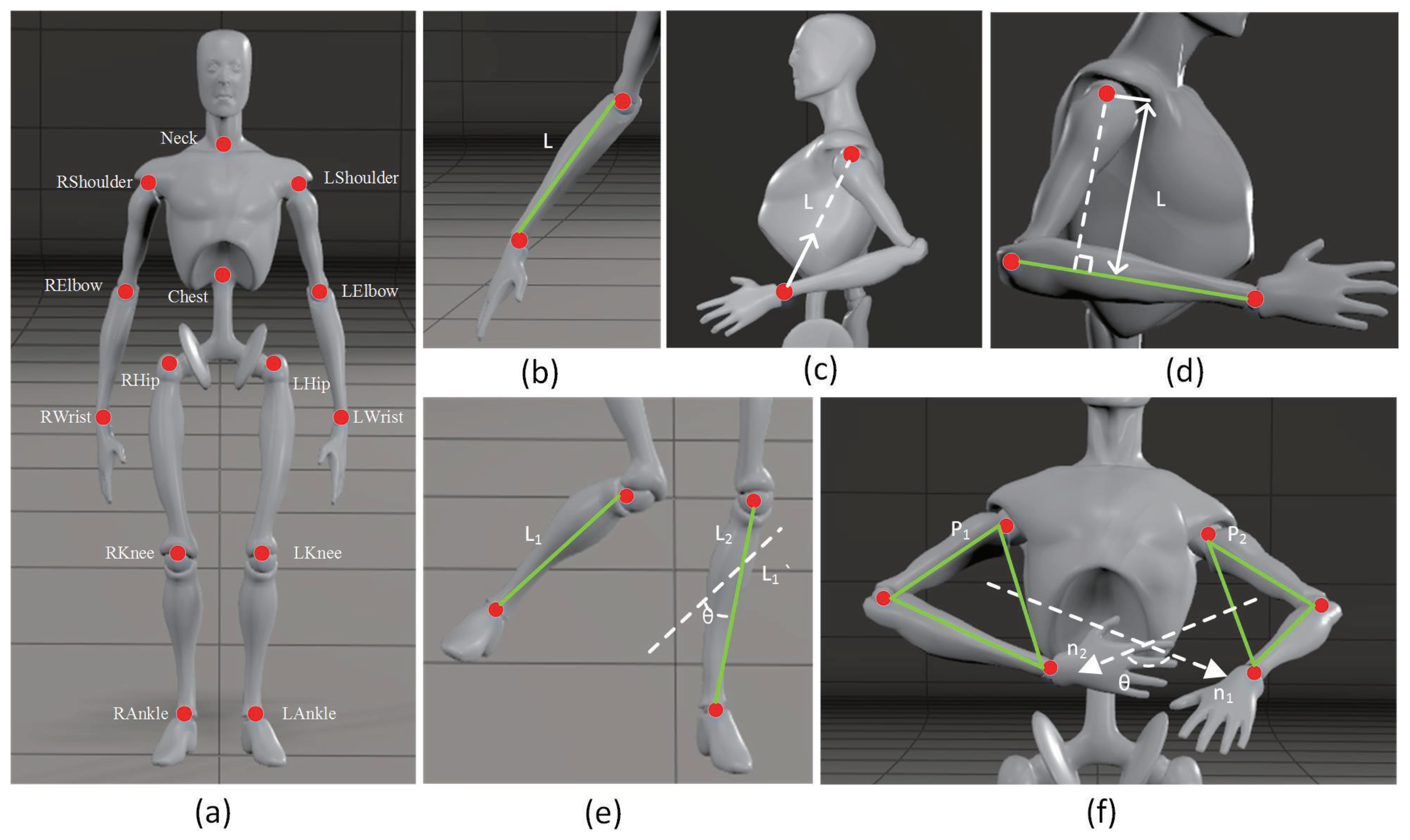

3.1. Model Description

3.2. Error Sources

3.3. Model Presentation

4. IMU/TOA Fusion Based HMT Method

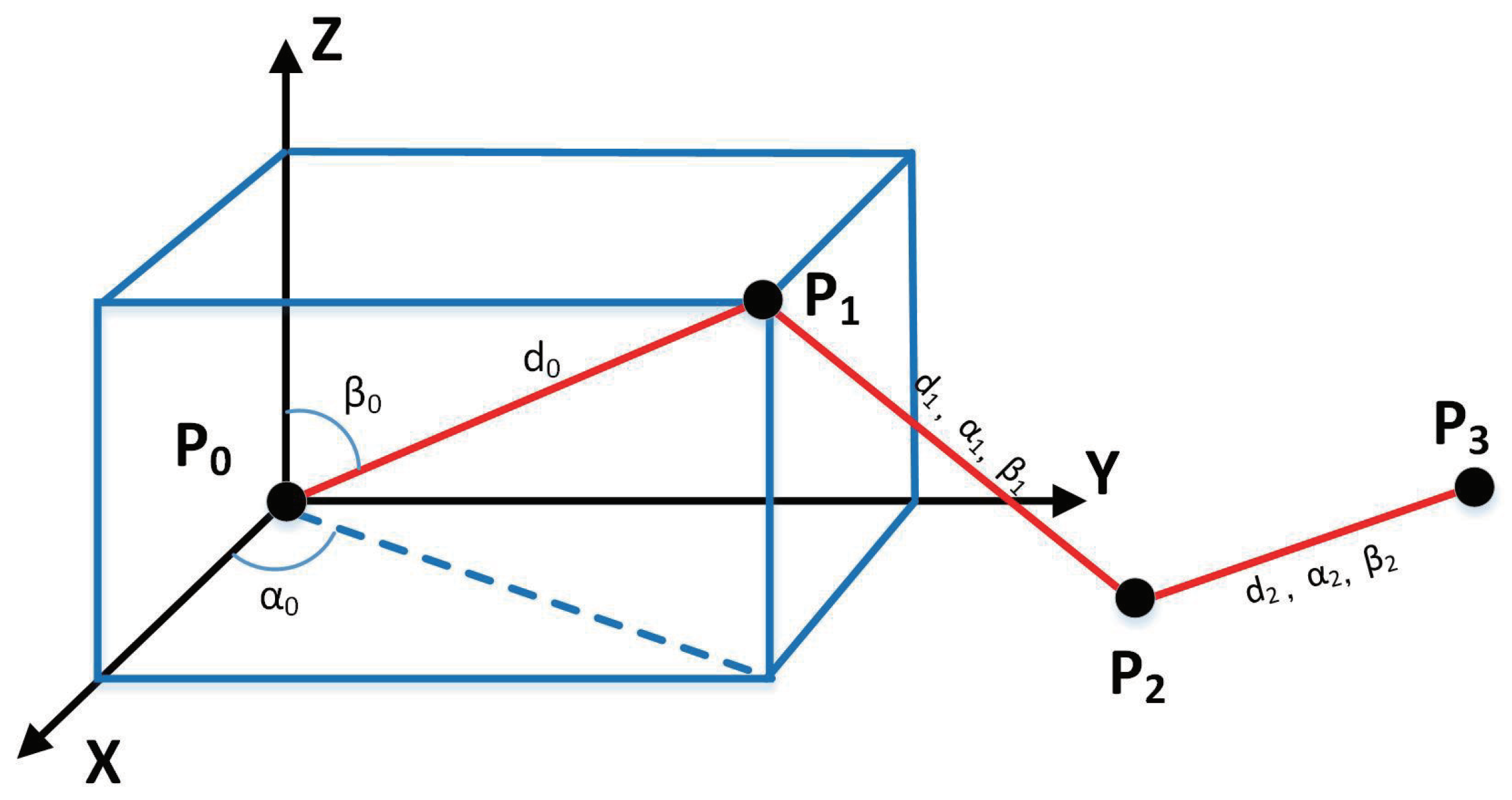

4.1. Measurement Parameter Information Matrix

4.2. State Parameter Information Matrix

4.3. Integral Information Matrix

Algorithm 1. Proposed IMU/TOA fusion-based human motion tracking method.

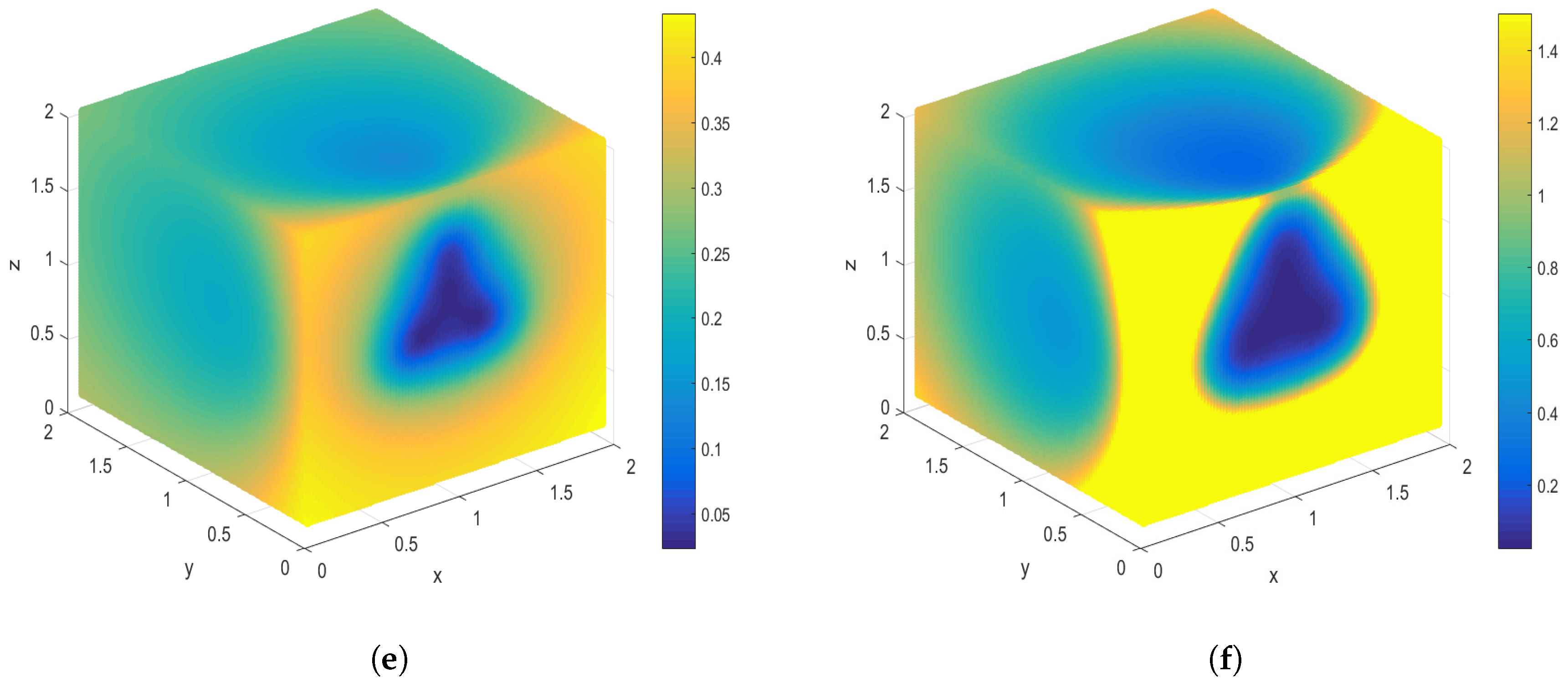

5. Spatial Performance Analysis

5.1. Scenario Setup

5.2. Performance Analysis

- In the two-dimensional case, shown in Figure 3a,b, the CRLB is lower at positions closer to the trunk. It is also lower for the lower limbs than for the upper limbs, possibly because of the choice of reference nodes. To verify this, a comparison experiment was conducted with a different choice of reference nodes. The results in Figure 3a,b indicate that the relative position of the reference nodes changes the CRLB of human motion: the more uniformly the nodes are distributed, the lower the CRLB. The same result can be seen in Figure 3c,d, where only distance measurements are used in the human motion sensing process.

- Since human motion is a three-dimensional process, a stereoscopic presentation is shown in Figure 3e,f. The human body is assumed to lie in the XOZ plane, with y set to zero. The 3D CRLB resembles the 2D one stretched along the Z-axis. As in the 2D case, the closer a position is to the trunk, the lower the achievable CRLB.
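The dependence of the CRLB on reference-node geometry can be illustrated with a minimal sketch. It is not the information matrix derived in Section 4. It assumes the standard range-only (TOA) model with independent zero-mean Gaussian ranging errors of equal standard deviation, for which the Fisher information matrix is the sum of outer products of the unit vectors between the target and each reference node, scaled by 1/σ². The function name `toa_crlb`, the node coordinates, and the value σ = 0.1 m are hypothetical and chosen only for illustration.

```python
import numpy as np


def toa_crlb(position, anchors, sigma=0.1):
    """Square root of the CRLB trace (in metres) for range-only positioning.

    Assumes i.i.d. zero-mean Gaussian ranging noise with standard deviation
    `sigma` on every link (an illustrative assumption, not the paper's model).
    The target must not coincide with a reference node.
    """
    position = np.asarray(position, dtype=float)
    anchors = np.asarray(anchors, dtype=float)

    diffs = position - anchors                            # shape (N, D)
    dists = np.linalg.norm(diffs, axis=1, keepdims=True)  # shape (N, 1)
    units = diffs / dists                                 # unit direction vectors

    fim = units.T @ units / sigma**2                      # Fisher information matrix
    return float(np.sqrt(np.trace(np.linalg.inv(fim))))


if __name__ == "__main__":
    # Hypothetical reference-node layouts around a target at the origin.
    uniform = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    clustered = [(1, 0), (1, 0.2), (1, -0.2), (1, 0.4)]
    print("uniform  :", toa_crlb((0, 0), uniform))
    print("clustered:", toa_crlb((0, 0), clustered))
```

Under these assumptions, nodes spread evenly around the target yield a smaller bound than nodes clustered on one side, which is in line with the trend described for Figure 3.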

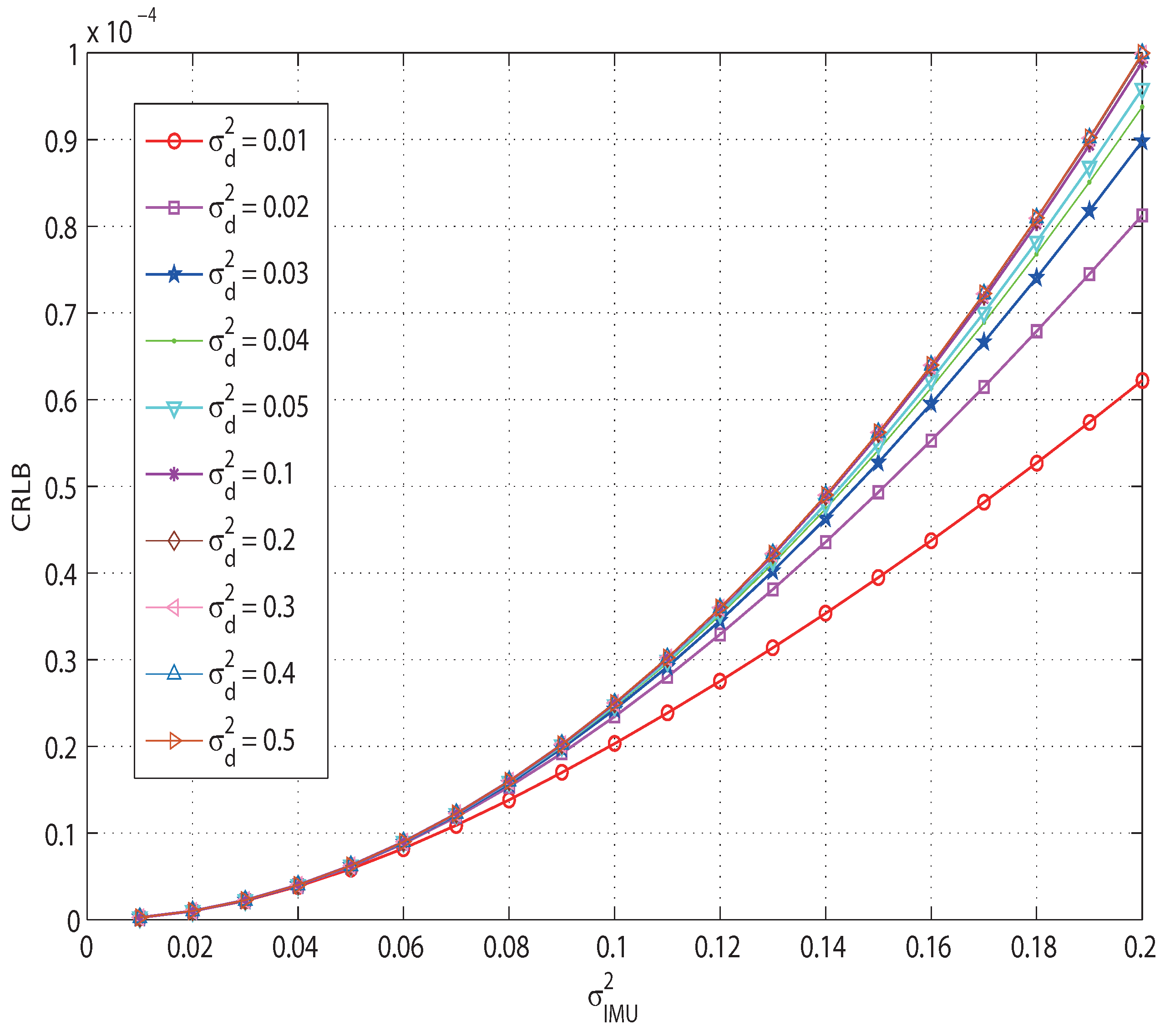

6. Temporal Performance Analysis

6.1. Scenario Setup

6.2. Performance Analysis

- When only inertial sensors are used in the tracking system, as indicated by the black solid line in Figure 5, the accumulated error tends to diverge. This confirms theoretically that IMU-based HMT systems suffer from accumulated errors. The IMU/TOA fusion method, however, avoids this divergence: the performance curves of the proposed approach stabilize after a certain number of steps, i.e., the errors converge.

- Compared with the TOA-only tracking method, the IMU/TOA fusion-based method significantly increases the accuracy of human body motion tracking. The lower bound of the proposed fusion method can drop below 8 cm.

- With increase of and , PCRLB also increases, which means that the accuracy is inversely proportional to these two parameters.

- Under different topologies, the theoretical accuracy lower bounds of the tracking system are different.

- The bounds under the different topologies stabilize after roughly the same number of iterations (around 15), which means that the convergence rate is roughly the same across topologies.

- Topology 3 suffers the largest RMSE, which may be due to its densely placed reference nodes. The minimum RMSE, below 8 cm, is obtained with Topology 4, which makes it the better choice for practical applications.

- The proposed IMU/TOA fusion HMT system shows the same trends as the TOA-only ranging system.
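The contrast between a diverging inertial-only bound and a converging fused bound can be reproduced with a minimal sketch. It is not the derivation of Appendix B. It assumes a one-dimensional linear-Gaussian toy model (constant-velocity state driven by white acceleration noise, standing in for inertial dead reckoning, plus an optional direct position measurement standing in for TOA ranging), for which the posterior CRLB recursion of [43] reduces to J_{k+1} = (Q + F J_k^{-1} F^T)^{-1} + H^T R^{-1} H. All parameter values and the function name `pcrlb_position_bound` are hypothetical.

```python
import numpy as np


def pcrlb_position_bound(steps, use_toa, dt=0.1, accel_psd=0.5,
                         sigma_toa=0.1, p0=0.01):
    """Per-step lower bound (metres) on position RMSE for a 1D toy model.

    State: [position, velocity]. All numeric defaults are illustrative
    assumptions, not values taken from the paper.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])                 # state transition
    Q = accel_psd * np.array([[dt**3 / 3, dt**2 / 2],
                              [dt**2 / 2, dt]])           # process noise
    H = np.array([[1.0, 0.0]])                            # position is observed

    # Information contributed by one measurement (zero without TOA).
    meas_info = H.T @ H / sigma_toa**2 if use_toa else np.zeros((2, 2))

    J = np.eye(2) / p0                                    # prior information
    bounds = []
    for _ in range(steps):
        predicted_cov = F @ np.linalg.inv(J) @ F.T + Q
        J = np.linalg.inv(predicted_cov) + meas_info
        bounds.append(float(np.sqrt(np.linalg.inv(J)[0, 0])))
    return bounds


if __name__ == "__main__":
    imu_only = pcrlb_position_bound(200, use_toa=False)
    fused = pcrlb_position_bound(200, use_toa=True)
    print("inertial-only bound, first/last step:", imu_only[0], imu_only[-1])
    print("fused bound, first/last step:        ", fused[0], fused[-1])
```

In this toy model the inertial-only bound grows at every step because no term adds information, whereas the fused bound settles to a steady value below the assumed ranging standard deviation.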

7. Practical Use Case

7.1. Platform Overview and Experiment Setup

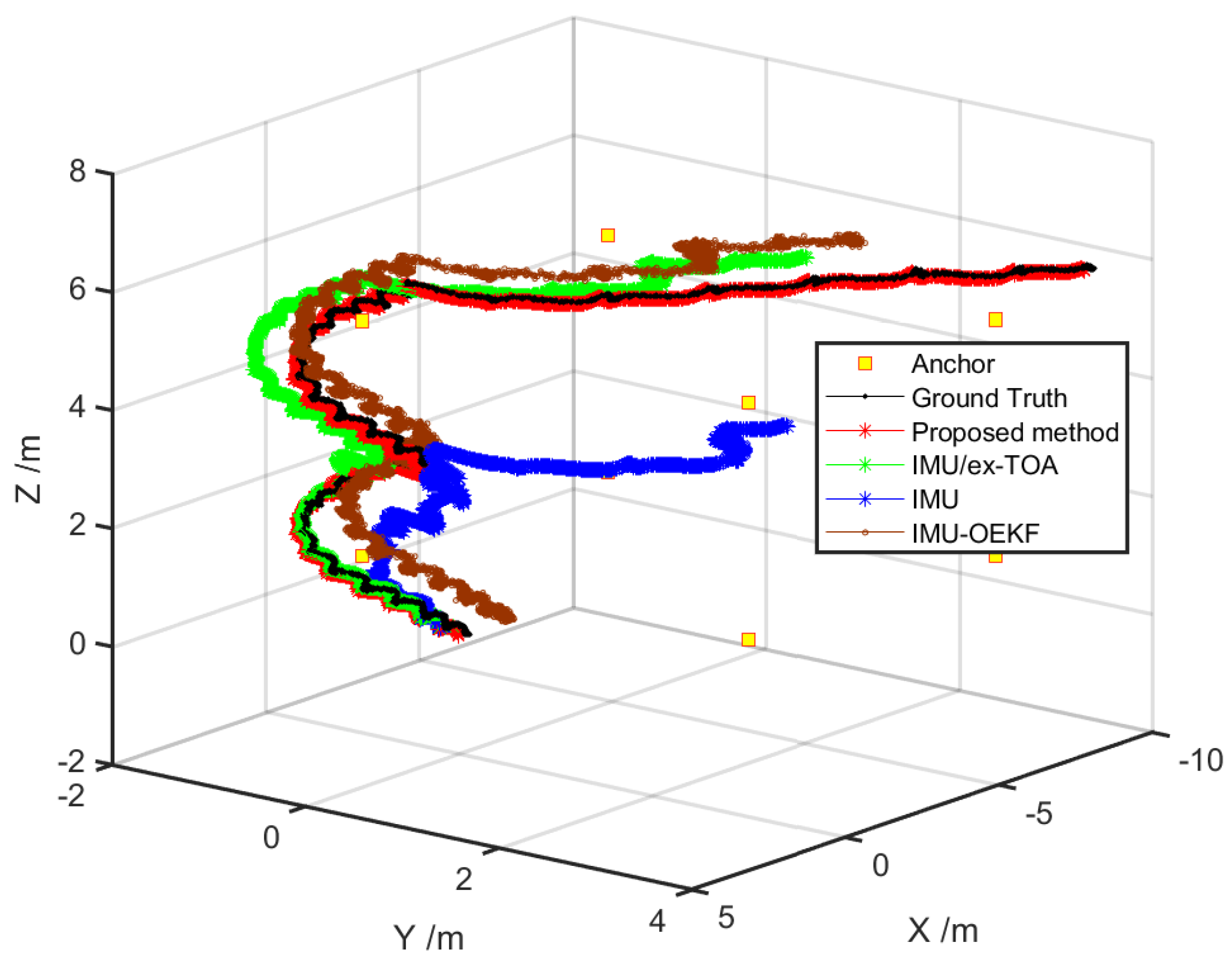

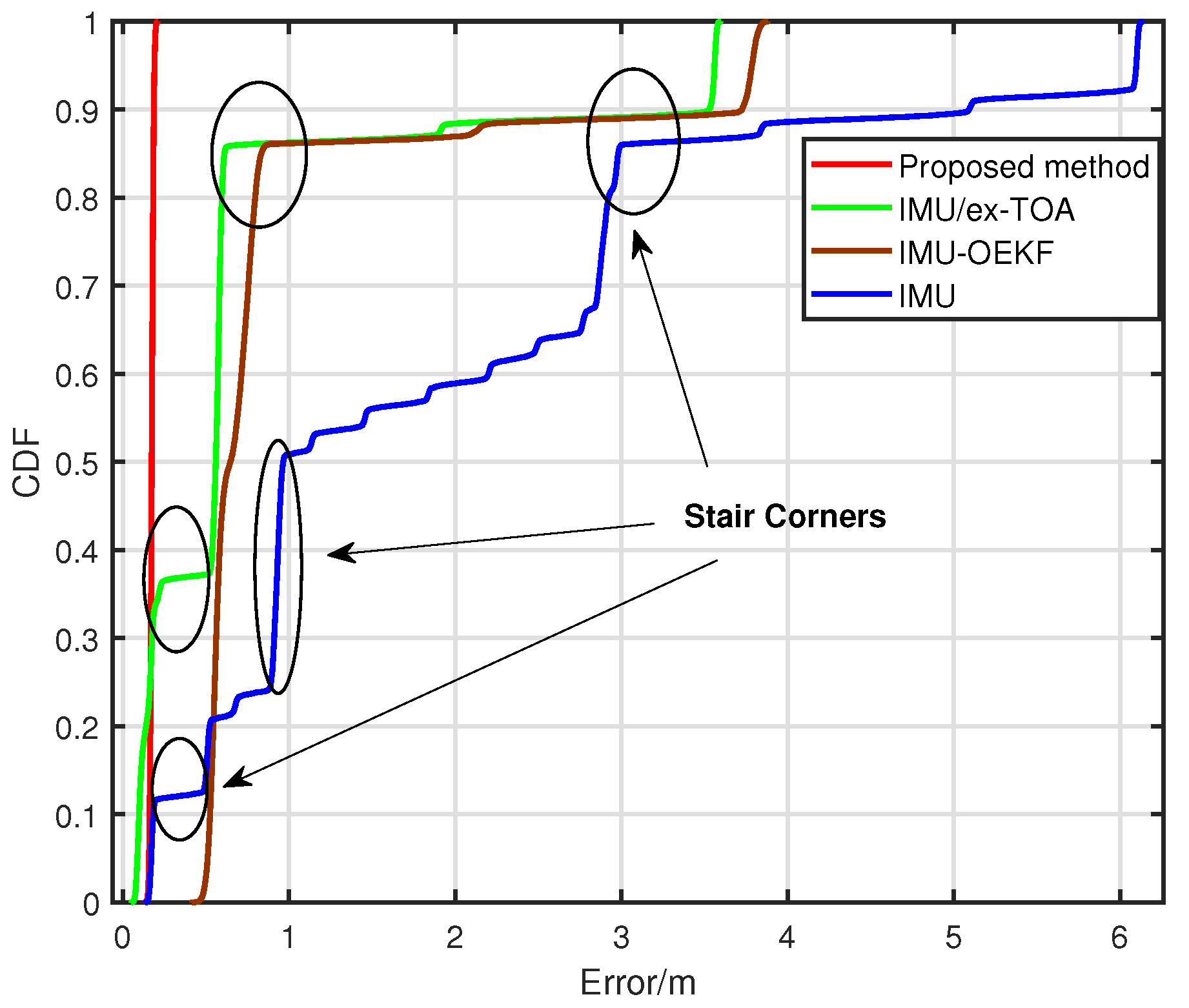

7.2. Practical Use Case in 3D Scenario

- (1) Only the IMU was used for human lower-limb tracking, with the zero velocity update (ZUPT) algorithm [51] applied. For simplicity, we denote it as the “IMU” method.

- (2) The IMU and TOA fusion method in [23] was applied. UWB-based TOA tag nodes were mounted on the right lower limb and communicated with external anchor nodes to implement the TOA localization algorithm. For simplicity, and to distinguish it from our proposed method, we denote it as the “IMU/ex-TOA” method.

- (3) The Optimal Enhanced Kalman Filter (OEKF)-based method in [52] was applied. The cumulative errors in attitude and velocity were corrected using the attitude fusion filtering algorithm and ZUPT, respectively. For simplicity, and to distinguish it from our proposed method, we denote it as the “IMU-OEKF” method.
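The idea behind the ZUPT correction used by the baseline methods can be sketched as follows. This is a deliberately simplified illustration and not the implementation of [51] or [52]: ZUPT is commonly applied as a zero-velocity pseudo-measurement inside a Kalman filter, whereas the sketch simply resets the integrated velocity during detected stance phases. It assumes that the acceleration samples are already expressed in the navigation frame with gravity removed. The function name `zupt_velocity` and both thresholds are hypothetical.

```python
import numpy as np


def zupt_velocity(accel, gyro, dt, accel_tol=0.3, gyro_tol=0.2):
    """Integrate acceleration to velocity with a hard zero-velocity reset.

    accel: (N, 3) gravity-free acceleration in m/s^2 (assumed input format).
    gyro:  (N, 3) angular rate in rad/s.
    A sample is treated as stationary when both norms fall below the
    (hypothetical) thresholds.
    """
    accel = np.asarray(accel, dtype=float)
    gyro = np.asarray(gyro, dtype=float)

    stationary = (np.linalg.norm(accel, axis=1) < accel_tol) & \
                 (np.linalg.norm(gyro, axis=1) < gyro_tol)

    velocity = np.zeros_like(accel)
    v = np.zeros(accel.shape[1])
    for k in range(len(accel)):
        v = v + accel[k] * dt            # dead-reckoning integration
        if stationary[k]:
            v = np.zeros_like(v)         # zero velocity update
        velocity[k] = v
    return velocity, stationary


if __name__ == "__main__":
    # Synthetic stance / swing / stance sequence with a small constant bias.
    accel = [[0.05, 0, 0]] * 10 + [[2.0, 0, 0]] * 10 + [[0.05, 0, 0]] * 10
    gyro = [[0, 0, 0]] * 10 + [[0, 0, 1.0]] * 10 + [[0, 0, 0]] * 10
    velocity, stationary = zupt_velocity(accel, gyro, dt=0.01)
    print(velocity[19], velocity[29])
```

Without the reset, the small constant bias in the synthetic stance samples would be integrated into a growing velocity error, which is the accumulation effect that ZUPT is meant to suppress.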

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Derivation of the CRLB

Appendix B. Derivation of the PCRLB

References

- Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

- Fortino, G.; Giannantonio, R.; Gravina, R.; Kuryloski, P.; Jafari, R. Enabling Effective Programming and Flexible Management of Efficient Body Sensor Network Applications. IEEE Trans. Hum. Mach. Syst. 2013, 43, 115–133.

- Sodhro, A.H.; Luo, Z.; Sangaiah, A.K.; Baik, S.W. Mobile edge computing based QoS optimization in medical healthcare applications. Int. J. Inf. Manag. 2018.

- Sodhro, A.H.; Pirbhulal, S.; Qaraqe, M.; Lohano, S.; Sodhro, G.H.; Junejo, N.U.; Luo, Z. Power control algorithms for media transmission in remote healthcare systems. IEEE Access 2018, 6, 42384–42393.

- Cao, J.; Li, W.; Ma, C.; Tao, Z. Optimizing multi-sensor deployment via ensemble pruning for wearable activity recognition. Inf. Fusion 2018, 41, 68–79.

- Galzarano, S.; Giannantonio, R.; Liotta, A.; Fortino, G. A task-oriented framework for networked wearable computing. IEEE Trans. Autom. Sci. Eng. 2016, 13, 621–638.

- Abebe, G.; Cavallaro, A. Inertial-Vision: Cross-Domain Knowledge Transfer for Wearable Sensors. In Proceedings of the 2017 International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1392–1400.

- Fortino, G.; Galzarano, S.; Gravina, R.; Li, W. A framework for collaborative computing and multi-sensor data fusion in body sensor networks. Inf. Fusion 2015, 22, 50–70.

- Yang, X.; Tian, Y. Super Normal Vector for Human Activity Recognition with Depth Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1028–1039.

- Bux, A.; Angelov, P.; Habib, Z. Vision Based Human Activity Recognition: A Review. Adv. Comput. Intell. Syst. 2017, 513, 341–371.

- Zhao, H.; Ding, Y.; Yu, B.; Jiang, C.; Zhang, W. Design and implementation of Peking Opera action scoring system based on human skeleton information. MATEC Web Conf. 2018, 232, 01026.

- Vicon. Available online: https://www.vicon.com/ (accessed on 15 January 2019).

- Optotrak. Available online: https://www.ndigital.com/ (accessed on 15 January 2019).

- Hong, J.H.; Ramos, J.; Dey, A.K. Toward Personalized Activity Recognition Systems With a Semipopulation Approach. IEEE Trans. Hum. Mach. Syst. 2016, 46, 101–112.

- Xsens. Available online: https://www.xsens.com/ (accessed on 15 January 2019).

- Invensense. Available online: https://www.invensense.com/ (accessed on 15 January 2019).

- VMSens. Available online: https://www.vmsens.com (accessed on 15 January 2019).

- Noitom. Available online: http://www.noitom.com.cn/ (accessed on 15 January 2019).

- Decawave. Available online: https://www.decawave.com/ (accessed on 15 January 2019).

- Cornacchia, M.; Ozcan, K.; Zheng, Y.; Velipasalar, S. A Survey on Activity Detection and Classification Using Wearable Sensors. IEEE Sens. J. 2017, 17, 386–403.

- Bao, S.D.; Meng, X.L.; Xiao, W.; Zhang, Z.Q. Fusion of Inertial/Magnetic Sensor Measurements and Map Information for Pedestrian Tracking. Sensors 2017, 17, 340.

- Xu, C.; He, J.; Zhang, X.; Yao, C.; Tseng, P.H. Geometrical Kinematic Modeling on Human Motion using Method of Multi-Sensor Fusion. Inf. Fusion 2017, 41, 243–254.

- Zihajehzadeh, S.; Park, E.J. A Novel Biomechanical Model-Aided IMU/UWB Fusion for Magnetometer-Free Lower Body Motion Capture. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 927–938.

- Qiu, S.; Wang, Z.; Zhao, H.; Hu, H. Using Distributed Wearable Sensors to Measure and Evaluate Human Lower Limb Motions. IEEE Trans. Instrum. Meas. 2016, 65, 939–950.

- Fullerton, E.; Heller, B.; Munoz-Organero, M. Recognising human activity in free-living using multiple body-worn accelerometers. IEEE Sens. J. 2017, 17, 5290–5297.

- Chen, C.; Jafari, R.; Kehtarnavaz, N. A survey of depth and inertial sensor fusion for human action recognition. Multimed. Tools Appl. 2017, 76, 4405–4425.

- Nilsson, J.O.; Zachariah, D.; Skog, I.; Händel, P. Cooperative localization by dual foot-mounted inertial sensors and inter-agent ranging. Eur. J. Adv. Signal Process. 2013, 2013, 164.

- Sodhro, A.H.; Shaikh, F.K.; Pirbhulal, S.; Lodro, M.M.; Shah, M.A. Medical-QoS based telemedicine service selection using analytic hierarchy process. In Handbook of Large-Scale Distributed Computing in Smart Healthcare; Springer: Cham, Switzerland, 2017; pp. 589–609.

- Lodro, M.M.; Majeed, N.; Khuwaja, A.A.; Sodhro, A.H.; Greedy, S. Statistical channel modelling of 5G mmWave MIMO wireless communication. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–5.

- Magsi, H.; Sodhro, A.H.; Chachar, F.A.; Abro, S.A.K.; Sodhro, G.H.; Pirbhulal, S. Evolution of 5G in Internet of medical things. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018.

- Sodhro, A.H.; Pirbhulal, S.; Sodhro, G.H.; Gurtov, A.; Muzammal, M.; Luo, Z. A joint transmission power control and duty-cycle approach for smart healthcare system. IEEE Sens. J. 2018.

- Kinect. Available online: https://developer.microsoft.com/en-us/windows/kinect (accessed on 15 January 2019).

- Iyengar, S.; Bonda, F.T.; Gravina, R.; Guerrieri, A.; Fortino, G.; Sangiovanni-Vincentelli, A. A framework for creating healthcare monitoring applications using wireless body sensor networks. In Proceedings of the ICST 3rd International Conference on Body Area Networks, Tempe, AZ, USA, 13–17 March 2008.

- Anwary, A.R.; Yu, H.; Vassallo, M. Optimal Foot Location for Placing Wearable IMU Sensors and Automatic Feature Extraction for Gait Analysis. IEEE Sens. J. 2018, 18, 2555–2567.

- Ghasemzadeh, H.; Jafari, R. Physical Movement Monitoring Using Body Sensor Networks: A Phonological Approach to Construct Spatial Decision Trees. IEEE Trans. Ind. Inf. 2011, 7, 66–77.

- Yun, X.; Bachmann, E.R. Design, Implementation, and Experimental Results of a Quaternion-Based Kalman Filter for Human Body Motion Tracking. IEEE Int. Conf. Robot. Autom. 2006, 22, 1216–1227.

- Zhao, S.; Shmaliy, Y.S.; Shi, P.; Ahn, C.K. Fusion Kalman/UFIR filter for state estimation with uncertain parameters and noise statistics. IEEE Trans. Ind. Electr. 2017, 64, 3075–3083.

- Briese, D.; Kunze, H.; Rose, G. UWB localization using adaptive covariance Kalman Filter based on sensor fusion. In Proceedings of the 2017 IEEE 17th International Conference on Ubiquitous Wireless Broadband (ICUWB), Salamanca, Spain, 12–15 September 2017.

- Kim, H.; Liu, B.; Goh, C.Y.; Lee, S.; Myung, H. Robust vehicle localization using entropy-weighted particle filter-based data fusion of vertical and road intensity information for a large scale urban area. IEEE Robot. Autom. Lett. 2017, 2, 1518–1524.

- Bai, F.; Vidal-Calleja, T.; Huang, S. Robust Incremental SLAM Under Constrained Optimization Formulation. IEEE Robot. Autom. Lett. 2018, 3, 1207–1214.

- Sebastián, P.S.J.; Virtanen, T.; Garcia-Molla, V.M.; Vidal, A.M. Analysis of an efficient parallel implementation of active-set Newton algorithm. J. Supercomput. 2018.

- Liu, Z.; Zhang, L.; Liu, Q.; Yin, Y.; Cheng, L.; Zimmermann, R. Fusion of magnetic and visual sensors for indoor localization: Infrastructure-free and more effective. IEEE Trans. Multimed. 2017, 19, 874–888.

- Tichavsky, P.; Muravchik, C.H.; Nehorai, A. Posterior Cramér-Rao bounds for discrete-time nonlinear filtering. IEEE Trans. Signal Process. 1998, 46, 1386–1396.

- Qi, Y.; Kobayashi, H.; Suda, H. Analysis of wireless geolocation in a non-line-of-sight environment. IEEE Trans. Wirel. Commun. 2006, 5, 672–681.

- Yang, Z.; Liu, Y. Quality of Trilateration: Confidence Based Iterative Localization. IEEE Trans. Parallel Distrib. Syst. 2010, 21, 631–640.

- Geng, Y.; Pahlavan, K. Design, implementation, and fundamental limits of image and RF based wireless capsule endoscopy hybrid localization. IEEE Trans. Mob. Comput. 2016, 15, 1951–1964.

- Zhang, H.; Dufour, F.; Anselmi, J.; Laneuville, D.; Nègre, A. Piecewise optimal trajectories of observer for bearings-only tracking by quantization. In Proceedings of the 2017 20th International Conference on Information Fusion (Fusion), Xi’an, China, 10–13 July 2017; pp. 1–7.

- Fisher Information. Available online: https://en.wikipedia.org/wiki/Fisher_information (accessed on 15 January 2019).

- Horn, R.A.; Johnson, C.R. Matrix Analysis; Cambridge University Press: Cambridge, UK, 2012.

- Gasparini, M. Markov Chain Monte Carlo in Practice. Technometrics 1999, 39, 338.

- Nilsson, J.O.; Gupta, A.K.; Handel, P. Foot-mounted inertial navigation made easy. In Proceedings of the 2014 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Busan, Korea, 27–30 October 2014.

- Fan, Q.; Zhang, H.; Sun, Y.; Zhu, Y.; Zhuang, X.; Jia, J.; Zhang, P. An Optimal Enhanced Kalman Filter for a ZUPT-Aided Pedestrian Positioning Coupling Model. Sensors 2018, 18, 1404.

- Xu, C.; He, J.; Zhang, X.; Tseng, P.H.; Duan, S. Toward Near-Ground Localization: Modeling and Applications for TOA Ranging Error. IEEE Trans. Antennas Propag. 2017, 65, 5658–5662.

- Deng, C.Y. A generalization of the Sherman–Morrison–Woodbury formula. Appl. Math. Lett. 2011, 24, 1561–1564.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, C.; He, J.; Zhang, X.; Zhou, X.; Duan, S. Towards Human Motion Tracking: Multi-Sensory IMU/TOA Fusion Method and Fundamental Limits. Electronics 2019, 8, 142. https://doi.org/10.3390/electronics8020142
