Multipath Ghost Suppression Based on Generative Adversarial Nets in Through-Wall Radar Imaging

Abstract

1. Introduction

- is robust, suppressing multipath ghosts without requiring accurate wall locations;

- preserves target images even when they overlap with multipath ghosts;

- suppresses multipath ghosts without complicated parameter tuning across different detection scenes; and

- prevents the energy difference between target images from growing, which helps in identifying all targets.

2. Multipath Model

3. Generative Adversarial Model

4. Network Architecture

4.1. Generator G

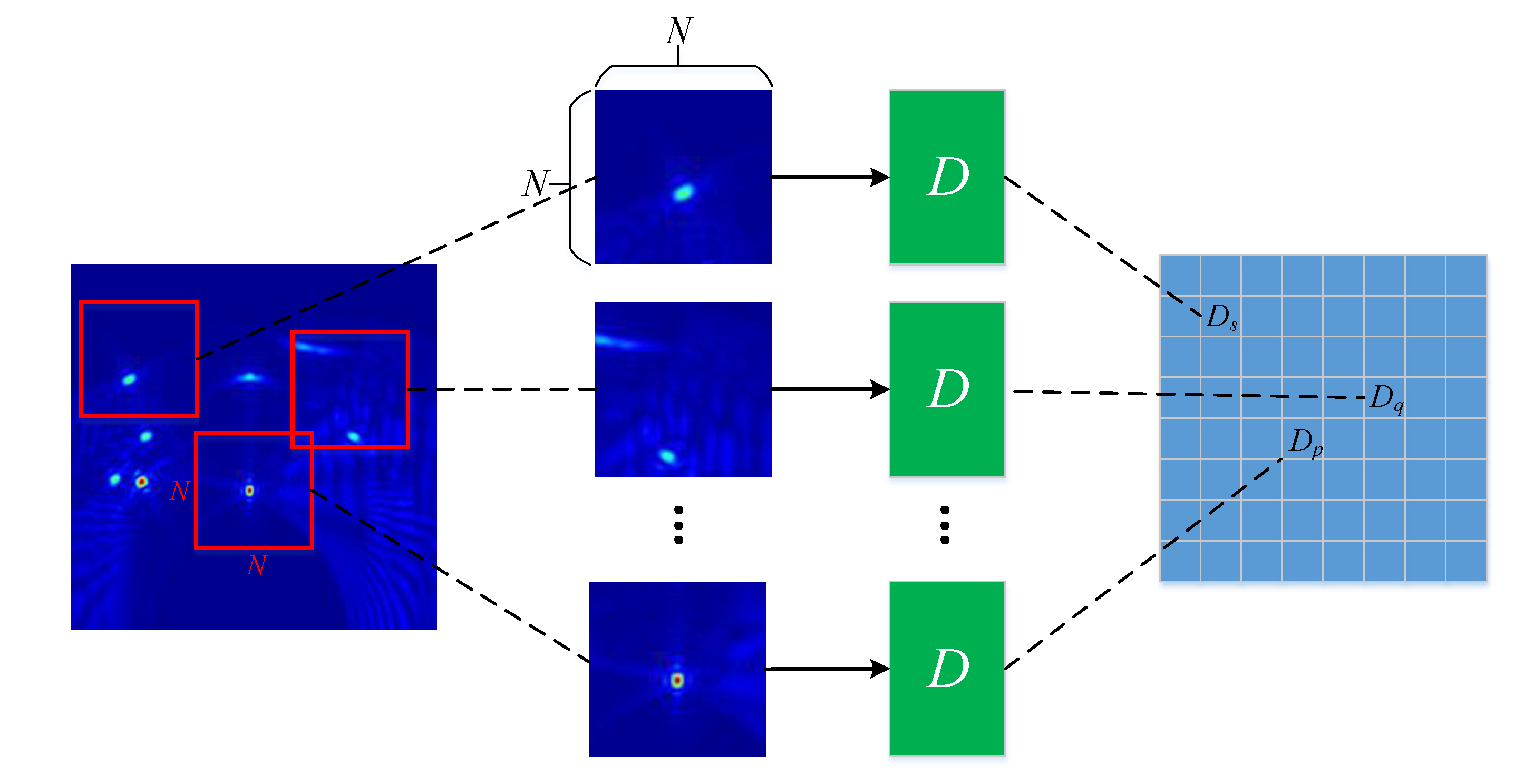

4.2. Discriminator D

4.3. Detailed Architectures of G and D

- Generator architecture (encoder and decoder)

- Discriminator architecture

5. Simulation and Discussion

5.1. Data Preparation

- Dataset 1: The number of targets is a random number ranging from one to four; 1000 samples are used as the training set and 100 samples as the validation set.

- Dataset 2: The number of targets is increased to a random number ranging from ten to twenty; 2000 samples are used as the training set and 200 samples as the validation set.
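The two dataset configurations above can be written down as a small sketch. The names `DatasetSpec` and `sample_target_counts` are hypothetical and introduced only for illustration. The text says only that the number of targets is "a random number" in the given range, so the uniform draw over inclusive integer bounds is an assumption, as is the seed handling.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetSpec:
    """Hypothetical container for the dataset settings listed in the text."""
    name: str
    min_targets: int
    max_targets: int
    n_train: int
    n_val: int


# Values taken from the dataset descriptions above.
DATASET_1 = DatasetSpec("Dataset 1", 1, 4, 1000, 100)
DATASET_2 = DatasetSpec("Dataset 2", 10, 20, 2000, 200)


def sample_target_counts(spec: DatasetSpec, split: str, seed: int = 0) -> list[int]:
    """Draw one target count per sample for the requested split.

    Assumption: counts are uniform over the inclusive integer range;
    the source does not state the distribution.
    """
    if split not in ("train", "val"):
        raise ValueError("split must be 'train' or 'val'")
    rng = random.Random(seed)
    n_samples = spec.n_train if split == "train" else spec.n_val
    return [rng.randint(spec.min_targets, spec.max_targets) for _ in range(n_samples)]
```

For example, `sample_target_counts(DATASET_2, "val")` would return 200 counts, each between ten and twenty. Generating the radar images themselves is outside the scope of this sketch.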

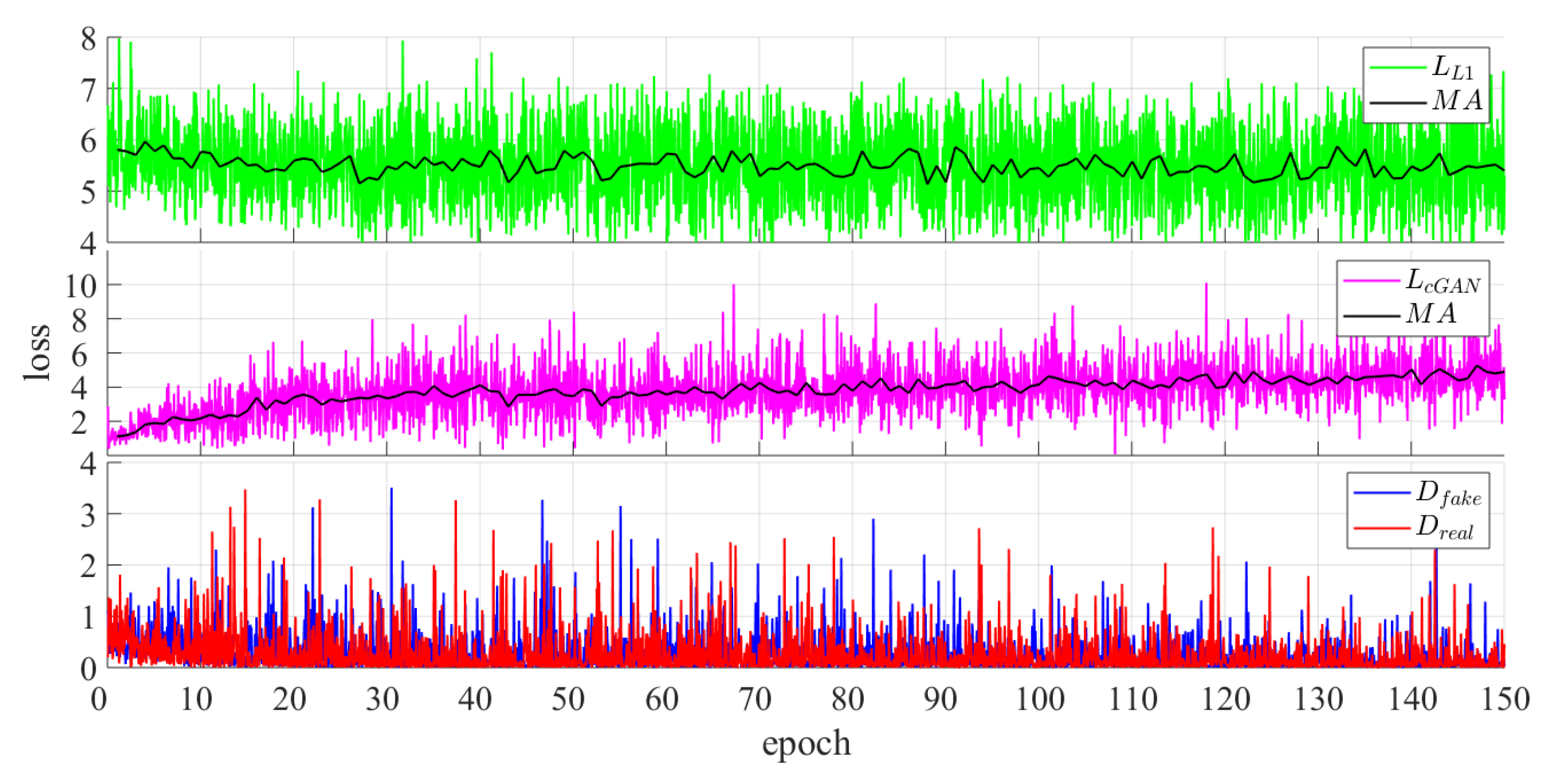

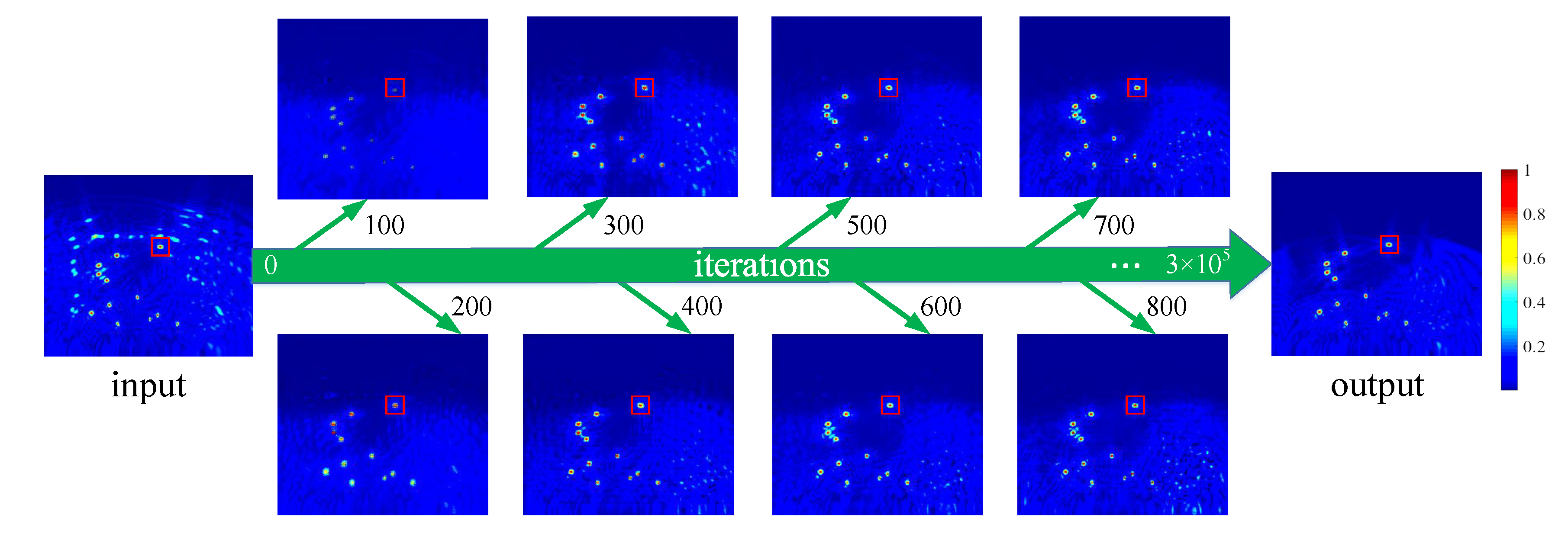

5.2. Training Details

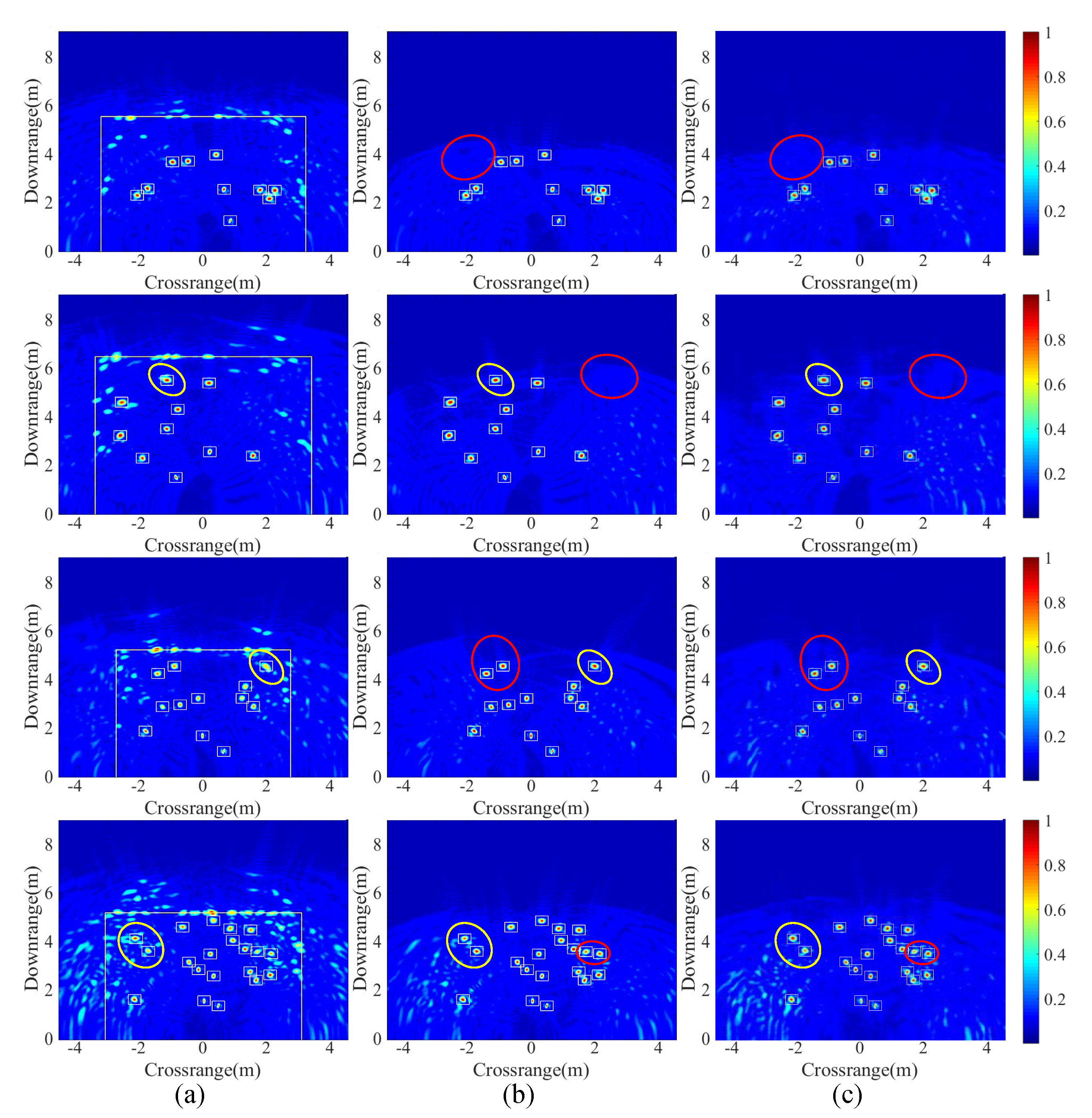

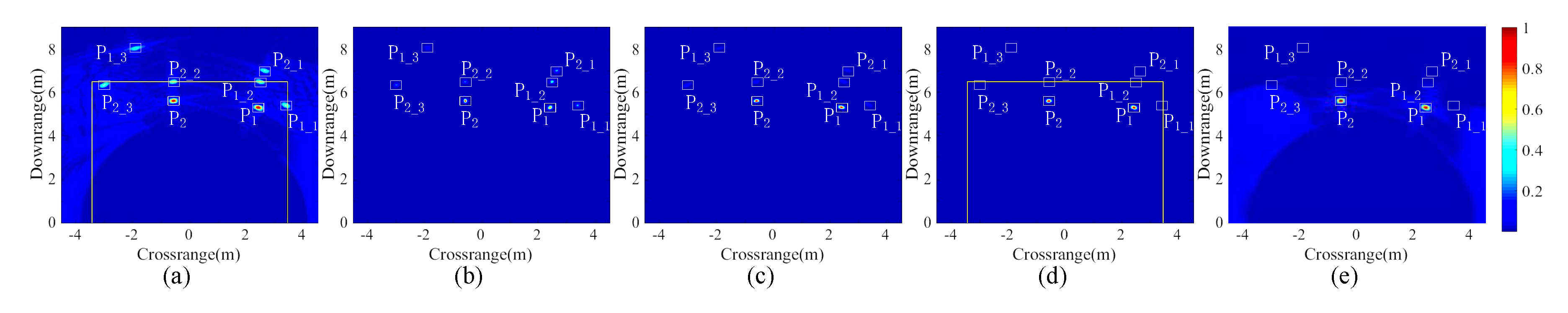

5.3. Result Analysis

5.4. Comparison of Different Methods

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | One Error | Two Errors or More |
|---|---|---|
| GAN trained on Dataset 1 | 0.5% | 0% |
| GAN trained on Dataset 2 | 2.5% | 0.5% |

| Method | Computation Times |
|---|---|
| PCF | 1.09 |
| Subaperture-fusion | 0.62 |
| Imaging-dictionary | 1.65 |
| The proposed method | 0.65 |
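The computation times in the table can be compared as ratios against the proposed method. The sketch below is only a convenience for reading the table: the helper name `relative_times` is hypothetical, and because the table does not state a unit, the code works with unit-free ratios.

```python
# Computation times copied from the table above (unit not stated in the source).
COMPUTATION_TIMES = {
    "PCF": 1.09,
    "Subaperture-fusion": 0.62,
    "Imaging-dictionary": 1.65,
    "The proposed method": 0.65,
}


def relative_times(times: dict[str, float], baseline: str) -> dict[str, float]:
    """Express each method's time as a multiple of the baseline method's time."""
    base = times[baseline]
    return {method: t / base for method, t in times.items()}


ratios = relative_times(COMPUTATION_TIMES, "The proposed method")
for method, ratio in ratios.items():
    print(f"{method}: {ratio:.2f}x")
```

Ratios above 1 mark methods slower than the proposed one, and ratios below 1 mark faster ones. On these figures only subaperture-fusion falls slightly below 1.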

| Method | Advantages | Disadvantages |
|---|---|---|
| PCF | 1. Does not require prior wall locations. 2. Does not require complicated tuning parameters. | 1. Poor multipath suppression for SAI. 2. Some target images are degraded, with low SMCR. |
| Subaperture-fusion | Does not require prior wall locations. | 1. Poor suppression of back-wall multipath ghosts. 2. Some target images are degraded, with low SMCR. 3. Requires complicated tuning parameters. |
| Imaging-dictionary | 1. Excellent multipath suppression. 2. Does not require complicated tuning parameters. | Requires prior wall locations. |
| The proposed method | 1. Excellent multipath suppression. 2. Does not require prior wall locations. 3. Does not degrade target images. 4. Does not require complicated tuning parameters. | Requires a labeled dataset. |

| Method | | | | | | |
|---|---|---|---|---|---|---|
| Original image | 5.89 | 5.90 | 8.48 | 6.37 | 5.74 | 6.00 |
| PCF | 13.09 | 6.99 | 35.11 | 10.47 | 12.81 | 12.53 |
| Subaperture-fusion | 17.41 | 17.15 | 24.93 | 20.89 | 17.41 | 18.42 |
| Imaging-dictionary | 103.56 | 119.37 | 172.02 | 112.93 | 75.06 | 123.06 |
| The proposed method | 32.45 | 54.49 | 56.49 | 43.49 | 31.27 | 27.06 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jia, Y.; Song, R.; Chen, S.; Wang, G.; Guo, Y.; Zhong, X.; Cui, G. Multipath Ghost Suppression Based on Generative Adversarial Nets in Through-Wall Radar Imaging. Electronics 2019, 8, 626. https://doi.org/10.3390/electronics8060626
