SARM: Salah Activities Recognition Model Based on Smartphone

Abstract

1. Introduction

- To recognize and count the three important activities in a Rakat, namely Qayam (ST), Ruku (STB), and Sujood (STP), in the prayer of an Alzheimer’s patient using six low-complexity features on different window sizes;

- To provide a model that can be used to implement a mobile application that directs the Namazi (worshipper) to perform the prayer according to the code of Islam without any human intervention;

- With correct counting and recognition of activities, an Alzheimer’s patient can be corrected during prayer and can complete it with confidence that no mistake or confusion occurred.

2. Related Work

3. Salah Activities Recognition Model (SARM)

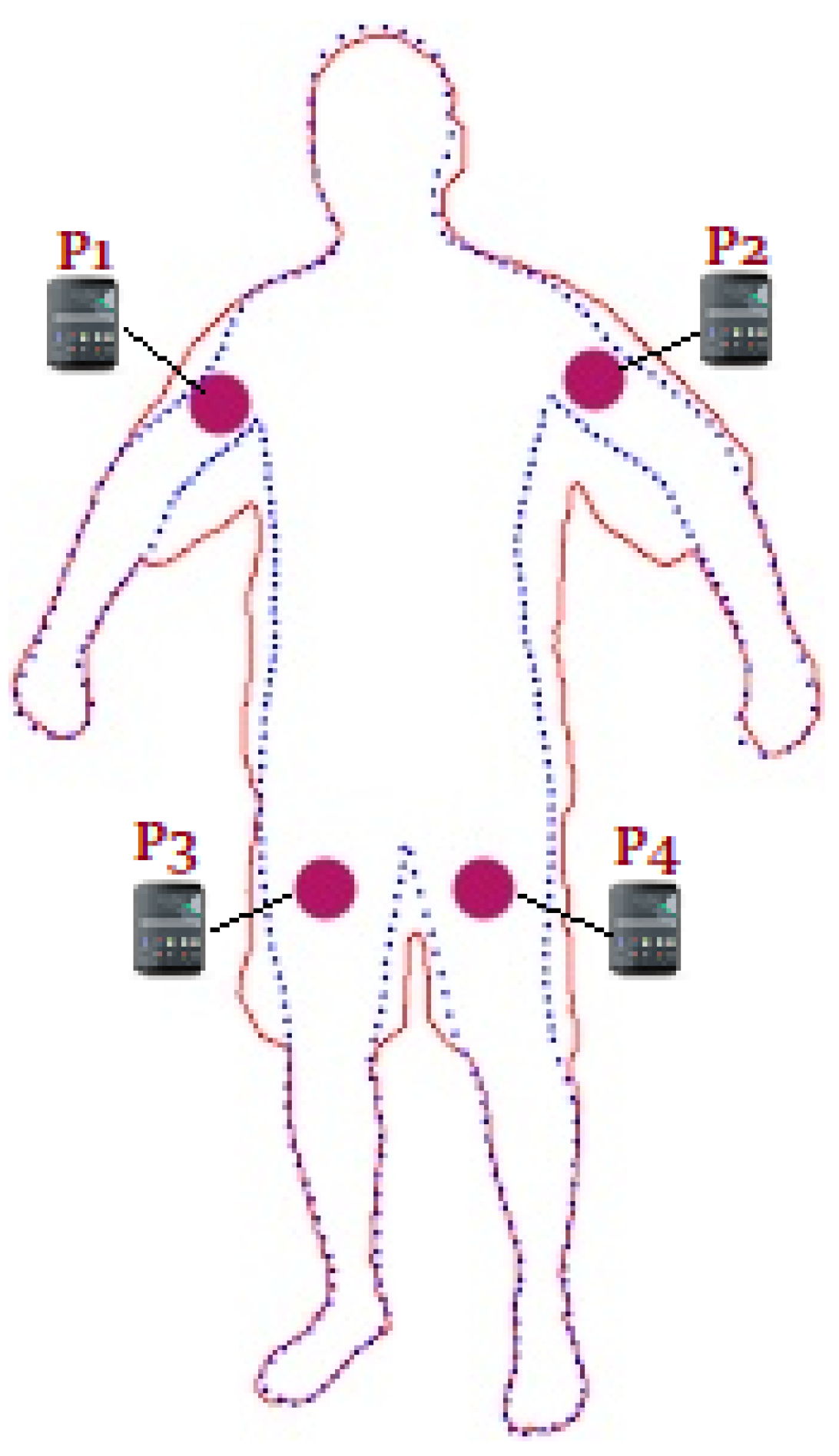

3.1. Data Collection

- First, Salah is dependent on recitation. The duration of each activity (ST, STB, and STP) depends on how much time the user takes to recite during Salah (prayer). Some recite at a slow pace, while others are a bit faster. The estimated duration of ST is from 12 to 20 s, whereas for STB and STP the average durations are from 5 to 9 s.

- The variation is gender-dependent: men are obliged to place their hands on the waist, and women on the chest.

- Initially, we connected the mobile phone to the laptop MATLAB cloud.

- We developed a script for data acquisition that performs the following steps:

  - Initialize the mobiledev object.

  - Enable the sensors with a sample rate of 50 Hz.

  - Disable the sensors once the activity is finished.

  - Save the logged data into MAT files.

- We loaded the code script into the MATLAB cloud.

- All the candidates performed each activity (ST, STB, and STP) 20 times, and every instance of an activity was recorded separately. Here, the mobile phone served as a wearable sensor (for data collection) and was worn by our group of ten subjects (two women and eight men). For all 20 repetitions, the subjects were briefed each time before they started the activity, but the order in which the activities were performed was left entirely to the subjects.

- ST is a standing activity; here, men place their hands on their navel and women place their hands over their chest. As Salah is recited in a whisper, we were unable to track the progress and completion of ST. For this reason, we asked our volunteers to rest their hands for a moment before starting the second phase. These indicative samples were later removed from the experimental data and were not considered in our findings. The duration of ST depended on the recitation speed of the user (about 12 to 20 s). To record ST, we executed the MATLAB script. When the performer finished the activity, we disabled the sensors through the script and saved the data. The same procedure was followed to record the ST activity 20 times for each candidate.

- In STB, a user moves from a standing position to a bowing position, rests there for roughly 4 to 7 s (depending on the user's recitation speed), and then moves back to the standing position; all of this counts as one series of STB activity. We executed the script when the user started the activity, and after recording the first series of STB activity, we disabled the sensors and saved the data. In the same way, 20 STB series were recorded for each candidate.

- In STP, the user moves from a standing position to a prostration position, stays there for 5 to 9 s, and then, after performing the prostration recitation, moves from prostration to a sitting position; this whole sequence forms one complete cycle of STP activity. For each user, we recorded the STP activity 20 times.

3.2. Data Preprocessing

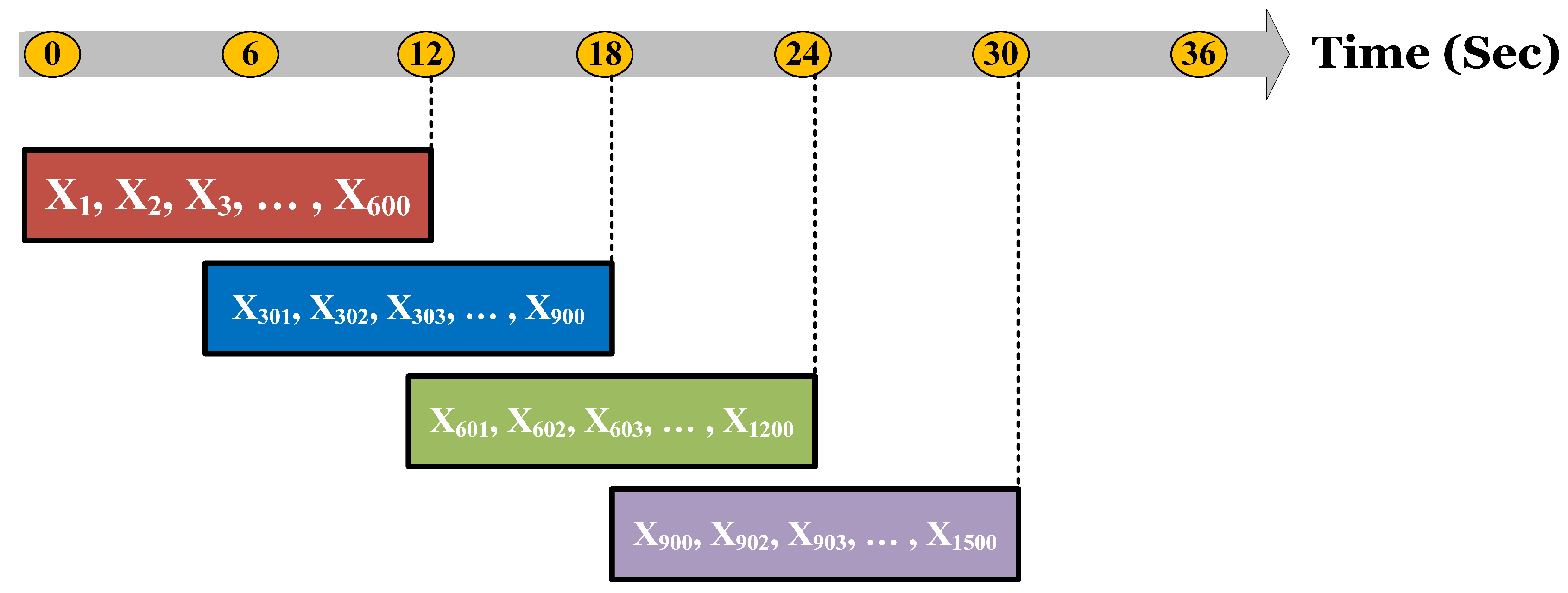

3.3. Segmentation

3.4. Feature Extraction

Algorithm 1: SARM

4. Evaluation

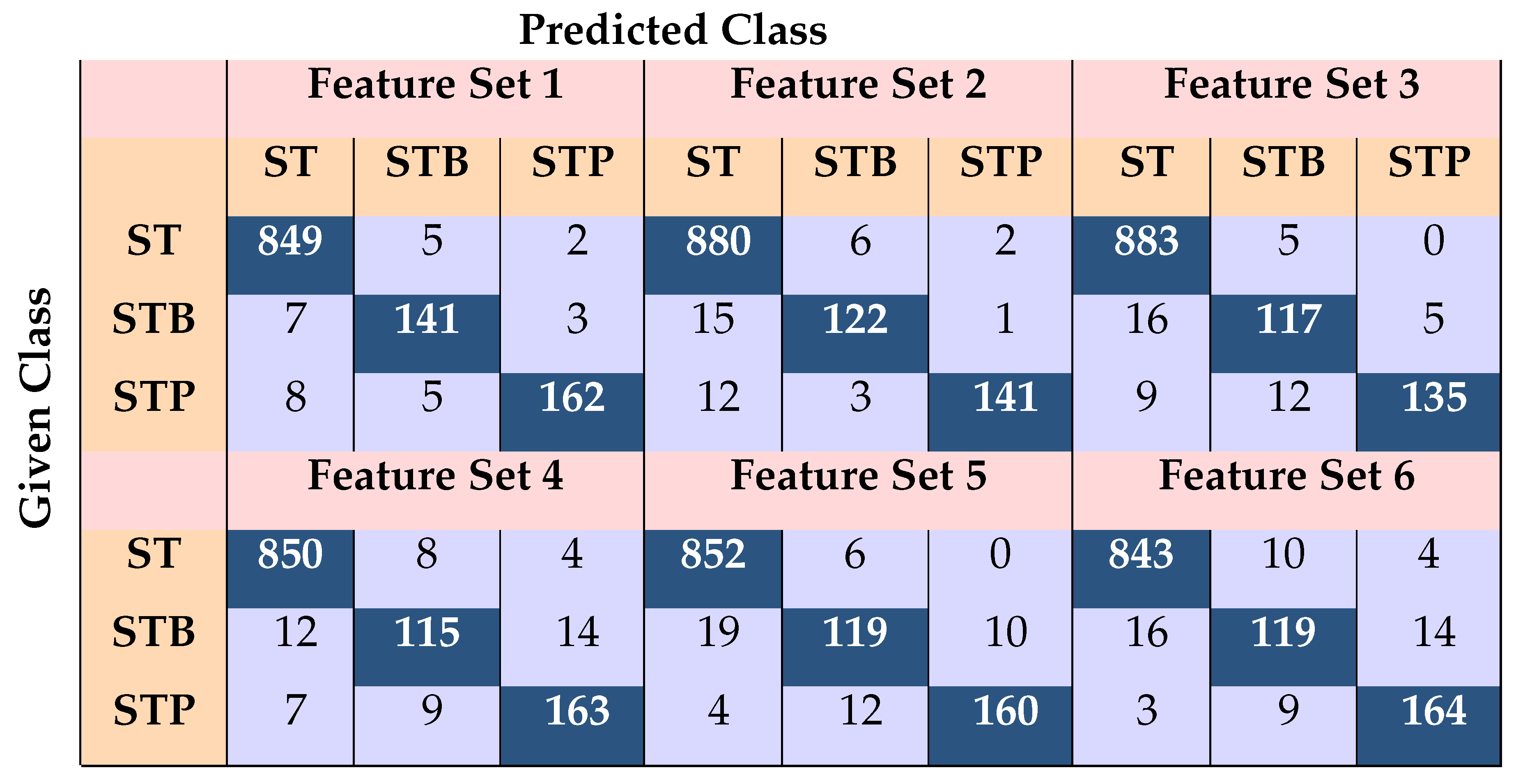

4.1. Performance Evaluation of RF Classifier at Three Body Positions

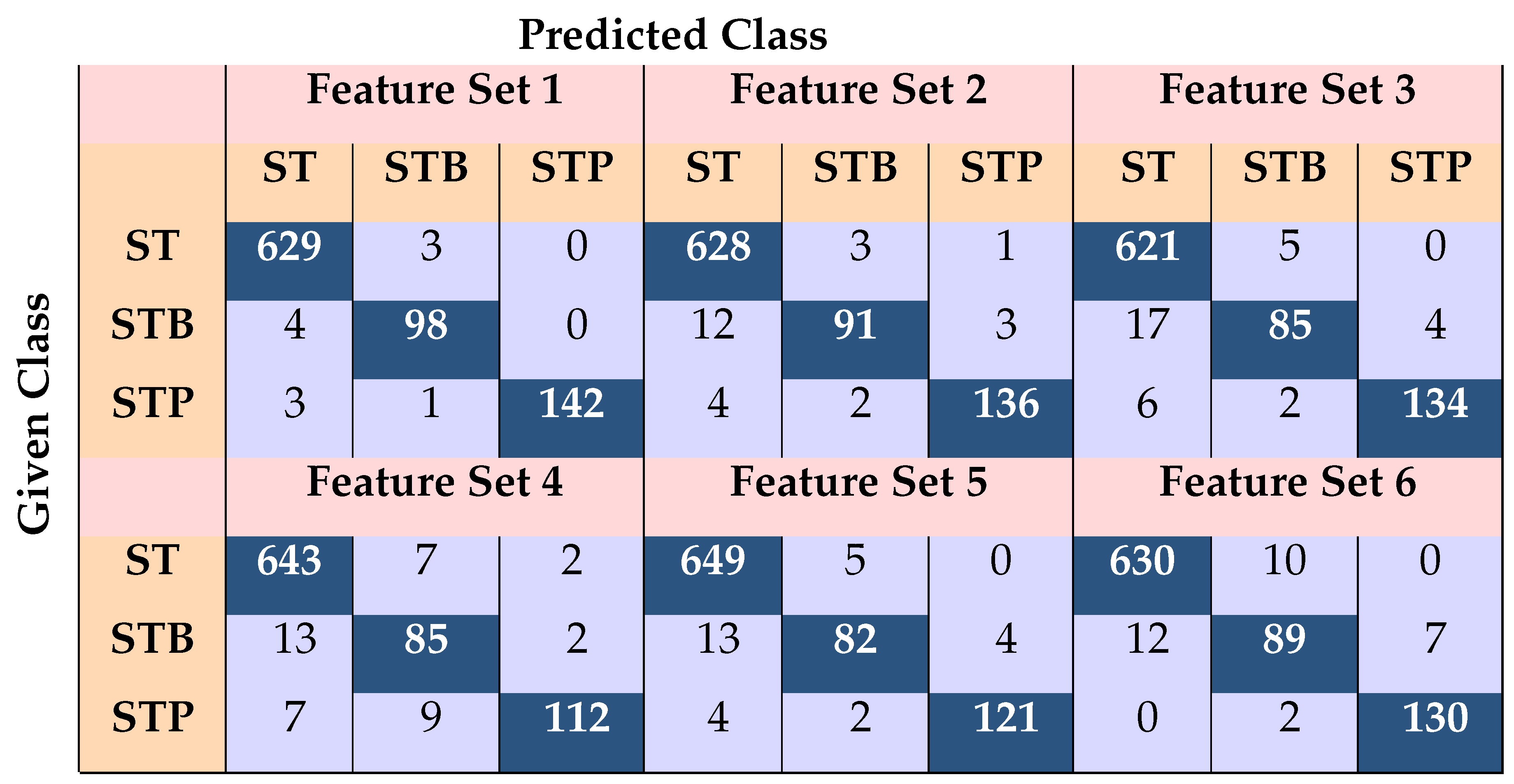

- On the first feature set (fs1), ST activity was recognized with a satisfactory performance of 98%–99% at all positions. For the other two activities, the best performance was achieved at the TP position (96% for STB, 98.6% for STP), which leads the other two positions by nearly 3% for STB activity and about 2% for STP activity.

- On fs2, the average precision decreases relative to fs1, mainly because of a drop for STB activity. The corresponding confusion matrices for LH (Table 5), RH (Table 6), and TP (Table 7) indicate that STB activity is mainly confused with ST activity. ST activity shows no increase or decrease in performance, and the behavior of ST and STP activities is similar or varies only slightly (see Figure 6c). On fs2, however, the LH position leads the other two for STB activity (around 92.2%) by a margin of about 2%.

- With fs3, the overall performance degrades further, especially for STB and STP activities. This decline can be seen in the confusion matrices and in Figure 6c. STB activity is mainly confused with ST activity, whereas STP activity is primarily confused with STB activity. In terms of body position, TP leads for STP (93.7%), LH leads for STB (87.7%), and RH leads for ST (98.2%) activity recognition.

- With fs4, the ranking of body positions remains the same in most cases: ST activity is recognized best at the RH position, STB at LH, and STP at TP. Overall, however, performance decreases by about 1% to 2% relative to fs3.

- With fs5, our experiment showed an improvement due to the addition of the percentile feature to the previous four features. After the continuous decrements, the performance trend went up, particularly for STP activity. This improvement can be observed at the RH position (see the confusion matrix in Table 6) and at the TP position (see the confusion matrix in Table 7). Similar trends are observed for fs6.
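The per-activity figures discussed above are derived from confusion matrices. As a minimal sketch, assuming the standard definitions of precision, recall, and F-Measure and a matrix whose rows are the given class and whose columns are the predicted class, the computation could look as follows. The function name `per_class_metrics` and the counts in `EXAMPLE` are hypothetical and are not the paper's results.

```python
def per_class_metrics(confusion, labels):
    """Return {label: (precision, recall, f_measure)} for a square confusion matrix.

    confusion[i][j] is the number of windows whose given class is labels[i]
    and whose predicted class is labels[j] (an assumed layout).
    """
    metrics = {}
    for k, label in enumerate(labels):
        true_positive = confusion[k][k]
        predicted_as_k = sum(row[k] for row in confusion)  # column sum
        given_as_k = sum(confusion[k])                      # row sum
        precision = true_positive / predicted_as_k if predicted_as_k else 0.0
        recall = true_positive / given_as_k if given_as_k else 0.0
        denominator = precision + recall
        f_measure = 2 * precision * recall / denominator if denominator else 0.0
        metrics[label] = (precision, recall, f_measure)
    return metrics


# Hypothetical counts for illustration only, not taken from the paper.
LABELS = ["ST", "STB", "STP"]
EXAMPLE = [
    [98, 2, 0],   # given ST
    [6, 90, 4],   # given STB
    [1, 5, 94],   # given STP
]
RESULT = per_class_metrics(EXAMPLE, LABELS)

for name, (precision, recall, f_measure) in RESULT.items():
    print(f"{name}: precision={precision:.3f} recall={recall:.3f} F={f_measure:.3f}")
```

In this toy matrix, the six STB windows predicted as ST lower both the recall of STB and the precision of ST, which is the kind of STB/ST confusion described above.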

4.2. Recognition of Activities by Using KNN and DT at Three Body Positions

- To recognize ST activity using DT on fs1, the performance at the RH body position was slightly better than at the other two positions, at 99%. The results were also satisfactory for the other two positions, at 98.3% and 98% for LH and TP, respectively. Using fs5 with the same classifier, the average performance of overall activity recognition dropped by about 1%. Figure 7b shows the performance reduction on fs5 compared to fs1 at the RH and TP body positions. On fs5, however, the LH position performed best of the three positions, at 98.28%; the difference between the RH and LH positions was about 1.08%, and the difference between LH and TP was about 0.84%. With the KNN classifier, the performance for ST activity changed little compared to the DT results, although slightly larger differences among the body positions were observed on fs1. For example, the best performance was obtained at the RH position, around 98.60%, whereas at the LH and TP positions the F-Measure values were about 96% and 97%. With fs5, the KNN performance improved at the LH and TP body positions relative to fs1: on fs1 the F-Measure was about 96.87%, but on fs5 it was about 98%, and the same trend was observed at TP. For more detail, the performance is shown in the graphs in Figure 7.

- For STB activity, the overall performance of DT was better than that of the KNN classifier. With DT on fs1, recognition was better at the RH position than at the other two body positions. With fs5, however, the performance at RH dropped unexpectedly to about 73.22%, as can be seen in Figure 7b. Therefore, fs1 appears to be a better option than fs5 for recognizing STB activity. Comparing body positions for DT on fs5, the performance at TP and LH was considerably higher than at the RH position (TP = 82.68%, LH = 83.33%). With the KNN classifier, STB recognition was highest at the RH position using fs1, while on fs5 the TP position led with 83.89% and the LH position gave the lowest result, about 8.16%, as seen in Figure 7d.

- For STP activity, the trend across body positions differed from that of the other activities: whereas ST and STB were recognized best at the RH position, STP was recognized best at the TP position in all cases. In terms of the classifiers, KNN produced better results than DT on fs5, while DT performed better on fs1. The performance differences between DT and KNN on fs1 were about 0.99% at RH, 2% at LH, and 1% at TP.
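To make the KNN side of this comparison concrete, the following is a minimal sketch of k-nearest-neighbour classification over per-window feature vectors. It is not the authors' implementation: the value of `k`, the Euclidean distance, and the two-feature training vectors are assumptions chosen purely for illustration.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training vectors."""
    # Sort training points by Euclidean distance to the query.
    neighbours = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


# Hypothetical (max, min) feature vectors per window, not measured data.
TRAIN_X = [
    (9.9, 9.5), (9.8, 9.4),    # ST-like windows
    (9.7, 2.1), (9.6, 1.8),    # STB-like windows
    (9.8, -3.0), (9.9, -2.5),  # STP-like windows
]
TRAIN_Y = ["ST", "ST", "STB", "STB", "STP", "STP"]

PREDICTED_A = knn_predict(TRAIN_X, TRAIN_Y, (9.7, 1.9))
PREDICTED_B = knn_predict(TRAIN_X, TRAIN_Y, (9.85, 9.45))
print(PREDICTED_A, PREDICTED_B)
```

Because KNN compares raw distances between feature vectors, adding or removing features (for example moving from fs1 to fs5) changes which windows count as neighbours, which is one plausible reason its ranking of body positions can shift between feature sets.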

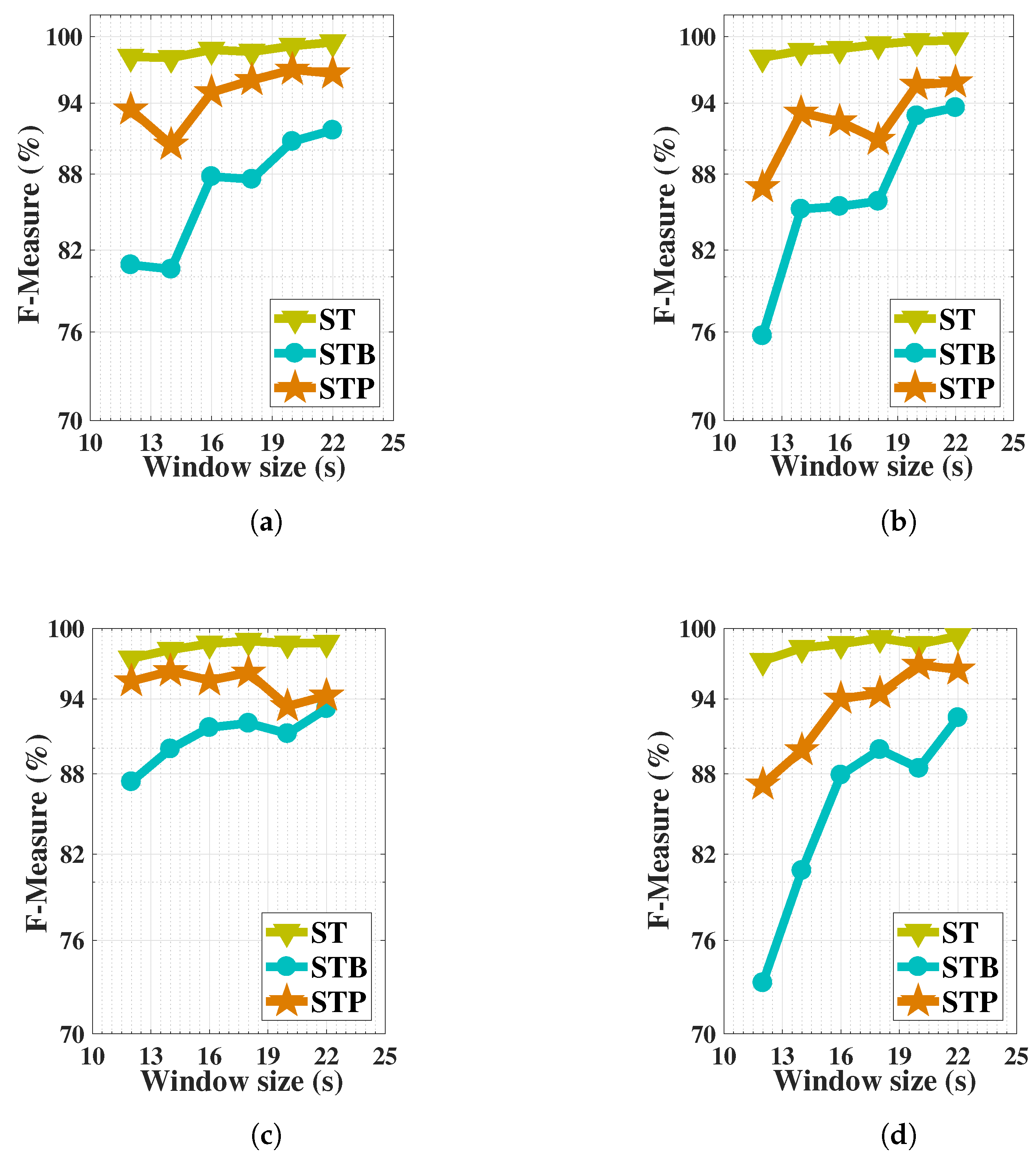

4.3. Influence of Window Size on the Recognition of Activities

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Recognition of Activities by Using Naive Bayes at Three Body Positions

References

- Rashidi, P.; Mihailidis, A. A survey on ambient-assisted living tools for older adults. IEEE J. Biomed. Health Inform. 2013, 17, 579–590.

- San-Segundo, R.; Blunck, H.; Moreno-Pimentel, J.; Stisen, A.; Gil-Martín, M. Robust Human Activity Recognition using smartwatches and smartphones. Eng. Appl. Artif. Intell. 2018, 72, 190–202.

- Kwapisz, J.; Weiss, G.; Moore, S. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. 2011, 12, 74–82.

- Lam, K.Y.; Tsang, N.W.H.; Han, S.; Zhang, W.; Ng, J.K.Y.; Nath, A. Activity tracking and monitoring of patients with alzheimer’s disease. Multimed. Tools Appl. 2015, 76.

- Khan, W.Z.; Xiang, Y.; Aalsalem, M.Y.; Arshad, Q. Mobile phone sensing systems: A survey. IEEE Commun. Surv. Tutorials 2013, 15, 402–427.

- Ma, Y.; Xu, B.; Bai, Y.; Sun, G.; Zhu, R. Daily mood assessment based on mobile phone sensing. In Proceedings of the 2012 9th International Workshop on Wearable and Implantable Body Sensor Networks, London, UK, 9–12 May 2012; pp. 142–147.

- Shoaib, M.; Bosch, S.; Incel, O.; Scholten, H.; Havinga, P. A Survey of Online Activity Recognition Using Mobile Phones. Sensors 2015, 15, 2059–2085.

- König, A.; Crispim Junior, C.F.; Derreumaux, A.; Bensadoun, G.; Petit, P.D.; Bremond, F.; David, R.; Verhey, F.; Aalten, P.; Robert, P. Validation of an automatic video monitoring system for the detection of instrumental activities of daily living in dementia patients. J. Alzheimers Dis. 2015, 44, 675–685.

- Liciotti, D.; Frontoni, E.; Zingaretti, P.; Bellotto, N.; Duckett, T. HMM-based Activity Recognition with a Ceiling RGB-D Camera. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017.

- Cook, D.J.; Augusto, J.C.; Jakkula, V.R. Ambient intelligence: Technologies, applications, and opportunities. Pervasive Mob. Comput. 2009, 5, 277–298.

- Cook, D.J.; Das, S.K. How smart are our environments? An updated look at the state of the art. Pervasive Mob. Comput. 2007, 3, 53–73.

- Lara, D.; Labrador, M.A. A Survey on Human Activity Recognition using. IEEE Commun. Surv. Tutorials 2013, 15, 1192–1209.

- Köping, L.; Shirahama, K.; Grzegorzek, M. A general framework for sensor-based human activity recognition. Comput. Biol. Med. 2018, 95, 248–260.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. Energy Efficient Smartphone-Based Activity Recognition using Fixed-Point Arithmetic. Special Session in Ambient Assisted Living: Home Care. J. Univers. Comput. Sci. 2013, 19, 1295–1314.

- Martín, H.; Bernardos, A.M.; Iglesias, J.; Casar, J.R. Activity logging using lightweight classification techniques in mobile devices. Pers. Ubiquitous Comput. 2013, 17, 675–695.

- Bayat, A.; Pomplun, M.; Tran, D.A. A study on human activity recognition using accelerometer data from smartphones. Procedia Comput. Sci. 2014, 34, 450–457.

- Hassan, M.M.; Uddin, M.Z.; Mohamed, A.; Almogren, A. A robust human activity recognition system using smartphone sensors and deep learning. Future Gener. Comput. Syst. 2018, 81, 307–313.

- Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. Complex human activity recognition using smartphone and wrist-worn motion sensors. Sensors 2016, 16, 1–24.

- Siirtola, P.; Röning, J. Recognizing Human Activities User-independently on Smartphones Based on Accelerometer Data. Int. J. Interact. Multimed. Artif. Intell. 2012, 1, 38.

- Schindhelm, C.K. Activity recognition and step detection with smartphones: Towards terminal based indoor positioning system. In Proceedings of the IEEE International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Sydney, Australia, 9–12 September 2012; pp. 2454–2459.

- Lara, O.D.; Labrador, M.A. A mobile platform for real-time human activity recognition. In Proceedings of the Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 14–17 January 2012; pp. 667–671.

- Kose, M.; Incel, O.D.; Ersoy, C. Online Human Activity Recognition on Smart Phones. In Proceedings of the 2nd International Workshop on Mobile Sensing: From Smartphones and Wearables to Big Data, Beijing, China, 16–20 April 2012; pp. 11–15, ISBN 978-1-4503-1227-1.

- Gomes, J.B.; Krishnaswamy, S.; Gaber, M.M.; Sousa, P.A.C.; Menasalvas, E. MARS: A personalised mobile activity recognition system. In Proceedings of the 2012 IEEE 13th International Conference on Mobile Data Management (MDM 2012), Bengaluru, Karnataka, India, 23–26 July 2012; pp. 316–319.

- Lane, N.; Mohammod, M.; Lin, M.; Yang, X.; Lu, H.; Ali, S.; Doryab, A.; Berke, E.; Choudhury, T.; Campbell, A. BeWell: A Smartphone Application to Monitor, Model and Promote Wellbeing. In Proceedings of the 5th International ICST Conference on Pervasive Computing Technologies for Healthcare, Dublin, Ireland, 23–26 May 2011.

- Frank, J.; Mannor, S.; Precup, D. Activity recognition with mobile phones. Mach. Learn. Knowl. Discov. Databases 2011, 6913, 2–5.

- Khan, A.M.; Siddiqi, M.H.; Lee, S.W. Exploratory data analysis of acceleration signals to select light-weight and accurate features for real-time activity recognition on smartphones. Sensors 2013, 13, 13099–13122.

- Khan, A.M.; Tufail, A.; Khattak, A.M.; Laine, T.H. Activity recognition on smartphones via sensor-fusion and KDA-based SVMs. Int. J. Distrib. Sens. Netw. 2014, 10, 503291.

- Vo, Q.V.; Hoang, M.T.; Choi, D. Personalization in mobile activity recognition system using-medoids clustering algorithm. Int. J. Distrib. Sens. Netw. 2013, 9, 315841.

- Dernbach, S.; Das, B.; Krishnan, N.C.; Thomas, B.L.; Cook, D.J. Simple and Complex Activity Recognition through Smart Phones. In Proceedings of the 2012 Eighth International Conference on Intelligent Environments, Guanajuato, Mexico, 26–29 June 2012; pp. 214–221.

- Chen, Y.; Shen, C. Performance Analysis of Smartphone-Sensor Behavior for Human Activity Recognition. IEEE Access 2017, 5, 3095–3110.

- Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.S. Human Activity Recognition via an Accelerometer-Enabled-Smartphone Using Kernel Discriminant Analysis. In Proceedings of the 2010 5th International Conference on Future Information Technology, Busan, Korea, 21–23 May 2010; pp. 1–6.

- Sztyler, T.; Stuckenschmidt, H.; Petrich, W. Position-aware activity recognition with wearable devices. Pervasive Mob. Comput. 2017, 38, 281–295.

- Al Huda, F.; Tolle, H.; Andrie Asmara, R. Realtime Online Daily Living Activity Recognition Using Head-Mounted Display. Int. J. Interact. Mob. Technol. 2017, 11, 67.

- Shoaib, M.; Scholten, H.; Havinga, P. Towards Physical Activity Recognition Using Smartphone Sensors. In Proceedings of the 2013 IEEE 10th International Conference on Ubiquitous Intelligence and Computing and 2013 IEEE 10th International Conference on Autonomic and Trusted Computing, Vietri sul Mare, Italy, 18–21 December 2013; pp. 80–87.

- Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical Human Activity Recognition Using Wearable Sensors. Sensors 2015, 15, 31314–31338.

- Fahim, M.; Fatima, I.; Lee, S.; Lee, Y.K. Daily Life Activity Tracking Application for Smart Homes using Android Smartphone. In Proceedings of the 2012 14th International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 19–22 February 2012; pp. 241–245.

- Huynh, T.; Schiele, B. Analyzing Features for Activity Recognition. In Proceedings of the 2005 Joint Conference on Smart Objects and Ambient Intelligence: Innovative Context-Aware Services: Usages and Technologies, Grenoble, France, 12–14 October 2005; pp. 1–6.

- Tahavori, F.; Stack, E.; Agarwal, V.; Burnett, M.; Ashburn, A.; Hoseinitabatabaei, S.A.; Harwin, W. Physical activity recognition of elderly people and people with parkinson’s (PwP) during standard mobility tests using wearable sensors. In Proceedings of the 2017 International Smart Cities Conference (ISC2), Wuxi, China, 14–17 September 2017; pp. 1–4.

- Banos, O.; Galvez, J.M.; Damas, M.; Pomares, H.; Rojas, I. Window Size Impact in Human Activity Recognition. Sensors 2014, 14, 6474–6499.

- Cornacchia, M.; Ozcan, K.; Zheng, Y.; Velipasalar, S. A Survey on Activity Detection and Classification Using Wearable Sensors. IEEE Sensors J. 2017, 17, 386–403.

- Janidarmian, M.; Fekr, A.R.; Radecka, K.; Zilic, Z. A comprehensive analysis on wearable acceleration sensors in human activity recognition. Sensors 2017, 17, 529.

- Preece, S.J.; Goulermas, J.Y.; Kenney, L.P.; Howard, D. A comparison of feature extraction methods for the classification of dynamic activities from accelerometer data. IEEE Trans. Biomed Eng. 2009, 56, 871–879.

- Figo, D.; Diniz, P.C.; Ferreira, D.R.; Cardoso, J.M.P. Preprocessing techniques for context recognition from accelerometer data. Pers. Ubiquitous Comput. 2010, 14, 645–662.

- Aminian, K.; Robert, P.; Buchser, E.E.; Rutschmann, B.; Hayoz, D.; Depairon, M. Physical activity monitoring based on accelerometry: Validation and comparison with video observation. Med Biol. Eng. Comput. 1999, 37, 304–308.

- Farringdon, J.; Moore, A.; Tilbury, N.; Church, J.; Biemond, P. Wearable sensor badge and sensor jacket for context awareness. In Proceedings of the Digest of Papers, Third International Symposium on Wearable Computers, San Francisco, CA, USA, 18–19 October 1999; pp. 107–113.

- Jeong, D.U.; Kim, S.J.; Chung, W.Y. Classification of posture and movement using a 3-axis accelerometer. In Proceedings of the 2007 International Conference on Convergence Information Technology (ICCIT 2007), Gyeongju, Korea, 21–23 November 2007; pp. 837–844.

- Van Laerhoven, K.; Cakmakci, O. What shall we teach our pants? In Proceedings of the Digest of Papers, Fourth International Symposium on Wearable Computers, Atlanta, GA, USA, 16–17 October 2000; pp. 77–83.

| No | Studied Activities | Sensor Type | Machine Learning Classifier | Source |
|---|---|---|---|---|
| 1 | Ac1–Ac13 | Acc | SVM, ANN | [17] |
| 2 | Ac0–Ac2, Ac4 | Acc | NB, DT, Decision Table | [15] |
| 3 | Ac0–Ac3, Ac4–Ac6, Ac13, Ac15–Ac20 | Acc, LA, Gyro | NB, KNN, DT | [18] |
| 4 | Ac0, Ac5, Ac6, Ac21, Ac22 | Acc | MP, SVM, RF, SL, LMT | [16] |
| 5 | Ac1–Ac6 | Acc | SVM | [14] |
| 6 | Ac0–Ac2, Ac4, Ac14, Ac24 | Acc | KNN, QDA | [19] |
| 7 | Ac1, Ac4–Ac6, Ac13 | Acc, Gyro, light | DT | [20] |
| 8 | Ac0, Ac4, Ac5 | Acc | DT (C4.5) | [21] |
| 9 | Ac0–Ac2, Ac4 | Acc | NB, KNN | [22] |
| 10 | Ac0, Ac4, Ac23, Ac24 | Acc | NB | [23] |
| 11 | Ac0, Ac4, Ac25–Ac27 | Acc, GPS, Microphone | Linear Regression, LR | [24] |
| 12 | Ac0, Ac4, Ac13, Ac28 | Acc | SVM | [25] |
| 13 | Ac0, Ac1, Ac4–Ac6, Ac29 | Acc | ANN | [26] |
| 14 | Ac0–Ac2, Ac4–Ac6, Ac14, Ac37, Ac38, Ac29–Ac31, Ac24 | Acc | SVM, GMM | [27] |
| 15 | Ac4–Ac6, Ac13, Ac14 | Acc | SVM, K-mediods, K-means | [28] |
| 16 | Ac0–Ac3, Ac24, Ac32–Ac36 | Acc, Gyro | MP, NB, BN, Decision Table, BFT, K-star | [29] |
| 17 | Ac4–Ac6, Ac13, Ac28 | Acc, Gyro | KNN, RF, SVM | [30] |
| 18 | Ac0, Ac2, Ac4–Ac6 | Acc | ANN | [31] |
| 19 | Ac0–Ac4, Ac6, Ac28 | Acc, Gyro, Light, MM, sound level data | NB, KNN, ANN, SVM, DT, RF | [32] |
| 20 | Ac0, Ac1, Ac4–Ac6, Ac28 | Acc | ANN | [33] |
| 21 | Ac0–Ac2, Ac4–Ac6 | Acc, GPS, Gyro, MM | NB, SVM, ANN, KNN, LR, RBC, DT | [34] |

| Subject | Weight (kg) | Height (cm) | Average Time (s) Spent on Single ST (C3) | Average Time (s) Spent on Single STB (C4) | Average Time (s) Spent on Single STP (C5) | Total Time (s) of ST (20 × C3) | Total Time (s) of STB (20 × C4) | Total Time (s) of STP (20 × C5) |
|---|---|---|---|---|---|---|---|---|
| S1 | 90 | 177.8 | 16 | 6 | 6 | 320 | 120 | 120 |
| S2 | 56 | 168 | 15 | 4 | 8 | 300 | 80 | 160 |
| S3 | 65 | 173 | 12 | 5 | 7 | 240 | 100 | 140 |
| S4 | 97 | 183 | 15 | 6 | 8 | 300 | 120 | 160 |
| S5 | 75 | 175 | 18 | 5 | 6 | 360 | 100 | 120 |
| S6 | 85 | 178 | 15 | 7 | 7 | 300 | 140 | 140 |
| S7 | 80 | 178.3 | 17 | 5 | 5 | 340 | 100 | 100 |
| S8 | 90 | 175.2 | 20 | 5 | 8 | 400 | 100 | 160 |
| S9 | 70 | 170.6 | 14 | 5 | 5 | 280 | 100 | 100 |
| S10 | 62 | 172.2 | 16 | 6 | 9 | 320 | 120 | 180 |
| Total time (s) of recorded data from each body position | | | | | | 3160 | 1080 | 1380 |
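The totals in the table above follow directly from the per-subject averages and the 20 repetitions per activity. The short sketch below reproduces that arithmetic and, assuming the 50 Hz sample rate held constant throughout each recording (an idealization), also estimates the nominal number of samples per sensor channel. The variable and function names are illustrative only.

```python
SAMPLE_RATE_HZ = 50   # sample rate stated for the data-acquisition script
REPETITIONS = 20      # each activity was performed 20 times per subject

# Average seconds per single (ST, STB, STP) activity, copied from the table.
AVERAGE_SECONDS = {
    "S1": (16, 6, 6), "S2": (15, 4, 8), "S3": (12, 5, 7), "S4": (15, 6, 8),
    "S5": (18, 5, 6), "S6": (15, 7, 7), "S7": (17, 5, 5), "S8": (20, 5, 8),
    "S9": (14, 5, 5), "S10": (16, 6, 9),
}


def total_seconds(average_seconds, repetitions=REPETITIONS):
    """Sum repetitions x average duration over all subjects, per activity."""
    totals = [0, 0, 0]
    for per_subject in average_seconds.values():
        for index, seconds in enumerate(per_subject):
            totals[index] += seconds * repetitions
    return tuple(totals)


TOTALS = total_seconds(AVERAGE_SECONDS)
# Nominal sample counts, assuming an exactly constant 50 Hz rate.
SAMPLES = tuple(seconds * SAMPLE_RATE_HZ for seconds in TOTALS)

for name, seconds, samples in zip(("ST", "STB", "STP"), TOTALS, SAMPLES):
    print(f"{name}: {seconds} s, about {samples} samples")
```

The computed totals of 3160 s, 1080 s, and 1380 s match the last row of the table, so ST contributes roughly three times as much recorded data as STB.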

| Feature set | Features | Short Notation |
|---|---|---|
| 1 | Max | fs1 |
| 2 | Max, Min | fs2 |
| 3 | Max, Min, Median | fs3 |
| 4 | Max, Min, Median, Mean | fs4 |
| 5 | Max, Min, Median, Mean, Percentile | fs5 |
| 6 | Max, Min, Median, Mean, Percentile, Standard Deviation | fs6 |
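The cumulative feature sets in the table above can be sketched as follows: each set fsN takes the first N features computed over one window of sensor samples. This is an illustrative sketch rather than the authors' code. In particular, the choice of the 75th percentile, the use of the population standard deviation, and the window size and step passed to `sliding_windows` are assumptions, since this section does not specify them.

```python
import math
import statistics


def percentile(values, q):
    """q-th percentile (0-100) using linear interpolation between ranks."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("percentile of an empty window is undefined")
    rank = (len(ordered) - 1) * q / 100
    lower, upper = math.floor(rank), math.ceil(rank)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


# Order matches the table: fsN uses the first N entries.
FEATURES = (
    ("max", max),
    ("min", min),
    ("median", statistics.median),
    ("mean", statistics.fmean),
    ("percentile", lambda window: percentile(window, 75)),  # assumed 75th
    ("std", statistics.pstdev),  # assumed population standard deviation
)


def feature_vector(window, feature_set):
    """Return the features of fs1..fs6 (feature_set = 1..6) for one window."""
    if not 1 <= feature_set <= len(FEATURES):
        raise ValueError("feature_set must be between 1 and 6")
    return [function(window) for _, function in FEATURES[:feature_set]]


def sliding_windows(signal, size, step):
    """Split a 1-D signal into fixed-size windows advanced by `step` samples."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


# Hypothetical one-axis signal; real input would be a 50 Hz sensor channel.
signal = [1.0, 2.0, 3.0, 4.0, 5.0, 4.0, 3.0, 2.0]
for window in sliding_windows(signal, size=4, step=2):
    print(feature_vector(window, feature_set=5))
```

Because each feature set extends the previous one, the same per-window computation can serve all six sets by slicing the resulting vector, which keeps the cost on a phone low.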

| Feature | Computational Cost | Storage Requirement | Suitable for Mobile Phone |
|---|---|---|---|
| Max | very low | very low | Yes |
| Min | very low | very low | Yes |
| Median | medium | very low | Yes |
| Mean | very low | very low | Yes |
| Percentile | very low | very low | Yes |
| Standard deviation | very low | very low | Yes |

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 7 | 0 | 18 | 0 | ||||

| STB | 6 | 1 | 95 | 23 | 9 | ||||

| STP | 6 | 11 | 91 | 7 | 29 | ||||

| Recall(%) | |||||||||

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 4 | 1 | 10 | 7 | ||||

| STB | 14 | 5 | 9 | 20 | |||||

| STP | 9 | 6 | 2 | 10 | |||||

| Recall (%) | |||||||||

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 5 | 1 | 14 | 2 | ||||

| STB | 4 | 1 | 14 | 3 | |||||

| STP | 3 | 8 | 3 | 5 | |||||

| Recall (%) | |||||||||

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 6 | 0 | 7 | 2 | ||||

| STB | 11 | 1 | 22 | 10 | |||||

| STP | 7 | 11 | 1 | 12 | |||||

| Recall (%) | |||||||||

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 7 | 3 | 7 | 2 | ||||

| STB | 23 | 8 | 19 | 24 | |||||

| STP | 19 | 11 | 4 | 16 | |||||

| Recall (%) | |||||||||

| Predicted Class | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Feature Set 1 | Feature Set 5 | ||||||||

| ST | STB | STP | Precision (%) | ST | STB | STP | Precision (%) | ||

| Given Class | ST | 2 | 1 | 9 | 0 | ||||

| STB | 24 | 0 | 21 | 1 | |||||

| STP | 6 | 4 | 0 | 3 | |||||

| Recall (%) | |||||||||

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahmad, N.; Han, L.; Iqbal, K.; Ahmad, R.; Abid, M.A.; Iqbal, N. SARM: Salah Activities Recognition Model Based on Smartphone. Electronics 2019, 8, 881. https://doi.org/10.3390/electronics8080881
