Figure 1.

Geometric arrangement of the microphones and the sound source in 2D space.

Figure 2.

Principle of sound angle measurement in 2D space.

Figure 3.

Geometry of the differential-drive mobile robot.

Figure 4.

Instantaneous center of rotation (ICR) of a mobile robot.

Figure 5.

Radius of rotation based on locations of sound sources.

Figure 6.

Driving performance experiment of a mobile robot.

Figure 7.

Comparison of driving with and without curvature on the straight line path.

Figure 8.

Comparison of driving with and without curvature on the S-curved line path.

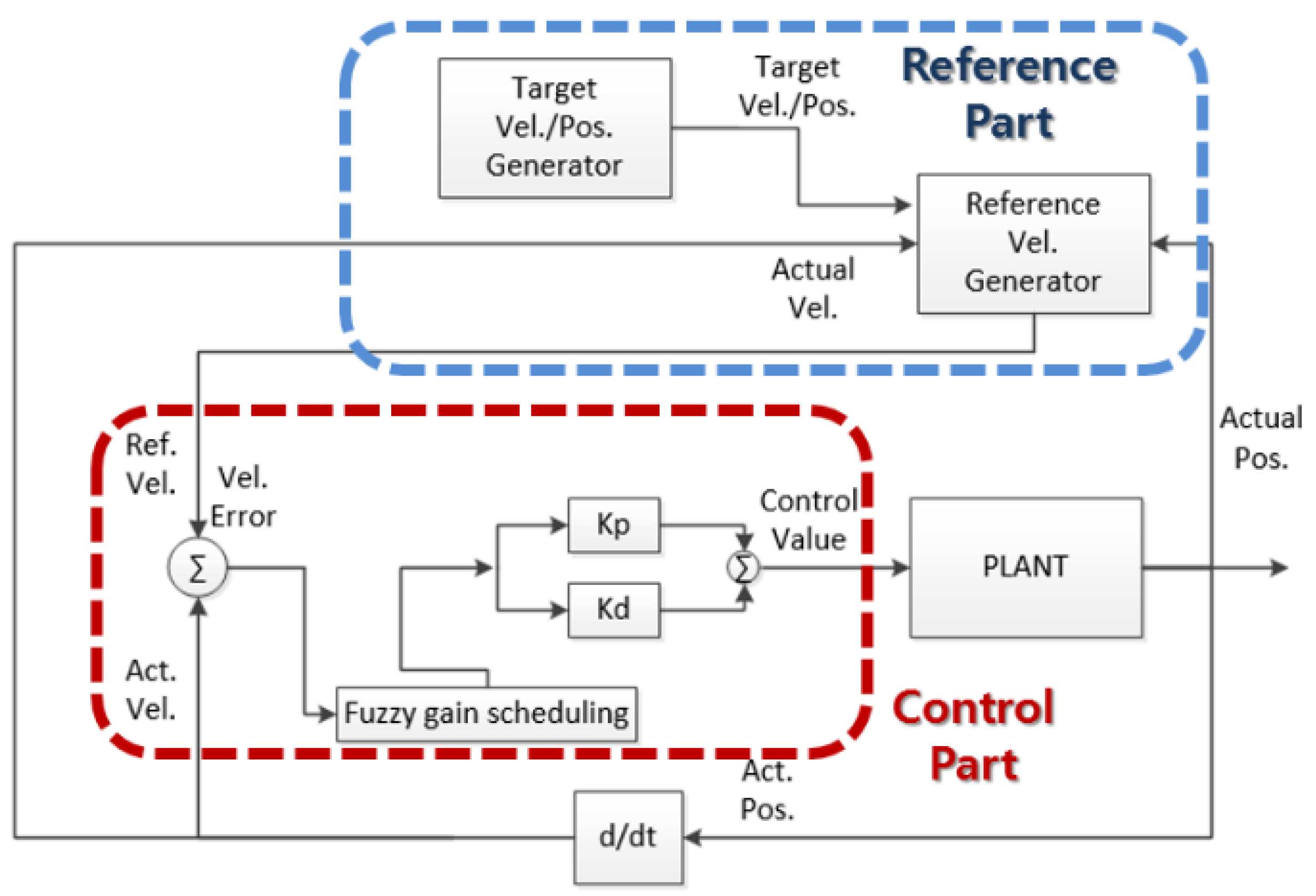

Figure 9.

Structure of the fuzzy gain scheduling PD controller.

Figure 10.

PD control system.

Figure 11.

Structure of the fuzzy inference system.

Figure 12.

Membership functions of error and dot-error.

Figure 13.

Curvature tracking in the static position.

Figure 14.

Velocity profile of curvature tracking in the static position.

Figure 15.

Curvature tracking in the dynamic position.

Figure 16.

Velocity profile of curvature tracking in the dynamic position.

Figure 17.

Comparison of distances between static and dynamic curvature tracking.

Figure 18.

Overall system.

Figure 19.

Flowchart of the overall system.

Figure 20.

Moving object (Master).

Figure 21.

Mobile robot (Slave).

Figure 22.

Experimental environment.

Figure 23.

Straight line estimation.

Figure 24.

Velocity profile of the straight line estimation robot.

Figure 25.

Real-time estimated locations (a), (b), and (c) of the sound sources in the straight trajectory estimation.

Figure 26.

Distance and angle in the straight trajectory experiment.

Figure 27.

S-curved line estimation.

Figure 28.

Velocity profile of the curved line estimation robot.

Figure 29.

Real-time estimated locations (a), (b), and (c) of the sound sources in the curved trajectory estimation.

Figure 30.

Distance and angle in the curved trajectory experiment.

Table 1.

Distance, position error, and driving time for the trajectory without curvature.

| Velocity (m/s) | Distance (m) | Error (m) | Time (ms) |
|---|---|---|---|
| 0.1 | 2.050 | 0.050 | 19570 |
| 0.2 | 2.303 | 0.303 | 10870 |
| 0.3 | 2.388 | 0.388 | 7670 |
| 0.4 | 2.457 | 0.457 | 5770 |
| 0.5 | 2.734 | 0.734 | 5310 |

Table 2.

Rotation radius, distance, position error, and driving time for the trajectory with curvature.

| Velocity (m/s) | Rotation radius (m) | Distance (m) | Error (m) | Time (ms) |
|---|---|---|---|---|
| 0.1 | 1.944 | 3.054 | 0.057 | 32946 |
| 0.2 | 2.174 | 3.415 | 0.171 | 18480 |
| 0.3 | 2.203 | 3.460 | 0.280 | 11962 |
| 0.4 | 2.392 | 3.757 | 0.401 | 9562 |
| 0.5 | 2.488 | 3.908 | 0.656 | 8542 |
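
As a quick consistency check on Table 2, the tabulated distance appears to equal a quarter-circle arc of the tabulated rotation radius (distance ≈ radius × π/2). The sketch below recomputes that ratio from the table values. The quarter-arc reading is an assumption inferred from the numbers, not something the caption states, and the helper name `swept_angle` is hypothetical.

```python
import math

# (rotation radius [m], distance [m]) pairs copied from Table 2.
rows = [
    (1.944, 3.054),
    (2.174, 3.415),
    (2.203, 3.460),
    (2.392, 3.757),
    (2.488, 3.908),
]


def swept_angle(radius_m, distance_m):
    """Angle in radians swept by an arc of the given length and radius.

    Assumes the driven path is a circular arc (an inference from the data).
    """
    return distance_m / radius_m


angles = [swept_angle(r, d) for r, d in rows]

for (r, d), angle in zip(rows, angles):
    print(f"radius={r:.3f} m  distance={d:.3f} m  angle={math.degrees(angle):.2f} deg")
```

If the assumption holds, every printed angle should sit close to 90 degrees, which would mean the distance column is derived from the rotation radius rather than measured independently.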

Table 3.

Root mean square error (RMSE) of the trajectory results.

| Velocity (m/s) | Trajectory | RMSE |
|---|---|---|
| 0.1 | Non-curvature | 0.0025 |
| 0.1 | Curvature | 0.0032 |
| 0.2 | Non-curvature | 0.0918 |
| 0.2 | Curvature | 0.0292 |
| 0.3 | Non-curvature | 0.1505 |
| 0.3 | Curvature | 0.0784 |
| 0.4 | Non-curvature | 0.2088 |
| 0.4 | Curvature | 0.1608 |
| 0.5 | Non-curvature | 0.5388 |
| 0.5 | Curvature | 0.4303 |

Table 4.

Fuzzy inference for .

| | NB | NM | NS | Z | PS | PM | PB |
|---|---|---|---|---|---|---|---|
| NB | B | B | B | B | B | B | B |
| NM | S | B | B | B | B | B | S |
| NS | S | S | B | B | B | S | S |
| Z | S | S | S | B | S | S | S |
| PS | S | S | B | B | B | S | S |
| PM | S | B | B | B | B | B | S |
| PB | B | B | B | B | B | B | B |

Table 5.

Fuzzy inference for .

| | NB | NM | NS | Z | PS | PM | PB |
|---|---|---|---|---|---|---|---|
| NB | S | S | S | S | S | S | S |
| NM | B | B | S | S | S | B | B |
| NS | B | B | B | S | B | B | B |
| Z | B | B | B | B | B | B | B |
| PS | B | B | B | S | B | B | B |
| PM | B | B | S | S | S | B | B |
| PB | S | S | S | S | S | S | S |

Table 6.

Velocity profile along the curvature in the static position.

| Method | Left wheel velocity | Left wheel acceleration | Left wheel distance | Right wheel velocity | Right wheel acceleration | Right wheel distance |
|---|---|---|---|---|---|---|
| Case 1 | 0.342 | 0.342 | 2.528 | 0.258 | 0.258 | 1.9 |

Table 7.

Velocity profile along the curvature in the dynamic position.

| Method | Left wheel velocity | Left wheel acceleration | Left wheel distance | Right wheel velocity | Right wheel acceleration | Right wheel distance |
|---|---|---|---|---|---|---|
| Case 1 | 0.384 | 0.384 | 2.214 | 0.216 | 0.216 | 0.785 |
| Case 2 | 0.216 | 0.216 | 0.785 | 0.384 | 0.384 | 1.413 |

Table 8.

Experimental results for the static and dynamic curvature trajectories.

| Curvature | Control | Distance (m) | Time (s) | Error (m) |
|---|---|---|---|---|
| Case 1 (Static) | CPID | 2.35 | 8 | 0.146 |
| Case 1 (Static) | CFGS-PD | 2.33 | 7.92 | 0.044 |
| Case 2 (Dynamic) | CPID | 2.25 | 8.36 | 0.198 |
| Case 2 (Dynamic) | CFGS-PD | 2.25 | 8.11 | 0.085 |

Table 9.

Results of RMSE.

| Curvature | Control | RMSE |
|---|---|---|
| Case 1 (Static) | CPID | 0.0213 |
| Case 1 (Static) | CFGS-PD | 0.0019 |
| Case 2 (Dynamic) | CPID | 0.0392 |
| Case 2 (Dynamic) | CFGS-PD | 0.0072 |

Table 10.

Hardware specifications of the moving object.

| List | Specification |
|---|---|
| Size (mm) | 352 (W) × 326 (L) × 320 (H) |
| Weight (kg) | 5.3 |
| Distance between wheels (mm) | 290 |
| Radius of wheel (mm) | 60 |

Table 11.

Hardware specifications of a mobile robot.

| List | Specification |
|---|---|
| Size (mm) | 280 (W) × 350 (L) × 260 (H) |
| Weight (kg) | 4.5 |
| Distance between wheels (mm) | 289 |
| Radius of wheel (mm) | 60 |

Table 12.

Components used in the experiment.

| Platform | Article | Manufacturer | Product |
|---|---|---|---|
| Mobile robot | Mobile robot | PNU_IRL-LAB | PNU_IRL-LAB |
| Mobile robot | Motor drive | NTRexLAB | NT-DC20A |
| Mobile robot | DC motor | D&J | IG-32PGM |
| Mobile robot | MCU | TI | LM3s8962 |
| Mobile robot | Amplifier kit | Velleman | K2372 |
| Mobile robot | Bluetooth module | Sena_Tech | PARANI-ESD200 |
| Mobile robot | Battery | Anyrc | 11.1V-LiPo-Battery |
| Moving object | Moving object | NTRexLAB | NT-Commander-1 |
| Moving object | MCU | TI | LM3s8962 |
| Moving object | DC motor | D&J | RB-35GM |
| Moving object | Battery | Anyrc | 11.1V-LiPo-Battery |
| Moving object | Speaker | INKEL | INKEL 6K28G |
| Moving object | Robot control board | NTRexLAB | NT-ControllerBv1 |
| Moving object | Bluetooth module | Firmtech | FB100AS |