1. Introduction

In the last three decades, studies on autonomous driving have made remarkable progress due to the efforts of many researchers [1,2]. In the field, driver convenience is enhanced by providing adaptive cruise control to maintain a constant vehicle speed and a highway driving assist system to prevent lane departure on highways [3]. However, research studies on urban environments are still scarce compared to highways, which are relatively simple environments mostly containing vehicles and roads. Unlike highways, various dangerous situations happen in urban environments. Obstacles such as construction sites often block roads or cover lanes, as shown in Figure 1a, and sometimes pedestrians appear suddenly on the road (Figure 1b). In addition, vehicles close to the opposite lane can be critically dangerous to other vehicles in the adjacent lane. Thus, autonomous driving algorithms must guarantee safety by making flexible decisions [4,5].

One of the difficult tasks in autonomous driving is lane recognition in extreme situations, such as crowded roads, shadows, and dazzling light [6]. Until recently, most conventional algorithms required a mathematical description of low-level features, such as color patterns and straight lines [7,8]. Adopting deep learning has made it possible to extract lanes without feature description. Among the vision deep learning methods, recurrent neural networks (RNNs) pass information along each row or column so that each pixel can only receive information from the same row or column [9,10]. Zhang et al. proposed a design that learns the geometrical conditions that make up a road, including lane boundaries and road areas. This design performs lane boundary segmentation and road area segmentation at the same time [11]. On the other hand, spatial convolutional neural networks (SCNNs) implement sequential message passing to utilize structural information [6]. Although parts of traffic lanes in urban environments can be obscured by obstacles (e.g., other vehicles, traffic cones, and shadows), this method predicts the obscured lane locations by extending the parts of the traffic lanes that are not obscured. Moreover, the processing time of the SCNN can be improved by making the SCNN network model sparser.

To determine the motion of autonomous vehicles, information about road conditions is integrated. Search-based planning, sampling-based planning, and optimization are the ways to determine a path by integrating information from sensors [12,13,14]. These path planning algorithms divide the space into compartments and traverse them, checking the state identified by the sensor information. This state space is usually represented as an occupancy grid or lattice [15]. A graph search algorithm then gives the solution based on the evaluation criteria. The A* algorithm, one of the search-based algorithms, decides on the path by using the Voronoi cost function to define the path length and proximity to obstacles [16,17]. This algorithm works well for searching paths in a known space. However, it has poor memory efficiency and performance in large areas [12].

Recently, technologies connecting autonomous vehicles with the surrounding facilities have been developed. In particular, vehicle-to-everything (V2X) communication is an essential technique in intelligent transport systems (ITS) for providing real-time information (e.g., traffic conditions and accidents) [18,19,20,21]. For example, by utilizing the compensated route waypoints and V2X technologies, critical performance (relating to road safety, driving efficiency, situational awareness, etc.) is enhanced in highly demanding situations, such as at intersections and during lane changes.

In this paper, a flexible path planning algorithm is proposed for urban environments, using a novel sensor integration method, namely the sensor-weighted integration field (SWIF) method, which is based on the newly proposed vision deep learning technique (i.e., the sparse spatial convolutional neural network (SSCNN) technique). By processing all sensor data, including vision, LiDAR, and GPS data, the SWIF algorithm provides a 2D field measuring 300 pixels × 300 pixels (6 m × 6 m in distance units). The proposed algorithm assigns a different weight to each pixel, with higher weights representing safer areas. In particular, utilizing SSCNN not only enables highly accurate lane recognition, but also allows obscured or erased traffic lanes to be detected based on the recognized lane parts. In addition, traffic lanes are recognized in both normal and abnormal maneuvering scenarios. Moreover, both SWIF and SSCNN are designed to run in real time by making the network structures and functions sparse. Based on the 2D weighted field produced by SWIF and SSCNN, a cost field is implemented to derive the minimal steering angle needed to avoid obstacles without route or lane departure. Thus, this algorithm enables safe and flexible motion planning in real time, and was verified through experiments in urban scenarios. Consequently, the proposed algorithm enables safe driving and allows for sudden changes.

The paper is organized as follows. In Section 2, the specifications of the sensors used in the experiments are introduced. In Section 3.1, after reviewing the existing SCNN model, the SSCNN network model for fast lane recognition is explained. In Section 3.2, the SWIF algorithm is introduced. In Section 3.3, the motion planning and control method used to generate steering angles and vehicle speeds is described. In Section 4, experimental results are demonstrated. In Section 5, conclusions are described.

3. Proposed Methodology

Three types of sensor data and map information were integrated for the recognition of diverse road environments. The sparse spatial convolutional neural network (SSCNN), a vision deep learning method, was proposed for lane recognition. It is capable of detecting the adjacent four traffic lines in any driving direction. The route from origin to destination in absolute coordinates is obtained from the map and transformed into relative route data using GPS. In addition, by utilizing detected object data from LiDAR, the dangerous and safe areas are defined. The above pre-processed data are integrated into the SWIF and are then used for motion planning to determine the steering angle and vehicle speed. The proposed algorithm flow is shown in Figure 3.

3.1. Vision Deep Learning: Sparse Spatial CNN

On urban roads, there are various types of lane classes, such as straight roads, curved roads, crossroads, and diverse road markings, and the lane markings can be obscured or erased. Moreover, while avoiding or overtaking objects, vehicles may have to maneuver to various locations across diverse roads. Therefore, this section describes the novel dataset, which considers lane extension and maneuvers in many directions, along with SSCNN, which is a lighter and faster deep learning network model than the previous ones. In addition, SSCNN was designed to obtain data for the adjacent four traffic lines from a road image.

3.1.1. Dataset

Existing datasets did not consider steering and movement on the road. In the Caltech lanes dataset (Aly, 2008), the Tusimple benchmark dataset (Tusimple, 2017) [22], and the CULane dataset (Pan, 2018) [6], most images look toward the vanishing point. Moreover, when a lane appeared inclined because of steering within the lane, the recognition rate was critically low. Thus, a dataset covering these cases was generated for deep learning.

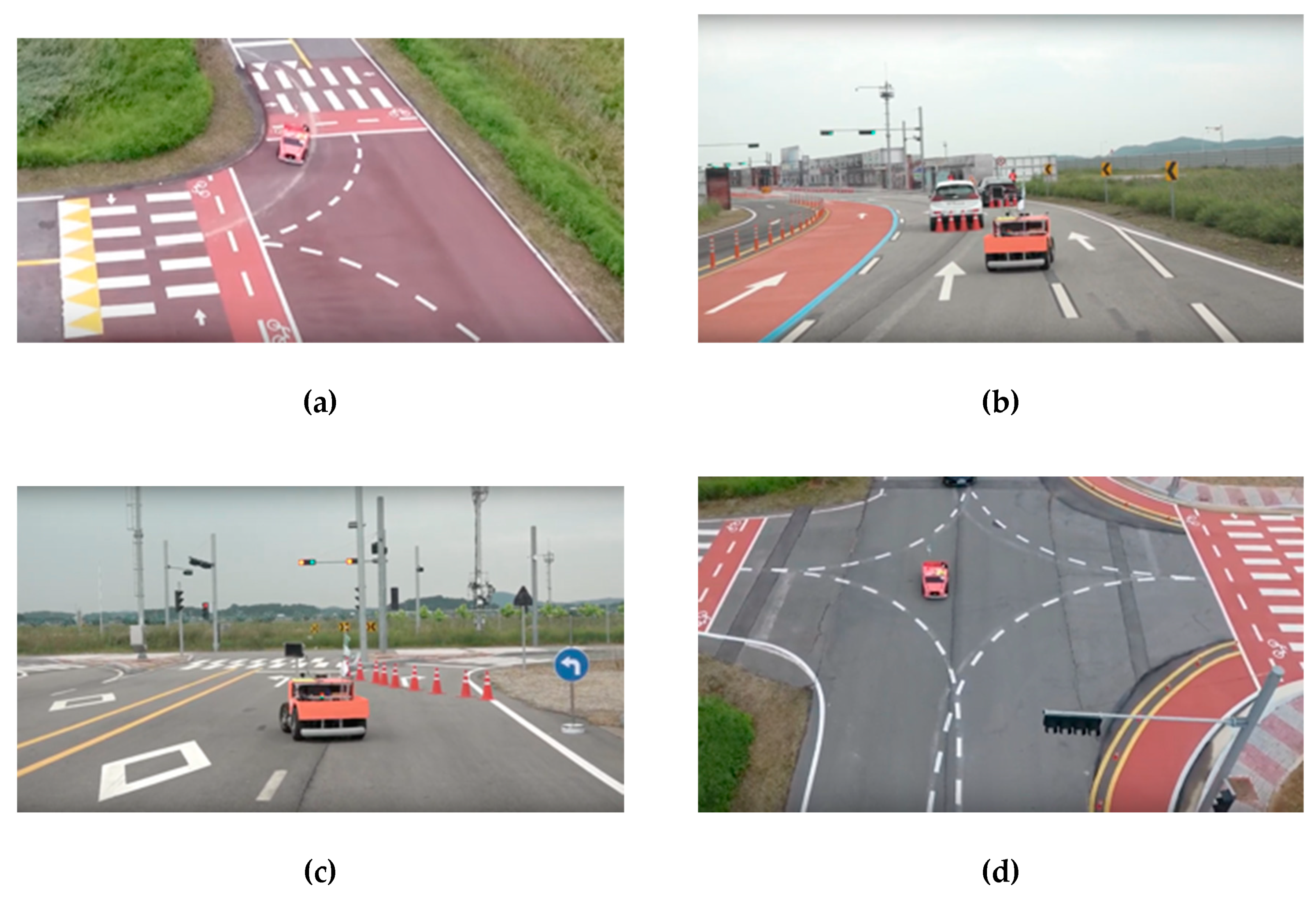

With the sensor viewing forward and horizontally on the vehicle, the data were collected in the K-City facility, which is built for autonomous vehicle testing in Korea. In total, 7175 frames were extracted from the road images. The urban dataset was separated into 6011 frames as training sets and 264 frames as test sets. The training dataset was also divided into normal and abnormal maneuvering, while classifying the classes into straight roads, curved roads, crossroads, and road markings (e.g., arrows, diamonds, speed bumps, and crosswalks). In general, normal maneuvering means that a vehicle drives properly along the center of the lane, and abnormal maneuvering means that a vehicle staggers in any direction, regardless of lane direction. The images for each classification are shown in Figure 4, and the ratio of each class is shown in Figure 5. In particular, 27.78% of the dataset consisted of abnormal maneuvering, and 72.22% of the dataset consisted of normal maneuvering. Moreover, a total of four adjacent traffic lines were labeled, and road markings were not labeled. Labeling was performed by placing points on the image coordinates and creating a spline through the points.

3.1.2. Proposed Network Model

Among the most commonly used deep learning methods, the neural network structure of the combined Markov random field–conditional random field (MRF–CRF) method is the basic structure for deep learning. In this structure, information is conveyed between every neuron, as shown in Figure 6a. The structure of the SCNN was designed to transfer information only between neighboring neurons from the next slice, to reduce unnecessary processes and also to reduce execution time. The structure of the SCNN network model is expressed as a 3D tensor with dimensions C × H × W, where C is the number of channels, and H and W are the number of rows and columns, respectively. When the information is conveyed downward or upward in the existing SCNN, the information is transferred immediately to the next row. Moreover, when the information is conveyed rightward or leftward, the information is transferred immediately to the next column (Pan, 2018) [6]. For example, the process of information transfer to the right is simply expressed in Figure 6b. In other words, through SCNN, information is only transferred between neighboring slices.

In order to apply the proposed algorithm to autonomous vehicles in urban environments, it is important to maintain a high lane recognition rate in all driving environments, including during normal and abnormal maneuvering. In addition, a computationally lighter network model is required for real-time operation with a high sampling rate. Thus, the neural network structure of the spatial convolutional neural network (SCNN), which showed the highest lane recognition performance, was modified. When the existing SCNN was used, its computation phase took the largest share of the execution time of the integrated system. Thus, the new network model was proposed to increase the computational efficiency of the existing SCNN. Based on the network structure of the SCNN, n rows or n columns are grouped to make the transfer process sparser, and then the information is transferred between the neighboring groups (not slices), as shown in Figure 6d. In other words, when transferring downward or upward, n rows are grouped, and information is conveyed through H/n slices. When transferring rightward or leftward, n columns are grouped, and information is conveyed through W/n slices. With this method, the number of transfers was reduced to 1/n of the original. In addition, the structural property of the MRF–CRF, which reprocesses the result to obtain a more accurate one, was introduced in each grouped slice, as shown in Figure 6c,e. For example, when information is transferred rightward through the SSCNN from the start to the end of the tensor, the processes shown in Figure 6c–e are performed sequentially. The network model formed in this way was newly defined as the sparse spatial convolutional neural network (SSCNN). In the application of the SSCNN to our proposed system, the value of n was set to 2. In Figure 6, 1 × 4 × 4 tensors are represented for simplicity instead of a large-size 3D tensor.
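The grouping idea can be illustrated with a minimal sketch of a top-to-bottom pass over a single-channel H × W map. This is not the authors' network: the function names, the 3-tap kernel, and the choice to summarize a group by averaging its rows are assumptions made only for illustration, and the MRF–CRF-style reprocessing inside each group is not modeled. With n = 1 the pass behaves like slice-by-slice SCNN message passing; with n = 2 the same map needs fewer transfers.

```python
def relu(x):
    return x if x > 0.0 else 0.0


def conv1d_same(row, kernel):
    """1D convolution along one row with zero padding (odd kernel length)."""
    half = len(kernel) // 2
    out = []
    for j in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = j + k - half
            if 0 <= idx < len(row):
                acc += w * row[idx]
        out.append(acc)
    return out


def pass_down(feature, kernel, n=1):
    """Top-to-bottom message passing over groups of n rows.

    n = 1 passes messages row by row (SCNN-like); n > 1 passes them
    between groups of n rows (SSCNN-like). Returns the updated map and
    the number of transfers performed.
    """
    rows = [list(r) for r in feature]
    width = len(rows[0])
    groups = [list(range(s, min(s + n, len(rows))))
              for s in range(0, len(rows), n)]
    transfers = 0
    for prev, cur in zip(groups, groups[1:]):
        # Assumption: a group is summarized by the mean of its rows.
        summary = [sum(rows[i][j] for i in prev) / len(prev)
                   for j in range(width)]
        message = [relu(v) for v in conv1d_same(summary, kernel)]
        for i in cur:
            rows[i] = [a + m for a, m in zip(rows[i], message)]
        transfers += 1
    return rows, transfers


feature = [[1.0, 0.0, 0.0, 0.0]] + [[0.0] * 4 for _ in range(3)]
kernel = [0.5, 1.0, 0.5]
dense, dense_transfers = pass_down(feature, kernel, n=1)
sparse, sparse_transfers = pass_down(feature, kernel, n=2)
```

For the 4 × 4 example used in Figure 6, the row-by-row pass needs three transfers and the grouped pass with n = 2 needs one, while information from the first row still reaches the last row in both cases.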

These models were evaluated for urban autonomous driving with the Tusimple benchmark dataset, CULane dataset, and the above dataset. In real-world applications, the predicted center points of each lane were extracted by applying the argmax function to the horizontal slice of the 2D tensor output from the neural network. Additionally, the Gaussian filter was used to smooth the output values. Parameters were manually tuned according to the output tensor size and noise level.
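The post-processing step described above (Gaussian smoothing followed by an argmax over each horizontal slice) can be sketched as follows. The kernel width, the border handling, and the `threshold` used to report a row as having no lane point are assumptions for illustration; the text only states that the parameters were tuned manually.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_row(row, kernel):
    """Smooth one horizontal slice; border values are clamped."""
    radius = len(kernel) // 2
    out = []
    for j in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = min(max(j + k - radius, 0), len(row) - 1)
            acc += w * row[idx]
        out.append(acc)
    return out


def lane_points(prob_map, sigma=1.0, radius=2, threshold=0.3):
    """Return (row, column) of the strongest response in every row.

    The column is None when the smoothed peak is below the threshold
    (hypothetical rule for rows where the line is not visible).
    """
    kernel = gaussian_kernel(sigma, radius)
    points = []
    for r, row in enumerate(prob_map):
        smoothed = smooth_row(row, kernel)
        c = max(range(len(smoothed)), key=smoothed.__getitem__)
        points.append((r, c) if smoothed[c] >= threshold else (r, None))
    return points


prob_map = [
    [0.0, 0.0, 0.5, 0.9, 0.5, 0.0, 0.0],
    [0.0] * 7,
]
points = lane_points(prob_map)
```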

3.2. Proposed Sensor Integration Algorithm: Sensor-Weighted Integration Field (SWIF)

When an autonomous vehicle is traveling, it is important to know not only where the lanes are, but also in which direction to go and which area is safe from dangerous factors. For this purpose, a simple and accurate sensor integration method, named SWIF, is proposed, which uses three sensors to recognize the environment, decides where the safer area is in which to maneuver, and then minimizes the overall risk during vehicle travel.

3.2.1. Lane Data

The SSCNN identifies the locations of the four adjacent traffic lines in the road image, which are named “left-left” (blue line in the image), “left” (green line in the image), “right” (red line in the image), and “right-right” (yellow line in the image), in order from left to right. Moreover, if any of the four lines is not recognized, it is specified as “none”. For example, as shown in Figure 7, only three traffic lines are detected on a two-lane road because there is no “right-right” line. Considering the hardware position of the vision sensor (i.e., viewing angle and height), the detected lines are converted into curves as seen from the top by using the warping function in OpenCV. Moreover, the curves are expressed in a field measuring 300 pixels × 300 pixels by cutting the elements outside the 6 m wide by 6 m long area. This field is defined as a lane field. From the lane field, a weighted lane field is formed by applying the weighting factors according to the process shown in Table 2.

Figure 8a–c are schematic diagrams that describe how much weight is applied to each column for each line. Based on the location of the red dot (representing the column position of the left traffic line in an arbitrary row), weights are given on the right side of the red dot, as shown by the white area in Figure 8a. Conversely, for the right traffic line, weights are applied on the left side, as shown in Figure 8b. The white dots in Figure 8a,b represent the weights, and the more white dots there are in a column, the more weight is added. Because the width between traffic lines is about 3 m, higher weights are applied to the point 1.5 m away from the left line, and lower weights are applied thereafter. The same method is applied to the right line. Moreover, if the weight for the left line and the one for the right line are applied to the same column, the weight value is decided by superposition. Due to this weighting method, even if one line is hidden and not recognized, the highest weights are given to the center of the lane. In addition, even when the lane width is not constant, the highest weights can be given to the center of the lane, as shown in Figure 8c. The weighted lane field is formed by applying the above process to every row. The weighted lane field shown in Figure 8d is the result of applying the above process to the lane field shown in Figure 7.
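The per-row weighting can be sketched as below. The actual weighting factors are those of Table 2, which are not reproduced here, so the triangular profile, the peak weight of 100, and the function names are assumptions; only the 50 pixels per metre scale (300 pixels for 6 m), the 1.5 m peak offset, and the superposition of the left and right contributions come from the text.

```python
PIXELS_PER_METRE = 50   # 300 pixels span 6 m
FIELD_WIDTH = 300


def line_weights(col, side, peak_offset_m=1.5, peak_weight=100):
    """Weights contributed by one traffic line in a single row.

    side == "left": weights are placed to the right of the line.
    side == "right": weights are placed to the left of the line.
    Assumed profile: rises linearly to the peak at 1.5 m, then falls.
    """
    peak = int(peak_offset_m * PIXELS_PER_METRE)
    direction = 1 if side == "left" else -1
    row = [0] * FIELD_WIDTH
    for offset in range(1, 2 * peak):
        c = col + direction * offset
        if 0 <= c < FIELD_WIDTH:
            row[c] = peak_weight * (peak - abs(offset - peak)) // peak
    return row


def weighted_lane_row(left_col=None, right_col=None):
    """Superpose the contributions of the detected lines (None = not detected)."""
    row = [0] * FIELD_WIDTH
    for col, side in ((left_col, "left"), (right_col, "right")):
        if col is not None:
            row = [a + b for a, b in zip(row, line_weights(col, side))]
    return row


both = weighted_lane_row(left_col=75, right_col=225)
left_only = weighted_lane_row(left_col=75)
```

With lines 3 m apart (columns 75 and 225), the highest weight falls on the lane center at column 150, and the same column remains the maximum when only the left line is detected.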

3.2.2. LiDAR Data

From the LiDAR, 571 distance data points covering −45° to 225° relative to the LiDAR position were obtained. After transforming these data to point data in Cartesian coordinates, the points were clustered so that each cluster was classified as a single object. In this process, neighboring points less than 10 cm apart were assigned to the same cluster. Based on the clustering process, the circle with the minimum size that fit the clustered points was determined as the object. Thus, the object data included the location and size of objects. An average filtering method was also applied to remove spiking noise.
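A minimal sketch of this pre-processing is given below. The scan layout (evenly spaced beams from −45° to 225°) and the 10 cm gap follow the text; the function names are hypothetical, and the circle fit uses the centroid and the farthest point as a simple stand-in for the minimum-size circle, so it can slightly overestimate the radius.

```python
import math


def polar_to_points(ranges_m, start_deg=-45.0, end_deg=225.0):
    """Convert evenly spaced range readings to (x, y) points; None = no return."""
    step = (end_deg - start_deg) / (len(ranges_m) - 1)
    points = []
    for i, r in enumerate(ranges_m):
        if r is None:
            continue
        a = math.radians(start_deg + i * step)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points


def cluster(points, max_gap_m=0.10):
    """Group consecutive scan points whose spacing is below max_gap_m."""
    clusters = []
    for p in points:
        if clusters and math.dist(clusters[-1][-1], p) < max_gap_m:
            clusters[-1].append(p)
        else:
            clusters.append([p])
    return clusters


def bounding_circle(points):
    """Approximate enclosing circle: centroid plus farthest-point radius."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    radius = max(math.dist((cx, cy), p) for p in points)
    return cx, cy, radius


scan_points = [(1.0, 0.0), (1.05, 0.0), (1.1, 0.0), (2.0, 0.0)]
objects = [bounding_circle(c) for c in cluster(scan_points)]
```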

To prevent obstacle collisions in advance, the field formed by LiDAR was divided into dangerous and safe areas. The fitted circles, which represent objects, were expressed by radius R and the center locations (cenX, cenY). As shown in Figure 9a, these circles represent the detected objects, and can also be described with the following parameters: tD is the distance between the LiDAR position (Lx, Ly) and the tangent points of the object; cenDist is the distance from (Lx, Ly) to the center of the object; ∠l and ∠s represent the angle to the center of the object and the half angle between the two tangents, respectively. Moreover, the area behind the obstacles, which was not detected by LiDAR, was defined as the unknown area and represented as a four-point polygon. Equation (1) expresses each point {(x1, y1), (x2, y2), (x3, y3), (x4, y4)} forming the polygon.
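The geometry behind these parameters can be sketched with standard tangent-line relations: tD = sqrt(cenDist² − R²), ∠s = asin(R / cenDist), and ∠l = atan2(cenY − Ly, cenX − Lx). The sketch below builds a four-point polygon from the two tangent points and two points farther along the same rays. This is one plausible construction under these assumptions and is not claimed to reproduce Equation (1); the `reach` parameter (how far the unknown area extends) and the function name are hypothetical.

```python
import math


def unknown_area_polygon(lidar, centre, radius, reach):
    """Four corner points of the occluded region behind a circular object.

    lidar:  (Lx, Ly) sensor position
    centre: (cenX, cenY) circle centre
    radius: R of the fitted circle
    reach:  assumed distance from the LiDAR at which the polygon is closed
    """
    lx, ly = lidar
    cen_dist = math.hypot(centre[0] - lx, centre[1] - ly)
    if cen_dist <= radius:
        raise ValueError("LiDAR position lies inside the object circle")
    t_d = math.sqrt(cen_dist ** 2 - radius ** 2)          # tangent length
    angle_l = math.atan2(centre[1] - ly, centre[0] - lx)  # angle to centre
    angle_s = math.asin(radius / cen_dist)                # half angle

    def on_ray(angle, dist):
        return (lx + dist * math.cos(angle), ly + dist * math.sin(angle))

    left, right = angle_l + angle_s, angle_l - angle_s
    return [on_ray(left, t_d), on_ray(right, t_d),
            on_ray(right, reach), on_ray(left, reach)]


polygon = unknown_area_polygon((0.0, 0.0), (0.0, 2.0), 1.0, 6.0)
```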

As shown in Figure 9b, the LiDAR field was divided into an object area (red), an unknown area (yellow), and a safe area (black). The red area represents obstacles and the yellow area is undetected; together they are defined as the dangerous area. Before moving on to the next step, the object field formed as above is changed to a binary weighted field, as shown in Figure 10a, which shows the dangerous area in black and the remaining area in gray.

However, rather than simply dividing the field into two areas, it is necessary to define which points are safer than other points in the safe area. Equation (2) expands the dangerous area by a constant width using the M2 window shown in Figure 10b. M1 is a matrix field measuring 300 pixels × 300 pixels (6 m × 6 m), which is the sample field for LiDAR, as shown in Figure 10a. M2 is a window measuring 10 pixels × 10 pixels (0.2 m × 0.2 m), as shown in Figure 10b. As a result of Equation (2), the weighted object field is formed, as shown in Figure 10c.
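One way to expand a dangerous area by a constant width with a square window is a morphological dilation, sketched below. Whether Equation (2) is exactly a dilation is an assumption; the function name and the binary encoding (1 = dangerous, 0 = safe) are also illustrative. Note that with an even window size such as 10, this sketch expands the area by window // 2 pixels on every side.

```python
def dilate_danger(field, window=10):
    """Expand every dangerous cell (value 1) by window // 2 cells in all directions."""
    height, width = len(field), len(field[0])
    half = window // 2
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if field[r][c]:
                for rr in range(max(0, r - half), min(height, r + half + 1)):
                    for cc in range(max(0, c - half), min(width, c + half + 1)):
                        out[rr][cc] = 1
    return out


small = [[0] * 7 for _ in range(7)]
small[3][3] = 1                      # a single dangerous cell in the middle
expanded = dilate_danger(small, window=2)
```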

3.2.3. GPS Data

When traveling to a destination, the vehicle should keep to the route obtained from the map. The vehicle requires the relative route in order to travel the right way, but the route data of the map are expressed in the absolute coordinates X_A O Y_A. For this reason, Equations (3) and (4) are utilized. With the values from the GPS data, namely My position O′ (the absolute location of the vehicle) and the heading angle θ, Equation (3) changes the coordinates from X_A O Y_A to the relative coordinates X_R O′ Y_R. Finally, in Equation (4), to synchronize with the lane and object fields, the waypoints are transformed into the coordinates X′_R O″ Y′_R.
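The two transformations can be sketched as a translation plus rotation into the vehicle frame, followed by a scale and shift into field pixels. The conventions below are assumptions, since Equations (3) and (4) are not reproduced here: the heading is measured counterclockwise from the absolute X axis, the relative Y axis points ahead of the vehicle, and the vehicle sits at the bottom center of the 300 × 300 field. Only the 50 pixels per metre scale follows from the stated field size.

```python
import math

PIXELS_PER_METRE = 50  # 300 pixels span 6 m


def absolute_to_relative(waypoint, vehicle_pos, heading_rad):
    """Express an absolute waypoint in the vehicle frame (x right, y ahead)."""
    dx = waypoint[0] - vehicle_pos[0]
    dy = waypoint[1] - vehicle_pos[1]
    x_rel = dx * math.sin(heading_rad) - dy * math.cos(heading_rad)
    y_rel = dx * math.cos(heading_rad) + dy * math.sin(heading_rad)
    return x_rel, y_rel


def relative_to_pixel(x_rel, y_rel, origin_px=(299, 150)):
    """Map vehicle-frame metres to (row, col); origin_px is the assumed vehicle pixel."""
    row = origin_px[0] - round(y_rel * PIXELS_PER_METRE)
    col = origin_px[1] + round(x_rel * PIXELS_PER_METRE)
    return row, col


vehicle = (10.0, 20.0)
heading = math.radians(90.0)          # vehicle faces the absolute +Y direction
ahead = relative_to_pixel(*absolute_to_relative((10.0, 22.0), vehicle, heading))
right = relative_to_pixel(*absolute_to_relative((11.0, 20.0), vehicle, heading))
```

In this example a waypoint 2 m ahead lands 100 pixels above the vehicle pixel, and a waypoint 1 m to the right lands 50 pixels to its right.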

In forming the weighted route field shown in Figure 11b, the waypoints and their surroundings are weighted. Based on the location of each waypoint, the surrounding area of the point is divided into circles of four different radii. The smaller the circle, the higher the weight that is given, and the area outside the largest circle is not weighted. Additionally, for regions that overlap with those of other waypoints, the higher of the two weight values is applied. Thus, the closer a region is to the waypoints, the higher its weight. This ensures that, when an object is present on the path, the vehicle can move off the path to avoid it without leaving the route completely.
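The ring weighting can be sketched as follows. The four radii and their weights are placeholder values, since the text does not state them, and the function name is hypothetical; the two rules taken from the text are that smaller circles carry higher weights and that overlapping regions take the higher of the competing weights.

```python
def route_field(waypoints_px, size=300,
                rings=((5, 100), (10, 75), (15, 50), (20, 25))):
    """Weighted route field from waypoints given as (row, col) pixels.

    rings: (radius in pixels, weight) pairs, ordered from the smallest circle
    to the largest; all values here are illustrative assumptions.
    """
    field = [[0] * size for _ in range(size)]
    max_radius = rings[-1][0]
    for wr, wc in waypoints_px:
        for r in range(max(0, wr - max_radius), min(size, wr + max_radius + 1)):
            for c in range(max(0, wc - max_radius), min(size, wc + max_radius + 1)):
                d2 = (r - wr) ** 2 + (c - wc) ** 2
                for radius, weight in rings:
                    if d2 <= radius * radius:
                        # Overlaps keep the higher weight.
                        field[r][c] = max(field[r][c], weight)
                        break
    return field


field = route_field([(25, 25), (25, 40)], size=50)
```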

3.2.4. SWIF Algorithm

By summing the three types of weighted fields, the SWIF is formed. For the situations shown in Figure 12a,b, the resulting fields are shown in Figure 12c,d, respectively. In SWIF, each coordinate has a weight ranging from 0 to 255, indicating the degree of safety.
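As a minimal sketch of this summation, the three fields can be added element by element. Clipping the result to the stated 0 to 255 range is an assumption; the text does not say how the range is enforced, and the function name is hypothetical.

```python
def combine_fields(lane, objects, route, max_weight=255):
    """Element-wise sum of three equally sized 2D fields, clipped to [0, max_weight]."""
    return [
        [min(max(l + o + r, 0), max_weight)
         for l, o, r in zip(lane_row, object_row, route_row)]
        for lane_row, object_row, route_row in zip(lane, objects, route)
    ]


swif = combine_fields([[100, 0]], [[100, 0]], [[100, 10]])
```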

3.3. Proposed Motion Planning and Maneuvering Control

The SWIF algorithm thus indicates how safe each point in the formed field is. To judge which area (rather than which point) in the field is safer for maneuvering, the desired steering angle and speed of the vehicle are obtained using the cost field for motion planning. These two variables are then used as the reference input values for the closed-loop vehicle maneuvering controller, which is implemented with PID plus an integral anti-windup scheme to follow the safe area obtained by the SWIF algorithm.

3.3.1. Vehicle Speed and Steering Angle Decisions

The cost field, as shown in Figure 13a, has four parameters: d, width, θ1, and θ2. Here, d is the half height of the cost field as a scanning range, and width is the width of the vehicle. These two parameters are constant. On the other hand, θ1 and θ2 are the variables that vary in the cost field. Here, θ1 is the candidate for the desired steering angle and θ2 is the potential angle for checking whether the area following θ1 is safer or not. Thus, each cost field contains different θ1 and θ2 values.

The cost field is expressed as M3, and the sample of SWIF is shown as M4 in Equation (5) and Figure 13. M3 and M4 have the same size, 300 pixels × 300 pixels (6 m × 6 m). As shown in Equation (5), the Hadamard product of M3 and M4 is taken, and the theta weighting field score is then derived as a scalar value by summing all of the elements of the resulting matrix. By varying the thetas, different scores are derived.

Finally, as shown in Figure 13c, the θ1 and θ2 are determined as the values of the cost field from which the maximum score is derived. Thus, θ1 becomes the desired steering setting.
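The scoring and selection steps can be sketched as below. The score follows the description of Equation (5): an element-wise (Hadamard) product of the cost field and the SWIF sample, summed to a scalar. The shape of the cost field is an assumption made for illustration: a binary corridor of the vehicle width made of two straight segments of length d, the first along θ1 and the second along θ2, with positive angles steering to the right. The candidate angle set and all function names are hypothetical.

```python
import math


def cost_field(theta1_deg, theta2_deg, size, d_px, width_px, origin):
    """Binary mask M3 for one (theta1, theta2) pair (assumed corridor shape)."""
    mask = [[0] * size for _ in range(size)]
    half = width_px / 2.0
    r, c = float(origin[0]), float(origin[1])
    centre_line = []
    for theta in (theta1_deg, theta2_deg):
        a = math.radians(theta)
        for _ in range(d_px):
            r -= math.cos(a)   # moving up the image means moving forward
            c += math.sin(a)   # positive angle moves to the right
            centre_line.append((r, c))
    for pr, pc in centre_line:
        for rr in range(int(pr - half), int(pr + half) + 1):
            for cc in range(int(pc - half), int(pc + half) + 1):
                inside = 0 <= rr < size and 0 <= cc < size
                if inside and (rr - pr) ** 2 + (cc - pc) ** 2 <= half * half:
                    mask[rr][cc] = 1
    return mask


def score(mask, swif):
    """Sum of the element-wise product of M3 (mask) and M4 (swif)."""
    return sum(m * s for m_row, s_row in zip(mask, swif)
               for m, s in zip(m_row, s_row))


def best_angles(swif, candidates, d_px, width_px, origin):
    """Return (score, theta1, theta2) with the maximum score."""
    size = len(swif)
    best = None
    for t1 in candidates:
        for t2 in candidates:
            s = score(cost_field(t1, t2, size, d_px, width_px, origin), swif)
            if best is None or s > best[0]:
                best = (s, t1, t2)
    return best


# Toy SWIF: only columns 24..34 are safe (weight 10).
swif = [[10 if 24 <= c <= 34 else 0 for c in range(40)] for _ in range(40)]
result = best_angles(swif, (-30, 0, 30), d_px=15, width_px=6, origin=(39, 20))
```

In the toy field the safe band lies to the right of the vehicle, so the selected θ1 is the right-hand candidate, while a corridor steering left collects no weight at all.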

3.3.2. Maneuvering Control Algorithm

Using the SWIF algorithm with SSCNN, the desired steering angle and speed values are derived at a sampling rate of 20 Hz. Because these values are derived from instantaneous sensor recognition, the vehicle may maneuver with a sudden, large change in steering angle or speed. To prevent this situation in advance and to allow flexible driving, the outputs of the PID (i.e., steering angle and vehicle speed) were moderated by the integral anti-windup scheme, as shown in Figure 14.

As shown in Figure 14a, the reference input variables v and θ are the desired speed and steering angle values, respectively, obtained from the algorithm described above, and the variables v_out and θ_out are the output values calculated by the proposed algorithm. In order to track the desired values, the PID controller for vehicle speed was designed to provide a fast response to changes in the desired speed by reducing the settling time. In contrast, the PID control parameters for the steering angle were finely tuned, not only to provide a relatively slow response, but also to avoid a rocking motion when driving. Moreover, the integral anti-windup scheme in the PID control loop, as shown in Figure 14b, was utilized to prevent dangerous situations, such as sudden stopping or sudden acceleration.
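A discrete PID controller with integral anti-windup can be sketched as follows. The text does not specify which anti-windup scheme or which gains were used, so the conditional-integration (clamping) scheme, the gains, and the output limits below are assumptions; only the 20 Hz update rate (dt = 0.05 s) is taken from the text.

```python
class PIDAntiWindup:
    """PID controller with output saturation and conditional integration."""

    def __init__(self, kp, ki, kd, out_min, out_max, dt=0.05):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.dt = dt                  # 0.05 s corresponds to 20 Hz
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, reference, measurement):
        error = reference - measurement
        derivative = (error - self.prev_error) / self.dt
        candidate = self.integral + error * self.dt
        raw = self.kp * error + self.ki * candidate + self.kd * derivative
        output = min(max(raw, self.out_min), self.out_max)
        # Anti-windup: keep integrating only if the output is not saturated,
        # or if the error would drive the output back into range.
        recovering = (raw > self.out_max and error < 0) or \
                     (raw < self.out_min and error > 0)
        if raw == output or recovering:
            self.integral = candidate
        self.prev_error = error
        return output


pid = PIDAntiWindup(kp=1.0, ki=1.0, kd=0.0, out_min=-1.0, out_max=1.0)
saturated = [pid.update(10.0, 0.0) for _ in range(100)]
after_release = pid.update(0.0, 0.0)
```

In this example the output stays at its upper limit while the large error persists, the integral term does not accumulate during saturation, and the output returns to zero as soon as the error disappears instead of overshooting.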

5. Discussion and Remarks

By changing the existing network structure of SCNN to be sparser, the accuracy of SSCNN becomes lower than that of the original SCNN by 5.4% on average. Nevertheless, the average recognition rate is 66.2% in various urban environments. Additionally, when SSCNN is applied to SWIF, the result is complemented by an averaging filter that skips a derived value when it deviates greatly from the previous results. Moreover, since SSCNN produces values 2.7 times faster than the original SCNN, it is more suitable for real-time systems, which compensates for the slightly lower accuracy.

Both the SWIF and control algorithms were tested in two urban scenarios: (a) a person suddenly appearing on the road and (b) a construction site covering part of the road. As a result, even when large objects blocked part of the road or obstacles appeared at close range, the vehicle slowed down and the steering angle changed gradually, which helped avoid a secondary dangerous situation due to oversteering. Moreover, considering the presence of objects outside the lane, the vehicle was also able to maneuver without lane or route deviation by keeping a safe distance (i.e., about 50 cm) from the objects.

6. Conclusions

This paper proposed two novel algorithms: the first is a vision deep learning method named the sparse spatial convolutional neural network (SSCNN), and the second is a sensor integration method called the sensor-weighted integration field (SWIF). With the proposed SSCNN, the test vehicle was able to recognize the adjacent traffic lanes in any direction on urban roads, since lane data were learned by considering both normal and abnormal driving directions at the same time. Moreover, it also worked well because of the newly proposed network model, which is sparser than the existing one. When trained with the same Tusimple and CULane datasets, the accuracy of SSCNN was lower, but the computational speed increased by 2.7 times, which significantly contributed to real-time system performance for the autonomous vehicle. For this reason, SSCNN appears suitable for adoption. In future work, the slight reduction in accuracy will be compensated in subsequent steps, such as by integrating sensors or applying filters.

Based on the lanes detected by the SSCNN, the SWIF algorithm was also proposed, which forms a weighted field utilizing both the obstacle data from LiDAR and the waypoints from GPS data. This system efficiently indicates which areas are safe from dangerous factors, with a resolution of 2 cm per pixel and a processing speed of 18–26 frames per second. Additionally, SWIF simplifies data integration and can be expanded easily without requiring complicated mathematical calculations. Using the motion planning method in SWIF, this algorithm does not always judge the center area between feature points (e.g., the lanes, routes, and obstacles) as a safe path. The safe path is decided in two steps (θ1, θ2), so that the vehicle can detect the safest direction and area and keep a safe distance from dangerous factors on diverse urban roads with a minimum change of steering angle. Consequently, as the SWIF algorithm is based on SSCNN, to prevent a dangerous situation in advance, the vehicle can recognize situations both inside and outside the lane and then divert the travel direction from the center path for safer urban driving, rather than just following the center line between lanes and obstacles. Thus, the vehicle is able to travel in real time with flexibility, without the route or lane departure that sudden steering would cause in the presence of diverse disturbances on urban roads. Moreover, in the future, efficient paths calculated from diverse information obtained from V2X communication will also be integrated with the proposed SWIF algorithm for better autonomous driving performance in urban environments.