Computer Vision Intelligent Approaches to Extract Human Pose and Its Activity from Image Sequences

Abstract

1. Introduction

2. Theoretical Framework

2.1. Classical Computational Intelligence Based Models

2.1.1. Support Vector Machines

2.1.2. Fuzzy Models

2.1.3. Neural Networks

2.2. Deep Learning Based Model-LSTM

- $x_t \in \mathbb{R}^{n}$: input vector to the LSTM unit

- $f_t \in (0,1)^{h}$: forget gate's activation vector

- $i_t \in (0,1)^{h}$: input/update gate's activation vector

- $o_t \in (0,1)^{h}$: output gate's activation vector

- $h_t \in (-1,1)^{h}$: hidden state vector, also known as the output vector of the LSTM unit

- $c_t \in \mathbb{R}^{h}$: cell state vector

- $W \in \mathbb{R}^{h \times n}$, $U \in \mathbb{R}^{h \times h}$ and $b \in \mathbb{R}^{h}$: weight matrices and bias vector parameters that are learned during training, where the superscripts $n$ and $h$ refer to the number of input features and the number of cells/neurons, respectively.
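For reference, the widely used LSTM cell with a forget gate relates these quantities as shown below. Here $\sigma$ is the logistic sigmoid, $\odot$ the element-wise product, $\tilde{c}_t$ the candidate cell state, and each gate $g \in \{f, i, o, c\}$ has its own $W_g$, $U_g$ and $b_g$. We assume, without confirmation from this section, that the implemented model follows this standard formulation:

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f)\\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i)\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o)\\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The pure-Python sketch below computes one such time step and runs it over a sequence. The function names (`lstm_step`, `init_params`, `run_sequence`), the random initialisation and the sizes used are illustrative choices. This is not the authors' code.

```python
import math
import random


def sigmoid(z):
    """Logistic sigmoid, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Return W @ x + U @ h + b for list-of-lists matrices."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One forward step of a standard LSTM cell with a forget gate.

    params maps each gate name 'f', 'i', 'o', 'c' to a (W, U, b) triple,
    with W of shape h x n, U of shape h x h and b of length h.
    """
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]  # forget gate
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]  # input/update gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]  # output gate
    c_tilde = [math.tanh(z) for z in affine(*params["c"], x, h_prev)]  # candidate state
    # New cell state: keep part of the old state, add part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    # New hidden state (the unit's output).
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def init_params(n_inputs, n_hidden, seed=0):
    """Small random weights and zero biases for the four gates (illustrative)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        g: (mat(n_hidden, n_inputs), mat(n_hidden, n_hidden), [0.0] * n_hidden)
        for g in "fioc"
    }


def run_sequence(xs, params, n_hidden):
    """Feed a sequence of input vectors through the cell, starting from zero state."""
    h, c = [0.0] * n_hidden, [0.0] * n_hidden
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h, c


# Usage: 5 time steps of a 4-feature input through a 3-cell LSTM.
params = init_params(n_inputs=4, n_hidden=3)
h_T, c_T = run_sequence([[0.1, 0.2, 0.3, 0.4]] * 5, params, n_hidden=3)
```

In practice, a framework such as Keras stacks these cells and learns $W$, $U$ and $b$ by backpropagation through time. The sketch shows only the forward pass.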

2.3. Advantages and Disadvantages of the Computational Intelligence Models

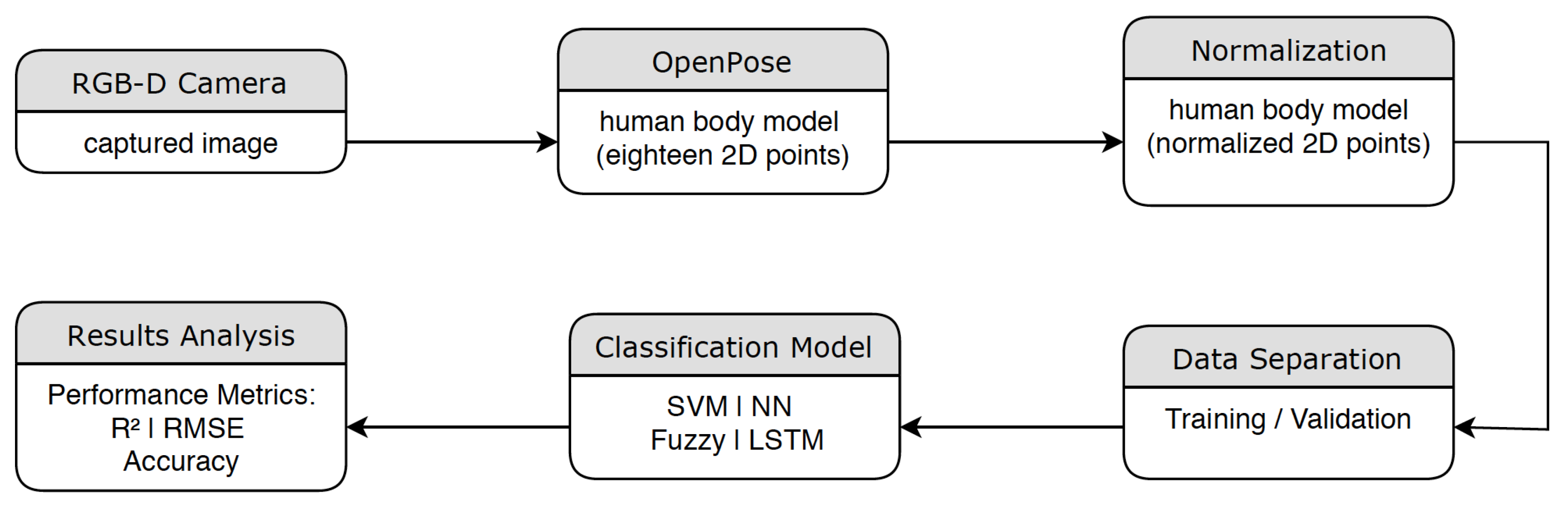

3. Developed Applications

3.1. Human Pose Detection and Recognition in Homes

3.2. Human Activity Detection and Recognition in Homes

4. Results and Discussion

4.1. Data Acquisition and Preparation

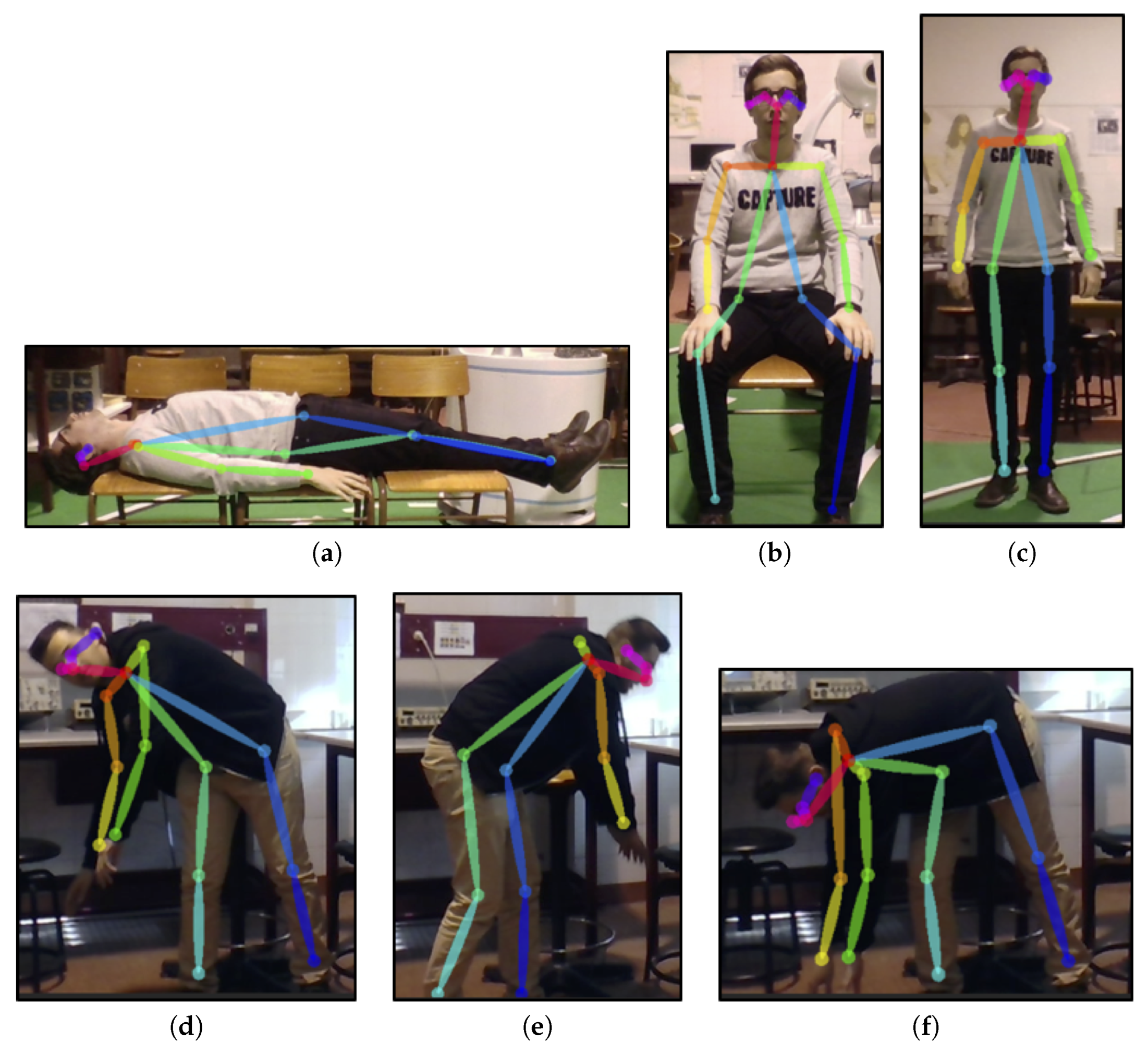

4.1.1. Pose Detection

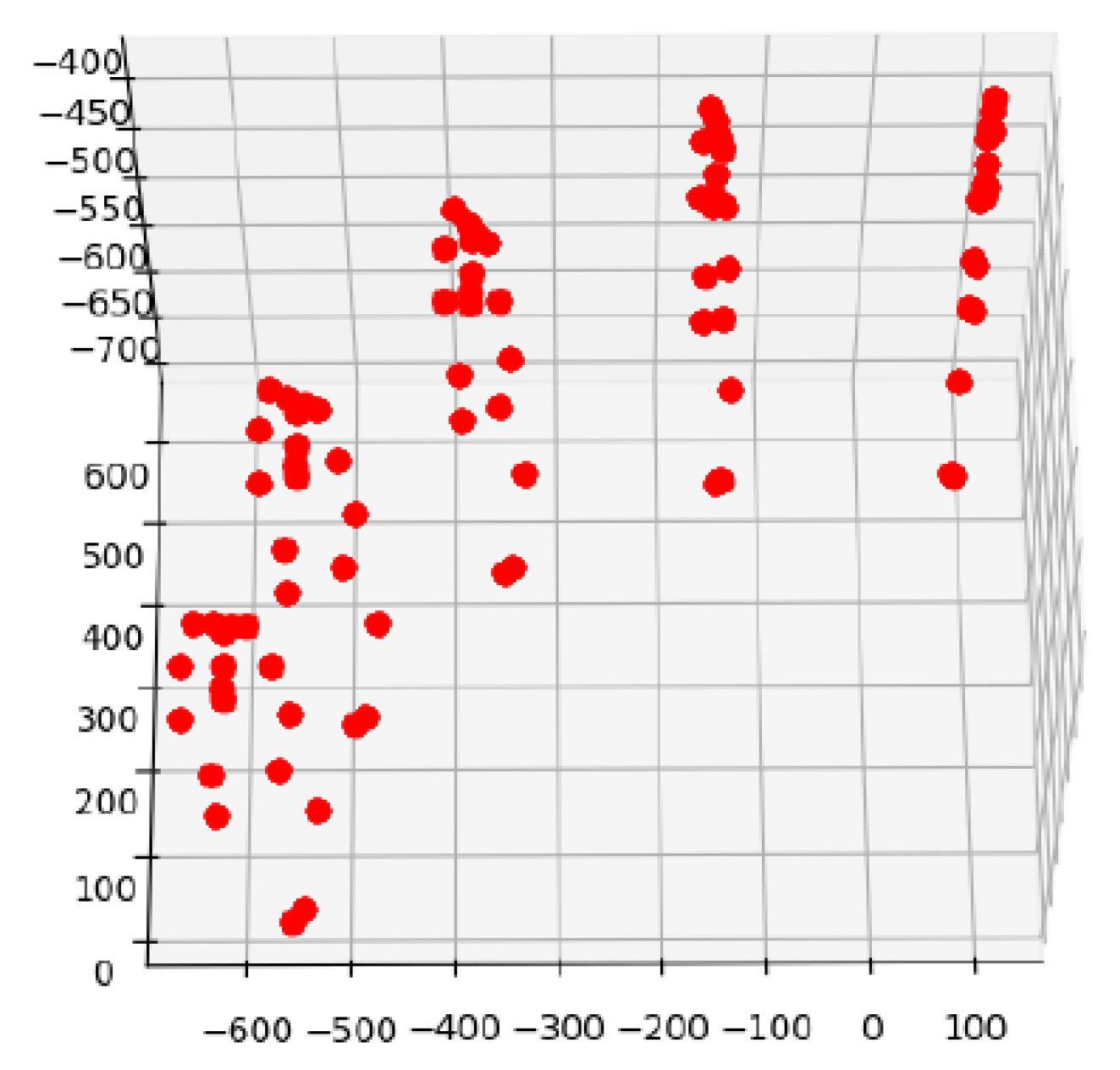

4.1.2. Activity Detection

4.2. Performance Indexes for Results Analysis

4.3. Human Pose Detection and Recognition

- the Sugeno fuzzy inference model with 100 clusters, obtained by subtractive clustering;

- the support vector machine model using the linear epsilon-insensitive cost, with the Gaussian kernel bandwidth set to 0.1 and the upper bound on the Lagrange multipliers set to 20;

- the probabilistic neural network (NN-pnn), with 140 neurons in the radial basis layer.
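A probabilistic neural network classifies a sample by placing one Gaussian radial-basis neuron on each training pattern and then comparing the average activation per class. This is Specht's Parzen-window formulation. Below is a minimal pure-Python sketch of that idea. The function name `pnn_predict`, the spread `sigma` and the toy 2-D features are assumptions made for illustration. The sketch does not reproduce the 140-neuron configuration or the toolbox implementation the authors used.

```python
import math
from collections import defaultdict


def pnn_predict(train_X, train_y, x, sigma=0.1):
    """Classify x with a Parzen-window probabilistic neural network (sketch).

    Each training sample acts as one radial-basis (pattern) neuron; the
    summation layer averages the Gaussian activations of each class and
    the output layer returns the class with the largest average.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for xj, yj in zip(train_X, train_y):
        d2 = sum((a - b) ** 2 for a, b in zip(x, xj))  # squared Euclidean distance
        sums[yj] += math.exp(-d2 / (2.0 * sigma ** 2))  # Gaussian kernel activation
        counts[yj] += 1
    scores = {label: sums[label] / counts[label] for label in sums}
    return max(scores, key=scores.get)


# Usage with hypothetical 2-D pose features.
train_X = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
train_y = ["Sitting", "Sitting", "Standing", "Standing"]
label = pnn_predict(train_X, train_y, [0.05, 0.02], sigma=0.3)
```

The only free parameter in this form is `sigma`. A small value makes each neuron respond only to very close samples, and a large value smooths the class densities.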

4.4. Human Activity Detection and Recognition

5. Conclusions and Future Work

- Human pose semantics from video images, for six different classes. The cubic SVM model achieved 95.97% correct classification in tests under conditions different from those of the training phase.

- Human activity semantics from video image sequences. The trained LSTM model achieved an overall accuracy of 88% in tests under conditions different from those of the training phase.

- Atomic activities, and the time interval during which each activity takes place.
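The last item measures how long each atomic activity lasts. One way to illustrate it is to collapse a sequence of per-frame activity labels into time segments. This sketch assumes a constant frame rate and assumes a label is available for every frame. The names `activity_intervals` and `total_time_per_activity`, the labels and the 10 fps value are all hypothetical.

```python
from itertools import groupby


def activity_intervals(frame_labels, fps):
    """Collapse per-frame labels into (label, start_s, end_s) segments.

    A segment starts at the first frame of a run of identical labels and
    ends where the next run starts, so its duration is end_s - start_s.
    """
    intervals = []
    frame = 0
    for label, run in groupby(frame_labels):
        n = sum(1 for _ in run)  # length of this run of identical labels
        intervals.append((label, frame / fps, (frame + n) / fps))
        frame += n
    return intervals


def total_time_per_activity(frame_labels, fps):
    """Sum the durations (in seconds) of all segments of each activity."""
    totals = {}
    for label, start, end in activity_intervals(frame_labels, fps):
        totals[label] = totals.get(label, 0.0) + (end - start)
    return totals


# Usage: hypothetical labels for 10 frames captured at 10 fps.
labels = ["sit"] * 3 + ["stand"] * 2 + ["sit"] * 5
segments = activity_intervals(labels, fps=10)
durations = total_time_per_activity(labels, fps=10)
```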

Author Contributions

Acknowledgments

Conflicts of Interest


| Class Number | Class Description | P 1 | P 2 | P 3 | P 4 | P 5 | P 6 | P 7 | P 8 | Size |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Lying down | 26 | 16 | 21 | 11 | 16 | 11 | 11 | 8 | 120 |
| 2 | Sitting | 22 | 20 | 9 | 10 | 19 | 15 | 12 | 13 | 120 |
| 3 | Standing | 18 | 19 | 11 | 21 | 19 | 12 | 10 | 10 | 120 |
| 4 | Slanted Right | 12 | 19 | 14 | 14 | 13 | 18 | 18 | 12 | 120 |
| 5 | Slanted Left | 18 | 29 | 12 | 14 | 13 | 18 | 12 | 12 | 120 |
| 6 | Crouching | 16 | 21 | 8 | 20 | 14 | 14 | 9 | 11 | 120 |

| Method | RMSE | Time Elapsed [msec] | |
|---|---|---|---|
| Cubic SVM | 0.99523 | 0.11785 | 1.3133 |
| Sugeno-SC | 0.73216 | 0.91893 | 1.7956 |
| PNN | 0.85338 | 0.65279 | 0.38707 |
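RMSE compares predicted and true outputs. As a working hypothesis, suppose the classifiers output a numeric class number (1 to 6) and RMSE is taken between predicted and true class numbers. Under that assumption it can be computed as below. The timing helper reports the mean wall-clock milliseconds per prediction. This section does not say whether "Time Elapsed" is measured per sample or per test set, so treat both helpers (`rmse`, `mean_prediction_time_ms`) as illustrative rather than the paper's exact definitions.

```python
import math
import time


def rmse(y_true, y_pred):
    """Root-mean-square error between two equal-length numeric sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mean_prediction_time_ms(predict, samples):
    """Average wall-clock time, in milliseconds, of predict(sample) per sample."""
    start = time.perf_counter()
    for s in samples:
        predict(s)
    return (time.perf_counter() - start) * 1000.0 / len(samples)


# Usage: hypothetical true vs. predicted class numbers (one mistake: 4 -> 6).
error = rmse([1, 2, 3, 4], [1, 2, 3, 6])
elapsed = mean_prediction_time_ms(lambda s: s * 2, [1, 2, 3])
```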

| Class Number | Class Description | Nr. Errors | Accuracy % |
|---|---|---|---|
| 1 | Lying down | 1 | 99.2 |
| 2 | Sitting | 3 | 97.6 |
| 3 | Standing | 2 | 98.4 |
| 4 | Slanted Right | 3 | 97.0 |
| 5 | Slanted Left | 6 | 95.0 |
| 6 | Crouching | 14 | 88.0 |
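Assume every class contributes 120 evaluated images, which is the class size given in the dataset table. The errors in the per-class table then sum to 29 out of 720 images. That reproduces the 95.97% overall accuracy reported for the cubic SVM in the conclusions. The short check below makes this arithmetic explicit. `ERRORS_PER_CLASS` and `overall_accuracy` are illustrative names.

```python
# Misclassified images per class, as listed in the per-class table.
ERRORS_PER_CLASS = {
    "Lying down": 1,
    "Sitting": 3,
    "Standing": 2,
    "Slanted Right": 3,
    "Slanted Left": 6,
    "Crouching": 14,
}


def overall_accuracy(errors_per_class, samples_per_class):
    """Percentage of correctly classified samples over all classes.

    Assumes every class was evaluated on the same number of samples.
    """
    total = samples_per_class * len(errors_per_class)
    wrong = sum(errors_per_class.values())
    return 100.0 * (total - wrong) / total


acc = overall_accuracy(ERRORS_PER_CLASS, samples_per_class=120)
print(f"{acc:.2f}%")  # prints 95.97%
```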

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gonçalves, P.J.S.; Lourenço, B.; Santos, S.; Barlogis, R.; Misson, A. Computer Vision Intelligent Approaches to Extract Human Pose and Its Activity from Image Sequences. Electronics 2020, 9, 159. https://doi.org/10.3390/electronics9010159
