gMSR: A Multi-GPU Algorithm to Accelerate a Massive Validation of Biclusters

Abstract

1. Introduction

2. Materials and Methods

2.1. Parallelization of the MSR Measure

- High level: the MSR value of each bicluster can be calculated as an independent task (see Equation (3)).

- Medium level: within each bicluster, the residue of every element can be processed independently (see Equation (1)).

- Low level: for each residue, every mean it involves (see Equation (2)) can be calculated independently.
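
For reference, the mean squared residue as defined by Cheng and Church can be written as follows. The notation is assumed and may differ from the paper's own symbols. A bicluster B = (I, J) consists of a subset I of rows (genes) and a subset J of columns (conditions) of the expression matrix, whose entries are a_ij. The row, column and overall means correspond to the low level above. Each residue r_ij corresponds to the medium level. The final average is the per-bicluster MSR computed at the high level.

$$
\begin{aligned}
a_{iJ} &= \frac{1}{|J|}\sum_{j \in J} a_{ij}, \qquad
a_{Ij} = \frac{1}{|I|}\sum_{i \in I} a_{ij}, \qquad
a_{IJ} = \frac{1}{|I|\,|J|}\sum_{i \in I}\sum_{j \in J} a_{ij} \\
r_{ij} &= a_{ij} - a_{iJ} - a_{Ij} + a_{IJ} \\
\mathrm{MSR}(I, J) &= \frac{1}{|I|\,|J|}\sum_{i \in I}\sum_{j \in J} r_{ij}^{2}
\end{aligned}
$$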

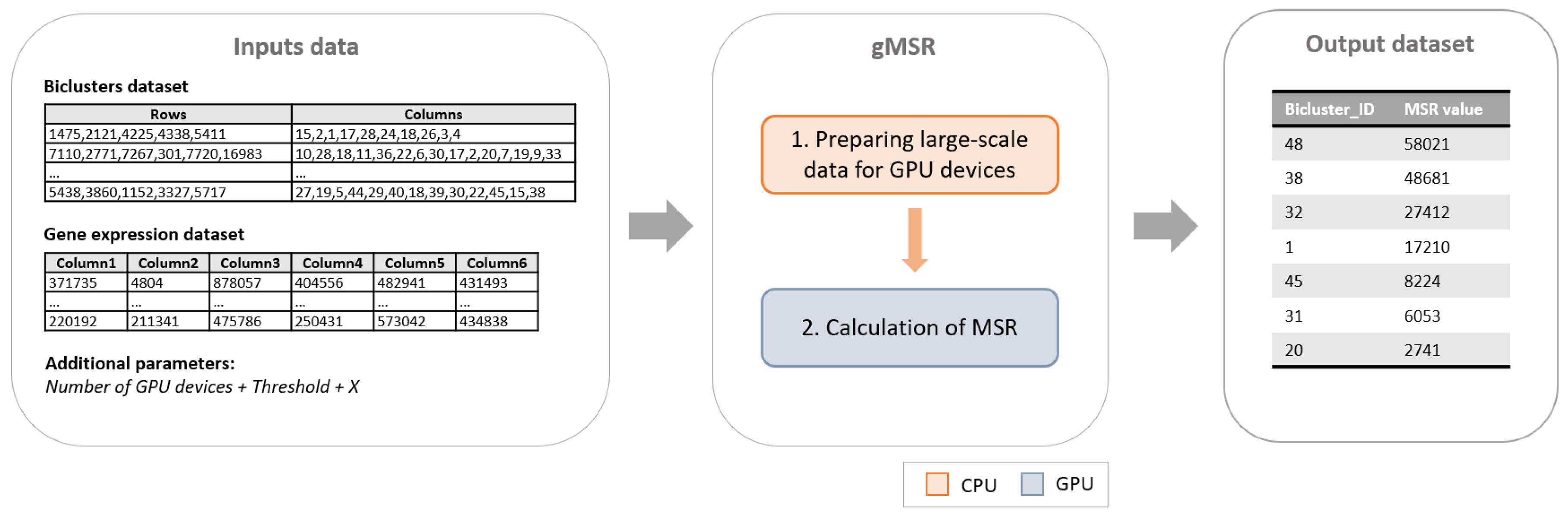

2.2. The gMSR Algorithm

2.2.1. Phase 1: Preparing Large-Scale Data for GPU Devices

- The gene expression dataset is stored in full in the global memory of each GPU device. The MSR of every bicluster is computed from this dataset, so keeping a complete copy on each GPU minimizes the number of data transfers required between the CPU and the GPUs.

- The set of biclusters is distributed over the remaining global memory of each GPU device. As can be observed in Figure 2, the set of biclusters stored in CPU memory is divided into parts, called chunks, which are distributed in a balanced way among all GPU devices. Each chunk is sized to fit the global memory still available on its GPU, so the storage capacity of every GPU is maximized.
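
The host-side planning in Phase 1 can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. The helper names `free_after_dataset` and `make_chunks`, the per-bicluster byte sizes, and the round-robin assignment of chunks to GPUs are all hypothetical choices made for illustration. Each chunk grows until the next bicluster would no longer fit in the free memory of the GPU that receives it.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Chunk:
    gpu: int    # index of the GPU that receives this chunk
    start: int  # index of the first bicluster in the chunk
    stop: int   # one past the index of the last bicluster in the chunk


def free_after_dataset(gpu_total_bytes: Sequence[int], dataset_bytes: int) -> List[int]:
    """Global memory left on each GPU once a full copy of the dataset is stored."""
    free = [total - dataset_bytes for total in gpu_total_bytes]
    if any(f <= 0 for f in free):
        raise ValueError("the dataset does not fit in the global memory of every GPU")
    return free


def make_chunks(bicluster_bytes: Sequence[int], free_bytes: Sequence[int]) -> List[Chunk]:
    """Cut the bicluster list into chunks that fit the free memory of their GPU.

    Chunks are handed to the GPUs in round-robin order (a hypothetical balancing
    policy), and each chunk is filled until the next bicluster would overflow it.
    """
    if bicluster_bytes and max(bicluster_bytes) > min(free_bytes):
        raise ValueError("a single bicluster is larger than the free memory of some GPU")
    chunks: List[Chunk] = []
    gpu, start, used = 0, 0, 0
    for i, size in enumerate(bicluster_bytes):
        if used + size > free_bytes[gpu]:
            # Close the current chunk and move on to the next GPU.
            chunks.append(Chunk(gpu, start, i))
            gpu = (gpu + 1) % len(free_bytes)
            start, used = i, 0
        used += size
    if start < len(bicluster_bytes):
        chunks.append(Chunk(gpu, start, len(bicluster_bytes)))
    return chunks


if __name__ == "__main__":
    free = free_after_dataset([64, 64], dataset_bytes=54)  # 10 bytes free per GPU
    for chunk in make_chunks([4] * 10, free):
        print(chunk)
```

Round-robin assignment gives every GPU roughly the same number of chunks. A real implementation would instead query each device for its free memory and size the chunks from that value.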

2.2.2. Phase 2: Calculation of MSR on GPU Devices

- Calculation of the means of a bicluster.

- Calculation of the residues of each element of a bicluster.

- Calculation of the MSR value of a bicluster.
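
The three steps can be sketched sequentially in Python. The sketch assumes the standard Cheng and Church definitions of the row, column and overall means, the residue r_ij = a_ij - a_iJ - a_Ij + a_IJ, and the MSR as the mean of the squared residues. A bicluster is represented here by hypothetical lists of row and column indices into the expression matrix. This is a minimal illustration, not the authors' CUDA implementation. On a GPU, each mean and each residue could be assigned to its own thread, and the final sum would become a parallel reduction.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def bicluster_means(data: Matrix, rows: Sequence[int], cols: Sequence[int]) -> Tuple[List[float], List[float], float]:
    """Step 1: row means, column means and overall mean of the bicluster."""
    row_means = [sum(data[i][j] for j in cols) / len(cols) for i in rows]
    col_means = [sum(data[i][j] for i in rows) / len(rows) for j in cols]
    # Every row has len(cols) elements, so the overall mean is the mean of the row means.
    overall = sum(row_means) / len(rows)
    return row_means, col_means, overall


def bicluster_residues(data, rows, cols, row_means, col_means, overall) -> List[List[float]]:
    """Step 2: residue of every element; each residue is independent of the others."""
    return [
        [data[i][j] - row_means[a] - col_means[b] + overall for b, j in enumerate(cols)]
        for a, i in enumerate(rows)
    ]


def bicluster_msr(residues: List[List[float]]) -> float:
    """Step 3: mean of the squared residues."""
    count = sum(len(row) for row in residues)
    return sum(r * r for row in residues for r in row) / count


def msr(data: Matrix, rows: Sequence[int], cols: Sequence[int]) -> float:
    row_means, col_means, overall = bicluster_means(data, rows, cols)
    return bicluster_msr(bicluster_residues(data, rows, cols, row_means, col_means, overall))


def msr_all(data: Matrix, biclusters: Sequence[Tuple[Sequence[int], Sequence[int]]]) -> List[float]:
    """Each bicluster is processed independently of the others."""
    return [msr(data, rows, cols) for rows, cols in biclusters]


if __name__ == "__main__":
    data = [[1.0, 0.0, 9.0], [0.0, 1.0, 9.0], [7.0, 7.0, 7.0]]
    print(msr_all(data, [([0, 1], [0, 1]), ([0, 1, 2], [2])]))
```

A perfectly additive bicluster (each value equal to a row effect plus a column effect) has an MSR of zero. Larger values indicate less coherent biclusters.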

2.3. Datasets Description

3. Results and Discussion

3.1. Synthetic Biclusters Analysis

3.1.1. Performance Evaluation

3.1.2. Scalability Evaluation

3.2. Real Biclusters Analysis

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Figures and Tables

| Biclusters | MSR Sequential | gMSR(1) | gMSR(2) | gMSR(4) | gMSR(8) |
|---|---|---|---|---|---|
| 50,000 | 193.34 s | 0.75 s | 0.75 s | 0.94 s | 1.53 s |
| 100,000 | 386.565 s | 0.99 s | 0.92 s | 1.07 s | 1.58 s |
| 150,000 | 579.779 s | 1.16 s | 1.04 s | 1.16 s | 1.72 s |
| 200,000 | 773.741 s | 1.46 s | 1.23 s | 1.36 s | 1.89 s |
| 250,000 | 966.482 s | 1.79 s | 1.47 s | 1.56 s | 2.04 s |
| 300,000 | 1160.55 s | 2.19 s | 1.70 s | 1.72 s | 2.27 s |
| 350,000 | 1353.08 s | 2.41 s | 1.73 s | 1.93 s | 2.55 s |
| 400,000 | 1546.94 s | 2.97 s | 2.14 s | 2.24 s | 2.78 s |
| 450,000 | 1740.07 s | 3.14 s | 2.41 s | 2.56 s | 3.09 s |
| 500,000 | 1932.82 s | 3.60 s | 2.87 s | 2.90 s | 3.37 s |
| 550,000 | 2127.71 s | 3.88 s | 3.09 s | 3.06 s | 3.54 s |
| 600,000 | 2320.18 s | 4.26 s | 3.52 s | 3.41 s | 3.92 s |
| 650,000 | 2520.62 s | 4.80 s | 3.98 s | 3.78 s | 4.31 s |
| 700,000 | 2709.06 s | 5.11 s | 4.35 s | 4.22 s | 4.64 s |
| 750,000 | 2902.9 s | 5.69 s | 4.84 s | 4.67 s | 5.08 s |
| 800,000 | 3096.84 s | 6.30 s | 5.25 s | 5.10 s | 5.57 s |
| 850,000 | 3276.43 s | 6.85 s | 5.78 s | 5.57 s | 6.04 s |
| 900,000 | 3462.87 s | 7.40 s | 6.22 s | 6.12 s | 6.54 s |
| 950,000 | 3675.22 s | 8.18 s | 6.85 s | 6.65 s | 6.97 s |
| 1,000,000 | 3869.25 s | 8.94 s | 7.37 s | 7.20 s | 7.51 s |
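
Speedups can be read off the table above by dividing the sequential runtime by the gMSR runtime. The short Python sketch below does this for the 1,000,000-bicluster row. The values are copied from that row, and the helper names are hypothetical.

```python
from typing import Dict

# Runtimes in seconds for 1,000,000 biclusters, copied from the table above.
SEQUENTIAL_S = 3869.25
GMSR_S = {1: 8.94, 2: 7.37, 4: 7.20, 8: 7.51}  # number of GPUs -> gMSR runtime


def speedup(t_ref: float, t_new: float) -> float:
    """Classic speedup: how many times faster t_new is than t_ref."""
    return t_ref / t_new


def speedups(t_ref: float, runtimes: Dict[int, float]) -> Dict[int, float]:
    return {gpus: speedup(t_ref, t) for gpus, t in runtimes.items()}


if __name__ == "__main__":
    for gpus, s in speedups(SEQUENTIAL_S, GMSR_S).items():
        print(f"gMSR({gpus}): {s:.1f}x faster than sequential MSR")
```

For this row, the ratios are roughly 433x, 525x, 537x and 515x for one, two, four and eight GPUs, respectively. At this workload, going from four to eight GPUs does not reduce the runtime further.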

| Bicluster Size | MSR Sequential | gMSR(1) | gMSR(2) | gMSR(4) | gMSR(8) |
|---|---|---|---|---|---|
| 5 × 5 | 2.76 s | 2.12 s | 2.05 s | 2.12 s | 2.59 s |
| 10 × 10 | 27.83 s | 2.23 s | 2.21 s | 2.19 s | 2.73 s |
| 20 × 20 | 406.84 s | 2.85 s | 2.46 s | 2.55 s | 3.09 s |
| 30 × 30 | 1934.68 s | 3.55 s | 2.70 s | 2.91 s | 3.38 s |
| 40 × 40 | 5977.15 s | 4.38 s | 3.04 s | 3.10 s | 3.57 s |
| 50 × 50 | 14,378.5 s | 5.10 s | 3.52 s | 3.30 s | 3.75 s |
| 65 × 65 | 40,395.9 s | 6.85 s | 4.47 s | 3.77 s | 3.93 s |

| Biclusters | gMSR(1) | gMSR(2) | gMSR(4) | gMSR(8) |
|---|---|---|---|---|
| 50,000 | 6.55 s | 2.45 s | 2.46 s | 2.95 s |
| 100,000 | 7.40 s | 3.71 s | 3.72 s | 4.19 s |
| 150,000 | 8.52 s | 5.34 s | 5.10 s | 5.58 s |
| 200,000 | 9.44 s | 7.08 s | 6.42 s | 6.76 s |
| 250,000 | 10.78 s | 8.52 s | 7.78 s | 8.11 s |
| 300,000 | 11.94 s | 10.29 s | 9.40 s | 9.51 s |
| 350,000 | 14.11 s | 11.76 s | 10.76 s | 10.76 s |
| 400,000 | 15.69 s | 13.49 s | 12.26 s | 12.11 s |
| 450,000 | 17.01 s | 14.51 s | 12.82 s | 12.47 s |
| 500,000 | 18.31 s | 15.58 s | 14.66 s | 14.13 s |
| 550,000 | 20.26 s | 16.94 s | 15.93 s | 15.23 s |
| 600,000 | 21.57 s | 18.44 s | 17.45 s | 16.89 s |
| 650,000 | 22.93 s | 19.84 s | 18.89 s | 18.34 s |
| 700,000 | 24.60 s | 21.53 s | 20.25 s | 19.99 s |
| 750,000 | 26.23 s | 22.86 s | 21.74 s | 20.98 s |
| 800,000 | 27.88 s | 24.34 s | 23.31 s | 22.78 s |
| 850,000 | 29.83 s | 25.92 s | 25.07 s | 24.46 s |
| 900,000 | 31.50 s | 27.41 s | 25.98 s | 24.96 s |
| 950,000 | 33.48 s | 29.11 s | 28.18 s | 26.71 s |
| 1,000,000 | 35.52 s | 30.72 s | 28.85 s | 27.28 s |
| 2,000,000 | 76.80 s | 64.01 s | 59.90 s | 56.76 s |
| 4,000,000 | 182.60 s | 160.59 s | 143.11 s | 130.55 s |
| 6,000,000 | 316.01 s | 289.01 s | 272.39 s | 235.22 s |
| 8,000,000 | 475.89 s | 451.27 s | 422.36 s | 378.34 s |
| 17,331,328 | 1597.68 s | 1431.62 s | 1218.39 s | 989.87 s |
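
The table above has no sequential column, so scalability across GPUs can be summarized relative to gMSR(1). The relative speedup is S_p = T_1 / T_p and the parallel efficiency is E_p = S_p / p, where p is the number of GPUs. The Python sketch below applies these standard definitions to the 17,331,328-bicluster row. The values are copied from the table and the helper name is hypothetical.

```python
from typing import Dict, Tuple

# Runtimes in seconds for 17,331,328 biclusters, copied from the table above.
RUNTIMES_S = {1: 1597.68, 2: 1431.62, 4: 1218.39, 8: 989.87}  # number of GPUs -> runtime


def scaling(runtimes: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Return {gpus: (relative speedup T1/Tp, parallel efficiency speedup/p)}."""
    t1 = runtimes[1]
    return {p: (t1 / tp, t1 / tp / p) for p, tp in runtimes.items()}


if __name__ == "__main__":
    for p, (s, e) in scaling(RUNTIMES_S).items():
        print(f"{p} GPU(s): speedup {s:.2f}, efficiency {e:.0%}")
```

For this row, eight GPUs give a relative speedup of about 1.61, an efficiency of about 20%. The same calculation on the 1,000,000-bicluster row gives about 1.30 (35.52 s / 27.28 s).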


| Bicluster ID | MSR |
|---|---|
| 49,448 | 61.6479 |
| 49,645 | 76.8776 |
| 13,489 | 79.617 |
| 49,446 | 92.3971 |
| 49,632 | 101.599 |
| 49,631 | 108 |
| 49,474 | 112.931 |
| 10,142 | 116.005 |
| 49,642 | 124.366 |
| 14,333 | 127.386 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

López-Fernández, A.; Rodríguez-Baena, D.S.; Gómez-Vela, F. gMSR: A Multi-GPU Algorithm to Accelerate a Massive Validation of Biclusters. Electronics 2020, 9, 1782. https://doi.org/10.3390/electronics9111782