A Simulation Tool for Evaluating Video Streaming Architectures in Vehicular Network Scenarios

Abstract

1. Introduction

2. Related Work

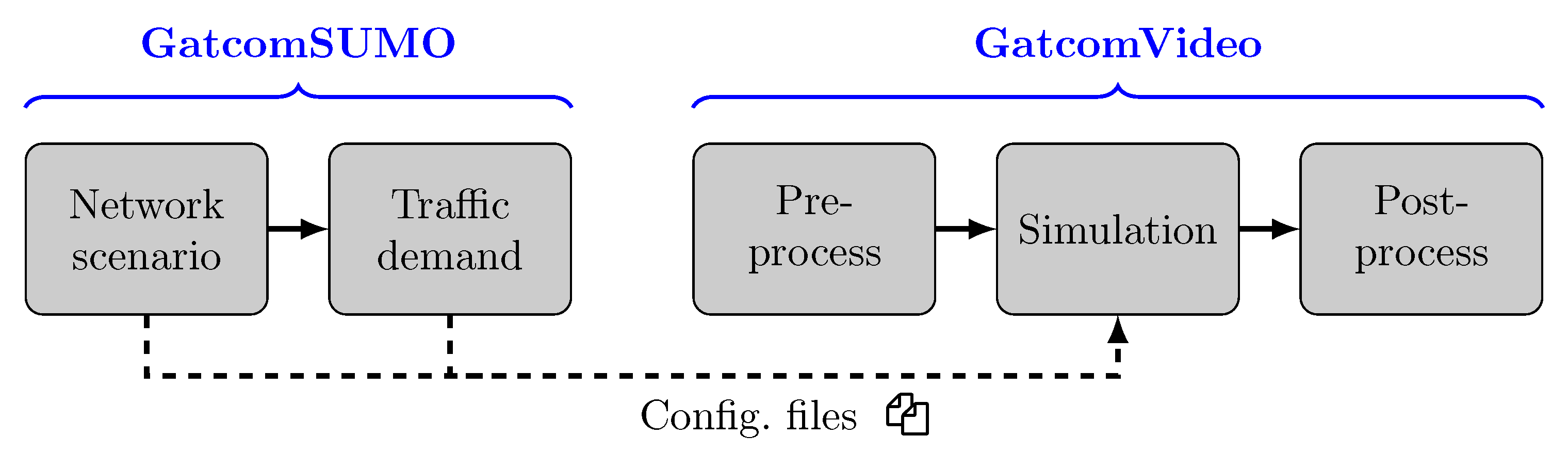

3. VDSF-VN Simulation Framework

3.1. GatcomSUMO

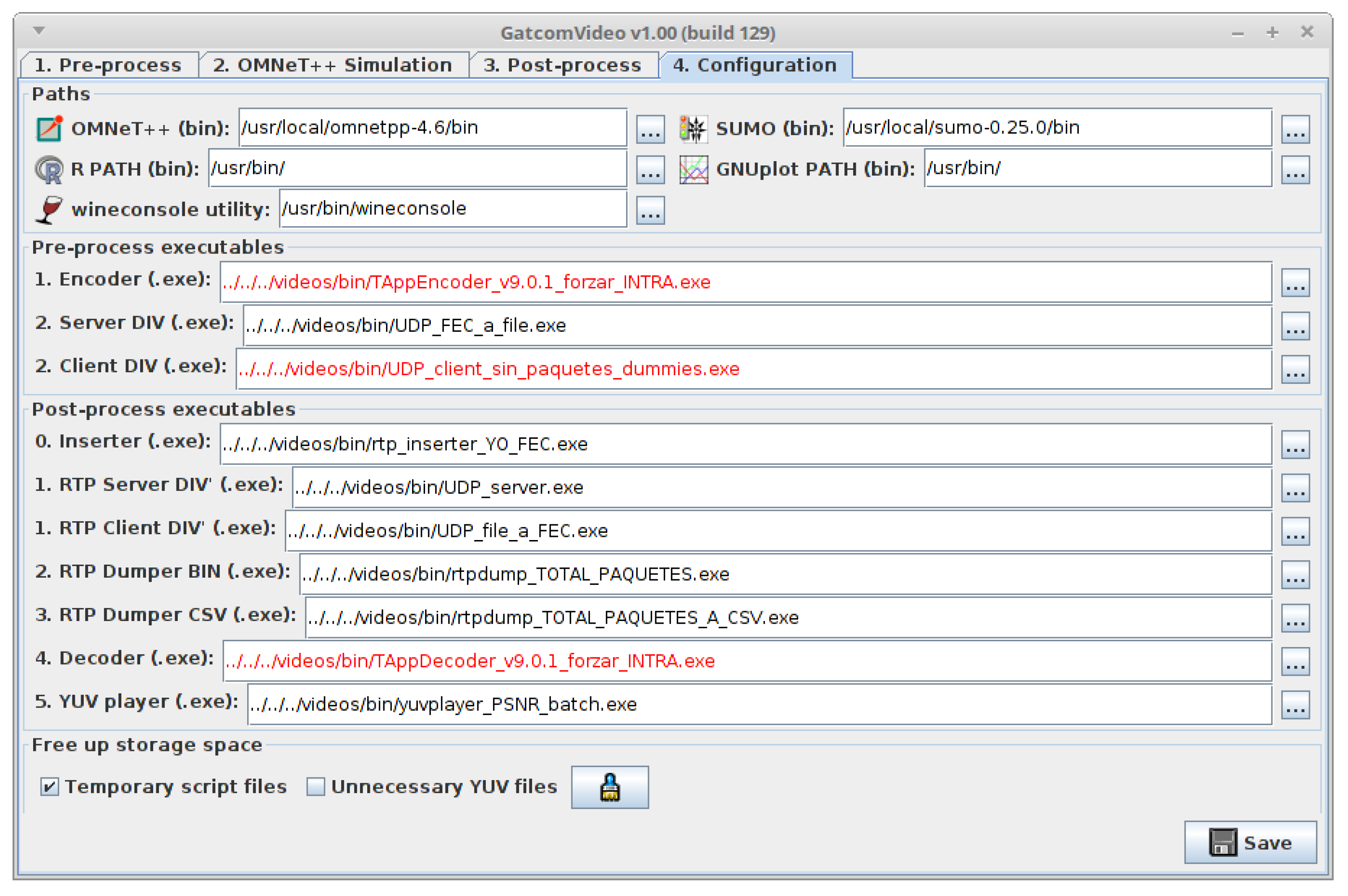

3.2. GatcomVideo

4. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Garrido Abenza, P.P.; Malumbres, M.P.; Piñol, P.; López Granado, O. A Simulation Tool for Evaluating Video Streaming Architectures in Vehicular Network Scenarios. Electronics 2020, 9, 1970. https://doi.org/10.3390/electronics9111970
