Recognition of Drivers’ Activity Based on 1D Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Methods

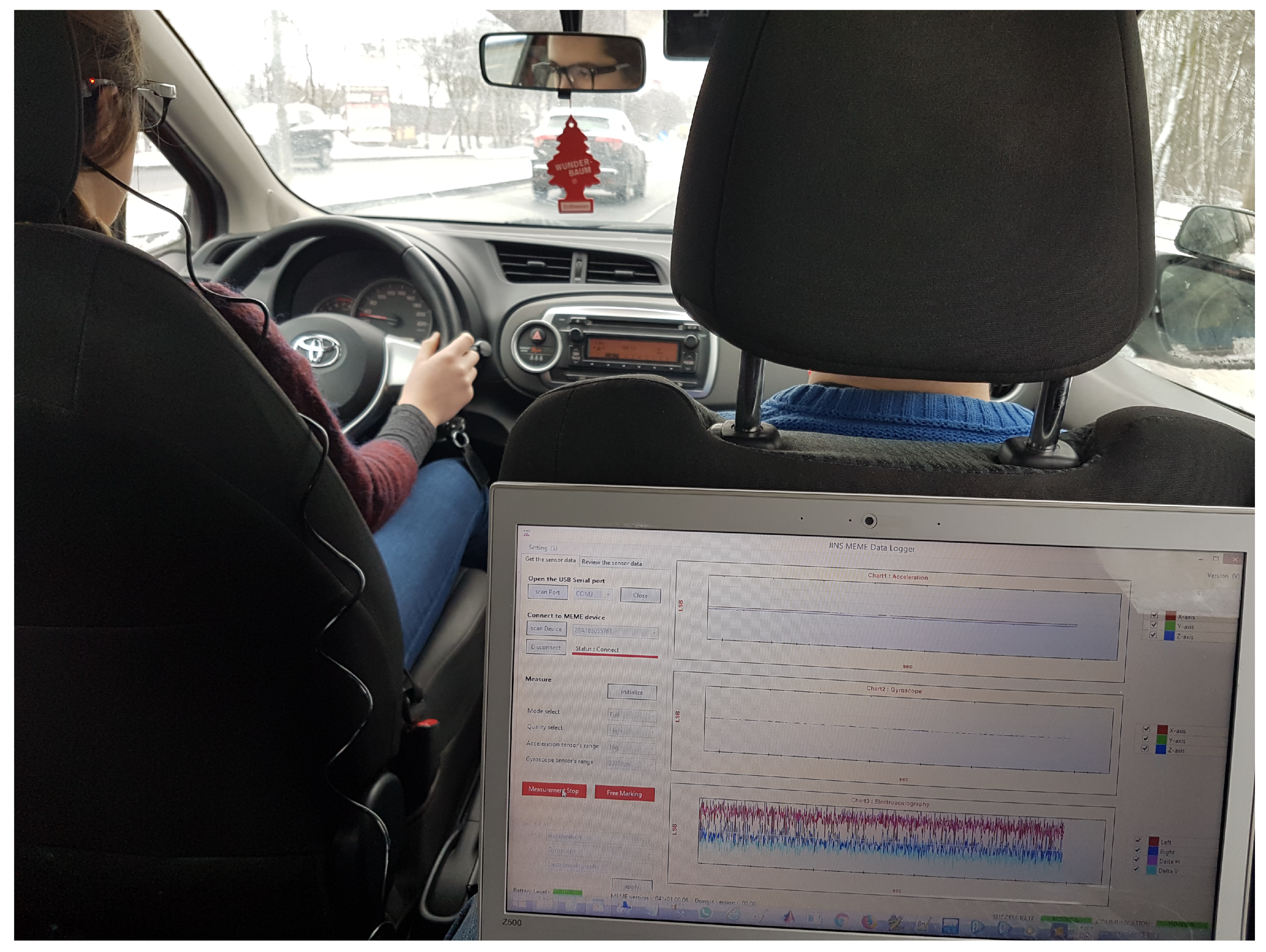

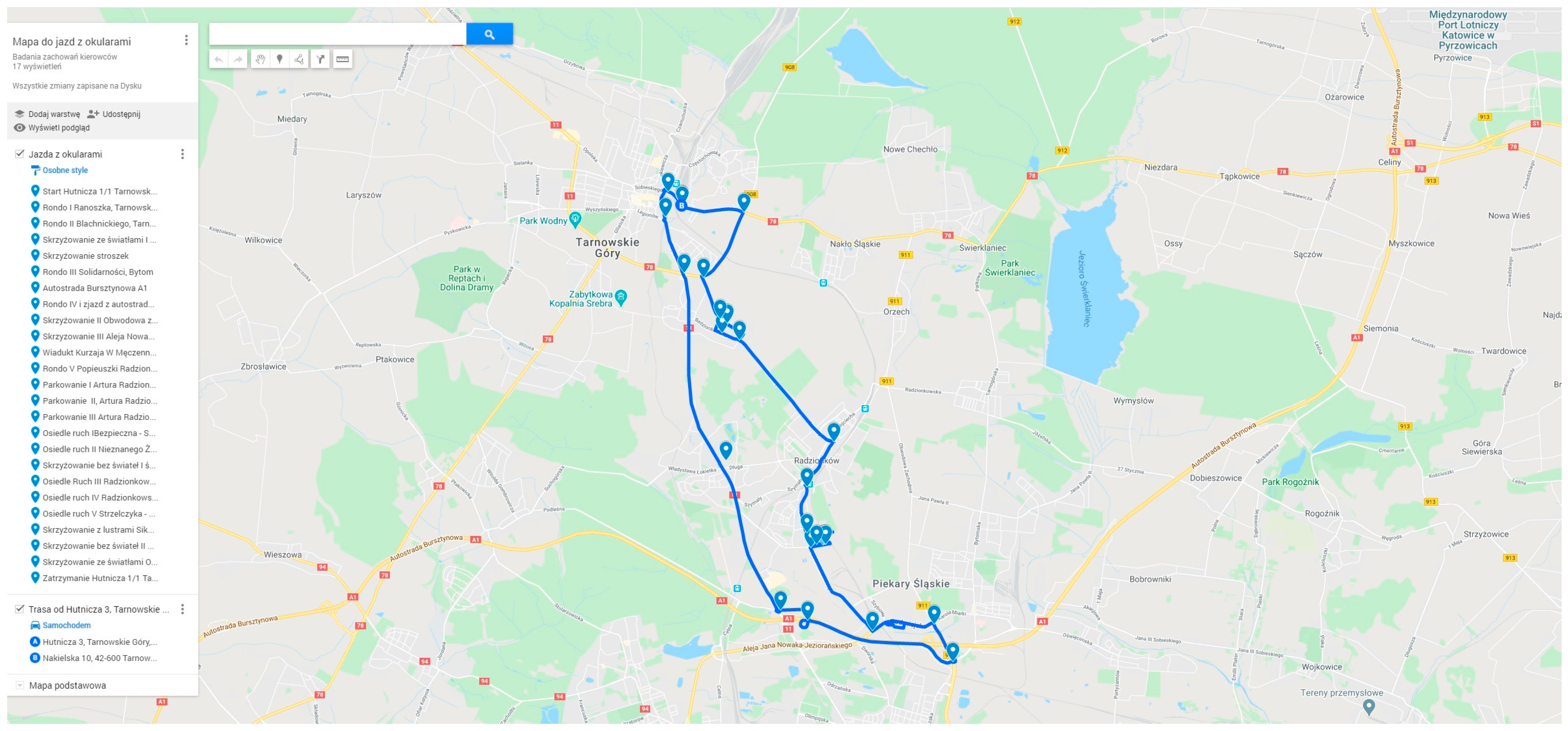

2.1. Experiment Setup

- driving on a motorway;

- driving straight ahead in city traffic;

- driving straight ahead on a section outside the urban area;

- driving straight ahead in residential traffic;

- driving through a roundabout (right turn, driving straight ahead and left turn);

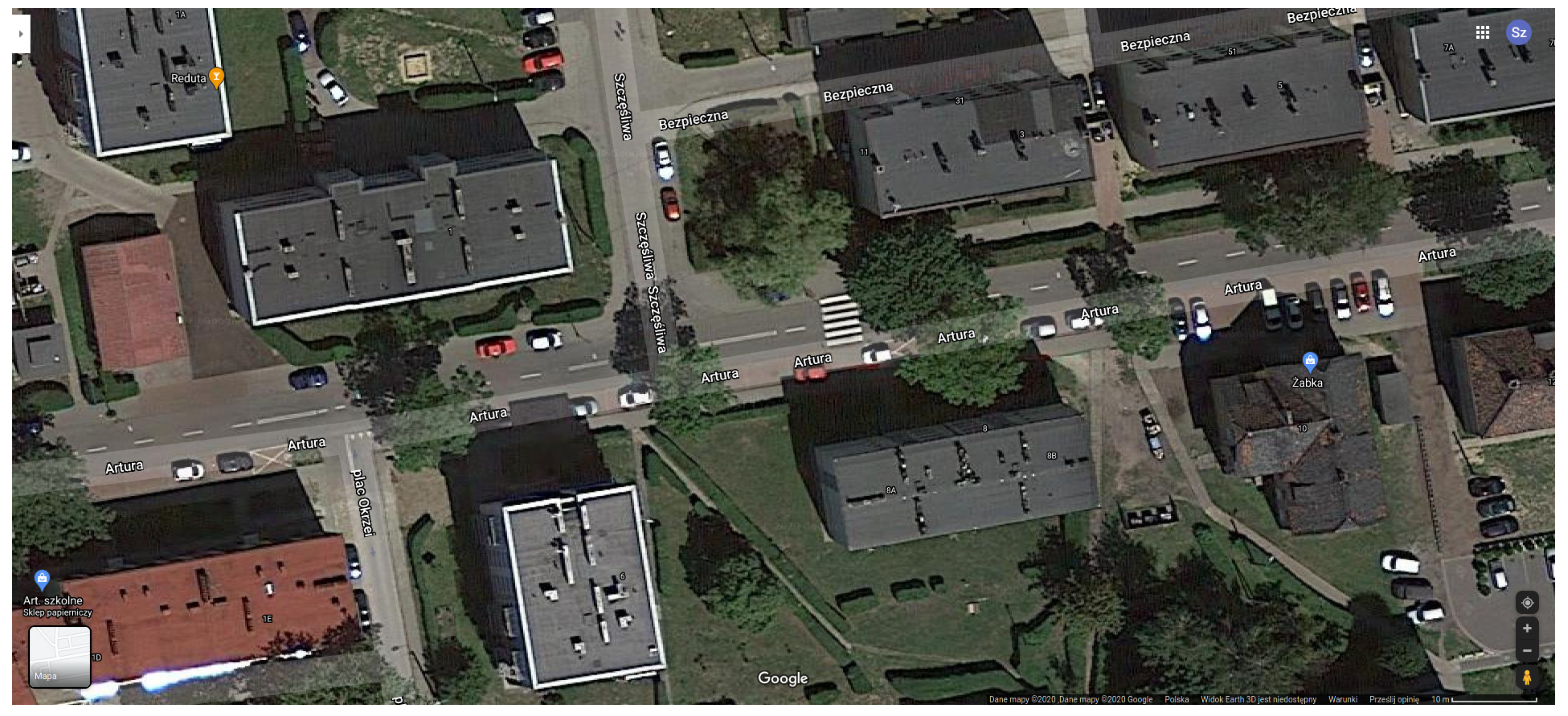

- driving through an intersection (right turn, driving straight ahead and left turn);

- parking (parallel, perpendicular, angled).

2.2. Data Preprocessing

- parking: 120 recordings,

- driving through a roundabout: 120 recordings,

- driving in city traffic: 160 recordings,

- driving through an intersection: 120 recordings.

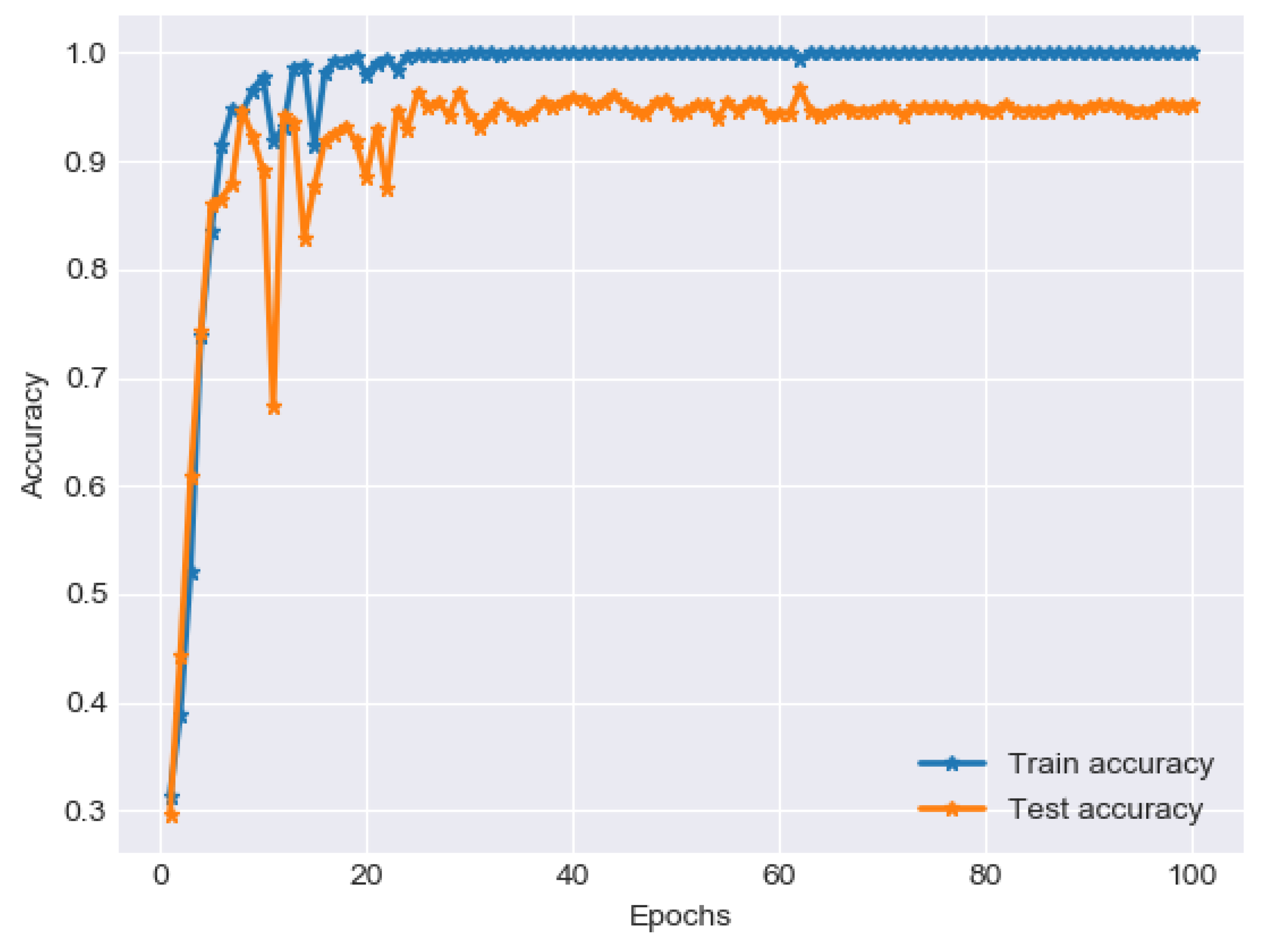

2.3. Classification

- Convolution with max pooling block: the first convolutional layer produces 64 feature maps, which are passed through an activation function to capture non-linear patterns and then through a pooling layer with a kernel size of two to reduce the extracted information. The second convolutional layer generates 32 feature maps with a kernel of size three (as in the first block), again followed by a rectified linear unit (ReLU) and a pooling layer [35]. Although the kernel of a convolutional layer can be much larger in a 1D CNN than in its two-dimensional (2D) counterpart, the best results were achieved with the smaller kernel.

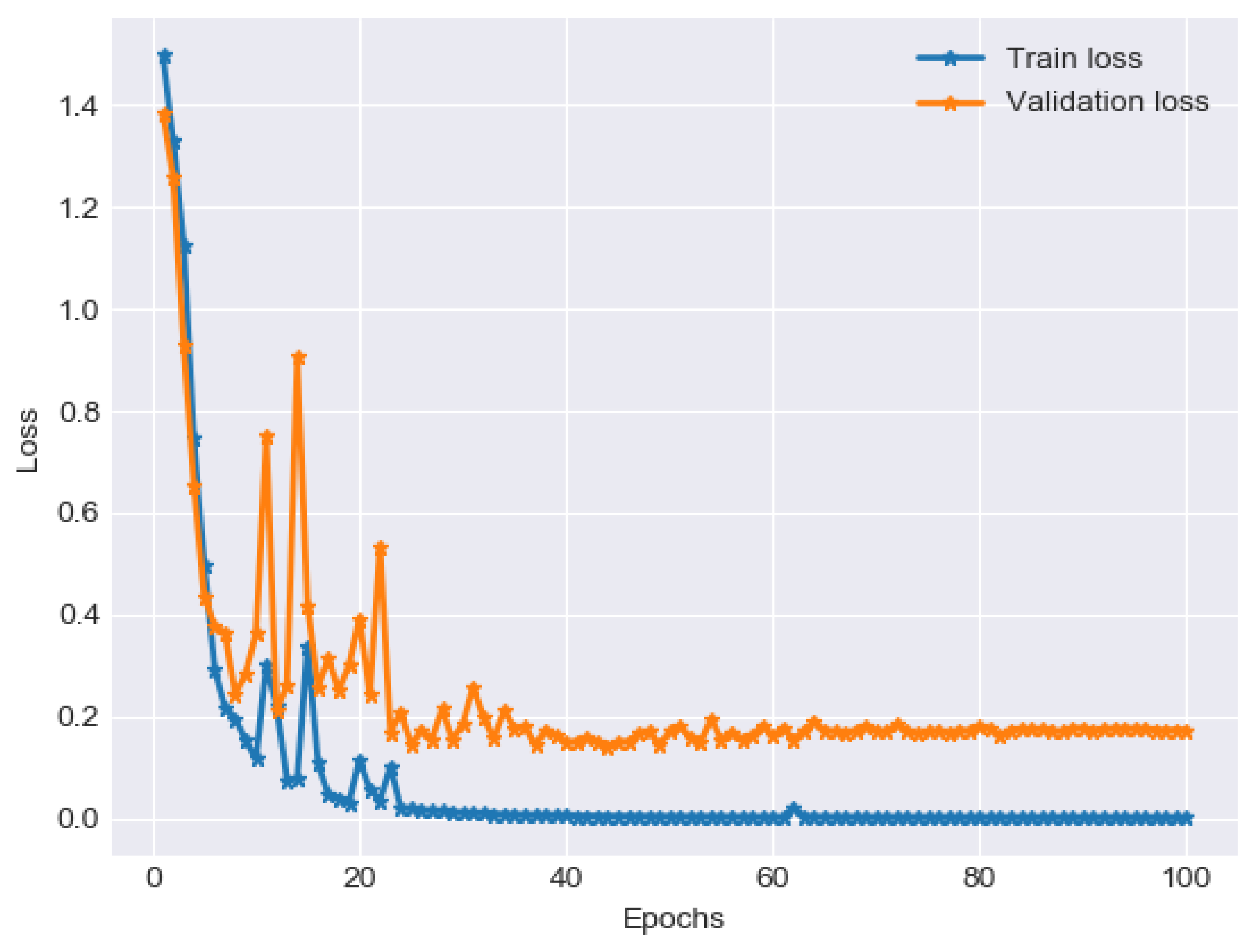

- Dropout layer: the dropout rate was set to 0.5. This layer turned out to be a key element because it prevents overfitting at the beginning of the training phase [36].

- Dense and flatten blocks: the feature maps obtained from the second convolution and pooling block are flattened into a one-dimensional representation and classified by a single, final layer of four neurons followed by a softmax activation function, which outputs the class probabilities.
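To make the layer sizes described above concrete, the following sketch traces output shapes and trainable parameter counts through the stack. It is a minimal illustration rather than the authors' implementation. The input window (100 samples, 10 channels), the unpadded convolution with stride 1, the kernel size of the second pooling layer, and the position of the dropout layer directly before flattening are all assumptions that the text does not state, and the function names are hypothetical.

```python
def conv1d_out(length, kernel, stride=1):
    """Output length of an unpadded ('valid') 1D convolution."""
    return (length - kernel) // stride + 1


def pool1d_out(length, size):
    """Output length of non-overlapping pooling with the given window."""
    return length // size


def trace_architecture(length, channels, n_classes=4):
    """Return (layer name, output shape, trainable parameters) per layer."""
    layers = []

    # Block 1: 64 feature maps, kernel size 3, ReLU, max pooling of size 2.
    length = conv1d_out(length, 3)
    layers.append(("conv1d_1 + ReLU", (length, 64), (3 * channels + 1) * 64))
    length = pool1d_out(length, 2)
    layers.append(("max_pool_1", (length, 64), 0))

    # Block 2: 32 feature maps, kernel size 3, ReLU, max pooling.
    # Pool size 2 is assumed here; the text gives it only for block 1.
    length = conv1d_out(length, 3)
    layers.append(("conv1d_2 + ReLU", (length, 32), (3 * 64 + 1) * 32))
    length = pool1d_out(length, 2)
    layers.append(("max_pool_2", (length, 32), 0))

    # Dropout (rate 0.5): no parameters, shape unchanged. Placement assumed.
    layers.append(("dropout(0.5)", (length, 32), 0))

    # Flatten, then a single dense layer with one neuron per class.
    flat = length * 32
    layers.append(("flatten", (flat,), 0))
    layers.append(("dense + softmax", (n_classes,), (flat + 1) * n_classes))
    return layers


if __name__ == "__main__":
    # Hypothetical input: 100 time steps, 10 sensor channels.
    for name, shape, params in trace_architecture(100, 10):
        print(f"{name:18s} {str(shape):10s} {params:6d}")
```

Under these assumed input dimensions, the final dense layer holds a noticeable share of the parameters, which is one reason a dropout layer placed before it can matter for overfitting.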

3. Results

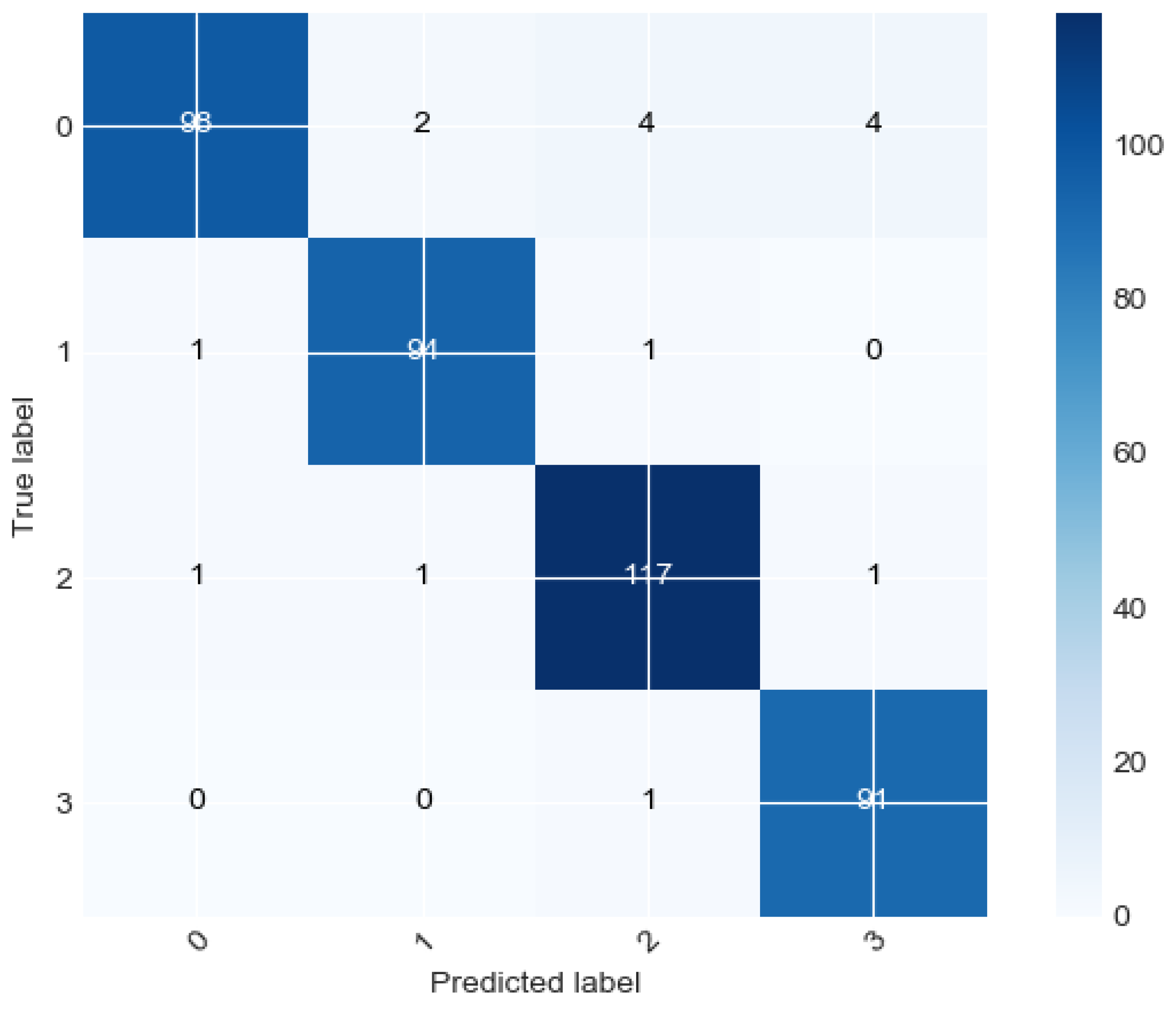

- for parking: ten out of 108 signals were classified incorrectly (two as driving through a roundabout, four as driving in city traffic and four as driving through an intersection);

- for driving through a roundabout: two out of 96 signals were classified incorrectly (one as parking and one as driving in city traffic);

- for driving in city traffic: three out of 120 signals were classified incorrectly (one as each of the other three classes);

- for driving through an intersection: one out of 92 signals was classified incorrectly (as driving in city traffic).
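The counts in the list above are enough to reconstruct the confusion matrix. The sketch below does so in plain Python and derives the share of correctly classified test signals from it. The class order follows the category labels 0 to 3 used in the results table, and treating the three city-traffic errors as one per other class is an interpretation of the list. The names `CLASSES`, `CONFUSION`, `row_totals` and `accuracy` are illustrative only.

```python
CLASSES = ["parking", "roundabout", "city traffic", "intersection"]

# Rows: true class, columns: predicted class (same order as CLASSES).
# Off-diagonal entries are the reported errors; each diagonal entry is
# the class total minus its errors.
CONFUSION = [
    [98, 2, 4, 4],    # parking: 108 signals, 10 errors
    [1, 94, 1, 0],    # roundabout: 96 signals, 2 errors
    [1, 1, 117, 1],   # city traffic: 120 signals, 3 errors
    [0, 0, 1, 91],    # intersection: 92 signals, 1 error
]


def row_totals(matrix):
    """Number of test signals per true class."""
    return [sum(row) for row in matrix]


def accuracy(matrix):
    """Share of signals on the diagonal, i.e. classified correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


if __name__ == "__main__":
    for name, total in zip(CLASSES, row_totals(CONFUSION)):
        print(f"{name}: {total} signals")
    print(f"accuracy from these counts: {accuracy(CONFUSION):.3f}")
```

The value computed this way (400 of 416 signals) is derived from the listed counts only and is not a figure quoted from the article.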

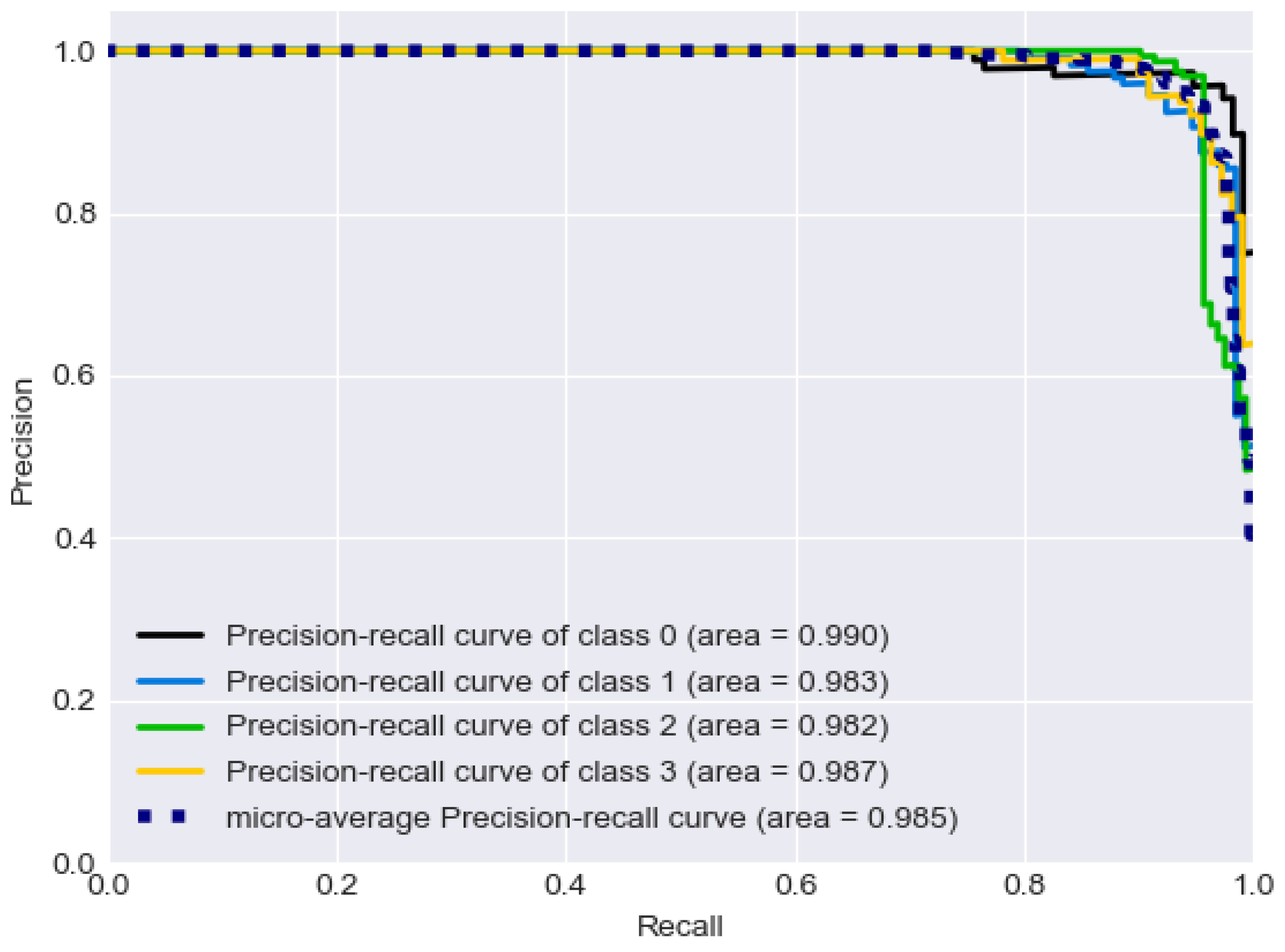

- Precision is the proportion of true positives among all samples retrieved as positive (true positives and false positives).

- Recall (sensitivity) is the proportion of true positives among all samples that are actually positive (true positives and false negatives).

- F1 score is the harmonic mean of precision and recall; it is high only when both precision and recall are high.
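In terms of the per-class counts of true positives (TP), false positives (FP) and false negatives (FN), the three metrics can be written as follows. These are the standard definitions; the symbols are introduced here for illustration and are not notation taken from the article.

$$
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$

As a worked example, take the parking class with the misclassification counts reported in the Results section: TP = 98 (108 signals minus 10 errors), FN = 10, and FP = 2 (one roundabout signal and one city-traffic signal labelled as parking, assuming the three city-traffic errors are spread one per other class).

$$
\mathrm{Precision} = \frac{98}{100} = 0.98, \qquad
\mathrm{Recall} = \frac{98}{108} \approx 0.907, \qquad
F_1 = \frac{196}{208} \approx 0.942
$$

These values match the parking row of the metrics table.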

4. Discussion

- Doniec et al.’s study, which used the BFS approach with 2-fold cross-validation and reached 62% for seven activities (driving on a highway, parking in front, parallel parking, slope parking, driving around a roundabout, driving in city traffic, driving in residential traffic) and 85% for four activities (driving on a freeway, city traffic, parking, driving in a residential area) [17];

- Jiang et al.’s study, which used k-nearest neighbors (kNN) and SVM classifiers on signals from wearable devices (90%) [38];

- Vora et al.’s study, which used tracking of the driver’s eyes in video recordings and a CNN-based classifier (95.2%) [25];

- Galarza et al.’s study, which used a video recording of the driver’s face, a statistical model and a Google API-based classifier (93.37%) [39];

- Mulhall et al.’s study, which used binary logistic regression for recognition of lane departures (73%) and microsleeps (96%) during driving in real road conditions [40].

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Ethical Statements

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 1D | One-dimensional |
| 2D | Two-dimensional |
| BFS | Best fit sequence[s] |
| CNN | Convolutional neural network |
| CUDA | Compute unified device architecture |
| ECG | Electrocardiogram |
| EEG | Electroencephalogram |
| EOG | Electrooculography |
| GAN | Generative adversarial network |
| GPU | Graphics processing unit |
| IMU | Inertial measurement unit |
| kNN | K-nearest neighbors |
| LSTM | Long short-term memory |
| MARS | Masking action relevant stimuli |
| RNN | Recurrent neural network |
| ReLU | Rectified linear unit |
| ROC | Receiver operating characteristic |
| SVM | Support vector machine |
| VRAM | Video RAM |

References

- Salvucci, D.D. Modeling Driver Behavior in a Cognitive Architecture. Hum. Factors 2006, 48, 362–380.

- Braunagel, C.; Geisler, D.; Rosenstiel, W.; Kasneci, E. Online Recognition of Driver-Activity Based on Visual Scanpath Classification. IEEE Intell. Transp. Syst. Mag. 2017, 9, 23–36.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition. In Proceedings of the 11th International Conference on Ubiquitous Computing, Orlando, FL, USA, 30 September–3 October 2009; pp. 41–50.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 741–753.

- Huda, K.; Hossain, M.S.; Ahmad, M. Recognition of reading activity from the saccadic samples of electrooculography data. In Proceedings of the 2015 International Conference on Electrical Electronic Engineering (ICEEE), Rajashi, Bangladesh, 4–6 November 2015; pp. 73–76.

- D’Souza, S.; Natarajan, S. Recognition of EOG based reading task using AR features. In Proceedings of the International Conference on Circuits, Communication, Control and Computing (I4C), Bangalore, India, 20–22 November 2014; pp. 113–117.

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Driver Activity Recognition for Intelligent Vehicles: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2019, 68, 5379–5390.

- Sigari, M.H.; Pourshahabi, M.R.; Soryani, M.; Fathy, M. A Review on Driver Face Monitoring Systems for Fatigue and Distraction Detection. Int. J. Adv. Sci. Technol. 2014, 64, 73–100.

- Niwa, S.; Yuki, M.; Noro, T.; Shioya, S.; Inoue, K. A Wearable Device for Traffic Safety - A Study on Estimating Drowsiness with Eyewear, JINS MEME; SAE Technical Paper Series; SAE International: Detroit, MI, USA, 2016.

- Joseph, D.P.; Miller, S.S. Apical and basal membrane ion transport mechanisms in bovine retinal pigment epithelium. J. Physiol. 1991, 435, 439–463.

- Lagodzinski, P.; Shirahama, K.; Grzegorzek, M. Codebook-based electrooculography data analysis towards cognitive activity recognition. Comput. Biol. Med. 2017, 95.

- Grzegorzek, M. Sensor Data Understanding; Logos Verlag Berlin GmbH: Berlin, Germany, 2017.

- Shirahama, K.; Köping, L.; Grzegorzek, M. Codebook Approach for Sensor-Based Human Activity Recognition. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, UbiComp ’16, Heidelberg, Germany, 12–16 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 197–200.

- JINS MEME. JINS MEME Glasses Specifications. Available online: https://www.cnet.com/reviews/jins-meme-preview/ (accessed on 17 June 2020).

- Braunagel, C.; Kasneci, E.; Stolzmann, W.; Rosenstiel, W. Driver-activity recognition in the context of conditionally autonomous driving. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems (ITSC), Las Palmas, Spain, 15–18 September 2015; pp. 1652–1657.

- Khushaba, R.N.; Kodagoda, S.; Lal, S.; Dissanayake, G. Driver drowsiness classification using fuzzy wavelet-packet-based feature-extraction algorithm. IEEE Trans. Biomed. Eng. 2011, 58, 121–131.

- Doniec, R.; Sieciński, S.; Piaseczna, N.; Mocny-Pachońska, K.; Lang, M.; Szymczyk, J. The Classifier Algorithm for Recognition of Basic Driving Scenarios. In Information Technology in Biomedicine; Piętka, E., Badura, P., Kawa, J., Więcławek, W., Eds.; Springer: Cham, Switzerland, 2020; pp. 359–367.

- Stapel, J.; Hassnaoui, M.E.; Happee, R. Measuring Driver Perception: Combining Eye-Tracking and Automated Road Scene Perception. Hum. Factors J. Hum. Factors Ergon. Soc. 2020.

- Gao, Z.K.; Li, Y.L.; Yang, Y.X.; Ma, C. A recurrence network-based convolutional neural network for fatigue driving detection from EEG. Chaos Interdiscip. J. Nonlinear Sci. 2019, 29, 113126.

- Karuppusamy, N.S.; Kang, B.Y. Multimodal System to Detect Driver Fatigue Using EEG, Gyroscope, and Image Processing. IEEE Access 2020, 8, 129645–129667.

- Jiao, Y.; Deng, Y.; Luo, Y.; Lu, B.L. Driver sleepiness detection from EEG and EOG signals using GAN and LSTM networks. Neurocomputing 2020, 408, 100–111.

- Shin, J.; Kim, S.; Yoon, T.; Joo, C.; Jung, H.I. Smart Fatigue Phone: Real-time estimation of driver fatigue using smartphone-based cortisol detection. Biosens. Bioelectron. 2019, 136, 106–111.

- Gao, Z.; Wang, X.; Yang, Y.; Mu, C.; Cai, Q.; Dang, W.; Zuo, S. EEG-Based Spatio–Temporal Convolutional Neural Network for Driver Fatigue Evaluation. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 2755–2763.

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2.

- Vora, S.; Rangesh, A.; Trivedi, M.M. Driver Gaze Zone Estimation Using Convolutional Neural Networks: A General Framework and Ablative Analysis. IEEE Trans. Intell. Veh. 2018, 3, 254–265.

- Act of 5 January 2011 on Vehicle Drivers. Journal of Laws of the Republic of Poland (Dz.U. 2011 nr 30 poz. 151). Available online: http://prawo.sejm.gov.pl/isap.nsf/DocDetails.xsp?id=WDU20110300151 (accessed on 24 November 2020).

- Act of 6 September 2001 on the road traffic. Journal of Laws of the Republic of Poland (Dz.U. 1997 nr 28 poz. 152). Available online: http://isap.sejm.gov.pl/isap.nsf/download.xsp/WDU20011251371/U/D20011371Lj.pdf (accessed on 24 November 2020).

- Regulation of the Minister of Infrastructure of 28 June 2019 on Examining Applicants for Driving Licenses, Training, Examining and Obtaining Qualifications by Examiners and Samples of Documents Used in These Matters. Journal of Laws of the Republic of Poland (Dz.U. 2019 poz. 1206). Available online: http://isap.sejm.gov.pl/isap.nsf/DocDetails.xsp?id=WDU20190001206 (accessed on 24 November 2020).

- Doniec, R.; Duraj, K.; Mocny-Pachońska, K.; Piaseczna, N.; Sieciński, S.; Tkacz, E. Drivers’ Activity Tracking With JINS MEME Smart Glasses. 2020. Available online: https://ieee-dataport.org/documents/drivers-activity-tracking-jins-meme-smart-glasses (accessed on 24 November 2020).

- Van Leeuwen, P.M.; de Groot, S.; Happee, R.; de Winter, J.C.F. Differences between racing and non-racing drivers: A simulator study using eye-tracking. PLoS ONE 2017, 12, e0186871.

- Philipp, G.; Song, D.; Carbonell, J.G. The exploding gradient problem demystified - Definition, prevalence, impact, origin, tradeoffs, and solutions. arXiv 2017, arXiv:1712.05577.

- Kiranyaz, S.; Ince, T.; Abdeljaber, O.; Avci, O.; Gabbouj, M. 1-D Convolutional Neural Networks for Signal Processing Applications. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8360–8364.

- Amiri, P.; Abbasi, H.; Derakhshan, A.; Gharib, B.; Nooralishahi, B.; Mirzaaghayan, M. Potential Prognostic Markers in the Heart Rate Variability Features for Early Diagnosis of Sepsis in the Pediatric Intensive Care Unit using Convolutional Neural Network Classifiers. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1031–1034.

- Zubarev, I.; Zetter, R.; Halme, H.L.; Parkkonen, L. Adaptive neural network classifier for decoding MEG signals. NeuroImage 2019, 197, 425–434.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Kumar, R.; Indrayan, A. Receiver operating characteristic (ROC) curve for medical researchers. Indian Pediatr. 2011, 48, 277–287.

- Jiang, L.; Lin, X.; Liu, X.; Bi, C.; Xing, G. SafeDrive: Detecting Distracted Driving Behaviors Using Wrist-Worn Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 144:1–144:22.

- Galarza, E.E.; Egas, F.D.; Silva, F.M.; Velasco, P.M.; Galarza, E.D. Real Time Driver Drowsiness Detection Based on Driver’s Face Image Behavior Using a System of Human Computer Interaction Implemented in a Smartphone. In Proceedings of the International Conference on Information Technology & Systems (ICITS 2018), Libertad City, Ecuador, 10–12 January 2018; Rocha, Á., Guarda, T., Eds.; Springer: Cham, Switzerland, 2018; pp. 563–572.

- Mulhall, M.D.; Cori, J.; Sletten, T.L.; Kuo, J.; Lenné, M.G.; Magee, M.; Spina, M.A.; Collins, A.; Anderson, C.; Rajaratnam, S.M.; et al. A pre-drive ocular assessment predicts alertness and driving impairment: A naturalistic driving study in shift workers. Accid. Anal. Prev. 2020, 135, 105386.

- Li, F.; Shirahama, K.; Nisar, M.; Köping, L.; Grzegorzek, M. Comparison of Feature Learning Methods for Human Activity Recognition Using Wearable Sensors. Sensors 2018, 18, 679.

- Choi, M.; Koo, G.; Seo, M.; Kim, S.W. Wearable Device-Based System to Monitor a Driver’s Stress, Fatigue, and Drowsiness. IEEE Trans. Instrum. Meas. 2018, 67, 634–645.

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| 0: parking | 0.98 | 0.907 | 0.942 |
| 1: roundabout | 0.97 | 0.98 | 0.97 |
| 2: city traffic | 0.95 | 0.975 | 0.96 |
| 3: intersection | 0.95 | 0.98 | 0.968 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Doniec, R.J.; Sieciński, S.; Duraj, K.M.; Piaseczna, N.J.; Mocny-Pachońska, K.; Tkacz, E.J. Recognition of Drivers’ Activity Based on 1D Convolutional Neural Network. Electronics 2020, 9, 2002. https://doi.org/10.3390/electronics9122002
