1. Introduction

Multimedia forensics is one of the key technologies for digital evidence authentication in cybersecurity. The rapid expansion in the amount of digital content on social networks has also brought about a significant increase in the number of copyright infringements [1]. New image processing manipulations are developing rapidly and are being incorporated into image processing software such as Photo Impact and Adobe Photoshop. Digital images are therefore more likely to be copied and tampered with while being transmitted over the Internet, and concerns pertaining to the security of digital images and their protection against violations have become critical over the past decade [2]. Researchers are devoted to designing forensics algorithms that detect unauthorized manipulation and thereby protect the copyrights of original images.

In general, the current techniques for image copyright protection can be divided into digital watermarking [3,4,5] and content-based copy detection [6,7]. Digital watermarking embeds a digital watermark carrying copyright information into a digital image, and the embedded watermark is later extracted as the basis for verification during copyright detection. However, if a large number of images need to be checked, watermark extraction and verification is time consuming and labor-intensive. Content-based copy detection, on the other hand, captures unique feature values from the original digital images and can pick out suspected images from a large collection based on those feature values. The two techniques are considered complementary and together can effectively protect the copyrights of digital images: content-based copy detection first finds a list of suspected images among a large number of images, and the watermark embedded in the suspected images is then extracted for further verification. For example, when an image owner worries that her/his images have been illegally manipulated and circulated over the Internet, s/he could first generate various manipulated versions of the images and feed them into the copy detection scheme to extract image features. Next, s/he collects similar images by using an image search engine, e.g., Google Images. With the extracted image features, the image owner could filter out the suspicious images by using image copy detection. If the identified copies were manipulated by the image owner herself/himself, s/he could exclude them based on their sources. For the remaining identified copies, the image owner could claim ownership by extracting the hidden watermark and ask the unauthorized users to pay a penalty or take legal responsibility.

The traditional image copy detection schemes [8], which compare the shape or texture in images, can only detect unauthorized copies of images. However, the infringement of digital images includes not only unauthorized copies but also copies altered by image processing manipulations such as rotation, compression, cropping, and blurring. Researchers observed that typical image manipulations may leave unique traces and designed forensic algorithms that extract features related to these traces and use them to detect targeted image manipulations [9,10]. However, most of these algorithms are designed to detect a single image processing manipulation. As a result, multiple tests must be run to authenticate an image, which disturbs the detection results and increases the overall false alarm rate across the detectors.

Lately, to effectively detect infringed images subjected to multiple image processing manipulations, content-based image copy detection schemes have been presented. Kim et al. [11] first applied the discrete cosine transform (DCT) to build a content-based image copy detection mechanism that can detect infringed digital images with scaling and other content modifications. However, it performs poorly on rotated digital images: the only rotation it can detect is a 180° rotation. To detect digital images with different rotation angles, Wu et al. [12] proposed a scheme that extracts and compares image feature values in the Y channel of the YUV (luminance, blue and red chrominance) color model and can successfully detect infringed images rotated by 90° or 270°. However, it is still not able to effectively detect infringed images with other rotation angles. Lin et al. [13] proposed an image copy detection mechanism based on feature extraction from edge information and successfully detected infringed images over a wider range of rotation angles. More recently, Zhou et al. [14] proposed a mechanism that can detect infringed images with any rotation angle by extracting and comparing two global feature values (gradient magnitude and direction) of the digital image. However, this mechanism requires experts to analyze the digital images in advance to extract effective feature values.

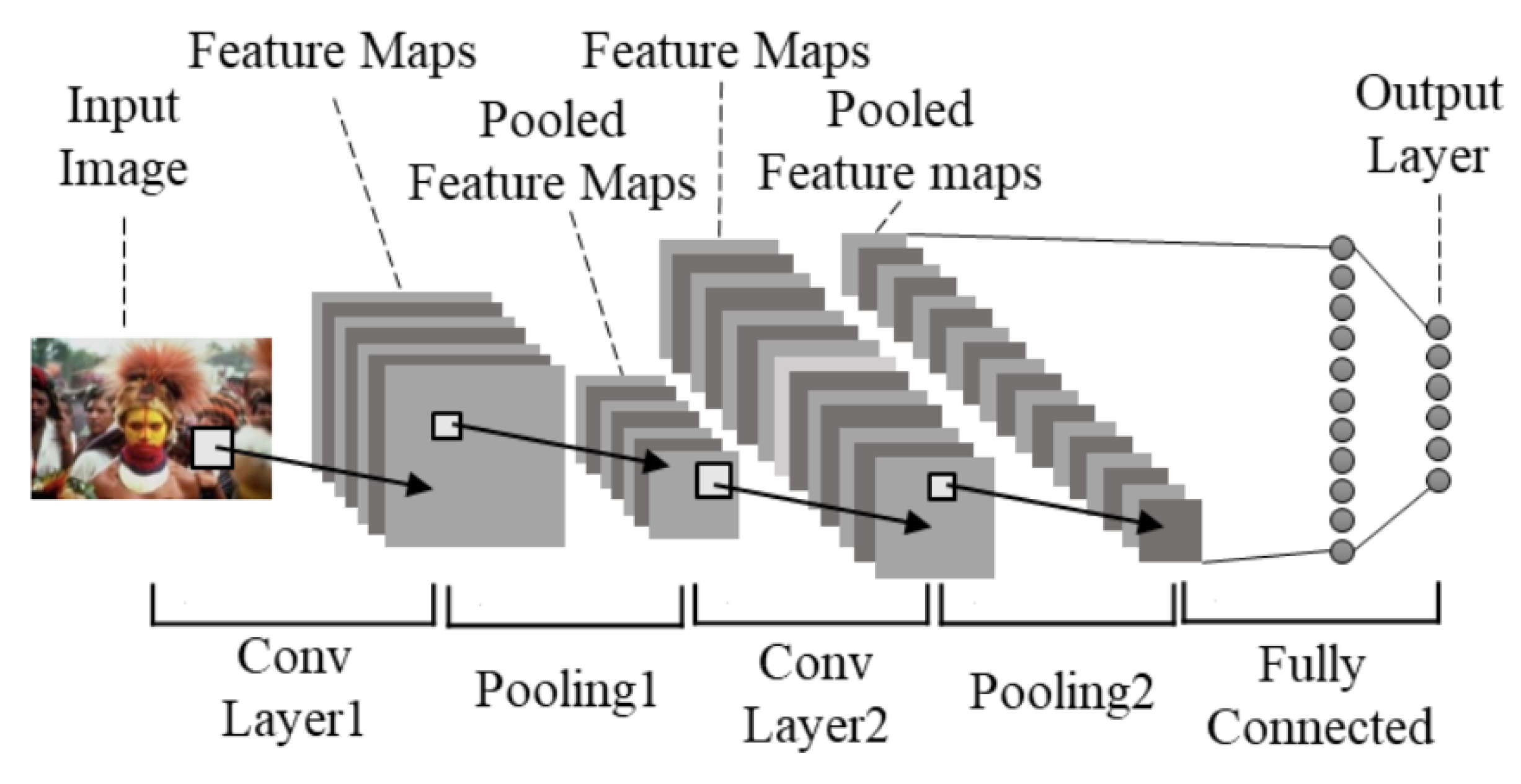

Recently, deep learning based on convolutional neural networks (CNNs) has taken on an extraordinary role in computer vision research fields such as image recognition, object detection, and semantic segmentation [15]. It has been demonstrated that CNNs can automatically learn and classify the feature values of digital images, which is helpful for designing forensics algorithms that detect unauthorized manipulation for image copyright protection. Several CNN models, such as Google Inception Net, ResNet, MobileNet, NASNet, and their different versions, have been developed to automatically learn classification features directly from data [16]. Inception V3 is now considered to be a representative version of the CNN family. Donahue et al.’s [17] research shows that, for a new training task, the image feature extraction model in Inception V3 can retain the parameters of all convolutional layers of the trained model and replace only the last fully connected layer. The trained neural network can then be used to extract the features of a target image, and the extracted features serve as the input to the new classifier. This addresses the classification problem caused by insufficient training data for the neural network, and it also shortens the time required for training and improves classification accuracy.

In this paper, a content-based image copy detection scheme based on Inception V3 is proposed in order to study a high-performance convolutional neural network model that protects digital images against violations by adaptively learning manipulation features. The image dataset was transformed by a number of image processing manipulations, and the feature values were automatically extracted for learning and for detecting suspected unauthorized digital images. A Google Inception Net training model was used to automatically establish the convolutional layers for training on the dataset. The performance under different training parameters is studied to design the optimum training model. The experimental results show that the proposed scheme performs very well in detecting duplicate digital images under different manipulations, such as rotation, scaling, and other content modifications. The detection accuracy and efficiency are markedly improved compared with other content-based image copy detection schemes.

The remainder of the paper is organized as follows: The related work on content-based image copy detection and an overview of CNNs are presented in Section 2. Section 3 provides a detailed description of the proposed scheme. The experimental results and a comparison with related literature are presented in Section 4. Lastly, Section 5 concludes our work.

3. Methodology

In this study, a convolutional neural network (CNN) was adopted to develop image copy detection: a detection CNN model is trained to determine whether a query digital image is a suspected unauthorized image. The image copy detection model of this scheme consists of image preprocessing and detection model training procedures. To obtain an expanded digital image dataset, each image was first converted into 44 different forms, including the original image, during image preprocessing. After that, the digital images were divided into two datasets: 70% of the digital images were selected as the training dataset, and the remaining 30% were regarded as the test dataset. The image copy detection model was then trained by automatically extracting the feature values of each image based on Inception V3. Finally, the detection model is used to detect whether the query image is a suspected unauthorized image or not. The flowchart of the proposed image copy detection scheme is shown in Figure 2.

3.1. Image Preprocessing

At the image preprocessing stage, various common image processing manipulations, such as translation, rotation, and other content modifications, were first performed on each image via image processing software to generate 44 different forms of the image, which are regarded as manipulated digital images. The original image and its corresponding manipulated images form a group; in other words, they share the same label. This is because our proposed image copy detection scheme aims to reduce the training time and the scale of the training dataset. When an image owner wants to verify whether similar images collected from the Internet contain her/his image, s/he only needs to generate various manipulated images in advance and feed them into our proposed image copy detection scheme to train on the features of her/his image. Then, the image copy detection scheme identifies the most suspicious image among the similar images. To prevent overfitting, 70% of the images belonging to the same group were selected to form the training dataset, and 10% of the training dataset was randomly extracted for verification during model training. The remaining 30% of the images were regarded as the test dataset.
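The per-group split described above can be sketched as follows. This is only an illustration under stated assumptions: the function name `split_group`, the file-name pattern, the rounding rule, and the fixed seed are hypothetical and are not taken from the paper, which does not say how fractional counts are rounded.

```python
import random


def split_group(image_ids, train_frac=0.7, val_frac=0.1, seed=0):
    """Split one group (an original image plus its manipulated forms).

    Returns (train, validation, test) lists. The validation images are
    drawn from the training portion, as described in the text.
    """
    rng = random.Random(seed)          # fixed seed: an assumption, for repeatability
    ids = list(image_ids)
    rng.shuffle(ids)
    n_train = round(len(ids) * train_frac)
    train, test = ids[:n_train], ids[n_train:]
    n_val = max(1, round(len(train) * val_frac))
    validation, train = train[:n_val], train[n_val:]
    return train, validation, test


# Hypothetical identifiers for the 44 forms of a single image.
group = [f"img0001_form{k:02d}" for k in range(44)]
train, validation, test = split_group(group)
print(len(train), len(validation), len(test))
```

With 44 forms per group and this rounding rule, 31 images go to the training portion (3 of which are held out for verification) and 13 to the test set; a different rounding rule would shift these counts by one.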

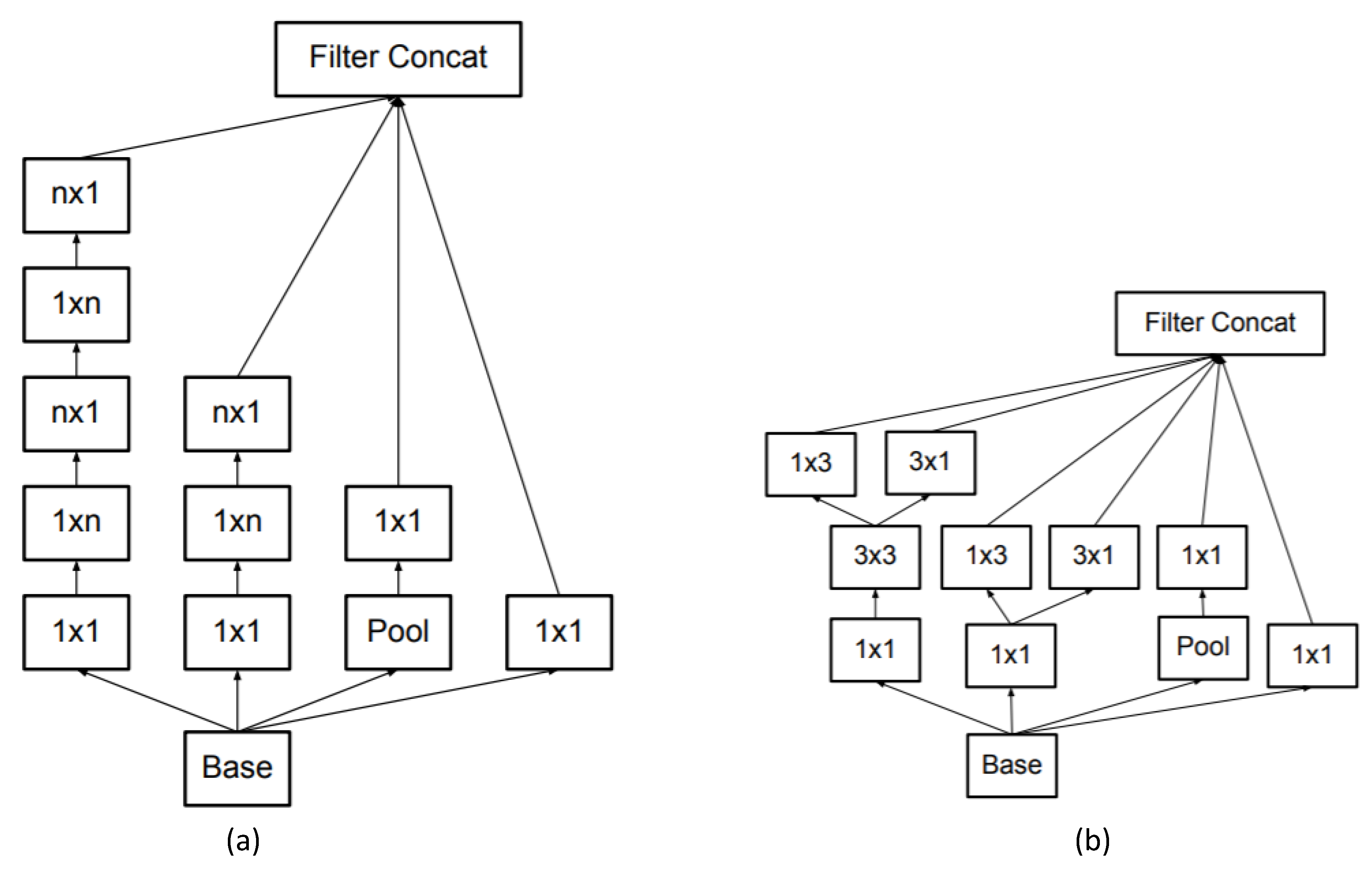

3.2. Detection Model Training

TensorFlow was selected as the development environment to train on the dataset, and the GoogLeNet Inception V3 [33] convolutional neural network architecture was utilized to learn the training model, which contains four significant parts, i.e., 1 × 1 convolution, 3 × 3 convolution, 5 × 5 convolution, and 3 × 3 maximum pooling, as shown in Figure 3.

To obtain optimized feature values, different information in the digital images was extracted by multiple convolutional layers, and the outputs of these convolutional layers were combined as the input of the next layer [34]. Inception V3 builds on the idea of factorization, which decomposes a relatively large two-dimensional convolutional layer into smaller one-dimensional convolutional layers to accelerate computation and increase the depth of the network. The convolution structure plays a significant role in handling spatial feature values and increases the diversity of feature values. The layout of our network is shown in Table 1. The output size of each module is the input size of the next one. The variations of the reduction technique depicted in [33] are also used in our proposed network architecture to increase the accuracy while keeping the computational complexity constant. The classifier in our network is set as softmax. Both sigmoid and softmax can be used for multiclass classification. However, the sigmoid looks at each raw output value separately, whereas the outputs of softmax are all interrelated. Based on the scenario of image copy detection, softmax is selected as the classifier in our proposed scheme.
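The difference between the two output functions can be illustrated with a small numerical sketch. The logit values below are made up for illustration and do not come from the trained network.

```python
import math


def sigmoid(logits):
    """Element-wise sigmoid: each output depends only on its own logit."""
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def softmax(logits):
    """Softmax: outputs are normalized together and sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]                  # hypothetical raw scores for three labels
raised = [5.0, 1.0, 0.1]                  # same scores, with only the first one raised

sig, sig_raised = sigmoid(logits), sigmoid(raised)
soft, soft_raised = softmax(logits), softmax(raised)

print("sigmoid sum:", sum(sig))           # not constrained to 1
print("softmax sum:", sum(soft))          # 1 up to rounding
print("second sigmoid output unchanged:", sig[1] == sig_raised[1])
print("second softmax output lowered:", soft_raised[1] < soft[1])
```

Raising one logit leaves the other sigmoid outputs untouched but lowers the other softmax outputs, which is why softmax suits a setting where each query should be assigned to a single image group.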

To determine the relationship between images and labels, the preprocessed digital image dataset with the corresponding labels is fed into the model for training. The detection model training consists of two stages: (a) training parameter setting and (b) model accuracy detection. During the training parameter setting stage, the training amplitude, time, and size of the input digital image dataset are adjusted, and the size of the feature map and the number of extracted feature values are also determined.

In the Inception training model, the input size of the digital image was first set, and the image was divided into three depth slices corresponding to the three RGB channels to form the input layer. The convolution layer depth was set to 32, which yields 32 filters, each with a depth, height, and width of 3. The convolution results are 32 feature maps with heights and widths of 149, based on the following equations:

$$W_2 = \frac{W_1 - F + 2P}{S} + 1, \qquad H_2 = \frac{H_1 - F + 2P}{S} + 1$$

where $W_1$ is the width of the unconvoluted digital image; $W_2$ is the width of the convoluted feature map; $F$ is the width of the filter; $P$ is the number of zeros padded around the original digital image; $S$ is the stride; $H_2$ is the height of the convoluted feature map; and $H_1$ is the height of the unconvoluted digital image.
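As a worked check of the 149 × 149 feature-map size, the standard convolution output-size relation, output = (input − F + 2P)/S + 1, can be evaluated directly. The input size of 299, stride of 2, and zero padding used below are assumptions: they are the usual settings of the first Inception V3 convolution, but the text above does not state them explicitly.

```python
def conv_output_size(in_size, filter_size, padding, stride):
    """Width (or height) of the feature map after a convolution.

    Uses floor division, which matches a convolution without implicit
    extra padding when the division is not exact.
    """
    return (in_size - filter_size + 2 * padding) // stride + 1


# Assumed first-layer settings: 299-pixel input, 3-pixel filter,
# no zero padding, stride 2.
width_out = conv_output_size(in_size=299, filter_size=3, padding=0, stride=2)
height_out = conv_output_size(in_size=299, filter_size=3, padding=0, stride=2)
print(width_out, height_out)  # 149 149
```

Under these assumed settings the relation reproduces the 149 × 149 size quoted in the text, which suggests they are the ones in use.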

Besides image size, the number of training steps and the learning rate can also be set as parameters to adjust the training results. The default number of training steps was set to 4000, because more training steps may improve the training accuracy but lower the validation accuracy. The learning rate controls the magnitude of the updates to the final layer during training, and its default value was set to 0.01. After each training stage, the accuracy of the model was checked on the verification dataset. The training stage was repeated until the model accuracy reached the set value (90%), as shown in Figure 6.
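The interplay of training steps, learning rate, and the 90% accuracy target can be sketched with a toy stand-in for the final layer. This is not the TensorFlow pipeline used in the paper: the features are synthetic, the single softmax layer is written in plain Python, and the names `train_final_layer`, `toy_features`, and `check_every` (the length of one "training stage") are hypothetical.

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, x):
    """Class probabilities of one linear layer followed by softmax."""
    return softmax([sum(w * v for w, v in zip(row, x)) for row in weights])


def accuracy(weights, data):
    hits = 0
    for x, label in data:
        probs = predict(weights, x)
        if probs.index(max(probs)) == label:
            hits += 1
    return hits / len(data)


def train_final_layer(train, val, n_classes, lr=0.01, max_steps=4000,
                      target=0.90, check_every=100):
    """Train only the last layer on fixed features with plain SGD.

    Stops early once validation accuracy reaches `target`.
    """
    dim = len(train[0][0])
    weights = [[0.0] * dim for _ in range(n_classes)]
    for step in range(1, max_steps + 1):
        x, label = train[(step - 1) % len(train)]
        probs = predict(weights, x)
        for c in range(n_classes):
            grad = probs[c] - (1.0 if c == label else 0.0)  # cross-entropy gradient
            for j in range(dim):
                weights[c][j] -= lr * grad * x[j]
        if step % check_every == 0 and accuracy(weights, val) >= target:
            return weights, step
    return weights, max_steps


def toy_features(n, n_classes=3, seed=0):
    """Synthetic, well-separated feature vectors standing in for CNN features."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % n_classes
        x = [rng.uniform(-0.1, 0.1) for _ in range(n_classes)]
        x[label] += 1.0
        data.append((x, label))
    return data


samples = toy_features(60)
train_set, val_set = samples[:45], samples[45:]
weights, steps_used = train_final_layer(train_set, val_set, n_classes=3)
val_accuracy = accuracy(weights, val_set)
print(steps_used, val_accuracy)
```

The defaults mirror the values quoted in the text (4000 steps, learning rate 0.01, 90% target); on real image features the stopping step would of course differ from this toy case.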

During the image copy detection procedure, the system will automatically find five images in the dataset that are most similar to the query image as the suspected image list. The suspected image list is then used to compare and verify whether the query image is a suspected unauthorized image or not.
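Building the suspected image list amounts to a top-five selection over per-image scores. The sketch below assumes the detection model has already produced one score per dataset image for the query (for example, a softmax probability); how the score is computed is not specified here, and the image names and values are hypothetical.

```python
import heapq


def top_k_suspects(scores, k=5):
    """Return the k (image, score) pairs with the highest scores."""
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])


# Hypothetical scores of one query image against seven dataset images.
scores = {
    "img_a": 0.91, "img_b": 0.05, "img_c": 0.62, "img_d": 0.33,
    "img_e": 0.12, "img_f": 0.77, "img_g": 0.01,
}
suspected = top_k_suspects(scores)
for name, score in suspected:
    print(name, score)
```

`heapq.nlargest` avoids sorting the whole dataset when only five candidates are needed, which matters when the dataset holds many thousands of images.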

4. Results

To evaluate the effectiveness and accuracy of the proposed method, the WBIIS database [35,36], which contains 10,000 open-source and copyright-free digital images in JPEG format with sizes of 384 × 256 and 256 × 384, was chosen as the test database in the experiment; examples of the test images are shown in Figure 7. We selected 70% of the digital images as the training dataset and the remaining 30% as the test dataset. To test whether the proposed scheme can detect unauthorized duplicated images under various attacks, each image was transformed into different forms based on 43 image processing manipulations, as shown in Figure 8. Note that the watermarked image is not included among the manipulated images shown in Figure 8. This is because we assumed that once the original image carries the hidden watermark, its corresponding manipulated versions will also carry it. Therefore, we only need to focus on whether our proposed copy detection with CNNs can identify the manipulations.

4.1. Results in Different Learning Rates

To study the relationship between the learning rate and the training results, the initial value of the learning rate parameter of the trained Inception V3 image feature vector model was set to 0.005, 0.01, 0.5, and 1.0, and the training results were compared, as shown in Table 2.

Table 2 indicates that the training time decreases as the learning rate increases. It also shows that the training time and accuracy at the default learning rate (i.e., 0.01) are satisfactory: 431 s and 96.23%, respectively. The training time increases and the accuracy decreases if the learning rate is lowered to 0.005. Therefore, we speculate that when the initial value of the learning rate parameter is less than 0.01, the longer training time leads to overfitting and decreases the accuracy. To obtain a higher accuracy, the initial value of the learning rate should be adjusted upward; the accuracy reaches 99.55% when the learning rate is 1.0.

4.2. Comparison Results in Different Training Models

To compare the results of the Inception_v3 training model in TensorFlow with other training models, we further trained the detection model with ResNet_v2, MobileNet_v2, and NASNet_large; the comparison results are shown in Table 3. The results show that all of the training models achieve very high accuracy, whereas the training time varies widely. The smallest neural network, MobileNet_v2, has the shortest training time, and its accuracy reaches 98.77%. Although the accuracy of NASNet_large is 99.34%, its training time is also the longest. This is because NASNet_large not only trains for the most effective feature values but also searches for the best neural network architecture, which results in more training time. With respect to Inception_v3, the accuracy is 99.55% when the learning rate is set to 1, which is the best among the four models, and the training time (315 s) is also competitive. Therefore, in general, the comparison results demonstrate that the Inception_v3 model used in the proposed scheme achieves the best performance compared with the other models.

4.3. Comparison Results in Different Training Datasets

As the results in Table 3 demonstrate, when all manipulated images together with the original image serve as the training dataset, our proposed method with Inception_v3 offers a detection accuracy of up to 99.55%. However, this setting is impractical because such accuracy depends on all manipulated images being included in the training dataset. Therefore, a second experiment was conducted in which the training dataset contains only the original images and excludes the 43 manipulated images. Unfortunately, the detection accuracy rate then decreases to 90.06%. Under such circumstances, 7 of the 10 test images with the Crayon effect cannot be detected, as is the case for the six rotation manipulations, namely 22.5°, 45°, 67°, 90°, 180°, and 270° rotations. Detection of rotated images degrades markedly: in the worst case, 4 of the 6 rotation-manipulated images are undetectable, and for 5 of the 10 test images, the number of undetectable rotation-manipulated images ranges from 3 to 4. To increase the detection accuracy rate, a third experiment was conducted, in which the training dataset includes the original images and the manipulated images with 45° rotation. With the assistance of the manipulated images with a 45° rotation, the CNN obtains extra features regarding the rotation manipulation. The detection accuracy rate rises to 96.47%, which is about 6 percentage points higher than that obtained when training with the dataset excluding manipulated images.

Based on the experimental data in Table 4, we can see that the supplementary features offered by manipulated images with a 45° rotation significantly increase the detection accuracy rate of our proposed scheme. For 8 of the 10 test images, the manipulated images with various rotations are successfully detected. As for manipulated images with the “Crayon effect”, 4 of the 10 test images cannot be correctly detected. However, the similarities between the manipulated images with the Crayon effect and their query images are significantly increased. After carefully examining the test images that cannot be identified from their manipulated images with the “Crayon effect”, we found that this only occurs when the test images are complex and contain vivid colors.

4.4. Comparison with Content-Based Image Copy Detection Schemes

To evaluate the performance and accuracy of the proposed scheme, the image copy detection results are compared with those of some representative content-based image copy detection schemes, i.e., Lin et al.’s scheme [13], Wu et al.’s scheme [12], and Kim et al.’s scheme [11]. We randomly selected 10 images from the image database, and each image was processed with the 44 image processing manipulations to generate the query digital images. For each image processing manipulation, we counted how many of the 10 images can be detected. The detection result is marked as “Yes” only if all 10 images under that manipulation are detected; otherwise, the result is marked as “No”.

Table 5 shows the detection results of the proposed scheme and other content-based image copy detection schemes in different image processing manipulations.

In the experiment, the proposed image copy detection scheme with the training dataset including all manipulated images could successfully detect all query digital images with an accuracy of 100%, whereas Lin et al.’s, Wu et al.’s, and Kim et al.’s schemes achieve only 88.6, 70.5, and 63.6%, respectively. In terms of robustness, the proposed scheme can resist all 44 image processing manipulations, which is the best among the four schemes. Lin et al.’s scheme can resist most of the image processing manipulations, except for “45° Rotation”, “Crayon Effect”, “Mosaic”, “Central Rotation”, and “Sharpened”. The performance of Wu et al.’s and Kim et al.’s schemes is not satisfactory when dealing with such image processing manipulations. With respect to detection time, Lin et al.’s, Wu et al.’s, and Kim et al.’s schemes require specific image feature values to be extracted manually for detection, which is time consuming. In contrast, the proposed scheme automatically detects whether the query image is an unauthorized duplicated image once it is input into the detection model. Therefore, the comparison results demonstrate that the proposed scheme outperforms the compared image copy detection schemes in terms of detection time and accuracy. When the proposed image copy detection scheme is trained with a dataset that includes only original images and manipulated images with 45° rotation, the detection accuracy rate is the same as that of Lin et al.’s scheme. Although neither scheme can fully deal with manipulated images with the Crayon effect, our proposed scheme can still identify 4 of 10 test images. As for manipulated images with 67°, 90°, 270°, and 180° rotations, the average detection accuracy rate still remains about 80%. Moreover, our proposed scheme can resist “45° Rotation”, “Mosaic”, “Central Rotation”, and “Sharpened”, which cannot be handled by Lin et al.’s scheme.
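The “Yes”/“No” marking rule and the per-scheme percentages can be reproduced with a few lines of arithmetic. The reading below is an inference rather than something the text states: 88.6% matches 39 of 44 manipulations marked “Yes”, which agrees with the five manipulations listed as failures for Lin et al.’s scheme. The individual detection counts for the failed manipulations are made up for illustration.

```python
def mark_manipulation(detected_count, images_per_manipulation=10):
    """'Yes' only if every test image under this manipulation is detected."""
    return "Yes" if detected_count == images_per_manipulation else "No"


def scheme_accuracy(marks):
    """Percentage of manipulations marked 'Yes'."""
    return 100.0 * sum(1 for m in marks if m == "Yes") / len(marks)


# 44 manipulations: 39 fully detected, 5 with hypothetical partial counts.
detected_counts = [10] * 39 + [7, 9, 0, 5, 8]
marks = [mark_manipulation(c) for c in detected_counts]
lin_like = round(scheme_accuracy(marks), 1)
print(lin_like)  # 88.6

# The other reported figures are consistent with 31/44 and 28/44.
print(round(100.0 * 31 / 44, 1), round(100.0 * 28 / 44, 1))  # 70.5 63.6
```

Because a manipulation counts as resisted only when all 10 images are detected, a scheme that detects 9 of 10 images under a manipulation scores the same as one that detects none, so this metric is stricter than a per-image accuracy.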

Table 6 demonstrates Comparison II, in which images with 45° rotation/90° rotation are added to the training dataset. Here, we can see that the detection performance of our proposed scheme on manipulations with different rotation angles increases, although the “Crayon Effect” manipulation is still the weakness of our proposed scheme. After carefully examining the “Crayon Effect” manipulation, we found that the detection capability decreases when the texture of the image is more blurred.

To further evaluate whether our proposed image copy detection with a CNN successfully learns the features of the training set images and can therefore identify manipulated images that differ slightly from those in the training set, five extra manipulated images were generated and added to the query images: a 10° rotation, an image with 15% noise, an image twisted by 25°, an image made 1.4 times wider, and a horizontally shifted image.

From the accuracies listed in Table 7, we can see that the accuracies on the training set are nearly the same as in the previous experimental results. The accuracies on the testing set are slightly lower than those on the training set. We believe this is mainly caused by either the “Crayon Effect” or a few of the rotation manipulations. Such results are consistent with the previous experiments and indicate that our proposed image copy detection with a CNN can take advantage of CNNs to learn the features of images, so that some manipulations can still be identified even if they are outside the scope of the training set.

5. Conclusions

An image copy detection scheme based on the Inception V3 convolutional neural network is proposed in this paper. The image dataset is first transformed by a number of image processing manipulations for training the detection model with feature values. During the image copy detection procedure, the system automatically produces a suspected image list, which is used to compare and verify whether the query image is a suspected unauthorized image or not. The experimental results show that the training accuracy reaches 99.55% when the training dataset includes all manipulated images and the learning rate is set to 1.0, which is superior to the ResNet_v2, MobileNet_v2, and NASNet_large models. Even when the training dataset includes only original images and manipulated images with 45° rotations, our proposed scheme still outperforms Lin et al.’s scheme in the number of manipulation types that are successfully identified. In that setting, 80% of the manipulated images with rotations can be detected, but 40% of the manipulated images with the Crayon effect are hard to identify. Such a result indicates the minimum requirement on the training set for our proposed scheme. However, the experimental results also show that our proposed image copy detection scheme can identify some manipulations that are slightly different from those in the training set. We believe this is a benefit of deep learning, which can effectively extract supplementary features from the images in the training set. From the experiments conducted in this work, we also found that the additional information gained by feeding in manipulated images with 45° rotations is still limited.
In the future, we will explore how to improve the detection performance of CNNs on the “Crayon Effect” and investigate the tradeoff between the number of manipulation types that should be included in the training set and the accuracy on the testing set, in order to increase the practicability of the image copy detection scheme.