Open-Source Drone Programming Course for Distance Engineering Education

Abstract

1. Introduction

2. Related Work

2.1. Distance Learning in Robotics

2.2. Drone Programming Education

3. RoboticsAcademy Learning Environment

- Hardware layer: represents the robot itself, either a real robot or a simulated one.

- Middleware layer: includes the software drivers required to read information from robot sensors and control robot actuators.

- Application layer: contains the student’s code (algorithms implemented by the student to solve the problem proposed) and a template (internal platform functions that provide two simple APIs to access robot hardware and take care of graphical issues for students’ code debugging purposes).

3.1. Hardware Layer

3.2. Middleware Layer

3.3. Application Layer

- Hardware abstraction layer (HAL) API: simple API to obtain processed data from robot sensors and command robot actuators at a high level

- Graphical user interface (GUI) API: used for student’s code checking and debugging, shows sensory data or partial processing in a visual way (for example, the images from the robot camera or the results of applying color filtering)

- Timing skeleton for implementing the reactive robot behavior: a continuous loop of iterations, executed several times per second. Each iteration performs four steps: collecting sensory data, processing them, deciding actions, and sending orders to the actuators
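The timing skeleton described in the last item can be sketched roughly as follows. This is a minimal illustration, not the platform's actual template: the function name `run_reactive_loop`, its parameters, and the default rate of 10 Hz are assumptions introduced here.

```python
import time


def run_reactive_loop(sense, process, decide, act, rate_hz=10.0,
                      max_iterations=None, clock=time.monotonic,
                      sleep=time.sleep):
    """Run sense -> process -> decide -> act at a roughly fixed rate.

    All names here are hypothetical; the real template may differ.
    Returns the number of iterations executed.
    """
    period = 1.0 / rate_hz
    iterations = 0
    while max_iterations is None or iterations < max_iterations:
        start = clock()
        data = sense()              # 1. collect sensory data
        features = process(data)    # 2. process them
        action = decide(features)   # 3. decide actions
        act(action)                 # 4. send orders to the actuators
        iterations += 1
        # Sleep for whatever is left of this iteration's time budget.
        remaining = period - (clock() - start)
        if remaining > 0:
            sleep(remaining)
    return iterations


# Toy usage: a pretend altitude reading and a proportional command.
commands = []
run_reactive_loop(
    sense=lambda: 2.0,               # pretend height reading, in meters
    process=lambda z: 3.0 - z,       # error with respect to a 3 m target
    decide=lambda err: 0.5 * err,    # proportional vertical speed
    act=commands.append,             # stand-in for an actuator command
    rate_hz=50.0,
    max_iterations=3,
)
print(commands)
```

Passing the four steps as callables keeps the loop itself independent of any particular robot, which is one plausible way to separate the template from the student's code.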

- drone.get_position(): returns the current position of the drone as a 1 × 3 NumPy array [x, y, z], in meters.

- drone.get_velocity(): returns the current velocities of the drone as a 1 × 3 NumPy array [vx, vy, vz], in m/s.

- drone.get_yaw_rate(): returns the current yaw rate of the drone, in rad/s.

- drone.get_orientation(): returns the current roll, pitch, and yaw of the drone as a 1 × 3 NumPy array [roll, pitch, yaw], in rad.

- drone.get_frontal_image(): returns the latest image from the frontal camera as an OpenCV image (cv2_image).

- drone.get_ventral_image(): returns the latest image from the ventral camera as an OpenCV image (cv2_image).

- drone.get_landed_state(): returns 1 if the drone is on the ground (landed), 2 if it is in the air, and 4 if it is landing.

- drone.set_cmd_vel(vx, vy, vz, yaw_rate): commands the linear velocity of the drone in the x, y, and z directions (in m/s) and the yaw rate (in rad/s) in its body-fixed frame.

- drone.set_cmd_mix(vx, vy, z, yaw_rate): commands the linear velocity of the drone in the x and y directions (in m/s), the height (z) relative to the takeoff point (in meters), and the yaw rate (in rad/s).

- drone.takeoff(height): takes off from the current location to the given height (in meters).

- drone.land(): lands at the current location.
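To show how these calls might fit together, the sketch below flies a short straight segment. Because the real `drone` object is only available inside the platform, the example uses `StubDrone`, a hypothetical stand-in written for this illustration: it mimics a few of the method names listed above, returns plain Python lists instead of NumPy arrays, ignores yaw, and adds a `step(dt)` helper that does not exist in the real API.

```python
LANDED, IN_AIR, LANDING = 1, 2, 4  # landed-state codes as listed above


class StubDrone:
    """Hypothetical toy stand-in for the course's drone object."""

    def __init__(self):
        self.position = [0.0, 0.0, 0.0]   # x, y, z in meters
        self.velocity = [0.0, 0.0, 0.0]   # vx, vy, vz in m/s
        self.state = LANDED

    def takeoff(self, height):
        # The stub jumps straight to the requested height.
        self.position[2] = float(height)
        self.state = IN_AIR

    def land(self):
        self.position[2] = 0.0
        self.velocity = [0.0, 0.0, 0.0]
        self.state = LANDED

    def set_cmd_vel(self, vx, vy, vz, yaw_rate):
        # Yaw is ignored, so body frame and world frame coincide here.
        self.velocity = [float(vx), float(vy), float(vz)]

    def get_position(self):
        return list(self.position)

    def get_velocity(self):
        return list(self.velocity)

    def get_landed_state(self):
        return self.state

    def step(self, dt):
        """Stub-only helper: integrate the velocity for dt seconds."""
        if self.state == IN_AIR:
            for i in range(3):
                self.position[i] += self.velocity[i] * dt


def fly_forward(drone, height, speed, duration, dt=0.1):
    """Take off, fly along x at a constant speed, stop, and land."""
    drone.takeoff(height)
    drone.set_cmd_vel(speed, 0.0, 0.0, 0.0)
    for _ in range(int(round(duration / dt))):
        drone.step(dt)
    drone.set_cmd_vel(0.0, 0.0, 0.0, 0.0)
    reached = drone.get_position()   # position just before landing
    drone.land()
    return reached


drone = StubDrone()
print(fly_forward(drone, height=2.0, speed=1.0, duration=3.0))
print(drone.get_landed_state())
```

In the real environment the simulator advances on its own, so the explicit `step` calls would be replaced by the iterations of the timing loop.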

4. Drone Programming Course

4.1. Course Syllabus

- Unit 1. Introduction to aerial robotics: types of UAVs, real drone applications such as military, logistics, visual inspection.

- Unit 2. Drone sensors and perception: inertial measurement units (IMUs), compass, GPS, LIDAR, cameras, elementary image processing.

- Unit 3. Flight physics and basic control: 3D geometry, quadrotor physics, basic movements, hovering, forward motion, rotation, stabilization.

- Unit 4. Drone control: reactive systems, proportional–integral–derivative (PID) controllers, fuzzy control, finite state machines, position-based control, vision-based control.

- Unit 5. Drone global navigation: 2D and 3D path planning.

- Unit 6. Visual self-localization: ORBSlam, semi-direct visual odometry (SVO) algorithms.
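As an illustration of the PID controllers listed in Unit 4, the following is a minimal discrete PID sketch applied to a toy altitude-hold problem. The class, the gains, the output limit, and the plant model (commanded vertical speed integrated directly into height) are all assumptions chosen for this example, not material taken from the course.

```python
class PID:
    """Minimal discrete PID controller with optional output clamping."""

    def __init__(self, kp, ki, kd, output_limit=None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative on the first call, when there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = (self.kp * error
               + self.ki * self.integral
               + self.kd * derivative)
        if self.output_limit is not None:
            out = max(-self.output_limit, min(self.output_limit, out))
        return out


def simulate_altitude_hold(target, steps=200, dt=0.05):
    """Toy plant: the commanded vertical speed is integrated into height.

    The integral gain is left at zero because this idealized plant has
    no steady-state error; a real drone would likely need it.
    """
    pid = PID(kp=1.2, ki=0.0, kd=0.05, output_limit=1.0)
    z = 0.0
    for _ in range(steps):
        vz = pid.update(target - z, dt)   # vertical speed command, m/s
        z += vz * dt
    return z


print(round(simulate_altitude_hold(3.0), 2))
```

The same `update` call could feed the vertical component of a velocity command once per loop iteration, with the error computed from the measured height.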

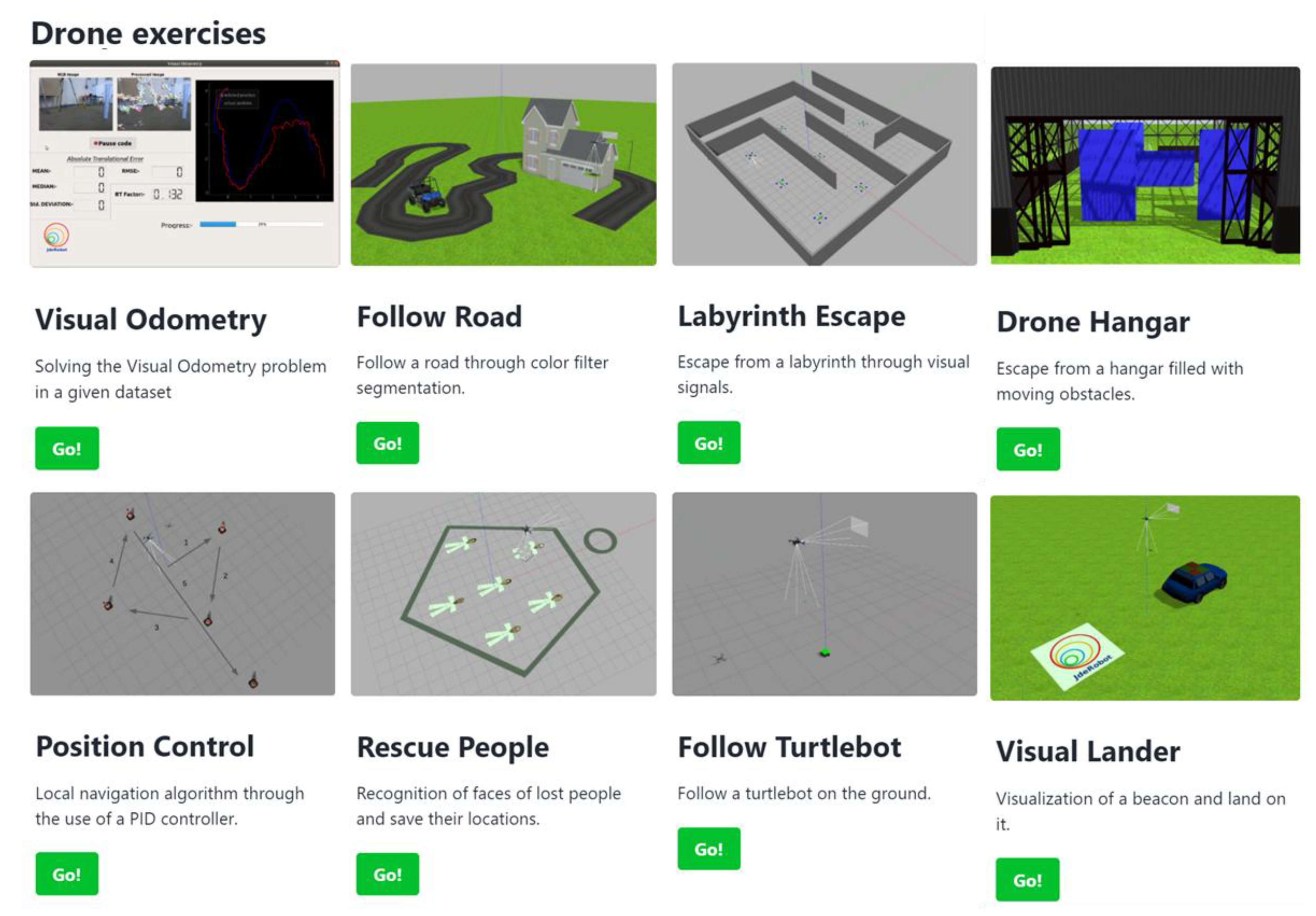

4.2. Course Practical Exercises

- Navigation by position (http://jderobot.github.io/RoboticsAcademy/exercises/Drones/position_control). This exercise aims to implement an autopilot by using the GPS sensor, the IMU, and a position-based PID controller. For this exercise, a simulated 3D world has been designed that contains the quadrotor and five beacons arranged in a cross. The objective is to program the drone to follow a predetermined route visiting the five waypoints in a given sequence, as shown in Figure 4. The exercise illustrates the algorithms typically included in commercial autopilots such as ArduPilot or PX4.

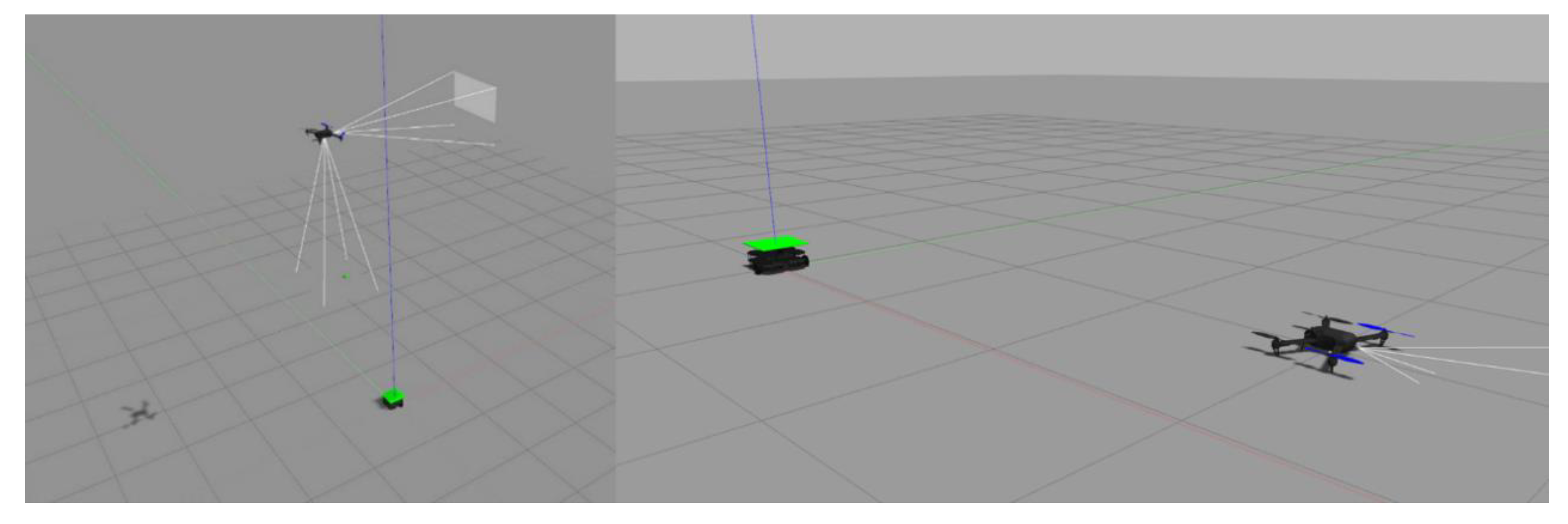

- Following an object on the ground (http://jderobot.github.io/RoboticsAcademy/exercises/Drones/follow_turtlebot). In this exercise, the objective is to implement the logic that allows a quadrotor to follow a moving object on the ground (an autonomous robot named Turtlebot with a green-colored top, see Figure 5), using a primary color filter in the images and a vision-based PID controller. The drone keeps its altitude and moves only in a 2D plane.

- Landing on a moving car (http://jderobot.github.io/RoboticsAcademy/exercises/Drones/visual_lander). In this exercise, the student needs to combine pattern recognition and vision-based control to land on a predefined beacon, a four-square chess pattern on the roof of a moving car (see Figure 6). The required image processing is slightly more complicated than a simple color filter, as the beacon may be partially seen, and its center is the relevant feature. Likewise, the controller needs to command the vertical movement of the drone.

- Escape from a maze using visual clues (http://jderobot.github.io/RoboticsAcademy/exercises/Drones/labyrinth_escape). In this exercise, the student needs to combine local navigation and computer vision algorithms to escape from a labyrinth with the aid of visual clues. The clues are green arrows placed on the ground, indicating the direction to be followed (see Figure 7). Pattern recognition in real-time is the focus here, as fast detection is essential for the drone.

- Searching for people to rescue within a perimeter (http://jderobot.github.io/RoboticsAcademy/exercises/Drones/rescue_people). The objective of this exercise is to implement the logic of a global navigation algorithm to sweep a specific area systematically and efficiently (foraging algorithm), in conjunction with visual face-recognition techniques, to report the location of people for subsequent rescue (Figure 8). The drone behavior is typically implemented as a finite state machine, with several states such as go-to-the-perimeter, explore-inside-the-perimeter, or go-back-home.
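The finite state machine mentioned in the last exercise could be organized along these lines. This is only a sketch: the three main state names come from the text above, while the `DONE` state, the `battery_low` condition, and the function signatures are assumptions added to make the example complete.

```python
from enum import Enum


class State(Enum):
    GO_TO_PERIMETER = "go-to-the-perimeter"
    EXPLORE = "explore-inside-the-perimeter"
    GO_BACK_HOME = "go-back-home"
    DONE = "done"  # assumed terminal state, not named in the text


def next_state(state, at_perimeter=False, sweep_finished=False,
               battery_low=False, at_home=False):
    """Return the state that follows `state` given the current conditions."""
    if state is State.GO_TO_PERIMETER:
        if battery_low:
            return State.GO_BACK_HOME
        return State.EXPLORE if at_perimeter else state
    if state is State.EXPLORE:
        if sweep_finished or battery_low:
            return State.GO_BACK_HOME
        return state
    if state is State.GO_BACK_HOME:
        return State.DONE if at_home else state
    return state  # DONE is absorbing


def run(events, state=State.GO_TO_PERIMETER):
    """Feed a sequence of condition dicts and record the visited states."""
    trace = [state]
    for conditions in events:
        state = next_state(state, **conditions)
        trace.append(state)
    return trace


trace = run([
    {},                        # still flying towards the perimeter
    {"at_perimeter": True},    # arrived: start sweeping
    {},                        # sweeping
    {"sweep_finished": True},  # area covered: head home
    {"at_home": True},         # landed at the starting point
])
print([s.value for s in trace])
```

In an actual solution, each state would also select the velocity commands to send and, while exploring, run the face detector on the camera images; those parts are omitted here.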

4.3. Lessons Learned

- Easy and time-efficient installation, allowing the students to start programming drones earlier: preparing the programming environment previously took at least two weeks, as it was based on Linux packages and virtual machines. With the current framework release, installation recipes use binary Debian packages (for students running Linux) and Docker containers (for students running Windows or macOS). These Docker containers ship with the environment pre-installed and are themselves quick to install. In the 2018/2019 course, 85.7% of students used RoboticsAcademy on native Linux, while 14.3% used Microsoft Windows with Docker containers (Figure 10). As reported in the surveys, the user experience was pleasant on both systems.

- The same platform is used for programming simulated and real drones: previously, students had to learn different tools to program different drones (both simulated and real), resulting in less time to learn programming and algorithms.

- Distance learning: the course was designed to be taught remotely; therefore, any student could learn and practice from home, anytime.

- The forum proved to be a valuable tool for communication and for resolving doubts.

5. Drone Programming Competitions

- Competitive exercises. Several exercises in our present collection are based on completing a task in a given time while competing for the fastest or most complete solution, which increases student engagement.

- Social interactions between students, teachers, and developers, promoted through a dedicated RoboticsAcademy web forum (https://developers.unibotics.org/) and several accounts across major social media platforms since 2015, such as a video channel on YouTube (https://www.youtube.com/channel/UCgmUgpircYAv_QhLQziHJOQ) with more than 300 videos and 40,000 single visits, and a Twitter account (https://twitter.com/jderobot) with more than 200 tweets.

6. Summary and Conclusions

- Each exercise is divided into three layers: hardware, middleware, and application. This internal design makes it easier to run the same student’s code in physical and simulated robots with only minor configuration changes. The open-source Gazebo simulator, widely recognized in the robotics community, has been used for 3D simulations of all robots, sensors, and environments.

- The middleware layer is based on ROS, the de facto standard in service robotics, which supports many programming languages, including Python. Previously, RoboticsAcademy was separate from this widely used middleware, which meant frequent maintenance, little scalability, and a small number of users (only those who already had a thorough background with the tool).

- The application layer contains the student’s code. It includes a hardware abstraction layer (HAL) API to grant students high-level access to the robot sensors and actuators, and a graphical user interface (GUI) API to show sensor data, process images, or debug code.

- RoboticsAcademy runs natively on Ubuntu Linux machines and can be installed using official Debian packages. Docker containers allow easy installation on Windows- and macOS-based computers, making RoboticsAcademy particularly suited to the distance learning approach.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| ID | Exercise |
| --- | --- |
| Drones | |
| DR1 | Navigation by position |
| DR2 | Following an object on the ground |
| DR3 | Following a road with visual control |
| DR4 | Cat and mouse |
| DR5 | Escape from a maze following visual clues |
| DR6 | Searching for people to rescue within a perimeter |
| DR7 | Escape from a hangar with moving obstacles |
| DR8 | Land on a beacon |
| Computer Vision | |
| CV1 | Color filter for object detection |
| CV2 | Visual odometry for self-localization |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cañas, J.M.; Martín-Martín, D.; Arias, P.; Vega, J.; Roldán-Álvarez, D.; García-Pérez, L.; Fernández-Conde, J. Open-Source Drone Programming Course for Distance Engineering Education. Electronics 2020, 9, 2163. https://doi.org/10.3390/electronics9122163
