Abstract

Over one billion people in the world live with some form of disability. According to the World Health Organization, people with disabilities are particularly vulnerable to deficiencies in services such as health care, rehabilitation, support, and assistance. Recent technological developments can help mitigate these deficiencies by offering less-expensive assistive systems that meet users’ needs. This paper reviews and summarizes research efforts toward the development of such systems, focusing on two social groups: older adults and children with autism.

1. Introduction

According to the World Health Organization (WHO) [1], one in seven people experience some form of disability. However, only half of them can afford the healthcare services they require [1]. This is especially critical when a person’s quality of life diminishes and their independence is reduced. In this context, technological advances can play an important role, since they may enable people with disabilities to receive the healthcare they need to lead a fulfilling and independent life [2].

A review of the literature reveals the enormous variety of assistive technology currently available. Given the wide range of types and levels of impairment, assistive technology can be classified according to its complexity into three concentric spheres with the user at their center. From the inside out, these are embodied assistive technology, assistive environments, and assistive robots.

Embodied assistive technology includes mobility devices [3,4] (e.g., wheelchairs, prostheses, exoskeletons, or artificial limbs); specialized aids (e.g., for hearing [5], vision [6,7,8], cognition [9], or communication [10]); and specific hardware, software, and peripherals that help people with disabilities access information technologies (e.g., computers and mobile devices). Although these systems provide valuable help, they usually offer a single functionality and have little intelligence (understood here as the ability to receive feedback from the environment and adapt their behavior accordingly).

Going a step further, the environment itself can be adapted to the user’s needs with sensors and actuators, such as cameras or home automation (domotic) systems, so that more functionalities are covered and more information about the user’s health status can be gathered and processed, which provides this technology with intelligence. Examples include smart homes [11], virtual assistants [12,13,14], and ambient assisted living (AAL) settings [15,16,17]. Nevertheless, this kind of technology fails to support independent living when the user has chronic or degenerative limitations in motor and/or cognitive abilities.

Assistive robotics (AR) emerged as a solution to this limitation. Its main goal is to promote the well-being and independence of people with disabilities. Robots may assist people with a wide range of tasks at home (especially activities of daily living), so ongoing research includes household robots [18,19,20] and rehabilitation robots [21,22], among others. Achieving this goal requires an interdisciplinary approach that integrates research areas such as artificial intelligence, machine learning, and human-robot interaction.

Motivated by the current needs of two particular risk groups (children with autism and older adults), this paper reviews and summarizes promising and challenging research on assistive robotics aimed at helping older adults and children with autism perform their daily tasks.

2. Socially Assistive Robots

One of the main obstacles to the acceptance of assistive technology is the way in which it is perceived, which makes the interaction between the robot and the user a key issue. This focus on social interaction led to the development of socially assistive robotics (SAR). According to Feil-Seifer and Mataric [23], SAR can be defined as the intersection of AR and socially interactive robotics (SIR), whose main task is interaction with humans.

Ideally, SAR should operate autonomously without requiring a human operator. The interaction with the user must be intuitive and must not require extensive training. Additionally, the robots have to adapt their behaviors to the new routines and needs of the users, which is currently the most challenging problem to be solved [24]. Since the robots cannot be programmed in advance to react to every possible circumstance that might occur during interactions with users, artificial intelligence and machine learning algorithms must be developed and deployed in these systems.

As mentioned above, there is a wide variety of applications depending on the needs to be covered and the demands of the target social group. Given that SAR focuses on improving users’ living conditions, this study reviews the advances for two of the most vulnerable social groups:

- Older adults;

- People with cognitive disorders.

3. Older Adult Care

The aging population is one of today’s major health concerns. This unprecedented situation urgently requires technological solutions to meet the constantly increasing demand for care services, which are currently overwhelmed. In this regard, the WHO identifies two key concepts in its Global Strategy and Action Plan on Ageing and Health [25]:

- Healthy aging, understood as the process of developing and maintaining functional ability for older people’s well-being;

- Functional ability, where technology is used to perform functions that might otherwise be difficult or impossible.

Healthy aging has become a popular topic in recent decades. In this regard, SAR research has developed systems that improve older people’s health through physical activity, which has a positive cognitive impact [26]. Some research efforts have produced companion robots that help users with assisted therapy and activities (see [27] for an overview). However, further work is needed to promote their acceptance among older people, as pointed out in [28], especially in terms of social interaction.

SAR systems for promoting physical exercise have also been developed. One example is the robotic coach proposed by Görer et al. [29], which uses a learning-by-imitation approach to learn the exercises from a human demonstrator. The learned reference joint angles are then used to evaluate the user’s movements and to provide the feedback needed to improve their performance. Note that two different platforms are used to achieve this goal: a NAO robot demonstrates the physical exercises, while an RGB-D camera captures the person’s movements. This can be problematic, since the correct placement of the RGB-D device is essential to properly evaluate the user’s performance. In addition, no sitting exercises are included because the skeleton data are insufficient to obtain the required results. Finally, the robot may confuse the user since, while demonstrating an exercise, it also performs certain movements that are not meant to be carried out, such as head motions.
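The joint-angle comparison described above can be sketched as follows. This is a minimal illustration, not Görer et al.’s implementation: it assumes that 3D skeleton keypoints are already available from the RGB-D camera, and the joint names, the 15-degree tolerance, and the feedback messages are hypothetical.

```python
import math
from typing import Dict, Tuple

Point3D = Tuple[float, float, float]


def joint_angle(a: Point3D, b: Point3D, c: Point3D) -> float:
    """Angle in degrees at joint b between the segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("degenerate joint: coincident keypoints")
    dot = sum(x * y for x, y in zip(ba, bc))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def exercise_feedback(user_angles: Dict[str, float],
                      reference_angles: Dict[str, float],
                      tolerance_deg: float = 15.0) -> Dict[str, str]:
    """Compare measured joint angles with the demonstrator's reference angles.

    Hypothetical rule: within the tolerance the joint is "ok"; a smaller angle
    than the reference means the joint is too bent ("extend more"), a larger
    one means it is too straight ("bend more").
    """
    feedback = {}
    for joint, reference in reference_angles.items():
        error = user_angles[joint] - reference
        if abs(error) <= tolerance_deg:
            feedback[joint] = "ok"
        elif error < 0:
            feedback[joint] = "extend more"
        else:
            feedback[joint] = "bend more"
    return feedback


# Example: elbow angle from shoulder, elbow, and wrist keypoints (metres).
elbow = joint_angle((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))  # 90 degrees
print(exercise_feedback({"left_elbow": elbow}, {"left_elbow": 160.0}))
# {'left_elbow': 'extend more'}
```

Working with joint angles rather than raw keypoint positions makes the comparison independent of the user’s height and distance from the camera, which is one reason angle-based evaluation is a natural fit for this setting.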

Another proposal is PHAROS [30,31], a socially assistive robot that monitors and evaluates the daily physical exercise done at the user’s home (see Figure 1). It uses machine learning techniques (specifically, a convolutional neural network (CNN) together with a recurrent neural network (RNN)) to identify and evaluate exercise performance. In addition, it integrates a recommender that generates a daily workout so that each person works on what they need to stay healthy.
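The details of the PHAROS recommender are not given here, so the following is only a hypothetical rule-based sketch of the idea. It assumes that each exercise has a list of recent evaluation scores in [0, 1] (for example, produced by the exercise-evaluation network) and prioritizes the exercises the user performs worst, scheduling untried exercises first. The function name, scoring scale, and default priority are assumptions.

```python
from statistics import mean
from typing import Dict, List


def recommend_workout(history: Dict[str, List[float]],
                      n_exercises: int = 3,
                      untried_score: float = 0.0) -> List[str]:
    """Pick the exercises with the lowest mean recent score (hypothetical rule).

    history maps an exercise name to its recent evaluation scores in [0, 1].
    Exercises with no scores yet get `untried_score`, so by default they are
    scheduled first. Ties are broken alphabetically to keep the plan stable.
    """
    def priority(name: str) -> float:
        scores = history[name]
        return mean(scores) if scores else untried_score

    ranked = sorted(history, key=lambda name: (priority(name), name))
    return ranked[:n_exercises]


history = {"arm_raise": [0.9, 0.8], "squat": [0.4, 0.5], "march": [], "twist": [0.6]}
print(recommend_workout(history))  # ['march', 'squat', 'twist']
```

A learned recommender could replace the mean-score priority with a model of the user’s needs; the fixed rule simply keeps the sketch transparent.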

Figure 1.

PHAROS robot in a pilot study at the Doña Rosa residence for older adults (Alicante).

Assisting functional ability requires more complex systems, and such systems have evolved over time to integrate an increasing number of functionalities. This is the case of HOBBIT [32], a robot designed to help older people feel safe and continue to live in their own homes. With this aim, the robot, illustrated in Figure 2, can autonomously navigate the user’s apartment and go wherever the user requests, pick up objects from the ground, fetch a specific object, learn new objects to be found in the future, call for help in case of emergency, provide games for entertainment, and remind the user to take their medication.

Figure 2.

Hobbit robot in a pilot study at the Doña Rosa senior care home.

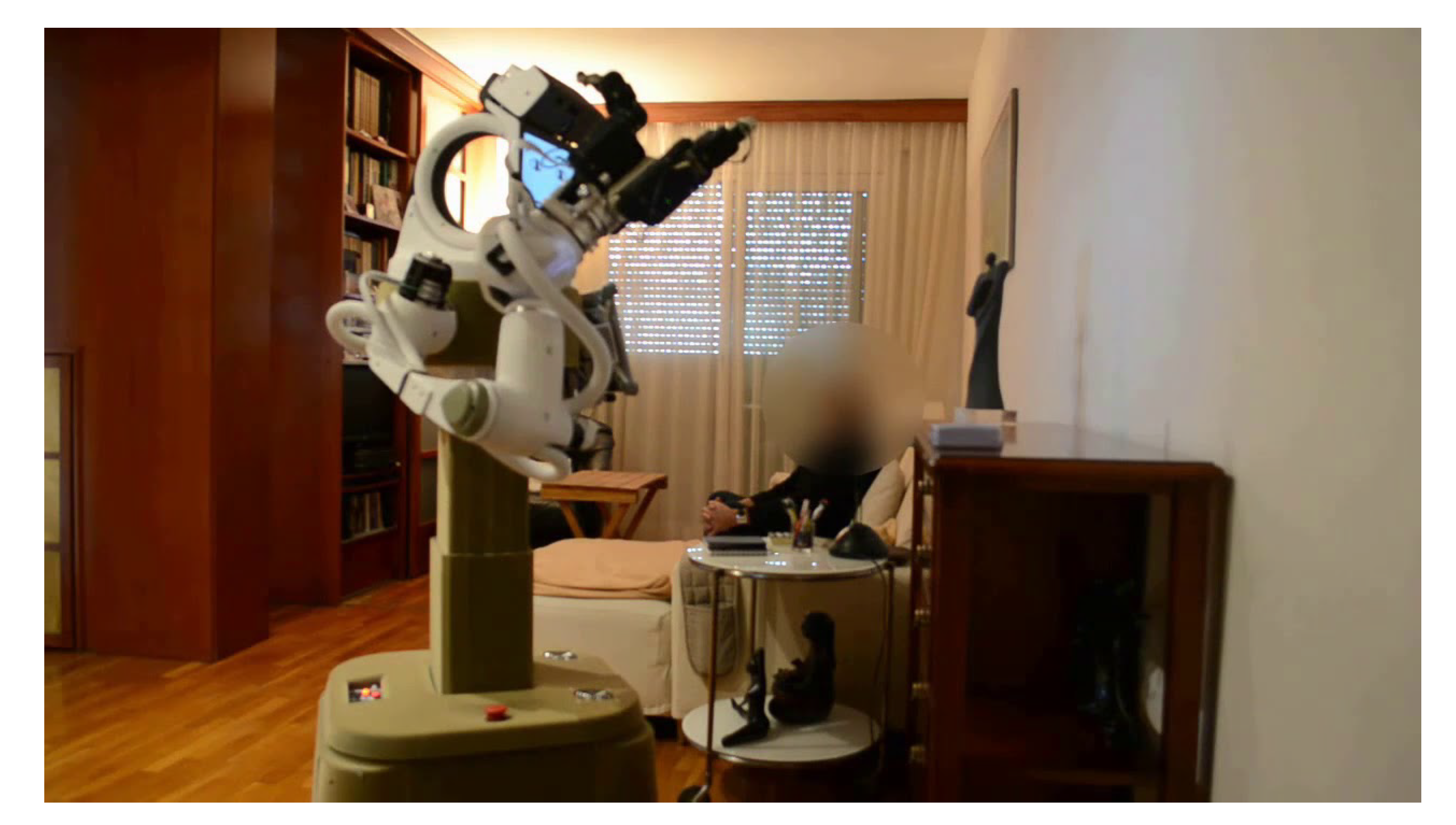

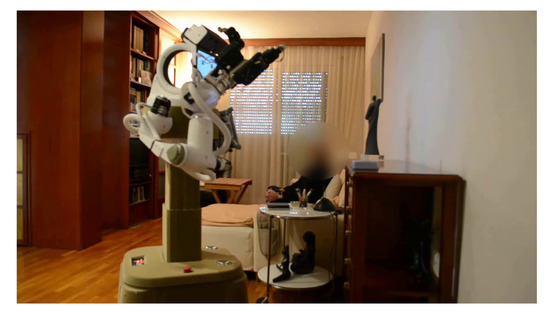

Analogously, the EU project RAMCIP [33] has developed a robotic assistant for older adults and people suffering from mild cognitive impairment (MCI) and dementia (see Figure 3). This robotic assistant also integrates several functionalities that promote physical and cognitive activity, such as detecting falls (in which case a relative or external caregiver is informed); checking that the cooker has been turned off after preparing a meal or that the lights have been turned on when walking at night; picking up misplaced or fallen objects from the ground and moving them to safe storage; reminding users to take their medication, bringing the corresponding medicine, and monitoring its intake; and facilitating social interaction with family and friends.

Figure 3.

RAMCIP robot in a pilot study at a user’s home.

Other solutions integrate a robot platform into a smart home environment so that its functionalities can be augmented. An example is the robot activity support system (RAS) created by Washington State University [34] to help adults with memory problems and other impairments live independently. The smart home has sensors in the walls that track the user’s movements and feed the data into the robot’s neural network, which allows the robot to use activity detection to provide assistance when required. However, the system is still at an early stage of development and can only provide video instructions on how to do simple tasks, such as guiding a person through the steps of taking a dog for a walk or leading them to an object. In addition, the need to install additional technology at home makes this option difficult and costly to implement.
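As a simplified illustration of step-by-step activity support, the sketch below assumes that sensor events have already been recognized as discrete, named activity steps and returns the next step the robot should prompt. The step names and the function are hypothetical; RAS itself relies on learned activity detection rather than a fixed, hand-written sequence like this one.

```python
from typing import List, Optional


def next_prompt(expected_steps: List[str], observed_steps: List[str]) -> Optional[str]:
    """Return the next step the robot should prompt, or None if the activity is done.

    observed_steps are already-recognized activity steps in time order; any
    step that does not match the next expected one (an unrelated event or a
    step done out of order) is ignored.
    """
    position = 0
    for step in observed_steps:
        if position < len(expected_steps) and step == expected_steps[position]:
            position += 1
    return expected_steps[position] if position < len(expected_steps) else None


# Hypothetical step sequence for "taking the dog for a walk".
walk_dog = ["get_leash", "put_on_leash", "open_door", "leave_home"]
print(next_prompt(walk_dog, ["enter_kitchen", "get_leash", "open_door"]))  # put_on_leash
```

Here the user skipped putting the leash on, so the robot would play the instructions for that step instead of the later ones.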

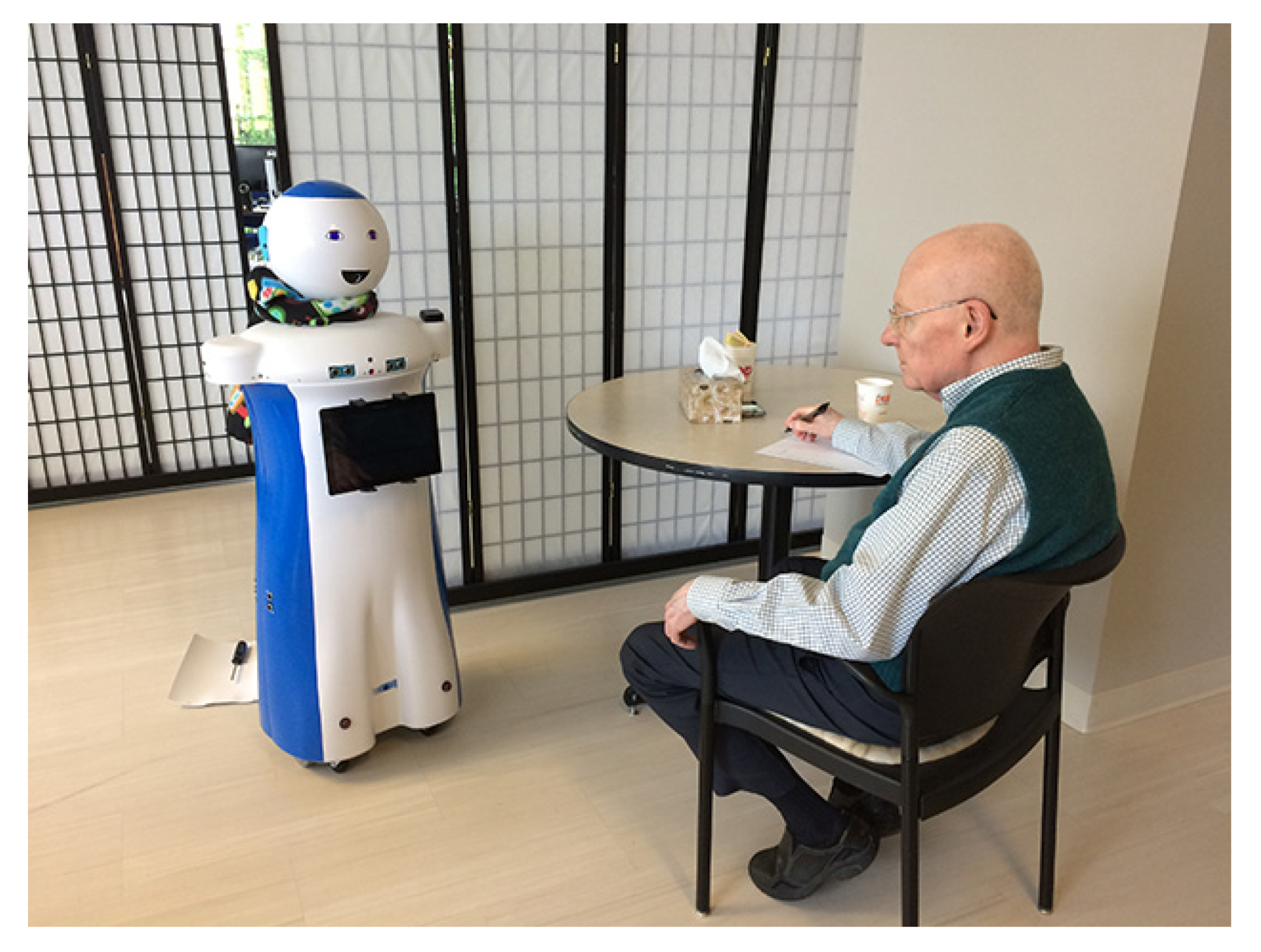

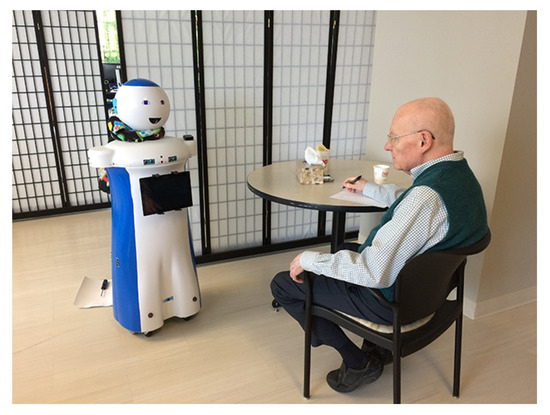

Alternatively, other developments aim to assist people in nursing homes and healthcare facilities. In these kinds of systems, the key issue is the social component: the aim is for the older adult to perceive the robotic platform as a social companion rather than a machine that performs predefined tasks. This is the main focus of Rudy [35], an assistive robot created by INF Robotics in 2017. This robot offers telemedicine capabilities, such as remote patient monitoring (RPM), medication reminders, and medication dispensing (shown in Figure 4). In addition, it integrates a social component that, together with its friendly appearance, engages users. In fact, social interaction is the most appreciated functionality of this system, since loneliness is a major issue among older adults. Nevertheless, it costs , which is a significant amount that is not within all budgets.

Figure 4.

Rudy in a pilot study.

Along similar lines, Trinity College Dublin developed Stevie in 2017 and improved it in 2019 as Stevie II (Figure 5). The aim of this socially assistive AI robot is to augment the role of caregivers in long-term care environments, allowing them to concentrate on person-centered tasks. Its functionalities range from medication reminders to keeping residents cognitively stimulated with quizzes and games, for which it relies on enhanced expressive capabilities and a well-defined social component.

Figure 5.

Stevie II in a pilot study.

4. Training Communication and Social Interaction in Children with Autism

In recent years, SAR has become popular for the treatment and diagnosis of autism [36]. Indeed, research in this field has reported increased therapy acceptance by users and improvements in their social skills [37].

Applied behavior analysis (ABA) is one of the most widely used therapies for the treatment of autism. It aims to improve specific behaviors by dividing them into simple, repetitive tasks that are presented sequentially and strategically while the patient’s performance is measured and analyzed throughout the therapy [38].
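The ABA structure of simple tasks presented in sequence while performance is measured can be sketched as a small discrete-trial program. The mastery rule used here (at least 80% correct over the last five trials before moving on to the next task), the class name, and the task names are assumptions for illustration, not a clinical protocol.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DiscreteTrialProgram:
    """Hypothetical ABA-style program: tasks are taught one at a time, in order,
    and the program advances once the last `window` trials on the current task
    reach the `mastery` accuracy."""
    tasks: List[str]
    mastery: float = 0.8
    window: int = 5
    current: int = 0
    trials: List[bool] = field(default_factory=list)

    def current_task(self) -> str:
        return self.tasks[self.current]

    def record_trial(self, correct: bool) -> bool:
        """Log one trial on the current task; return True if it was just mastered."""
        self.trials.append(correct)
        recent = self.trials[-self.window:]
        if len(recent) == self.window and sum(recent) / self.window >= self.mastery:
            # Move on to the next task (the last task simply stays current).
            if self.current < len(self.tasks) - 1:
                self.current += 1
            self.trials = []
            return True
        return False


program = DiscreteTrialProgram(["imitate_wave", "point_to_object"])
results = [program.record_trial(c) for c in [True, False, True, True, True, True]]
print(results, program.current_task())
# [False, False, False, False, True, False] point_to_object
```

Because every trial is logged, the same record that drives task progression also provides the performance data that the therapy analyzes.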

The automation of some aspects of the therapy using different devices and tools (videos, virtual and augmented reality, and robotics) has been widely studied [39]. ABA therapies combined with SARs have exhibited substantial advantages and proved effective in obtaining positive results in patients, such as high enthusiasm, increased attention, and increased social activity [40].

These results may be explained by the fact that children with autism feel more comfortable interacting with robots, because their behavior and reactions are more predictable [41]. Furthermore, the social skills of the patients could be gradually improved by increasing the complexity and unpredictability of the robot’s behavior, making it more similar to actual human behavior [42].
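One way to picture gradually increasing unpredictability is a schedule that raises, session by session, the probability that the robot departs from its scripted response. The linear schedule, the 0.5 ceiling, and the function names below are hypothetical choices made only to illustrate the idea.

```python
import random
from typing import List


def deviation_probability(session: int, total_sessions: int, max_prob: float = 0.5) -> float:
    """Probability of departing from the scripted response in a given session.

    Grows linearly from 0 in the first session (index 0) to `max_prob` in the
    last one; this schedule is a hypothetical choice.
    """
    if total_sessions <= 1:
        return 0.0
    fraction = min(max(session / (total_sessions - 1), 0.0), 1.0)
    return max_prob * fraction


def choose_response(scripted: str, alternatives: List[str], prob: float,
                    rng: random.Random) -> str:
    """Return the predictable scripted response or, with probability `prob`,
    a randomly chosen alternative."""
    if alternatives and rng.random() < prob:
        return rng.choice(alternatives)
    return scripted


rng = random.Random(42)
for session in range(0, 10, 3):
    p = deviation_probability(session, total_sessions=10)
    print(session, round(p, 2), choose_response("wave", ["nod", "spin"], p, rng))
```

Passing an explicit `random.Random` instance keeps sessions reproducible, which matters when therapists want to compare a child’s reactions across runs.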

These robotic systems can be used to manage therapy sessions, collect and analyze data on the interactions with the patient, and summarize these data in reports and graphs. For this reason, they are a powerful tool for therapists to check the patient’s progress and facilitate diagnosis.
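The reporting step can be as simple as aggregating logged trials into per-task success rates for each session, which a therapist can then plot to follow progress. The log format (session, task, correct) and the function name are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def progress_report(trials: Iterable[Tuple[int, str, bool]]) -> Dict[str, Dict[int, float]]:
    """Per-task success rate for each session.

    trials: (session_id, task_name, correct) tuples logged by the robot.
    Returns {task: {session_id: success_rate}} with sessions in ascending order.
    """
    counts: Dict[str, Dict[int, List[int]]] = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for session, task, correct in trials:
        cell = counts[task][session]  # [correct_count, total_count]
        cell[0] += int(correct)
        cell[1] += 1
    return {task: {s: ok / total for s, (ok, total) in sorted(sessions.items())}
            for task, sessions in counts.items()}


log = [(1, "greet", False), (1, "greet", True), (2, "greet", True),
       (2, "greet", True), (1, "imitate", False)]
for task, rates in progress_report(log).items():
    print(task, rates)
# greet {1: 0.5, 2: 1.0}
# imitate {1: 0.0}
```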

The visual appeal of the robotic platform is a key factor in engaging the attention of children with autism. In general, these robots tend to use bright colors, rotating mechanical parts, striking shapes, and lights [43]. Additionally, some studies have reported that children with autism prefer to interact with robots that have fewer humanoid characteristics [44]. However, some anthropomorphic robots have been successfully used in research, especially in imitation and emotion recognition activities. Following [45], robots can be classified into several types depending on their location on the humanoid spectrum:

- Android. They look like humans.

- Mascot. They have humanoid forms but abstract or cartoonish appearances.

- Mechanical. Humanoid forms with visibly mechanical parts.

- Animal. Meant to look like pets.

- Non-Humanoid. No resemblance to any living being.

Table 1 and Table 2 present different SAR robots used in experiments.

Table 1.

Robots used in autism therapies.

Table 2.

Robots used in autism therapies.

Since therapists’ availability is limited, SARs must have a certain level of autonomy in order to carry out the treatments. This autonomy depends directly on the SAR’s level of intelligence in adapting to the environment and the patient’s responses. This is where machine learning comes in, providing solutions to the problems these systems must address, such as eye tracking, face recognition, and automatic speech recognition.

Eye tracking is the process of measuring either the point of gaze or the movement of an eye relative to the head. It is used to measure a patient’s attention to the robot. Commercial solutions exist for this purpose, but they are expensive or depend on special and invasive hardware (e.g., Tobii EyeX). However, many works focus on inferring users’ gaze from images of their faces. Traditional techniques are usually either shape-based methods, such as [100,101], which observe geometric features such as pupil centers and iris edges, or appearance-based methods, such as [102,103], which use the eye images directly for prediction, combining handcrafted features with neural networks. In recent years, the focus has shifted to deep learning techniques that accomplish this task with standard, inexpensive cameras. This is the case of [104], which uses a convolutional neural network, trained on a large-scale dataset of faces and corresponding gaze directions, to predict the user’s gaze from a color image of their face. More recent works, such as [105], predict emotions and the patient’s mood states from eye-tracking data using recurrent neural networks.
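To make the shape-based idea concrete, the sketch below estimates a horizontal gaze ratio from the pupil center and the two eye corners, classifies it into three coarse directions, and reports the fraction of frames classified as center as a crude attention score. It assumes that the landmarks have already been detected in image coordinates, that the robot sits directly in front of the child and aligned with the camera, and that the 0.35/0.65 thresholds are suitable; all of these are hypothetical simplifications, not the methods of [100,101].

```python
from typing import Iterable, Tuple


def horizontal_gaze_ratio(pupil_x: float, corner_left_x: float, corner_right_x: float) -> float:
    """Pupil position between the eye corners: 0 at the image-left corner, 1 at the image-right one."""
    width = corner_right_x - corner_left_x
    if width <= 0:
        raise ValueError("corner_right_x must be greater than corner_left_x")
    return (pupil_x - corner_left_x) / width


def gaze_direction(ratio: float, low: float = 0.35, high: float = 0.65) -> str:
    """Coarse gaze label; the thresholds are hypothetical and would need calibration."""
    if ratio < low:
        return "image-left"
    if ratio > high:
        return "image-right"
    return "center"


def attention_to_robot(frames: Iterable[Tuple[float, float, float]]) -> float:
    """Fraction of frames labeled 'center', taken here as looking at the robot.

    Each frame is (pupil_x, corner_left_x, corner_right_x) in pixels.
    """
    labels = [gaze_direction(horizontal_gaze_ratio(*frame)) for frame in frames]
    if not labels:
        return 0.0
    return sum(label == "center" for label in labels) / len(labels)


frames = [(50, 40, 60), (42, 40, 60), (51, 40, 60), (58, 40, 60)]
print(attention_to_robot(frames))  # 0.5
```

Such hand-tuned geometry is exactly what breaks down with head rotation and poor lighting, which is the motivation for the learned, appearance-based estimators discussed above.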

The study of the patient’s gaze is a crucial technique that helps with the diagnosis of autism and measures the effectiveness of the interaction between the robot and the user. In [106], the researchers compared the gaze attention of patients with autism when interacting with humans and with robots. Similarly, in [107] the authors compare the gaze attention of people with autism while conversing with a human and with a realistic android, an approach that could serve as a diagnostic tool. In [84,85], the authors report the effects of repeated exposure to the humanoid robot Robota, which include increased gaze attention and imitation.

Most experiments with these robots do not specify which eye-tracking technique they use, or even whether they use external hardware. However, recent works on this topic show that deep learning techniques outperform traditional ones without the need for invasive tools, so future developments may move in this direction to ensure the best experience for users.

Face recognition has been one of the most widely studied research topics in computer vision for decades, as it allows subjects to be recognized in a non-intrusive manner. The first step is to detect and delimit the region of the image containing the face. Traditionally, detection has been carried out by searching for handcrafted features, as in [108], which uses a cascade of classifiers trained with AdaBoost on Haar-like features computed at different resolutions. Subsequently, a feature vector is extracted to describe the face, using either global techniques, such as Eigenfaces [109], based on Principal Component Analysis, and Fisherfaces [110], based on Linear Discriminant Analysis, or local descriptors, such as Local Binary Pattern Histograms [111], which encode the local structure of the image by comparing every pixel with its neighborhood. However, traditional methods struggle when the face is not captured under ideal conditions: recognition rates decrease with variations in face pose and changes in lighting. Recent works have adopted end-to-end deep learning architectures that greatly outperform the traditional methods. Studies such as [112,113,114,115] use variations of convolutional neural network architectures trained on large-scale face datasets collected without pose restrictions, with good test results. Along with face recognition, recent studies such as [116,117] classify the user’s emotions using variants of convolutional neural networks, with promising results.
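The Local Binary Pattern descriptor mentioned above can be sketched in a few lines: each interior pixel receives an 8-bit code by thresholding its eight neighbors against it, and the image is described by the histogram of those codes. This simplified version computes one histogram for the whole image and compares histograms with the chi-square distance; practical LBPH face recognizers usually compute histograms over a grid of cells and concatenate them. The neighbor ordering and function names are choices made for this sketch.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]

# The 8 neighbors, visited clockwise starting at the top-left pixel;
# neighbor k sets bit k of the code.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image: Image, row: int, col: int) -> int:
    """8-bit LBP code of an interior pixel: bit k is 1 if neighbor k >= center."""
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image: Image) -> List[int]:
    """256-bin histogram of LBP codes over all interior pixels (borders skipped)."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


def chi_square(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Chi-square distance between two histograms (0 for identical ones)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


patch = [[10, 20, 30],
         [40, 25, 5],
         [60, 70, 80]]
print(lbp_code(patch, 1, 1))  # 244
```

Recognition then amounts to comparing the histogram of a new face with those of enrolled faces and picking the smallest distance. Because each code depends only on the ordering of intensities, it is unchanged by uniform brightness shifts, although, as noted above, it remains sensitive to pose.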

These capabilities are important for socially assistive robots in order to identify the patient and their mood and keep track of the history of the interaction. In [59], the researchers used face and emotion recognition to make a Pepper robot adapt a story to the mood of the children. In [118], the authors propose a face recognition technique that uses the humanoid robot NAO to track the faces of children with autism and measure their concentration during social interaction. In [61], the authors propose several activities based on interaction with a Pepper robot, which receives feedback by measuring the users’ smiles.

Finally, automatic speech recognition is considered the most important bridge enabling human-machine communication. However, the technical difficulties of speech processing meant that the keyboard and mouse became the most accurate interfaces for this purpose. Traditional speech processing methods used statistical models, such as hidden Markov models [119,120], to process the audio signal, recognize the spoken words, and understand the sentences. However, these methods were very limited in terms of vocabulary and the complexity of the sentences that users could employ, and their recognition rates were far from perfect. Today, with the advent of GPUs, deep learning techniques have become the focus of researchers, as with the tasks described above. End-to-end architectures, such as those proposed in [121,122,123], mainly based on a combination of convolutional neural networks for feature extraction and recurrent neural networks for analyzing temporal information, are taking the lead and obtaining interesting results.
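HMM-based recognizers decode the most likely sequence of hidden states with the Viterbi algorithm. The sketch below implements Viterbi in log space for a toy two-state model (silence versus voiced frames over quantized low/high energy observations); the states, observations, and probabilities are made up for illustration and are not a real acoustic model.

```python
import math
from typing import Dict, List, Sequence, Tuple

Probs = Dict[str, Dict[str, float]]


def _log(p: float) -> float:
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations: Sequence[str], states: Sequence[str],
            start_p: Dict[str, float], trans_p: Probs,
            emit_p: Probs) -> Tuple[List[str], float]:
    """Most likely hidden-state path and its log-probability (log space avoids underflow)."""
    if not observations:
        return [], 0.0
    # score[s]: best log-probability of any path ending in state s at the current time.
    score = {s: _log(start_p[s]) + _log(emit_p[s][observations[0]]) for s in states}
    backpointers: List[Dict[str, str]] = []
    for obs in observations[1:]:
        new_score, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda p: score[p] + _log(trans_p[p][s]))
            new_score[s] = score[prev] + _log(trans_p[prev][s]) + _log(emit_p[s][obs])
            pointers[s] = prev
        score = new_score
        backpointers.append(pointers)
    # Trace the best final state back through the stored predecessors.
    last = max(states, key=lambda s: score[s])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[last]


# Toy model with made-up probabilities.
STATES = ["silence", "voiced"]
START_P = {"silence": 0.8, "voiced": 0.2}
TRANS_P = {"silence": {"silence": 0.7, "voiced": 0.3},
           "voiced": {"silence": 0.2, "voiced": 0.8}}
EMIT_P = {"silence": {"low": 0.9, "high": 0.1},
          "voiced": {"low": 0.2, "high": 0.8}}

path, log_prob = viterbi(["low", "high", "high", "low"], STATES, START_P, TRANS_P, EMIT_P)
print(path)  # ['silence', 'voiced', 'voiced', 'silence']
```

In a real recognizer, the states would be sub-phone units chained into word models and the discrete emission table would be replaced by likelihoods computed from acoustic features, but the dynamic-programming decoding is the same.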

In social robotics, speech recognition is an important feature, as it provides a more intuitive and natural means of communication than traditional peripherals. In [58], the researchers propose using the NAO robot to hold conversations with children with autism and automatically extract crucial information about their interests in order to recommend picture books to them. In [57], the authors propose a conversational therapy using a NAO robot that encourages the child to talk about their experiences and helps them recognize objects and imitate facial expressions. As a different approach, in [62], the authors use a Pepper robot to teach typically developing people to communicate with people with autism spectrum disorder.

All of these studies show that not only can patients with autism benefit from the advent of SAR and artificial intelligence techniques, but therapists and family members also gain more tools to help them with therapy and day-to-day living.

5. Conclusions

Socioeconomic changes and the lack of healthcare professionals to meet the unceasing demand for services and care have created the need for technological solutions to mitigate this situation. In addition to interacting intelligently with the environment, the techniques developed must be successfully adopted by users. In this sense, neuroscientific evidence shows that users, especially children, tend to engage better with robots than with traditional screens, and robot design must make users feel comfortable and increase their well-being. As a consequence, the scientific response to these issues is assistive robotics, and more precisely, socially assistive robotics, which integrates social human-robot interaction.

This paper presents an overview of state-of-the-art SAR solutions for helping and assisting older adults in their daily activities, such as activity scheduling and rehabilitation, and for helping children with autism spectrum disorder through diagnosis and social therapies. These solutions benefit from new advances in artificial intelligence, which increase the autonomy of assistive robots and allow them to adapt to unforeseen circumstances without the direct intervention of a human. Thus, SAR combined with AI can help users in their day-to-day living, promoting their daily functioning, well-being, and independence.

Despite the active development of (socially) assistive technology, there is still work to be done. Current systems do not yet meet all the needs of people with disabilities, but the results are highly promising.

Author Contributions

Conceptualization, E.M.-M., F.E. and M.C.; methodology, E.M.-M., F.E. and M.C.; software, E.M.-M., F.E. and M.C.; validation, E.M.-M., F.E. and M.C.; formal analysis, E.M.-M., F.E. and M.C.; investigation, E.M.-M., F.E. and M.C.; resources, E.M.-M., F.E. and M.C.; writing—original draft preparation, E.M.-M., F.E. and M.C.; writing—review and editing, E.M.-M., F.E. and M.C.; visualization, E.M.-M., F.E. and M.C.; supervision, E.M.-M., F.E. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Spanish Government TIN2016-76515-R grant for the COMBAHO project, supported with FEDER funds. It has also been supported by Spanish grants for PhD studies ACIF/2017/243 and FPU16/00887.

Acknowledgments

Experiments were made possible by a generous hardware donation from NVIDIA.

Conflicts of Interest

The authors declare no conflict of interest.

References

- World Health Organization (WHO). Disability. 2019. Available online: https://www.who.int/disabilities/en/ (accessed on 19 February 2020).

- Du Toit, R.; Keeffe, J.; Jackson, J.; Bell, D.; Minto, H.; Hoare, P. A Global Public Health Perspective. Facilitating Access to Assistive Technology. Optom. Vis. Sci. 2018, 95, 883–888.

- Pant, P.; Gupta, V.; Khanna, A.; Saxena, N. Technology foresight study on assistive technology for locomotor disability. Technol. Disabil. 2018, 29, 163–171.

- Tahsin, M.M.; Khan, R.; Gupta, A.K.S. Assistive technology for physically challenged or paralyzed person using voluntary tongue movement. In Proceedings of the 2016 5th International Conference on Informatics, Electronics and Vision (ICIEV), Dhaka, Bangladesh, 13–14 May 2016.

- Abdallah, E.E.; Fayyoumi, E. Assistive Technology for Deaf People Based on Android Platform. Procedia Comput. Sci. 2016, 94, 295–301.

- Suresh, A.; Arora, C.; Laha, D.; Gaba, D.; Bhambri, S. Intelligent Smart Glass for Visually Impaired Using Deep Learning Machine Vision Techniques and Robot Operating System (ROS). In Robot Intelligence Technology and Applications 5; Springer: New York, NY, USA, 2018; pp. 99–112.

- Phillips, M.; Proulx, M.J. Social Interaction Without Vision: An Assessment of Assistive Technology for the Visually Impaired. Technol. Innov. 2018, 20, 85–93.

- Bhowmick, A.; Hazarika, S.M. An insight into assistive technology for the visually impaired and blind people: State-of-the-art and future trends. J. Multimodal User Interfaces 2017, 11, 149–172.

- Palmqvist, L.; Danielsson, H. Parents act as intermediary users for their children when using assistive technology for cognition in everyday planning: Results from a parental survey. Assist. Technol. 2019, 1–9.

- Davydov, M.; Lozynska, O. Linguistic models of assistive computer technologies for cognition and communication. In Proceedings of the 2016 XIth International Scientific and Technical Conference Computer Sciences and Information Technologies (CSIT), Lviv, Ukraine, 6–10 September 2016.

- De Oliveira, G.A.A.; de Bettio, R.W.; Freire, A.P. Accessibility of the smart home for users with visual disabilities. In Proceedings of the 15th Brazilian Symposium on Human Factors in Computer Systems—IHC 16, São Paulo, Brazil, 4–7 October 2016.

- Escalona, F.; Martinez-Martin, E.; Cruz, E.; Cazorla, M.; Gomez-Donoso, F. EVA: EVAluating at-home rehabilitation exercises using augmented reality and low-cost sensors. Virtual Real. 2019.

- Costa, A.; Novais, P.; Julian, V.; Nalepa, G.J. Cognitive assistants. Int. J. Hum.-Comput. Stud. 2018, 117.

- Costa, A.; Novais, P.; Julian, V. A Survey of Cognitive Assistants. In Intelligent Systems Reference Library; Springer: New York, NY, USA, 2017; pp. 3–16.

- Ruano, A.; Hernandez, A.; Ureña, J.; Ruano, M.; Garcia, J. NILM Techniques for Intelligent Home Energy Management and Ambient Assisted Living: A Review. Energies 2019, 12, 2203.

- Costa, A.; Julián, V.; Novais, P. Advances and trends for the development of ambient-assisted living platforms. Expert Syst. 2016, 34, e12163.

- Gomez-Donoso, F.; Escalona, F.; Rivas, F.M.; Cañas, J.M.; Cazorla, M. Enhancing the ambient assisted living capabilities with a mobile robot. Comput. Intell. Neurosci. 2019, 2019.

- ENRICHME: ENabling Robot and Assisted Living Environment for Independent Care and Health Monitoring of the Elderly. 2015. Available online: http://www.enrichme.eu/wordpress/ (accessed on 19 February 2020).

- Paco Plus EU Project. 2010. Available online: http://www.paco-plus.org/ (accessed on 19 February 2020).

- Cruz, E.; Escalona, F.; Bauer, Z.; Cazorla, M.; García-Rodríguez, J.; Martinez-Martin, E.; Rangel, J.C.; Gomez-Donoso, F. Geoffrey: An Automated Schedule System on a Social Robot for the Intellectually Challenged. Comput. Intell. Neurosci. 2018, 2018.

- Luxton, D.D.; Riek, L.D. Artificial intelligence and robotics in rehabilitation. In Handbook of Rehabilitation Psychology, 3rd ed.; American Psychological Association: Washington, DC, USA, 2019; pp. 507–520.

- Martinez-Martin, E.; Cazorla, M. Rehabilitation Technology: Assistance from Hospital to Home. Comput. Intell. Neurosci. 2019, 2019.

- Feil-Seifer, D.; Mataric, M.J. Defining socially assistive robotics. In Proceedings of the 9th International Conference on Rehabilitation Robotics, ICORR 2005, Chicago, IL, USA, 28 June–1 July 2005; pp. 465–468.

- Tapus, A.; Mataric, M.J. Socially Assistive Robots: The Link between Personality, Empathy, Physiological Signals, and Task Performance. In AAAI Spring Symposium: Emotion, Personality, and Social Behavior; AAAI Press: Boston, MA, USA, 2008; pp. 133–140.

- World Health Organization (WHO). Global Strategy and Action Plan on Ageing and Health; Technical Report; World Health Organization Publications: Geneva, Switzerland, 2017.

- Mura, G.; Carta, M.G. Physical Activity in Depressed Elderly. A Systematic Review. Clin. Pract. Epidemiol. Ment. Health 2013, 9, 125–135.

- Martinez-Martin, E.; del Pobil, A.P. Personal Robot Assistants for Elderly Care: An Overview. In Intelligent Systems Reference Library; Springer: New York, NY, USA, 2017; pp. 77–91.

- Oh, S.; Oh, Y.H.; Ju, D.Y. Understanding the Preference of the Elderly for Companion Robot Design. In Advances in Intelligent Systems and Computing; Springer: New York, NY, USA, 2019; pp. 92–103.

- Görer, B.; Salah, A.A.; Akın, H.L. An autonomous robotic exercise tutor for elderly people. Auton. Robot. 2016, 41, 657–678.

- Martinez-Martin, E.; Costa, A.; Cazorla, M. PHAROS 2.0—A PHysical Assistant RObot System Improved. Sensors 2019, 19, 4531.

- Costa, A.; Martinez-Martin, E.; Cazorla, M.; Julian, V. PHAROS—PHysical Assistant RObot System. Sensors 2018, 18, 2633.

- EU Project. HOBBIT—The Mutual Care Robot. 2007–2013. Available online: http://hobbit.acin.tuwien.ac.at/ (accessed on 19 February 2020).

- EU Project. RAMCIP—Robotic Assistant for MCI Patients at Home. 2015–2020. Available online: https://ramcip-project.eu (accessed on 19 February 2020).

- Wilson, G.; Pereyda, C.; Raghunath, N.; de la Cruz, G.; Goel, S.; Nesaei, S.; Minor, B.; Schmitter-Edgecombe, M.; Taylor, M.; Cook, D.J. Robot-enabled support of daily activities in smart home environments. In Cognitive Systems Research; Elsevier: Amsterdam, The Netherlands, 2019; Volume 54, pp. 258–272.

- INF Robotics. Rudy. 2019. Available online: http://infrobotics.com/#rudy (accessed on 19 February 2020).

- Dickstein-Fischer, L.A.; Crone-Todd, D.E.; Chapman, I.M.; Fathima, A.T.; Fischer, G.S. Socially assistive robots: Current status and future prospects for autism interventions. Innov. Entrep. Health 2018, 5, 15. [Google Scholar] [CrossRef]

- Scassellati, B.; Admoni, H.; Matarić, M. Robots for use in autism research. Annu. Rev. Biomed. Eng. 2012, 14, 275–294. [Google Scholar] [CrossRef]

- Kasari, C.; Lawton, K. New directions in behavioral treatment of autism spectrum disorders. Curr. Opin. Neurol. 2010, 23, 137. [Google Scholar] [CrossRef]

- Goldsmith, T.R.; LeBlanc, L.A. Use of technology in interventions for children with autism. J. Early Intensive Behav. Interv. 2004, 1, 166. [Google Scholar] [CrossRef]

- Begum, M.; Serna, R.W.; Yanco, H.A. Are robots ready to deliver autism interventions? A comprehensive review. Int. J. Soc. Robot. 2016, 8, 157–181.

- Scassellati, B. How social robots will help us to diagnose, treat, and understand autism. In Robotics Research; Springer: Berlin/Heidelberg, Germany, 2007; pp. 552–563.

- Dautenhahn, K.; Werry, I. Towards interactive robots in autism therapy: Background, motivation and challenges. Pragmat. Cogn. 2004, 12, 1–35.

- Cabibihan, J.J.; Javed, H.; Ang, M.; Aljunied, S.M. Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. Int. J. Soc. Robot. 2013, 5, 593–618.

- Robins, B.; Dautenhahn, K.; Dubowski, J. Does appearance matter in the interaction of children with autism with a humanoid robot? Interact. Stud. 2006, 7, 479–512.

- Ricks, D.J.; Colton, M.B. Trends and considerations in robot-assisted autism therapy. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 4354–4359.

- Salvador, M.J.; Silver, S.; Mahoor, M.H. An emotion recognition comparative study of autistic and typically-developing children using the Zeno robot. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6128–6133.

- Salvador, M.; Marsh, A.S.; Gutierrez, A.; Mahoor, M.H. Development of an ABA autism intervention delivered by a humanoid robot. In International Conference on Social Robotics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 551–560.

- Silva, V.; Leite, P.; Soares, F.; Esteves, J.S.; Costa, S. Imitate Me!—Preliminary Tests on an Upper Members Gestures Recognition System. In CONTROLO 2016; Springer: Cham, Switzerland, 2017; pp. 373–383.

- Geminiani, A.; Santos, L.; Casellato, C.; Farabbi, A.; Farella, N.; Santos-Victor, J.; Olivieri, I.; Pedrocchi, A. Design and validation of two embodied mirroring setups for interactive games with autistic children using the NAO humanoid robot. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 1641–1644.

- Chevalier, P.; Isableu, B.; Martin, J.C.; Tapus, A. Individuals with autism: Analysis of the first interaction with NAO robot based on their proprioceptive and kinematic profiles. In Advances in Robot Design and Intelligent Control; Springer: Berlin/Heidelberg, Germany, 2016; pp. 225–233.

- Lytridis, C.; Vrochidou, E.; Chatzistamatis, S.; Kaburlasos, V. Social engagement interaction games between children with Autism and humanoid robot NAO. In Proceedings of the 13th International Conference on Soft Computing Models in Industrial and Environmental Applications, San Sebastian, Spain, 6–8 June 2018; pp. 562–570.

- English, B.A.; Coates, A.; Howard, A. Recognition of Gestural Behaviors Expressed by Humanoid Robotic Platforms for Teaching Affect Recognition to Children with Autism-A Healthy Subjects Pilot Study. In Proceedings of the International Conference on Social Robotics, Tsukuba, Japan, 22–24 November 2017; pp. 567–576.

- Qidwai, U.; Kashem, S.B.A.; Conor, O. Humanoid Robot as a Teacher’s Assistant: Helping Children with Autism to Learn Social and Academic Skills. J. Intell. Robot. Syst. 2019, 1–12.

- Shamsuddin, S.; Yussof, H.; Ismail, L.; Hanapiah, F.A.; Mohamed, S.; Piah, H.A.; Zahari, N.I. Initial response of autistic children in human-robot interaction therapy with humanoid robot NAO. In Proceedings of the 2012 IEEE 8th International Colloquium on Signal Processing and its Applications, Malacca, Malaysia, 23–25 March 2012; pp. 188–193.

- Tapus, A.; Peca, A.; Aly, A.; Pop, C.; Jisa, L.; Pintea, S.; Rusu, A.S.; David, D.O. Children with autism social engagement in interaction with Nao, an imitative robot: A series of single case experiments. Interact. Stud. 2012, 13, 315–347.

- Petric, F.; Miklic, D.; Kovacic, Z. Robot-assisted autism spectrum disorder diagnostics using POMDPs. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 369–370.

- Mavadati, S.M.; Feng, H.; Salvador, M.; Silver, S.; Gutierrez, A.; Mahoor, M.H. Robot-based therapeutic protocol for training children with Autism. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 855–860.

- Yang, X.; Shyu, M.L.; Yu, H.Q.; Sun, S.M.; Yin, N.S.; Chen, W. Integrating Image and Textual Information in Human–Robot Interactions for Children With Autism Spectrum Disorder. IEEE Trans. Multimed. 2018, 21, 746–759.

- Azuar, D.; Gallud, G.; Escalona, F.; Gomez-Donoso, F.; Cazorla, M. A Story-Telling Social Robot with Emotion Recognition Capabilities for the Intellectually Challenged. In Proceedings of the Iberian Robotics Conference, Porto, Portugal, 20–22 November 2019; pp. 599–609.

- Burkhardt, F.; Saponja, M.; Sessner, J.; Weiss, B. How should Pepper sound-Preliminary investigations on robot vocalizations. Stud. Zur Sprachkommun. Elektron. Sprachsignalverarbeitung 2019, 2019, 103–110.

- Nunez, E.; Matsuda, S.; Hirokawa, M.; Suzuki, K. Humanoid robot assisted training for facial expressions recognition based on affective feedback. In Proceedings of the International Conference on Social Robotics, Paris, France, 26–30 October 2015; pp. 492–501.

- Yabuki, K.; Sumi, K. Learning Support System for Effectively Conversing with Individuals with Autism Using a Humanoid Robot. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 4266–4270.

- Wood, L.J.; Zaraki, A.; Walters, M.L.; Novanda, O.; Robins, B.; Dautenhahn, K. The iterative development of the humanoid robot KASPAR: An assistive robot for children with autism. In Proceedings of the International Conference on Social Robotics, Tsukuba, Japan, 22–24 November 2017; pp. 53–63.

- Wainer, J.; Robins, B.; Amirabdollahian, F.; Dautenhahn, K. Using the humanoid robot KASPAR to autonomously play triadic games and facilitate collaborative play among children with autism. IEEE Trans. Auton. Ment. Dev. 2014, 6, 183–199.

- Wainer, J.; Dautenhahn, K.; Robins, B.; Amirabdollahian, F. A pilot study with a novel setup for collaborative play of the humanoid robot KASPAR with children with autism. Int. J. Soc. Robot. 2014, 6, 45–65.

- Wood, L.J.; Zaraki, A.; Robins, B.; Dautenhahn, K. Developing KASPAR: A humanoid robot for children with autism. Int. J. Soc. Robot. 2019, 1–18.

- Huijnen, C.A.; Lexis, M.A.; de Witte, L.P. Matching robot KASPAR to autism spectrum disorder (ASD) therapy and educational goals. Int. J. Soc. Robot. 2016, 8, 445–455.

- Dautenhahn, K.; Nehaniv, C.L.; Walters, M.L.; Robins, B.; Kose-Bagci, H.; Mirza, N.A.; Blow, M. KASPAR—A minimally expressive humanoid robot for human–robot interaction research. Appl. Bionics Biomech. 2009, 6, 369–397.

- Robins, B.; Dautenhahn, K.; Dickerson, P. From isolation to communication: A case study evaluation of robot assisted play for children with autism with a minimally expressive humanoid robot. In Proceedings of the 2009 Second International Conferences on Advances in Computer-Human Interactions, Cancun, Mexico, 1–7 February 2009; pp. 205–211.

- Kozima, H.; Michalowski, M.P.; Nakagawa, C. Keepon. Int. J. Soc. Robot. 2009, 1, 3–18.

- Kozima, H.; Nakagawa, C.; Yasuda, Y. Interactive robots for communication-care: A case-study in autism therapy. In Proceedings of the ROMAN 2005 IEEE International Workshop on Robot and Human Interactive Communication, Nashville, TN, USA, 13–15 August 2005; pp. 341–346.

- Azmin, A.F.; Shamsuddin, S.; Yussof, H. HRI observation with My Keepon robot using Kansei Engineering approach. In Proceedings of the 2016 2nd IEEE International Symposium on Robotics and Manufacturing Automation (ROMA), Ipoh, Malaysia, 25–27 September 2016.

- Dunst, C.J.; Trivette, C.M.; Hamby, D.W.; Prior, J.; Derryberry, G. Effects of Child-Robot Interactions on the Vocalization Production of Young Children with Disabilities. Social Robots. Research Reports, Number 4; Orelena Hawks Puckett Institute: Asheville, NC, USA, 2013.

- Woodyard, A.H.; Guleksen, E.P.; Lindsay, R.O. PABI: Developing a New Robotic Platform for Autism Therapy; Technical Report; Worcester Polytechnic Institute: Worcester, MA, USA, 2015.

- Brown, A.S. Face to Face with Autism. Mech. Eng. Mag. Sel. Artic. 2018, 140, 35–39.

- Dickstein-Fischer, L.A.; Pereira, R.H.; Gandomi, K.Y.; Fathima, A.T.; Fischer, G.S. Interactive tracking for robot-assisted autism therapy. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 107–108.

- Kim, E.S.; Berkovits, L.D.; Bernier, E.P.; Leyzberg, D.; Shic, F.; Paul, R.; Scassellati, B. Social robots as embedded reinforcers of social behavior in children with autism. J. Autism Dev. Disord. 2013, 43, 1038–1049.

- Kim, E.S.; Paul, R.; Shic, F.; Scassellati, B. Bridging the research gap: Making HRI useful to individuals with autism. J. Hum.-Robot Interact. 2012, 1, 26–54.

- Larriba, F.; Raya, C.; Angulo, C.; Albo-Canals, J.; Díaz, M.; Boldú, R. Externalising moods and psychological states in a cloud based system to enhance a pet-robot and child’s interaction. Biomed. Eng. Online 2016, 15, 72.

- Curtis, A.; Shim, J.; Gargas, E.; Srinivasan, A.; Howard, A.M. Dance dance pleo: Developing a low-cost learning robotic dance therapy aid. In Proceedings of the 10th International Conference on Interaction Design and Children, Ann Arbor, MI, USA, 20–23 June 2011; pp. 149–152.

- Dautenhahn, K.; Billard, A. Games children with autism can play with Robota, a humanoid robotic doll. In Universal Access and Assistive Technology; Springer: Berlin/Heidelberg, Germany, 2002; pp. 179–190.

- Billard, A.; Robins, B.; Dautenhahn, K.; Nadel, J. Building Robota, a mini-humanoid robot for the rehabilitation of children with autism. RESNA Assist. Technol. J. 2006, 19, 37–49.

- Billard, A. Robota: Clever toy and educational tool. Robot. Auton. Syst. 2003, 42, 259–269.

- Robins, B.; Dautenhahn, K.; Te Boekhorst, R.; Billard, A. Effects of repeated exposure to a humanoid robot on children with autism. In Designing a More Inclusive World; Springer: Berlin/Heidelberg, Germany, 2004; pp. 225–236.

- Robins, B.; Dickerson, P.; Stribling, P.; Dautenhahn, K. Robot-mediated joint attention in children with autism: A case study in robot-human interaction. Interact. Stud. 2004, 5, 161–198.

- Watanabe, K.; Yoneda, Y. The world’s smallest biped humanoid robot “i-Sobot”. In Proceedings of the 2009 IEEE Workshop on Advanced Robotics and its Social Impacts, Tokyo, Japan, 23–25 November 2009; pp. 51–53.

- Kaur, M.; Gifford, T.; Marsh, K.L.; Bhat, A. Effect of robot–child interactions on bilateral coordination skills of typically developing children and a child with autism spectrum disorder: A preliminary study. J. Mot. Learn. Dev. 2013, 1, 31–37.

- Srinivasan, S.M.; Lynch, K.A.; Bubela, D.J.; Gifford, T.D.; Bhat, A.N. Effect of interactions between a child and a robot on the imitation and praxis performance of typically developing children and a child with autism: A preliminary study. Percept. Mot. Ski. 2013, 116, 885–904.

- Duquette, A.; Michaud, F.; Mercier, H. Exploring the use of a mobile robot as an imitation agent with children with low-functioning autism. Auton. Robot. 2008, 24, 147–157.

- Pradel, G.; Dansart, P.; Puret, A.; Barthélemy, C. Generating interactions in autistic spectrum disorders by means of a mobile robot. In Proceedings of the IECON 2010—36th Annual Conference on IEEE Industrial Electronics Society, Glendale, AZ, USA, 7–10 November 2010; pp. 1540–1545.

- Giannopulu, I.; Pradel, G. Multimodal interactions in free game play of children with autism and a mobile toy robot. NeuroRehabilitation 2010, 27, 305–311.

- Ravindra, P.; De Silva, S.; Tadano, K.; Saito, A.; Lambacher, S.G.; Higashi, M. Therapeutic-assisted robot for children with autism. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 3561–3567.

- Dautenhahn, K. Socially intelligent robots: Dimensions of human–robot interaction. Philos. Trans. R. Soc. B Biol. Sci. 2007, 362, 679–704.

- Lee, J.; Takehashi, H.; Nagai, C.; Obinata, G.; Stefanov, D. Which robot features can stimulate better responses from children with autism in robot-assisted therapy? Int. J. Adv. Robot. Syst. 2012, 9, 72.

- Lathan, C.; Boser, K.; Safos, C.; Frentz, C.; Powers, K. Using Cosmo’s learning system (CLS) with children with autism. In Proceedings of the International Conference on Technology-Based Learning with Disabilities, Edinburgh, UK, 15–17 August 2007; pp. 37–47.

- Tzafestas, S. Sociorobot Field Studies. In Sociorobot World; Springer: Berlin/Heidelberg, Germany, 2016; pp. 175–202.

- Askari, F.; Feng, H.; Sweeny, T.D.; Mahoor, M.H. A Pilot Study on Facial Expression Recognition Ability of Autistic Children Using Ryan, A Rear-Projected Humanoid Robot. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing and Tai’an, China, 27–31 August 2018; pp. 790–795.

- Askari, F. Studying Facial Expression Recognition and Imitation Ability of Children with Autism Spectrum Disorder in Interaction with a Social Robot; Technical Report; University of Denver: Denver, CO, USA, 2018.

- Mollahosseini, A.; Abdollahi, H.; Mahoor, M.H. Studying Effects of Incorporating Automated Affect Perception with Spoken Dialog in Social Robots. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing and Tai’an, China, 27–31 August 2018; pp. 783–789.

- Ishikawa, T. Passive Driver Gaze Tracking with Active Appearance Models; Technical Report; Robotics Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2004.

- Valenti, R.; Sebe, N.; Gevers, T. Combining head pose and eye location information for gaze estimation. IEEE Trans. Image Process. 2011, 21, 802–815.

- Baluja, S.; Pomerleau, D. Non-intrusive gaze tracking using artificial neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 28 November–1 December 1994; pp. 753–760.

- Sewell, W.; Komogortsev, O. Real-time eye gaze tracking with an unmodified commodity webcam employing a neural network. In Proceedings of the CHI’10 Extended Abstracts on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 3739–3744.

- Krafka, K.; Khosla, A.; Kellnhofer, P.; Kannan, H.; Bhandarkar, S.; Matusik, W.; Torralba, A. Eye Tracking for Everyone. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Sims, S.D.; Putnam, V.; Conati, C. Predicting Confusion from Eye-Tracking Data with Recurrent Neural Networks. arXiv 2019, arXiv:1906.11211.

- Damm, O.; Malchus, K.; Jaecks, P.; Krach, S.; Paulus, F.; Naber, M.; Jansen, A.; Kamp-Becker, I.; Einhaeuser-Treyer, W.; Stenneken, P.; et al. Different gaze behavior in human-robot interaction in Asperger’s syndrome: An eye-tracking study. In Proceedings of the 2013 IEEE RO-MAN, Gyeongju, Korea, 26–29 August 2013; pp. 368–369.

- Yoshikawa, Y.; Kumazaki, H.; Matsumoto, Y.; Miyao, M.; Kikuchi, M.; Ishiguro, H. Relaxing Gaze Aversion of Adolescents with Autism Spectrum Disorder in Consecutive Conversations with Human and Android Robot—A Preliminary Study. Front. Psychiatry 2019, 10, 370.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Turk, M.; Pentland, A. Eigenfaces for recognition. J. Cogn. Neurosci. 1991, 3, 71–86.

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face recognition with local binary patterns. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 469–481.

- Sun, Y.; Liang, D.; Wang, X.; Tang, X. DeepID3: Face recognition with very deep neural networks. arXiv 2015, arXiv:1502.00873.

- Masi, I.; Wu, Y.; Hassner, T.; Natarajan, P. Deep face recognition: A survey. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 471–478.

- Yucel, M.K.; Bilge, Y.C.; Oguz, O.; Ikizler-Cinbis, N.; Duygulu, P.; Cinbis, R.G. Wildest faces: Face detection and recognition in violent settings. arXiv 2018, arXiv:1805.07566.

- Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Pramerdorfer, C.; Kampel, M. Facial expression recognition using convolutional neural networks: State of the art. arXiv 2016, arXiv:1612.02903.

- Kahou, S.E.; Bouthillier, X.; Lamblin, P.; Gulcehre, C.; Michalski, V.; Konda, K.; Jean, S.; Froumenty, P.; Dauphin, Y.; Boulanger-Lewandowski, N.; et al. EmoNets: Multimodal deep learning approaches for emotion recognition in video. J. Multimodal User Interfaces 2016, 10, 99–111.

- Ismail, L.; Shamsuddin, S.; Yussof, H.; Hashim, H.; Bahari, S.; Jaafar, A.; Zahari, I. Face detection technique of Humanoid Robot NAO for application in robotic assistive therapy. In Proceedings of the 2011 IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, 25–27 November 2011; pp. 517–521.

- Juang, B.H.; Rabiner, L.R. Hidden Markov models for speech recognition. Technometrics 1991, 33, 251–272.

- Jelinek, F. Statistical Methods for Speech Recognition; MIT Press: Cambridge, MA, USA, 1997.

- Amodei, D.; Ananthanarayanan, S.; Anubhai, R.; Bai, J.; Battenberg, E.; Case, C.; Casper, J.; Catanzaro, B.; Cheng, Q.; Chen, G.; et al. Deep Speech 2: End-to-end speech recognition in English and Mandarin. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 173–182.

- Xiong, W.; Wu, L.; Alleva, F.; Droppo, J.; Huang, X.; Stolcke, A. The Microsoft 2017 conversational speech recognition system. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5934–5938.

- Chan, W.; Jaitly, N.; Le, Q.; Vinyals, O. Listen, attend and spell: A neural network for large vocabulary conversational speech recognition. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 4960–4964.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).