AGV Localization System Based on Ultra-Wideband and Vision Guidance

Abstract

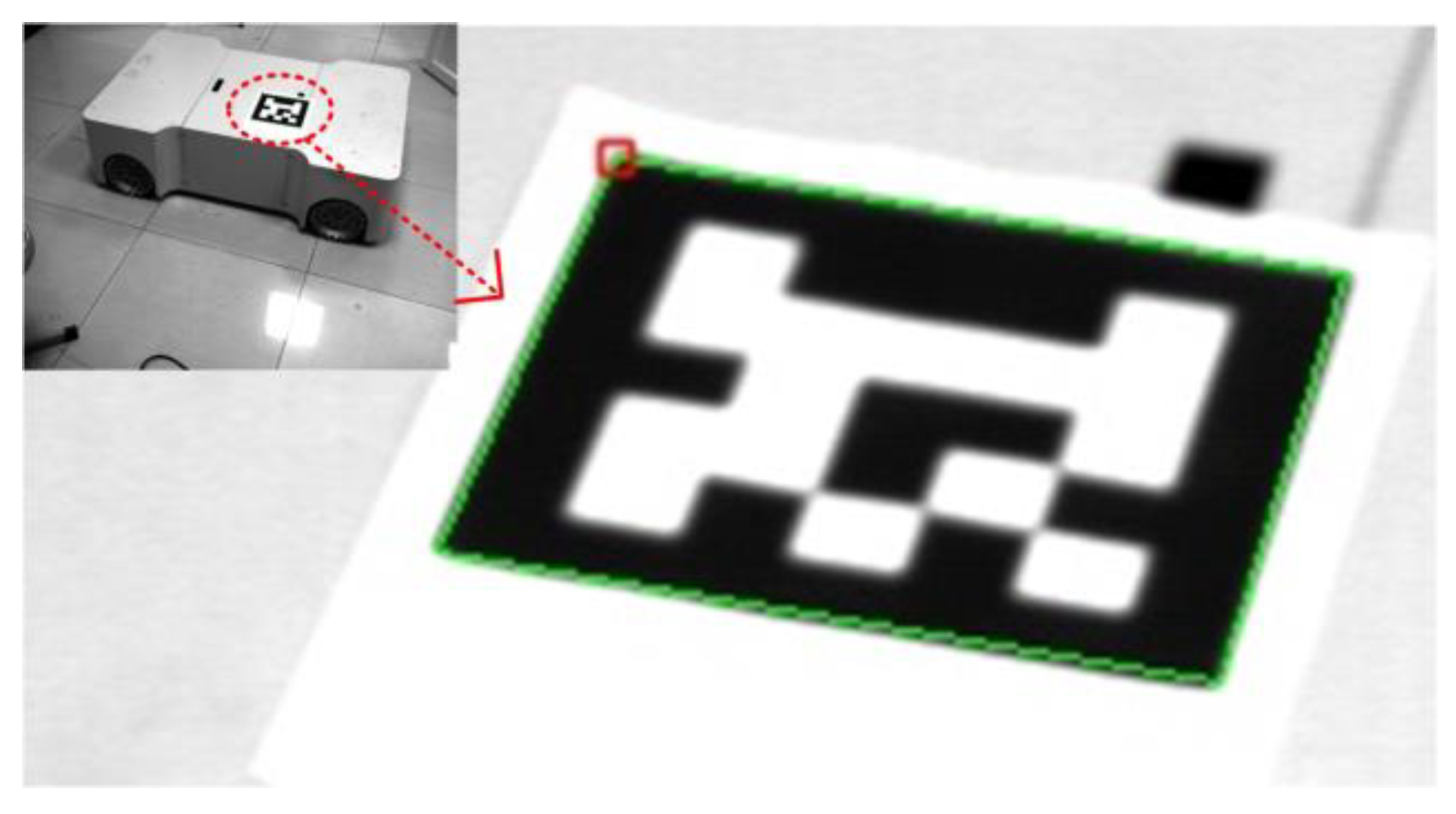

1. Introduction

2. AGV Localization Technology

2.1. Ultra-Wideband Localization

2.1.1. Time of Flight (TOF) Ranging Algorithm
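Double-sided two-way ranging (DS-TWR) is a common way to obtain the time of flight between a UWB tag and an anchor. The sketch below implements the asymmetric DS-TWR estimate. It is an assumption that the system uses exactly this variant, and the function names and reply delays are hypothetical. If T is the true time of flight, then t_round1 = 2T + t_reply1 and t_round2 = 2T + t_reply2. Substituting these into the formula returns T exactly.

```python
# Speed of light in vacuum, m/s (propagation in air is treated as identical here).
SPEED_OF_LIGHT = 299_792_458.0


def ds_twr_tof(t_round1: float, t_reply1: float,
               t_round2: float, t_reply2: float) -> float:
    """Time of flight (s) from asymmetric double-sided two-way ranging.

    t_round1: initiator's time from sending the poll to receiving the response
    t_reply1: responder's delay between receiving the poll and sending the response
    t_round2: responder's time from sending the response to receiving the final message
    t_reply2: initiator's delay between receiving the response and sending the final message
    """
    numerator = t_round1 * t_round2 - t_reply1 * t_reply2
    denominator = t_round1 + t_round2 + t_reply1 + t_reply2
    return numerator / denominator


def ds_twr_distance(t_round1: float, t_reply1: float,
                    t_round2: float, t_reply2: float) -> float:
    """Tag-to-anchor distance in metres."""
    return SPEED_OF_LIGHT * ds_twr_tof(t_round1, t_reply1, t_round2, t_reply2)


# Synthetic exchange: true distance 10 m, made-up (unequal) reply delays.
tof = 10.0 / SPEED_OF_LIGHT
t_reply1, t_reply2 = 200e-6, 300e-6
t_round1 = 2 * tof + t_reply1
t_round2 = 2 * tof + t_reply2
print(ds_twr_distance(t_round1, t_reply1, t_round2, t_reply2))  # close to 10.0 m
```

The double-sided form is much less sensitive than single-sided two-way ranging to the frequency offset between the two devices' clocks. The asymmetric form also does not require the two reply delays to be equal.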

2.1.2. The Trilateral Centroid Localization Algorithm
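The following is a minimal sketch of a trilateral centroid estimate. It assumes three anchors with known 2-D positions and one range per anchor. Each pair of range circles is intersected, and the intersection that agrees best with the third anchor's range is kept. The three kept points are then averaged. The selection rule, the anchor layout in cm and all names are illustrative assumptions, not the authors' exact procedure.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def circle_intersections(c1: Point, r1: float, c2: Point, r2: float) -> List[Point]:
    """Intersection points of two range circles.

    If noisy ranges make the circles miss each other, a single fallback point on
    the line through the two centres (at signed distance `a` from c1) is returned.
    """
    (x1, y1), (x2, y2) = c1, c2
    dx, dy = x2 - x1, y2 - y1
    d = math.hypot(dx, dy)
    if d == 0.0:
        raise ValueError("anchors must not coincide")
    a = (r1 ** 2 - r2 ** 2 + d ** 2) / (2 * d)  # distance from c1 to the chord midpoint
    h_sq = r1 ** 2 - a ** 2
    xm, ym = x1 + a * dx / d, y1 + a * dy / d
    if h_sq <= 0.0:
        return [(xm, ym)]
    h = math.sqrt(h_sq)
    return [(xm + h * dy / d, ym - h * dx / d),
            (xm - h * dy / d, ym + h * dx / d)]


def trilateral_centroid(anchors: Sequence[Point], ranges: Sequence[float]) -> Point:
    """Centroid of the three pairwise intersections that best match the remaining range."""
    if len(anchors) != 3 or len(ranges) != 3:
        raise ValueError("exactly three anchors and three ranges are required")
    chosen = []
    for i, j, k in ((0, 1, 2), (1, 2, 0), (0, 2, 1)):
        candidates = circle_intersections(anchors[i], ranges[i], anchors[j], ranges[j])
        # Keep the candidate whose distance to anchor k best matches range k.
        best = min(candidates, key=lambda p: abs(math.dist(p, anchors[k]) - ranges[k]))
        chosen.append(best)
    return (sum(p[0] for p in chosen) / 3, sum(p[1] for p in chosen) / 3)


# Hypothetical anchor layout (cm) and exact ranges to the point (240, 240).
ANCHORS = [(0.0, 0.0), (600.0, 0.0), (0.0, 600.0)]
true_xy = (240.0, 240.0)
exact = [math.dist(true_xy, a) for a in ANCHORS]
print(trilateral_centroid(ANCHORS, exact))  # approximately (240.0, 240.0)
```

When the ranges are exact, all three kept points coincide with the true position. When the ranges are noisy, averaging the three points spreads the ranging error over all anchor pairs.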

2.2. Monocular Visual Localization

2.2.1. Coordinate System Conversion Relationship
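The conversion chain from world to camera to pixel coordinates can be sketched for an ideal pinhole camera with no lens distortion. The rotation R, translation t and intrinsics fx, fy, cx, cy below are illustrative values, not calibration results from this work. The principal point is simply placed at the centre of a 1280 × 1024 image.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(p_w: Vec3, R: Sequence[Sequence[float]], t: Vec3) -> Vec3:
    """Rigid transform X_c = R X_w + t (R is a 3x3 rotation, t a translation)."""
    return tuple(sum(R[i][j] * p_w[j] for j in range(3)) + t[i] for i in range(3))


def camera_to_pixel(p_c: Vec3, fx: float, fy: float,
                    cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection (no lens distortion) onto the pixel grid."""
    x, y, z = p_c
    if z <= 0:
        raise ValueError("point is behind the camera")
    return fx * x / z + cx, fy * y / z + cy


def world_to_pixel(p_w: Vec3, R, t: Vec3, fx: float, fy: float,
                   cx: float, cy: float) -> Tuple[float, float]:
    """World point -> camera frame -> pixel coordinates (u, v)."""
    return camera_to_pixel(world_to_camera(p_w, R, t), fx, fy, cx, cy)


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Illustrative intrinsics: principal point at the centre of a 1280 x 1024 image.
print(world_to_pixel((100.0, 50.0, 0.0), IDENTITY, (0.0, 0.0, 1000.0),
                     fx=800.0, fy=800.0, cx=640.0, cy=512.0))  # → (720.0, 552.0)
```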

2.2.2. Corner Recognition and PnP Algorithm
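For a planar target such as a printed marker, the pose can be recovered from four detected corners. One approach is to fit the plane-to-image homography and then decompose it using the camera intrinsics. The plain-Python sketch below does exactly that. It is a stand-in for illustration, not necessarily the PnP solver used in this work, and the target size, intrinsics and corner pixels are hypothetical. With noisy corners, the recovered R is only approximately orthonormal. A practical system would re-orthonormalize it or call a library solver such as OpenCV's `cv2.solvePnP`.

```python
import math
from typing import List


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_4pt(obj_xy, img_uv) -> List[List[float]]:
    """Plane-to-image homography (h33 fixed to 1) from four corner correspondences."""
    a, b = [], []
    for (X, Y), (u, v) in zip(obj_xy, img_uv):
        a.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y]); b.append(u)
        a.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y]); b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def pose_from_homography(H, fx, fy, cx, cy):
    """Recover R, t from H ~ K [r1 r2 t] for a target lying on the Z = 0 plane."""
    # Apply K^-1 to each column of H.
    cols = [[(H[0][j] - cx * H[2][j]) / fx, (H[1][j] - cy * H[2][j]) / fy, H[2][j]]
            for j in range(3)]
    lam = 1.0 / math.sqrt(sum(c * c for c in cols[0]))
    if cols[2][2] * lam < 0:  # choose the sign that puts the target in front (t_z > 0)
        lam = -lam
    r1 = [lam * c for c in cols[0]]
    r2 = [lam * c for c in cols[1]]
    t = [lam * c for c in cols[2]]
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]
    R = [[r1[i], r2[i], r3[i]] for i in range(3)]
    return R, t


# Hypothetical example: a 100 mm square marker, fx = fy = 800 px, principal point (640, 512).
CORNERS_MM = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
CORNERS_PX = [(560.0, 432.0), (720.0, 432.0), (720.0, 592.0), (560.0, 592.0)]
H = homography_4pt(CORNERS_MM, CORNERS_PX)
R, t = pose_from_homography(H, 800.0, 800.0, 640.0, 512.0)
print([round(v, 6) for v in t])  # → [-50.0, -50.0, 500.0]
```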

2.3. Complementary Fusion of Localization Methods
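The following rule is offered purely as a hypothetical illustration of complementary fusion, not as the authors' scheme. Each source's position fix is weighted by the inverse of its error variance, and the system falls back to UWB alone whenever no visual fix is available. The standard deviations in the example are assumptions, loosely based on the average dynamic errors reported in the experiments (about 94 mm for UWB and 13 mm for the camera).

```python
from typing import Optional, Tuple

XY = Tuple[float, float]


def fuse_position(uwb_xy: XY, uwb_sigma: float,
                  cam_xy: Optional[XY] = None,
                  cam_sigma: Optional[float] = None) -> XY:
    """Inverse-variance weighted (x, y); falls back to UWB when no visual fix exists."""
    if cam_xy is None or cam_sigma is None:
        return uwb_xy
    w_uwb = 1.0 / uwb_sigma ** 2
    w_cam = 1.0 / cam_sigma ** 2
    return tuple((w_uwb * u + w_cam * c) / (w_uwb + w_cam)
                 for u, c in zip(uwb_xy, cam_xy))


# Hypothetical fixes in mm; assumed sigmas of 94.4 mm (UWB) and 12.9 mm (camera).
print(fuse_position((1000.0, 2000.0), 94.4, (1050.0, 2010.0), 12.9))  # camera dominates
print(fuse_position((1000.0, 2000.0), 94.4))                           # UWB only
```

Because the assumed camera variance is far smaller, the fused fix lies close to the camera estimate whenever a marker is visible. UWB still provides a continuous, if coarser, position fix elsewhere.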

3. Localization Experiment Results and Analysis

3.1. Experimental Scheme Design

3.2. Static Localization Experiment

3.3. Dynamic Localization Experiment

3.4. Effect of Camera Resolution on Experimental Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


Each cell gives the measured position (x, y) and its localization error, i.e., the Euclidean distance from the true grid point. All values are in cm.

| Y/cm | X = 120 | X = 240 | X = 360 | X = 480 | X = 600 | X = 720 |
|---|---|---|---|---|---|---|
| 120 | (117.6, 112.1) = 8.25651 | (242.0, 127.4) = 7.66551 | (362.1, 127.1) = 7.40405 | (477.3, 113.1) = 7.40945 | (597.9, 127.4) = 7.69220 | (717.3, 128.5) = 8.91852 |
| 240 | (122.2, 247.6) = 7.91201 | (241.8, 246.8) = 7.03420 | (361.8, 246.6) = 6.84105 | (481.6, 233.8) = 6.40312 | (601.9, 247.0) = 7.25328 | (717.6, 231.8) = 8.54400 |
| 360 | (121.8, 367.3) = 7.51864 | (241.7, 366.6) = 6.81542 | (361.6, 365.8) = 6.07289 | (481.6, 354.4) = 5.82409 | (601.8, 366.8) = 7.03420 | (717.8, 367.5) = 7.81601 |
| 480 | (122.1, 472.1) = 8.17435 | (241.7, 472.9) = 7.30068 | (361.7, 486.0) = 6.23618 | (478.0, 473.9) = 6.41950 | (601.9, 486.9) = 7.15681 | (721.9, 487.8) = 8.02808 |
| 600 | (122.6, 608.1) = 8.50706 | (242.2, 607.2) = 7.52861 | (362.0, 606.4) = 6.70522 | (482.0, 593.6) = 6.70522 | (602.2, 607.4) = 7.72010 | (717.4, 608.3) = 8.69770 |

| Y/cm | X = 120 | X = 240 | X = 360 | X = 480 | X = 600 | X = 720 |
|---|---|---|---|---|---|---|
| 120 | (119.57, 120.14) = 0.45222 | (239.35, 120.13) = 0.66287 | (359.29, 120.23) = 0.66287 | (479.28, 120.26) = 0.76551 | (599.36, 120.14) = 0.75961 | (720.44, 120.13) = 0.45880 |
| 240 | (119.37, 240.17) = 0.65253 | (239.34, 239.86) = 0.67469 | (359.18, 240.27) = 0.86331 | (479.16, 240.29) = 0.88865 | (599.32, 240.18) = 0.70342 | (720.61, 240.18) = 0.63600 |
| 360 | (119.25, 360.24) = 0.78746 | (239.13, 360.27) = 0.91093 | (361.39, 360.36) = 1.43586 | (478.73, 360.34) = 1.31472 | (599.09, 360.25) = 0.94372 | (720.78, 360.23) = 0.81320 |
| 480 | (119.38, 480.18) = 0.64560 | (239.29, 480.14) = 0.72367 | (360.84, 480.24) = 0.87361 | (480.83, 480.27) = 0.87281 | (599.23, 480.18) = 0.79076 | (719.38, 480.17) = 0.64288 |
| 600 | (119.58, 600.14) = 0.44272 | (239.36, 600.14) = 0.65513 | (360.71, 600.24) = 0.74947 | (480.71, 600.27) = 0.75961 | (599.38, 600.14) = 0.63561 | (720.43, 600.16) = 0.45880 |
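The error values in the two static tables match the straight-line (Euclidean) distance between each measured position and its true grid point. The short sketch below recomputes a few cells, copied from the tables, to show the calculation. The function name is illustrative.

```python
import math


def localization_error(measured, true):
    """Euclidean distance between a measured and a true (x, y) position, same units."""
    return math.hypot(measured[0] - true[0], measured[1] - true[1])


# (true grid point, measured position, reported error), all in cm, copied from the tables.
SAMPLES = [
    ((120.0, 120.0), (117.6, 112.1), 8.25651),
    ((240.0, 240.0), (241.8, 246.8), 7.03420),
    ((120.0, 120.0), (119.57, 120.14), 0.45222),
    ((240.0, 240.0), (239.34, 239.86), 0.67469),
]

for true_xy, measured_xy, reported in SAMPLES:
    computed = localization_error(measured_xy, true_xy)
    print(f"{true_xy} -> {measured_xy}: computed {computed:.5f} cm, reported {reported:.5f} cm")
```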

| Trial | Monocular Camera Localization Error/mm | UWB Localization Error/mm |
|---|---|---|
| 1 | 12.233 | 96.384 |
| 2 | 14.264 | 94.207 |
| 3 | 13.747 | 97.395 |
| 4 | 12.573 | 94.343 |
| 5 | 12.837 | 91.374 |
| 6 | 10.468 | 95.442 |
| 7 | 12.759 | 89.485 |
| 8 | 14.377 | 94.873 |
| 9 | 12.439 | 94.244 |
| 10 | 12.876 | 96.382 |

| Trial | Localization Error on Blocked Road Section/mm |
|---|---|
| 1 | 47.436 |
| 2 | 51.357 |
| 3 | 53.583 |
| 4 | 49.437 |
| 5 | 50.422 |
| 6 | 44.382 |
| 7 | 62.593 |
| 8 | 45.873 |
| 9 | 47.795 |
| 10 | 48.376 |

| Camera Resolution/pixels | Average Static Localization Error at (240 cm, 240 cm)/cm | Dynamic Localization Error on Normal Road Section/mm | Dynamic Localization Error on Blocked Road Section/mm |
|---|---|---|---|
| 1280 × 1024 | 0.675 | 12.857 | 50.125 |
| 640 × 480 | 1.572 | 19.374 | 66.581 |
| 320 × 240 | 3.351 | 45.718 | 89.743 |
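The 1280 × 1024 row above is consistent with the ten-trial tables. Averaging the per-trial errors gives about 12.857 mm for the monocular camera on the normal road section and about 50.125 mm on the blocked road section. The sketch below performs that arithmetic and also averages the UWB column, which comes to roughly 94.41 mm. The variable names are illustrative.

```python
from statistics import mean

# Per-trial dynamic localization errors (mm), copied from the ten-trial tables.
camera_normal = [12.233, 14.264, 13.747, 12.573, 12.837,
                 10.468, 12.759, 14.377, 12.439, 12.876]
uwb_normal = [96.384, 94.207, 97.395, 94.343, 91.374,
              95.442, 89.485, 94.873, 94.244, 96.382]
blocked_section = [47.436, 51.357, 53.583, 49.437, 50.422,
                   44.382, 62.593, 45.873, 47.795, 48.376]

for name, errors in (("monocular camera, normal section", camera_normal),
                     ("UWB, normal section", uwb_normal),
                     ("blocked section", blocked_section)):
    print(f"{name}: mean {mean(errors):.3f} mm, max {max(errors):.3f} mm")
```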

| Method | Path Flexibility | Technical Difficulty | Precision | Cost |
|---|---|---|---|---|
| Method in this article | Good | Moderate | High | Moderate |
| LiDAR SLAM | Good | High | High | High |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hu, X.; Luo, Z.; Jiang, W. AGV Localization System Based on Ultra-Wideband and Vision Guidance. Electronics 2020, 9, 448. https://doi.org/10.3390/electronics9030448
