OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception

Abstract

:1. Introduction

- We introduce OLIMP (https://sites.google.com/view/ihsen-alouani), A HeterOgeneous MuLtimodal Dataset for Advanced EnvIronMent Perception a new heterogeneous dataset collected using a camera, a UWB radar, a narrowband radar and a microphone.

- We present an exhaustive overview of the available public environment perception databases.

- We propose a new fusion framework that combines data acquired from the different sensors used in our dataset to achieve better performances for obstcle detection task. This fusion framework highlights the potential improvement that could be acquired by the community using our dataset.

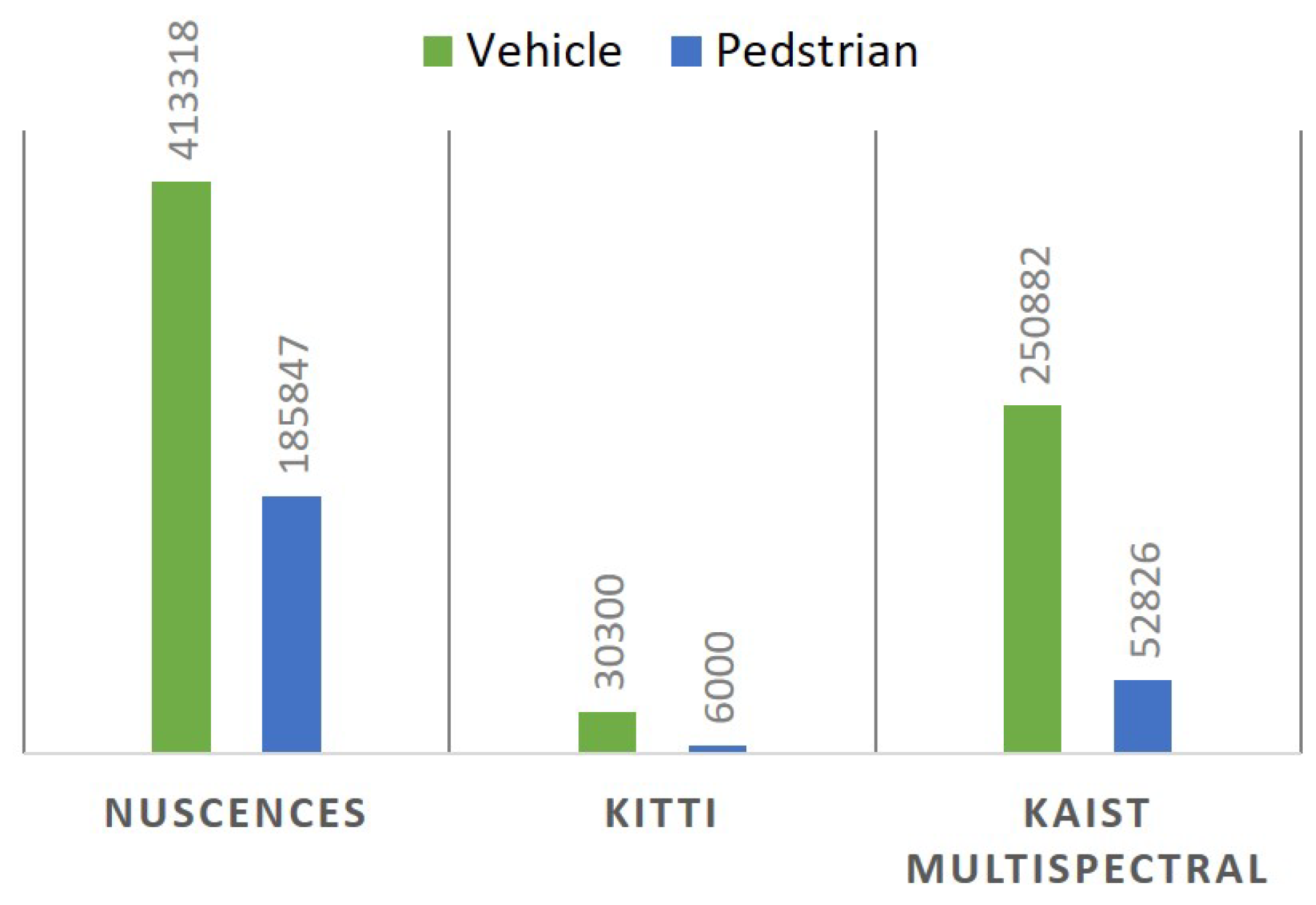

2. Existing Public Multimodal Environment Perception Databases

3. Multi-modal Environment Perception Related Work

- RGB images and lidar point clouds fusion:

- Visible and thermal images fusion:

- Narrow-band radar data and RGB images fusion:

4. Proposed Dataset

4.1. Context

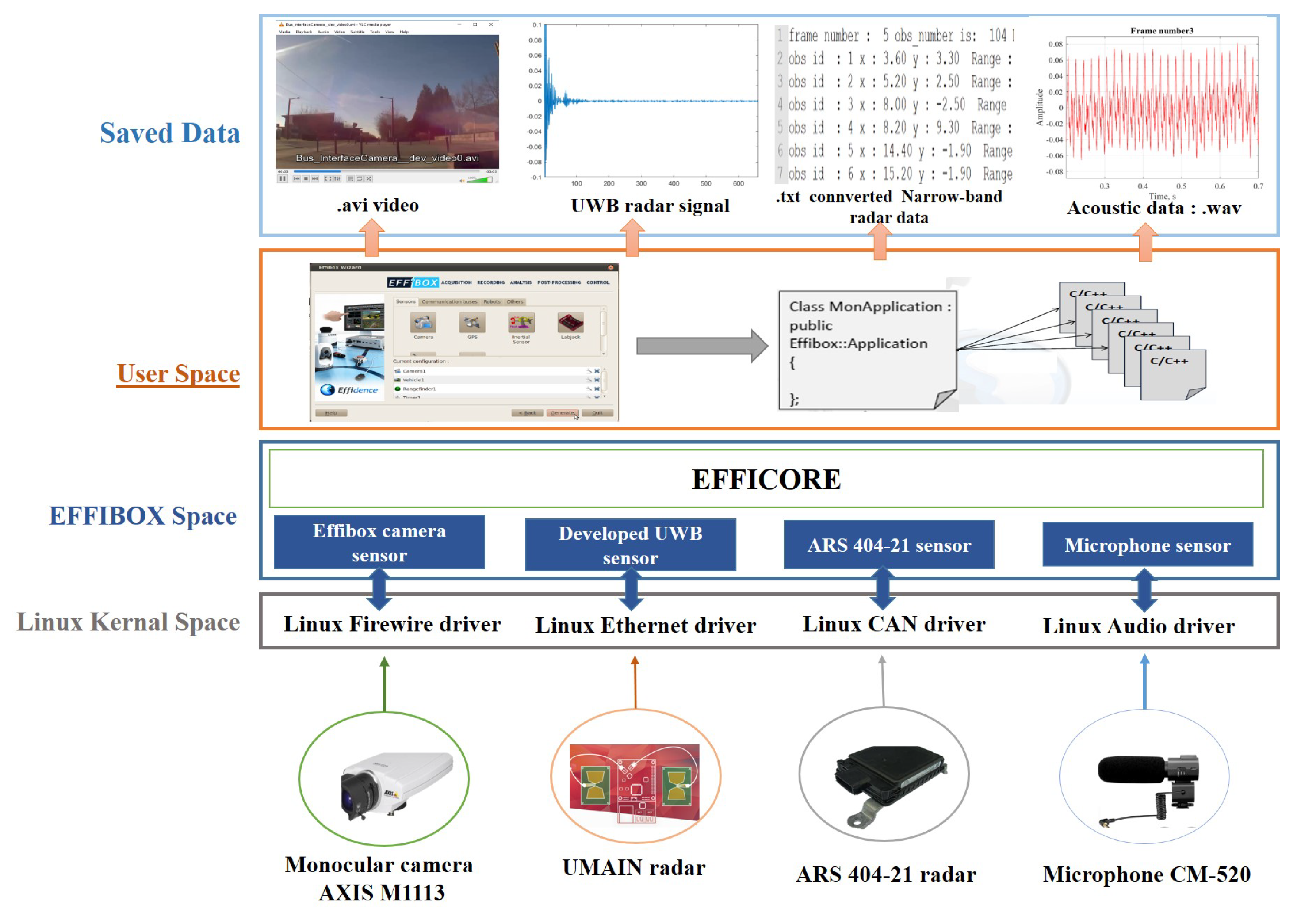

4.2. Hardware and Data Acquisition

- UMAIN radar: it is an UWB radar. The exploited kit is called HST-D3 developed by the UMAIN corporation [53]. The kit comprises a UWB short radar with a Rasperby Pi 3 for the acquisition. Following this, the received radar raw data are transmitted to the computer through the Raspberry Pi that is connected via TCP/IP protocol.

- Narrow band radar (ARS 404-21): This Premium sensor from Continental is a long range radar that is able to detect multiple obstacles up to 250 meters. It genertaes raw data that include: distance, velocity and radar cross section RCS [54]. Data are transmitted to the EFFIBOX platform via CAN bus.

- The EFFIBOX platform: it is a software developed in (C/C++) dedicated to the design of multi-sensor embedded applications. In addition, diverse adequate development functionalities are available such as: acquiring and saving sensor streams, processing/post-processing, visualization, etc.

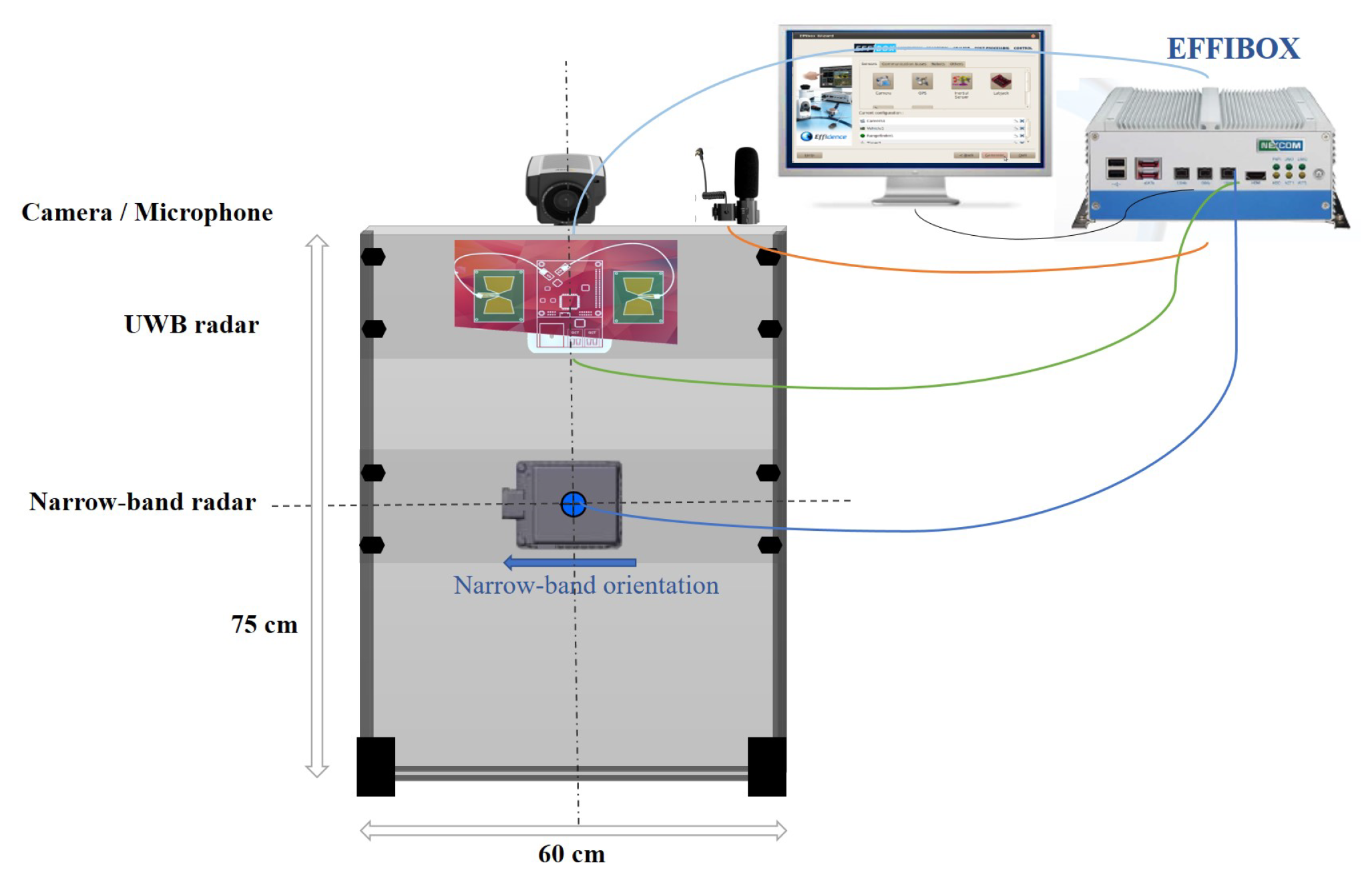

4.3. Sensors Embedding

4.4. Sensor Synchronisation

4.5. Labeling Process

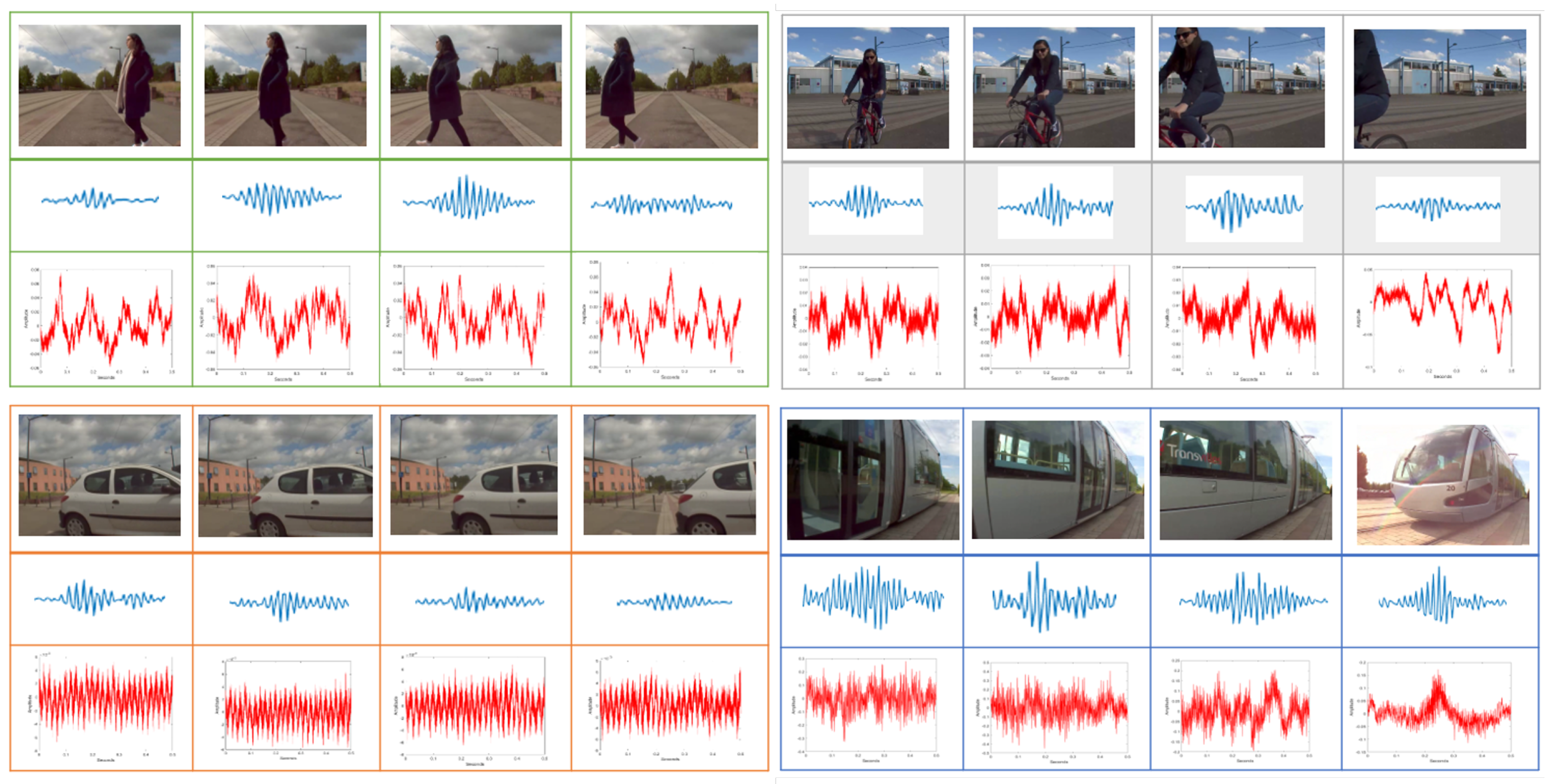

4.6. Scenarios Selection and Data Formats

4.7. Challenges of the Dataset

4.8. Statistics and Dataset Organisation

5. Fusion Framework

5.1. Image-based System

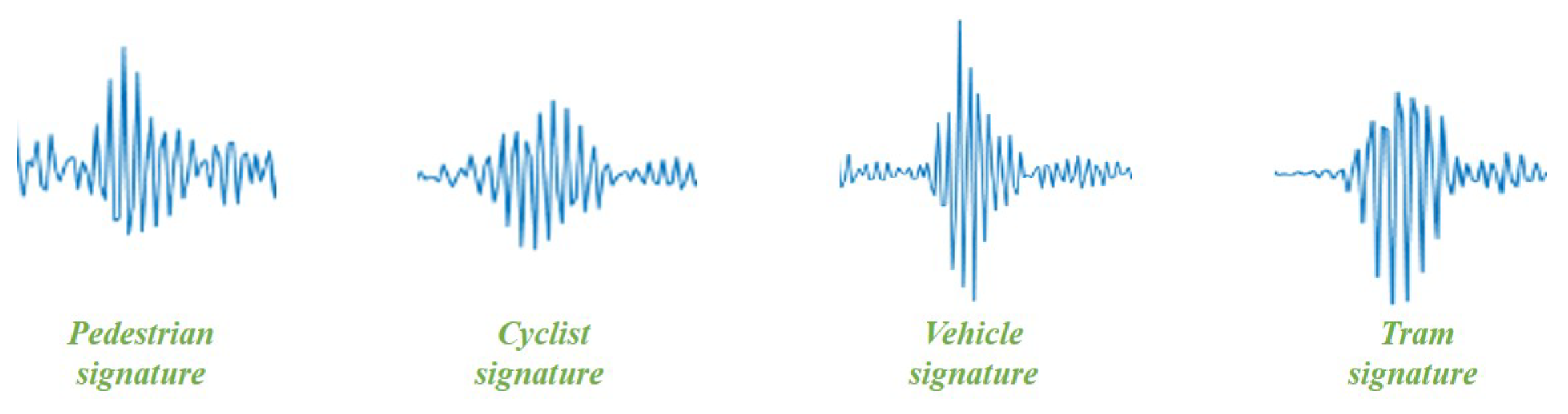

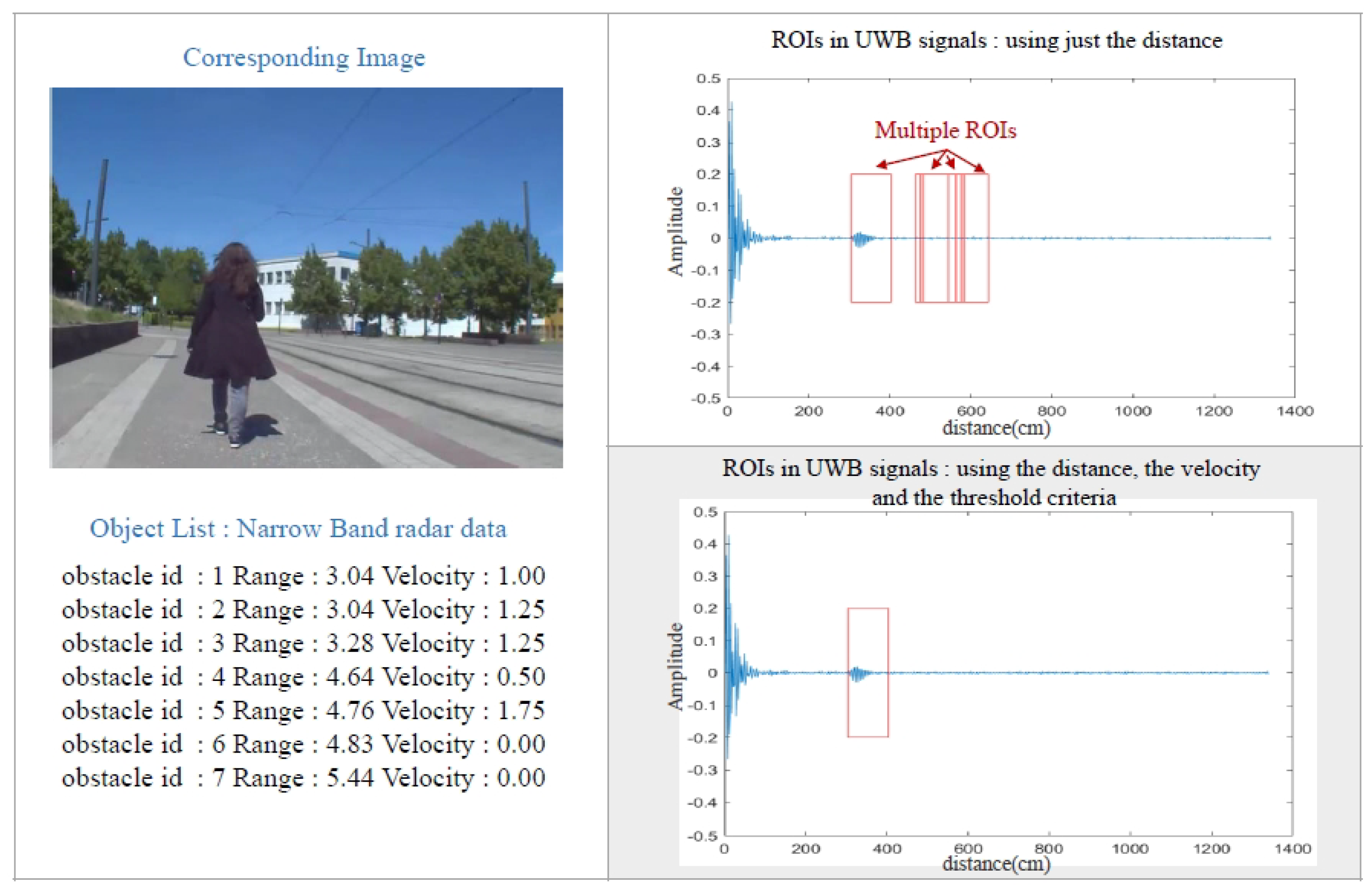

5.2. UWB Radar-based System

5.3. Acoustic-Based System

5.4. Multi-Modalities Fusion System

6. Discussion

7. Conclusions

Author Contributions

Conflicts of Interest

References

- Jegham, I.; Khalifa, A.B.; Alouani, I.; Mahjoub, M.A. MDAD: A Multimodal and Multiview in-Vehicle Driver Action Dataset. Comput. Anal. Images Patterns 2019, 518–529. [Google Scholar]

- Jegham, I.; Khalifa, A.B.; Alouani, I.; Mahjoub, M.A. Safe driving: Driver action recognition using SURF keypoints. In Proceedings of the International Conference on Microelectronics (ICM), Sousse, Tunisia, 16–19 December 2018; pp. 60–63. [Google Scholar]

- Dang, L.M.; Piran, M.; Han, D.; Min, K.; Moon, H. A survey on internet of things and cloud computing for healthcare. Electronics 2019, 8, 768. [Google Scholar] [CrossRef] [Green Version]

- Fridman, L.; Brown, D.E.; Glazer, M.; Angell, W.; Dodd, S.; Jenik, B.; Terwilliger, J.; Patsekin, A.; Kindelsberger, J.; Ding, L.; et al. MIT advanced vehicle technology study: Large-scale naturalistic driving study of driver behavior and interaction with automation. IEEE Access 2019, 8, 102021–102038. [Google Scholar] [CrossRef]

- Xue, J.R.; Fang, J.W.; Zhang, P. A survey of scene understanding by event reasoning in autonomous driving. Int. J. Autom. Comput. 2018, 15, 249–266. [Google Scholar] [CrossRef]

- Femmam, S.; M’Sirdi, N.; Ouahabi, A. Perception and characterization of materials using signal processing techniques. IEEE Trans. Instrum. Meas. 2001, 50, 1203–1211. [Google Scholar] [CrossRef]

- Khalifa, A.B.; Alouani, I.; Mahjoub, M.A.; Amara, N.E.B. Pedestrian detection using a moving camera: A novel framework for foreground detection. Cogn. Syst. Res. 2020, 60, 77–96. [Google Scholar] [CrossRef]

- Jegham, I.; Khalifa, A.B. Pedestrian detection in poor weather conditions using moving camera. In Proceedings of the IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Hammamet, Tunisia, 30 October–3 November 2017; pp. 358–362. [Google Scholar]

- Chebli, K.; Khalifa, A.B. Pedestrian detection based on background compensation with block-matching algorithm. In Proceedings of the 15th International Multi-Conference on Systems, Signals & Devices (SSD), Hammamet, Tunisia, 19–22 March 2018; pp. 497–501. [Google Scholar]

- Sarkar, S.; Mohan, B. Review on Autonomous Vehicle Challenges. First Int. Conf. Artif. Intell. Cogn. Comput. 2019, 815, 593–603. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar]

- Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.S.; An, K.; Kweon, I.S. KAIST multi-spectral day/night data set for autonomous and assisted driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948. [Google Scholar] [CrossRef]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. arXiv 2019, arXiv:1903.11027. [Google Scholar]

- Feng, D.; Haase-Schuetz, C.; Rosenbaum, L.; Hertlein, H.; Duffhauss, F.; Glaeser, C.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 1–20. [Google Scholar] [CrossRef] [Green Version]

- Ziebinski, A.; Cupek, R.; Erdogan, H.; Waechter, S. A survey of ADAS technologies for the future perspective of sensor fusion. In Proceedings of the International Conference on Computational Collective Intelligence Sithonia, Halkidiki, Greece, 28–30 September 2016; pp. 135–146. [Google Scholar]

- Kang, Y.; Yin, H.; Berger, C. Test Your Self-Driving Algorithm: An Overview of Publicly Available Driving Datasets and Virtual Testing Environments. IEEE Trans. Intell. Veh. 2019, 4, 2379–8858. [Google Scholar] [CrossRef]

- Guo, J.; Kurup, U.; Shah, M. Is It Safe to Drive? An Overview of Factors, Metrics, and Datasets for Driveability Assessment in Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2019. [Google Scholar] [CrossRef]

- Brostow, G.; Shotton, J.; Fauqueur, J.; Cipolla, R. Segmentation and recognition using structure from motion point clouds. In Proceedings of the European conference on computer vision, Marseille Palais, France, 12–18 October 2008; Volume 5302, pp. 44–57. [Google Scholar]

- Yu, F.; Xian, W.; Chen, Y.; Liu, F.; Liao, M.; Madhavan, V.; Darrell, T. Bdd100k: A diverse driving video database with scalable annotation tooling. arXiv 2018, arXiv:1805.04687. [Google Scholar]

- Wang, P.; Huang, X.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The apolloscape open dataset for autonomous driving and its application. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 1. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Abhishek, P.; Srikanth, M.; Haiming, G.; Yi-Ting, C. The H3D Dataset for Full-Surround 3D Multi-Object Detection and Tracking in Crowded Urban Scenes. In Proceedings of the International Conference on Robotics and Automation Montreal Convention Centre, Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Xue, J.; Fang, J.; Li, T.; Zhang, B.; Zhang, P.; Ye, Z.; Dou, J. BLVD: Building A Large-scale 5D Semantics Benchmark for Autonomous Driving. In Proceedings of the International Conference on Robotics and Automation (ICRA) Montreal Convention Centre, Montreal, QC, Canada, 20–24 May 2019; pp. 6685–6691. [Google Scholar]

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 year, 1000 km: The Oxford RobotCar dataset. Int. J. Robot. Res. 2017, 36, 3–15. [Google Scholar] [CrossRef]

- Udacity Self-Driving Car. 2019. Available online: https://github.com/udacity/self-driving-car (accessed on 25 March 2020).

- Chen, Y.; Wang, J.; Li, J.; Lu, C.; Luo, Z.; Xue, H.; Xue, H. Lidar-video driving dataset: Learning driving policies effectively. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5870–5878. [Google Scholar]

- Ferroukhi, M.; Ouahabi, A.; Attari, M.; Habchi, Y.; Taleb-Ahmed, A. Medical video coding based on 2nd-generation wavelets: Performance evaluation. Electronics 2019, 8, 88. [Google Scholar] [CrossRef] [Green Version]

- Barnes, D.; Gadd, M.; Murcutt, P.; Newman, P.; Posner, I. The Oxford Radar RobotCar Dataset: A Radar Extension to the Oxford RobotCar Dataset. arXiv preprint 2019, arXiv:1909.01300. [Google Scholar]

- Jegham, I.; Khalifa, A.B.; Alouani, I.; Mahjoub, M.A. Vision-based human action recognition: An overview and real world challenges. Forensic Sci. Int. Digit. Investig. 2020, 32, 200901. [Google Scholar]

- Arnold, E.; Al-Jarrah, O.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A survey on 3d object detection methods for autonomous driving applications. IEEE Trans. Intell. Transp. Syst. 2019, 10, 3782–3795. [Google Scholar] [CrossRef] [Green Version]

- González, A.; Vázquez, D.; López, A.M.; Amores, J. On-board object detection: Multicue, multimodal, and multiview random forest of local experts. IEEE Trans. Cybern. 2016, 47, 3980–3990. [Google Scholar] [CrossRef]

- Sindagi, V.; Zhou, Y.; Tuzel, O. MVX-Net: Multimodal voxelnet for 3D object detection. In Proceedings of the International Conference on Robotics and Automation (ICRA) Montreal Convention Centre, Montreal, QC, Canada, 20–24 May 2019; pp. 7276–7282. [Google Scholar]

- Bijelic, M.; Mannan, F.; Gruber, T.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing Through Fog Without Seeing Fog: Deep Sensor Fusion in the Absence of Labeled Training Data. arXiv preprint 2019, arXiv:1902.08913. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint 2014, arXiv:1409.1556. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A. Ssd: Single shot multibox detector. In Proceedings of the European conference on computer vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37. [Google Scholar]

- Xu, D.; Anguelov, D.; Jain, A. Pointfusion: Deep sensor fusion for 3d bounding box estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 244–253. [Google Scholar]

- Qi, C.; Liu, W.; Wu, C.; Su, H.; Guibas, L. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927. [Google Scholar]

- Oh, S.I.; Kang, H. Object detection and classification by decision-level fusion for intelligent vehicle systems. Sensors 2017, 17, 207. [Google Scholar] [CrossRef] [Green Version]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. [Google Scholar]

- Khalid, B.; Khan, A.; Akram, M.U.; Batool, S. Person Detection by Fusion of Visible and Thermal Images Using Convolutional Neural Network. In Proceedings of the 2nd International Conference on Communication, Computing and Digital systems (C-CODE), Islamabad Pakistan, 6–7 March 2019; pp. 143–148. [Google Scholar]

- Wagner, J.; Fischer, V.; Herman, M.; Behnke, S. Multispectral Pedestrian Detection using Deep Fusion Convolutional Neural Networks. ESANN 2016. [Google Scholar]

- Li, C.; Song, D.; Tong, R.; Tang, M. Illumination-aware faster R-CNN for robust multispectral pedestrian detection. Pattern Recognit. 2019, 85, 161–171. [Google Scholar] [CrossRef] [Green Version]

- Ren, S.; He, K.; He, K.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 91–99. [Google Scholar] [CrossRef] [Green Version]

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084. [Google Scholar] [CrossRef]

- Bouain, M.; Berdjag, D.; Fakhfakh, N.; Atitallah, R.B. Multi-Sensor Fusion for Obstacle Detection and Recognition: A Belief-Based Approach. In Proceedings of the 21st International Conference on Information Fusion (FUSION), Cambridge, UK, 10–13 July 2018; pp. 1217–1224. [Google Scholar]

- Wang, J.G.; Chen, S.J.; Zhou, L.B.; Wan, K.W.; Yau, W.Y. Vehicle Detection and Width Estimation in Rain by Fusing Radar and Vision. In Proceedings of the 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1063–1068. [Google Scholar]

- Redmon, J.; Divvala, S.; Divvala, S.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 16 June–1 July 2016; pp. 779–788. [Google Scholar]

- Nabati, R.; Qi, H. RRPN: Radar Region Proposal Network for Object Detection in Autonomous Vehicles. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3093–3097. [Google Scholar]

- Chadwick, S.; Maddetn, W.; Newman, P. Distant vehicle detection using radar and vision. In Proceedings of the International Conference on Robotics and Automation (ICRA) Montreal Convention Centre, Montreal, QC, Canada, 20–24 May 2019; pp. 8311–8317. [Google Scholar]

- Guan, D.; Cao, Y.; Yang, J.; Cao, Y.; Yang, M.Y. Fusion of multispectral data through illumination-aware deep neural networks for pedestrian detection. Information Fusion 2019, 50, 148–157. [Google Scholar] [CrossRef] [Green Version]

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. Sens. Data Fusion: Trends, Solut. Appl. (SDF) 2019, 1–7. [Google Scholar]

- The Effidence Organization. 2018. Available online: https://www.effidence.com/ (accessed on 25 March 2020).

- Umain corporation. 2018. Available online: https://www.umain.co.kr/en/ (accessed on 25 March 2020).

- Continental. 2018. Available online: https://www.conti-engineering.com/en-US/Industrial-Sensors/Sensors/ (accessed on 25 March 2020).

- Axis. 2018. Available online: https://www.axis.com/products/axis-m1113 (accessed on 25 March 2020).

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Qin, Z.; Zhang, Z.; Chen, X.; Wang, C.; Peng, Y. Fd-Mobilenet: Improved Mobilenet with a Fast Downsampling Strategy. In Proceedings of the 25th (ICIP), Beijing, China, 17–20 September 2017; pp. 1363–1367. [Google Scholar]

- Serizel, R.; Bisot, V.; Essid, S.; Richard, G. Acoustic features for environmental sound analysis. Comput. Anal. Sound Scenes Events 2018, 71–101. [Google Scholar]

| Dataset | Year | Modalities | Size | Annotation | Varity | Categories | Recording Cities | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2D | 3D | Daytime | Nighttime | Fog | Rain | Snow | ||||||

| CamVid [19] | 2008 | Camera Camera | 4 sequences | × | - | × | - | - | - | - | 32 classes | Cambridge |

| Kitti [11] | 2012 | Lidar Inertial sensors | 22 sequences | × | × | × | - | - | - | 8 classes | Karlsruhe | |

| Cityscapes [12] | 2016 | Camera | - | × | - | × | - | - | - | - | 30 classes | 50 cities |

| BDD100k [20] | 2017 | Camera Camera(stereo) Thermal camera | 100k | × | - | × | × | - | × | × | 10 classes | Four regions in US |

| Kaist Multispectral [13] | 2018 | 3D lidar GNSS Inertial sensors 3 camera(stereo) | - | × | - | × | × | - | - | - | - | Seoul |

| ApolloScape [21] | 2018 | 3D lidar GNSS | - | × | × | × | × | - | - | - | 35 classes | Four regions in China |

| Inertial sensors 3 cameras | ||||||||||||

| H3D [22] | 2019 | Lidar GPS | 160 | - | × | × | - | - | - | - | 8 classes | San Francisco |

| Inertial sensors 3 cameras | ||||||||||||

| BLVD [23] | 2019 | Lidar GPS | - | - | × | × | × | - | - | - | 3 classes | Changshu |

| Inertial sensors 6 cameras | ||||||||||||

| nuScenes [14] | 2019 | Lidar 5 radars camera | 1000 | × | × | × | × | × | × | - | 23 classes | Boston & Singapore |

| OLIMP | 2020 | UWB radar Narrow-band radar Microphone | 407 sequences | × | - | × | - | × | - | × | 4 classes | France |

| Dataset | Autonomous Driving Task | |||||

|---|---|---|---|---|---|---|

| Multi-Object Detection | Object Tracking | Lane Detection | Semantic Segmentation | SLAM | 3D Vision | |

| CamVid [19] | × | × | × | |||

| kitti [11] | × | × | × | × | × | × |

| Cityscapes [12] | × | × | ||||

| BDD100k [20] | × | × | × | × | ||

| Kaist Multispectral [13] | × | × | × | × | ||

| ApolloScape [21] | × | × | ||||

| H3D [22] | × | × | ||||

| BLVD [23] | × | |||||

| nuScenes [14] | × | × | ||||

| OLIMP | × | × | ||||

| Ref | Object Class | Sensing Modalities Processing | Hand Crafted Features | Network Pipeline | Fusion Level | Used Dataset |

|---|---|---|---|---|---|---|

| Lidar and Camera fusion | ||||||

| [31] | Pedestrian | -Depth maps generated from lidar sensor -RGB images | -HOG -LBP | - | Intermediate late | Kitti |

| [32] | 3D car | -Lidar voxel -RGB images are processed via 2D image detector | - | Early Intermediate | kitti | |

| [33] | 2D cars (foggy weather) | -Depth, intensity and the height information acquired from lidar and processed via VGG16 -RGB images are processed via VGG16 | - | Early and intermediate layers | Self-recorded dataset | |

| [36] | Car Pedestrian Cyclist | -Lidar raw data are processded by PointNet -RGB images features are extracted via CNN | - | CNN | Early | Kitti SUN-RGBD |

| Infrared and visible camera fusion | ||||||

| [40] | Persons | -RGB images and thermal images are encoded for early fusion | - | ResNet-152 | Early Intermediate | KAIST-Multispectral |

| [41] | Pedestrian | -RGB images and thermal images are processed via CaffeNet | - | R-CNN | Early Late | KAIST Pedestrian dataset |

| [50] | Pedestrian | -RGB images and thermal images are processed via VGG16 | - | Faster R-CNN | Early Intermediate Late | KAIST Pedestrian dataset |

| Radar and camera fusion | ||||||

| [44] | Vehicles | -Tracks from radar sensor -RGB images | -Symmetry detection algoritm -Active contour detection | - | Intermediate | Real-workd recorded dataset |

| [45] | Pedestrian | -Radar generates a list of tracks -RGB images | ACF object detector | - | Late | Real-workd recorded dataset |

| [46] | Vehicles | -Detections from radar -RGB images | - | Yolo | Late | Self-recorded dataset under rainny weather |

| [48] | Car, Person, Motorcycle, Truck, Bicycle and Bus | -Tracks from rear radars -RGB images from the rear camera | - | Fast R-CNN | Early | Two substes from the nuScenes dataset |

| [49] | 2D Vehicle | -Radar range proceeded by ResNet -RGB images proceeded by ResNet | - | One stage detector | Intermediate | Self recorded dataset |

| [51] | Car, Bus, Motorcyle, Truck, Trailer, Bicyle, Human | -Radar data transformed to an image plane -RGB images | - | VGG | low | nuScences TMU Self recorded dataset |

| Sensor | Sepcification | Measure Latency |

|---|---|---|

| AXISM1113 [55] | -A monocular camera, RGB images, 25 FPS, 640x480 resolution, angle of view: 65–25, 50 Hz | 20 ms |

| UMAIN radar [53] | -≤ to 6 range, provides signals, each obstacle has its own signature, 4 GHz. | 22.5 ms |

| ARS 404-21 [54] | -≤ to 250 range, provides distance; velocity and RCS, 77 GHz, accuracy for far range, accuracy for near range | 72 ms |

| Microphone CM-520 | -≤ to 20 range, +10dB sensitivity, it fits well with video cameras, 50Hz–16Khz for frequency response | Not Applicable |

| P | R | AP | mAP | |

|---|---|---|---|---|

| Pedestrian | 84 | 54 | 53 | |

| Cyclist | 77 | 70 | 67 | 60.5 |

| Vehicle | 81 | 48 | 47 | |

| Tram | 86 | 76 | 75 |

| P | R | |

|---|---|---|

| Pedestrian | 46 | 36 |

| Cyclist | 45 | 52 |

| Vehicle | 8 | 0 |

| Tram | 0 | 0 |

| P | R | |

|---|---|---|

| Pedestrian | 20 | 17 |

| Cyclist | 44 | 15 |

| Vehicle | 40 | 38 |

| Tram | 61 | 64 |

| P | R | AP | mAP | |

|---|---|---|---|---|

| Pedestrian | 86 | 54 | 53 | |

| Cyclist | 81 | 69 | 67 | |

| Vehicle | 82 | 48 | 47 | 60.5 |

| Tram | 90 | 76 | 75 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mimouna, A.; Alouani, I.; Ben Khalifa, A.; El Hillali, Y.; Taleb-Ahmed, A.; Menhaj, A.; Ouahabi, A.; Ben Amara, N.E. OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception. Electronics 2020, 9, 560. https://doi.org/10.3390/electronics9040560

Mimouna A, Alouani I, Ben Khalifa A, El Hillali Y, Taleb-Ahmed A, Menhaj A, Ouahabi A, Ben Amara NE. OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception. Electronics. 2020; 9(4):560. https://doi.org/10.3390/electronics9040560

Chicago/Turabian StyleMimouna, Amira, Ihsen Alouani, Anouar Ben Khalifa, Yassin El Hillali, Abdelmalik Taleb-Ahmed, Atika Menhaj, Abdeldjalil Ouahabi, and Najoua Essoukri Ben Amara. 2020. "OLIMP: A Heterogeneous Multimodal Dataset for Advanced Environment Perception" Electronics 9, no. 4: 560. https://doi.org/10.3390/electronics9040560