Abstract

Optical wireless communications (OWC) are emerging as a cost-effective and practical alternative to congested radio frequency-based wireless technologies. As part of OWC, optical camera communications (OCC) have become very attractive, considering recent developments in cameras and the widespread use of built-in cameras in smart devices. OCC based on both organic and inorganic light sources as well as cameras is being considered, together with visible light communications (VLC), within the framework of the IEEE 802.15.7m standardization for low-rate transmission and localization in indoor as well as short-range outdoor applications. This paper introduces the underlying principles of OCC and gives a comprehensive overview of this emerging technology, including recent standardization activities. It also outlines key technical issues such as mobility, coverage, interference, and performance enhancement. Future research directions and open issues are also presented.

1. Introduction

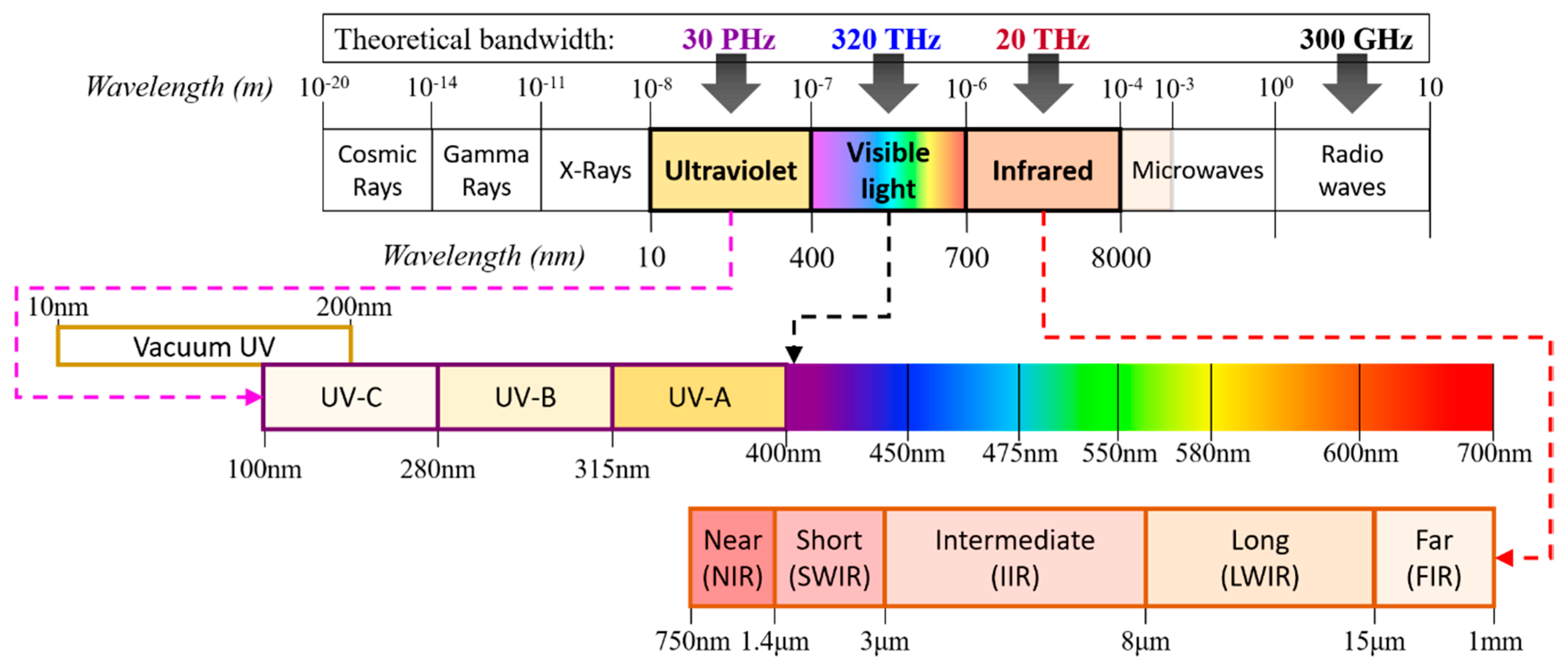

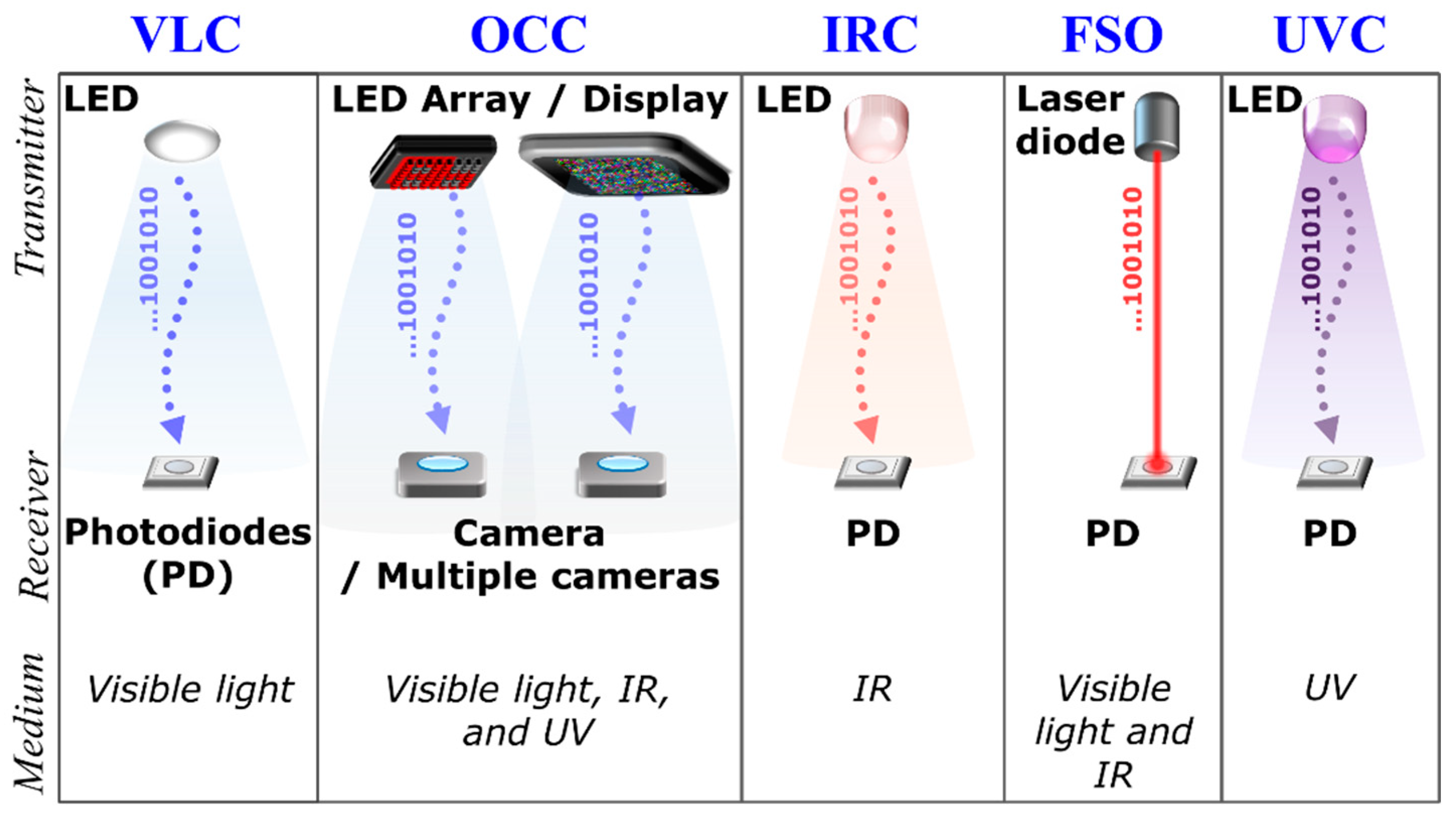

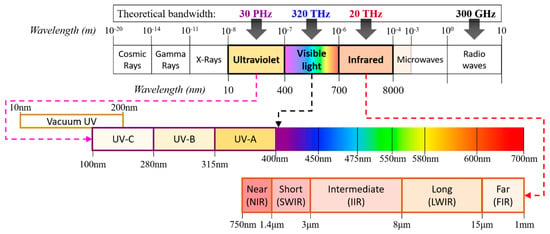

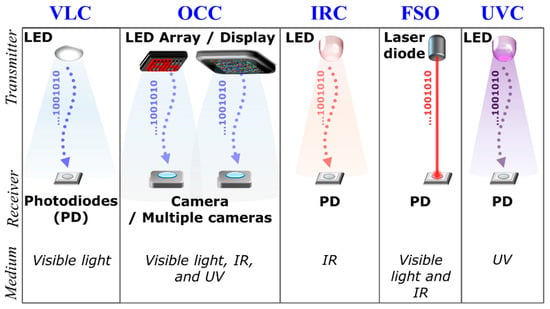

The rapid increase in the data being generated and the growing demand for capacity have pushed existing congested radio frequency (RF)-based wireless communications to their limits. In addition, the spectral efficiency and bandwidth available in the RF domain are not keeping pace with increasing traffic demands and requirements [1]. Looking ahead, high-rate and high-capacity RF communications are expected to coexist with more affordable wireless technologies that offload traffic and offer virtually unlimited bandwidth. As an attractive complementary solution, optical wireless communications (OWC) have been considered for easing the bandwidth pressure on RF wireless systems. OWC covers the entire optical spectrum of ultraviolet (UV), visible and infrared (IR) [1,2], see Figure 1, thus offering a massive theoretical bandwidth of 20 THz (IR), 320 THz (visible) and 30 PHz (UV) compared with the bandwidth of 300 GHz in the RF technology [1,3,4]. Within the OWC umbrella, there are a number of transmission schemes for both indoor and outdoor environments including infrared communications (IRC), free space optics (FSO), visible light communications (VLC) and ultraviolet communications (UVC) [1,3,4,5,6], as shown in Figure 2. VLC and IRC are generally considered for short-range indoor applications, while FSO (using the IR band) and UVC (using the C band) are predominantly employed in medium- to long-range outdoor environments. Note that FSO can also use the visible band for short- to medium-range transmissions.

Figure 1.

The electromagnetic spectrum.

Figure 2.

Overview of optical wireless communications (OWC) transmission schemes.

The VLC technology, a subset of OWC as defined in IEEE 802.15.7, has been envisioned for high-capacity mobile data networks since 2000 to complement RF-based mobile communications [1,7,8,9,10]. The popularity of VLC is driven by its efficient use of the existing light-emitting diode (LED)-based lighting infrastructure as the transmitter (TX), which offers significantly higher luminous efficacy together with data communications and indoor localization [1,9]. In contrast to other lighting sources, LEDs can be switched at a very high speed, thus facilitating data transmission that can be picked up using photodiodes (PDs) or image sensors (ISs).

A VLC system employing an IS, i.e., a camera, is known as optical camera communication (OCC) according to the standards, and it requires no hardware modifications [5,11,12]. The IS in a camera, which is a massive matrix of μ-scale PDs, can be used together with a lens to provide an imaging massive multiple-input multiple-output (MIMO) system, i.e., one that spatially separates the light sources on the IS. In addition, the camera (IS)-based receiver (RX) is capable of capturing information not only from multiple TXs (i.e., LEDs) but also from digital displays such as liquid crystal display (LCD) and organic LED (OLED)-based screens [5,12].

Furthermore, image capturing in OCC extends the communication range in outdoor environments up to a kilometer, owing to the use of image processing, long sampling durations and lenses [5]. Nevertheless, most OCC schemes reported in the literature are intended for indoor applications because of the availability of smart devices and the lower intensity of ambient light in indoor environments [5].

It is important to note that OCC-based systems are mainly targeted at low-rate transmission. The reception sampling rate is determined by the camera frame rate FR, which is typically in the range of 30 to 60 frames per second (fps) at a common resolution of 1920 × 1080 pixels. However, with recent advances in cameras, the FR of new smartphone cameras can reach up to 960 fps at the same resolution [13]. Unlike VLC, which uses a PD to process the received optical intensity, OCC captures the source using the IS, i.e., a two-dimensional (2D) RX containing millions of pixels, and carries out a significant amount of image processing to acquire the information. Therefore, the data transmission rate of OCC is mostly limited to the order of kilobits per second (Kbps) or megabits per second (Mbps), in contrast to the multi-gigabit per second (Gbps) transmission rates of PD-based VLC systems [1,5,11,14].

The IEEE 802.15.7 standard was initially formulated in 2011 for VLC without considering the IS-based RX [1,11]. In 2014, however, OCC was included in the revised IEEE 802.15.7r1 standard [11,15], which was managed by the TG7r1 task group and covered a wider light spectrum, including near infrared (NIR) and near UV [5,11]. OCC standardization is currently handled by the updated task group TG7m, which finalized the initial draft in 2018 [14,16]. The task group is a joint consortium of academic researchers from multiple countries including the United States, South Korea, Turkey, Taiwan and China [16].

Due to the inclusion of nearly the entire optical spectrum in the standardization, there is a wide range of potential applications for OCC within the context of the Internet of things (IoT), such as augmented reality, color code, device-to-device communications, digital signage, exhibition/store service, in-flight service, indoor positioning, vehicular communications and underwater/seaside communications [5,17,18]. At a global level, a number of companies and research institutes including Fraunhofer Heinrich Hertz Institute (Germany), Intel (United States), Panasonic (Japan), Casio (Japan), Huawei Technologies (China), Electronics and Telecommunications Research Institute (Korea), LG Electronics (Korea) and China Telecom (China) have been working on the application of OCC [5].

In recent years, interest in OCC has been growing, as evidenced by four surveys published in the past four years, mostly driven by the early standardization activity (i.e., IEEE 802.15.7r1) [5,11,17,18]. The first survey focused on key technologies used in OCC [11], while the second survey investigated various implementation challenges associated with OCC without a comprehensive structure [17]. The third survey covered a concise performance analysis of OCC intended for inclusion in the fifth generation (5G) wireless networks [5]. Finally, the fourth and most recent survey is a broad survey of OCC covering localization, navigation, motion capture, and some insights into future research directions [18,19]. This survey, however, only introduced several challenges and future research directions in a limited sense.

This paper, on the other hand, presents a comprehensive survey of OCC that focuses heavily on the principles and modulations of OCC to date, with potential applications and future research directions in mind. It also outlines key technologies and standardization as well as the modulation schemes that have been proposed and investigated. The paper aims to support practical implementations of OCC, while disseminating promising OCC technologies and future challenges.

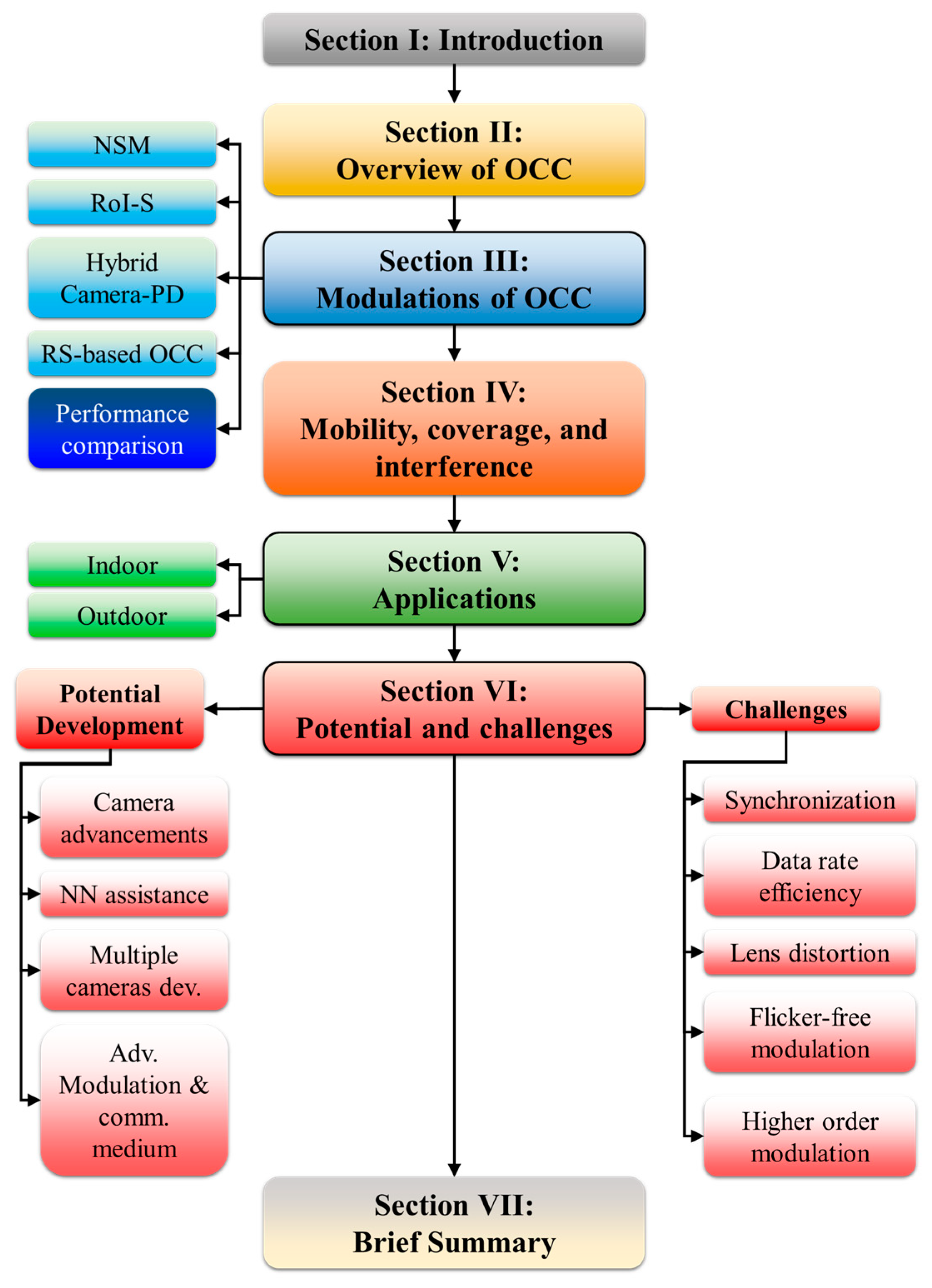

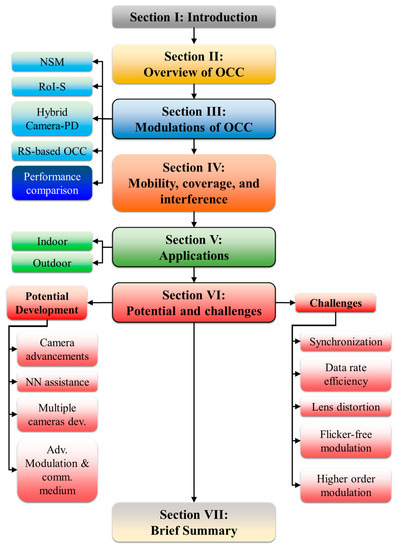

The rest of the paper, as illustrated in Figure 3, is organized as follows. Section 2 describes OCC in detail and Section 3 reviews existing modulations of OCC. Section 4 presents various performance enhancements in terms of mobility, coverage, and interference. Section 5 is dedicated to recent OCC schemes for both indoor and outdoor applications. Section 6 discusses future potential developments, enhancements, and challenges in OCC. Finally, Section 7 closes the paper with a brief summary. In addition, Appendix A shows a list of both abbreviations and acronyms used throughout the paper.

Figure 3.

The paper organization.

2. Optical Camera Communication

2.1. Principles

OCC employs either a single or multiple-camera setup as the RX, which can now be found in most mobile devices such as mobile phones, smart watches, laptops, tablets, as well as vehicles and buildings [2]. Therefore, the existing LED lighting fixtures and widely used cameras, i.e., cheaper and off-the-shelf devices, can be employed in OCC. While the majority of VLC systems are limited to utilizing the visible light spectrum, OCC employing different types of camera can utilize the entire light spectrum from visible light, NIR to UV-C band. In OCC, the camera captures images/video streams of the intensity modulated light sources, i.e., a single LED, multiple LEDs and digital display screens, and extracts the information by means of image processing [17]. Thus, camera-based OCC can be used in both indoor and outdoor environments for a range of applications [11]. The development of complementary metal-oxide semiconductor (CMOS) sensor technologies has created a new generation of high-pixel-resolution and high-speed built-in cameras [11,17]. Although most cameras capture images at a FR of 30 to 60 fps, the OCC standardization covers high-speed cameras, which can support a FR of up to thousands of fps [5,11].

Similar to VLC, OCC predominantly relies on the directional line-of-sight (LOS) transmission mode. In addition, OCC conveniently offers a MIMO capability by extracting the data from multiple captured light sources. For these reasons, OCC can be considered a subset of VLC. It is interesting to note that the imaging MIMO capability of the camera can also be exploited for other applications, such as range estimation, shape detection and scene detection.

2.2. Standardization

OCC has been in the process of standardization since 2014 under IEEE 802.15.7r1, a revision of the IEEE 802.15.7 standard for VLC, managed by the task group TG7r1 [11,20,21]. OCC is thus considered an extension of the VLC standard, which was originally formulated as the IEEE 802.15.7-2011 standard [1]. The task group for OCC was later renamed the IEEE 802.15.7m OWC Task Group (TG7m) in order to develop technical specifications for the OCC standard [14]. TG7m covers the NIR (700–1000 nm wavelengths), UV (100–400 nm wavelengths) and visible light (400–700 nm wavelengths) bands. The TG7m task group has contributed to various OWC solutions including OCC, LED Identification (LED-ID) and LiFi [14]. OCC is alternatively known as IS communications (ISC) or camera-based VLC, since it utilizes an IS as the RX, which provides communications as a supplementary functionality in addition to video streaming and image capturing [22,23,24].

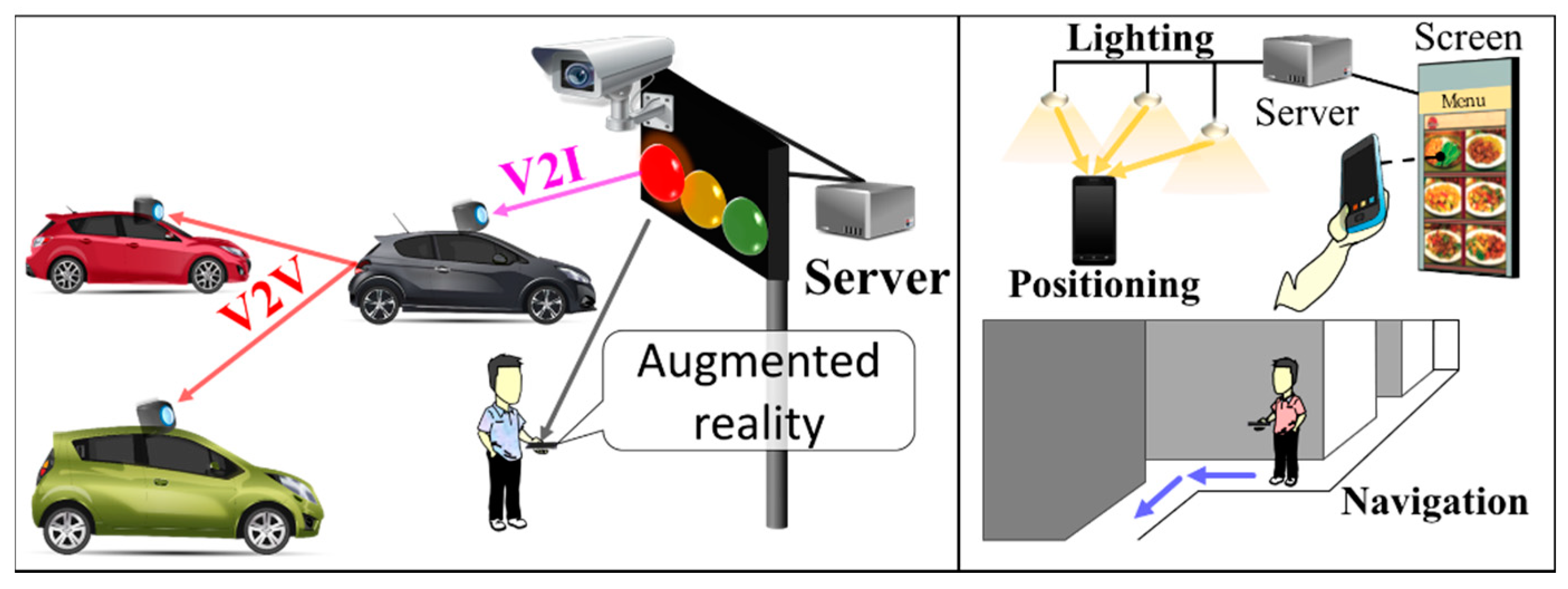

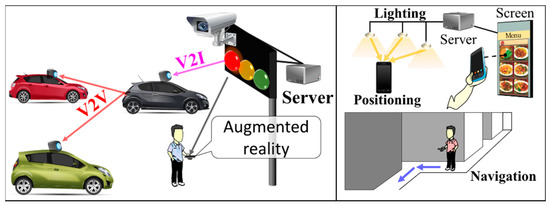

Figure 4 illustrates different examples of OCC considered in TG7m, highlighting vehicular communications (vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I)) and indoor applications [14]. Additional standardized applications were proposed in late 2018 by TG7m, such as 2D color code, TX/RX relay, digital signage, exhibition/store service, in-flight service, underwater (or seaside/coastal) communications, drone-to-drone, smart living, street-lamp access point, smart city, smart factory, and smart house [14,25,26]. These applications are classified into flicker and flicker-free physical (PHY) layer modes, depending on the switching rate of the TX being perceptible or imperceptible to human eyes, respectively.

Figure 4.

Example of promising services in IEEE 802.15.7m.

Regarding modulations, five additional schemes are standardized to deliver optimum performance in OCC. That is, according to the finalized document in 2017, the TG7m Committee approved additional sets of OWC PHY operating modes, i.e., PHY IV to PHY VIII, as shown in Table 1. PHY IV, V, and VI are directly related to OCC developments, while PHY VII and VIII are intended for LiFi. In direct detection VLC systems, the simple on-off keying (OOK) and variable pulse position modulation (VPPM) schemes were adopted in the initial version of the IEEE 802.15.7-2011 standard. Table 2 details the OCC modulation schemes that were introduced in the IEEE 802.15.7m standard for PHY IV, PHY V, and PHY VI. The next revised draft of the standard, known as IEEE 802.15.7-2018, was finalized in 2018 [27,28]. More detailed OCC technologies are presented in Section 3 and Section 4.

Table 1.

IEEE 802.15.7m initial operating modes.

Table 2.

IEEE 802.15.7m: Characteristics and performance estimation of OCC technologies.

2.3. Transceivers

The operation and performance of IS-based OCC systems are affected by both the IS characteristics and the camera’s image processing [17]. In digital cameras, the IS is typically based on the CMOS architecture, which is a mature, highly developed technology that is less costly than the charge-coupled device (CCD) architecture [29]. Note that CMOS-based ISs are becoming increasingly smaller while still offering higher resolution images with high pixel density. Smaller CMOS ISs are made possible by advanced electronics fabrication technology at the 7 nm manufacturing scale [30].

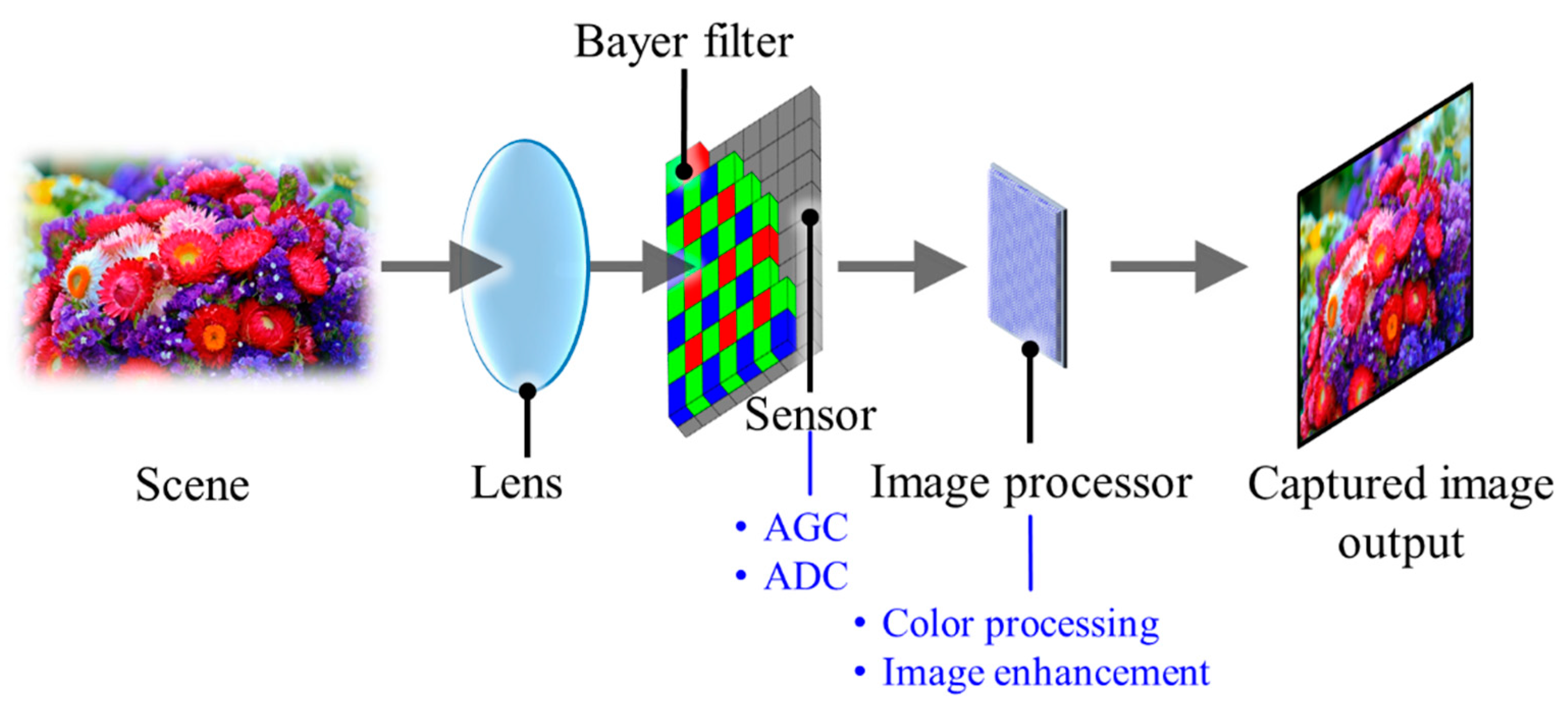

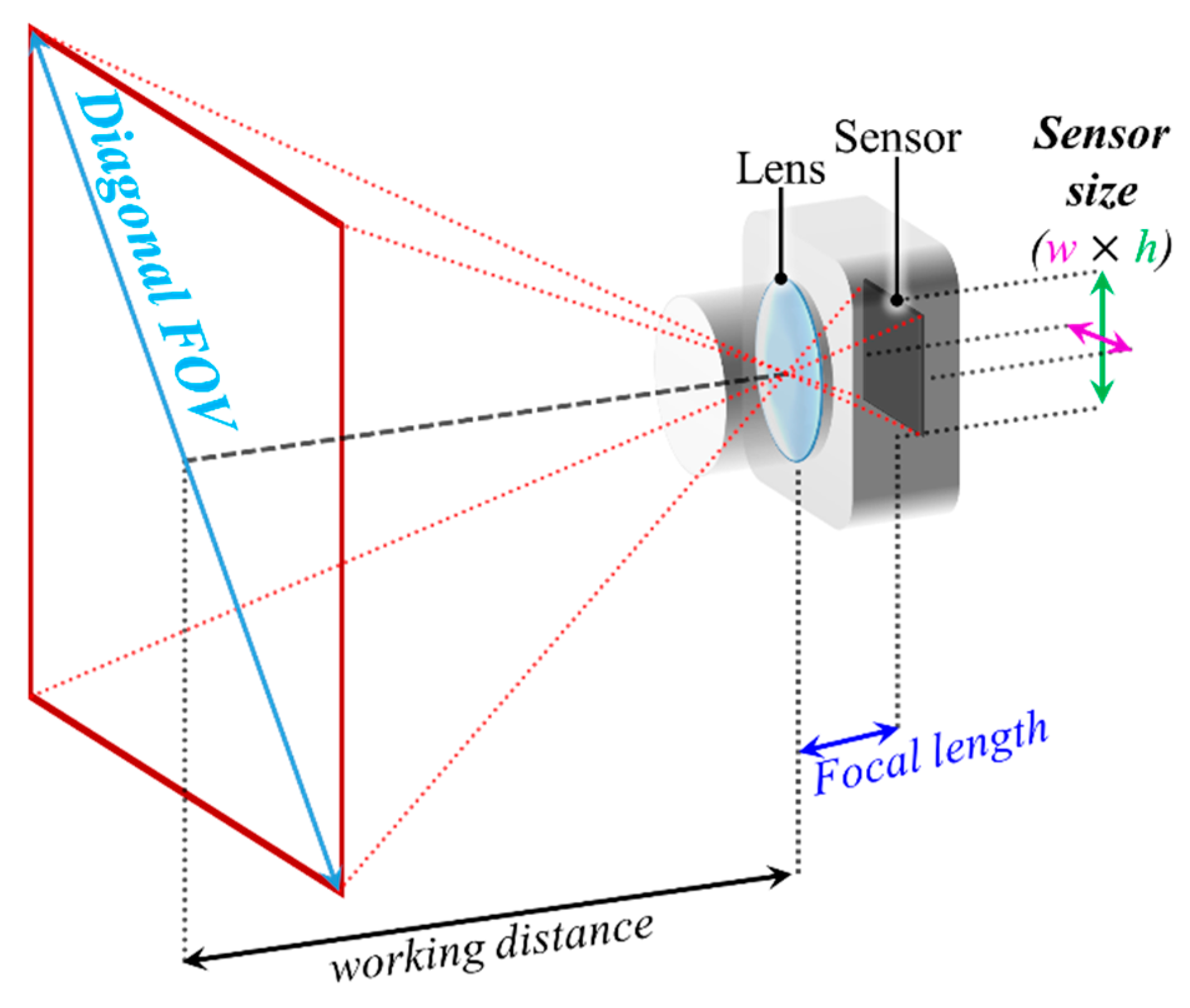

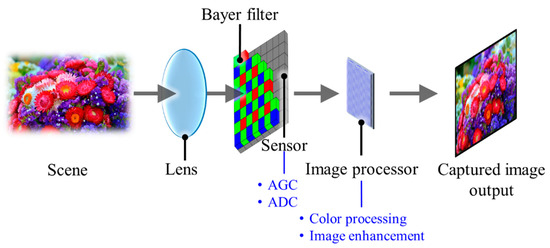

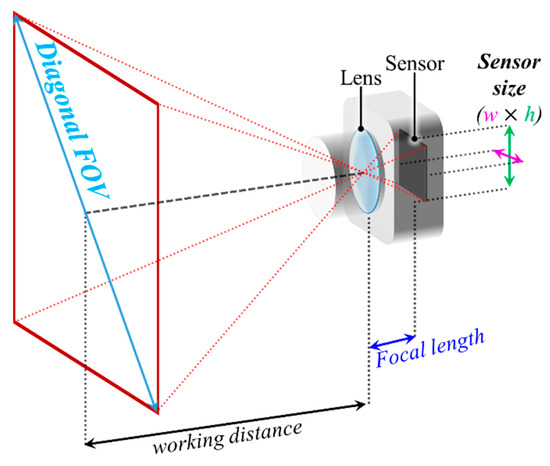

As shown in Figure 5, a typical camera structure is composed of an imaging lens, a color filter array (CFA), an image sensor (IS) and an image processing unit (IPU) [29]. The lens projects the light emitted by a light source or reflected from an object onto the IS. The diagonal field of view (FOV) of the camera is determined by the focal length of the camera lens and the sensor size, as illustrated in Figure 6. In addition, the working distance defines the distance at which the camera captures the image in focus.
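As a minimal sketch of how the focal length and sensor size set the diagonal FOV, the following Python snippet assumes an ideal rectilinear (thin-lens) camera focused at infinity, for which the diagonal FOV equals 2·arctan(d/2f), with d the sensor diagonal and f the focal length. This idealized relation, the function name and the example sensor dimensions are assumptions made for illustration and are not taken from [29].

```python
import math


def diagonal_fov_deg(sensor_width_mm, sensor_height_mm, focal_length_mm):
    """Diagonal FOV in degrees of an ideal rectilinear lens focused at infinity."""
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    diagonal_mm = math.hypot(sensor_width_mm, sensor_height_mm)
    return math.degrees(2.0 * math.atan(diagonal_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # Hypothetical example: a 36 mm x 24 mm sensor behind a 50 mm lens.
    print(f"diagonal FOV: {diagonal_fov_deg(36.0, 24.0, 50.0):.1f} degrees")
```

Under this model, a longer focal length or a smaller sensor narrows the FOV, which is the trade-off Figure 6 depicts.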

Figure 5.

A brief schematic of a digital camera.

Figure 6.

A diagonal field of view (FOV) of a camera.

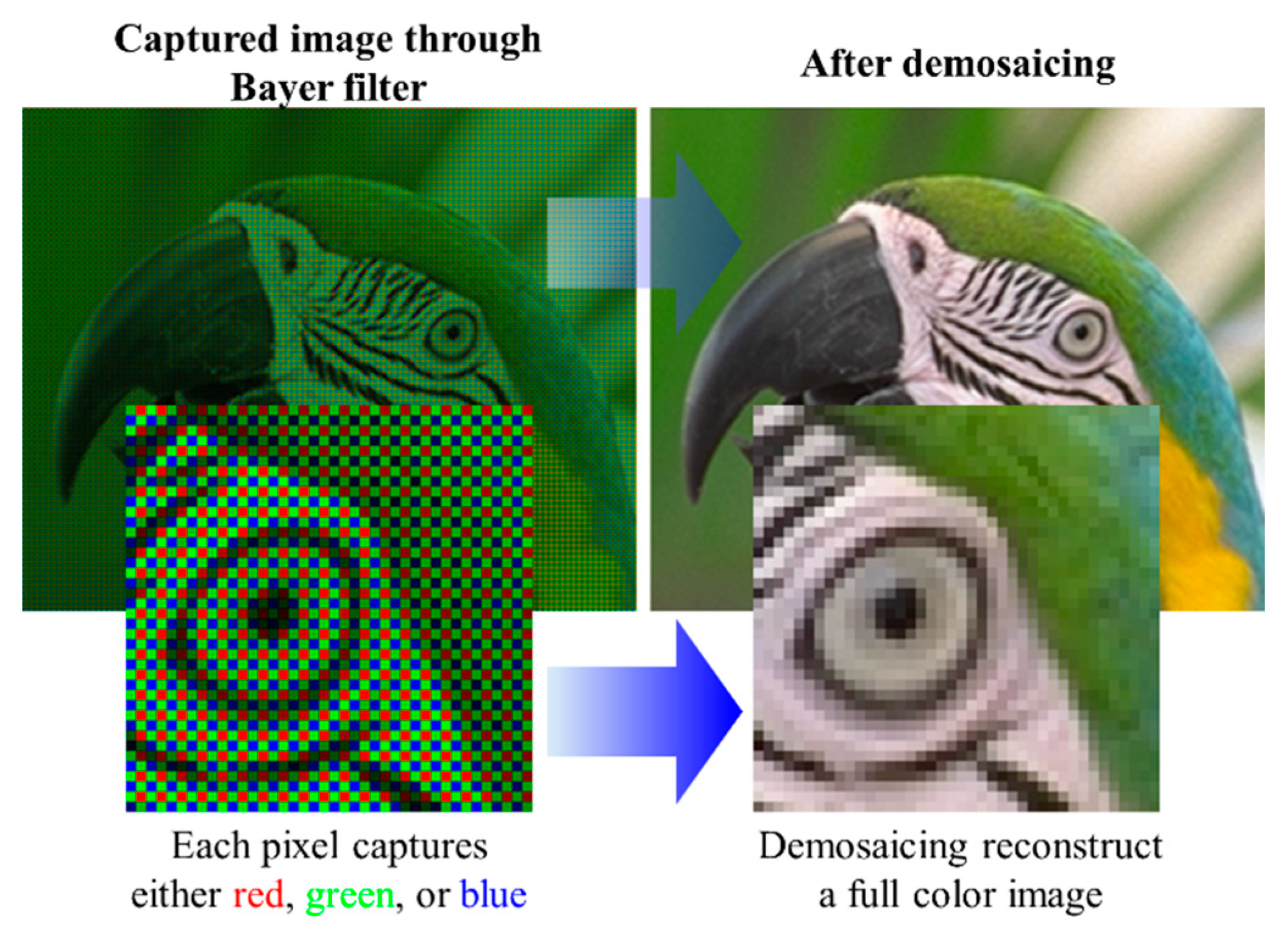

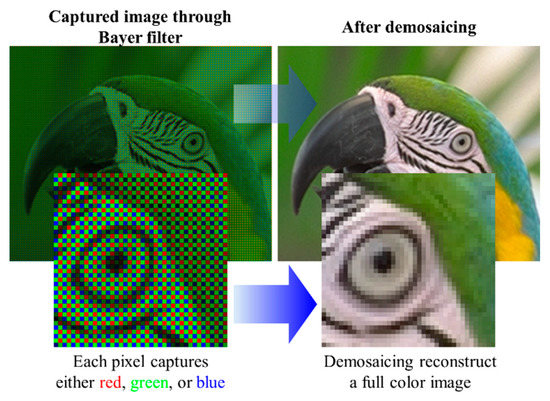

A CFA, typically with a Bayer pattern, records the color information of the incident light in a spatial configuration [31]. The Bayer pattern is built from 2 × 2 blocks of filters (i.e., one blue, one red, and two green), which can be arranged as BGGR, RGBG, GRBG or RGGB. Green filters are more numerous because the human eye is more sensitive to green light; hence, more information about the green color is required [32]. The image is then processed by an image processor utilizing a demosaicing algorithm, also known as deBayering, to reconstruct the image colors as they appear in the real scene, as illustrated in Figure 7 [31,32]. The output of the IPU is then processed to extract the information in the image.
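The CFA sampling and demosaicing steps can be sketched in a few lines of Python with NumPy. The sketch below assumes an RGGB arrangement and uses a deliberately crude demosaic that collapses each 2 × 2 block into one RGB pixel (half resolution); practical IPUs use more elaborate interpolation, and all function names here are illustrative.

```python
import numpy as np


def bayer_mask_rggb(height, width):
    """Channel index per pixel (0 = R, 1 = G, 2 = B) for an RGGB CFA."""
    mask = np.ones((height, width), dtype=np.uint8)  # green everywhere by default
    mask[0::2, 0::2] = 0  # red on even rows, even columns
    mask[1::2, 1::2] = 2  # blue on odd rows, odd columns
    return mask


def mosaic(rgb, mask):
    """Keep only the color sample that the filter above each pixel passes."""
    rows, cols = np.indices(mask.shape)
    return rgb[rows, cols, mask]


def demosaic_blocks(raw):
    """Crude demosaic: one RGB value per 2 x 2 RGGB block (even dimensions assumed)."""
    raw = raw.astype(float)
    red = raw[0::2, 0::2]
    green = (raw[0::2, 1::2] + raw[1::2, 0::2]) / 2.0  # average the two greens
    blue = raw[1::2, 1::2]
    return np.stack([red, green, blue], axis=-1)
```

Half of the mask entries are green, which reflects the two-green-per-block structure of the Bayer pattern.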

Figure 7.

An example of the demosaicing process.

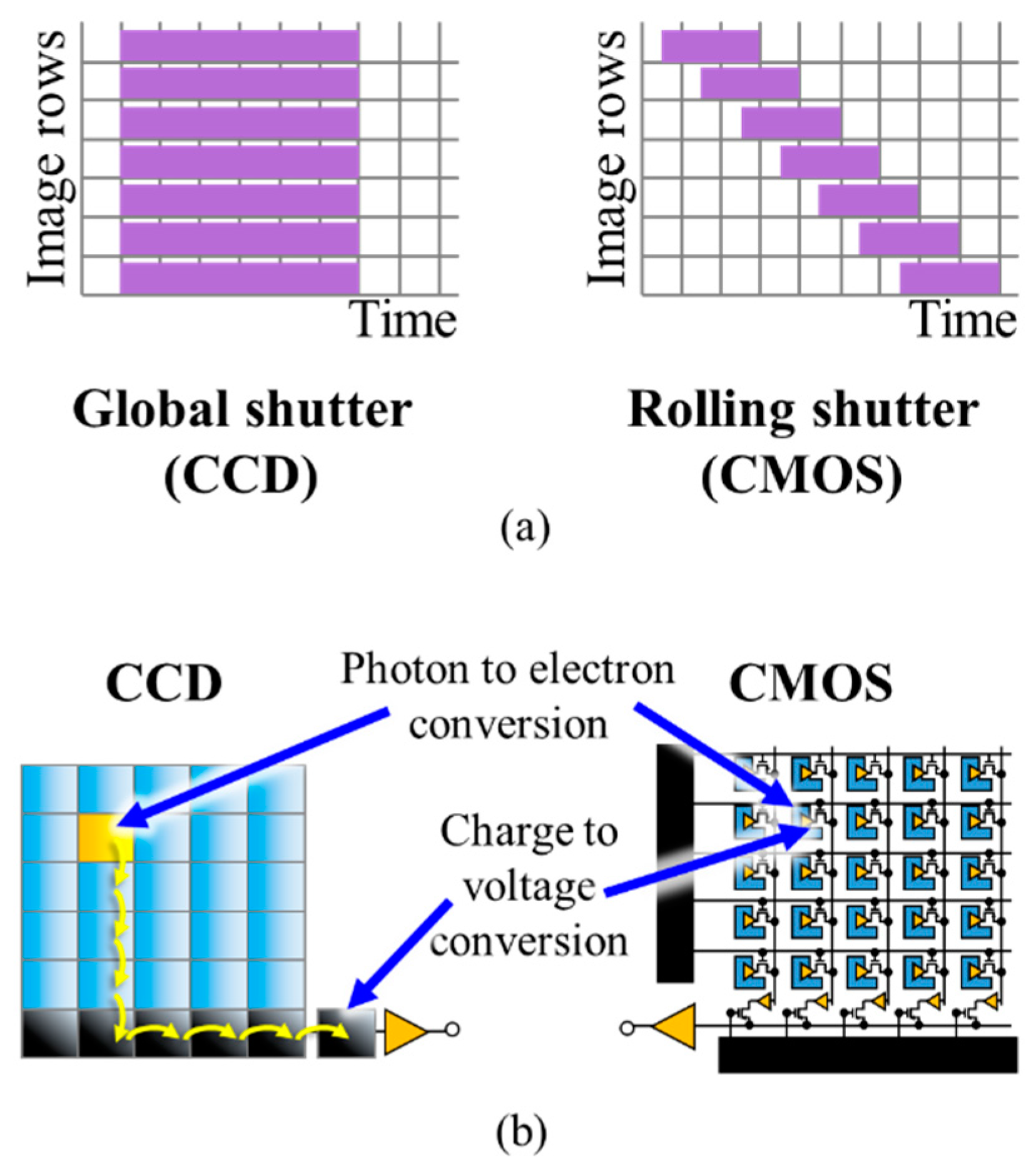

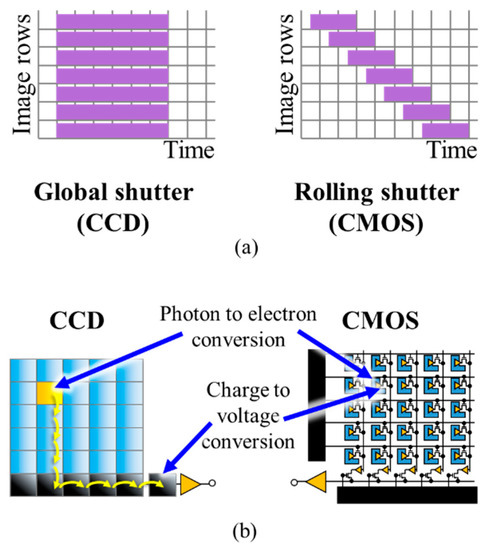

The internal shutter mechanism of cameras determines the exposure of pixels in the IS. In terms of architecture, the two main types of ISs are CCD and CMOS [33]. A CCD sensor has larger physical dimensions than a CMOS IS because it uses a relatively larger analog-to-digital converter (ADC) [29,34]. Therefore, CCD is not generally implemented in mobile devices, especially smartphones. In comparison with CCD, CMOS cameras provide lower power consumption, a smaller IS, faster readout, lower cost and more programmability. In addition, since pixel scanning is based on the X–Y address scheme in the CMOS architecture, direct access to a desired part of the IS is possible, which can increase the FR at the cost of a reduced FOV [35,36]. Cameras can have two different exposure methods, i.e., global shutter (GS) and rolling shutter (RS), as illustrated in Figure 8a. In global-shutter-based ISs, the entire sensor is exposed to the light at the same time, whereas in rolling-shutter-based cameras the sensor is exposed one row at a time, thereby considerably reducing the power consumption. CCD sensors inherently capture images using the GS, while the exposure method in most CMOS ISs is based on RS [29,33].

Figure 8.

(a) A comparison between the GS and RS; (b) a structural comparison between CCD and CMOS sensors.

It should be noted that in global-shutter-based CCD ISs, a considerably longer frame capture time is needed to convert the charge into a voltage signal [29]. Figure 8b shows the structures of CCD and CMOS sensors [29]. Owing to the much more compact ADC fabricated for each pixel, CMOS sensors are nowadays preferred over the relatively larger CCD sensors. CMOS is also employed for high-speed cameras with FR > 1000 fps, which cannot be accomplished using CCD sensors due to their slower ADCs. Note that the CMOS sensor in smartphone cameras is capable of reaching an FR close to 1000 fps [13,37]. The shutter methods are implemented within the sensor itself; however, the captured information can be treated differently in the image processing that follows image capturing, which is related to the OCC modulation schemes. A detailed description of RS-based modulation schemes in OCC is provided in Section 3.

As mentioned previously, OCC only utilizes CMOS sensors, since they are widely employed in the majority of mobile devices including smartphones [11,17]. With regard to image capturing in OCC, CMOS sensors can use either the GS or the RS mechanism, depending on the image processing algorithms and the TX switching rate [5,17,22,38,39]. For example, a camera with a full high definition (FHD) resolution (1920 × 1080 pixels) and a frame rate of 60 fps employing the GS mechanism can sample 60 complete frames every second. On the other hand, the identical camera with RS processes the individual rows of each frame as samples, thus yielding a sampling rate of 64,800 (60 × 1080) samples per second (sps).
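The arithmetic of this example can be checked with a short Python sketch. It assumes, as in the example above, that a GS camera yields one sample per frame and an RS camera one sample per row; the function names are illustrative.

```python
def gs_sampling_rate_sps(frame_rate_fps):
    """Global shutter: every complete frame is a single sample."""
    return frame_rate_fps


def rs_sampling_rate_sps(frame_rate_fps, rows_per_frame):
    """Rolling shutter: every row of every frame is a separate sample."""
    return frame_rate_fps * rows_per_frame


if __name__ == "__main__":
    # FHD camera (1920 x 1080 pixels) at 60 fps, as in the text.
    print("GS:", gs_sampling_rate_sps(60), "sps")
    print("RS:", rs_sampling_rate_sps(60, 1080), "sps")
```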

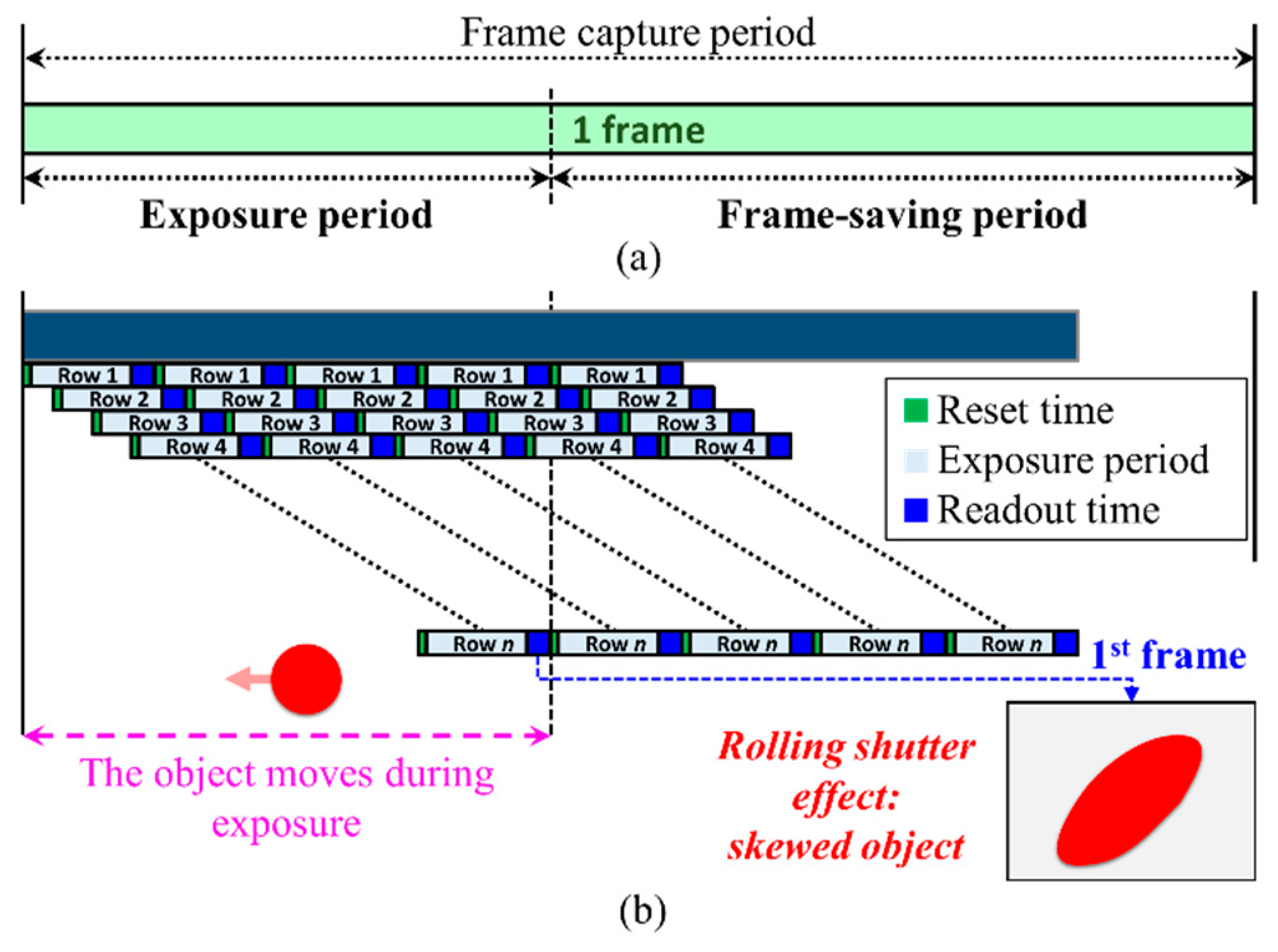

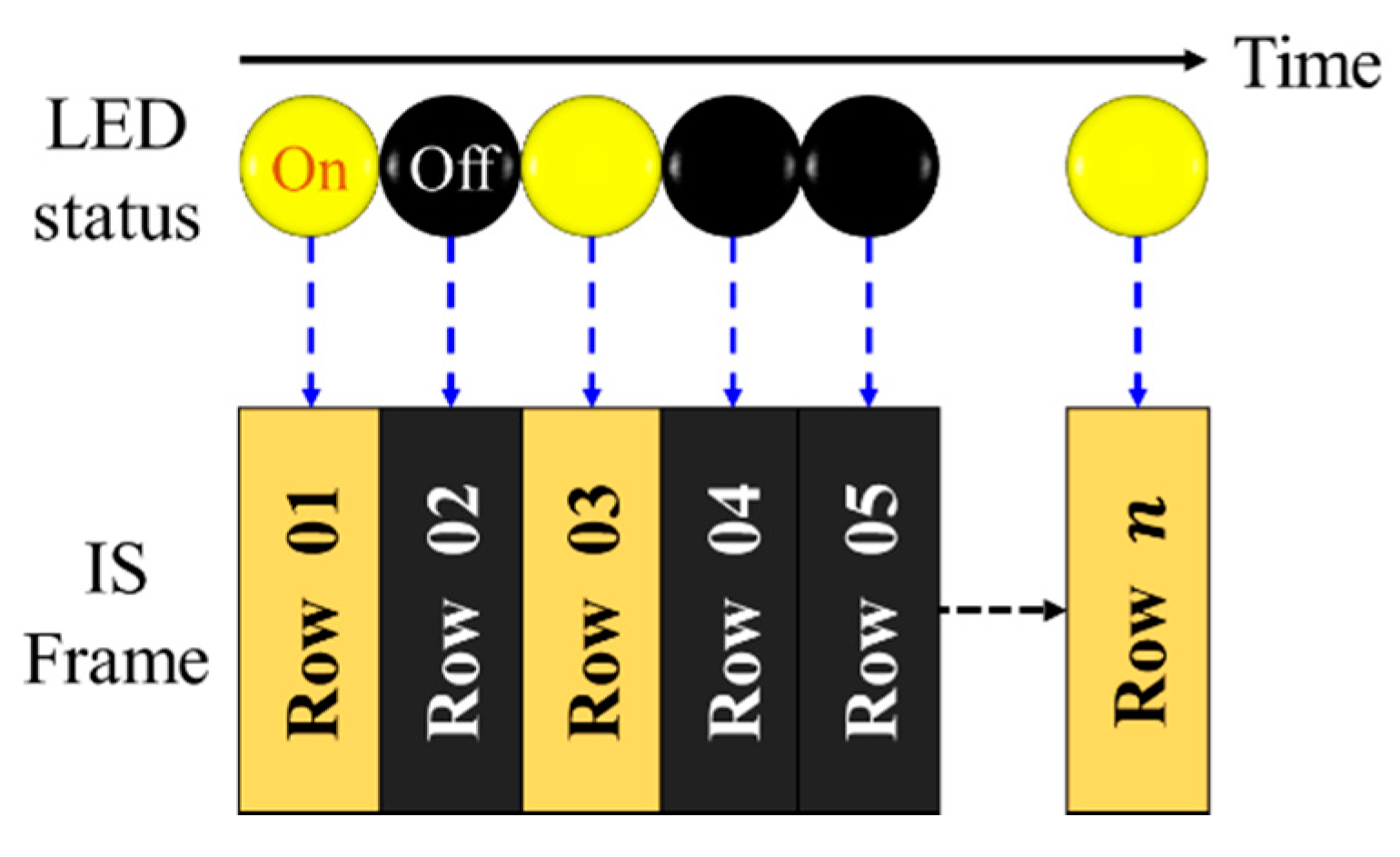

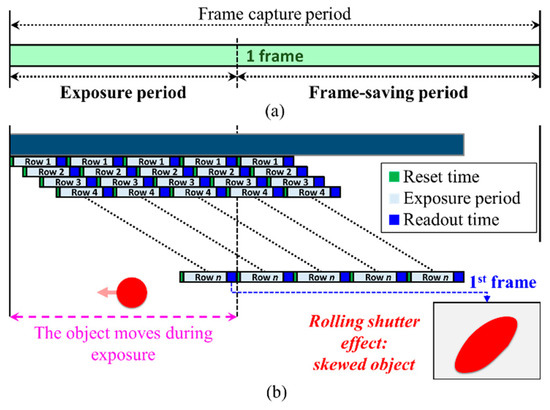

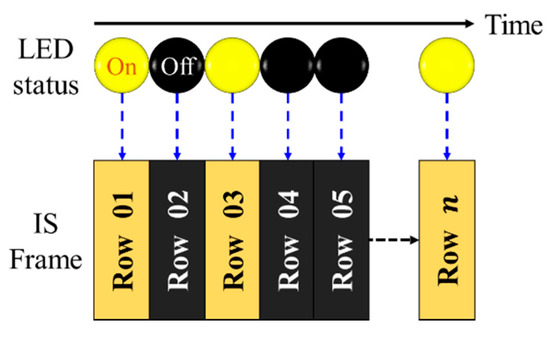

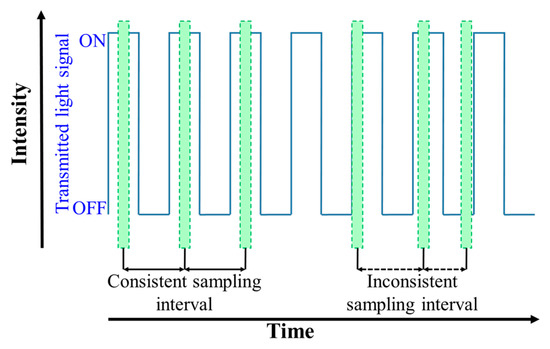

The complete frame capture period comprises the exposure and frame-saving periods, as shown in Figure 9a. The exposure time, which can be set by the camera controller, is the period during which the IS is exposed to the light. In RS cameras, the rows of the IS are exposed sequentially. Therefore, each row of a frame is treated as an individual sample, which increases the sampling rate by a factor of about a thousand (the number of rows), thus offering data rates much higher than FR. Figure 10 shows the LED status and the intensity in each row of the IS against time. It is important to note that the switching rate of the transmitting LED should be set lower than the sampling rate of the RS mechanism.
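The row-by-row sampling of a switching LED can be illustrated with a small simulation. The sketch below is a simplified model under stated assumptions: the LED is an ideal 50% duty-cycle square wave that fills the whole frame, row k starts its exposure k row-times after row 0, and each row records the fraction of its exposure during which the LED is on. The function name and all parameter values are hypothetical.

```python
import numpy as np


def rolling_shutter_rows(led_freq_hz, n_rows, row_time_s, exposure_s, steps=200):
    """Mean LED intensity (0..1) integrated by each row of an RS sensor."""
    row_starts = np.arange(n_rows)[:, None] * row_time_s
    offsets = np.linspace(0.0, exposure_s, steps, endpoint=False)[None, :]
    t = row_starts + offsets                      # sample times per row
    led_on = np.floor(2.0 * led_freq_hz * t) % 2 == 0  # on in first half-period
    return led_on.mean(axis=1)


if __name__ == "__main__":
    rows = rolling_shutter_rows(led_freq_hz=1000.0, n_rows=200,
                                row_time_s=10e-6, exposure_s=50e-6)
    # Bright and dark bands alternate along the rows of a single frame.
    print(np.round(rows[::10], 2))
```

In this model a single frame contains several bright and dark bands, so the LED state is sampled many times per frame rather than once.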

Figure 9.

(a) Frame capture period; (b) RS distortion causing a skewed object in the capture frame.

Figure 10.

RS mechanism on image sensor for data capture.

After the exposure time Texp, the camera’s shutter is closed, and the frame is then processed (including image compression) and saved. Customarily, the image processing and frame saving together are called the frame-saving period. The frame-saving period is determined by the speed of the IPU and the storage device. In the RS, the frame-saving period is compressed by pipelining the readout time sequentially on each row as illustrated in Figure 9b.

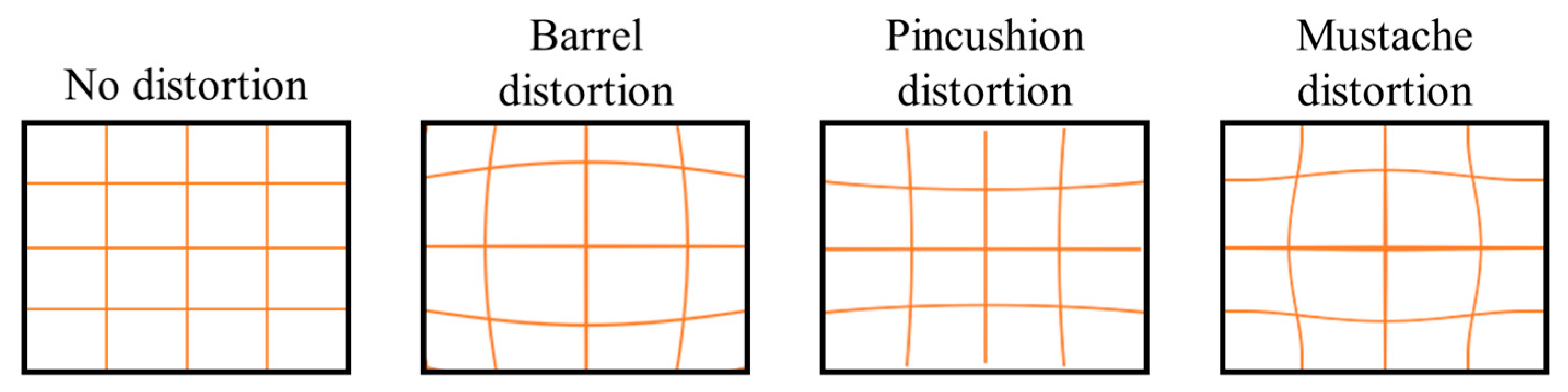

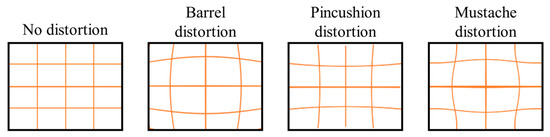

The RS mechanism in CMOS, however, has an undesirable skew in the captured frame (i.e., RS distortion) during object capturing. This is due to the fact that the object moves at a high speed during the exposure period as illustrated in Figure 9b [29,39]. In order to avoid this RS distortion for a 10-percent blur threshold, the exposure time must meet the following condition [39]:

where and denote the object’s displacement and velocity, respectively. However, the image distortion is largely discounted as the image-capture functionality of the camera is ignored. The primary concerns of the RS-based OCC are image skew, link range and the limited camera resolution.

Meanwhile, in the GS-based OCC with an N × M pixels IS with each pixel producing G-level grayscale signals and FR, a theoretical maximum achievable data rate limit is given by [5,40,41]:

$$R_{\max} = \frac{1}{8}\, N M \log_2(G)\, F_R \qquad (2)$$

where $F_R$ denotes the frame rate FR. The constant 1/8 is a rate reduction factor for three-dimensional signals, i.e., a downsampling factor applied to the camera data rate to avoid spatial aliasing, since the pixels in an image sensor are densely packed [41]. The rate reduction factor can be omitted from the equation only when the pixels in the image sensor are separated by an adequate gap [41]. For example, a camera with a full high definition (FHD) resolution (1920 × 1080 pixels), 256 grayscale levels in the IS and an FR of 60 fps yields a theoretical maximum achievable data rate of 124.42 Mbps [41]. However, this data rate can only be obtained when each IS pixel is illuminated by an independent TX. Unfortunately, such individual illumination is impractical due to the transmission distance between the camera and the TX.
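The FHD example can be reproduced numerically. The sketch below assumes that the limit is the product of the 1/8 rate reduction factor, the pixel count N × M, the bits per pixel log2(G) and the frame rate; this form reproduces the quoted 124.42 Mbps. The function and parameter names are illustrative.

```python
import math


def gs_max_data_rate_bps(n_cols, n_rows, gray_levels, frame_rate_fps,
                         rate_reduction=1.0 / 8.0):
    """Theoretical GS-based OCC limit: reduction * N * M * log2(G) * FR."""
    bits_per_pixel = math.log2(gray_levels)
    return rate_reduction * n_cols * n_rows * bits_per_pixel * frame_rate_fps


if __name__ == "__main__":
    rate = gs_max_data_rate_bps(1920, 1080, 256, 60)
    print(f"{rate / 1e6:.2f} Mbps")
```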

To measure the captured image more accurately, a minimum of two pixels per smallest feature is required. The smallest feature is the smallest identifiable property in an image, i.e., a clear shape and color. The minimum IS (camera) resolution is then two times the size of the FOV ($\mathrm{FOV}$), either horizontal or vertical, divided by the size of the smallest feature ($l_f$). Therefore, to capture a clear image, the minimum resolution of the camera is given as [42]:

$$R_{\min} = \frac{2\,\mathrm{FOV}}{l_f} \qquad (3)$$

For example, if the FOV covers 100 mm and the smallest feature is 1 mm, the required minimum resolution is 200 pixels. Thus, a camera with a resolution of 640 × 480 pixels would be adequate, because 200 pixels are fewer than 480 pixels, its smallest dimension. In other words, the number of pixels in the IS defines the maximum distance at which a clear image can be captured, which is related to the FOV in Equation (3) and the working distance illustrated in Figure 6.
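A short Python sketch of this rule of thumb is given below. It assumes that the FOV size and the smallest feature are expressed in the same unit, so that the result is a pixel count; the function names are illustrative.

```python
def min_resolution_px(fov_size, smallest_feature):
    """Two pixels per smallest feature across the FOV (same units for both)."""
    if smallest_feature <= 0:
        raise ValueError("smallest feature must be positive")
    return 2.0 * fov_size / smallest_feature


def is_adequate(sensor_px, fov_size, smallest_feature):
    """True if the smallest sensor dimension meets the minimum resolution."""
    return min(sensor_px) >= min_resolution_px(fov_size, smallest_feature)


if __name__ == "__main__":
    # Numbers from the example above: FOV size 100, smallest feature 1.
    print(min_resolution_px(100, 1), is_adequate((640, 480), 100, 1))
```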

In a camera-based OCC, a range of TXs can be adopted, e.g., digital display modules and LEDs [5,17,22,36,38]. Color filter arrays, wavelength division multiplexing (WDM) and color shift keying (CSK) based on RGB LEDs or displays have been employed in OCC [5]. Interestingly, the typical refresh rate of a digital display is ~60 Hz, which is in the same range of FR [38]. The type of digital display used as the TX is either LED, LCD screen, OLED, or e-paper [5,17,38,43]. In display-to-camera communications, multiple colors with multiple intensity levels are deployed for each pixel, since the illumination footprint is mapped on the spatially separated PDs of the IS. Since current display devices support FHD resolution, higher data rates can be achieved.

2.4. Channel Characteristics

Although the OCC channel is largely considered to be free space, other mediums such as vacuum, gas and water can also be used [5,14]. In OCC, the transmission mode can be LOS [1,2,3], non-LOS (NLOS) [4,5] or a combination of both. The channel impulse response for the LOS link can be represented as a matrix , where and are the numbers of rows and columns of the image sensor, respectively and is the channel impulse response of the -th pixel. Using a single LED and a Gaussian mixture model, is estimated as [6]:

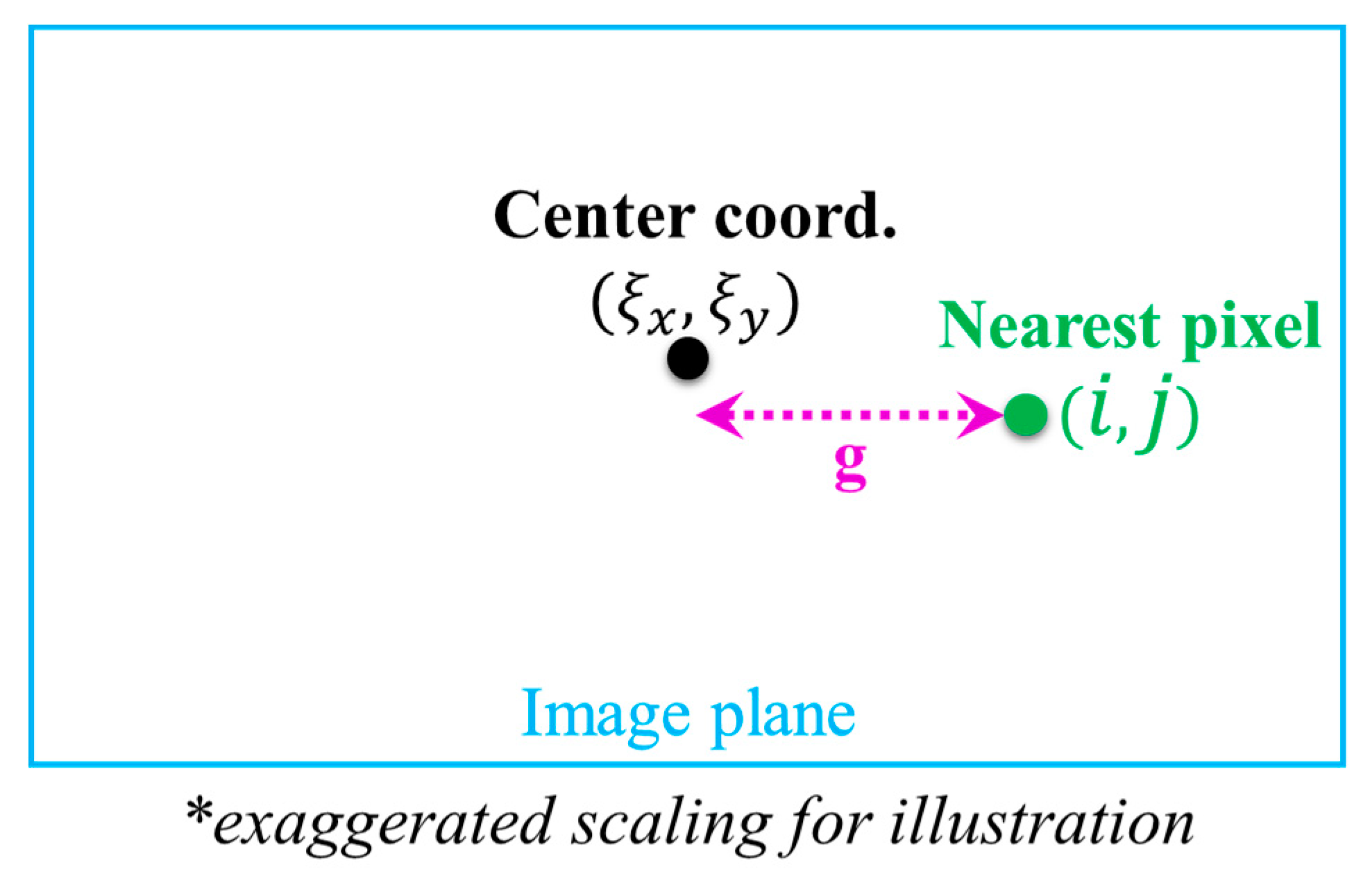

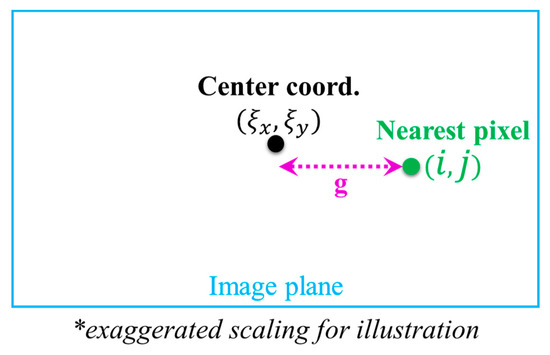

where , is the pixel length, and are the magnification factors of the camera at a reference link span and at a distance of , respectively. and with denoting the parameter of the model and and are the standard deviations in the x and y directions on the image plane, respectively. In (4), and , where and are the coordinates of the center of the image and nearest pixel to this center, respectively and is the gap between two pixels. Figure 11 illustrates the coordinates system visually.

Figure 11.

Image plane coordinates system.

Most OCC systems reported are based on the LOS configuration. However, in scenarios where TXs are not within FOV of the LOS, such as two vehicles approaching crossroads, the channel will be via NLOS paths. In NLOS links, reflected beams normally have large off-axis projected optical illumination footprints that can increase link tolerance to the camera movements.

Considering the NLOS OCC link, the camera’s -th pixel is given by [44]:

where and denote the area of the aperture and the reflection coefficient, respectively. and are radiation patterns of the LED and the reflection element, respectively. and are the boundaries of the area covered by pixels in and dimensions, respectively. is the distance between the TX and the reflecting element , and is the distance between the camera and the reflecting element. For a matte surface, reflections follow the 1st order Lambertian pattern.

For the camera, the incident angle is given by:

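$$\theta = \arccos\left(\hat{\mathbf{n}}_{b} \cdot \hat{\mathbf{n}}_{c}\right) \qquad (6)$$

where $\hat{\mathbf{n}}_{b}$ and $\hat{\mathbf{n}}_{c}$ are the unitized incident beam and the camera plane normal vector, respectively, and the dot is the inner product. For the CMOS active pixel, the frequency response as a function of the amplification gain $G$ per pixel and the angular frequency $\omega$ is given by [45]:

$$H(\omega) = G \cdot e^{-j\omega T_{exp}/2} \cdot \frac{2\sin\left(\omega T_{exp}/2\right)}{\omega} \qquad (7)$$

In (7), the 2nd term represents the delay of $T_{exp}/2$ between the center of the exposure and when the sample is actually read, while the 3rd term is the frequency response of integrating over $T_{exp}$.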
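The incident-angle relation described above, i.e., the angle between the unitized incident beam and the camera plane normal obtained from their inner product, can be evaluated numerically as sketched below. The function name is illustrative, and the inputs are normalized inside the function so that non-unit vectors are also accepted.

```python
import numpy as np


def incident_angle_rad(beam_direction, camera_normal):
    """Angle between the incident beam and the camera plane normal (radians)."""
    b = np.asarray(beam_direction, dtype=float)
    n = np.asarray(camera_normal, dtype=float)
    b = b / np.linalg.norm(b)  # unitize the incident beam
    n = n / np.linalg.norm(n)  # unitize the plane normal
    cos_theta = np.clip(np.dot(b, n), -1.0, 1.0)  # guard against rounding
    return float(np.arccos(cos_theta))


if __name__ == "__main__":
    # Hypothetical beam arriving 45 degrees off the optical axis (z axis).
    print(np.degrees(incident_angle_rad([1.0, 0.0, 1.0], [0.0, 0.0, 1.0])))
```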

One advantage of OWC over RF communications is the channel modeling, which involves considerably higher frequencies of the optical signal, compared with the optical clock signal [17]. Thus, multipath fading and the Doppler effect can be ignored. For OCC, the signal-to-noise ratio (SNR) can be obtained as [17]:

where and denote the noise and received signal powers, respectively. is the channel impulse response (the channel DC gain), denotes the camera’s responsivity, denotes transmit power of -th light source, denotes the electron charge, represents ambient light per unit area, is the area of the PD (area of the IS) and .

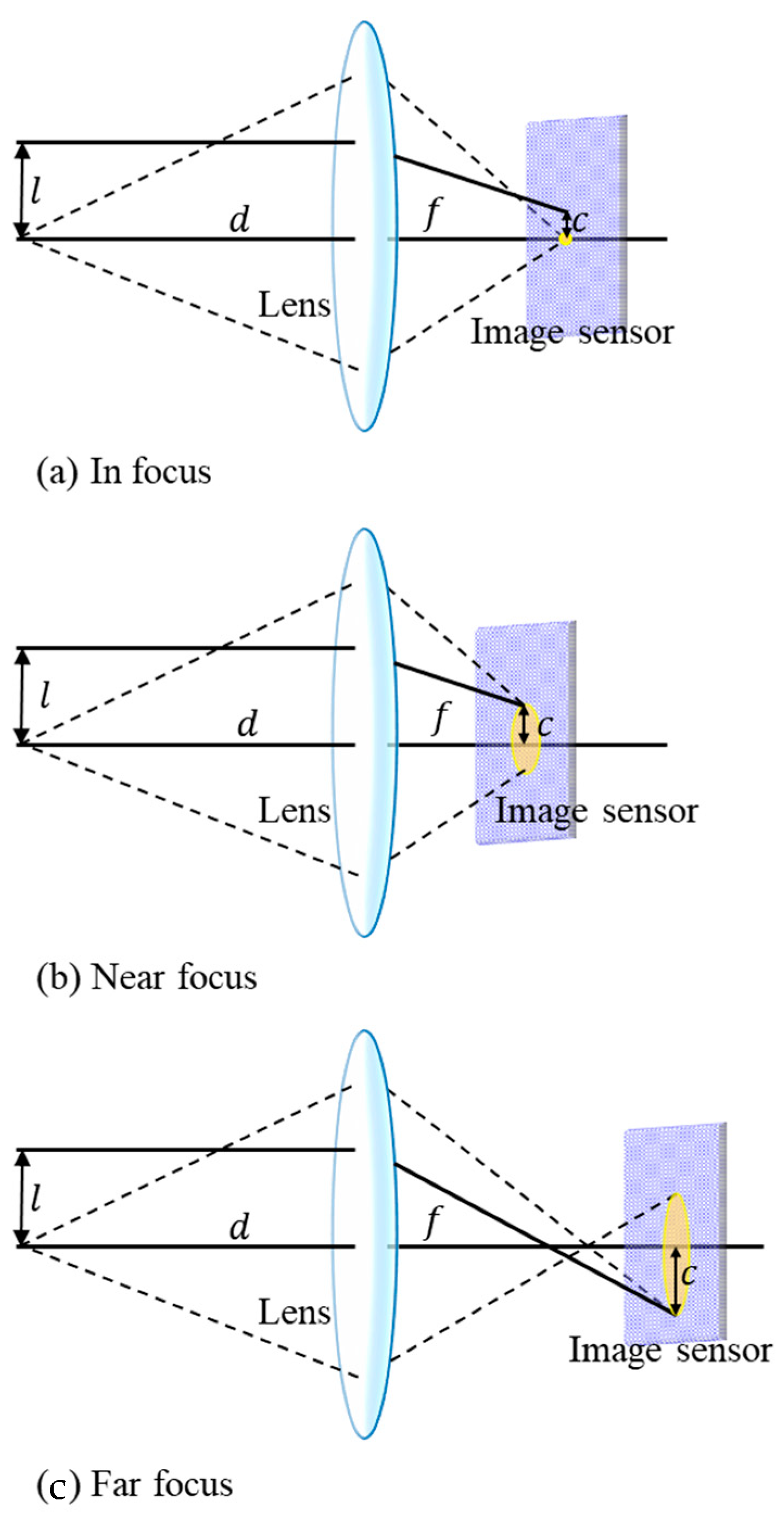

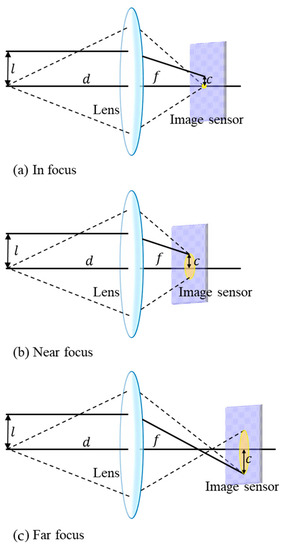

Depending on the focusing distance of the camera lens, the received power can be projected on a single PD or multiple PDs, which are known as focused and defocused modes, respectively. Figure 12 shows different focusing scenarios based on the TX’s location [17], in which and indicate the height of the object and its projected height on the sensor, respectively. Moreover, shows the distance between the lens and the object, whereas is the distance from the lens to the IS. When the camera is defocused, the image of a point source will illuminate an area of IS with the same shape of the lens aperture, which is typically a circle. This area is known as a circle of confusion (CoC). The shape of the defocused image can be obtained as [46,47]:

where, is the CoC disc function, is Heaviside step function, is the intensity function of the image when the TX is in focus and is the 2D convolution operation. is the diameter of the CoC and is given by:

where , , and are the lens aperture diameter, camera lens focal length and f-stop of the aperture, respectively.

Figure 12.

Lens focuses incoming light on the IS: (a) in focus; (b) near focus; (c) far focus [17].

While the SNR treats the signal-to-noise intensity on a sensor as a single unit, a more specific approach based on the energy per bit to noise spectral density ratio ($E_b/N_0$) on the IS of a camera estimates the amount of light reaching each pixel of the sensor, compared with the light interference [5]. In this regard, the $E_b/N_0$ value per pixel on the IS, which is directly related to the amount of illuminance received, is given by:

where denotes the energy per bit, denotes the noise spectral density, is the pixel value, is the amplitude of the signal (light), is the camera exposure duration as a ratio of the signal cycle, is noise value and both and are noise model fit parameters. In addition to the signal quality evaluation for OCC reception, the peak signal-to-noise ratio (PSNR) approach is often considered for the image quality evaluation in OCC with the GS and reflects the quality of OCC channel in terms of 2D image degradation [48]. The PSNR is formulated in OCC as a ratio of the original image quality (transmitted) to the captured image/frame quality, which is degraded by the channel impairments, and is given by [37,48]:

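$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}_I^{2}}{\overline{\mathrm{MSE}}}\right) \qquad (12)$$

where $\mathrm{MAX}_I$ denotes a matrix containing the mean of the maximum pixel value of the RGB color channels in the originally transmitted image, and $\overline{\mathrm{MSE}}$ is the average value of the mean squared error (MSE) over all RGB color channels. Given a noise-free monochrome original image ($I$) with a resolution of $m \times n$ pixels and the noisy captured frame ($K$), the MSE for each color channel (either R, G, or B) is defined as:

$$\mathrm{MSE} = \frac{1}{mn} \sum_{i=0}^{m-1} \sum_{j=0}^{n-1} \left[ I(i,j) - K(i,j) \right]^{2} \qquad (13)$$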
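The PSNR evaluation can be sketched in Python with NumPy as follows. The sketch assumes the conventional definitions, i.e., the per-channel MSE is the mean of the squared pixel differences and the PSNR is 10·log10 of the squared peak value over the channel-averaged MSE, with the peak taken as the mean of the per-channel maxima of the transmitted image. The function names are illustrative.

```python
import numpy as np


def mse(original, captured):
    """Mean squared error between two single-channel images of equal size."""
    diff = np.asarray(original, dtype=float) - np.asarray(captured, dtype=float)
    return float(np.mean(diff ** 2))


def psnr_rgb_db(original, captured):
    """PSNR in dB for H x W x 3 images, averaging the MSE over R, G and B."""
    original = np.asarray(original, dtype=float)
    captured = np.asarray(captured, dtype=float)
    # Peak value: mean of the maximum pixel value of each color channel.
    peak = np.mean([original[..., c].max() for c in range(3)])
    mse_avg = np.mean([mse(original[..., c], captured[..., c]) for c in range(3)])
    if mse_avg == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse_avg))
```

A higher PSNR indicates that the captured frame is closer to the transmitted image, i.e., that the channel has degraded the 2D image less.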

3. Modulations

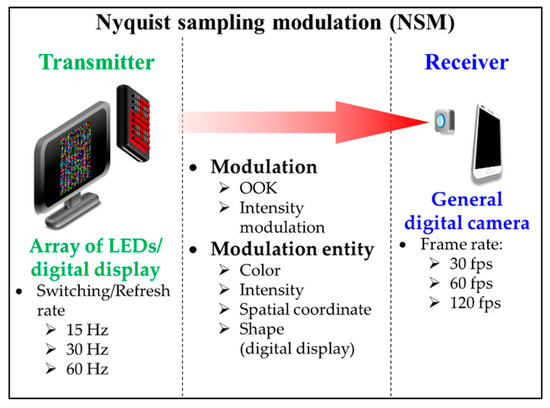

Modulation and coding schemes are key drivers of the performance of a communications system. In OCC, where a camera is employed as the RX to capture the 2D image of the TX, the data can be used to modulate the color, intensity, spatial coordinate (defined by the camera resolution) and shape of the source [5,14,17]. It is worth noting that these modulation options are limited, due mainly to the number of cameras; therefore, the TX is manipulated in order to compensate for the limited number of RXs that can be employed. In OCC, several factors determine the modulation scheme, such as the IS type, exposure time, ISO, image processing, type of TX and human eye perception. Broadly speaking, OCC modulation can be classified into four major categories: Nyquist sampling modulation (NSM), region-of-interest signaling (RoI-S), hybrid camera-PD and RS-based modulation.

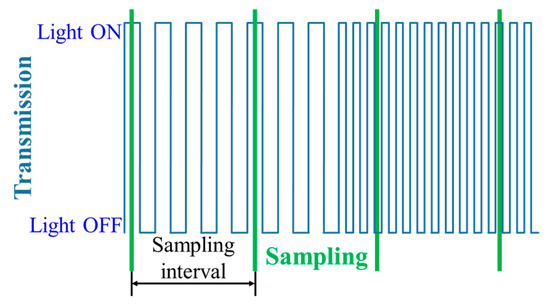

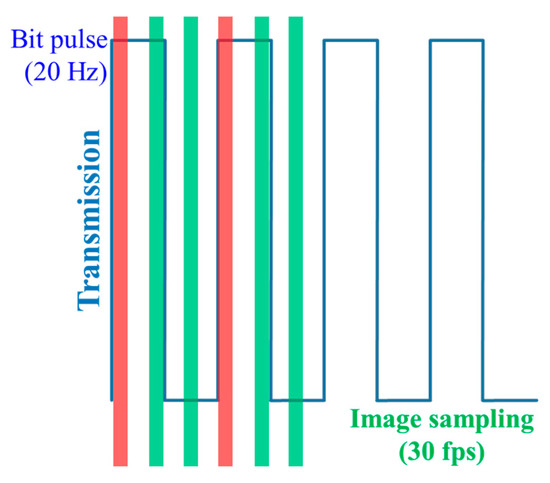

3.1. Nyquist Sampling Modulation

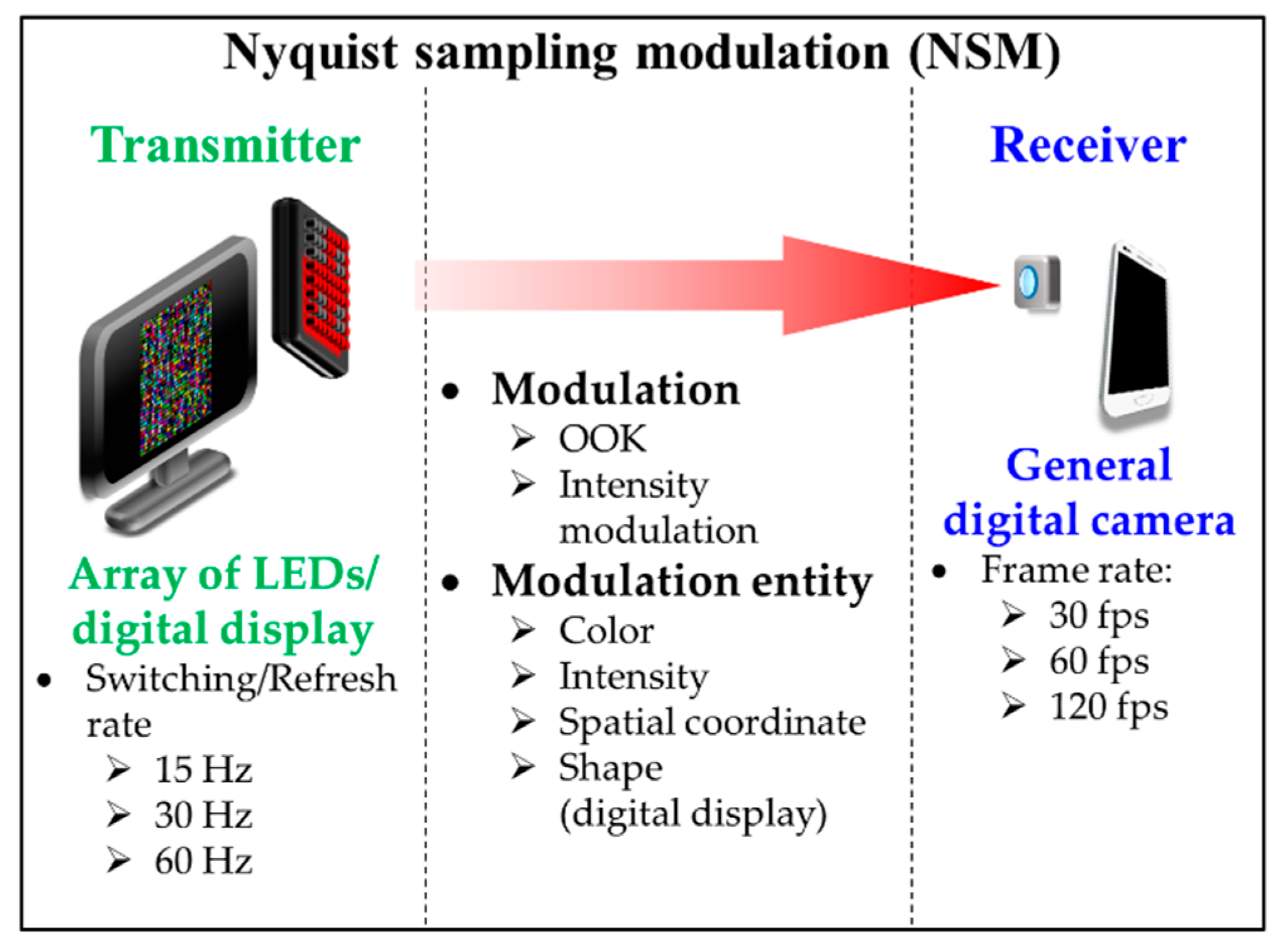

The principle of NSM is to limit the switching/refresh rate Rs of the TX to at most 0.5FR in order to comply with the Nyquist sampling theorem, as shown in Figure 13 [5]. Since FR is mostly limited to 60 fps, the TX’s Rs is generally set to 30 Hz for NSM. Furthermore, because such a low Rs causes flicker that is perceptible to human eyes, NSM generally utilizes a digital display as the TX instead of LEDs, since display flicker is less discomforting than LED flicker [5,17,49]. Moreover, a single LED TX is not used in NSM since the effective data rate would be limited to less than 60 bps.
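The Nyquist constraint on the TX switching rate can be expressed as a short Python sketch. The throughput helper assumes, for illustration only, that every TX state carries a fixed number of bits, so that the data rate is the bits per state times Rs; the function names are hypothetical.

```python
def max_switching_rate_hz(frame_rate_fps):
    """Largest TX switching/refresh rate Rs allowed by NSM: Rs <= 0.5 * FR."""
    return 0.5 * frame_rate_fps


def satisfies_nsm(switching_rate_hz, frame_rate_fps):
    """True if the TX switching rate complies with the Nyquist limit."""
    return switching_rate_hz <= max_switching_rate_hz(frame_rate_fps)


def nsm_data_rate_bps(bits_per_state, switching_rate_hz):
    """Illustrative throughput: bits carried by one TX state times Rs."""
    return bits_per_state * switching_rate_hz


if __name__ == "__main__":
    rs = max_switching_rate_hz(60)  # 60 fps camera
    print(rs, nsm_data_rate_bps(1, rs))  # single on-off LED, 1 bit per state
```

This makes the motivation for displays and LED arrays explicit: with Rs fixed by the camera, the data rate can only grow through the number of bits carried per TX state.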

Figure 13.

Nyquist sampling modulation.

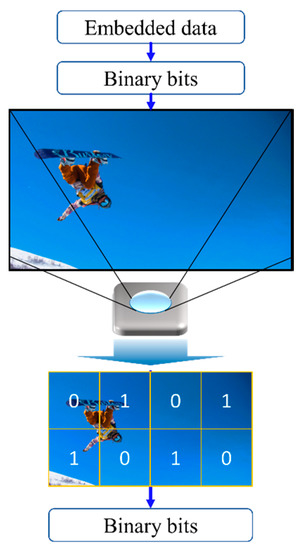

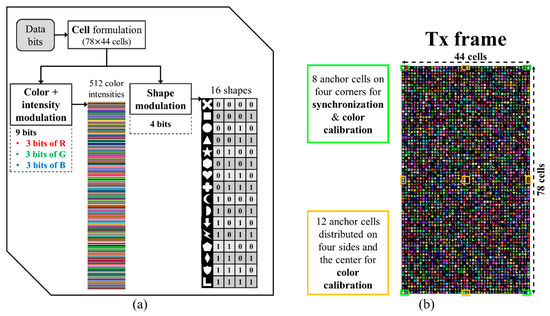

In NSM, the data transmission throughput is limited by FR. To increase the data rate, multi-dimensional modulation schemes based on colors, intensity levels, and shapes spread across space (spatial coordinates) are employed [5,11,17]. Early studies of NSM OCC based on digital displays include PixNet and COBRA [50,51]. PixNet encodes the bits in the frequency domain using 2D orthogonal frequency division multiplexing (OFDM) modulation, thus offering throughputs in the order of several Mbps, with the processing time limited by the clock rate (i.e., capping the TX to 5 Hz) [50]. In [51], an enhanced 2D color code known as COBRA (i.e., in the form of colored QR codes) was reported, which optimizes real-time streaming using five colors and three optional colors at a higher transmission rate of 10 Hz. It introduced the concept of embedding anchors at the corners (CT) and borders (TRB) of the transmission frame to synchronize adjacent frames during reception [51]. The synchronization between the camera frame rate and the switching rate of TXs using LightSync was investigated in [52].

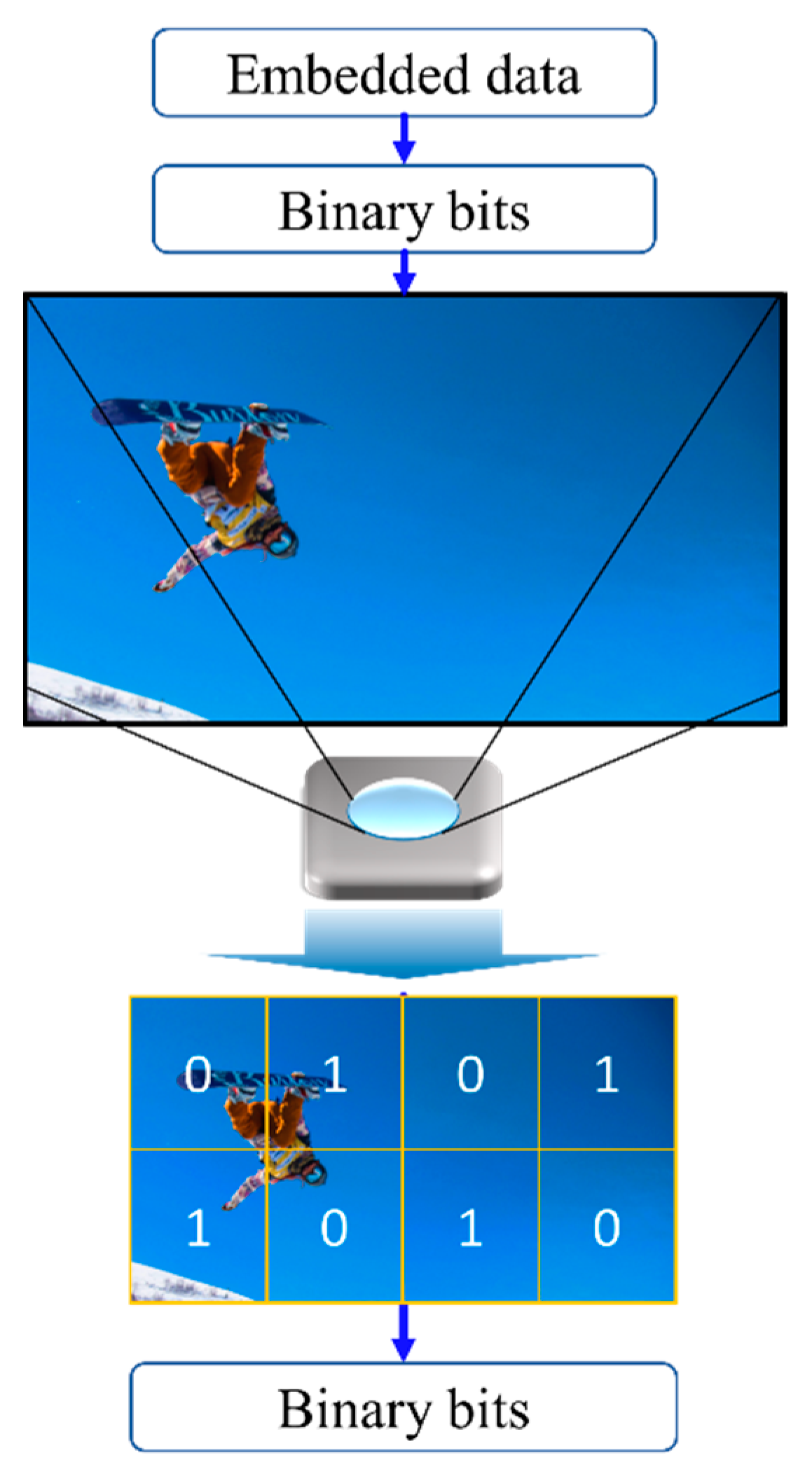

The 2D sequential coding scheme was the first used in the NSM following COBRA, which employed modulation entities of spatial coordinate (cell) and color with the support of anchors on the corners [53]. This scheme functions as a dynamic colored QR code and performs synchronization. In addition to the 2D-sequential coding, an invisible watermarking was proposed to reduce discomfort from perceptible flickers by varying the color chromaticity slightly as part of modulation [12,54]. The transmission frame was divided into m × n embedded cells as shown in Figure 14 with 4 × 2 embedded cells. The change in chromaticity within each cell, i.e., the mean value of blue, is imperceptible by human eyes since they are less sensitive to the blue; however, these changes in chromaticity can be perceived by the camera and thus decoded as invisible watermark codes.

Figure 14.

Invisible watermarking OCC.

Several studies employed NSM with an array of LED TXs and provided a higher light intensity, compared with digital display, thereby achieving a longer transmission range [22,55,56,57]. In [22], some additional LEDs were employed for illumination, camera focusing and light metering that are required due to the flickering LEDs. On the other hand, the color-intensity modulation multi-input-multi-output (CIM-MIMO) scheme was proposed using an accelerated smartphone camera with the image processing carried out in FPGA to achieve a higher FR of 330 fps [55]. It achieved a data rate of 126.72 Kbps over a longer transmission range of 1.4 m. An alternate approach, termed the split-shutter, was presented using a dual-camera setup to increase the FR by splitting the captured image between two cameras; it yields a data rate of 11.52 Kbps over a link span of 144 cm using off-the-shelf smartphone cameras [43].

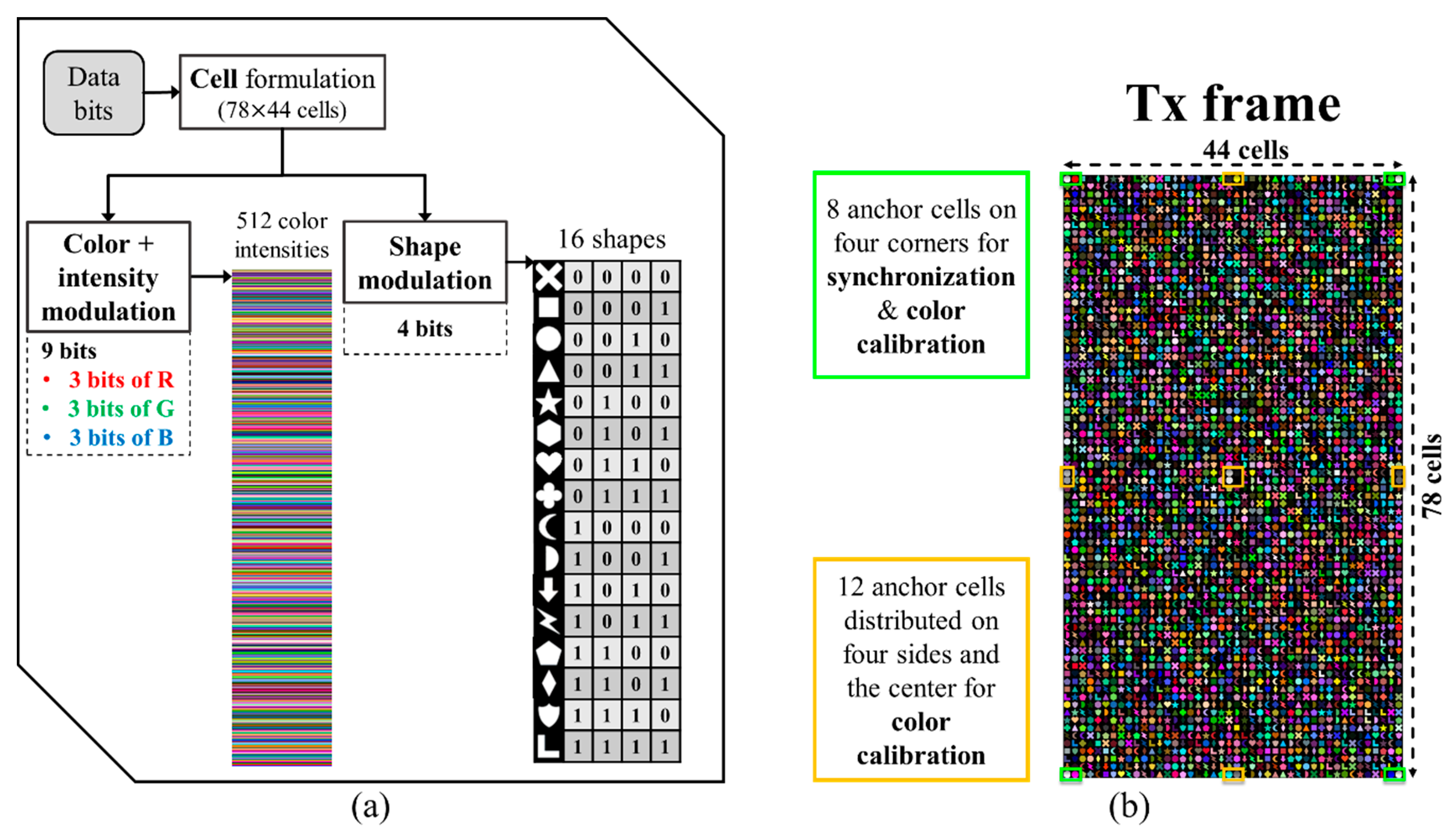

In the IEEE 802.15.7m standard, an interesting modulation scheme that may increase the OCC data rate dramatically was proposed [14,16]. It is a shape modulation called variable transparent amplitude shape code (VTASC) as illustrated in Figure 15. VTASC was experimentally evaluated in OCC in a form of high-density modulation (HDM) with a neural network (NN) assisted demodulation scheme [37]. The HDM, forming the most complex NSM, modulates color, intensity and shape simultaneously in a cell (a spatial coordinate), thus yielding 13-bit modulated data on each cell. The NN was adopted for classifying the shapes efficiently on the receiver side [58,59]. The HDM achieved an effective data rate of 2.66 Mbps over a short transmission distance of 20 cm, which can be considered appropriate for device-to-device (D2D) communications.
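As a rough illustration of how per-cell modulation scales the raw NSM throughput, the following Python sketch multiplies the number of cells per frame, the bits carried per cell and the TX refresh rate. Only the 13 bits per cell comes from the text; the 16 × 16 grid and the 30 Hz refresh rate are hypothetical values chosen for the example and are not the configuration used in [37].

```python
def nsm_raw_throughput_bps(cells_per_frame: int,
                           bits_per_cell: int,
                           switching_rate_hz: float) -> float:
    """Raw (uncoded) throughput of a display-based NSM transmitter."""
    return cells_per_frame * bits_per_cell * switching_rate_hz


if __name__ == "__main__":
    # Hypothetical 16 x 16 grid refreshed at 30 Hz, 13 bits per cell (HDM).
    cells = 16 * 16
    print(nsm_raw_throughput_bps(cells, 13, 30))  # 99840
```

The effective data rate of a real link would be lower once anchors, synchronization and error correction overheads are taken into account.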

Figure 15.

(a) A high-density modulation scheme and (b) example of an HDM-generated TX frame.

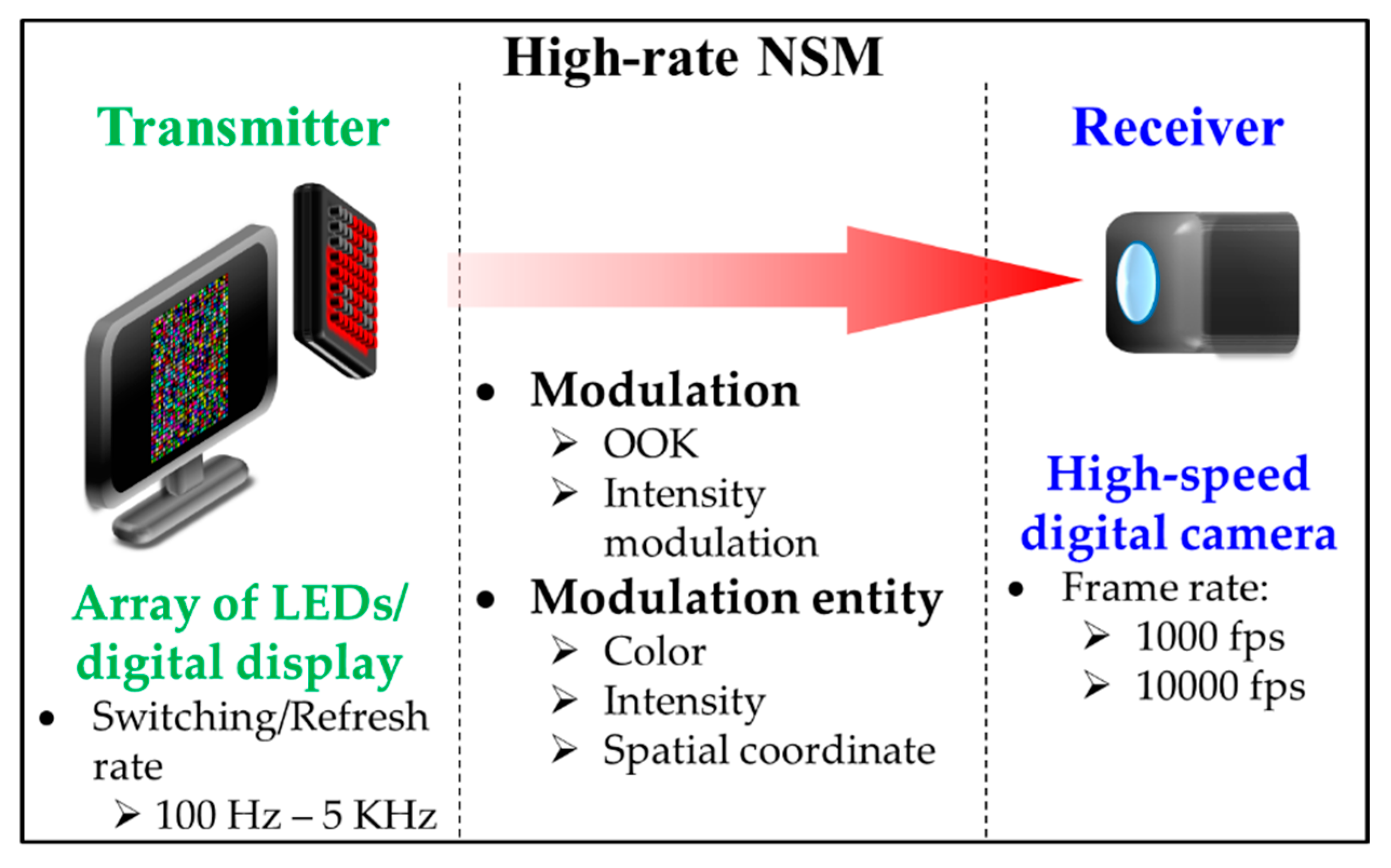

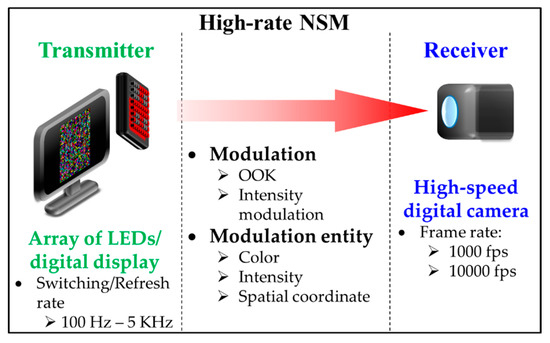

On the other hand, a high-rate NSM shown in Figure 16 is similar to NSM except for the use of a high-speed camera with the FR of 1–10,000 fps [5,60,61], thus increasing the Rs of the TX (i.e., LEDs) to be higher than 100 Hz. However, a digital display can also be used with Rs still limited to 144 Hz for FHD resolution. Note that a high-rate NSM offers an advantage when utilizing LEDs with Rs higher than the flicker fusion threshold, i.e., the rate below which flicker is perceptible to human eyes, thus preventing discomfort to the users [43,62].

Figure 16.

High-rate Nyquist sampling modulation (NSM).

The high-rate NSM is, however, not readily applicable to smartphones. Furthermore, the use of a high-speed camera is envisioned for V2V and V2I outdoor applications only, since vehicles are traveling at high speeds, thus requiring flicker-free transmission due to traffic regulations [5,60,63]. Even though these schemes are proven to be reliable, they are, in general, relatively unattractive for OCC due to a considerable cost of both high-speed cameras and high-performance image processing hardware.

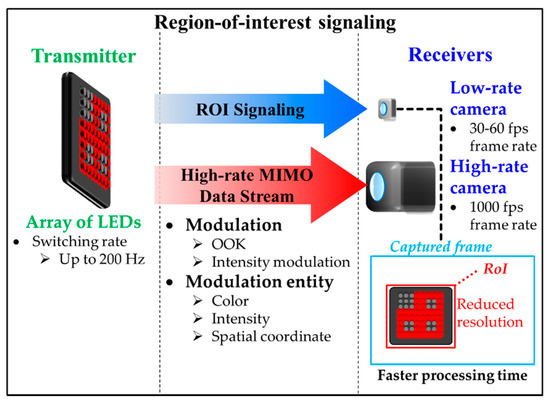

3.2. Region-of-Interest Signaling

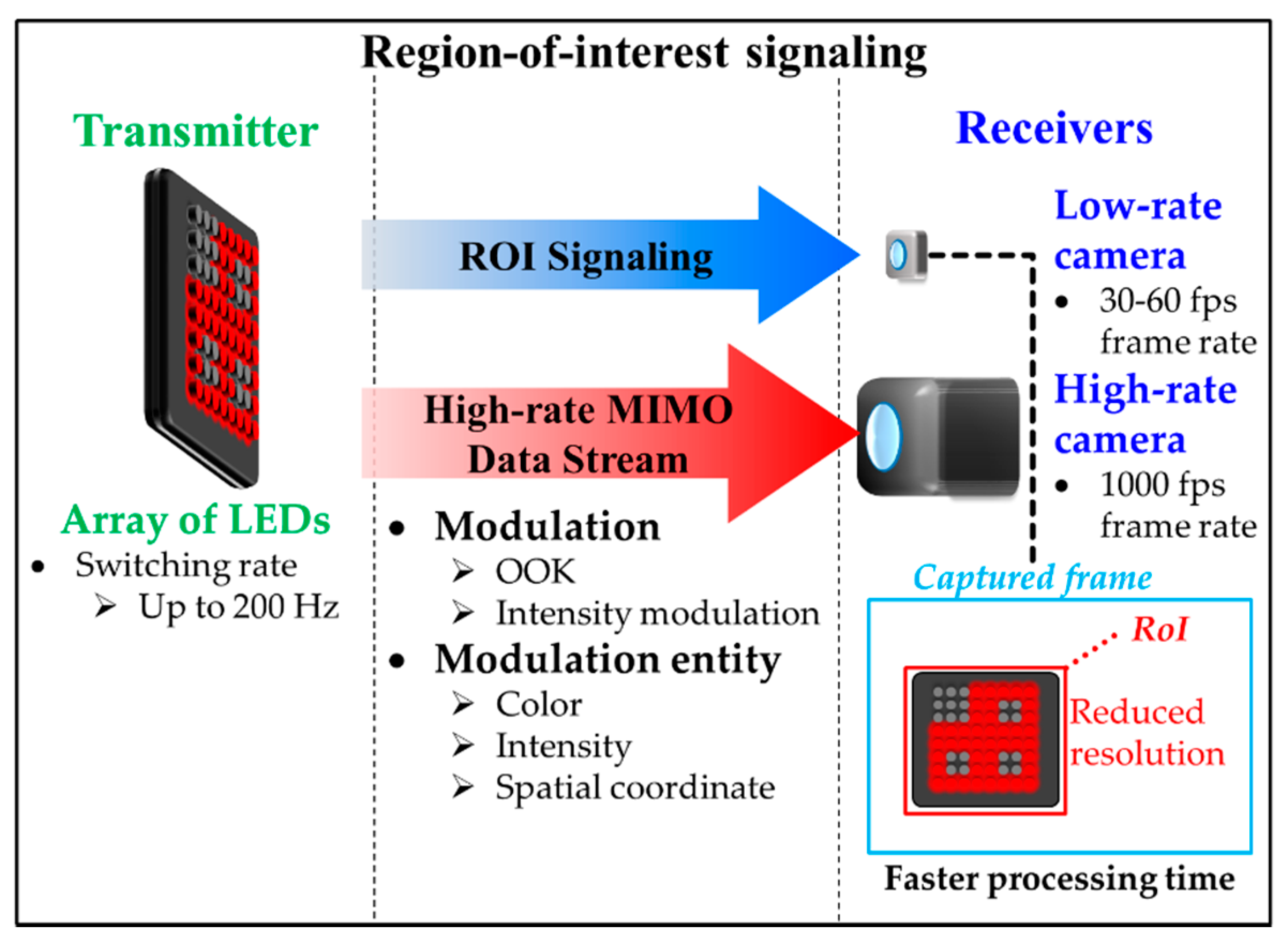

Region-of-interest signaling (RoI-S) is an improvement of NSM that utilizes the region of interest (RoI) in image post-processing in order to reduce the portion of the image capture [5,64,65]. RoI reduces the resolution of the image being processed at the RX and thus the proposed RoI-S method offers a more efficient and faster processing time than the NSM-based OCC. The implementation of RoI-S is based on the combination of NSM and high-rate NSM schemes.

RoI-S utilizes a low-rate camera to fix the RoI first, then carries out the actual reception using a high-rate camera employing the previously set RoI. It is necessary for RoI-S to use two cameras since the original RoI method is post-processing (after the capture has been completed) and the rate cannot be changed anymore afterward.

It is indeed possible to use a single camera with a variable-sized capture frame (different from post-processing ROI) that effectively reduces the resolution and increases the capture rate during the frame capture, which is called Selective Capture as proposed in [33]. Selective Capture is an alternate form of the RoI-S that was proposed as part of pre-processing (i.e., during the capture) in order to shorten the frame capture period, thus resulting in an overall higher FR [36].

Figure 17 shows that the RoI-S carries two reception streams with different frame rates, i.e., a low rate stream for determining the RoI (i.e., RoI signaling) and a high rate stream for the high-rate OCC (i.e., high-rate MIMO data stream) [17,64]. Note that the low and high rate streams are used to define the specific RoI and carry the actual data, respectively.

Figure 17.

Region-of-interest signaling.

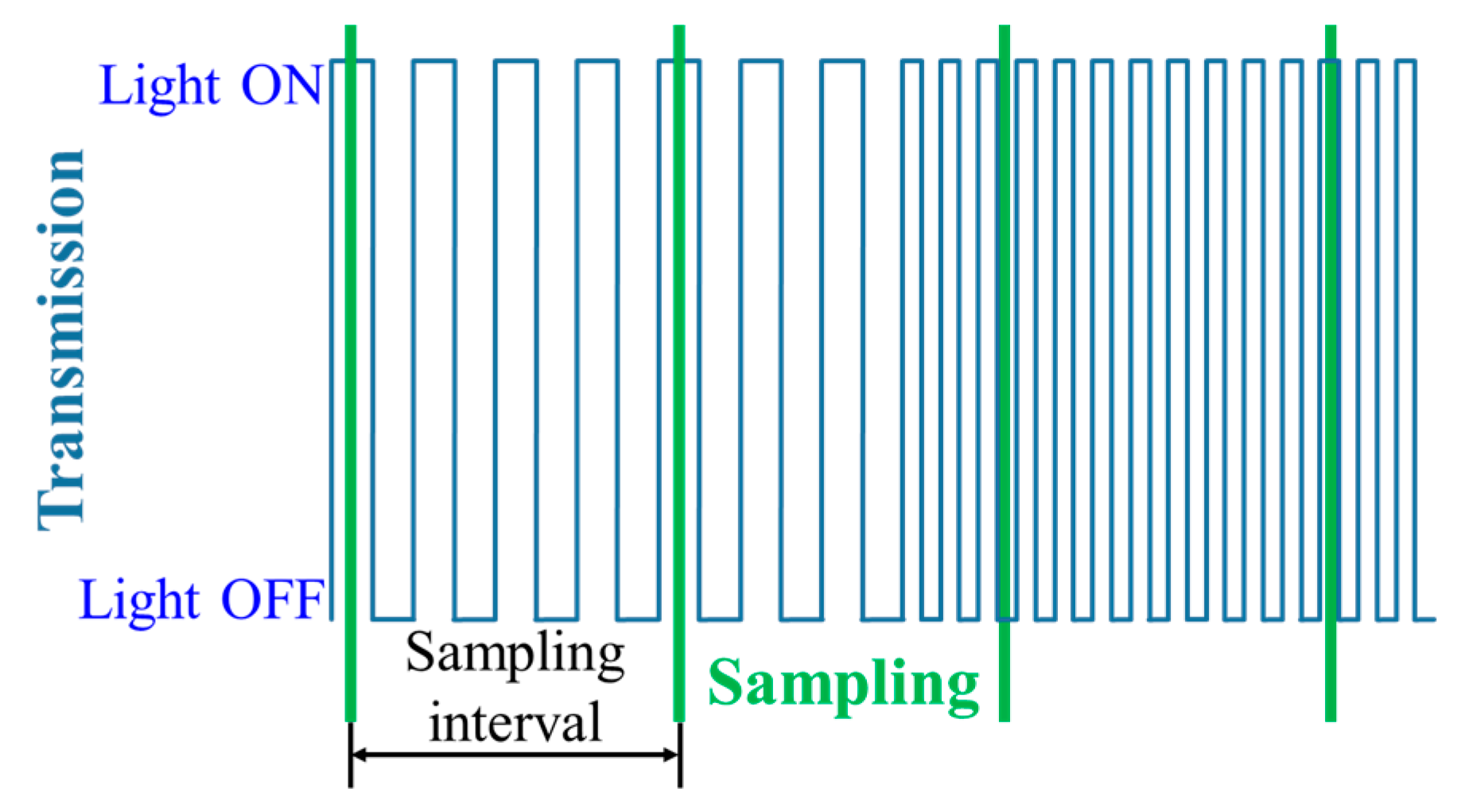

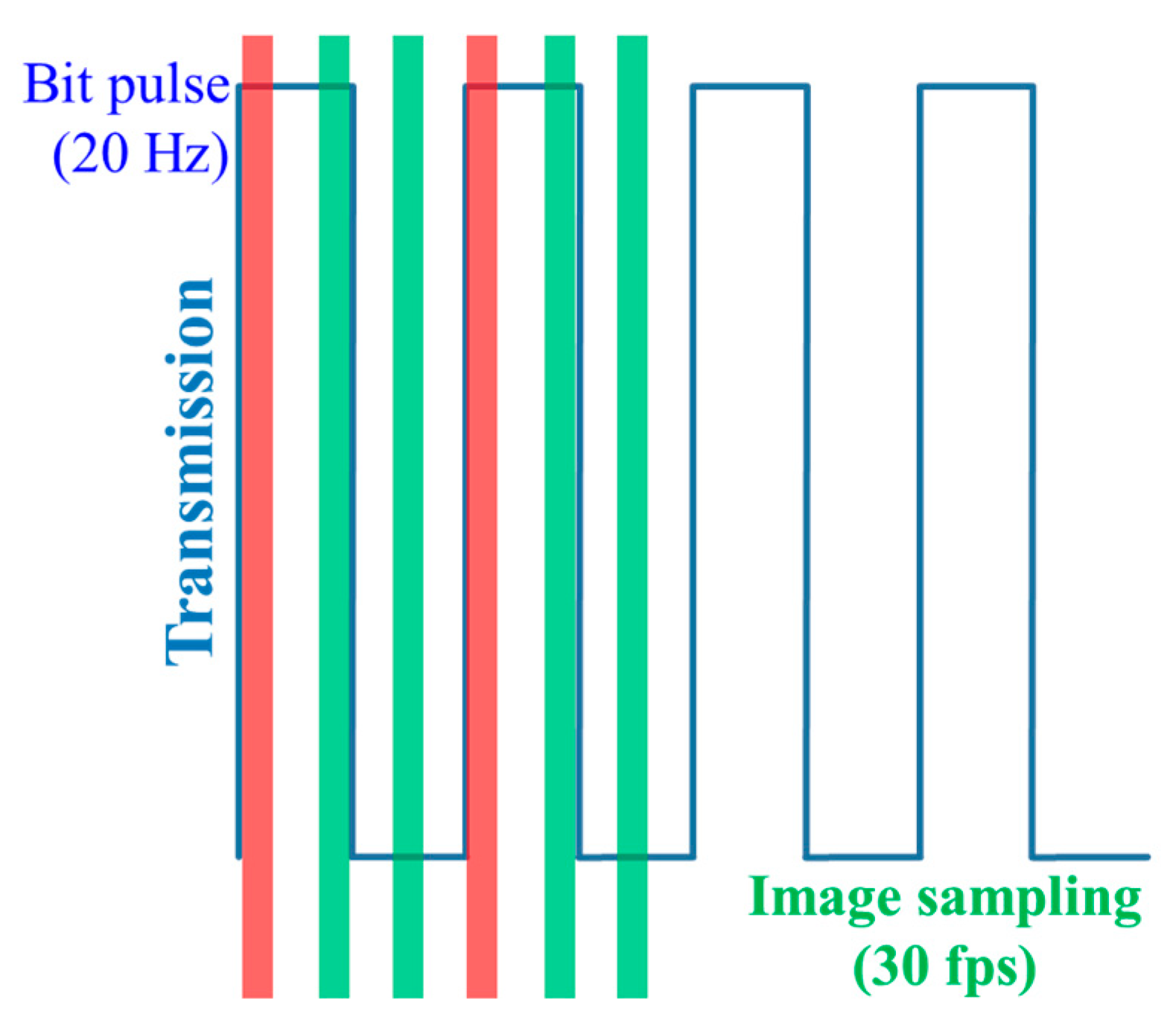

3.2.1. RoI Signaling

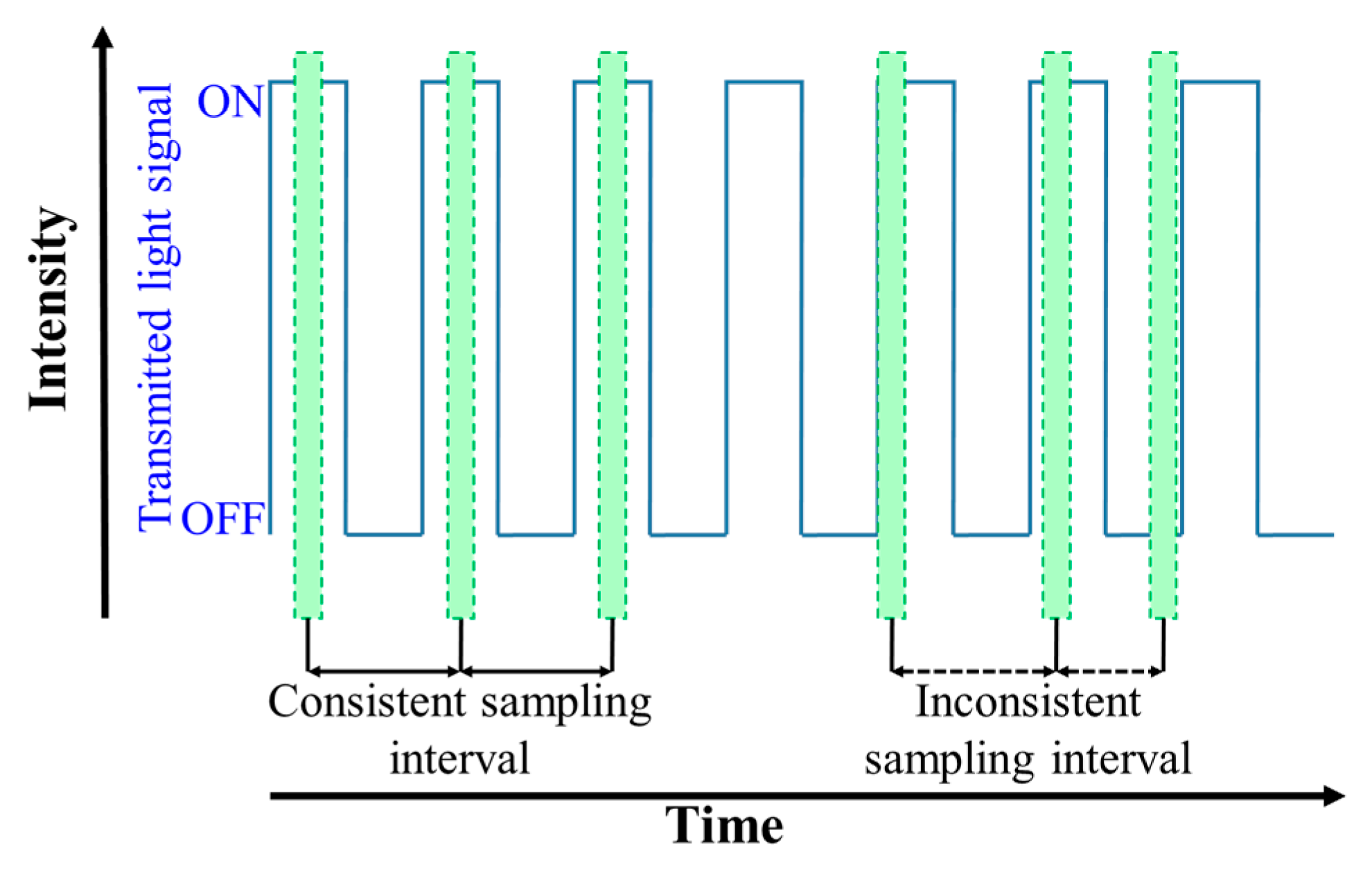

Since the switching rate of the LED is defined to be flicker free (i.e., >100 Hz), the undersampled frequency shift on-off keying (UFSOOK) scheme was proposed for RoI signaling [66,67]. The principle of undersampling is based on having the camera’s sampling rate (i.e., FR) to be lower than Rs of the TX [5,68]. At the RX end, the differentiation between bits ‘1’ and ‘0’ is based on the difference of the frequency of the transmitted light pulse as in FSK. The encoding is illustrated in Figure 18. The decoding is performed based on a series of several adjacent samples. UFSOOK allows the use of both RS and GS mechanisms. This compatibility of two shutter mechanisms is the key for practical UFSOOK.
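The undersampling principle can be illustrated with a small simulation. The sketch below is a simplified model, not the implementation of [66,67]: it assumes a 30 fps camera with instantaneous exposure, two frames per bit, and OOK frequencies of 120 Hz for bit '0' and 105 Hz for bit '1' (illustrative values chosen so that one frequency aliases to a steady level and the other to a blinking pattern at the frame rate). All names and constants are assumptions.

```python
FRAME_RATE = 30        # camera frame rate in fps (assumed)
FREQ_ZERO = 120        # assumed OOK frequency for bit 0, an integer multiple of FR
FREQ_ONE = 105         # assumed OOK frequency for bit 1, a half-integer multiple of FR
FRAMES_PER_BIT = 2     # two camera samples per bit, i.e., 0.5 bit/frame/LED


def led_state(freq_hz: float, t: float, phase: float = 0.25) -> int:
    """State of a 50% duty-cycle square wave (1 = on) at time t within the bit."""
    return 1 if (freq_hz * t + phase) % 1.0 < 0.5 else 0


def capture(bits):
    """Sample the flicker-free LED at the camera frame rate (instantaneous exposure)."""
    samples = []
    for bit in bits:
        freq = FREQ_ONE if bit else FREQ_ZERO
        for j in range(FRAMES_PER_BIT):
            samples.append(led_state(freq, j / FRAME_RATE))
    return samples


def decode(samples):
    """A bit is 1 when two adjacent samples differ (blinking), else 0 (steady)."""
    return [1 if samples[i] != samples[i + 1] else 0
            for i in range(0, len(samples) - 1, FRAMES_PER_BIT)]


if __name__ == "__main__":
    data = [0, 1, 1, 0, 1]
    print(decode(capture(data)) == data)  # True
```

Both frequencies are above 100 Hz, so the LED appears flicker free to the eye, while the camera sees either a steady or an alternating pair of samples.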

Figure 18.

UFSOOK encoding of a logic “0 1” bit pattern.

An enhanced version of the UFSOOK modulation was reported with the encoding performed on the phase shift rather than the frequency. It is known as undersampled phase shift on-off keying (UPSOOK) [68,69]. UPSOOK offers improved transmission efficiency of 1 bit/frame/LED, compared with only 0.5 bit/frame/LED for UFSOOK [68]. In [66], it was reported that the UPSOOK with dual LEDs with a designated mapping and framing method offers a data rate of 150 b/s over a transmission link span of longer than 10 m. Alternatively, undersampling for the low-rate data stream can also be performed spatially using a spatial modulation scheme, i.e., spatial 2-phase shift keying (S2-PSK), which was submitted to TG7m [14,64]. The capability of S2-PSK to demodulate with random sampling allows it to be used with time-variant frame rate cameras.

3.2.2. High Rate MIMO Data Stream

There are two proposed schemes for a high-rate MIMO in RoI-S, i.e., the twinkle variable pulse position modulation (VPPM) and hybrid spatial phase shift keying (HS-PSK) [5,67,70]. The former is a combination of UFSOOK and VPPM, wherein VPPM is achieved by changing the duty cycle of high rate pulses. The low-frequency UFSOOK signal is the amplitude envelope of the high-rate data signal, which is used for intensity modulation of the LED. The term “twinkle” in the twinkle VPPM stems from the LED lights being perceived as flashing rapidly on the RX [67]. On the other hand, HS-PSK is a combination of both S2-PSK and dimmable spatial multiple-PSK (DSM-PSK). Comparable to the twinkle VPPM, the amplitude modulated signal overlaps the S2-PSK signals with those generated by DSM-PSK (i.e., multiple VPPM signals with delays). DSM-PSK is also considered for the NIR at a wavelength of 850 nm [67].

3.2.3. Compatible Encoding Scheme in the Time Domain

A study adopted a multiple frame rate encoding scheme for OCC that enables data transmission through different shutter speeds, sampling rates, as well as resolutions and distances [71]. This scheme encodes a special data packet on the TX that transmits both data and clock information. In case of a frame rate drop on the receiver camera, the error can later be corrected using external codes [71].

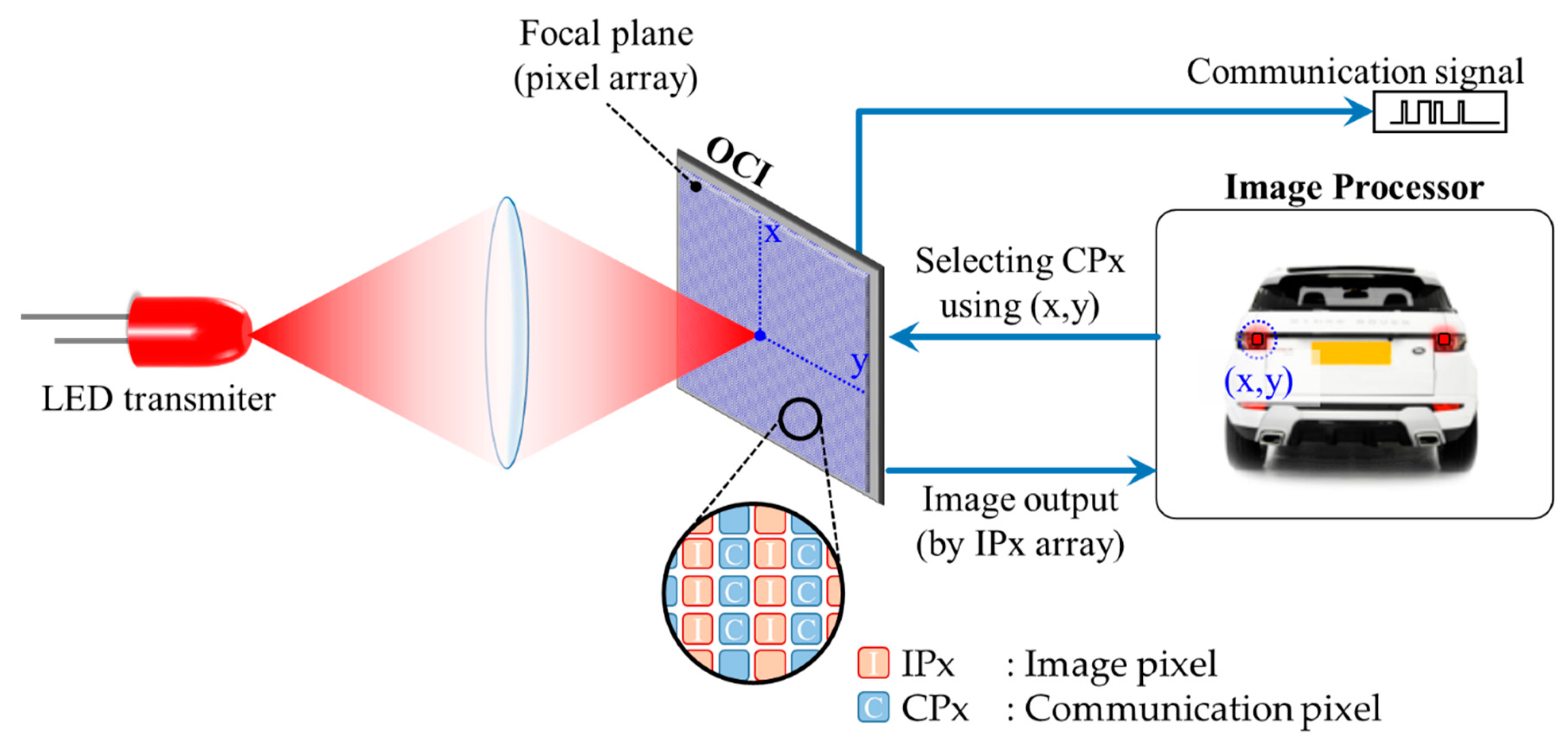

3.3. Hybrid Camera-PD Modulation

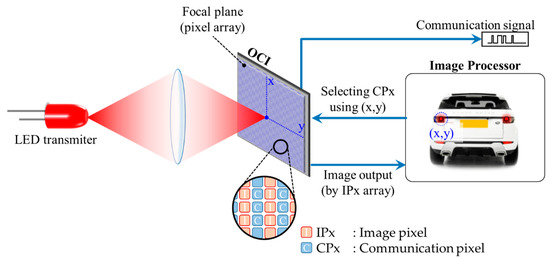

A hybrid camera-PD optical communication known as optical communication image sensor (OCI) has been proposed. The OCI employs an LED, camera and PD to provide a substantially improved response to light intensity variation compared with conventional imaging pixels [5,35,72,73]. In OCI, the detection of the light source from the image cells is performed prior to establishing a communication link and activating the PD cells. In addition, the detection of RoI is required; however, there is no RoI signaling and therefore a computer vision-based RoI algorithm is required. Figure 19 illustrates the operation, where a transmission range of 100 m was experimentally demonstrated. This range is considered exceptionally large for OCC and could provide a huge bandwidth of up to 10 MHz due to the use of PD cells [5,72]. Unfortunately, the hybrid camera-PD concept is not applicable to mobile devices in general, since OCI is still at the prototype stage at the time of writing and is not widely available. The advantage of OCI is the provision of a significantly higher SNR for real-time communications in both V2V and V2I outdoor OCC applications [72]. OCI is also envisioned for future indoor LiFi since it offers the benefit of spatial separation between OCC and VLC, while satisfying the 5G data rate requirement [5,14].

Figure 19.

Basic operation of IS-OWC system.

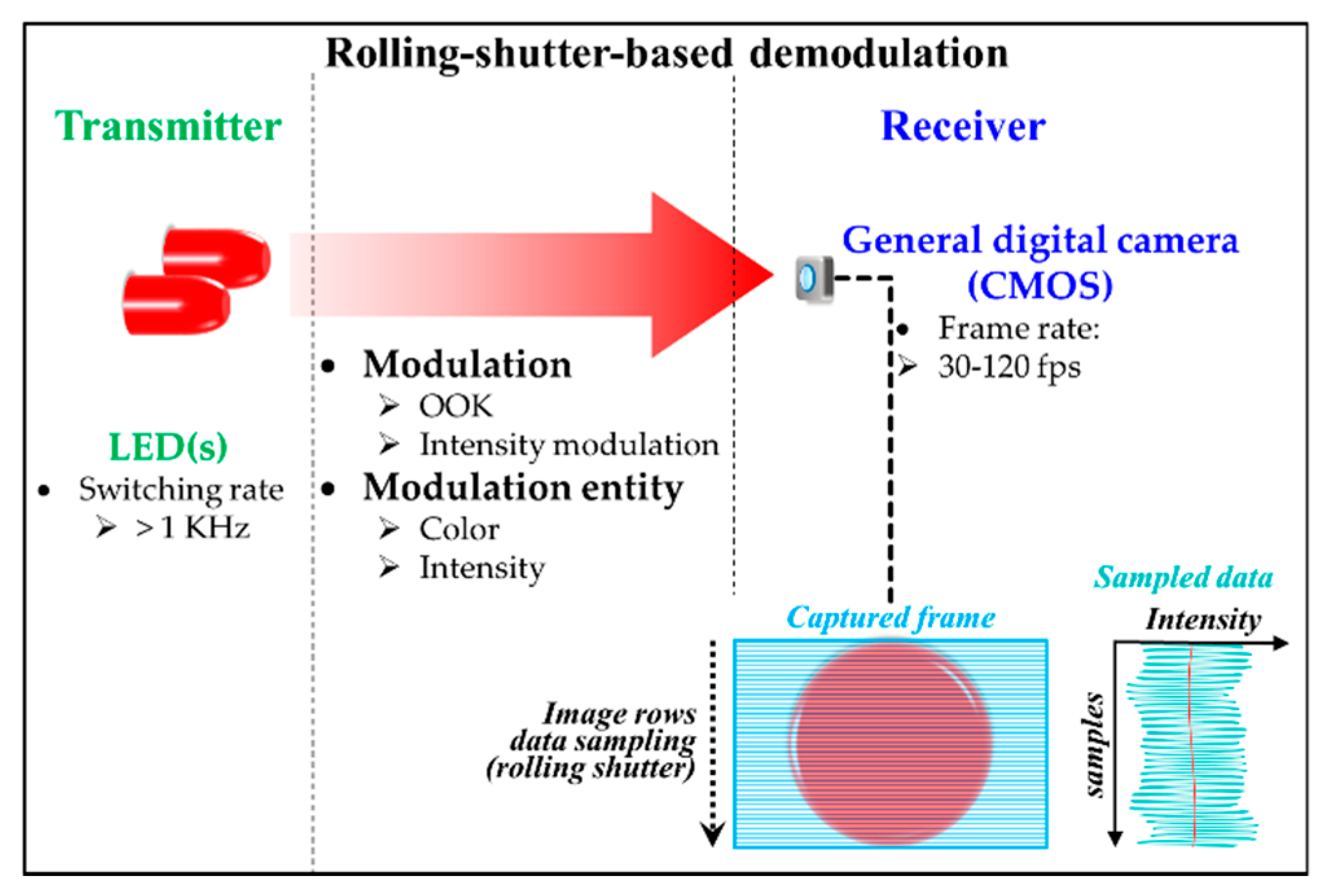

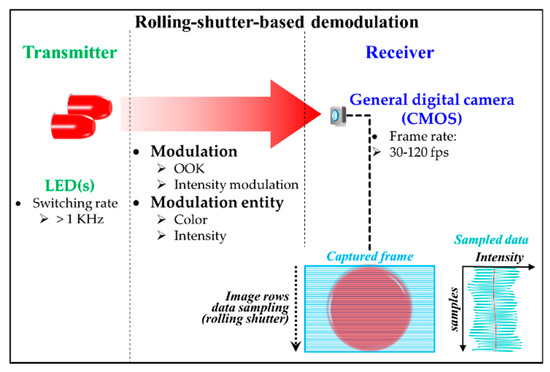

3.4. Rolling-Shutter-Based OCC

In order to increase the data throughput, a dedicated IS with a built-in PD (i.e., PIN) array was proposed in [59]. It is too complex to fabricate and not commercially available yet. However, by adopting the RS effect of a CMOS-based IS, it is possible to increase the data rate of OCC [5,24,74,75]. The RS-based OCC is the second most commonly used scheme following NSM, since the implementation is practical on any widely available CMOS-based camera. Moreover, the RS is also termed RS patterning demodulation due to the focus on the demodulation [76]. Figure 20 shows the RS process where the data is sampled sequentially by the pixel rows. In RS, the sampling rate is the product of FR and the number of pixel rows (i.e., vertical resolution). For example, with a 30-fps camera having a resolution of FHD (1920 × 1080 pixels), the RS samples each image row, thus yielding 1080 samples on each frame, i.e., 30 × 1080 samples per second. This leads to an effective rate of 32.4 Ksps. Therefore, in RS, the effective data rate depends on both the number of image rows (i.e., the camera’s vertical resolution) and FR. Note that in RS, the switching rate of the LED TXs could be 1 KHz or higher.
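The arithmetic above can be checked with a minimal Python sketch; the function name is illustrative only.

```python
def rs_sampling_rate_sps(frame_rate_fps: int, pixel_rows: int) -> int:
    """RS sampling rate: every pixel row of every frame is one sample."""
    return frame_rate_fps * pixel_rows


if __name__ == "__main__":
    # 30 fps camera with FHD resolution (1080 rows).
    print(rs_sampling_rate_sps(30, 1080))  # 32400 samples/s, i.e., 32.4 Ksps
```

This is an upper bound on the sampling rate; in practice only the rows that actually cover the light source contribute useful samples.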

Figure 20.

RS-based demodulation process.

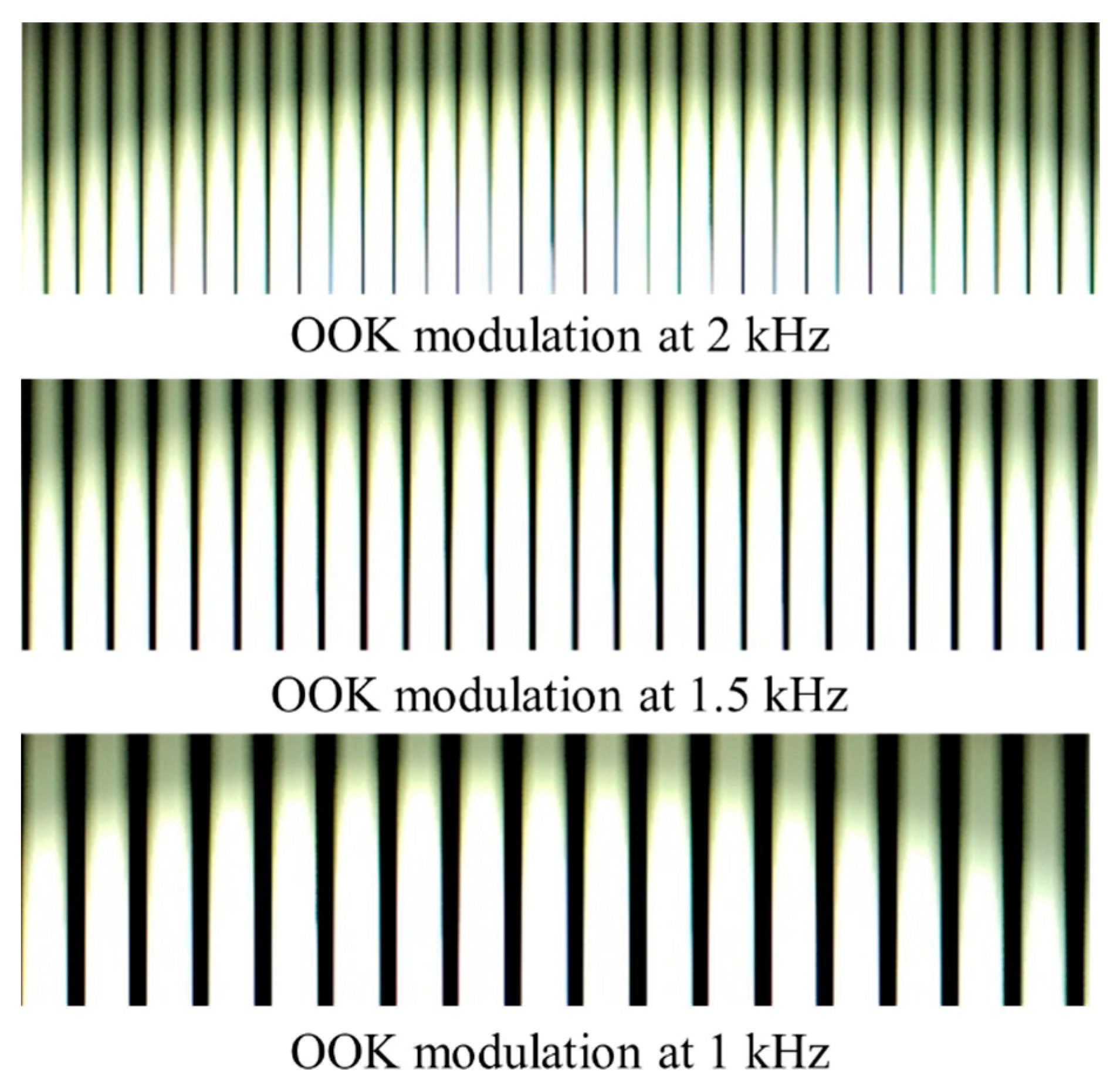

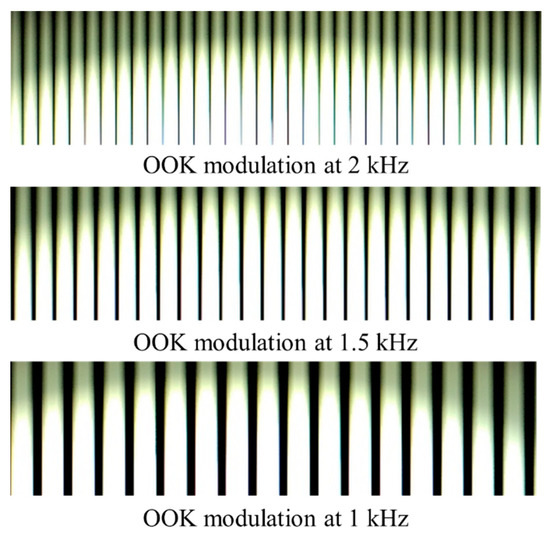

The width of the light intensity bands in the captured frame depends on the symbol rate of the LED TXs and the exposure period of the camera [17]. Adjusting the TX frequency therefore changes the width of the captured bands, with a higher frequency producing narrower bands, as illustrated in Figure 21.

Figure 21.

Captured frames of OOK modulation with different frequencies.

With respect to the operation of the CMOS-based camera, the number of samples (i.e., pixel rows) acquired from the captured image of the light source in RS is given as [5]:

where denotes the image width (i.e., horizontal resolution) and is the normalized length (i.e., the diameter) of the light source along the width of the image sensor. indicates the distance between the light source (TX) and the camera (RX). The number of samples acquired from an image determines the amount of information that an image can capture in RS.

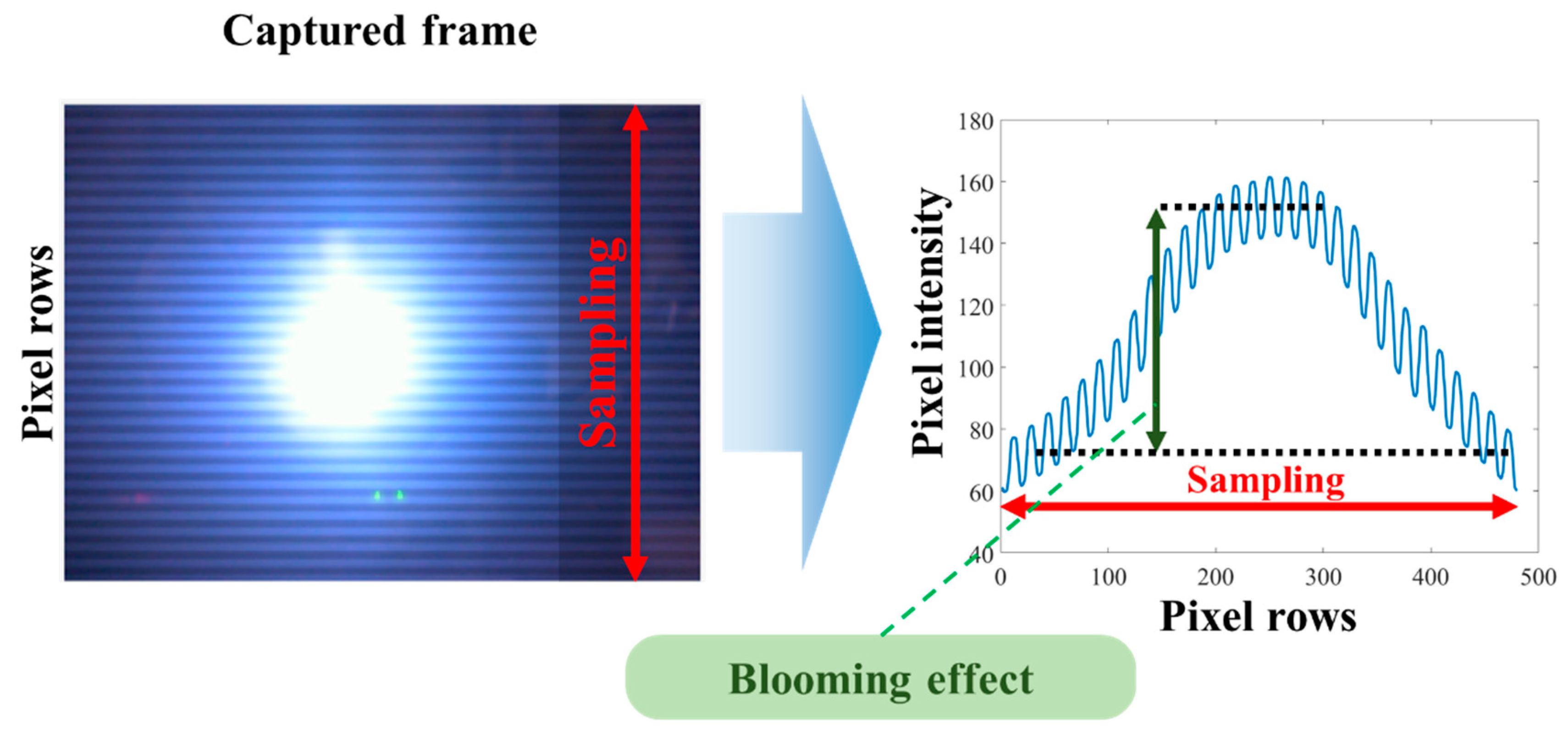

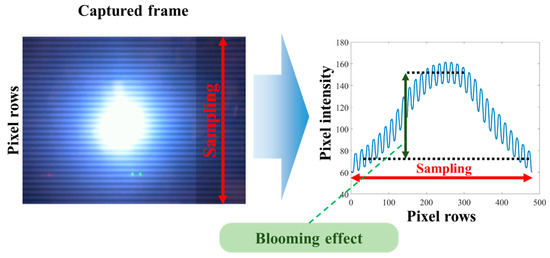

In RS, however, we have the following issues: (i) the blooming effect, which is the difference of light intensity between the area around the light source and the remaining frame area [23,74,77,78] and (ii) demodulation of the bright and dark fringes, which is challenging, due to its high data fluctuation and a large variation in the extinction ratio (ER), i.e., a significant blooming effect, see Figure 22. To mitigate the blooming effect, a suitable threshold scheme is required to level out the intensity across the captured frames so that an accurate binary threshold can be applied in order to demodulate the captured bit stream [74,79]. There are a number of image processing-based threshold schemes including 3rd order polynomial curve fitting, the iterative method [80], and quick adaptive method [81].

Figure 22.

Blooming effect on RS-OCC.

The quick adaptive thresholding utilizes a moving average calculation of grayscale values of pixels across the image rows [79,81]. The binary threshold is then set at the center row of the moving average results. The iterative method, on the other hand, divides the image rows into 24 sections and then applies an iterative operation within each section. The iterative operation determines an initial grayscale value for each section and then divides the section into two subsets prior to averaging using addition and division by 2. Thus, it yields the ultimate grayscale values that are employed in the binary thresholding scheme [79].
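The idea behind moving-average thresholding can be sketched as follows. This is a simplified illustration rather than the exact algorithm of [79,81]: it assumes the frame has already been reduced to one mean grayscale value per pixel row, and it compares each row with the average of a window centered on that row. The function name, the window length and the synthetic profile are assumptions.

```python
def moving_average_threshold(row_values, window):
    """Binarize a per-row grayscale profile against a centered moving average."""
    half = window // 2
    bits = []
    for i, value in enumerate(row_values):
        lo = max(0, i - half)
        hi = min(len(row_values), i + half + 1)
        local_mean = sum(row_values[lo:hi]) / (hi - lo)
        bits.append(1 if value > local_mean else 0)
    return bits


if __name__ == "__main__":
    # Synthetic profile: fringes 4 rows wide (+40 when the LED is on) on top of
    # a rising background that mimics uneven intensity caused by blooming.
    rows = [2 * i + (40 if (i // 4) % 2 == 0 else 0) for i in range(32)]
    expected = [1 if (i // 4) % 2 == 0 else 0 for i in range(32)]
    print(moving_average_threshold(rows, 9) == expected)  # True
```

In this synthetic profile the darkest bright row (value 40) is below the brightest dark row (value 62), so no single global threshold would separate the fringes, whereas the local average does.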

These binary thresholding schemes were investigated for the RS in [79]. Note that they were implemented at the post-processing phase following image capturing. It was shown that the iterative and quick adaptive threshold schemes were able to reduce the processing time by 97% and 6%, respectively, when compared with [75].

In [79], it was shown that the adaptive scheme offered the lowest bit error rate (BER) performance compared with the other two but at the cost of increased processing time (i.e., 7 ms). The 3rd order polynomial curve fitting offers the least processing time (i.e., 0.95 ms) for the same image processing hardware [79]. The variations of n-th order polynomial curve fitting and the iterative schemes are preferable in most RS-OCCs due to their lower processing times and less complex implementation [23,74,76,77,82].

It should be noted that the RS-based OCC has more limited transmission distance compared with the RoI-S, due to the dependence on the requirement that the TX needs to be captured by whole pixel rows [83]. The transmission data rate can be increased using a 2D LED array (i.e., spatial division multiplexing) [56]. However, decoding the captured 2D RS pattern using a CMOS IS-based camera is a challenging task due to the merger of the RS pattern produced by different LEDs in the array. Hence, there is a need for advanced signal processing in order to separate these patterns. It is important to note that decoding of the 2D RS pattern with reduced offset tolerance and flexibility requires capturing lights from all LEDs using the IS [82].

3.5. Performance Comparison of Modulation Schemes

NSM-based OCC schemes are mostly intended for short-range communications, i.e., several meters with a data rate of Kbps to Mbps. The RoI-S provides improved efficiency in image processing and thus yields higher data rates of at least tens of Kbps for use in outdoor applications such as V2V and V2I communications. In addition, an OCI sensor provides an enhanced version of RoI-S with a novel IS, which is a hybrid between IS and PD, thus potentially increasing the data rate up to Gbps for future applications similar to VLC. Finally, RS-based schemes utilize the inherent RS properties of the IS to multiply the sampling rate based on the number of pixel rows. Thus, RS is intended for indoor applications and can also comply with LED-based lighting as TXs. In this regard, RS yields a data rate of up to tens of Kbps. Table 3 lists a concise performance comparison of representative OCC schemes.

Table 3.

Performance comparison of representative OCC scheme.

OCI exhibits the highest data rate of 55 Mbps due to its higher sampling rate by using PD-based RXs (communication cells) [35]. It is interesting to note that both RoI-S and high-rate NSM offer a longer transmission distance of up to 30 m, which is evidently suitable for long-range V2V applications [60,64]. CeilingTalk provides the longest RS-OCC transmission distance of 5 m at the cost of a lower data rate of 1 Kbps due to the implementation of systematic raptor coding in multiple LED-based TXs [84].

4. Mobility, Coverage, and Interference

As discussed in previous sections, the low data rate of OCC (i.e., due to the limitations of the camera) is the main issue in applications where there are demands for higher transmission rates. However, OCC can be conceived as an affordable and viable short-range transmission technology in the emerging field of IoT, where a range of information from different sources can be transmitted and captured in a cost-effective manner. In other words, OCC is best suited to ubiquitous, low-rate tolerable and license-free data communications rather than conventional high-speed data transmission systems. For OCC to evolve further, several important issues need to be resolved including mobility, coverage and interference. Recently, extensive studies have been focused on addressing these issues. Some mitigation techniques are elaborated in this section.

4.1. Mobility and Coverage

Both mobility and coverage are important in OCC since mobile devices are rarely static in indoor and outdoor environments. Seemingly, a high-speed camera RX is a prerequisite to solve these issues [35,60,72]. The mobility issue is more pronounced in OCC-based V2V and V2I communications. A number of studies have shown that the performance of OCC can be enhanced without a high-speed camera [17,35,78,85,86,87]. To support user mobility, tracking the RX’s position is essential in mobile environments. In [78], a tracking scheme based on the region-grow algorithm for the fixed column matrix selection technique was reported. However, there are still a number of issues in this scheme: (i) captured images may not contain the TXs when the user moves; (ii) high computational complexity; (iii) system performance being affected by the link span between the TX and the mobile RX due to light intensity fluctuation; (iv) inter-symbol interference (ISI), i.e., each row of pixels suffers more from interference induced by neighboring pixels, compared with stationary OCCs.

Although mobility can involve the movement of both the TX and the RX, the mobility in OCC studies is largely evaluated from the camera perspective [17,78,86]. In this approach, the movement is calculated from the captured frames as well as the orientation and acceleration data provision from additional sensors in smartphone-based OCC systems. A straightforward approach was proposed through an asynchronous unidirectional scheme by employing an oversampling technique to sample more frames at the RX [85]. It is termed asynchronous since there is no uplink channel for synchronization. Note that a data-shower method without an uplink channel is based on this approach.

An enhanced RS-based OCC is proposed to mitigate signal degradation caused by translational and rotational motions [88]. The solutions are Row Grayscale Redistribution (RGR) scheme and Differential Grayscale Packet Selection (DGPS) scheme. These schemes work by redistributing the varying intensity (in grayscale values) between neighboring pixel rows to improve the demodulation of the binary thresholding employed in the RS-based OCC [88]. The study proved that these schemes can correctly identify the data packet captured at 100 cm at a data rate of 1.51 Kbit/s, while being under the edge-to-edge translational motion and random rotational motion.

The oversampling method has an optimum number of oversampling defined by Equation (15) [85], which provides redundant frames during data capturing as illustrated in Figure 23. With the RX having redundant frames for the same bit, it selects the best frame with less degradation in the image quality.

where pps denotes the number of pulses per second (i.e., TX switching rate) and k is a constant indicating an extra frame captured beyond the number of transmitted frames. (k − 1) is the number of transmitted pulses, which is also equal to the captured frame. For example, in Equation (15), if pps is set to 667, then the minimum fps of the camera should be at least 1000.
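Equation (15) is not reproduced in the text above, so the following Python sketch relies on an inferred reading: the camera must capture k frames for every (k − 1) transmitted pulses, i.e., fps ≥ k/(k − 1) × pps. This relation is an assumption that is consistent with the worked example (667 pps requiring roughly 1000 fps) when k = 3, as in the three-frame case of Figure 23; it should not be taken as the exact form given in [85].

```python
def min_camera_fps(pps: float, k: int) -> float:
    """Assumed oversampling rule: k captured frames per (k - 1) transmitted pulses."""
    if k < 2:
        raise ValueError("k must be at least 2")
    return pps * k / (k - 1)


if __name__ == "__main__":
    # Three captured frames for every two pulses, TX switching at 667 pulses/s.
    print(min_camera_fps(667, 3))  # 1000.5, i.e., roughly 1000 fps
```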

Figure 23.

Selecting two good frames among three adjacent captured frames.

Most OCC schemes are based on the LOS transmission mode due to the inherent nature of photography. However, reflected lights (i.e., non-LOS (NLOS) link) can also be captured using a camera, although it is not possible for RS-based OCC requiring only the image rows [44]. A unique NLOS OCC system for an indoor environment was investigated in [76]. The blooming effect [75] was mitigated using a background compensation algorithm combined with Bradley adaptive thresholding. Note that the blooming mitigation scheme can only work well when the TX is located at the center of captured images at the RX. The proposed NLOS OCC link achieved a total transmission distance of 1.5 m. Assuming that each frame was transmitted independently rather than as part of a complete video stream, the data rates (the net data per frame) were approximately 96 and 172 bits/frame over the link spans of 1.5 and 0.40 m, respectively. In [89], to avoid the blooming effect, a dynamic column matrix selection algorithm that selects the column matrix with a high extinction ratio was proposed for OCC with mobility, achieving a data throughput of 1.08 Kbps. The experimental results showed that the link performance degraded when increasing both the transmission span and the user’s speed.

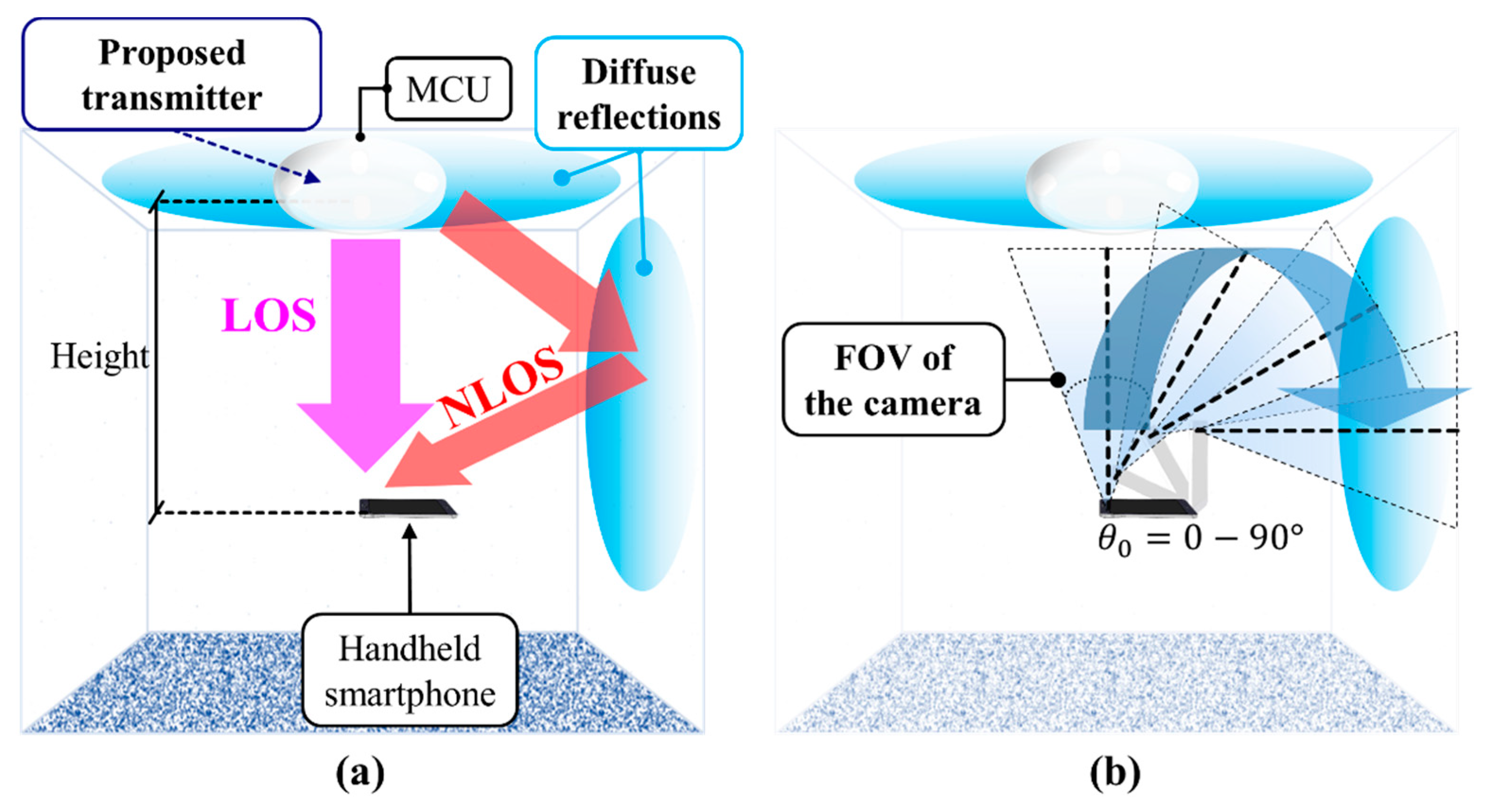

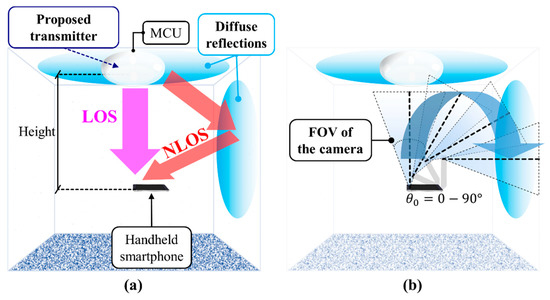

Another approach to address both the OCC coverage and mobility was reported, which used a wide RX orientation (WRO) for LOS and NLOS links simultaneously, as shown in Figure 24 [86]. WRO was proposed along with the design of the TX in order to distribute the illumination equally over a 120° irradiance angle, thus providing a wider coverage compared with existing OCC systems, albeit with a tradeoff in distance. Mobility is supported by utilizing a short exposure time, which successfully mitigated the need for the static RX. An adaptive blooming mitigation algorithm was employed to provide robust blooming mitigation for both LOS and NLOS based OCC systems [86].

Figure 24.

Wide RX orientation scheme: (a) providing both LOS and NLOS communication links; (b) wide RX orientation.

The WRO scheme provides a free orientation of 90°, confirmed on a small-scale indoor testbed. It provides maximum transmission distances of up to 50 and 141 cm for LOS and NLOS, respectively, and a data rate of 6.72 Kbps. The scheme was omnidirectional, i.e., it used reflections from all walls within the room, and mobility was demonstrated by means of a handheld RX, which was not strictly static, under a hand movement of up to 0.30 m/s. Similarly, higher mobility was further evaluated in the LOS RS-based OCC link for up to 0.80 m/s [89].

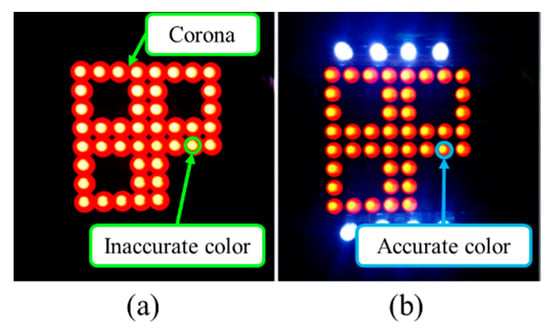

4.2. Interference Mitigation

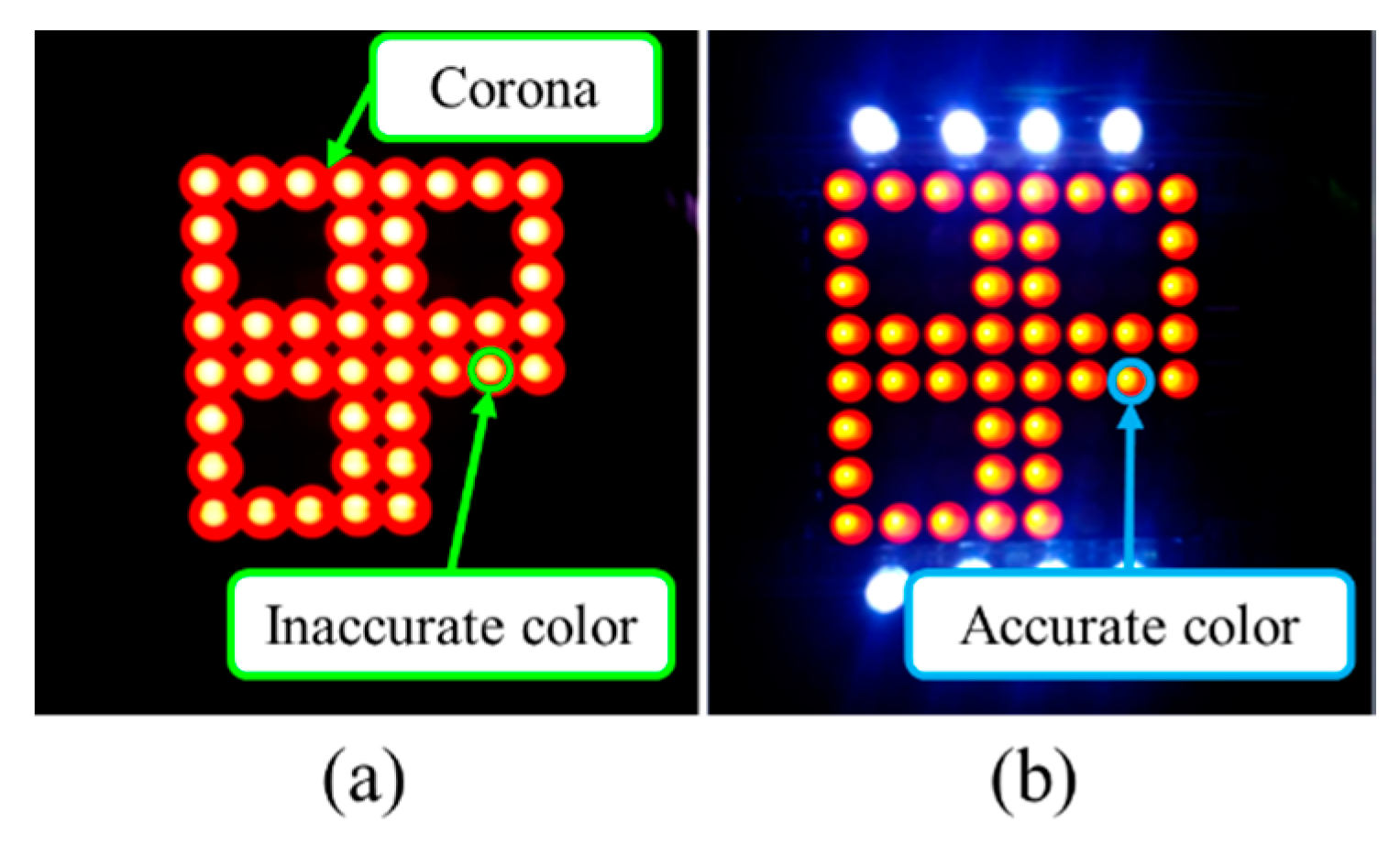

Another performance enhancement feature of OCC is the interference mitigation capability due to the versatility of the camera for capturing light over a wide spectral range. In OCC, one of the important interferences observed in the captured image is the inter-pixel interference (IPI) induced by adjacent TXs [17,22]. IPI could also be due to improper focusing when capturing flickering lights [22]. In general, the camera’s autofocusing feature is used prior to capturing the image [29]. The proposed IAC scheme includes dedicated illumination LEDs at the TX to mitigate the interference by providing fixed references for focusing the camera [5,22]. Most cameras have automatic focus and light metering functionalities. Since OCC utilizes flickering LEDs, these flickers might confuse the camera’s automatic focusing and light-metering function. The illumination LEDs are thus kept always on (no flickering) to serve as fixed references for auto focusing and light metering in the camera. As illustrated in Figure 25, IAC successfully minimizes the corona/blooming (inter-pixel interferences between the adjacent LEDs) around LEDs and provides more accurate color demodulation.

Figure 25.

Camera capture frame of IAC: (a) corona/blooming causing inter-pixel interference; (b) correct focus and accurate color interpretation [22].

Alternately, the IPI can be mitigated by using visual MIMO, specifically utilizing an image processing technique to extract the specific RoI prior to demodulation as illustrated in Figure 26 [17]. RoI is extracted by detecting the difference in intensity between the TXs and the background lights. Visual MIMO is primarily a throughput enhancement scheme in OCC. However, since the camera utilizes 2D spatial information, the visual MIMO can also be employed to classify and separate the transmitter lights (LEDs) and the interfering lights spatially through the captured frames, thereby providing interference mitigation. Focus is indeed one of the major causes of interference in a camera-based receiver, the solution for which is provided by IAC [22].
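The RoI extraction step described above can be sketched as a simple intensity comparison against the background. This is a minimal illustration under stated assumptions, not the algorithm of [17]: the frame is a grayscale 2D list, the background level is estimated as the median pixel value, and the margin is a hypothetical tuning parameter.

```python
from statistics import median


def extract_roi(frame, margin):
    """Bounding box (top, left, bottom, right) of pixels brighter than background + margin."""
    background = median(pixel for row in frame for pixel in row)
    bright = [(r, c)
              for r, row in enumerate(frame)
              for c, pixel in enumerate(row)
              if pixel - background > margin]
    if not bright:
        return None  # no TX visible in this frame
    rows = [r for r, _ in bright]
    cols = [c for _, c in bright]
    return (min(rows), min(cols), max(rows), max(cols))


if __name__ == "__main__":
    # 5 x 6 frame with a dim background (20) and a 2 x 2 bright TX region (200).
    frame = [[20] * 6 for _ in range(5)]
    for r, c in [(1, 2), (1, 3), (2, 2), (2, 3)]:
        frame[r][c] = 200
    print(extract_roi(frame, margin=50))  # (1, 2, 2, 3)
```

Only the pixels inside the returned box need to be demodulated, which is what reduces the influence of interfering lights elsewhere in the frame.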

Figure 26.

A visual MIMO scheme.

In addition, a tracking functionality is employed in visual MIMO to detect the movement of the TX, which is observable between frames.

Since OCC operation is generally bounded by both the LOS path and the limitations of cameras, there are several issues that need addressing: directionality, focusing, alignment and distance. In [90], a pixelated MIMO scheme with a TX (an array of LEDs) fitted with a collimating lens was experimentally investigated for transmission in the angular domain rather than in the space domain. A series of time-varying coded (i.e., Raptor code) images were captured and decoded using a camera, which was focused at infinity, thus achieving a throughput of 30 bit/frame at a link span of 1 m. In addition, this scheme is robust against misalignment, camera focus and orientation.

It is evident that additional optical filters can be used to reduce the interference, which resides outside the modulated color and intensity of the light [17,56]. However, including additional filters will make the camera bulky, therefore making the OCC system impractical. An alternative interference mitigation scheme would be to shorten the camera’s exposure time, thus capturing a reduced amount of light from surroundings, while focusing mainly on the light from the TXs [86,91].

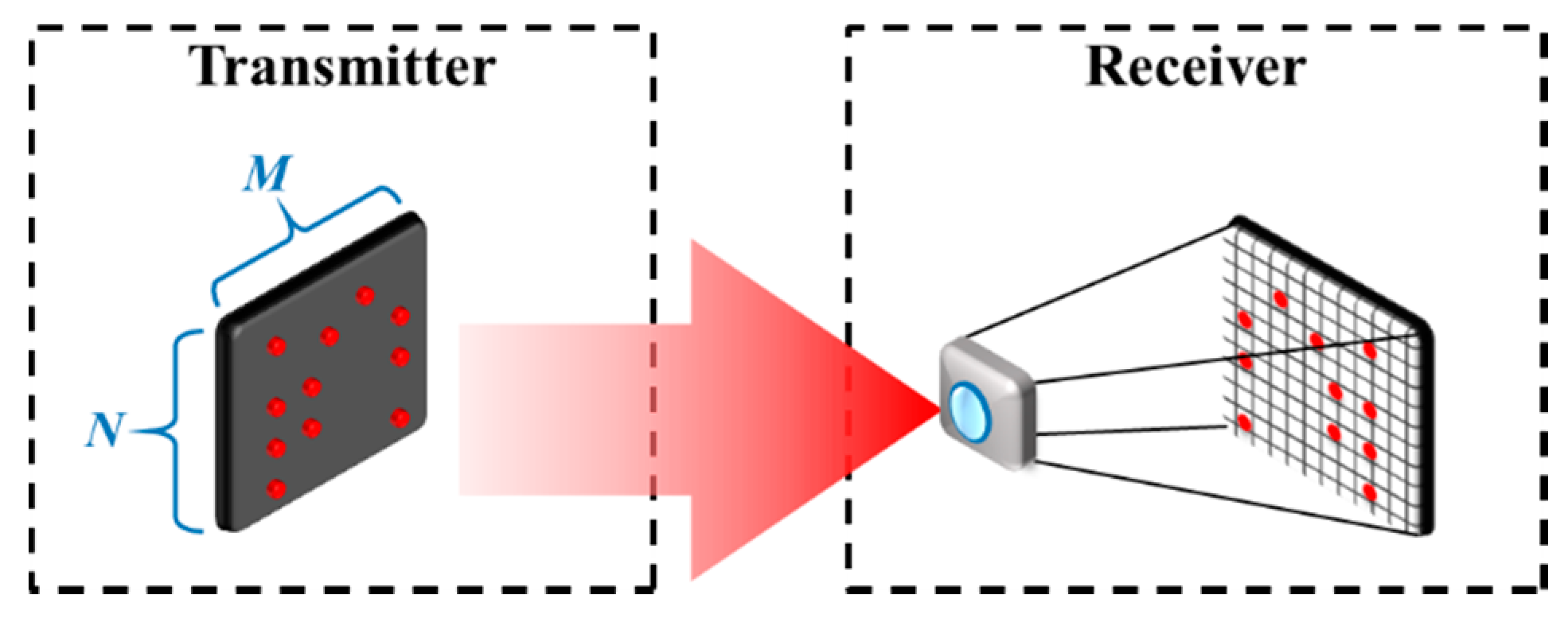

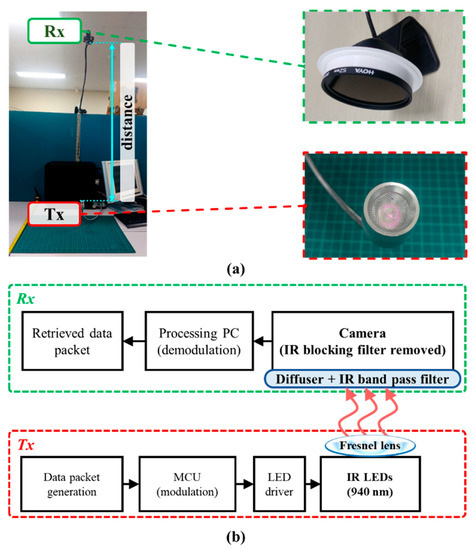

In [91], as illustrated in Figure 27, an interference mitigation scheme was proposed for IR-based OCC (IOCC) operating at the NIR spectrum band, more specifically at a wavelength of 940 nm. Although all cameras can capture NIR lights, the IR-blocking filter attached to the IS reduces the sensitivity at the NIR light. In IOCC, it is customary that the IR blocking filter is replaced with a diffuser in order to increase its robustness for capturing from IR LEDs and thus reducing the interference from other sources [91].

Figure 27.

IOCC: (a) experiment setup; (b) block diagram [91].

In OCC, IOCC was used as an uplink to provide a data rate of 6.72 Kbps over a link distance of 100 cm using IR LEDs with a total rated transmit power of 600 mW. Note that the achieved transmission distance is relatively longer compared with other RS-OCC schemes, due mainly to the effectiveness of mitigating the visible light induced interference. Note that in daytime outdoor applications, sunlight is considered the main interference for OCC schemes [35]. The use of the RoI technique (Visual MIMO) is mostly utilized in outdoor OCC schemes. The 2D captured images from the camera are effective to classify and separate the TXs spatially from the sunlight, thus improving the SNR [17,35]. The employment of additional polarizer would further improve the signal intensity in outdoor OCC applications, especially during daytime. Alternatively, outdoor OCC applications at night can be provided using an NIR light TX such as IOCC. It is applicable at night since NIR is unobtrusive to the human eyes and there is no sunlight that emits strong IR interference.

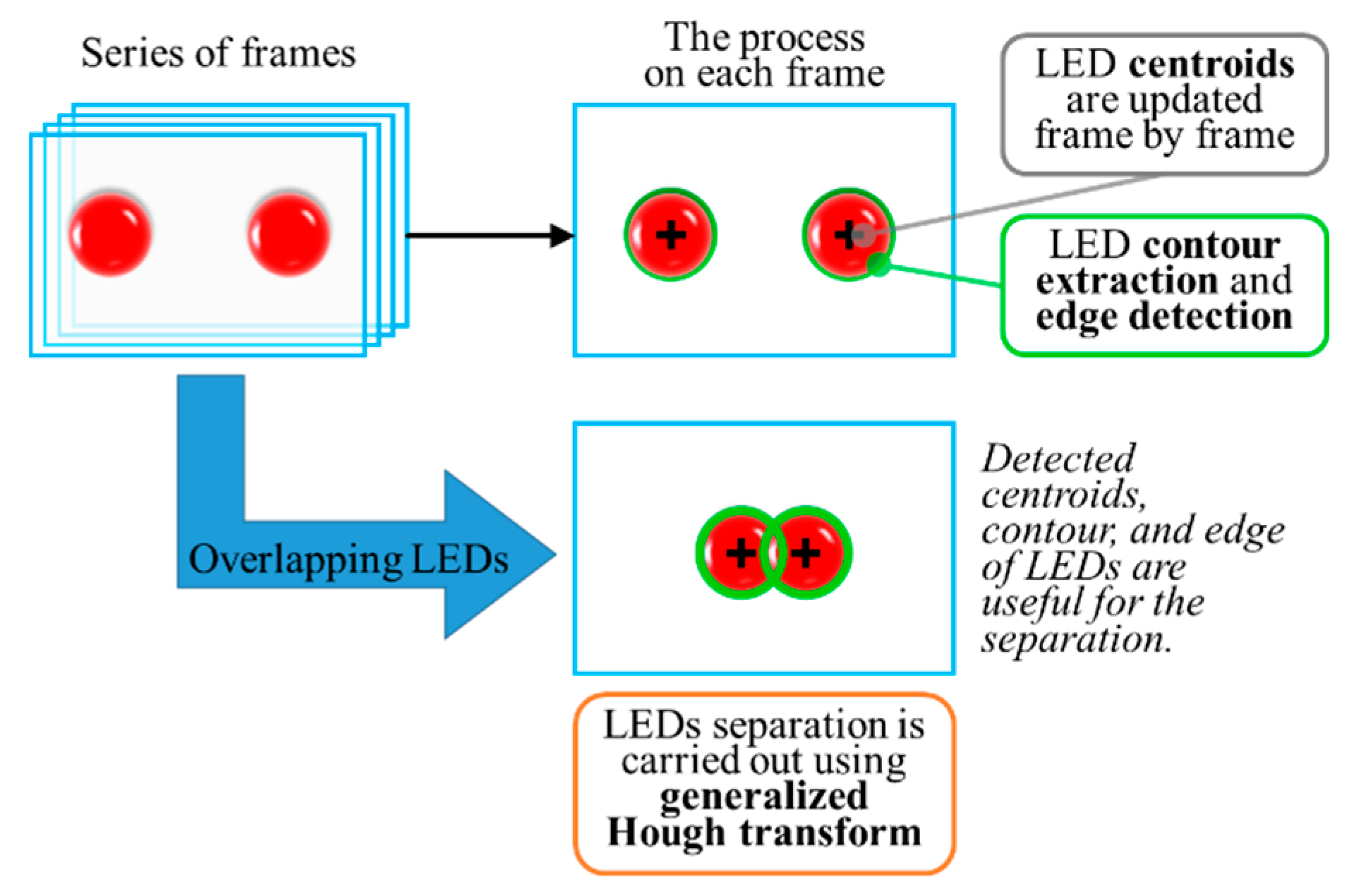

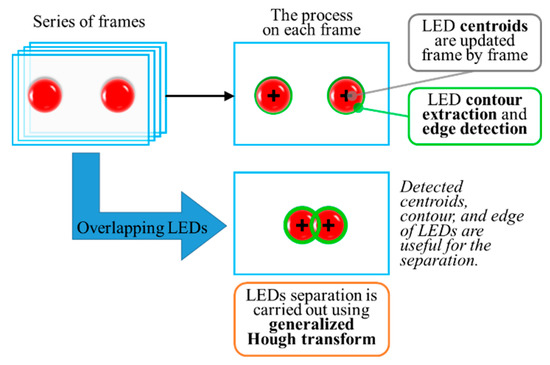

In addition, moving transmitters, generally in vehicular outdoor OCCs, present a unique interference of overlapping LEDs. The solution was proposed by tracking the movement of LEDs on each successive frame [92]. To separate the overlapping LEDs, the proposed method uses a series of consecutive frames as the input to track the movement of each LED based on their centroids (center of circle), as illustrated in Figure 28.
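Centroid-based tracking across consecutive frames can be sketched as follows. This is a simplified illustration and not the detection algorithm of [92]: it assumes each LED has already been segmented into a set of pixel coordinates, and it associates LEDs between frames with a greedy nearest-centroid match. The function names and the LED labels are hypothetical.

```python
import math


def centroid(pixels):
    """Mean (row, column) position of the pixels belonging to one LED."""
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return (sum(rows) / len(rows), sum(cols) / len(cols))


def match_leds(previous, current):
    """Map each tracked LED id to the index of the nearest unclaimed current centroid."""
    remaining = set(range(len(current)))
    assignment = {}
    for led_id in sorted(previous):
        if not remaining:
            break
        best = min(remaining, key=lambda i: math.dist(previous[led_id], current[i]))
        assignment[led_id] = best
        remaining.remove(best)
    return assignment


if __name__ == "__main__":
    previous = {"A": (10.0, 10.0), "B": (10.0, 30.0)}
    current = [(11.0, 29.0), (10.0, 12.0)]   # detections in the next frame
    print(match_leds(previous, current))     # {'A': 1, 'B': 0}
```

Keeping the identity of each LED from frame to frame is what allows two LEDs to be told apart again after their images have overlapped.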

Figure 28.

Detection algorithm for overlapping LEDs [92].

5. Applications

OCC is deemed pragmatic due to the use of existing camera-based RXs that are widely available in smart devices. This versatility of the camera-based RX allows OCC for a range of applications in indoor and outdoor environments [25]. As outlined in the IEEE 802.15.7m services, various OCC applications are supported by PHY IV, V, and VI [14] for RoI-S, RS OCC, and NSM OCC, respectively.

5.1. Indoor Schemes

Indoor OCC schemes are the most prominent, as the majority of the intended applications among the use cases listed in the standardization are for indoor environments [25]. The use cases for indoor schemes include 2D LED color code [43,51,52,55], D2D as well as relay applications [37,38], digital signage [12,52,93], exhibition or store services [23,49,84,94,95] and in-flight services [49,52]. A combined version of these use cases is proposed for a more complex system such as smart factories, smart offices, and smart homes as part of the 4th Industrial Revolution solutions [25]. Several applications of OCC are described in this section to highlight the versatility of indoor OCC systems.

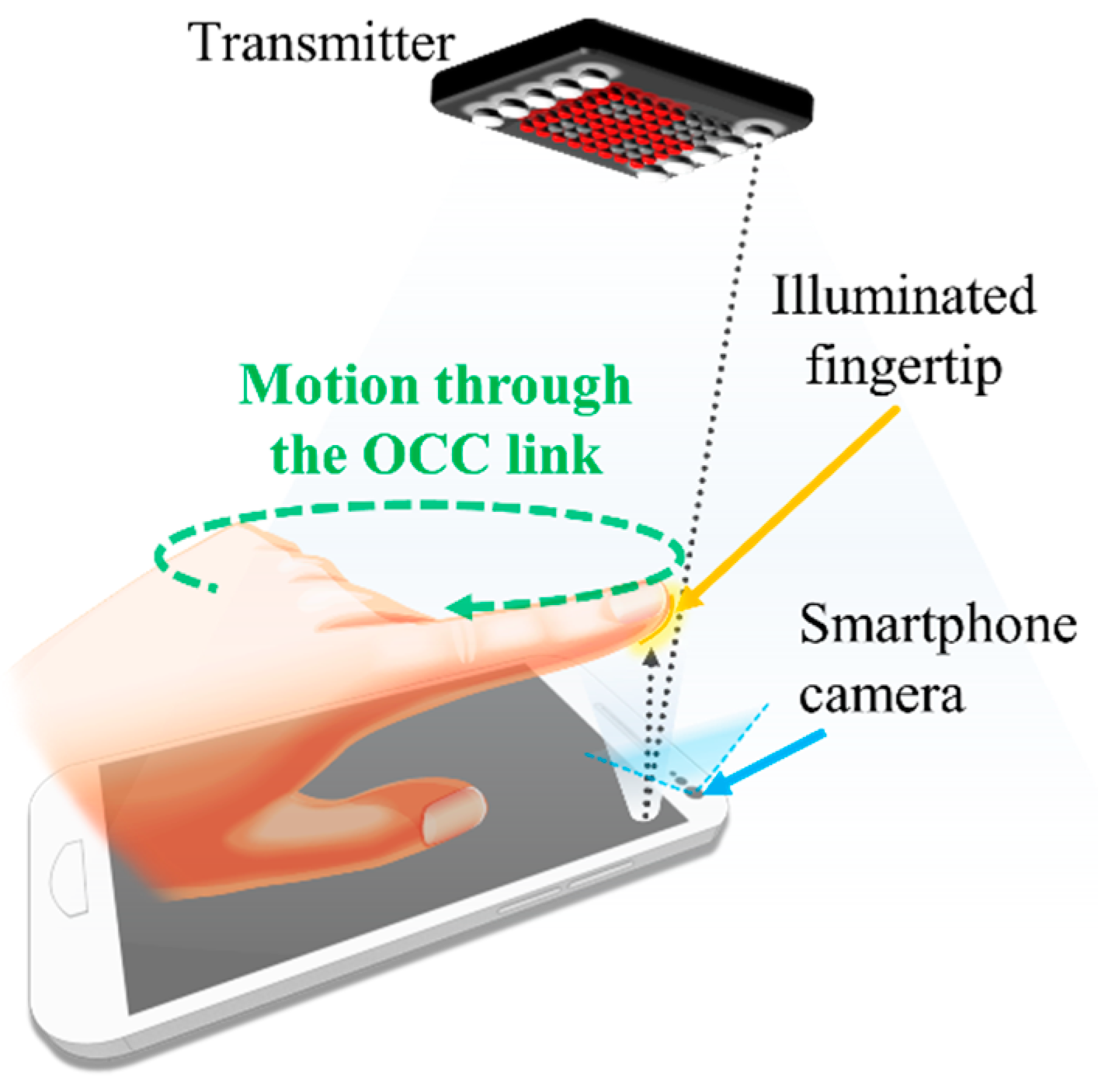

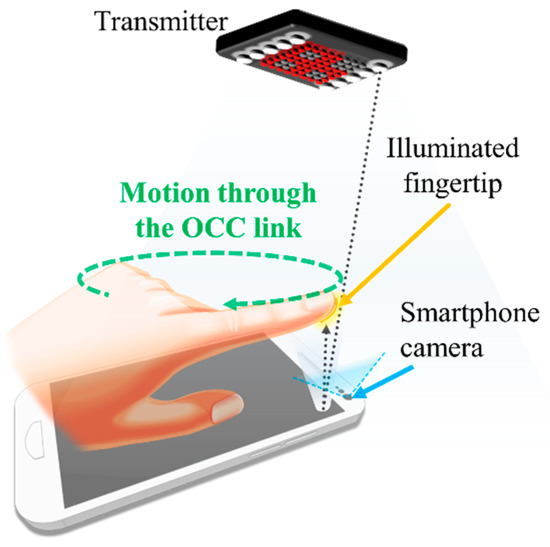

An OCC combined with motion detection was proposed in the framework of motion over camera. Figure 29 shows its concept [96]. The main principle behind this concept is based on the nature of image capturing at the RX, where the differences between adjacent frames can be used to detect the object motion, such as a pointing finger. The motion detection works in conjunction with the OCC through RoI selection, which can further be enhanced using a highly accurate NN-based motion detection scheme [97]. Note that the motion detection scheme is envisioned as an additional control function in OCC for use in smart homes and offices.

Figure 29.

A motion over camera scheme.

While most OCC studies have focused on unidirectional links, a bidirectional OCC utilizing RS was proposed with the camera-based RX for the uplink and the solar-cell-based RX for the downlink for IoT applications [98]. Note that the solar cell was used for a low-light requirement (i.e., as low as 100 lux of illuminance) for the downlink.

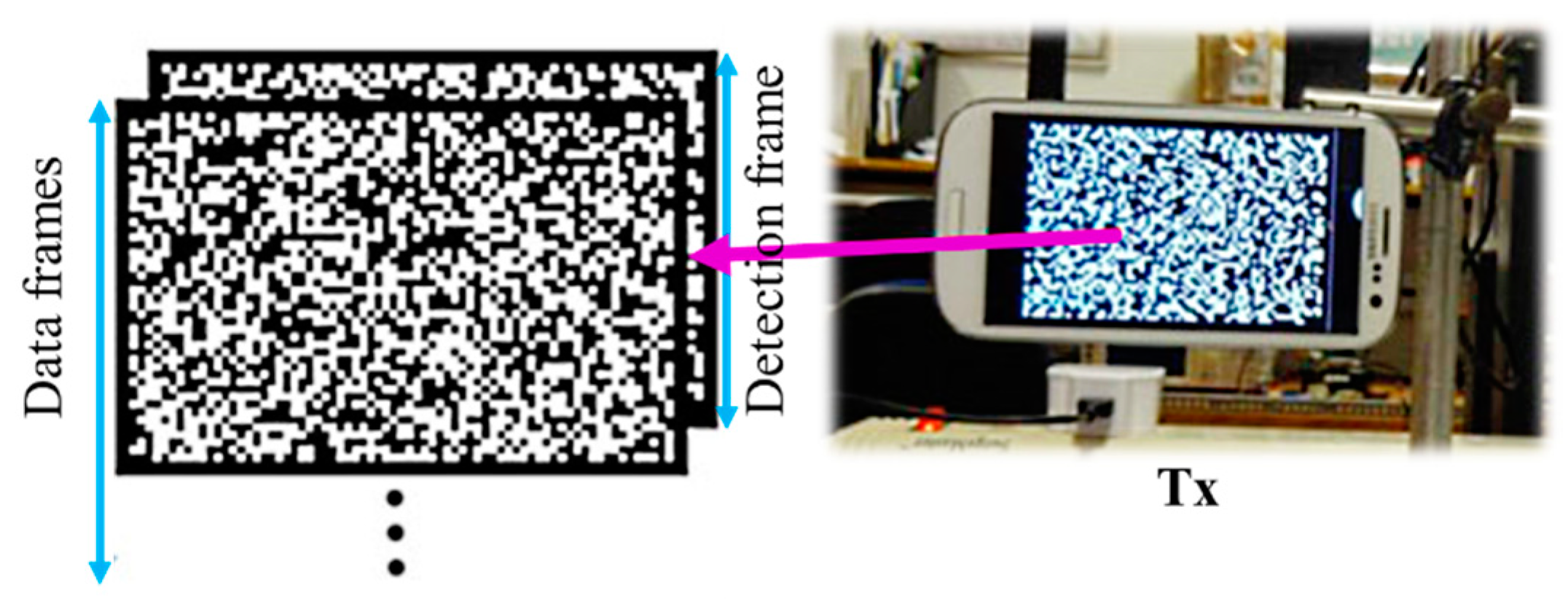

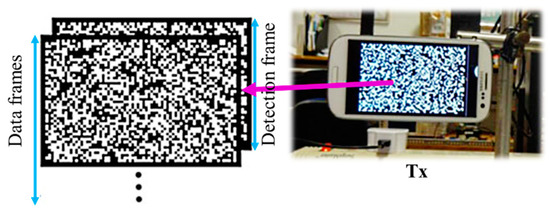

One of the most attractive OCC applications can be in D2D communications employing NSM [37,38,43]. In [38], illustrated in Figure 30, a smartphone VLC (SVLC) link employing a camera-based RX for short-range D2D communications was investigated. In this scheme, the RX captured the data from the screen of the TX and used a robust algorithm for detection. It applied projective transformation to accurately eliminate perspective distortions caused by the displacement of the devices with a 98% success rate. Similar to a dynamic 2D barcode, SVLC offers the possibility of transferring a significant amount of data by simply displaying it on the screen of a smart device. A data rate of 112.5 Kbps was achieved with only single-color modulation. SVLC has the potential of delivering a higher data rate of ~300 Kbps using RGB-based modulation. Despite its limited transmission distance of 15 cm, SVLC is preferable for D2D-based financial transactions.

Figure 30.

A screen-based TX for SVLC [38].
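To illustrate how a projective transformation removes perspective distortion, the sketch below estimates a 3 × 3 homography from four corner correspondences and maps captured points back to an upright screen. It uses the standard four-point direct solution and is not necessarily the algorithm of [38]; the function names and the corner coordinates are assumptions made for the example.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src, dst):
    """Estimate the projective transform mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h, x, y):
    """Apply the homography h to the point (x, y)."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Assumed data: screen corners as seen by a tilted camera, and the upright target square.
captured = [(12, 8), (95, 15), (90, 88), (5, 80)]
upright = [(0, 0), (100, 0), (100, 100), (0, 100)]

h = homography(captured, upright)
rectified = [warp_point(h, x, y) for x, y in captured]
```

In a practical receiver the four corners would first have to be detected in the captured frame, and every pixel of the screen region, not only the corners, would be resampled through the same transform.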

An interesting smartphone-based scheme for D2D using NSM was proposed in [37], which used shape modulation to increase the amount of data modulated on each frame. Owing to the high-density modulation on each frame, the achieved data rate was 2.66 Mbps at a transmission distance of 20 cm. Note that there is a tradeoff between the distance and the data rate, i.e., increasing the distance to 200 cm results in a reduced data rate of 15.38 bps [93]. The scheme was designed to support the uplink transmission of a more complex OCC system, such as an exhibition service or digital signage. The system is robust, with an uplink failure rate of 10% [93,94].

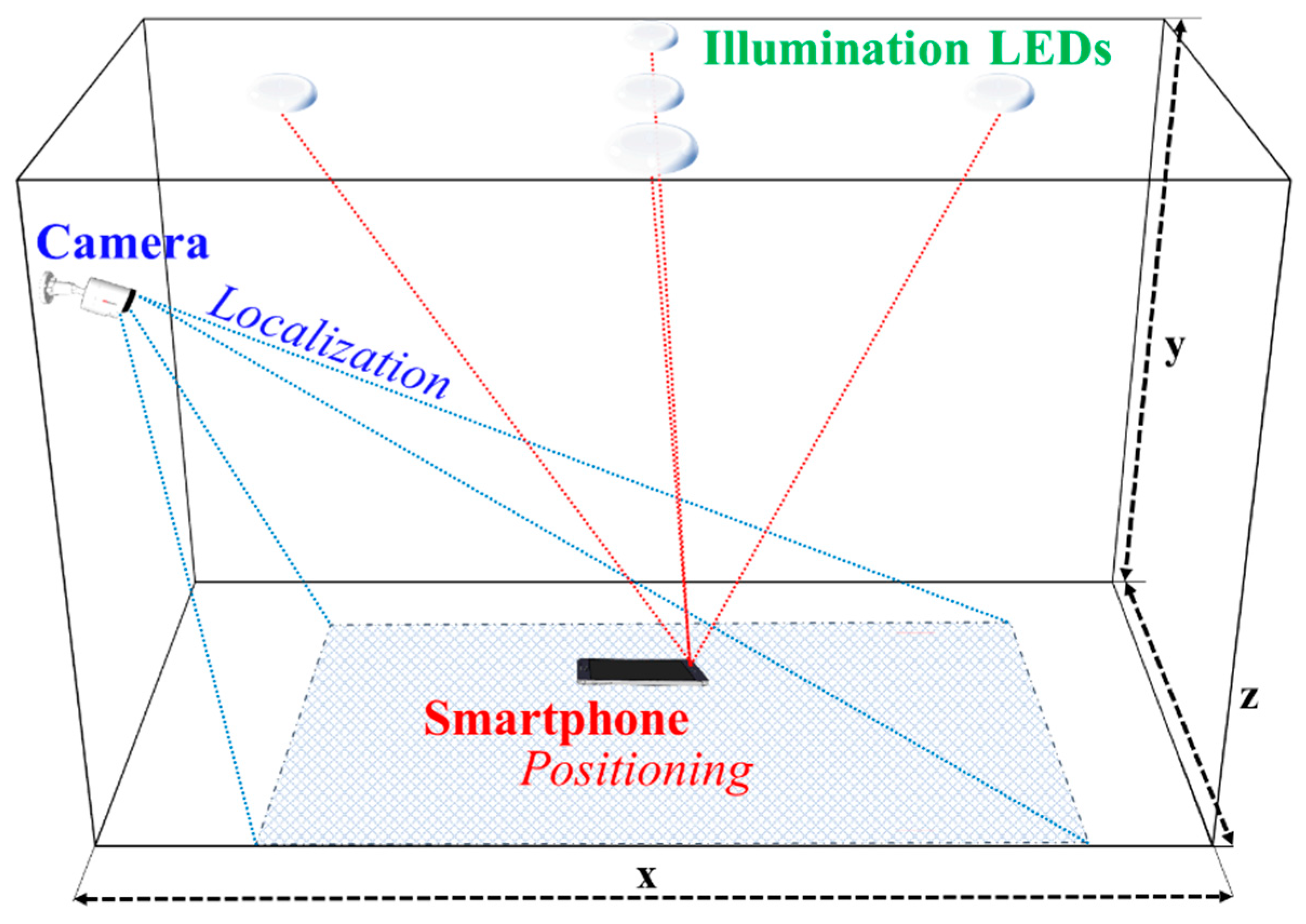

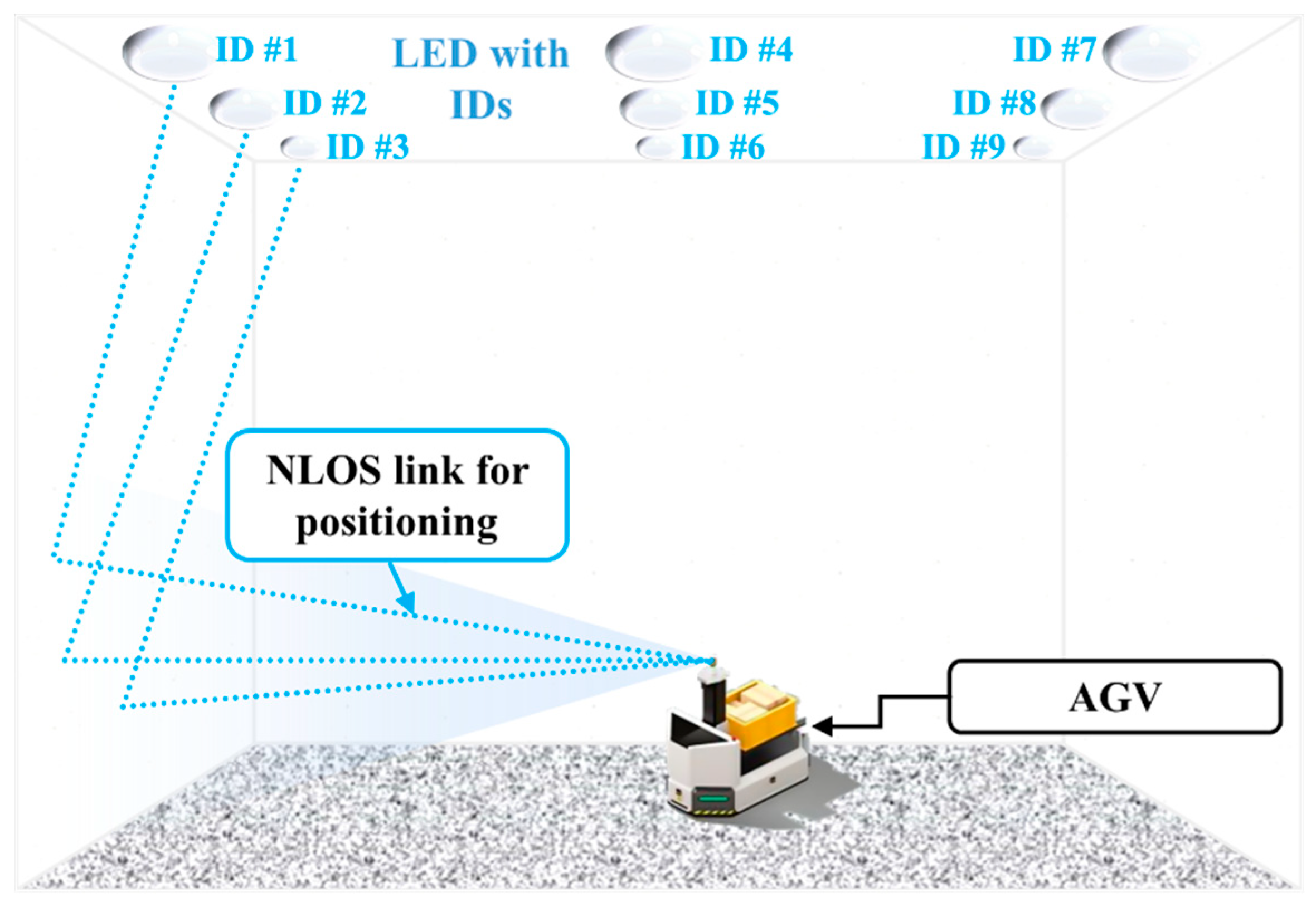

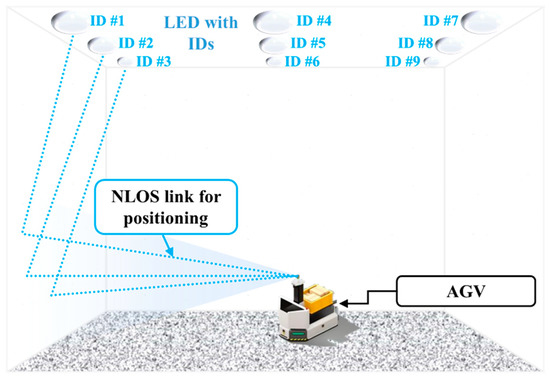

In recent years, we have seen a growing level of interest in indoor positioning systems (IPSs). Currently, there are a number of IPSs such as radio frequency identification (RFID), ultra-wideband (UWB), and Wi-Fi [99,100,101]. However, these schemes suffer from RF electromagnetic interference and are costly. Both cameras and PDs can be used as the RX to detect intensity-modulated light signals from LEDs. OCC-based IPSs have been extensively investigated for IoT applications. The OCC-based IPS for 2D and 3D positioning offers a number of advantages, including high positioning accuracy (i.e., less than centimeters), license-free operation, no RF electromagnetic interference and low cost, since it uses the 2D information from the camera-based RX [68,95,102,103,104,105,106,107,108,109]. The OCC-based positioning scheme is also deemed more practical since it can be implemented directly on the smartphones’ front cameras [105,107].

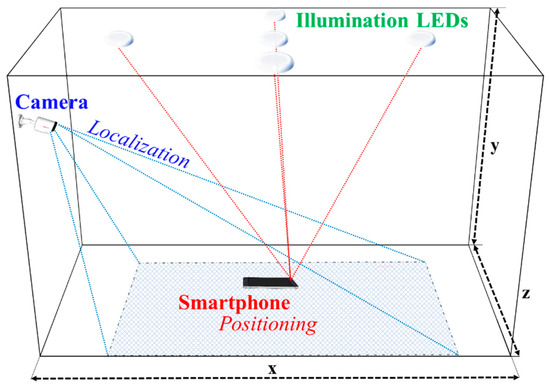

In the camera-based IPS, the RX’s position is determined based on the coordinates of LEDs and the captured image [14,15,16]. There are various algorithms for implementing IPS in OCC, which are classified into two categories based on the reference for positioning, see Figure 31. The illumination LEDs broadcast the references in the form of fixed coordinates relative to the dimension of the room/indoor space [105]. In the first IPS scheme, the smartphone camera acting as the user determines its own position based on the references set by the illumination LEDs. In the second type of IPS scheme, a camera is used as the reference, which actively looks for moving users to perform localization for each user [91,104].

Figure 31.

Indoor positioning schemes for OCC.

The most popular localization algorithm adopted in IPS is the angle-of-arrival (AOA), which calculates the user’s position based on the projected lights from three or more LED TXs [11,102,105]. The AOA is a highly regarded IPS scheme in OCC, since it calculates both 3D position and the orientation of the camera. The optical AOA is commonly employed in OCC with a minimum of three TXs [105].

In some IPS schemes, a dual-camera implementation was used for improved accuracy, including with the NIR medium, which is considered safer for human eyes [91,104,106,110]. Machine learning has also been widely used to solve a range of problems in various applications such as data mining, pattern recognition, medical imaging, artificial intelligence, etc. In addition, NN is utilized to increase the accuracy of single-camera positioning by training the variants of pitch, roll, and yaw at the coordinate of the camera [95]. In [111], an OCC-based IPS using NN was reported, where LEDs were grouped into blocks and the block coordinate was encoded on a single LED per block. A camera was used to decode the block coordinate to estimate the RX’s position using a trained back-propagation NN. The experimental results showed that the mean positioning error, which is defined as the distance between the reference and estimated points, was 1.4 cm.
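The mean positioning error metric defined above can be computed as in the following sketch. The function name and the sample coordinates are illustrative assumptions; they are not measurements from [111].

```python
import math


def mean_positioning_error(reference_points, estimated_points):
    """Average Euclidean distance between reference and estimated positions."""
    if not reference_points or len(reference_points) != len(estimated_points):
        raise ValueError("need two non-empty point lists of equal length")
    distances = [math.dist(r, e) for r, e in zip(reference_points, estimated_points)]
    return sum(distances) / len(distances)


# Assumed test grid (cm) and the positions returned by some estimator.
reference = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
estimated = [(3.0, 4.0), (100.0, 5.0), (94.0, 92.0)]

error_cm = mean_positioning_error(reference, estimated)  # individual errors: 5, 5 and 10 cm
```

The same function works for 3D positioning because `math.dist` accepts points of any equal dimension.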

Philips Lighting launched an OCC-based IPS, which works in conjunction with the Bluetooth low energy concept [112]. It showed the practicality of OCC as a complementary wireless IPS to be adopted in IoT and, more specifically, in indoor environments for commercial purposes. It should be noted that the OCC solution [113] cannot be used over long periods in the manner of a wireless sensor network, since the average power consumption of OCC-capable devices is approximately 3.5 W [113,114].

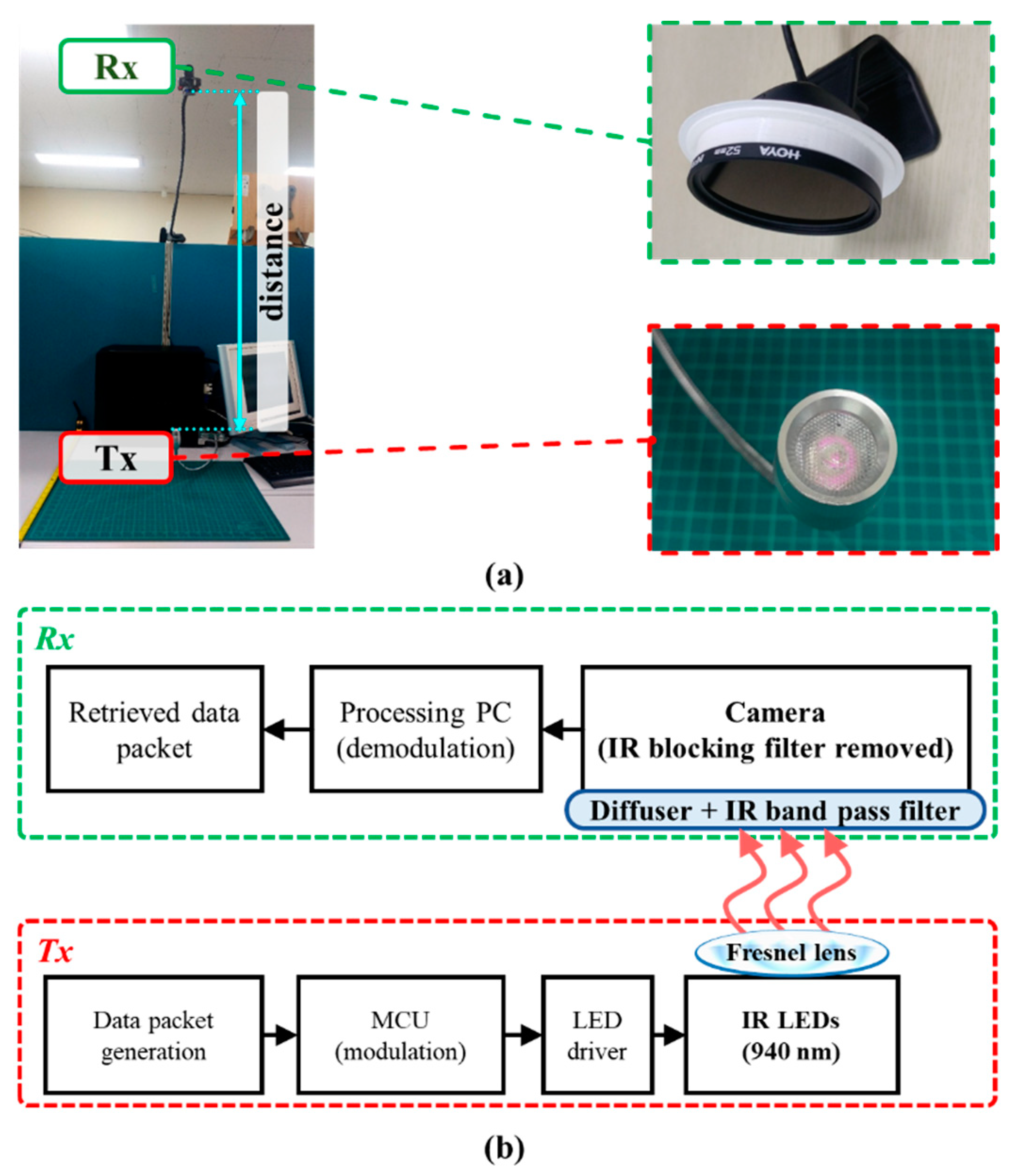

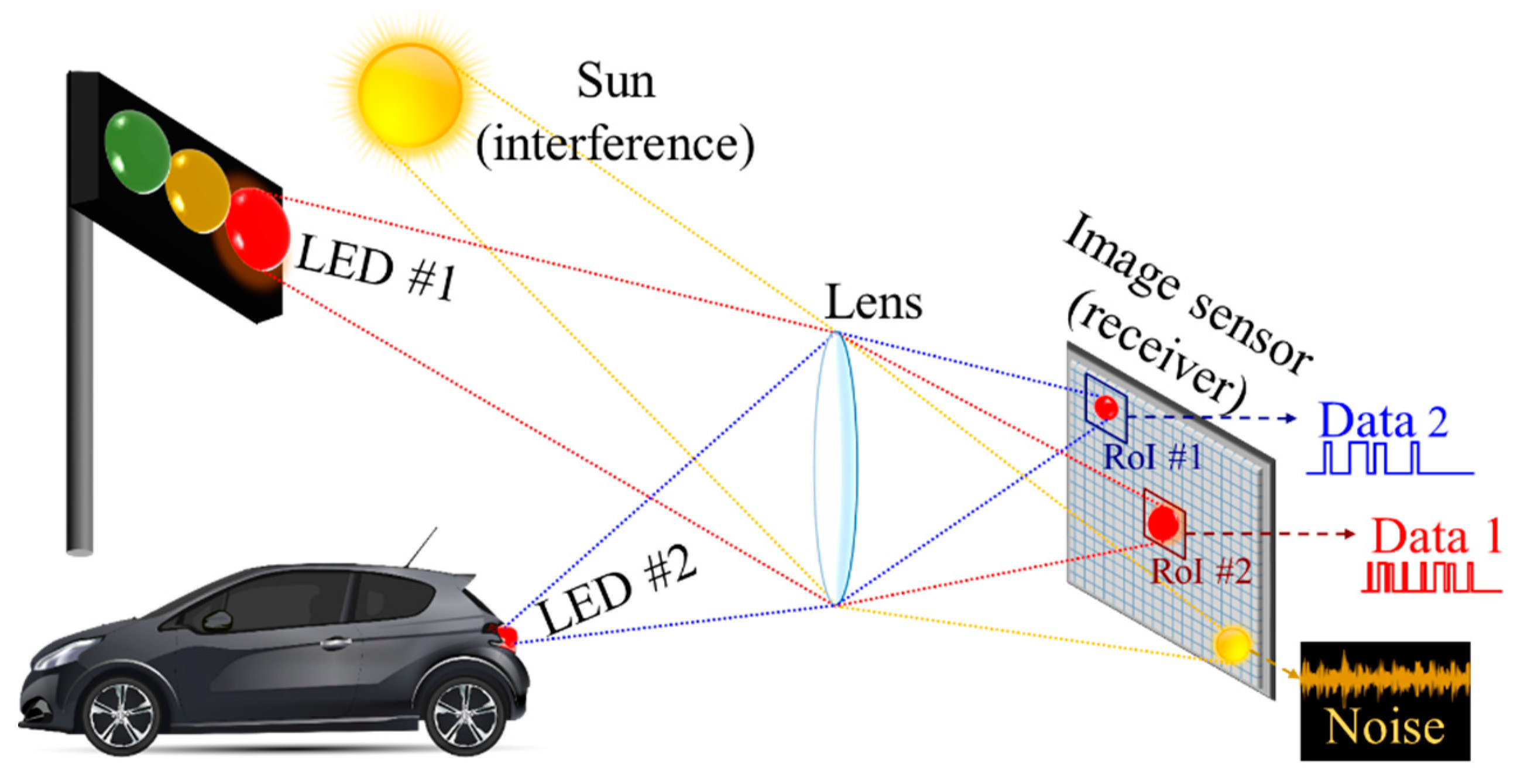

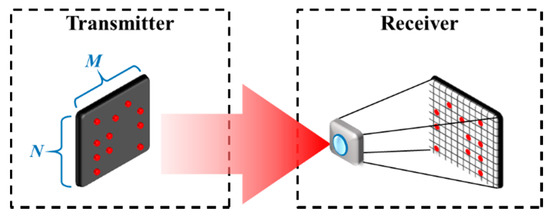

5.2. Outdoor Schemes

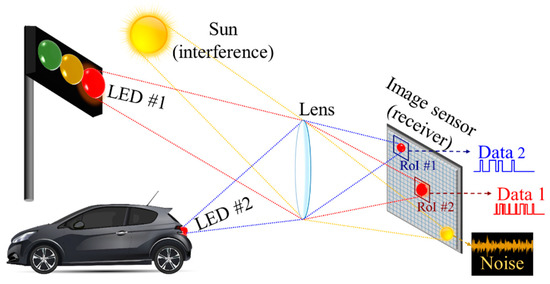

The main outdoor OCC applications are in smart cities for V2V, V2I, and underwater and seaside communications [5,14,25,115]. In V2V and V2I communications, spatial separation is the key advantage of an IS-based RX, as shown in Figure 32 [35,60,64,72,87,116]. Since either the TX or the RX is moving in outdoor environments, the most popular modulation schemes for OCC are high-rate NSM, RoI-S, and hybrid camera-PD utilizing OCI sensors [35]. RoI image post-processing is predominantly utilized in outdoor OCC schemes to separate the TXs from severe light interference and noise, such as sunlight, thus improving the signal-to-noise-and-interference ratio.

Figure 32.

Spatial separation of multiple sources using an image sensor.
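Spatial separation can be illustrated with a small connected-component search that returns one bounding box (a candidate RoI) per bright source in a frame. This is a deliberately simple sketch under assumed conditions: a fixed brightness threshold of 128, 4-connected pixels, and a toy image. It does not reject sunlight or track moving TXs, which practical outdoor systems require.

```python
from collections import deque


def find_light_sources(image, threshold=128):
    """Return a (row_min, col_min, row_max, col_max) box for each bright blob."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < threshold:
                continue
            # Breadth-first flood fill over 4-connected bright pixels.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


# Assumed 5x8 grayscale frame containing two separate LED sources.
frame = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 250, 250, 0, 0, 0, 240, 0],
    [0, 250, 250, 0, 0, 0, 240, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

rois = find_light_sources(frame)
```

Each returned box can then be decoded independently, which is what allows a single image sensor to receive from several TXs at once.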

The flickering of the LED-based TXs in both V2V and V2I communications is regulated to be imperceptible to human eyes. Therefore, the camera’s FR must be higher than 100 fps [60]. However, since high-speed and high-cost cameras are not used in vehicles, an OCI sensor is an important breakthrough in OCC for vehicular applications as mentioned previously in Section 3.3. OCI offers the dual benefits of spatial separation of the camera and the higher bandwidth of the PD-based RX, thus offering a practical data rate of 10–55 Mbps in vehicular applications [35,60]. On the other hand, relay-based communications are also investigated for outdoor OCC V2V applications. The relay is especially important between vehicles because it works as a complementary positioning system since the global positioning system (GPS) is inaccessible when vehicles are passing through tunnels [60,117]. It is worth mentioning that the OCC-based V2V and V2I systems require a robust tracking algorithm to identify RoI for the moving TX, while minimizing the amount of light interference in outdoor environments [118,119].

It is worth noting that under abrupt movements in outdoor vehicular applications, LED blurriness occurs due to lens misfocus, which affects the OCC transmission quality. A robust vehicular OCC system was proposed to address this issue [87]. It was evaluated in simulations, employing two novel algorithms, i.e., average greyscale ratio (AGR) and gradient radial inwardness (GRI), to decode the OCC transmission even when the captured LED transmitters appear blurry. The simulation results showed robust decoding of the OCC transmission at a BER lower than 10⁻³, up to a distance of 60 m [87].

Underwater and seaside communications are also envisioned within the OCC standardization activity; however, further studies need to be conducted to investigate their full potential [25,115,120]. In [115], an initial experimental investigation of an OCC link with a camera-based RX compared with the PD-based VLC was reported. In underwater environments with high degrees of absorption and scattering, the visibility of LEDs is not significantly affected. Thus, the camera can capture the transmitting light sources over longer distances compared with the PD-based RXs. A transmission distance of 1 m was achieved at a data rate of ~100 bps, with the data rate considerably limited by the low camera frame rate of 30 fps [115].

6. Potential and Challenges

6.1. Potential Developments of Optical Camera Communication

Based on what has been reported in the literature on OCC, it is clear that the use of camera-based RXs makes communication much more versatile as a complementary wireless technology to existing RF-based wireless solutions. This section of the paper elaborates on several potential developments that would bring about further advancements of OCC within the OWC community.

6.1.1. Camera Hardware Advancements

Camera frame rates are projected to increase in the near future, owing to recent advancements in the IS nanotechnology [8,11,14,121]. Additionally, the camera resolution is progressively increasing, in line with market demand and the development of ISs in the smart devices industry. Moreover, the image quality of the miniaturized camera has become a top priority in most recent smartphone developments. These advancements will further drive the next-generation OCC systems with a significantly faster and highly accurate camera-based RX.

Currently, research focus is placed on increasing the camera frame rate by allocating a higher storage speed within the camera sensors, employing a faster image processor and miniaturizing the size of a high-speed IS for use in mobile devices [13,61,122,123,124]. In the next decade, cameras with frame rates of thousands of fps in mobile devices are expected to be available in the market. The existing OCI hybrid sensor is also an excellent potential candidate for the RX in OCC-based V2V and V2I communications, potentially offering Gbps-level data rates over transmission distances of up to hundreds of meters in the near future [35,60].

On the other hand, the NSM-based OCC has an unresolved drawback of perceptible flickering of the TX, which can be mitigated by using higher speed cameras. The perceptible flickering is caused by a switching/refresh rate that is less than 100 Hz, due to the limited camera frame rate of 200 fps or lower [17,49,125]. The perceptible flicker below the flicker-fusion threshold of human eyes (i.e., 100 Hz) introduces fatigue and is therefore considered unhealthy [62].
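The link between frame rate and perceptible flicker can be made concrete with a short calculation. The sketch assumes that the camera needs two frames per on/off switching cycle, which is one reading of the 200 fps and 100 Hz pairing quoted above; the function names are illustrative.

```python
FLICKER_FUSION_HZ = 100.0  # flicker-fusion threshold quoted in the text


def max_switching_rate_hz(frame_rate_fps, frames_per_cycle=2):
    """Highest TX switching rate the camera can follow (assumed two frames per cycle)."""
    return frame_rate_fps / frames_per_cycle


def is_flicker_perceptible(frame_rate_fps):
    """True if the fastest followable switching rate is below the fusion threshold."""
    return max_switching_rate_hz(frame_rate_fps) < FLICKER_FUSION_HZ


for fps in (30, 60, 120, 240):
    print(fps, "fps ->", max_switching_rate_hz(fps), "Hz,",
          "perceptible" if is_flicker_perceptible(fps) else "imperceptible")
```

Under this assumption, a typical 30 or 60 fps smartphone camera forces the TX to switch at 15 or 30 Hz, well inside the perceptible range, whereas a camera faster than 200 fps lifts the switching rate above the threshold.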

The future research directions of OCC are to increase the data transmission rate and the transmission distance. This can be made possible by a higher frame rate camera (faster image processor) beyond 200 fps and a higher image resolution (larger image sensor) beyond 3840 × 2160 pixels in mobile devices, especially smartphones.

6.1.2. Neural Network Assistance

Cameras developed for smartphones and other mobile devices can also be used in augmented reality (AR) and mixed reality (MR). In addition, intelligent image processing using artificial intelligence (AI) (i.e., NN) has become a prominent method in smartphones, which will be supported by online services such as those from Google [126,127,128]. The NN performance has also been accelerated by dedicated hardware within smartphones in the form of a neural processing unit (NPU), as first implemented in the Apple A11 Bionic chip and then in the Huawei HiSilicon Kirin 970 [121,129,130]. The use of NPUs speeds up NN-based processing, including AI-based image processing, in smartphones.

The NN implementation has been shown to be beneficial in image processing and in data communications; furthermore, OCC relies on extensive image processing techniques [37,95,97,117]. NN and its complex form of deep/machine learning are mostly employed for pattern recognition [59]. It is worth noting that there are always synchronized patterns between the TX and the RX in a communications scheme, which can be identified by NN in a straightforward manner [37,117,131,132]. The future developments of OCC will strongly benefit from NN advancements in order to offer increased data rates, robustness in reception and improved accuracy of RoI definition. In addition, an invisible watermarking through OCC as introduced in [12] will also be improved by using NN at the RX for higher data rates and longer transmission spans.

6.1.3. Multiple Cameras Development

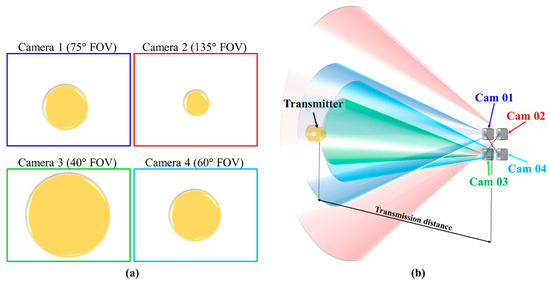

The availability of multiple cameras in smartphones over the last five years has also provided additional opportunities and benefits for OCC, including several FOVs, different light sensitivities and different resolutions for the RX. These features will result in a wider coverage area, an increased range of sensitivity for various light spectra, higher image detail, increased robustness against noise and higher data rates in OCC systems.

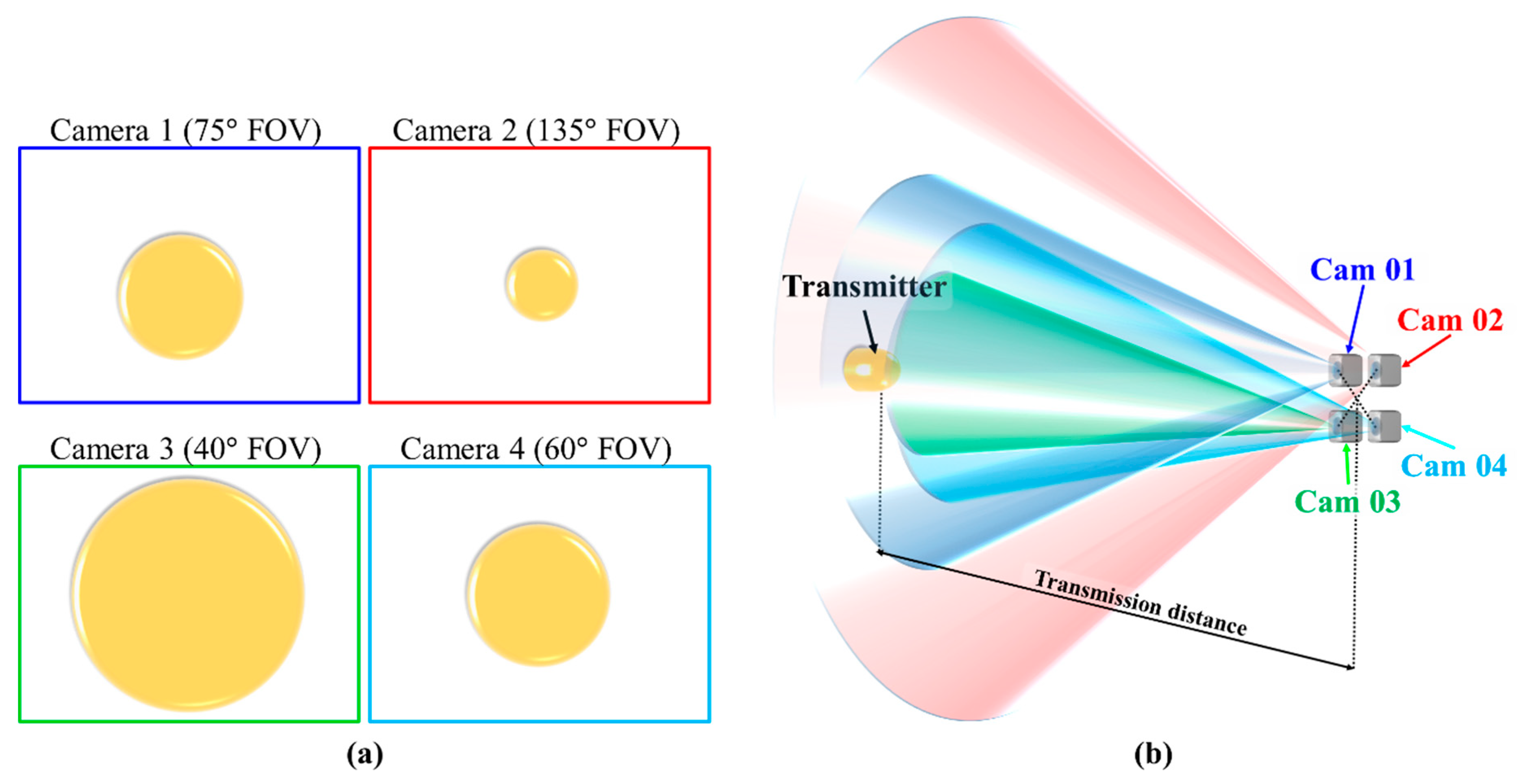

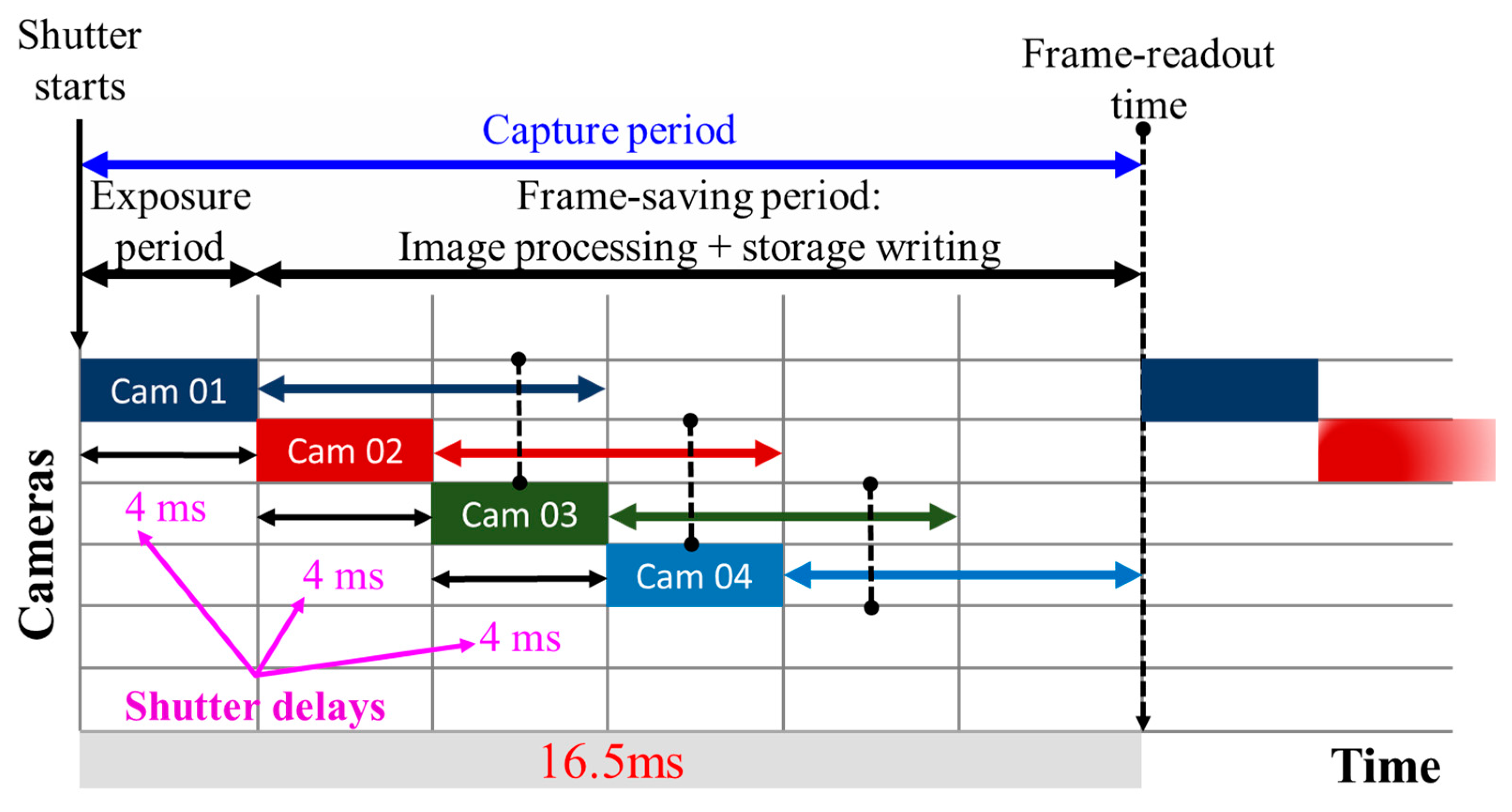

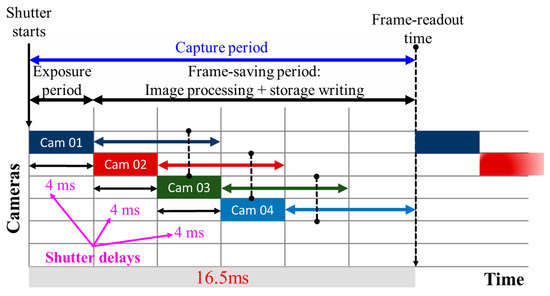

A salient advantage of multiple-camera-based OCC is the image redundancy, i.e., multiple copies of the same captured image of the TX, which can be exploited to increase the data rate by timing the shutter of each camera. An exemplary concept is illustrated in Figure 33, where four cameras in a device with different FOVs are employed to capture the same TX. Let us assume that each camera has a frame rate of 60 fps, i.e., a capture period of approximately 16.7 ms per frame, as illustrated in Figure 34. Provided that the cameras have enough storage for capturing four frames in less than 16.7 ms, the frame rate of the combined multiple cameras can be increased up to 240 fps by applying a shutter delay of approximately 4 ms between the cameras. Note that the image processor should also be capable of processing at 240 fps.

Figure 33.

Multiple camera OCC enhancement: (a) perspective of each camera; (b) multiple camera concept.

Figure 34.

Timed shutter for multiple-camera frame rates.
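The timed-shutter arithmetic can be checked with the sketch below, which staggers the shutters of identical cameras evenly across one frame period and lists the interleaved capture instants. The function name and the returned dictionary layout are assumptions made for illustration.

```python
def timed_shutter_plan(num_cameras, frame_rate_fps, frames_per_camera=2):
    """Stagger identical cameras evenly within one frame period."""
    period_ms = 1000.0 / frame_rate_fps   # capture period of one camera
    delay_ms = period_ms / num_cameras    # shutter delay between cameras
    # (capture time in ms, camera index), merged across all cameras in time order
    captures = sorted(
        (cam * delay_ms + k * period_ms, cam)
        for cam in range(num_cameras)
        for k in range(frames_per_camera)
    )
    return {
        "period_ms": period_ms,
        "delay_ms": delay_ms,
        "combined_fps": num_cameras * frame_rate_fps,
        "captures": captures,
    }


plan = timed_shutter_plan(num_cameras=4, frame_rate_fps=60)
for time_ms, cam in plan["captures"]:
    print(f"t = {time_ms:6.2f} ms  camera {cam}")
```

For four 60 fps cameras, the period is 1/60 s (about 16.67 ms), the shutter delay is about 4.17 ms, and the merged stream has an effective rate of 240 fps, provided the cameras can be triggered with that precision.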

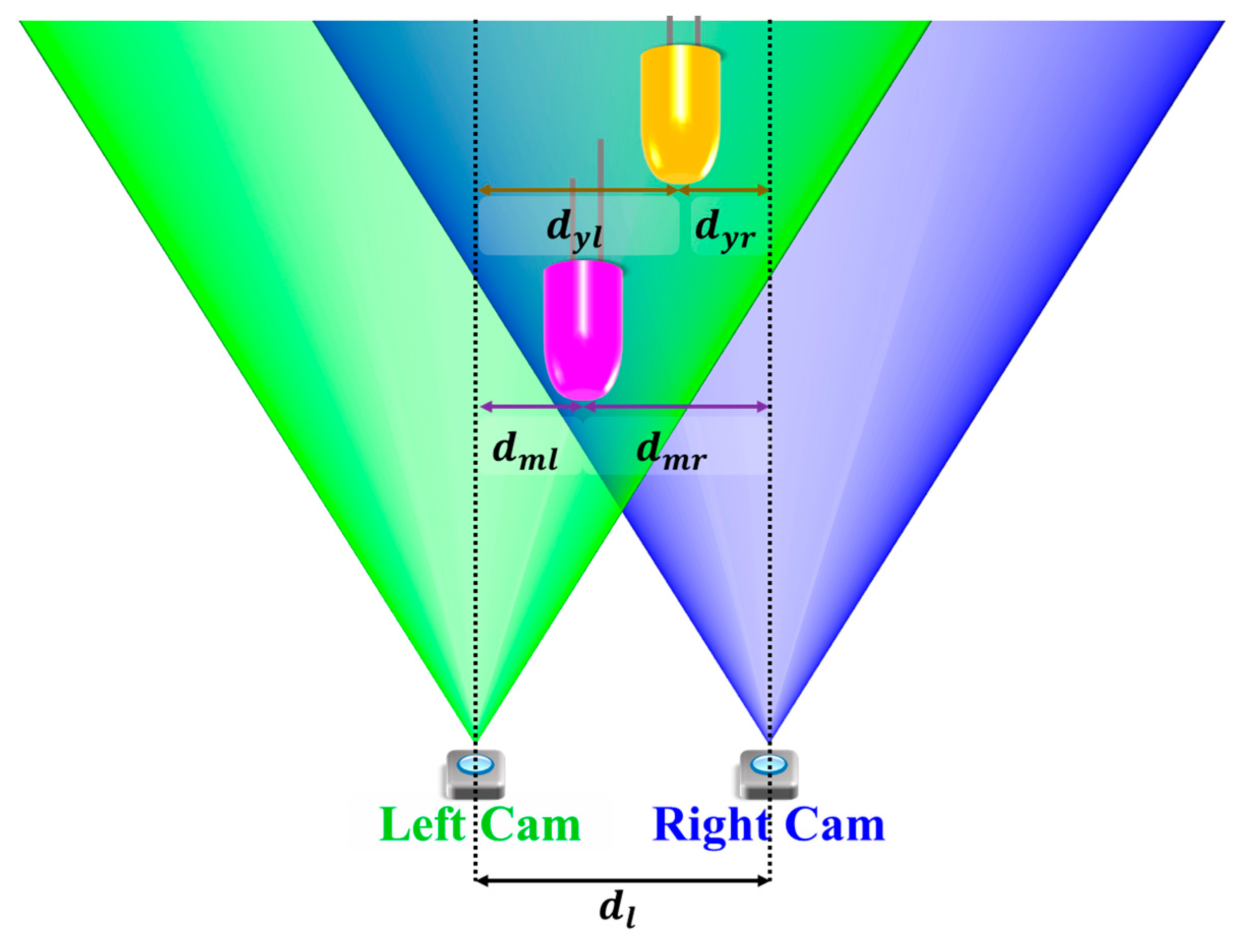

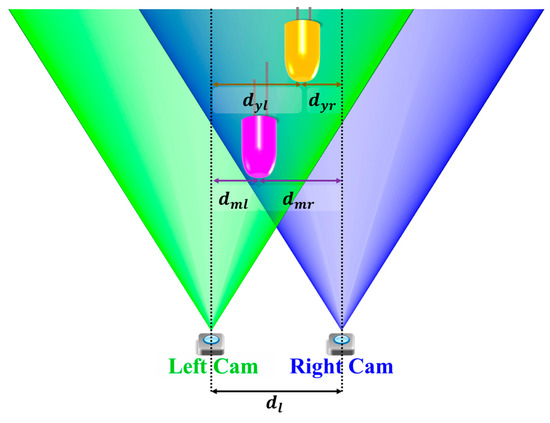

Multiple cameras also provide a potential benefit in OCC research by elevating the 2D information to 3D. This is achieved by introducing distance/depth information through the stereoscopic vision of two or more cameras. Figure 35 shows that multiple copies of the same object are captured by the multiple cameras, thus providing different perspectives of the same captured object. Parallax is formed due to the difference between dml and dmr for the magenta LED relative to the lateral displacement between the two cameras. Similarly, parallax can also be formed from dyl and dyr. An estimate of the distance between the camera and the LED can be produced by calibrating the parallax against physical distance measurements.

Figure 35.

Stereoscopic vision for distance measurement.
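Calibrating parallax against measured distances can be sketched as follows. The example assumes the inverse-proportional model distance = k / parallax, which holds for an ideal rectified pinhole stereo pair (where k equals the focal length in pixels times the camera baseline); it is an illustrative model, not a procedure stated in the text. The function names and calibration samples are hypothetical.

```python
def parallax(d_left, d_right):
    """Parallax in pixels between the LED positions seen by the left and right cameras."""
    return abs(d_left - d_right)


def calibrate(samples):
    """Least-squares fit of k in distance = k / parallax.

    samples: iterable of (parallax_px, measured_distance_cm) pairs.
    """
    inverse = [(1.0 / p, z) for p, z in samples]
    return sum(x * z for x, z in inverse) / sum(x * x for x, _ in inverse)


def estimate_distance(k, parallax_px):
    """Distance to the LED predicted from its parallax."""
    if parallax_px <= 0:
        raise ValueError("parallax must be positive")
    return k / parallax_px


# Hypothetical calibration measurements: (parallax in px, distance in cm).
calibration = [(40.0, 50.0), (20.0, 100.0), (10.0, 200.0)]
k = calibrate(calibration)

# An LED seen at 130 px in the left image and 105 px in the right image.
distance_cm = estimate_distance(k, parallax(130.0, 105.0))
```

Because distance varies with the reciprocal of parallax, the resolution of the estimate degrades quickly for far-away LEDs, where a one-pixel change in parallax corresponds to a large change in distance.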

Future OCC can benefit from the availability of multiple cameras and stereoscopic vision to calculate distances between the multiple TXs and multiple RXs (cameras). This offers considerable potential for overcoming several limitations in OCC, such as data rate and mobility.

6.1.4. Advanced Modulation and Communication Medium

Complex modulation schemes (including multi-level and multi-carrier) as well as WDM technologies can be used to increase the data throughput in OCC systems [90,133]. In addition, cameras with increased resolution and the development of more powerful image processing algorithms will enhance the efficiency and robustness of OCC [17,37].