Applying Touchscreen Based Navigation Techniques to Mobile Virtual Reality with Open Clip-On Lenses

Abstract

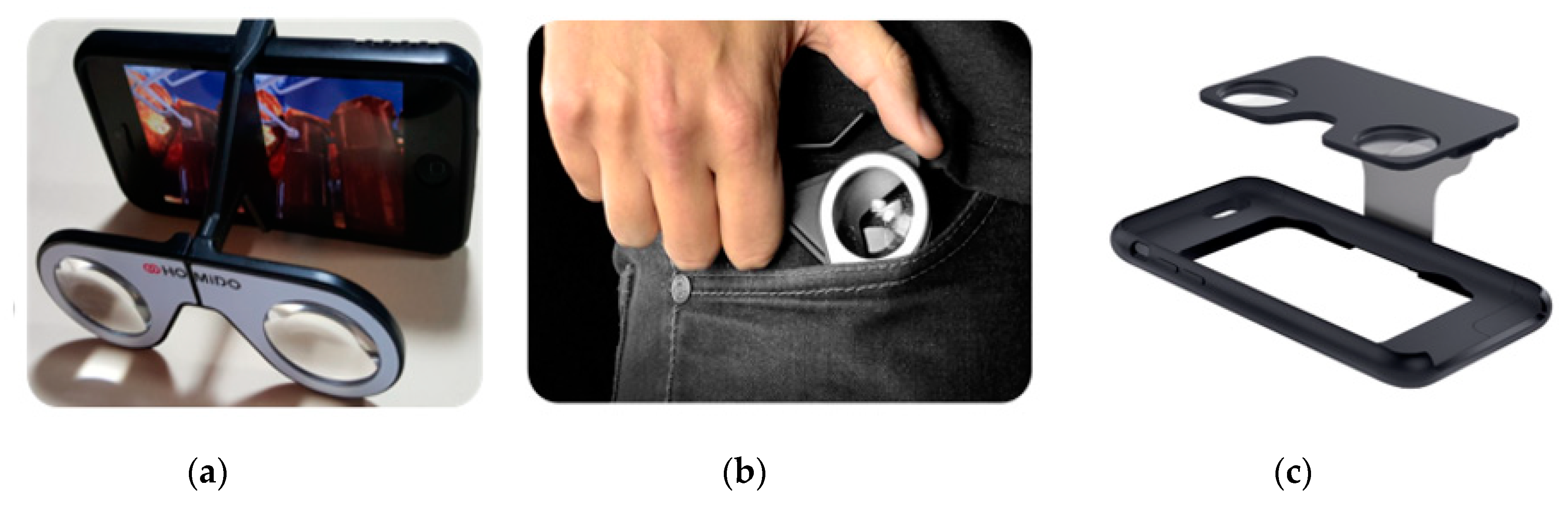

1. Introduction

2. Related Work

3. Touch Based 3D Navigation Methods for EasyVR

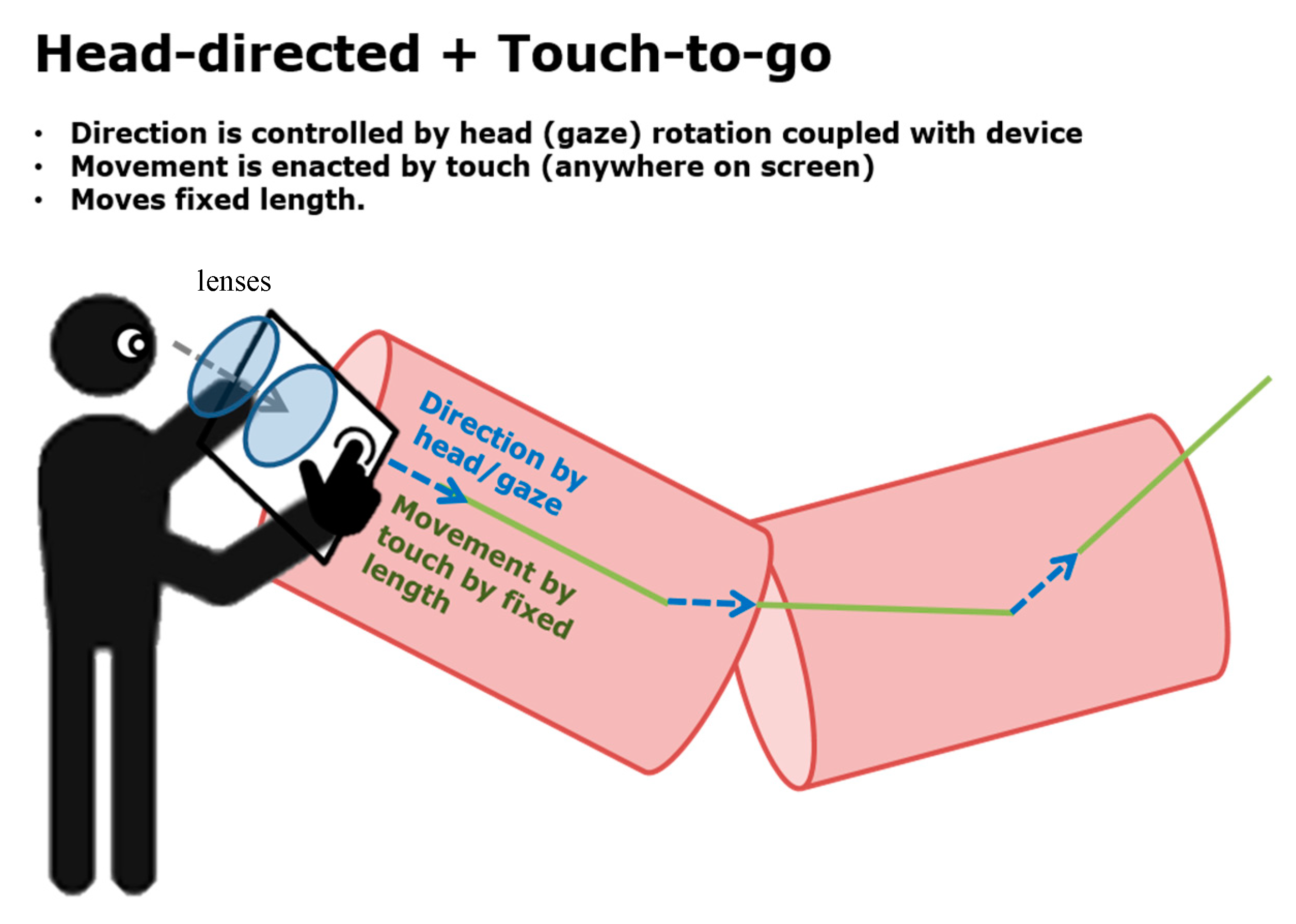

3.1. Head-Directed + Touch-to-Go (HD + TG)

3.2. Head-Directed + Touch-to-Teleport (HD + TT)

3.3. Head-Directed + Joystick-to-Go (HD + JG) and Joystick-Directed + Joystick-to-Go (JD + JG)

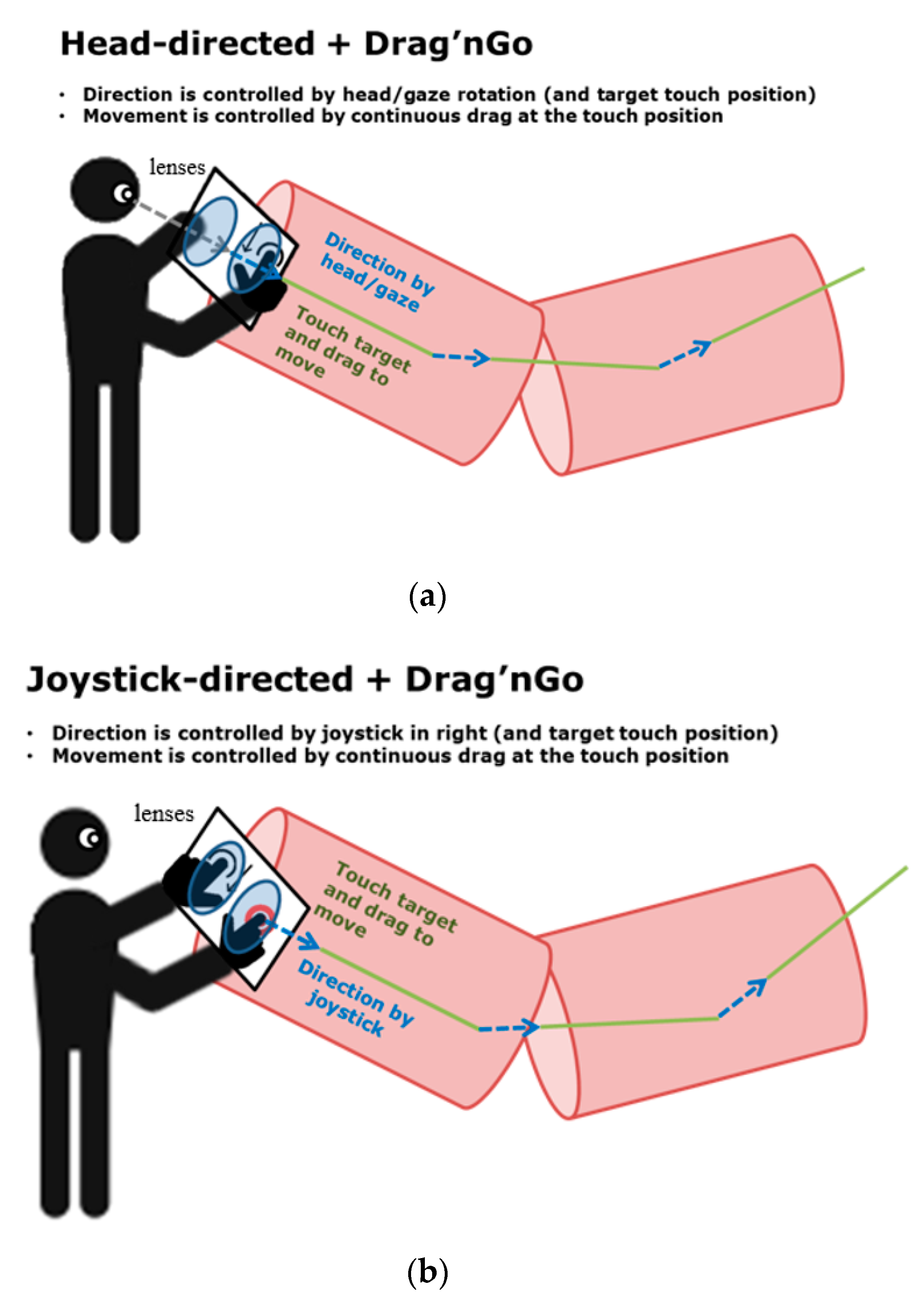

3.4. Head-Directed + Drag’n Go (HD + DG) and Joystick-Directed + Drag’n Go (JD + DG)

4. Experiment

4.1. Experiment Design

4.2. Experimental Task

4.3. Experimental Procedure

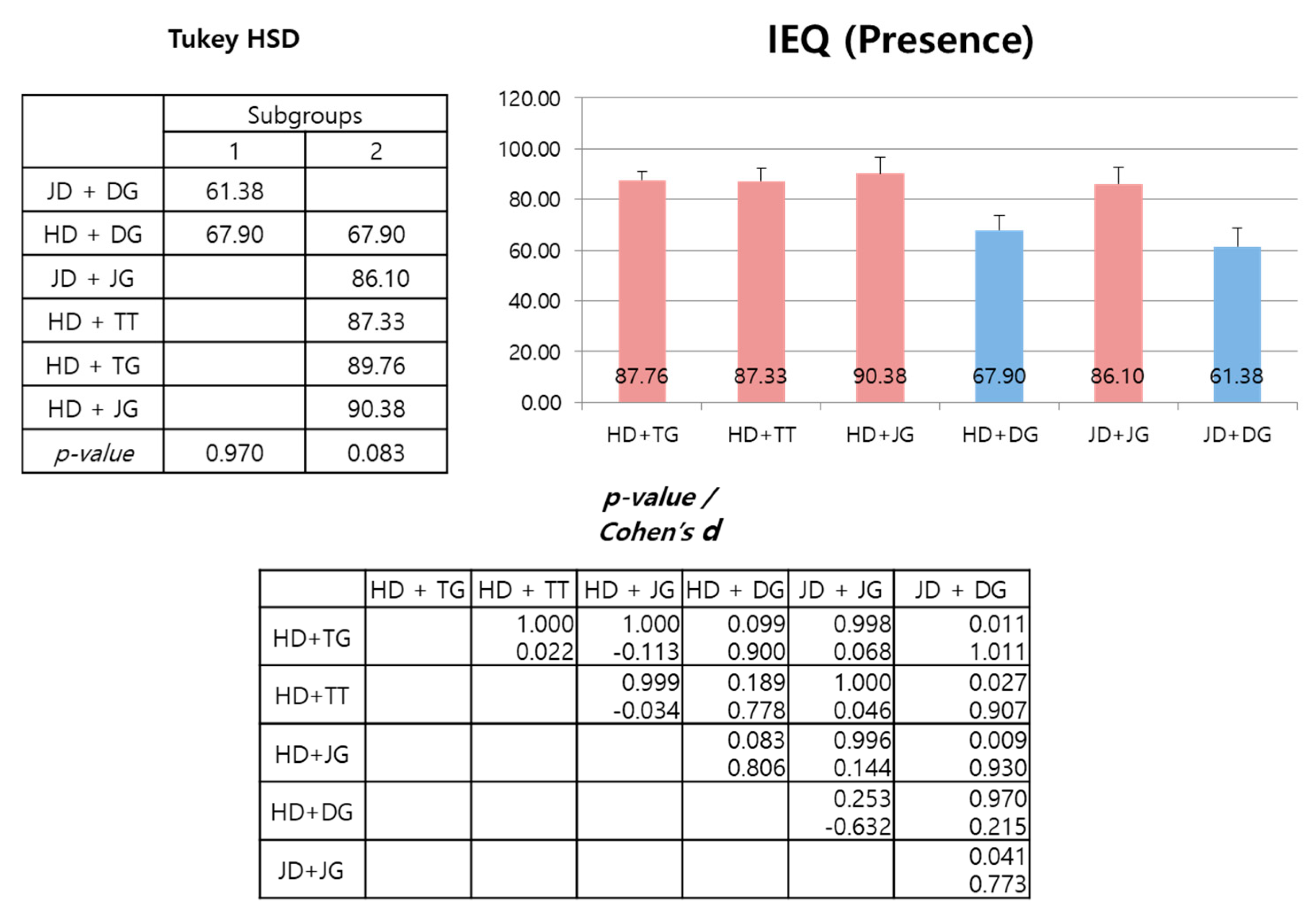

5. Results

5.1. Quantitative Results

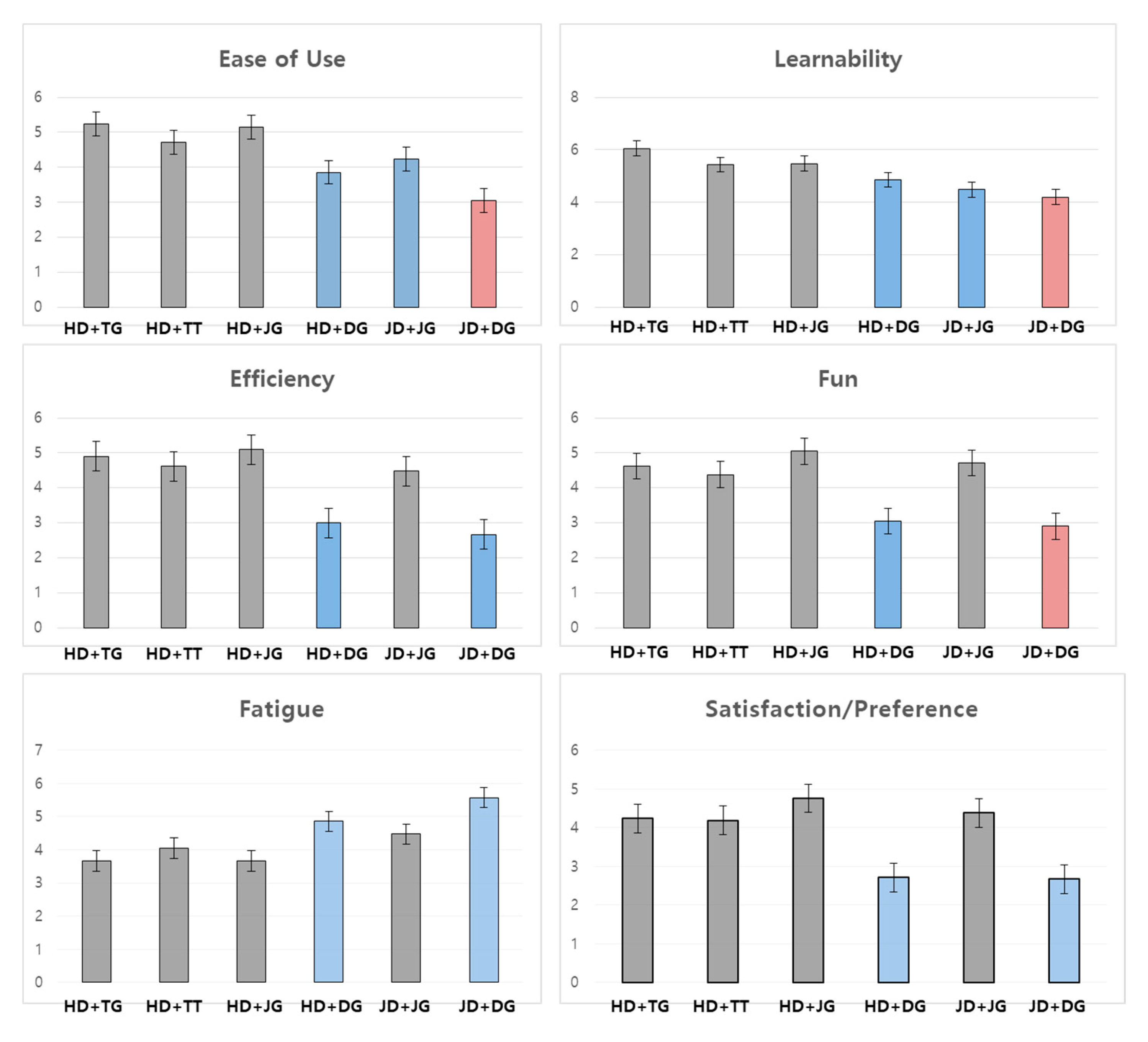

5.2. Qualitative Results

5.3. Experienced vs. Non-Experienced

6. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

- The revised IEQ (Immersive Experience Questionnaire [25]): each question was answered on a 5-point Likert scale (1: less immersive ~ 5: more immersive), and the answers were summed to produce a total score (total possible score = 155 when the immersion is at its maximum).

- To what extent did the VR task hold your attention?

- To what extent did you feel you were focused on the VR task?

- How much effort did you put into carrying out the VR task?

- Did you feel that you were trying your best?

- To what extent did you lose track of time?

- To what extent did you feel consciously aware of being in the real world whilst playing?

- To what extent did you forget about your everyday concerns?

- To what extent were you aware of yourself in your surroundings?

- To what extent did you notice events taking place around you?

- Did you feel the urge at any point to stop and see what was happening around you?

- To what extent did you feel that you were interacting with the VR environment?

- To what extent did you feel as though you were separated from your real world environment?

- To what extent did you feel that the VR content was something you were experiencing, rather than something you were just doing?

- To what extent was your sense of being in the VR environment stronger than your sense of being in the real world?

- At any point did you find yourself become so involved that you were unaware you were even using controls?

- To what extent did you feel as though you were moving through the VR environment according to your own will?

- To what extent did you find the VR task challenging?

- Were there any times during the game in which you just wanted to give up?

- To what extent did you feel motivated while carrying out the VR task?

- To what extent did you find the VR task easy?

- To what extent did you feel like you were making progress towards the end of the VR task?

- How well do you think you performed in the VR task?

- To what extent did you feel emotionally attached to the VR task?

- To what extent were you interested in seeing how the VR task would progress?

- How much did you want to “finish” the VR task?

- Were you in suspense about whether or not you would finish the VR task?

- At any point did you find yourself become so involved that you wanted to speak to the VR environment directly?

- To what extent did you enjoy the graphics and the imagery?

- How much would you say you enjoyed the VR task?

- When interrupted, were you disappointed that the VR task was over?

- Would you like to carry out the VR task again?

- The SSQ (Simulator Sickness Questionnaire [26,27]): each question was answered on a 4-level scale (1: less sick ~ 4: more sick). The questions were categorized into three groups: nausea (N), disorientation (D) and oculomotor (O) aspects. The summed score of each category was weighted by 9.54 (N), 13.92 (D) and 7.58 (O), then multiplied by 3.74 as prescribed in [27] (total possible score = 812 when the sickness is at its maximum).

- General Discomfort (N)

- Fatigue (O)

- Headache (O)

- Eye Strain (O)

- Difficulty Focusing (O, D)

- Salivation Increased (N)

- Sweating (N)

- Nausea (N, D)

- Difficulty Concentrating (N, O)

- Fullness of the Head (O, D)

- Blurred Vision (O, D)

- Dizziness with Eyes Open (D)

- Dizziness with Eyes Closed (D)

- Vertigo (D)

- Stomach Awareness (N)

- Burping/Vomiting (N)

- The Usability Questionnaire: each question was answered on a 7-level Likert scale (1: less usable ~ 5: more usable). The questions were grouped into six categories: ease of use, learnability, efficiency, fun, fatigue and satisfaction/preference.

- Ease of use: How easy was the given interface to use?

- Learnability: How easily were you able to learn the given interface?

- Perceived Efficiency: Did you perceive the interface to be fast and accurate in achieving the objective of the task?

- Fun/Enjoyment: Did you enjoy using the given interface?

- Fatigue: Did you in any way feel tired or fatigued in using the given interface?

- Satisfaction/Preference: Simply rate the given interface for general satisfaction.
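The SSQ scoring described in this appendix can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' scoring script: the item-to-category mapping and the weights are taken from the list above, while the function names (`category_sums`, `ssq_scores`) are hypothetical, and the total follows one literal reading of the description (weighted category sums added together, then multiplied by 3.74). The appendix does not say whether raw responses are rescaled before weighting, so the sketch uses them unscaled.

```python
# Item-to-category mapping as listed in the appendix
# (N = nausea, O = oculomotor, D = disorientation).
SSQ_ITEMS = {
    "General Discomfort": ("N",),
    "Fatigue": ("O",),
    "Headache": ("O",),
    "Eye Strain": ("O",),
    "Difficulty Focusing": ("O", "D"),
    "Salivation Increased": ("N",),
    "Sweating": ("N",),
    "Nausea": ("N", "D"),
    "Difficulty Concentrating": ("N", "O"),
    "Fullness of the Head": ("O", "D"),
    "Blurred Vision": ("O", "D"),
    "Dizziness with Eyes Open": ("D",),
    "Dizziness with Eyes Closed": ("D",),
    "Vertigo": ("D",),
    "Stomach Awareness": ("N",),
    "Burping/Vomiting": ("N",),
}

# Category weights and overall factor as stated in the appendix.
WEIGHTS = {"N": 9.54, "D": 13.92, "O": 7.58}
TOTAL_FACTOR = 3.74


def category_sums(responses):
    """Sum the 1..4 responses per category; an item may count in two categories."""
    sums = {"N": 0, "O": 0, "D": 0}
    for item, categories in SSQ_ITEMS.items():
        score = responses[item]
        if not 1 <= score <= 4:
            raise ValueError(f"{item}: response {score} is outside the 1..4 scale")
        for category in categories:
            sums[category] += score
    return sums


def ssq_scores(responses):
    """Return (raw category sums, weighted category scores, total score).

    Assumption: the total is the sum of the weighted category scores
    multiplied by TOTAL_FACTOR, which is one reading of the appendix text.
    """
    raw = category_sums(responses)
    weighted = {category: raw[category] * WEIGHTS[category] for category in raw}
    total = sum(weighted.values()) * TOTAL_FACTOR
    return raw, weighted, total


if __name__ == "__main__":
    # Hypothetical participant who answered 2 on every item.
    example = {item: 2 for item in SSQ_ITEMS}
    raw, weighted, total = ssq_scores(example)
    print(raw, weighted, round(total, 2))
```

Keeping the mapping in a single dictionary makes the double-counted items (for example, Nausea under both N and D) explicit and easy to check against the list.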

References

- Google Cardboard. Available online: https://vr.google.com/cardboard/ (accessed on 4 September 2020).

- Samsung. Samsung Gear VR. Available online: http://www.samsung.com/global/galaxy/gear-vr/ (accessed on 4 September 2020).

- HTC. HTC Vive. Available online: http://www.vive.com/kr/ (accessed on 4 September 2020).

- Kim, Y.R.; Kim, G.J. Presence and Immersion of “Easy” Mobile VR with Open Flip-on Lenses. In Proceedings of the 23rd ACM Conference on Virtual Reality Software and Technology, Gothenburg, Sweden, 8–10 November 2017; pp. 8–10.

- Homido. Homido Mini VR Glasses. Available online: http://homido.com/en/mini (accessed on 4 September 2020).

- Kickstarter. Figment VR: Virtual Reality Phonecase. Available online: https://www.kickstarter.com/projects/quantumbakery/figment-vr-virtual-reality-in-your-pocket (accessed on 4 September 2020).

- Bowman, D.; Kruijff, E.; LaViola, J.J., Jr.; Poupyrev, I.P. 3D User Interfaces: Theory and Practice; Addison-Wesley: Redwood City, CA, USA, 2004.

- Kim, Y.R.; Kim, G.J. “Blurry Touch Finger”: Touch-based Interaction for Mobile Virtual Reality. Virtual Res. under Review.

- Bowman, D.; Koller, D.; Hodges, L. Travel in immersive virtual environments: An evaluation of viewpoint motion control techniques. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Albuquerque, NM, USA, 1–5 March 1997; pp. 45–52.

- Bozgeyikli, E.; Raij, A.; Katkoori, S.; Dubey, R. Point & teleport locomotion technique for virtual reality. In Proceedings of the ACM Annual Symposium on Computer-Human Interaction in Play, Austin, TX, USA, 16–19 October 2016; pp. 205–216.

- Burigat, S.; Chittaro, L. Navigation in 3D virtual environments: Effects of user experience and location-pointing navigation aids. Int. J. Hum. Comput. Stud. 2007, 65, 945–958.

- Fuhrmann, A.; Schmalstieg, D.; Gervautz, M. Strolling through cyberspace with your hands in your pockets: Head directed navigation in virtual environments. In Virtual Environments; Springer: Berlin, Germany, 1998; pp. 216–225.

- Moerman, C.; Marchal, D.; Grisoni, L. Drag’n Go: Simple and fast navigation in virtual environment. In Proceedings of the IEEE Symposium on 3D User Interfaces, Costa Mesa, CA, USA, 4–5 March 2012; pp. 15–18.

- Nybakke, A.; Ramakrishnan, R.; Interrante, V. From virtual to actual mobility: Assessing the benefits of active locomotion through an immersive virtual environment using a motorized wheelchair. In Proceedings of the 2012 IEEE Symposium on 3D User Interfaces, Costa Mesa, CA, USA, 4–5 March 2012; pp. 27–30.

- Slater, M.; Steed, A.; Usoh, M. The virtual treadmill: A naturalistic metaphor for navigation in immersive virtual environments. In Virtual Environments; Springer: Berlin, Germany, 1995; pp. 135–148.

- Usoh, M.; Arthur, K.; Whitton, M.C.; Bastos, R.; Steed, A.; Slater, M.; Brooks, F.P., Jr. Walking > walking-in-place > flying, in virtual environments. In Proceedings of the ACM Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 8–13 August 1999; pp. 359–364.

- HTC. HTC Vive Controllers. Available online: https://www.vive.com/us/support/vive/category_howto/about-thecontrollers.html (accessed on 4 September 2020).

- Ware, C.; Osborne, S. Exploration and virtual camera control in virtual three dimensional environments. ACM SIGGRAPH Comput. Graph. 1990, 24, 175–183.

- Lee, W.S.; Kim, J.H.; Cho, J.H. A driving simulator as a virtual reality tool. In Proceedings of the IEEE International Conference on Robotics and Automation, Leuven, Belgium, 20 May 1998; pp. 71–76.

- Bolton, J.; Lambert, M.; Lirette, D.; Unsworth, B. PaperDude: A virtual reality cycling exergame. In Proceedings of the ACM CHI Conference Extended Abstracts on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 475–478.

- Rosenberg, R.S.; Baughman, S.L.; Bailenson, J.N. Virtual superheroes: Using superpowers in virtual reality to encourage prosocial behavior. PLoS ONE 2013, 8, e55003.

- Blizzard. “Overwatch”. Available online: https://playoverwatch.com/ (accessed on 4 September 2020).

- Møllenbach, E.; Hansen, J.P.; Lillholm, M. Eye movements in gaze interaction. J. Eye Mov. Res. 2013, 6, 1–15.

- Samsung. Samsung Galaxy S7. Available online: http://www.samsung.com/global/galaxy/galaxy-s7/ (accessed on 4 September 2020).

- Jennett, C.; Cox, A.L.; Cairns, P.; Dhoparee, S.; Epps, A.; Tijs, T.; Walton, A. Measuring and defining the experience of immersion in games. Int. J. Hum. Comput. Stud. 2008, 66, 641–661.

- Kennedy, R.; Lane, N.; Berbaum, I.K.; Lilienthal, M. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Bouchard, S.; Robillard, G.; Renaud, P. Revising the factor structure of the Simulator Sickness Questionnaire. Annu. Rev. Cyberther. Telemed. 2007, 5, 128–137.

- NASA TLX. “NASA Task Load Index”. Available online: https://en.wikipedia.org/wiki/NASA-TLX (accessed on 4 September 2020).

- Lewis, J. IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. Int. J. Hum. Comput. Stud. 1995, 7, 57–78.

| Interfaces | Direction Control | Movement Control |
|---|---|---|
| Head-directed + Touch-to-go (HD + TG) | Head rotation | Discrete touch |
| Head-directed + Touch-to-teleport (HD + TT) | Head rotation (+Touch) | Discrete touch |
| Head-directed + Joystick-to-go (HD + JG) | Head rotation (+Drag) | Continuous drag |
| Joystick-directed + Joystick-to-go (JD + JG) | Continuous drag | Continuous drag |
| Head-directed + Drag’n Go (HD + DG) | Head rotation (+Touch) | Continuous drag |
| Joystick-directed + Drag’n Go (JD + DG) | Continuous drag | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, Y.R.; Choi, H.; Chang, M.; Kim, G.J. Applying Touchscreen Based Navigation Techniques to Mobile Virtual Reality with Open Clip-On Lenses. Electronics 2020, 9, 1448. https://doi.org/10.3390/electronics9091448