1. Introduction

Mobile eye-tracking devices, i.e., eye-tracking glasses usually comprise eye camera(s) for detecting pupils and a world camera for capturing the image of the scene. Gaze is calculated from the pupil images and projected to the image of the scene, which can reveal the information of a human being’s visual intention. Gazes can be identified as different eye movements. Fixation and saccade are two of the most common types of eye movement event. Fixation can be viewed as gaze being stably kept in a small region and saccade can be viewed as rapid eye movement [

1]. They can be computationally identified from eye tracking signals by different approaches, such as Identification by Dispersion Threshold (I-DT) [

2], Identification by Velocity Threshold (I-VT) [

2], Bayesian-method-based algorithm [

3] and machine-learning-based algorithm [

4].

In gaze-based HRI, fixation is often used as an indication of the visual intention of a human. In [

5], when a fixation was detected, an image patch is cropped around the fixation point and fed to a neural network to detect a drone. In [

6], fixation is used to determine if a human is looking at Areas of Interests (AOIs) in a mixed-initiative HRI study. In [

7,

8,

9], fixations were used for selecting an object to grasp. They were also used in the selection of a grasping plane of an object in [

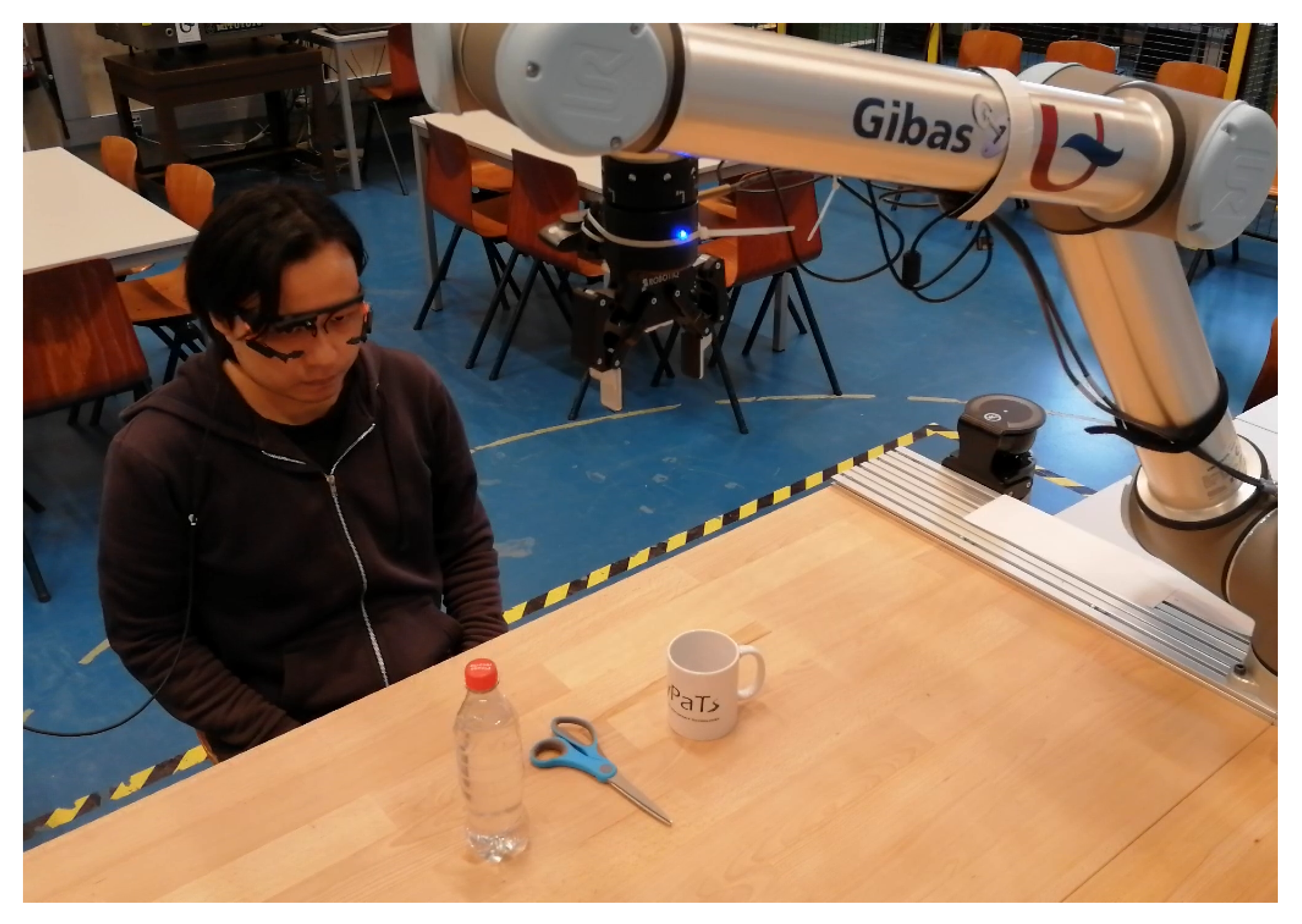

7]. However, there are limitations to using fixation to select an object for further actions. Consider a scenario such as that displayed in

Figure 1, where a human is wearing a mobile eye-tracking device and can select detected objects on the table by gaze and then let a robot pick the object up for him or her. The robot will receive the information of the selected object and plan the grasping task; it does not share the human gaze points.

One approach to determine if the human visual intention is focused on an object is to use fixation. When a fixation event is detected, the gaze points in the event are in a small region in the world image. If the fixation center is on the object, the visual intention is considered to be on the object. Using fixation to select an object requires that the human looks at a very small region of the object and tries to not move the eyes. When observing an object, the human gaze is not fixating on a single point (or a small region). The authors in [

10] demonstrated that human gazes, when observing an object, spread over different regions of the object.

Figure 2a displays the gazes within a duration spent looking at scissors. The human gazes over time are plotted in yellow dots. This implies that saccade may occur during the process of observation. When using the fixation-based approach, the visual intention may cause information loss in the sense that, when a saccade has happened, the human is still looking at the object.

Intuitively, considering all gaze points can overcome the problem that the saccadic gazes on the object are lost. HitScan [

11,

12] uses all gaze points when determining if the visual intention is on the object. However, there exists one more kind of noise which we refer to as gaze drift error. Both fixation based method and HitScan have this problem. On some occasions, the center of a gaze point may fall out of the bounding box while the human is still looking at the object. This kind of noise has various sources. First is the fluctuation of the size of the bounding box caused by the object detection algorithm.

Figure 2b gives one example. The figure shows two consecutive frames in one sequence. The gazes in two frames are located at the same position of the object, but the bounding box of the object changes. Second, a poor calibration would also result in this error. Moreover, the head-mounted mobile eye tracking device may accidentally be moved after calibration and the detected gaze will be shifted. Both fixation based method and HitScan based on checking if the gaze/fixation center is inside the object bounding box. Both will suffer from the gaze drift error. In addition, fixation based method will have information loss due to the saccades inside the bounding box.

When using gaze to select an object to interact with a robot, one issue is that the robot does not know if the human has decided on an object for interaction, even if the human visual intention is on the object. This is the Midas problem [

13]. One solution is to use a long dwell time to confirm the selection [

14]. Using fixation or HitScan with a long dwell time will also be less efficient due to the saccades and gaze drift error.

We propose GazeEMD, an approach to detect human visual intention which can overcome the limitations mentioned above. We form the question of detecting visual intention from a different perspective than checking if the gaze points are inside a bounding box. We compare the hypothetic gaze distribution over an object and the real gaze distribution to determine the visual intention. For a detected object, we generate sample gaze points within the bounding box. They can be interpreted as a hypothesis of where a human being’s visual focus is located. These sample points are formed as the hypothetic gaze distribution. The gaze signals from the mobile eye-tracking device provide information of the actual location a human is looking at. The actual gaze points are formed as the actual gaze distribution. The similarity between hypothetic gaze distribution and actual gaze distribution is calculated by Earth Mover’s Distance (EMD) distance. The EMD similarity score is used to determine if the visual intention is on the object. We conduct three experiments and compare GazeEMD with a Fixation-based approach and HitScan. The results show that the proposed method can significantly increase accuracy in predicting human intention with the presence of saccades and gaze shift noise.

To our best knowledge, we are the first to deploy EMD similarity to detect if the visual intention is on an object. Until recently, the fixation-based method and the method checking all gazes are still widely used in HRI applications, although both have the problem of gaze drift error. Little research focusing on solving this problem has been reported. The novel contributions of our work compared to the state-of-the-art are:

- 1

We proposed GazeEMD, which can overcome the problem of gaze drift error which the current state-of-the-art methods do not solve and capture saccadic eye movements when referring objects to a robot;

- 2

We show that GazeEMD is more efficient than the fixation-based method and HitScan when confirming selection, using a long dwell time which has not been reported in the literature before. The eye gaze is not required to be held in a small region.

The rest of the paper is organized as follows, In

Section 2, we review the related work. In

Section 3, we explain our method in detail. We describe the experimental setup and evaluation in

Section 4 and

Section 5. In

Section 6, the experimental procedure and evaluation method are presented, and

Section 7 is the discussion.

2. Related Work

The I-DT and I-VT [

2] are two widely used algorithms to identify fixation events. I-DT detects fixation based on the location of gazes. If the gazes are located within a small region, i.e., the coordinates of gazes are under de ispersion threshold, the gazes are considered as a fixation event. I-VT detects fixation based on the velocities of gazes. Gazes with velocities are under the velocity threshold are considered as a fixation event.

When a fixation event is detected, the fixation center is compared with the bounding box of the object to determine the visual intention. Some works use all gaze points to detect visual intention on objects. In [

14,

15], the accumulated gazes on objects are used to determine the object at which the user is looking. HitScan [

11] detects the visual intention by counting the number of gazes entering and the number exiting a bounding box. If the counts of gazes inside a bounding box is higher than the entering threshold, then an event is started. If the count of gazes consecutively located outside of a bounding box is higher, then the event is closed.

In gaze-based HRI, an important issue in referring to objects is the Midas Touch problem [

13]. If the gaze dwell-time fixating on an object is too short, then a selection is activated even if that is not the human intention. To overcome this problem, an additional activation needs to be made to confirm the human intention. The activation can be additional input devices [

16,

17], hand gestures [

18,

19] and eye gestures [

20,

21]. A common solution to overcome the Midas Touch problem is using a long gaze dwell-time [

14,

22] to distinguish the involuntary fixating gazes, which are rarely higher than 300 ms. Several HRI works have adopted this solution. In [

23,

24], dwell time is set to 500 ms to activate the selections of AOIs. In [

8,

9], the 2D gazes obtained from eye-tracking glasses are projected into 3D with the help of an RGB-D camera. The fixation duration is set to two seconds to confirm the selection of an object in [

8]. A total of 15 gaze points on the right side of the bounding box are used to determine the selection in [

9]. In [

21], gaze is used to control a drone. A remote eye-tracker is used to capture the gazes on a screen. Several zones are drawn on the screen with different commands to guide the drone. Dwell time from 300 ms to 500 ms is tested to select a command to control the drone. In [

25], a mobile eye-tracker and a manipulator are used to assist surgery in the operating theatre. A user can select an object by looking at the object for four seconds. Extending gaze dwell time to overcome the Midas Touch problem in HRI has proven valid regardless of the type of eye-tracking device and application scenario. However, a long dwell time would increase the user’s cognitive load [

17]. The users deliberately increase the duration time, which means extra effort is needed to maintain the gaze fixation on the object or AOI. Furthermore, if a user fails to select an object, the long dwell time will make the process less efficient and also increase the user’s effort. Such disadvantages are critical to users who need to use the device frequently, such as disabled people using gaze to control wheelchairs.

Dwell time has also been evaluated with other modalities of selection. In [

26], dwell time is compared with clicker, on-device input, gesture and speech in VR/AR application. Dwell time is preferred as a hands-free modality. In [

17], dwell time obtains a worse performance than the combination of dwell time and single-stroke gaze gesture in wheelchair application. When controlling drone [

21], using gaze gestures is more accurate than using dwell time, although it takes more time to issue a selection. Depending on the different dwell times and applications, the results of these works differ. There is no rule of thumb to select the best specific modality; dwell time still has the potential to reduce the user discomfort and increase efficiency, provided that the problems mentioned in

Section 1 are overcome.

Our proposed approach uses EMD as the metric to measure the similarity of two distributions. EMD was first introduced into the computer vision field in [

27,

28]. The EMD distance was also used in image retrieval [

27,

29]. The information of histograms of images were derived to construct the image signatures

and

where

,

,

m and

,

,

n are the cluster mean, weighting factor and number of clusters of the respective signature. Distance matrix

is the ground distance between

P and

Q and flow matrix

describes the cost of moving “mass” from

P to

Q. EMD distance is the normalized optimal work for transferring the “mass”. In [

29], EMD is compared with other metrics, i.e., Histogram Intersection, Histogram Correlation,

statistics, Bhattacharyya distance and Kullback–Leibler (KL) divergence to measure image dissimilarity in color space. EMD had a better performance than the other metrics. It was also shown that EMD can avoid saturation and maintain good linearity when the mean of target distribution changes linearly.

A similar work [

30] also uses EMD distance to compare the gaze scan path collected from the eye tracker and the gaze scan path generated from images. The main differences between their work and ours are: (i) In [

30], the authors use a remote eye tracker to record gaze scan paths when the participants are watching images. We use First Person View (FPV) images and gazes record by a head-mounted eye tracking device. (ii) We want to detect if the human intention is on a certain object, and their work focuses on generating the gaze scan path based on the image.

4. Experiment

We conduct three experiments to evaluate the performance of our proposed algorithm. First, we see the performance on single objects. Second, we evaluate the case with multiple objects and last is free viewing. In all experiments, three daily objects, i.e., bottle, cup and scissors, are used. Although we only test with three objects, GazeEMD can generalize well on different objects, since the hypothetic gaze distribution is generated based on the size of the bounding box. The objects are placed on the table. The participant wears the eye-tracking glasses and sits next to the table and conducts the experiments.

4.1. Experiment Procedure

4.1.1. Single Objects

In this experiment, each participant performs three experiment sessions. In each session, a different object is used. At the beginning of one session, the participants look at the object first and then look away from the object. During the “look away” period, the participants can freely look at any place in the scene except the object.

4.1.2. Multiple Objects

In this experiment, each participant performs one experiment session. In the session, all objects are placed on the table at the same time. Participants look at objects one by one, in order, and repeat the process several times. For instance, a participant looks at the scissors, bottle and cup sequentially, and then looks back at the scissors and performs the same sequence.

4.1.3. Free Viewing

The scene setup of the experiment is the same as Multiple Objects: instead of looking at objects with a sequence, the participants can freely look at anything, anywhere in the scene.

4.2. Data Collection and Annotation

We asked seven people to participate in the experiments. All participants are aged between 20 and 40, and all of them are researchers with backgrounds in engineering. All the people voluntarily participated in the experiments. One of the participants has experience in eye tracking. The rest had no prior experience in eye tracking.

A researcher with eye-tracking knowledge and experience labelled the dataset. The annotator used the world image to label the data. The object-bounding boxes and gaze points are drawn in the world images. All datapoints are annotated sample by sample. For the Single Objects experiment, an algorithm can be viewed as a binary classifier, i.e., whether the visual intention is on an object or not. The annotations are clear since the visual intention on and off the object is distinguishable. In the phase of looking at the object, even if gazes are outside of the bounding box, they can be labeled as “intention on object”. Conversely, in the phase of looking away from the object, all data can be labeled as “intention not on object”. In the Multiple Objects and Free Viewing experiment, an algorithm acts as a multi-class classifier. If a participant looks at none of the objects, it is treated as a null class. The labeling in the Multiple Objects experiment is also clear, since the sequence of shifting visual intention between objects is distinguishable. During the free viewing period, the annotator subjectively labels the data by experience.

4.3. Implementation

We used Pupil Labs eye-tracking glasses [

33] for eye tracking. The frame rate of the world image and the eye-tracking rate are both set to 60 fps. The YOLOv2 object detector is implemented by [

34].

For the GazeEMD, we calculated the optimal thresholds for each object by Equation (

5). The calculation used the data from the Single Objects experiments. The thresholds were applied in the Multiple Objects and the Free Viewing cases too. The number of bins and the histogram range in GazeEMD is 10 and 715. They were used in all experiments. The I-DT for fixation detection we used is also implemented by [

33]. The dispersion value for the I-DT is three degrees. It was used in all experiments. The selection of parameter

and

are described in

Section 5.1.

7. Discussion and Conclusions

In this work, we propose a new approach to determine visual intention for gaze-based HRI applications. More specifically, our algorithm GazeEMD determines which object a human is looking at by calculating the similarity between the hypothetical gaze points on the object and the actual gaze points acquired by mobile eye tracking glasses. We evaluate our algorithm in different scenarios by conducting three experiments: Single Objects, Multiple Objects and Free Viewing. There are two constraints in the Single Objects. The scene is rather clean—only one object exists at a time—and the participants are asked to look at the object first and then look away. We use this constrained setting for three reasons. First, it is easier to evaluate the performance of GazeEMD as a binary classifier and select appropriate threshold values for different gaze lengths. Second, we can remove the noise from annotation to better evaluate the noises caused by gaze drift error and variations in bounding boxes. Finally, we can create sequences with medium and long gaze lengths (1000 ms and 2000 ms), which are essential for confirming the selection of an object by long dwell time. Evaluating the long gaze lengths is equivalent to solving the Midas problem with a long dwell time in HRI applications.

The results demonstrate that the GazeEMD has excellent performance, as well as the ability to reject the gaze drift error.

Table 2 and

Table 3 show that GazeEMD has the highest mean Kappa and F1 scores on both the sample-to-sample level and event level. When the gaze length is 1000 ms and 2000 ms, the mean Kappa and F1 scores are significantly higher than the fixation-based method and HitScan. For the bottle, when the participants look at the object,

of the gazes are outside of the bounding box. This indicates that GazeEMD has a better performance than the fixation-based method and HitScan, when gaze drift errors occur. The same conclusion can be drawn for the cup and scissors. We extend the evaluation from the single-object case to the scene with multiple objects and free viewing. GazeEMD still has higher Kappa and F1 scores (

Table 5 and

Table 7) than Fixation and HitScan, except the cases of 90 ms and 2000 ms in the Multiple Objects experiment, where the F1 scores are 0.055 and 0.031 lower than the HitScan. Nevertheless, the results are still comparable in these two cases.

In a lot of gaze-based HRI applications, a human needs to interact with objects. A common case is the selection of an object to be picked up by a robotic manipulator. One key issue in this kind of interaction is confirming the selection of an object. The robot knows which object a human is looking at, but does not know that he or she has confirmed the selection of a certain object without additional information, i.e., the Midas problem. One approach to this is looking at the intended object for a longer time, i.e., a long dwell time. This scenario is equivalent to the 2000 ms in the Single Objects experiment. If the gaze dwells on the object for 2000 ms, the object is considered to be selected and confirmed. The robot can perform further steps. For the fixation-based approach, voluntarily increasing the fixation duration will be helpful to increase the number of successful confirmations, but it will also increase the cognitive load [

38]. Another downside of fixation is that it cannot capture the saccadic gazes located within the bounding box. This means that using the fixation-based approach will miss the information from gained from the human gaze moving to different parts of the object. This will interrupt a long fixation; hence, the human needs to try harder to select an object for interaction, which will increase the cognitive load. By using an algorithm such as HitScan, which considers all gazes within the bounding box, this problem could be eliminated. However, both the fixation-based approach and HitScan still cannot deal with the gaze drift problem. The trials would potentially be increased to confirm the selection. However, GazeEMD can overcome these problems. GazeEMD detects more events and has higher accuracy than fixation and HitScan (

Table 3 and

Table 4). This indicates that GazeEMD has more successful confirmations of the selection than the other two methods. This is important in the real application, where the users constantly use gaze for interaction, such as disabled people who will need wheelchairs and manipulators to help with daily life. The GazeEMD also has an excellent performance with a short dwell time (90 ms). It can be applied to the cases in which the gaze and object need to be evaluated but interaction with the object is not required, such as analyzing gaze behavior during assembly tasks [

39], or during the time in which an object is handed between humans or human and robot [

40].

We propose using GazeEMD to detect whether the human intention is on an object or not. We compared GazeEMD with the fixation-based method and HitScan in three experiments. The results show that GazeEMD has a higher sample-to-sample accuracy. Since the experimental data contain gaze drift error, i.e., the intention is on the object while the gaze points are outside of the object bounding box, the higher accuracy of GazeEMD indicates that it can overcome the gaze drift error. In HRI applications, a human often needs to confirm the selection of an object so that the robot can perform further actions. The event analysis with long gaze lengths in Single Objects experiments shows the effect of using a long dwell time for confirmation. The results show that GazeEMD has higher accuracy on the event level and more detected events, which indicates that GazeEMD is more efficient than the fixation. The proposed method now can detect the human intention in the scenario where the detected bounding boxes of the objects are not overlapped. One future research direction could be further developing the algorithm so that the gaze intention can be detected correctly when two bounding boxes are overlapped.