1. Introduction

Chile pepper, the signature crop of the state of New Mexico (NM), plays an important role for many small-acreage growers in the predominantly Hispanic and Native American population areas of the state [1,2]. The green chile (Capsicum annuum) crop is entirely hand-harvested in New Mexico, requiring a large number of farm laborers during a relatively narrow harvest window. High cost and limited labor availability, as reported throughout the U.S. agriculture sector [3,4], are the main reasons for the reduced chile pepper production in New Mexico over the past two decades. In 2020, the harvested area for chile pepper was reported to be 3440 ha (8500 acres) [5], a significant decline from 13,962 ha (34,500 acres) in 1992 [6]. Harvest labor accounts for up to 50 percent of the total production cost in the USA, and studies have shown that this cost can be reduced up to 10 percent with mechanization or robotic harvesting [6]. Despite extensive attempts to use mechanized chile pepper harvesters as early as 1972 [7], these systems have not been adopted by the industry for chile pepper harvest. Mechanized harvesting of chile specifically, and of other crops in general, involves four major tasks [8]. The first task is dividing, in which the machine separates the fruit from the bushes and leaves as it moves from one plant to another, to avoid damaging the plant and the harvested fruit. The second, and most important, is detaching the fruit from the plant. The third is grasping and conveying the fruit after it has been removed from the plant. The fourth is transporting it from the field to the post-processing site and the consumer market. In robotic harvesting, the detachment task is especially important because it is challenging, crop-specific, and has the greatest effect on the economic value of the fruit.

In New Mexico, NM-type red chile and paprika, NM-type long green chile, and Cayenne peppers are the three main types of chile peppers that contribute most to the overall chile industry. Each of these types requires a different kind of harvesting mechanization, as they differ in size, shape, and use. Additionally, product damage is not acceptable for chile peppers sold as fresh-market items, such as green chile, which makes mechanized harvesting more challenging. Heavy-duty, large harvesting machines can operate in larger fields where only one harvesting cycle is needed for the crop, as mechanized harvesters mostly destroy the plant during their first harvesting pass [9]. Moreover, mechanized harvesting is not selective, so it brings up bushes and sometimes soil along with the fruit. At the same time, cultivation in greenhouses and on small farms has been increasing, and these harvesters cannot be used there because of their size. These facts point to the need for other advanced technologies, such as robotic harvesters, for selective and adaptive harvesting; such harvesters should be small enough to work within the canopy and to harvest from a variety of plants in greenhouses. Such robotic harvesting solutions can also be used in fields.

Robotic systems have been used in farming to address growing global challenges in agriculture [3,4,10]. Harvesting has been significantly affected by a consistently shrinking and increasingly expensive labor force; therefore, there has been substantial research on the design and development of field robots for harvesting [10,11]. Robotic arms (manipulators) are either custom designed or selected from commercially available platforms, with degrees-of-freedom (DoF) varying from two to seven and the majority having three [10,12,13,14]. However, none of the past research on harvesting robots has offered a systematic analysis and design procedure for selecting the number of DoF [10]. Robotic end-effectors, on the other hand, are mostly custom made and specialized for harvesting specific crops. Some of these end-effectors grasp and hold the fruit using suction cups [15,16] or a combination of suction cups and robotic fingers [17,18]. Detachment is carried out by thermal cutting, scissor cutting, or physically twisting and pulling the fruit at the stem [12,14,19].

Considerable research effort has gone into robotic harvesting of fruits that hang on small plants near the ground [20], including berries and tomatoes [14,21,22,23]. The fruit removal mechanisms adopted by these robots include (1) cutting the stem [13,15] and (2) gripping or grasping the fruit and applying a normal force to destem it. For example, Gunderman et al. [21] used a soft gripper for berry harvesting that takes into account the fingertip force, the fruit size and shape, and the nature of the plant. Hohimer et al. [24] recently presented a 5-DoF apple harvesting robotic arm with a soft gripper as the end-effector and detachment mechanism. Other robotic systems use the manipulator's movement to apply the detachment force [16,25], rather than relying only on the torque of the wrist joints. Despite variations in their core concepts, all of these designs considered the need for delicate grasping to avoid fruit damage [26,27].

All of these harvesting techniques, which grip the fruit and pluck it by applying force, have mainly been applied to trees and rigid-stem plants. For delicate plants, however, this method can cause considerable damage to the unharvested fruit and to the plant itself. Harvesting the fruit by cutting the pedicel (the stem attaching the fruit to the plant) is very useful when the plant is delicate and cannot sustain large forces from the robotic manipulator. In this method, no extra force is applied to the plant; the robot simply cuts the pedicel and removes the fruit. For instance, Liu et al. [15] developed a litchi picking robot with a cutting mechanism, in which pneumatic force is transmitted to the cutting blades through a tendon and a rigid bar. Two designs were discussed and compared, along with a detailed quality comparison of fruit harvested by hand picking, manual mechanized picking, and the harvester. Laser cutting has also been studied by Liu et al. [28] as a detachment technique for spherical fruit. Jia et al. [29] developed an integrated end-effector that grips the fruit and then cuts the stem as a single device, using a clutching mechanism; the holding and cutting actions were designed as a logical sequence.

Task and motion planning are also essential parts of robotic harvesting systems. A few works have studied task planning strategies for harvesting, mainly based on coverage path planning to pick all available fruits in a scene [16] or on minimizing the time required to move between fruits [30]. Direct displacement toward the desired end-effector position is the most common approach to path planning for robotic manipulators; it has been achieved using position-based control [19] and visual feedback control [31]. Other control algorithms, such as fuzzy control [19], a combined PD and linear quadratic technique [32], and impedance control [33], have also been employed for coordinating robotic manipulators and modulating the grasp.
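As a minimal sketch of the direct-displacement idea (not the controller of any cited work), the straight path toward the target can be discretized into evenly spaced Cartesian waypoints, each of which would then be passed to an inverse kinematics solver. The function name `straight_line_waypoints` and the `max_step` parameter are hypothetical.

```python
import math

def straight_line_waypoints(start, goal, max_step):
    """Evenly spaced Cartesian waypoints from start to goal, both included.

    The number of segments is the smallest integer that keeps every step
    no longer than max_step (same length units as start and goal).
    """
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    n_segments = max(1, math.ceil(math.dist(start, goal) / max_step))
    return [
        tuple(s + (g - s) * i / n_segments for s, g in zip(start, goal))
        for i in range(n_segments + 1)
    ]

if __name__ == "__main__":
    # hypothetical 100 mm move along x with steps of at most 30 mm
    for waypoint in straight_line_waypoints((150.0, 0.0, 80.0), (250.0, 0.0, 80.0), 30.0):
        print(waypoint)  # each waypoint would be handed to an IK solver
```

Interpolating in Cartesian space keeps the end-effector on a straight line, which matters when the cutter must approach a stem without sweeping through neighboring branches; interpolating joint angles instead would generally produce a curved tool path.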

Despite all of these efforts for harvesting different crops [10], there has been no effort toward the design and development of robots or intelligent machines for harvesting chile pepper. The non-selective nature of mechanized machines, discussed above, has been a major factor preventing the transition to mechanical harvest, since human laborers can selectively pick marketable green fruit, whereas mechanical harvesters strip all fruit, including immature pods that could provide a later harvest [8,9]. Robotic harvesters could potentially harvest marketable green chile fruit selectively, and the successful implementation of robots for NM green chile could reverse the decline in acreage in the state (from 38,000 acres in the mid-1990s to 8400 acres in 2018). However, the complexity of the chile pepper crop environment poses challenges for the hardware design, computer vision, and motion/control algorithms needed to achieve an effective robotic harvester. The best strategy is therefore to break the problem down into more manageable parts.

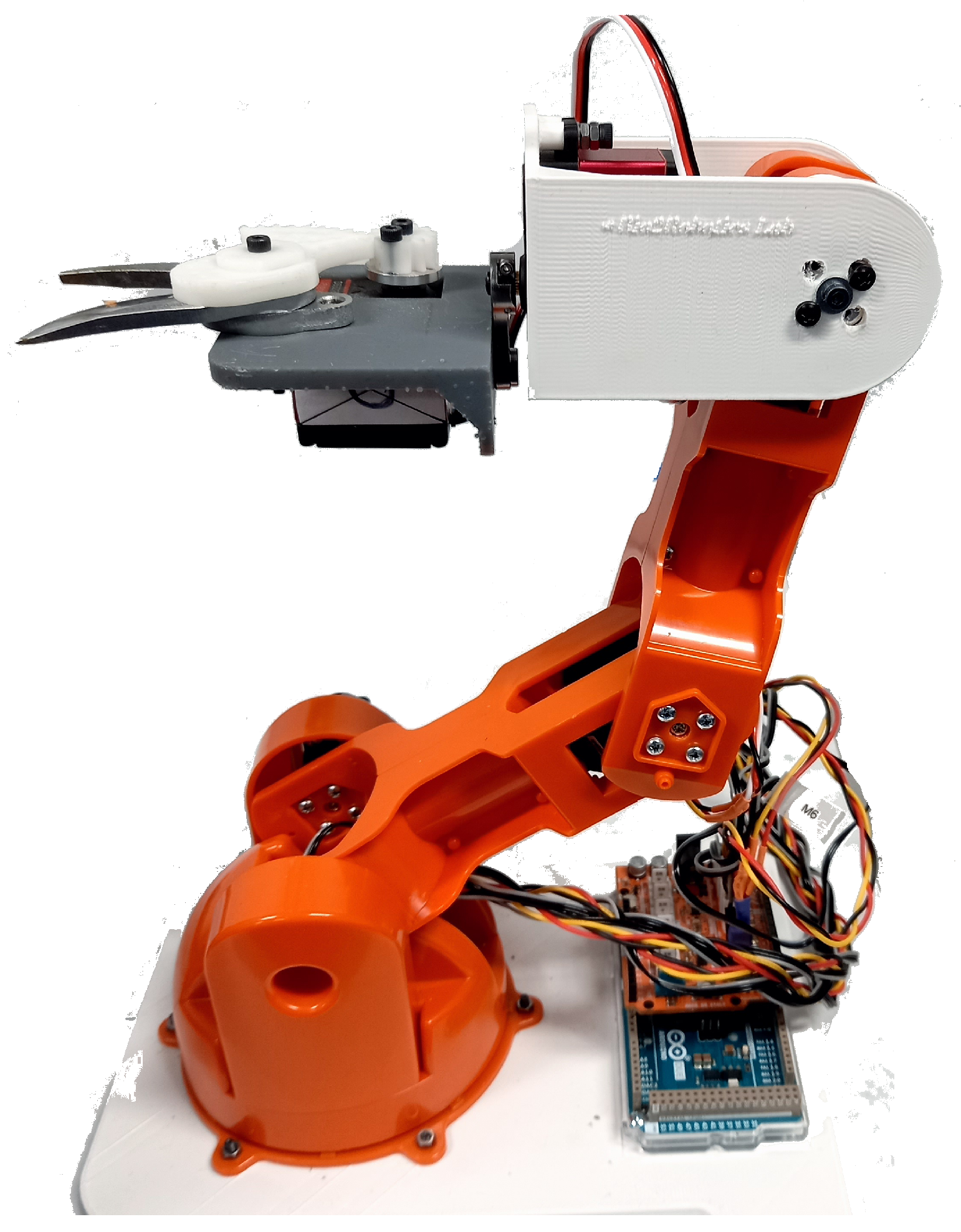

This work presents a feasibility study of using a robotic arm and a customized end-effector for harvesting chile pepper. The structure of the green chile pepper plant and the random distribution of fruits all around it call for a more dexterous robotic arm with 5 to 7 degrees-of-freedom. A commercial rigid-link robot, the Tinkerkit Braccio Arm with 5 DoF, was modified and used along with a cutting mechanism to detach chile pods from the plant in a laboratory setting. As for other low-hanging crops on small plants, such as berries and tomatoes, a cutting mechanism was considered the primary option for the end-effector. The scope of this paper is to cut the fruit stem with a cutting mechanism while keeping the plant and the other unharvested fruit safe. MATLAB™-based software was developed that localizes the fruit in 3D space based on the human operator's identification of the fruit in the image. For this purpose, an Intel RealSense Depth Camera D435i is used to collect stereo and RGB images. The reachable and dexterous workspace of the robot was studied with respect to the spatial distribution of chile peppers to determine the optimal configuration of the robot for the maximum harvesting rate. Motion planning in Cartesian space was carried out for the manipulation and harvesting tasks. The robotic harvesting of chile pepper was examined in a series of bench-top tests on a total of 77 chile peppers. The obtained data were analyzed and harvesting metrics were determined to assess the proof-of-concept and the feasibility of using the robotic system for chile pepper harvesting.

The organization of this paper is as follows. Section 2 gives an overview of the overall robotic harvesting system and its components. Section 3 discusses the details of the harvesting robotic arm, its forward and inverse kinematics, the workspace analysis, the customized end-effector design, and prototyping. Section 4 introduces the vision-based fruit localization, the camera setup, the definition of the frames, and the error analysis. Section 5 presents the study of the geometrical features of chile peppers and the distribution of chile pods on plants grown in pots. Section 6 introduces the motion planning strategy and the harvesting scheme. Finally, Section 7 discusses the results of the experimental harvesting and the obtained harvesting indicators.

4. Vision-Based Fruit Localization

Vision-based fruit localization was used to determine the coordinates of the chile pepper and its stem. The location of an object in the camera frame can be estimated in 3D space, given the camera's intrinsic and extrinsic parameters. For this purpose, an Intel RealSense™ Depth Camera D435i is used, which provides RGB and depth images. The camera is also equipped with an Inertial Measurement Unit (IMU), which is used to determine the orientation of the camera frame relative to the world frame. Vision-based identification or classification of the chile fruit is outside the scope of this research; instead, the human operator identifies chile pods in the RGB image and clicks on their location, which can be done with less error in the RGB image than in a greyscale depth image. The camera also provides 3D data, which can be taken as a point cloud corresponding to every depth-image pixel. By combining the RGB and depth images, an image pixel can be correlated with its 3D location in the camera frame. However, the location of the targeted point is required in the robot frame, so an additional frame, the world frame, is introduced at a known point in the robot frame. All these frames and the camera setup are shown in Figure 9.
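The pixel-to-3D step described above can be illustrated with the standard pinhole camera model. This is a minimal sketch, assuming an undistorted image, a depth value measured along the optical axis, and placeholder intrinsics; `PinholeIntrinsics`, `deproject`, and the numeric values are hypothetical and are not the calibration of the camera used here. librealsense offers its own deprojection routine, `rs2_deproject_pixel_to_point`, for use with the intrinsics reported by the camera.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PinholeIntrinsics:
    fx: float  # focal length in pixels along image columns
    fy: float  # focal length in pixels along image rows
    cx: float  # principal point, pixel column
    cy: float  # principal point, pixel row

def deproject(u: float, v: float, depth: float, k: PinholeIntrinsics):
    """Map pixel (u, v) with depth (distance along the optical axis)
    to a 3D point (X, Y, Z) in the camera frame, ignoring lens distortion."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)

if __name__ == "__main__":
    k = PinholeIntrinsics(fx=615.0, fy=615.0, cx=320.0, cy=240.0)  # placeholder values
    # a hypothetical operator click at pixel (400, 200) with a 0.45 m depth reading
    print(deproject(400, 200, 0.45, k))
```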

The robot is placed on a lab bench top, and its coordinate frame (the robot frame) is shown in Figure 9. The xy-plane of this frame is aligned with the bench top. A global frame, called the world frame, is also introduced. The locations of the camera and of the robot-frame origin can be measured in the world frame, as both are fixed for the whole operation. The z-axis of the world frame is aligned with gravity, whereas the robot frame may not be, because of the inherent inclination of the table and the floor. The orientation of the camera frame with respect to the world frame is described by a rotation matrix. The accelerometer readings were used to solve for the roll and pitch angles, and the yaw angle was assumed known because of the fixed and known pose of the camera with respect to the benchtop test setup in this study. We externally verified the yaw rotation by aligning the horizontal grid lines (see Figure 9).

The normalized accelerometer reading and the normalized gravity vector are related through this camera-to-world rotation matrix. Solving this relation for the roll and pitch angles, and then substituting them, together with the known yaw angle, back into the rotation matrix, yields the camera orientation expressed in terms of the normalized accelerometer readings (Equation (4)).

In a practical real-world setting with a moving camera, as opposed to the current case, an additional orientation sensor (e.g., an electronic compass or a 9-DoF IMU) would be needed to calculate the required rotation angles and the rotation matrix.
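A minimal sketch of the roll/pitch step follows. It assumes a Z-Y-X (yaw-pitch-roll) convention, R = Rz(yaw)·Ry(pitch)·Rx(roll), mapping camera-frame vectors into the world frame, with the world z-axis pointing along the measured gravity reaction; this convention may differ from the one used in the original equations, and axis and sign conventions must be checked against the actual sensor. Under this assumption the normalized static reading is a = R^T·[0, 0, 1]^T = [-sin(pitch), sin(roll)·cos(pitch), cos(roll)·cos(pitch)]^T, so roll = atan2(a_y, a_z) and pitch = atan2(-a_x, sqrt(a_y² + a_z²)). Yaw cancels out of a, which is why it has to be supplied separately. All function names are hypothetical.

```python
import math

def roll_pitch_from_accel(ax, ay, az):
    """Roll and pitch (rad) from a static accelerometer reading.

    Assumes the reading equals R^T @ [0, 0, 1] in units of g, with
    R = Rz(yaw) @ Ry(pitch) @ Rx(roll) mapping camera vectors to the world frame.
    """
    n = math.sqrt(ax * ax + ay * ay + az * az)
    ax, ay, az = ax / n, ay / n, az / n  # normalize to units of g
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch

def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def camera_to_world_rotation(accel, yaw):
    """Camera-to-world rotation from an accelerometer reading and a known yaw (rad)."""
    roll, pitch = roll_pitch_from_accel(*accel)
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))
```

Because the readings are normalized inside `roll_pitch_from_accel`, raw values in m/s² or in units of g give the same angles.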

In Equation (4), the three accelerometer components are normalized accelerations, i.e., accelerations expressed in units of g, where g is the gravitational acceleration. When the camera is set up on the tripod, these values can be read once from the Intel RealSense Viewer software and entered into the MATLAB™ program. The origin of the world frame was marked on the table and is visible in the camera image. By direct measurement, the 3D location of the camera-frame origin in the world frame can be obtained. Combining this location with the rotation matrix gives the homogeneous transformation from the camera frame to the world frame.

The rotation of the robot frame with respect to the world frame accounts for any tilt of the table top. There is no rotation about the z-axis, because both frames lie on the table top. The table tilt angles about the x- and y-axes were determined once, using an inclinometer, after the table had been set up, and this rotation matrix is composed of the corresponding elementary rotations about those two axes.

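The position of the origin of the world frame W is already known in the robot frame; together with the tilt rotation, it defines the homogeneous transformation between the world frame and the robot frame. The combined homogeneous transformation, mapping the camera frame into the robot frame, is obtained as the product of this transformation and the camera-to-world transformation. The position of any point given in the camera frame can then be transformed into the position of the same point in the robot frame by applying the combined transformation to its homogeneous coordinates.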
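The frame chain camera → world → robot can be sketched with 4×4 homogeneous matrices. This is a minimal illustration under stated assumptions, not the paper's equations: the robot frame's orientation in the world frame is assumed to be Rx(tilt_x)·Ry(tilt_y) (the composition order in the original is not recoverable here), and all function and parameter names are hypothetical.

```python
import math

def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def transpose(m):
    return [list(row) for row in zip(*m)]

def homogeneous(r, p):
    """4x4 homogeneous transform from a 3x3 rotation r and a translation p."""
    return [r[0] + [p[0]], r[1] + [p[1]], r[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]

def camera_to_robot(r_wc, p_wc, tilt_x, tilt_y, p_rw):
    """Combined camera -> robot transform.

    r_wc, p_wc: camera orientation and origin expressed in the world frame.
    tilt_x, tilt_y: table tilt angles (rad); the robot frame's orientation in
        the world frame is assumed to be rot_x(tilt_x) @ rot_y(tilt_y).
    p_rw: origin of the world frame expressed in the robot frame.
    """
    t_wc = homogeneous(r_wc, p_wc)               # camera -> world
    r_wr = matmul(rot_x(tilt_x), rot_y(tilt_y))  # robot orientation in world
    t_rw = homogeneous(transpose(r_wr), p_rw)    # world -> robot
    return matmul(t_rw, t_wc)                    # camera -> robot

def transform_point(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    ph = list(p) + [1.0]
    return [sum(t[i][k] * ph[k] for k in range(4)) for i in range(3)]
```

The world-to-robot rotation is the transpose of the robot's orientation in the world frame because rotation matrices are orthogonal, so no general matrix inversion is needed.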

4.1. Performance of 3D Localization and Camera

A test setup was developed to measure the accuracy of the 3D location estimation. Figure 9 shows the test setup with a known grid marked on the table top. Because the measurements are estimated in 3D, some known positions elevated above the table surface are also needed. A grid-marked bar with a flat surface was therefore designed; it can be placed at any known point on the 2D grid to raise the z-coordinate of the reference points. Note that the vertical bar should be perpendicular to the table, but it need not be aligned with the gravity vector, since the inclination of the table is already accounted for in the transformations. The possible sources of error in the 3D localization system are discussed below. The overall error of the measurement system is given in Table 3.
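The exact error metric reported in Table 3 is not restated in this section. As a hedged sketch of how such a summary could be computed from the grid test points, the function below (hypothetical name `localization_errors`) compares estimated positions with the known grid positions and reports per-axis mean absolute error together with the mean and maximum Euclidean error.

```python
import math

def localization_errors(estimated, reference):
    """Summarize 3D localization error against known grid positions.

    estimated, reference: equal-length sequences of (x, y, z) points in the
    robot frame, in the same units. Returns per-axis mean absolute error and
    the mean and maximum Euclidean (straight-line) error.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("need equal, non-empty point lists")
    n = len(estimated)
    per_axis = [sum(abs(e[i] - r[i]) for e, r in zip(estimated, reference)) / n
                for i in range(3)]
    dists = [math.dist(e, r) for e, r in zip(estimated, reference)]
    return {"mae_xyz": per_axis, "mean_error": sum(dists) / n, "max_error": max(dists)}
```

Reporting per-axis errors alongside the Euclidean error helps separate depth-direction error from the in-plane error caused by operator clicks.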

4.1.1. Human Operator Error

As mentioned previously, the human operator identifies chile peppers in the image and, by clicking on the fruit location, also provides a reference point for the robot to position its end-effector for the detachment task. Human operator error arises either from the difficulty of clicking on the same pixel repeatedly (repeatability) or from clicking on a point off the fruit (accuracy). This is a very important factor, since the robot's positioning relative to the stem relies on the reference point provided by the human operator. Thus, human error is one of the largest contributors to the overall error of this vision-based localization system.

4.1.2. Depth Camera

The installed depth camera has an inherent error in its depth measurement. This error is random (unsystematic) and must be accepted as specified by the Original Equipment Manufacturer (OEM): a depth error of up to 2% should be expected at a range of 2 m. Much of this error can be reduced by averaging multiple readings, which is only possible when the camera and the captured scene are stationary. For moving frames, reducing this error is more challenging.
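A minimal sketch of the averaging described above, for one pixel of a static scene: invalid readings are assumed to be reported as 0 and are discarded, and the sample standard deviation is returned so the spread of the readings can be checked. For independent zero-mean noise, the standard error of the mean shrinks roughly as 1/sqrt(N); averaging does not remove any systematic bias. The function name `averaged_depth` is hypothetical.

```python
import statistics

def averaged_depth(samples, invalid=0.0):
    """Average repeated depth readings of one pixel from a static scene.

    Readings equal to `invalid` (assumed to mark missing depth) are dropped.
    Returns (mean, sample standard deviation or 0.0, number of valid readings).
    """
    valid = [d for d in samples if d != invalid]
    if not valid:
        raise ValueError("no valid depth readings")
    spread = statistics.stdev(valid) if len(valid) > 1 else 0.0
    return statistics.fmean(valid), spread, len(valid)
```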

4.1.3. Accelerometer

The camera is always stationary in this application, so the only acceleration the embedded accelerometer reads is due to gravity. The gravity vector is resolved into three Cartesian components, which are normalized and used to determine the camera orientation, as given in Equation (4). However, this orientation estimate has a known limitation: any rotation about the gravity vector is not reflected in the orientation matrix. This rotation was therefore verified externally before taking measurements, by ensuring that each horizontal grid line (along the x-axis of the robot frame) appears perfectly horizontal in the camera image, i.e., that all points on each such line have the same pixel row (vertical image coordinate). This external verification may itself introduce some random error.
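The verification criterion above (all points on a horizontal grid line share one pixel row) can be quantified by fitting a line through clicked pixel points and reporting its tilt angle. This is a hypothetical check, not the authors' procedure; because the relation between image-plane tilt and camera yaw depends on the camera pose, the result indicates how well the criterion is met rather than giving a yaw estimate. The function name is hypothetical.

```python
import math

def grid_line_tilt_deg(points):
    """Tilt (degrees) of a grid line in the image from clicked pixel points.

    points: (column, row) pixel coordinates along one horizontal grid line.
    Fits row = a + b * column by least squares and returns atan(b) in degrees;
    0 means every point lies on the same pixel row. Image rows grow downward,
    so a positive value means the line descends to the right in the image.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mean_c = sum(c for c, _ in points) / n
    mean_r = sum(r for _, r in points) / n
    sxx = sum((c - mean_c) ** 2 for c, _ in points)
    if sxx == 0:
        raise ValueError("points must span more than one column")
    sxy = sum((c - mean_c) * (r - mean_r) for c, r in points)
    return math.degrees(math.atan(sxy / sxx))
```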

7. Results and Discussion

A series of experiments was conducted to verify the performance, demonstrate the feasibility of using the robotic system, and identify potential factors behind unsuccessful cases. Performing this study in an indoor (laboratory) setting eliminated some of the common challenges of outdoor settings: the environment was structured (fixed table and fixed camera view), the lighting was consistent and controlled, and there were no disturbances due to wind. Other simplifying assumptions made in this work include: (1) fruits are fully exposed to the vision system and the robot (the leaves were moderately trimmed); (2) average geometrical features of chile pepper pods were used for robot positioning; and (3) the stems are vertically oriented.

The cutting mechanism and technique are suitable for hanging fruits only when the pedicel is separated from other stems and plant parts and is long enough to be cut selectively. As shown in Figure 15a, in some cases the pedicel is too short for the cutting mechanism to cut it selectively for detachment. A higher-precision robot may be able to do this job, provided that the cutter is also narrow enough. Another issue is that, in some cases, a large cluster of fruits grows from the same point on the plant (Figure 15b). In that case, it is first very hard for the vision-based localization system to capture the exact 3D location of the target chile pepper; second, it is also difficult for the cutting mechanism to cut the correct chile, which may increase the damage rate. Another challenging case is shown in Figure 15c, where the chile pepper pedicel (target stem) is very close to the main stem or a sub-branch of the plant. In this case, the robot may damage the plant and, eventually, all the unharvested fruit on that sub-branch.

In addition to the aforementioned limitations, the 5-DoF robot can only reach chile peppers that are approachable from the center of the robot without any obstacle in the path. When the robotic manipulator is static on a platform, the main stem of the plant is one major obstacle: chile peppers that have the main stem in the robot's path cannot be localized by the robot. Using the same data on 57 chile peppers as in Section 5, a MATLAB™ simulation was run to assess the effect of the main stem lying in the path to the chile pepper. Figure 16 shows that, out of 57 chile peppers, 21 (36.8%) lie in the region that is not reachable by the robot because of the stem. The simulation shows that the orientation of the plant may change this percentage. In this study, however, the orientation of the plant was not intentionally selected to increase the success rate; rather, it was chosen arbitrarily.
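One simple way to model the main-stem obstruction is sketched below; it is a hypothetical geometric check, not the MATLAB™ simulation used in this study. The stem is treated as a vertical cylinder, and a fruit counts as blocked when the straight horizontal path from the robot base to the fruit passes within `stem_radius` of the stem axis (the radius could include a clearance margin for the cutter). All names and parameters are assumptions.

```python
import math

def path_blocked(fruit_xy, stem_xy, stem_radius, base_xy=(0.0, 0.0)):
    """True if the straight horizontal path from the robot base to the fruit
    passes within stem_radius of the main-stem axis (stem modeled as a
    vertical cylinder; all coordinates in the same units, robot frame)."""
    bx, by = base_xy
    dx, dy = fruit_xy[0] - bx, fruit_xy[1] - by
    sx, sy = stem_xy[0] - bx, stem_xy[1] - by
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return math.hypot(sx, sy) < stem_radius
    # parameter of the point on segment base -> fruit closest to the stem axis
    t = max(0.0, min(1.0, (sx * dx + sy * dy) / length_sq))
    return math.hypot(sx - t * dx, sy - t * dy) < stem_radius

def blocked_percentage(fruits_xy, stem_xy, stem_radius):
    """Percentage of fruits whose straight path from the base is blocked."""
    blocked = sum(path_blocked(f, stem_xy, stem_radius) for f in fruits_xy)
    return 100.0 * blocked / len(fruits_xy)
```

Rotating all fruit coordinates about the stem axis before calling `blocked_percentage` would mimic changing the plant orientation.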

Chile harvesting was performed by clicking on the image in the MATLAB™-based program, which computes the location of the chile and sends the coordinates to an Arduino. The same test setup shown in Figure 2 was used. The robot was raised 120 mm relative to the chile pot using a platform, and the chile pots were placed at 180 mm along the x-axis of the robot frame. These values were found to be the optimal location of the robot, from which it can reach the maximum number of chile peppers according to the chile distribution data. Because the camera is fixed at one location, some view-blocking leaves were trimmed so that all the chile peppers were visible in the image frame. An example of the harvesting operation is shown in Figure 17. For recorded videos of the operation, please refer to the Supplementary Materials, Video S1.

A number of trials were performed to assess the localization success rate, detachment success rate, harvest success rate, cycle time, and damage rate. These performance metrics are defined in Reference [10] for harvesting in a canopy and are interpreted for lab testing as follows:

Fruit localization success [%]: The number of successfully localized ripe fruit per total number of ripe fruit taken for testing in the lab setup.

Detachment success [%]: The number of successfully harvested ripe fruit per total number of localized fruit.

Harvest success [%]: The number of successfully harvested ripe fruit per total number of ripe fruit taken for testing in the lab setup.

Cycle time [s]: Average time of a full harvest operation, including human identification, localization, and fruit detachment.

Damage rate [%]: The number of fruits or pedicels damaged by the robot per total number of localized ripe fruit.
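The rate definitions above can be written as a short function; the names `TrialCounts` and `harvest_metrics` are hypothetical. Note that harvest success equals localization success times detachment success (divided by 100), since both share the number of harvested fruit. As a usage check, the integer counts 29 localized, 19 harvested, and 2 damaged out of 77 fruits reproduce the rounded rates given in the Conclusions (37.7%, 65.5%, 24.7%, and 6.9%); these counts are back-calculated here from those rates, not taken from Tables S1 and S2.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrialCounts:
    total_ripe: int  # ripe fruit taken for testing
    localized: int   # fruit with the stem centered between the cutter blades
    harvested: int   # fruit successfully detached and collected
    damaged: int     # fruits or pedicels damaged by the robot

def harvest_metrics(c: TrialCounts) -> dict:
    """Percentage metrics following the lab definitions listed above."""
    if c.total_ripe <= 0 or c.localized <= 0:
        raise ValueError("need at least one ripe and one localized fruit")
    return {
        "localization_success_pct": 100.0 * c.localized / c.total_ripe,
        "detachment_success_pct": 100.0 * c.harvested / c.localized,
        "harvest_success_pct": 100.0 * c.harvested / c.total_ripe,
        "damage_rate_pct": 100.0 * c.damaged / c.localized,
    }

def mean_cycle_time(durations_s):
    """Average duration (s) of full harvest operations."""
    return sum(durations_s) / len(durations_s)
```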

Localization is considered correct only if the stem is found at the center between the two blades of the cutter. Based on the 77 ripe fruits taken for harvesting, the harvest testing results are summarized in Table 5. For more details and the raw data used for these calculations, please refer to the Supplementary Materials, Tables S1 and S2.

Comparing the performance of a robotic harvester with that of a human laborer is a valid way to indicate whether the use of a robot is effective, efficient, and economical. However, to the best of our knowledge, direct data on hand-picking performance indicators have not been collected. Based on our own experience in the lab, human clipping, for example, is faster; however, considering the burden of this repetitive task and the ability of robots to perform repetitive tasks over longer periods than human laborers, the obtained 7 s cycle time is justified. Additionally, the average cycle time for different harvesting robots reported in Reference [10] is 33 s, with a wide range of 1 to 277 s, which places our robotic harvester's cycle time within a reasonable range.

Based on previous studies, a fruit damage rate of 6.9% is acceptable for mechanized/robotic harvesting. Bac et al. [10] reported an average fruit damage rate of 5% for robotic harvesters across different crops, ranging from 0% to 13%. For chile pepper specifically, mechanized harvesters have been reported with fruit damage rates as low as 7% to 11%, described as "acceptably low" [9]. For some more aggressive mechanized harvesters, fruit damage rates as high as 20% have been reported [8]. In future work, we will use in-hand visual servoing and related motion planning to improve this metric.

In this study, stem localization was carried out through a combination of human operator fruit detection and a robot pre-motion planning algorithm. Therefore, true localization, which must be vision-based, was not implemented. Instead, we defined localization slightly differently: it was considered correct only if the stem was found at the center between the two blades of the cutter. As mentioned, this outcome results from the fruit identification by the human operator and other pre-adjustments in the robot motion planning based on our knowledge from the fruit geometrical analysis section. Despite the simplifying assumptions, this task had a higher failure rate than the other factors because of the complexity of the stem and plant structure (see Figure 15). One solution to this issue is to use an in-hand camera (mounted at the wrist) to provide real-time, close-up information about the stem's geometrical features; the robot could then make on-the-fly adjustments to place the cutter in an optimal position for successful detachment.

The results obtained for robotic harvesting with a 5-DoF robot are promising for future studies, based on the presented feasibility study and the observed challenges, which are specific to chile pepper harvesting. The results indicate that a robotic arm with more degrees-of-freedom could improve chile pepper harvesting performance. Moreover, the outcomes of this study indicate the importance of manipulator mobility for achieving a higher harvesting rate. For example, if the same manipulator were mounted on a mobile robot, it could reach the plants from all sides, which would increase the localization and harvesting success rates. To achieve a fully autonomous chile pepper harvesting robot, our overarching goal, two major components need to be integrated into the current system. The first is computer vision for fruit identification and localization. As many robotic harvesting applications rely on color differences, it will be critical to establish features capable of distinguishing between the green foliage of the chile plants and the green chile pods themselves, as in Figure 3. Work on detecting other crops with a similar identification challenge, such as green bell peppers [35,36] and cucumbers [37], provides some guidance on identifying green fruits based on other features (e.g., slight variations in color or texture (i.e., smoothness), or spectral differences in the NIR). Leveraging machine learning and the capabilities of convolutional neural networks (CNNs), which have shown excellent performance in image classification and segmentation, could overcome this challenge. The second, from the standpoint of robot operation, is more advanced motion planning and control algorithms to carry out the harvesting tasks.

8. Conclusions

This paper presents a feasibility study on robotic harvesting of chile pepper in a laboratory setting using a 5-DoF serial manipulator. A simple, easy-to-develop 3D location estimation system was developed with a low mean error, and it can be used efficiently in various research applications that involve data logging. The estimation system is linear and does not rely on affine models or other advanced computer vision theory; the trade-off is a considerable setup effort for each data logging session. A detailed study, the first of its kind, was performed on the chile pepper plant and fruit to examine the feasibility and challenges of robotic harvesting of chile pepper, which is much needed by researchers contributing to this field. Based on the collected data, it was shown that an optimum location of the robotic arm can be extracted. For the Braccio robot arm, the optimum placement was found to be with the robot raised 120 mm relative to the chile pot and the pot located 180 mm along the x-axis of the robot frame. The forward and inverse kinematics of the Braccio robot were derived for workspace analysis and motion planning. A chile pepper harvesting robot was developed with a customized cutting mechanism, and its performance was examined in a series of in-laboratory harvesting tests. The developed harvesting robot showed promising results, with a localization success rate, detachment success rate, harvest success rate, damage rate, and cycle time of 37.7%, 65.5%, 24.7%, 6.9%, and 7 s, respectively. This study shows that chile pepper harvesting is feasible with robotics and that researchers should put more effort into this area in the future. By eliminating the inherent drawbacks and limitations of the presented system, the success rate can be increased.