Graph-Based Framework on Bimanual Manipulation Planning from Cooking Recipe

Abstract

1. Introduction

2. Related Works

3. Proposed Method

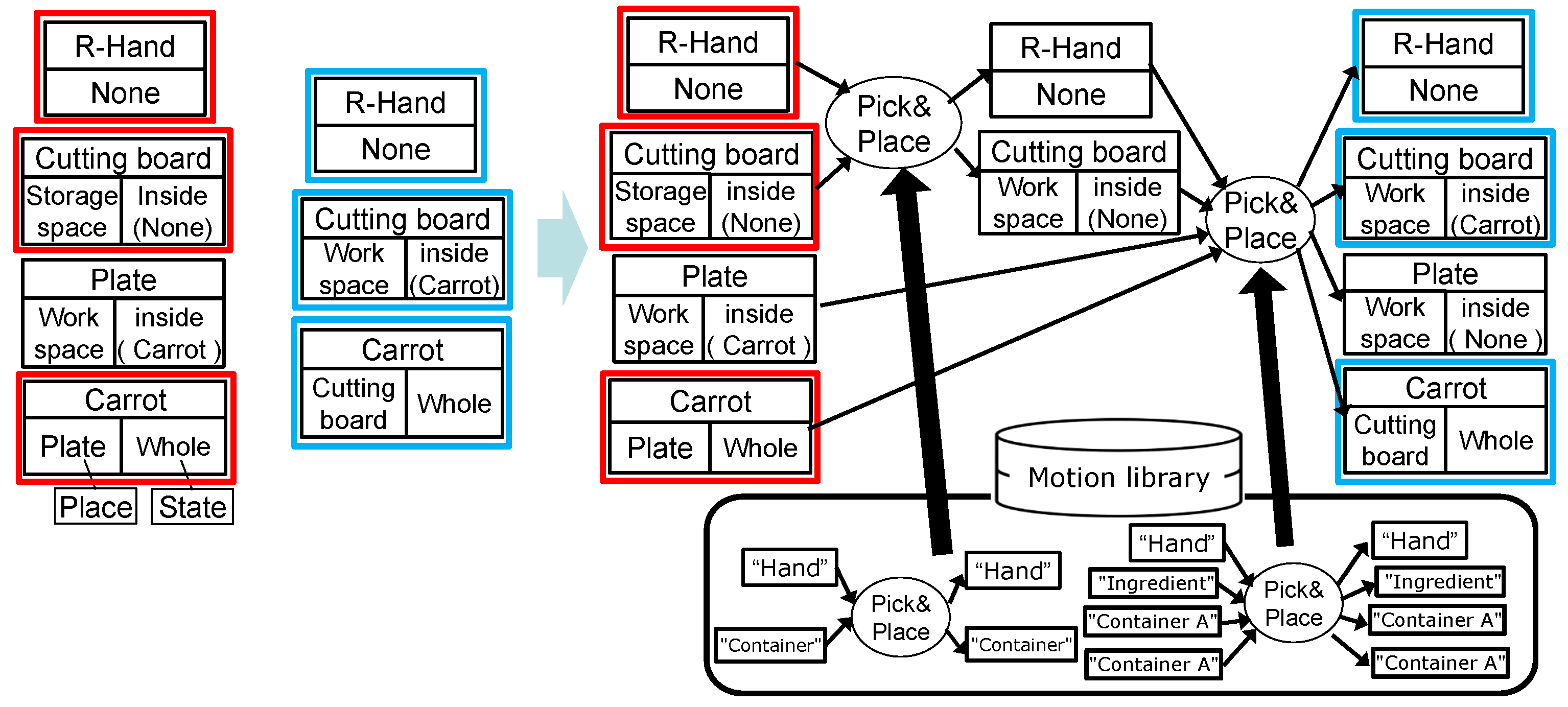

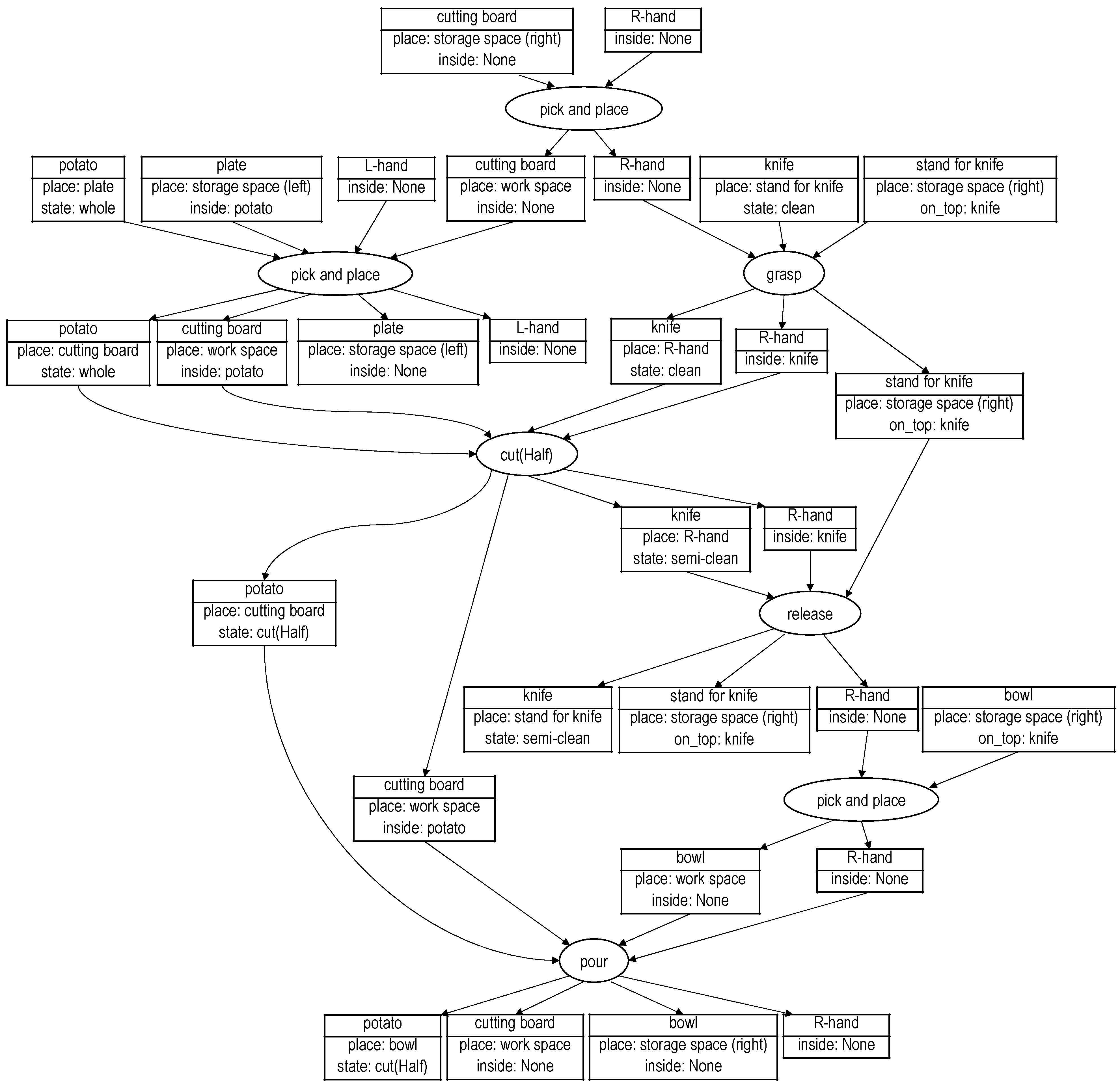

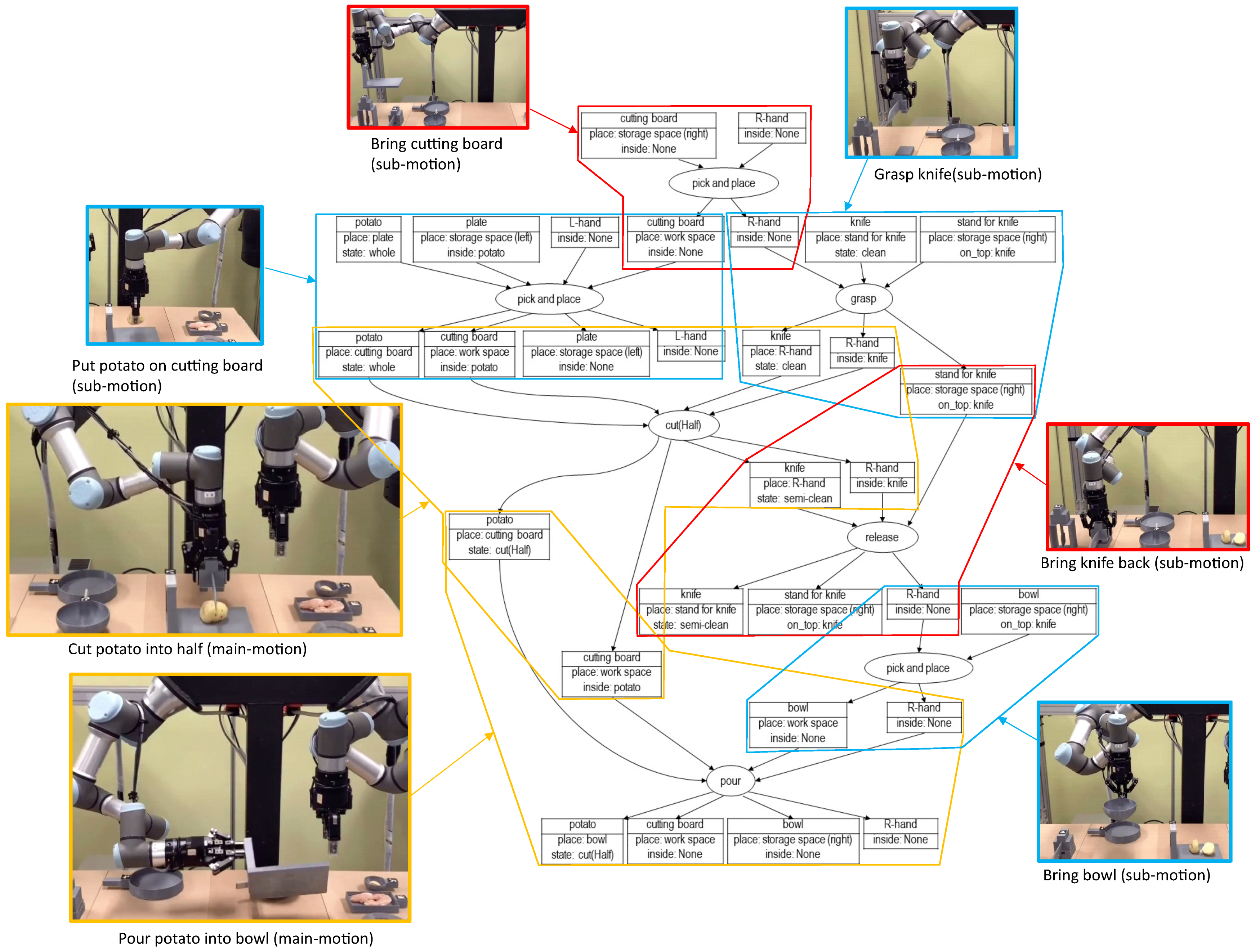

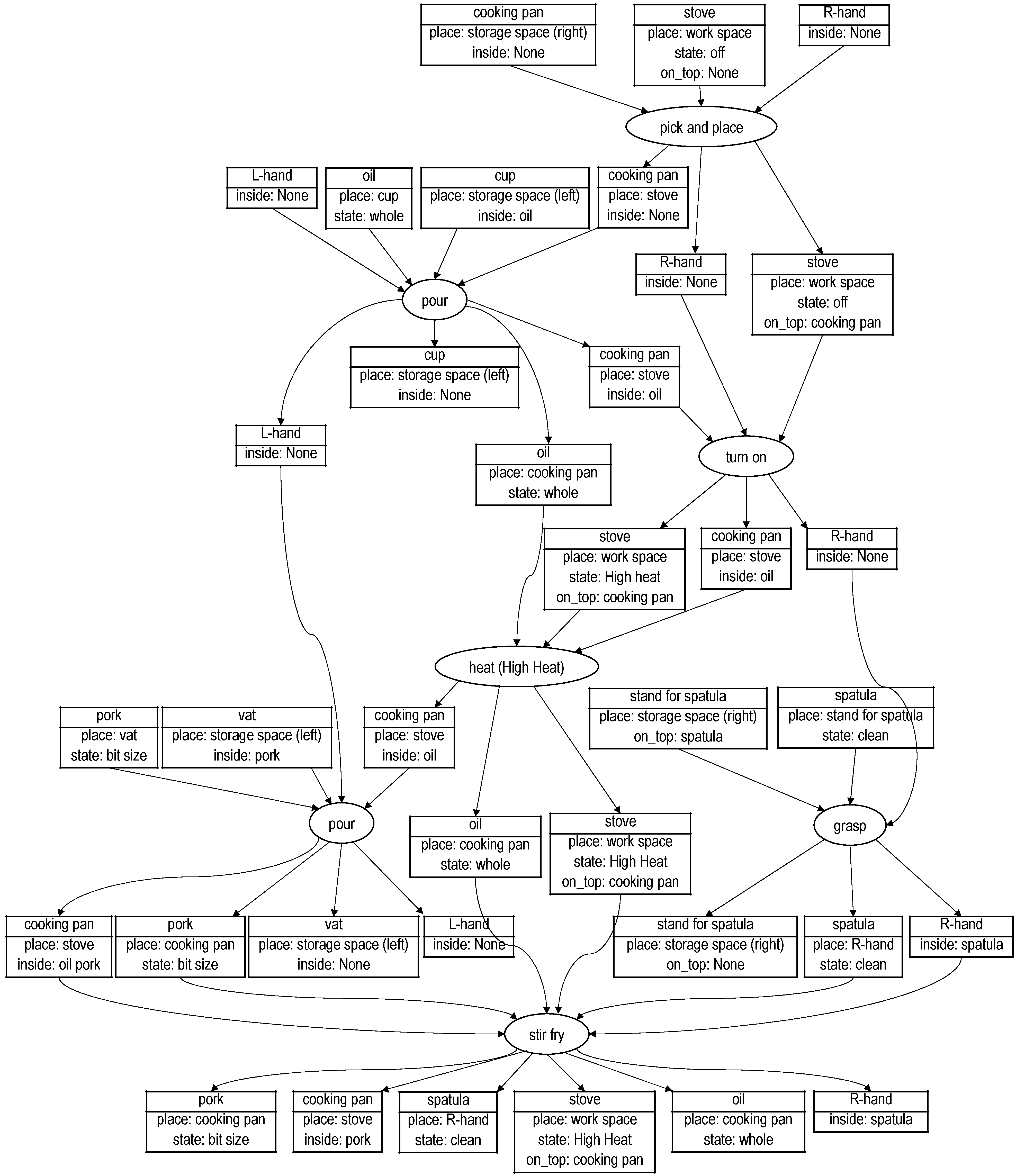

3.1. Graph Structure

3.1.1. Object Node

3.1.2. Hand Node

3.1.3. Motion Node

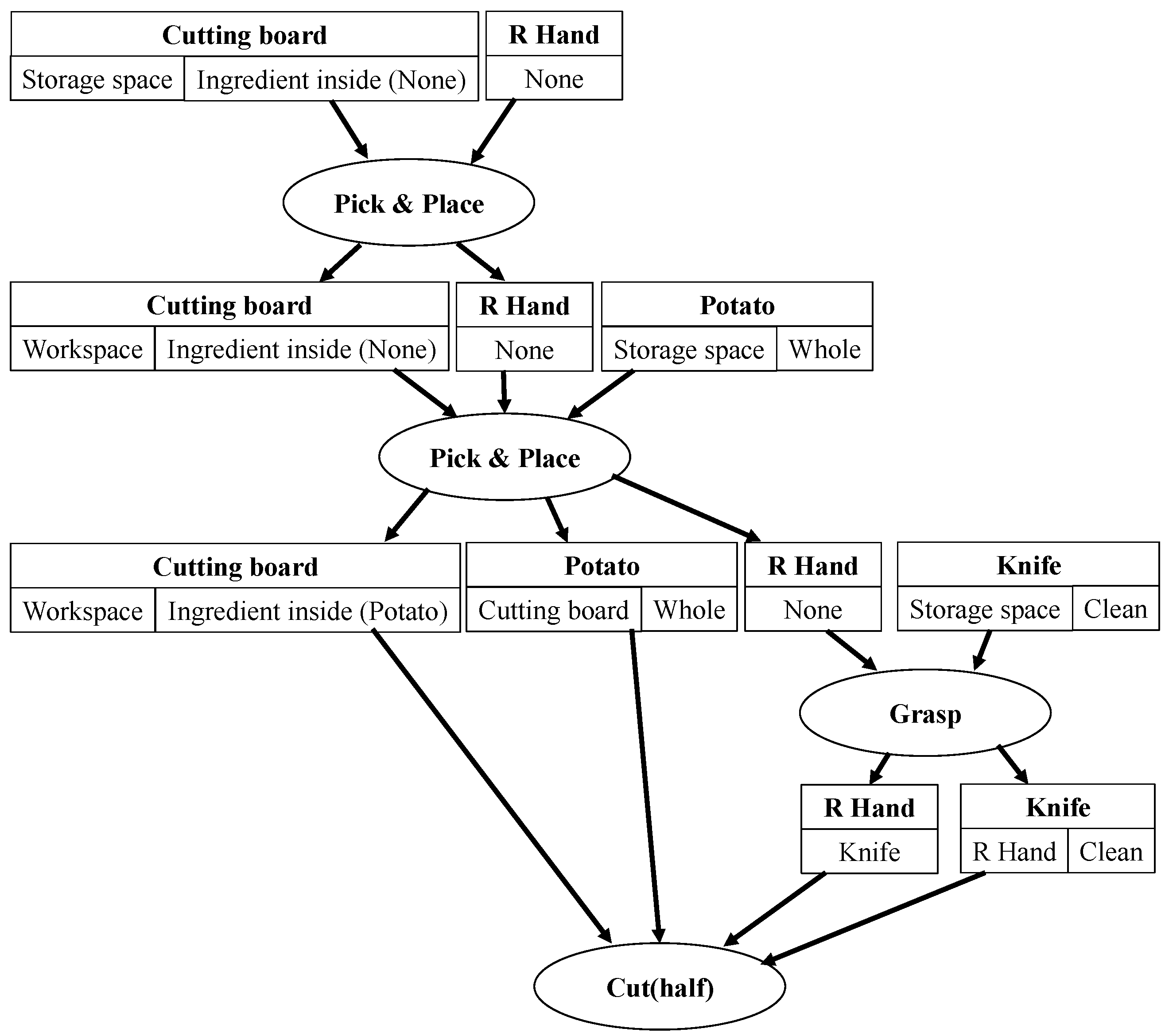

3.1.4. Functional Unit

3.1.5. Discussion

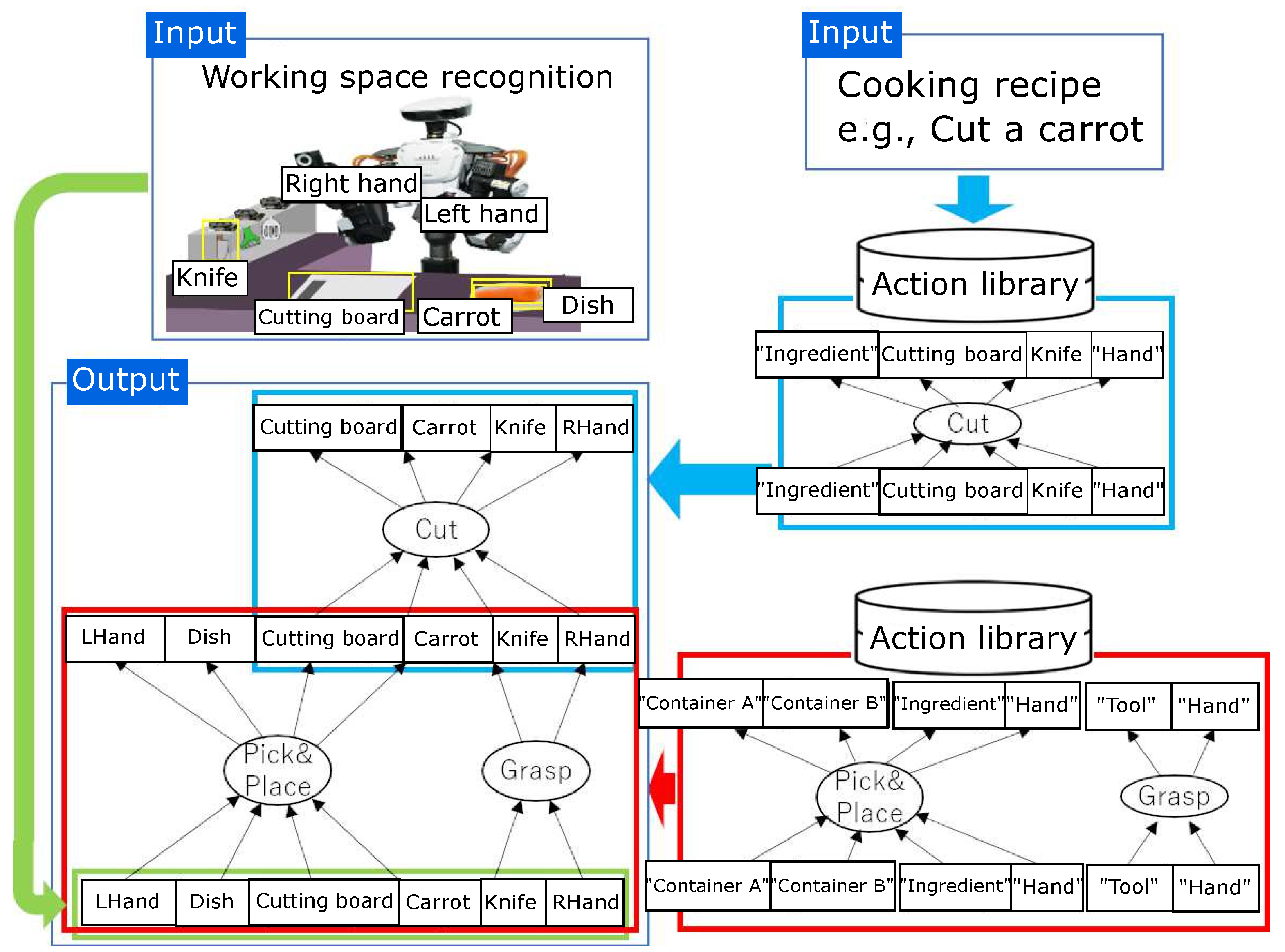

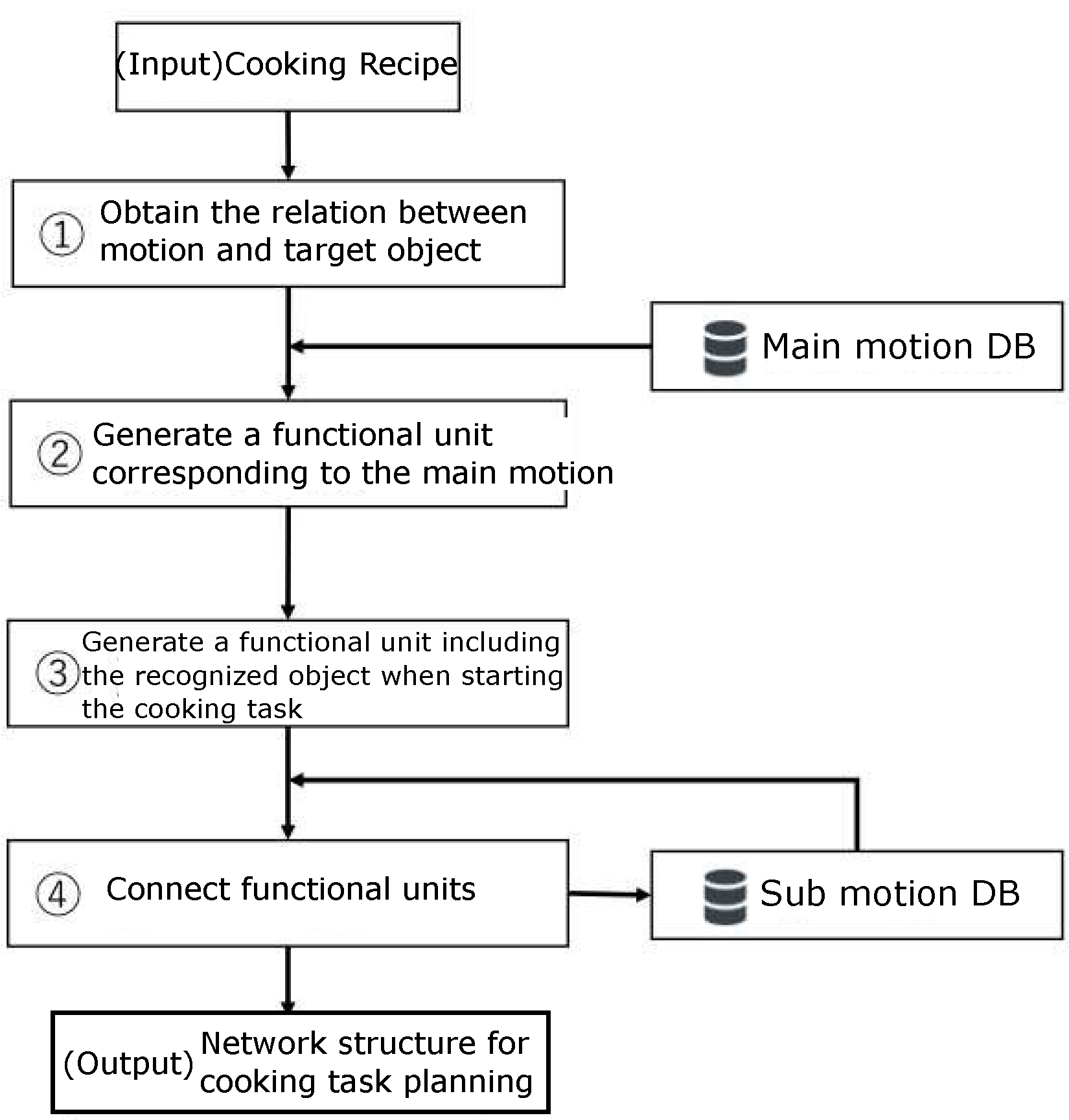

3.2. Graph Construction

3.2.1. Motion Database

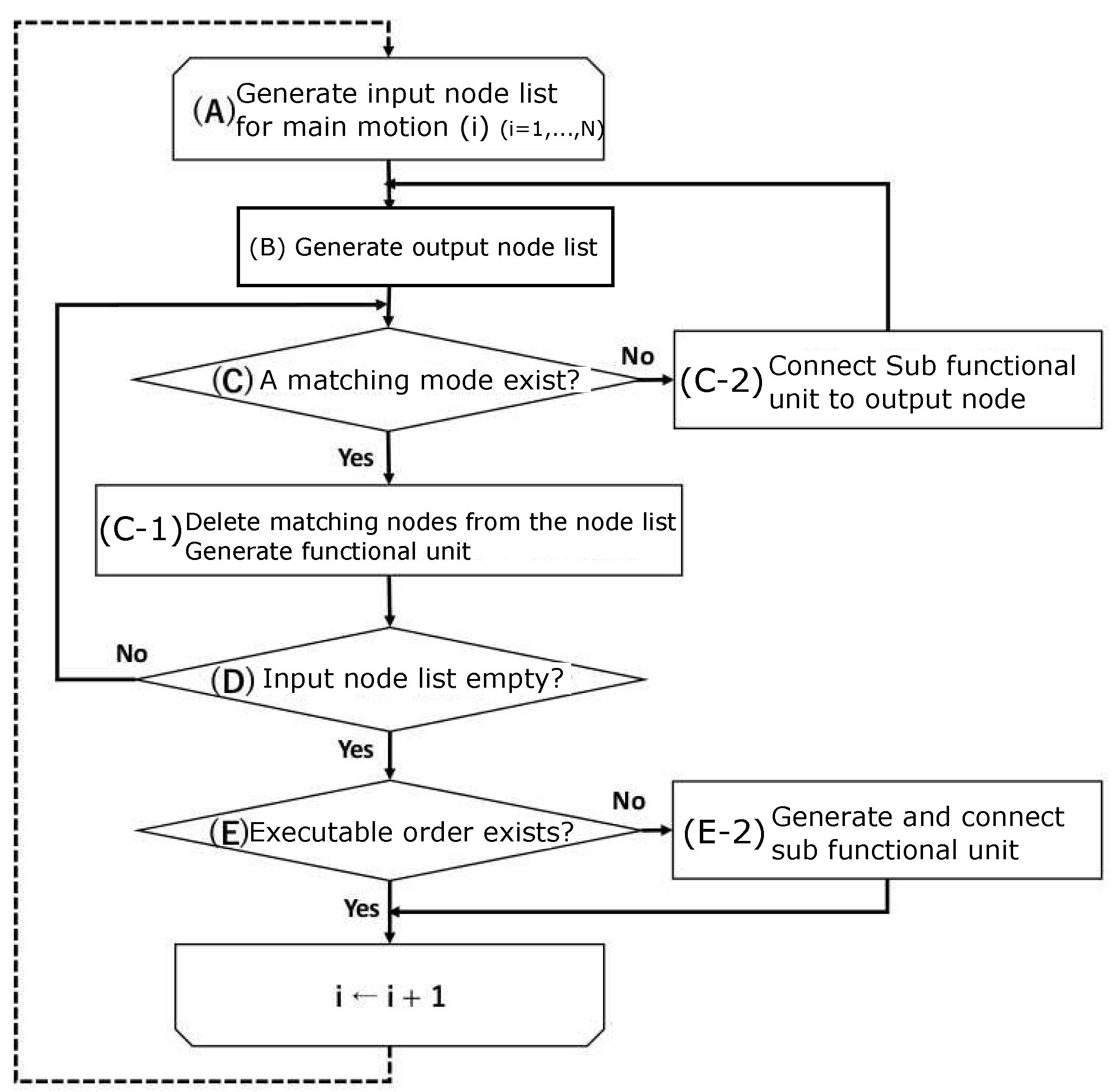

3.2.2. Graph Connection

3.3. Bimanual Task Planning

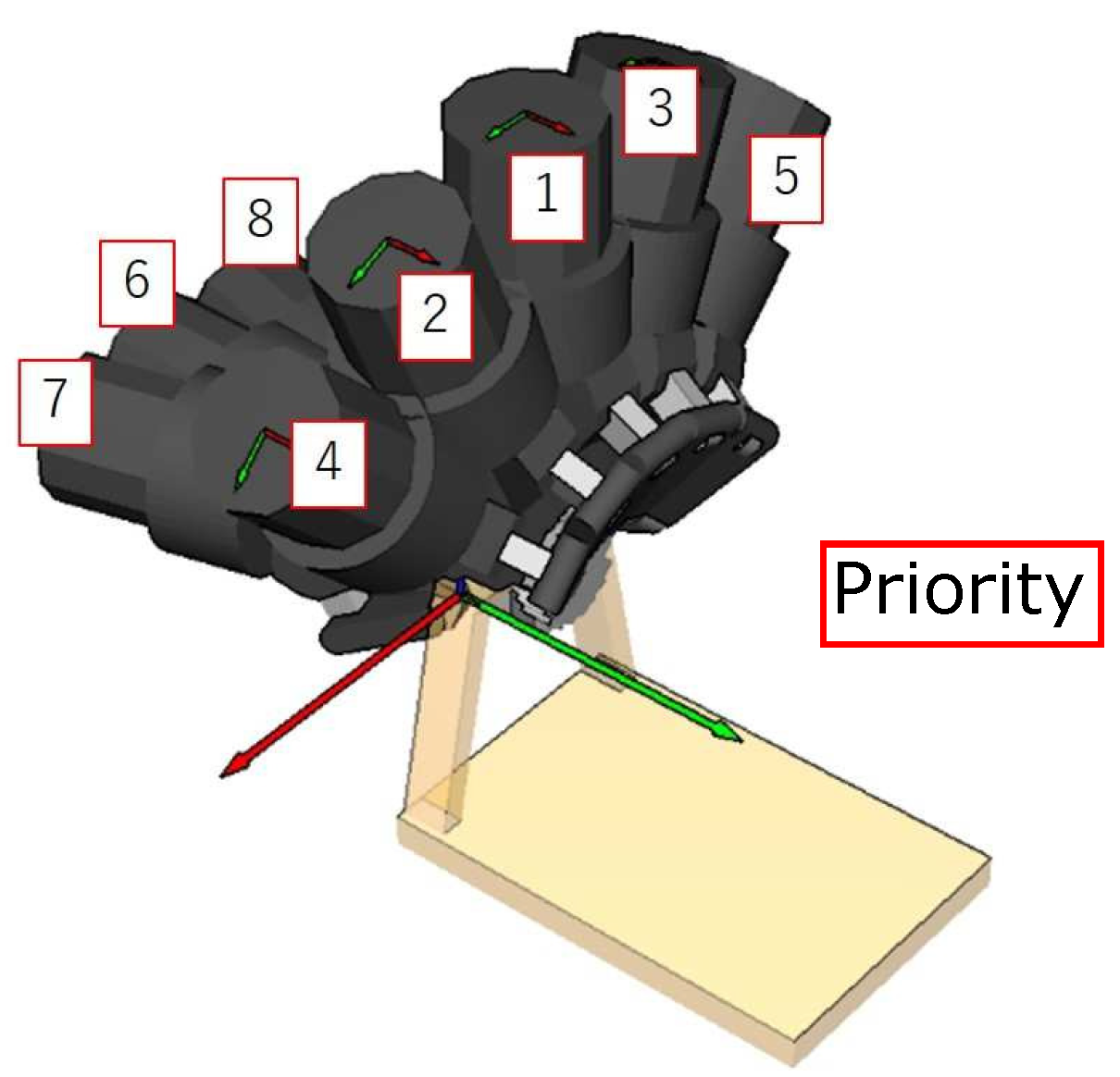

3.4. Motion Planning

4. Experiment

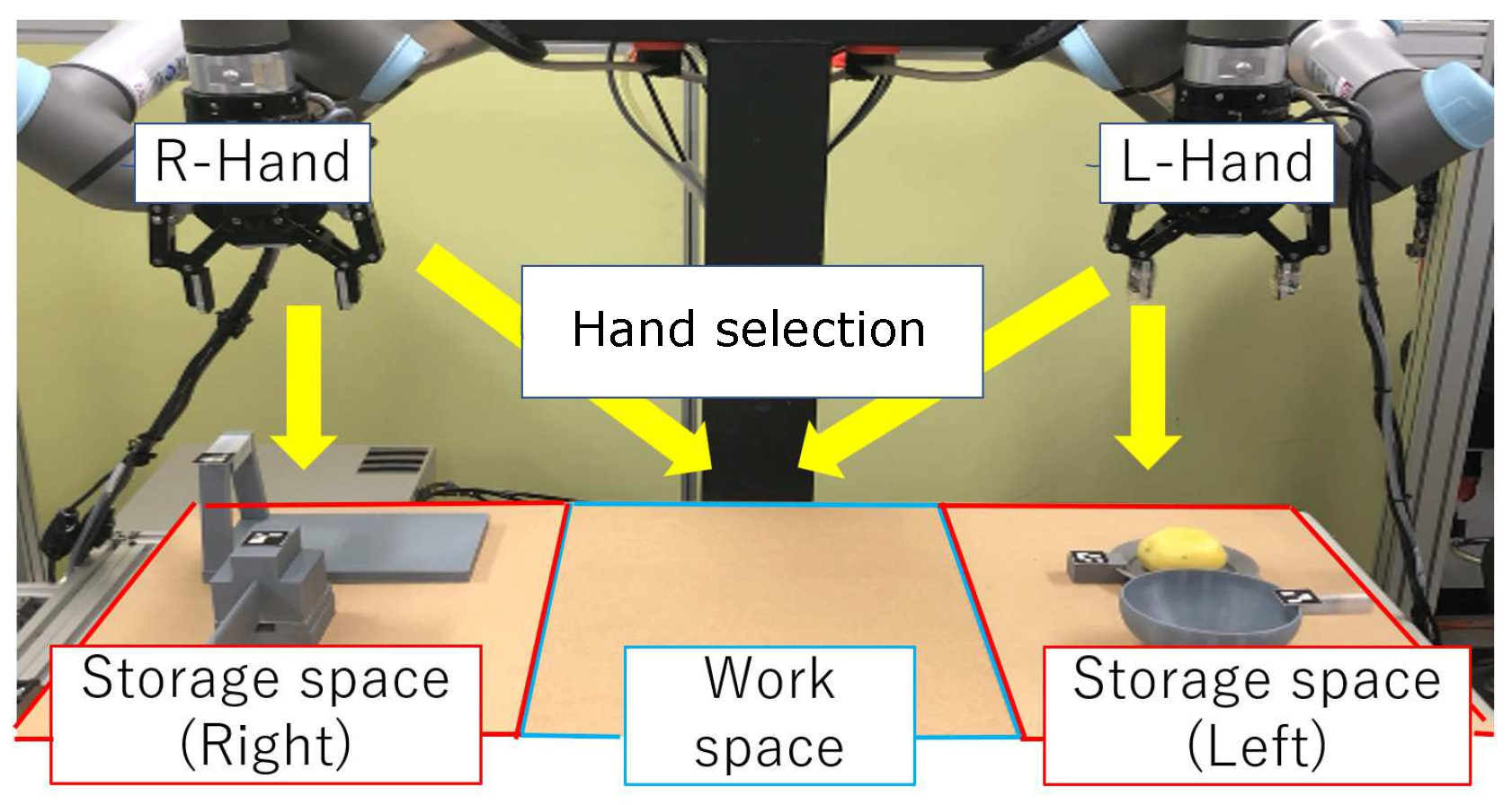

4.1. Experimental Setup

4.2. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Type | Name | Attribute 1 (Place) | Attribute 2 (State) |
|---|---|---|---|
| Food | Potato, Beef | Storage space, Cutting board | Whole, Cut, Chopped |
| Seasoning | Sugar, Salt, Water | Storage space, Cup, Bowl | Whole, Mixed |
| Tool | Knife, Spatula, Ladle | Storage space, Hand | Clean, Dirty |
| Container | Cutting board, Bowl | Storage space, Work space | Ingredient inside (e.g., Potato) |
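The object attributes in the table above can be encoded as a small typed record. The sketch below is a minimal illustration under our own assumptions, not the authors' implementation: the names `ObjectType`, `ObjectNode`, `PLACES`, and `STATES` are hypothetical, the allowed values are only the examples listed in the table (which may not be exhaustive), and a container's state is treated as free text naming the ingredient inside.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Dict, Optional, Set


class ObjectType(Enum):
    FOOD = "Food"
    SEASONING = "Seasoning"
    TOOL = "Tool"
    CONTAINER = "Container"


# Example places (Attribute 1) per type, copied from the table; assumed non-exhaustive.
PLACES: Dict[ObjectType, Set[str]] = {
    ObjectType.FOOD: {"Storage space", "Cutting board"},
    ObjectType.SEASONING: {"Storage space", "Cup", "Bowl"},
    ObjectType.TOOL: {"Storage space", "Hand"},
    ObjectType.CONTAINER: {"Storage space", "Work space"},
}

# Example states (Attribute 2) per type. Containers are omitted on purpose: their
# state names the ingredient inside, which this sketch treats as free text.
STATES: Dict[ObjectType, Set[str]] = {
    ObjectType.FOOD: {"Whole", "Cut", "Chopped"},
    ObjectType.SEASONING: {"Whole", "Mixed"},
    ObjectType.TOOL: {"Clean", "Dirty"},
}


@dataclass(frozen=True)
class ObjectNode:
    """Hypothetical object node: a typed object with a place and a state."""
    obj_type: ObjectType
    name: str
    place: str  # Attribute 1
    state: str  # Attribute 2 (for containers: the ingredient inside)

    def __post_init__(self) -> None:
        # Reject attribute values that the table does not list for this type.
        if self.place not in PLACES[self.obj_type]:
            raise ValueError(f"place {self.place!r} not listed for {self.obj_type.value}")
        allowed: Optional[Set[str]] = STATES.get(self.obj_type)
        if allowed is not None and self.state not in allowed:
            raise ValueError(f"state {self.state!r} not listed for {self.obj_type.value}")


# Usage: a (hypothetical) cutting step yields a new node instead of mutating the old one.
potato = ObjectNode(ObjectType.FOOD, "Potato", "Storage space", "Whole")
cut_potato = replace(potato, place="Cutting board", state="Cut")
board = ObjectNode(ObjectType.CONTAINER, "Cutting board", "Work space", "Potato")
```

Making the node immutable means each state change produces a distinct node, which keeps "before" and "after" versions of an object separately addressable in a graph; `dataclasses.replace` re-runs `__post_init__`, so the derived node is validated as well.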

| Type | Name |
|---|---|
| Main motion | Cut (half), Pour, Boil, Pick & Place |
| Sub-motion | Grasp, Release |
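The split between main motions and sub-motions in the table above can be sketched as a lookup from motion name to type. This is an illustration under stated assumptions, not the authors' code: `MotionType`, `MOTION_TYPE`, `type_of`, and `split_by_type` are hypothetical names, and the example sequence (grasp, cut, release) is an assumed ordering rather than a plan produced by the framework.

```python
from enum import Enum
from typing import Dict, List, Tuple


class MotionType(Enum):
    MAIN = "Main motion"
    SUB = "Sub-motion"


# Motion names and their types, as listed in the table.
MOTION_TYPE: Dict[str, MotionType] = {
    "Cut (half)": MotionType.MAIN,
    "Pour": MotionType.MAIN,
    "Boil": MotionType.MAIN,
    "Pick & Place": MotionType.MAIN,
    "Grasp": MotionType.SUB,
    "Release": MotionType.SUB,
}


def type_of(name: str) -> MotionType:
    """Look up a motion's type, failing loudly on names the table does not list."""
    try:
        return MOTION_TYPE[name]
    except KeyError:
        raise ValueError(f"unknown motion {name!r}") from None


def split_by_type(sequence: List[str]) -> Tuple[List[str], List[str]]:
    """Partition a motion sequence into main motions and sub-motions, keeping order."""
    main = [m for m in sequence if type_of(m) is MotionType.MAIN]
    sub = [m for m in sequence if type_of(m) is MotionType.SUB]
    return main, sub


# Usage with a hypothetical sequence: grasp a tool, cut, then release it.
main, sub = split_by_type(["Grasp", "Cut (half)", "Release"])
print(main, sub)  # ['Cut (half)'] ['Grasp', 'Release']
```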

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Takata, K.; Kiyokawa, T.; Yamanobe, N.; Ramirez-Alpizar, I.G.; Wan, W.; Harada, K. Graph-Based Framework on Bimanual Manipulation Planning from Cooking Recipe. Robotics 2022, 11, 123. https://doi.org/10.3390/robotics11060123
