Hierarchical Plan Execution for Cooperative UxV Missions

Abstract

1. Introduction

1.1. Scope

1.2. Motivation

- The realization of planned actions is undertaken by individual units, and as such has to be described, and performed, in a specific manner. This empowers other units to act meaningfully and enables multiple units to perform combined arms operations.

- Given that militaries operate under rules of engagement and are subject to legal and ethical guidelines, plans must be such that their impact can be understood by decision makers. Military conflict operates at the fringes of ethics, and no comprehensive set of if–then rules exists; we can, however, calculate and offer possible choices to a human commander, who uses their expertise and understanding of the situation to decide.

- Military operations are inherently dangerous, and we believe that human life is more important/valuable than robotic hardware.

- Tactical military operations are inherently complex, and computer systems are faster and more reliable than humans at processing large amounts of real-time information.

- Human personnel will always consist of individuals with individual talents and levels of performance. Cyber-physical systems are significantly more consistent in their performance and durability and are furthermore not susceptible to fatigue and exhaustion.

1.3. Contributions of This Article

- We present an automated reasoning approach that facilitates the planning and execution of combined tactical operations. The approach specifically allows the cooperation between multiple, physically separate platforms.

- The presented approach does not require replanning when the situation actually encountered differs from the initially assumed state of affairs.

- The approach offers choices which are stated in the form of concise descriptions of the high-level actions (and their consequences) and are comprehensive (complete). Our system is specifically designed with an informed human in the loop.

1.4. Structure and Overview of This Article

2. Background

2.1. Application Domain

- Information gathering: Intelligence, Surveillance and Reconnaissance (ISR): Groups of heterogeneous platforms with various (sensing) capabilities can be used to continuously and tirelessly provide surveillance for, e.g., protection for forward bases and troops on the move. This requires an interface with the human commander to task the autonomous team with overall objectives and provide the rules of engagement, and an existing and agreed upon plan between the platforms of how to collectively react to specific events.

- Infrastructure maintenance: The extremely dull (and potentially quite dangerous) task of continuously checking infrastructure, such as pipelines or mine fields, can be undertaken by autonomous platforms which are expendable yet precise and reliable. Additionally, here, a commander has to be able to state high-level directives and operational boundaries while the platforms operate according (and possibly adapt dynamically) to a plan agreed to in advance.

- Protective mother-child systems: New military platforms (tanks, ships, etc.) are increasingly equipped with support UxV systems that can serve as extended sensors and/or effectors as part of the protection system. The primary tasks and objectives can be set by the human commander, but protective actions have to be executed within such a short time that, to be meaningful, autonomous cooperative operation is necessary.

2.2. Requirements for Autonomous Operations

- R1: Plan executable missions for autonomous platforms in dynamic environments. The system needs the ability to collectively plan and execute a mission which may require reactive decisions (decisions to be taken based on the perceived situation in a dynamically changing environment, e.g., atmospheric conditions [20]).

- R2: Return a set of choices (plans) to a human decision maker for the final decision. We have to offer a high-level description of the various choices to the human operator such that informed and timely operator control is possible.

- R3: Enable the human decision maker to make an informed choice. This requires the plan to be described in a concise (short), understandable but also comprehensive (complete) way.

2.3. Operational Constraints

- C1: Mission execution in dynamic environments: Autonomous platforms operating collaboratively in the real world to achieve some high-level goals and objectives must be able to operate under changing conditions. These platforms need to be able to react to new information obtained during execution in the environment (changing weather conditions, an unforeseen change in terrain conditions, etc.). Furthermore, deployed platforms have to be able to react to the behavior and goals of other actors (friendly, neutral, or hostile; human or cyber-physical) and be able to handle anomalies in their own systems, such as sub-system failures or draining batteries.

- C2: Team coordination: Multiple unmanned vehicles may be deployed in a cooperating team to achieve the overall objectives, but they will do so through individual tasks. These tasks, or more precisely, the effects of these tasks, need to be coordinated to achieve the best results. Cooperative operations may thus require coordination between vehicles as a vital aspect of the mission execution. Ensuring coordination between the platforms should therefore be a pivotal concept in the plan. Given the domain (military, or at least, adversarial), the coordination between vehicles may not be able to rely on perfect communication between the platforms in the collective, something that, e.g., Cognitive Radio (CR) [34] could offer. The matter of how the communication is realized (e.g., [35]) and to which extent it is trustworthy is ignored in this manuscript, as it is outside the scope of the project. As stated elsewhere in this paper, the actual deployment of such platforms in uncontrolled settings still faces a number of challenges. By the time this can realistically be attempted, new or emerging technologies (e.g., StarLink/StarShield, https://www.space.com/spacex-starshield-satellite-internet-military-starlink, accessed on 1 February 2023) may have permanently changed the battlefield. For the remainder of this paper, the technical details of how communication is realized are omitted.

- C3: Predictable and accountable behavior: Military doctrine (see, e.g., https://english.defensie.nl/topics/doctrine/defence-doctrine, accessed on 1 February 2023) [36,37] is used extensively in the armed forces. It ensures a shared understanding and results in a certain level of predictability in mission execution. At least the same level of predictability that exists between humans should be expected from autonomous platforms, since those vehicles will cooperate with other platforms, and eventually, also in collaboration with human soldiers. Simultaneously, the platforms should act in an accountable way and in accordance with their roles and responsibilities.

- C4: Human approval: Military decisions are subject to final human approval. For this statement to be meaningful (i.e., the decision to be an informed one), the human decision maker must be able to understand the implications and consequences of a plan. This means that the plans must be expressed in a high-level, human-readable manner. Constructing a comprehensive plan in advance (before execution) involves planning for a (possibly) very large time horizon. Creating such a long-term plan is computationally infeasible without providing contextual information that helps to decide on tasks and the behavior of the platforms.

2.4. Automated Planning and Execution

2.4.1. Planning

2.4.2. Hierarchical Task Network Planning

2.5. Discrete Event Simulation

3. ICTUS: Intelligent Coordination of Tactical Unmanned Systems

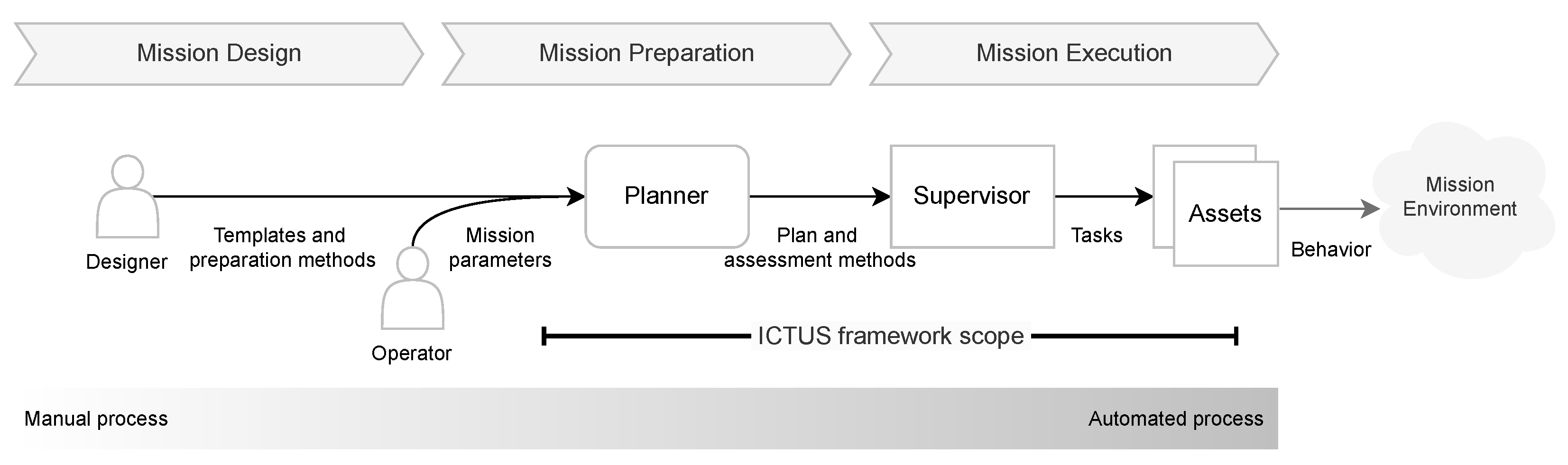

3.1. The Framework

1. Mission design: Design doctrine-based mission templates, choose matching analysis methods, and specify necessary asset task capabilities for the preparation phase.

2. Mission preparation: Generate a specific plan based on mission parameters and available assets, and choose matching assessment methods for the execution phase.

3. Mission execution: In real time, assess the situation regarding the environment and assets, determine actionable runtime information, and dispatch planned tasks.

What about Replanning?

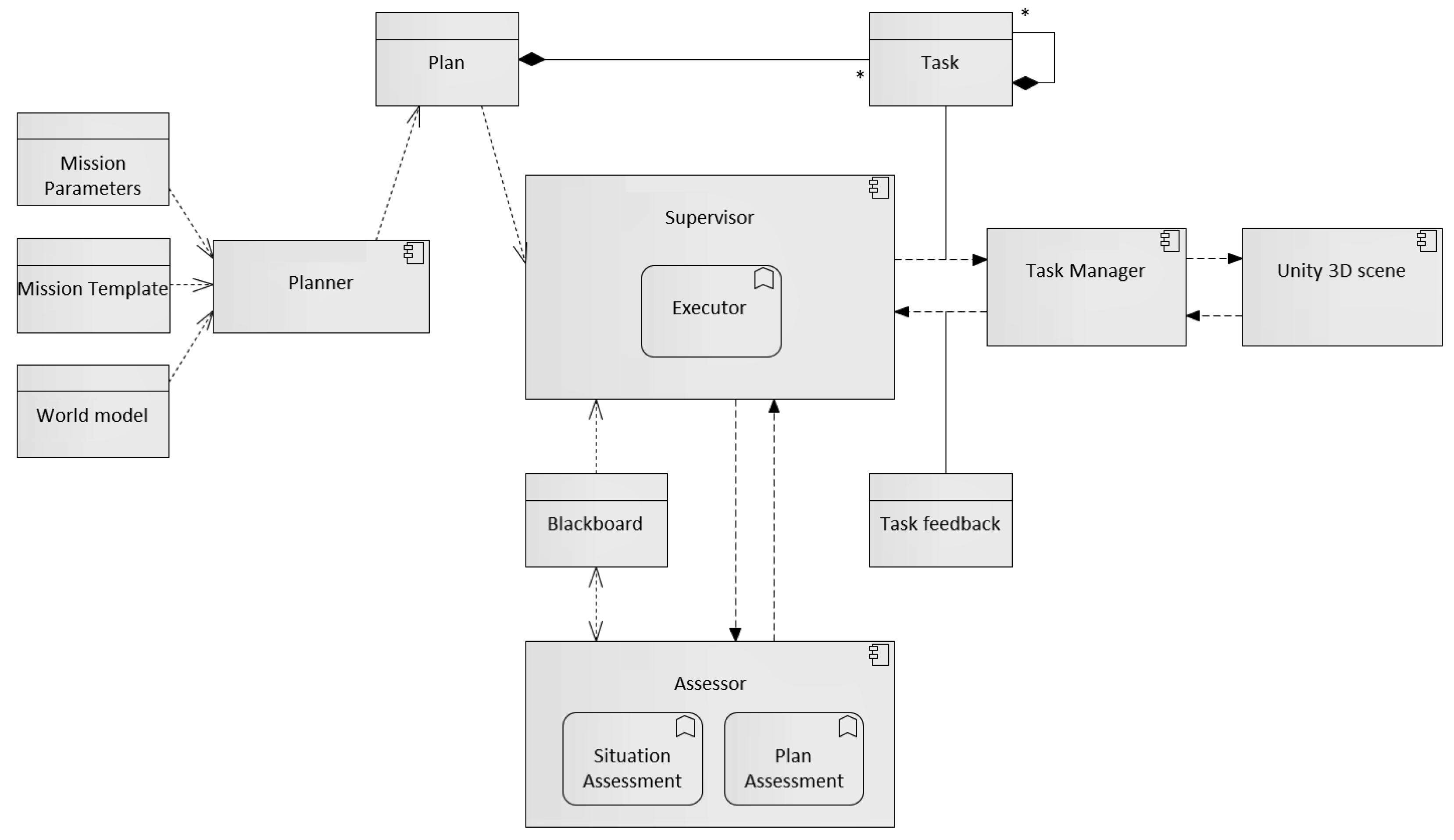

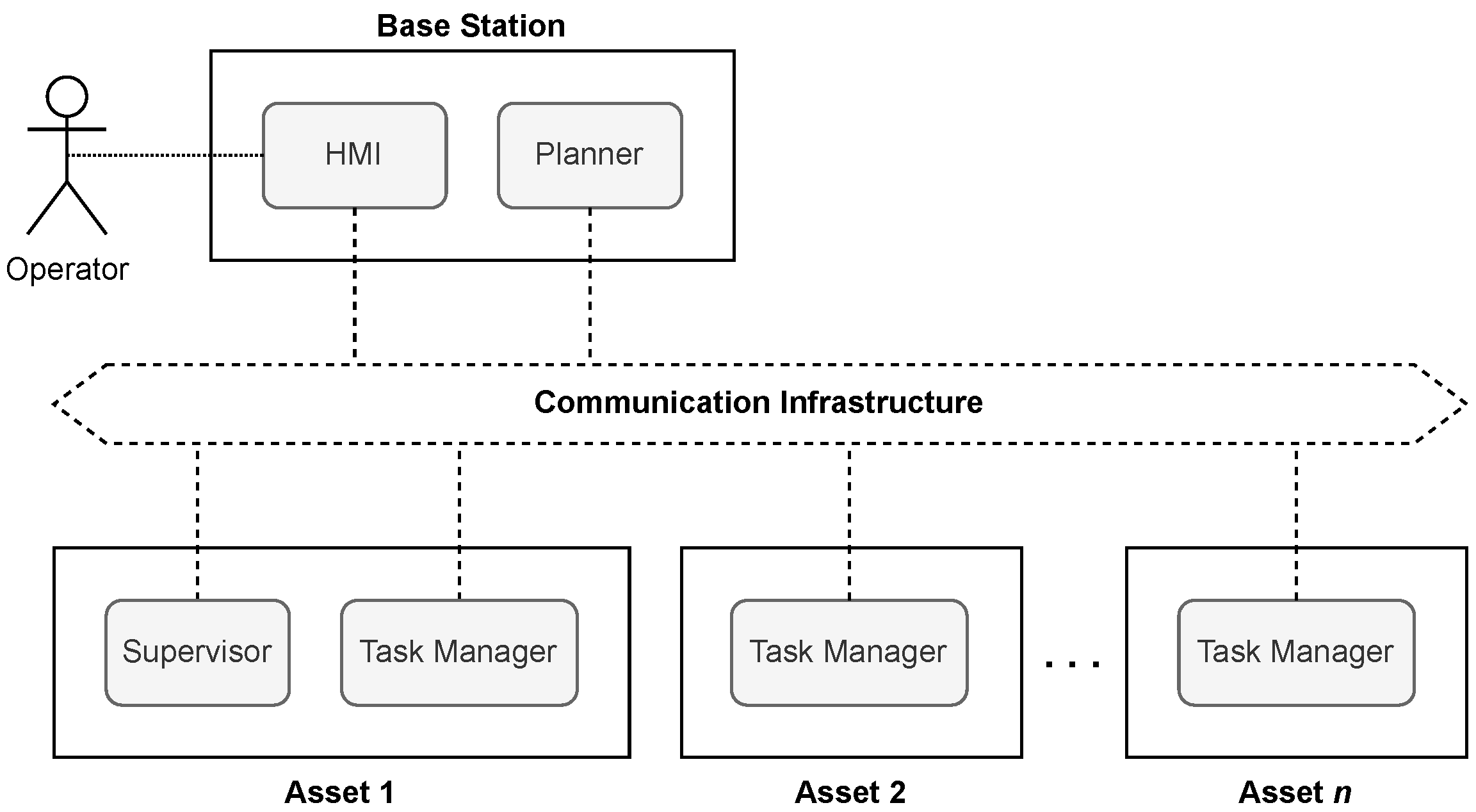

3.2. System Modules

3.2.1. The Planner Module

3.2.2. The Supervisor Module

3.2.3. The Task Manager Module

4. Task Execution

4.1. Task Execution Requirements

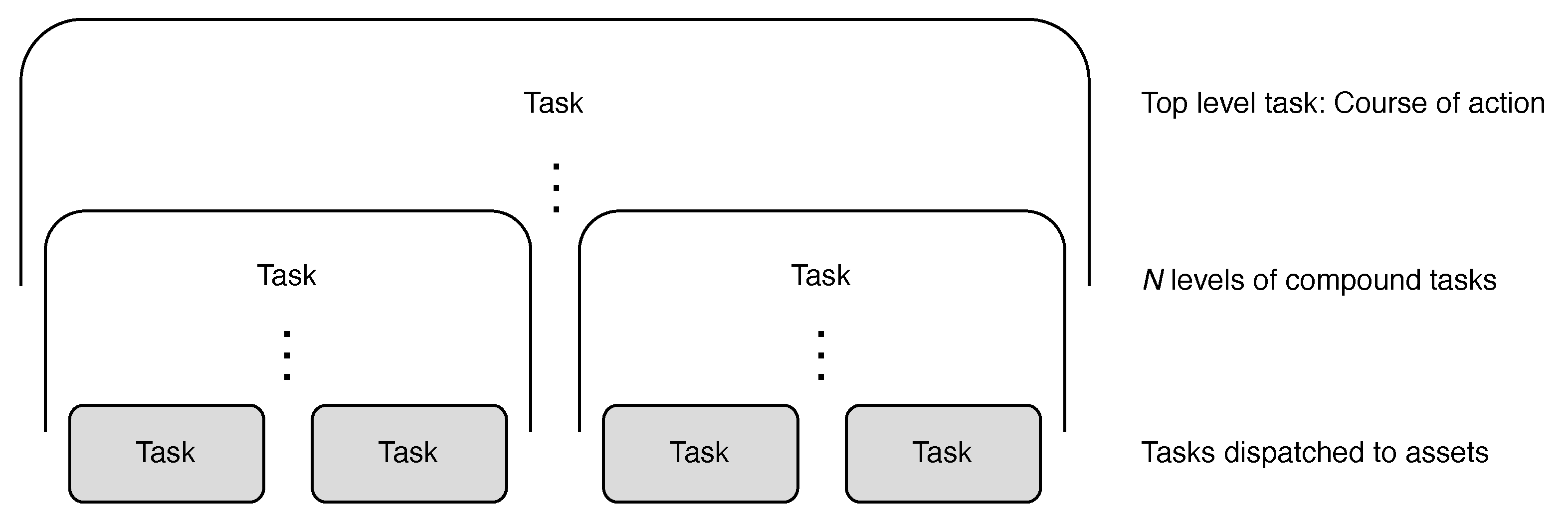

- Tasks have to be grouped into a meaningful hierarchy consisting of (higher-level) compound tasks, which have subtasks, and basic tasks as the leaves of the hierarchy. Using this hierarchy, the overall intended behavior can be constructed through (reusable) task definitions. The same definitions can be used to keep track of mission progress and to provide sufficient context for when the outcome of the task execution differs from what was expected.

- Tasks can be executed concurrently. While an individual asset executes tasks in series, the supervisor must manage several tasks and subtasks in parallel.

- Plan execution must allow for runtime resolving of task parameters. For example, after the observation of a contact, it should be possible to assign a follow contact task using the information from that observation. This specific information will only be available during execution, that is, at runtime; therefore, the plan must provide for placeholders of that information. In ICTUS, this information is called runtime data.

- To allow for the composition of complex team behaviors, a (compound) task must allow for repetition. In other words, a task should repeat while (or, alternatively, until) predefined conditions are met. Examples include the continuous scanning of an area or the repeated engagement of targets during an attack.

- A branching mechanism is required to support decisions made during execution. While conditional execution of tasks is an important approach to supporting this, we introduced a decision task to explicitly account for this functionality in the plan object.

- There should be a detailed description of the basic tasks that the assets can perform autonomously. These tasks can be performed without coordination by the supervisor and are possible while interacting with other assets. The basic task descriptions determine the interface to the task managers of the assets. For this reason, the implementation of this interface will be application and use-case-specific.
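The requirements above can be illustrated with a small data structure. The following is a minimal sketch, not the ICTUS implementation: the class names `Task` and `RuntimeData`, the `resolve` method, and the dictionary used as a blackboard are all assumptions made for illustration. It shows a hierarchy of compound and basic tasks and a placeholder that is only resolved at runtime.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class RuntimeData:
    """Hypothetical placeholder for a value that only becomes known during execution."""
    key: str


@dataclass
class Task:
    """Hypothetical task node: compound if it has subtasks, basic otherwise."""
    name: str
    params: Dict[str, Any] = field(default_factory=dict)
    subtasks: List[Task] = field(default_factory=list)

    @property
    def is_basic(self) -> bool:
        # Basic tasks are the leaves of the hierarchy.
        return not self.subtasks

    def leaves(self) -> List[Task]:
        """Collect the basic tasks below this task, in plan order."""
        if self.is_basic:
            return [self]
        return [leaf for sub in self.subtasks for leaf in sub.leaves()]

    def resolve(self, blackboard: Dict[str, Any]) -> Dict[str, Any]:
        """Replace RuntimeData placeholders with values stored at runtime."""
        return {
            key: blackboard[value.key] if isinstance(value, RuntimeData) else value
            for key, value in self.params.items()
        }


# A compound task with two basic subtasks; the contact is unknown at planning time.
follow = Task("follow_contact", params={"contact": RuntimeData("contact_1")})
scan = Task("scan_area", params={"area": "sector_north"})
surveil = Task("surveil", subtasks=[scan, follow])

# During execution an observation fills the placeholder.
resolved = follow.resolve({"contact_1": (52.1, 4.3)})
```

Keeping placeholders as explicit objects means the plan can be complete and reviewable before execution while still referring to information that only exists later.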

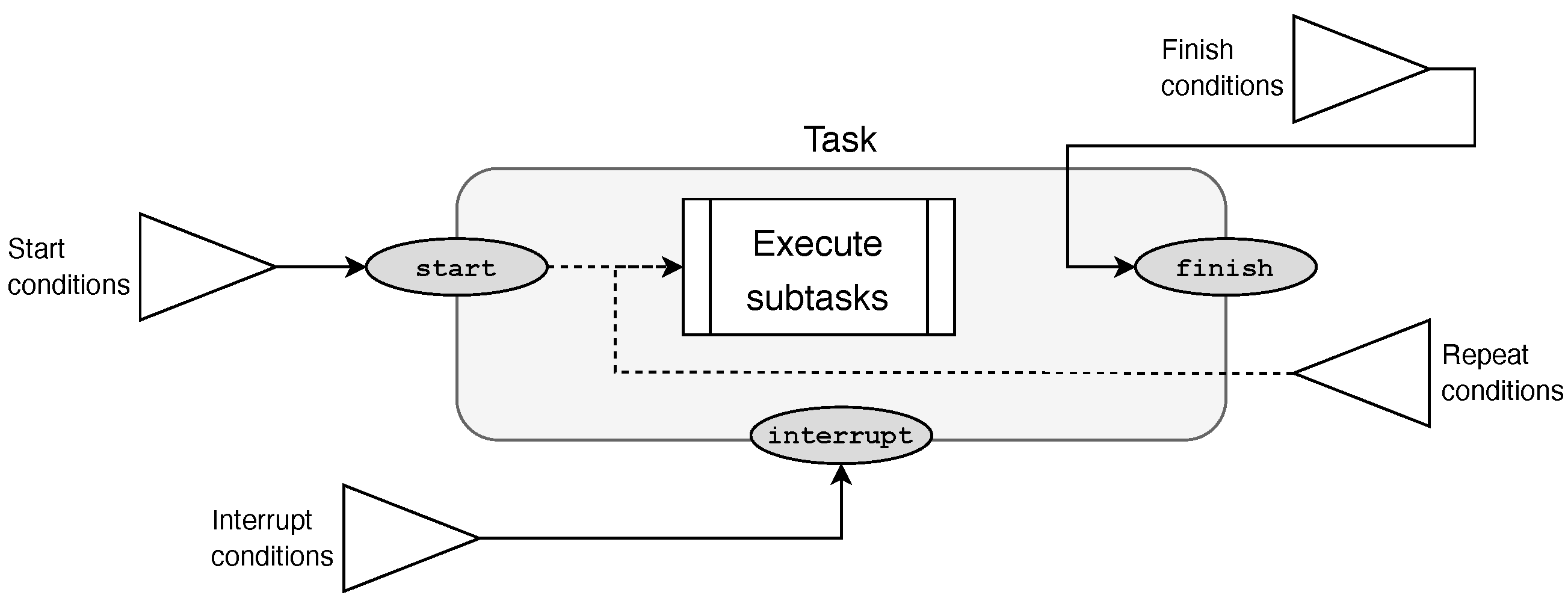

4.2. The Task Execution Model

4.3. The Task Execution Process

4.3.1. Execution Conditions

1. Start conditions: When all start conditions are satisfied, the task is started. Take a simple example plan involving two tasks, task A and task B, where task B starts after task A has finished: taskB.starts_after(taskA.finish), where taskA.finish is the finish event of task A.

2. Interrupt conditions: When all interrupt conditions are satisfied, the task and its subtasks are interrupted as soon as possible. As an example, consider an external event enemy_observed being triggered after an appropriately assessed observation: taskA.interrupt_when(enemy_observed).

3. Repeat conditions: Whenever all repeat conditions are satisfied, the task is restarted. If applicable, all its subtasks are recreated and processed. Consider the following example: taskA.repeat_after(taskB.finish).

4. Finish conditions: When all finish conditions are satisfied, the task is finished and will produce a finished event. An example: taskC.finish_after(any(taskA.finish, taskB.finish)).
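The four condition sets can be sketched as follows. This is an illustrative sketch under stated assumptions, not the ICTUS API: events are modelled as plain strings, a condition is a predicate over the set of events fired so far, `any_of` stands in for the `any(...)` of the example above (to avoid shadowing the Python built-in), `finish_event` stands in for `taskA.finish`, and `satisfied` is a hypothetical helper. Only the method names `starts_after`, `interrupt_when`, `repeat_after`, and `finish_after` are taken from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Union

# A condition is evaluated against the set of event names fired so far.
Condition = Callable[[Set[str]], bool]


def any_of(*events: str) -> Condition:
    """Condition that holds as soon as at least one of the events has fired."""
    return lambda seen: any(event in seen for event in events)


def _as_condition(trigger: Union[str, Condition]) -> Condition:
    # A bare event name is shorthand for "this event has fired".
    if isinstance(trigger, str):
        return lambda seen: trigger in seen
    return trigger


@dataclass
class Task:
    name: str
    start: List[Condition] = field(default_factory=list)
    interrupt: List[Condition] = field(default_factory=list)
    repeat: List[Condition] = field(default_factory=list)
    finish: List[Condition] = field(default_factory=list)

    @property
    def finish_event(self) -> str:
        return f"{self.name}.finish"

    def starts_after(self, trigger: Union[str, Condition]) -> "Task":
        self.start.append(_as_condition(trigger))
        return self

    def interrupt_when(self, trigger: Union[str, Condition]) -> "Task":
        self.interrupt.append(_as_condition(trigger))
        return self

    def repeat_after(self, trigger: Union[str, Condition]) -> "Task":
        self.repeat.append(_as_condition(trigger))
        return self

    def finish_after(self, trigger: Union[str, Condition]) -> "Task":
        self.finish.append(_as_condition(trigger))
        return self

    def satisfied(self, kind: str, seen: Set[str]) -> bool:
        """True when ALL conditions in the named set hold for the fired events."""
        conditions: List[Condition] = getattr(self, kind)
        if not conditions:
            # Assumption: a task without start conditions may start at once;
            # an empty interrupt, repeat, or finish set never triggers.
            return kind == "start"
        return all(condition(seen) for condition in conditions)


task_a = Task("taskA").interrupt_when("enemy_observed")
task_b = Task("taskB").starts_after(task_a.finish_event)
task_c = Task("taskC").finish_after(any_of(task_a.finish_event, task_b.finish_event))
```

Each set is a conjunction, so alternatives have to be expressed inside a single condition, as `any_of` does here.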

4.3.2. The Execution Process

- 1.

- Initially, the task awaits for its start conditions to be satisfied.

- 2.

- The start of a task could be disabled (by an event), which will result in the interruption of the task, which means that the task will not execute and hence never start.

- 3.

- When started, all subtasks are concurrently processed (all await their start conditions), and the task will await either its repeat or its finish conditions. When either of these conditions is triggered, all active (started) subtasks will be interrupted, and in the case of the repeat conditions, the task is restarted. Alternatively, when the finish condition is satisfied, the task finishes.

- 4.

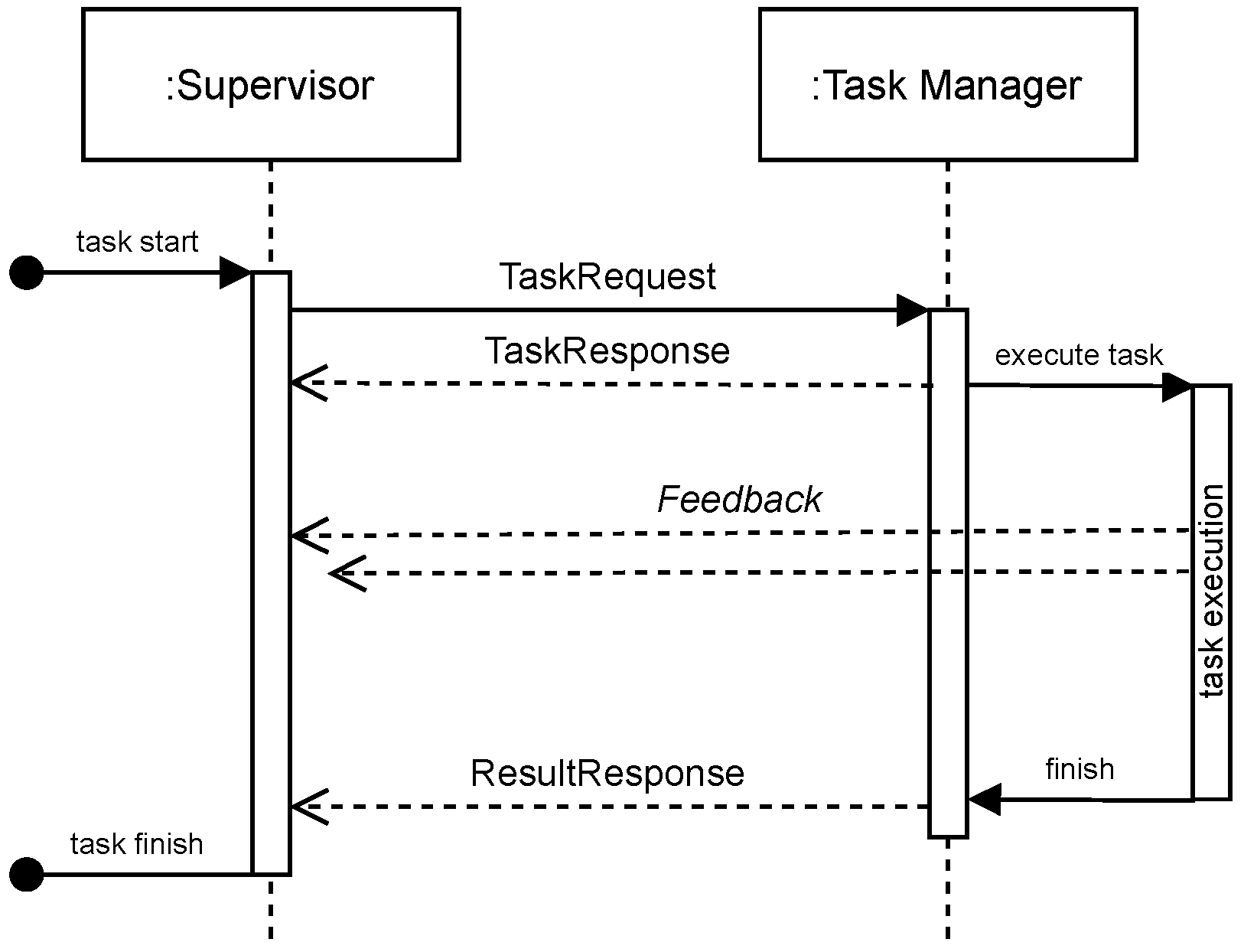

- In the case of a basic task—which is a leaf in the task tree—there are no subtasks. When a basic task starts, a TaskRequest is sent to the appropriate asset, and the process awaits its response. When the request is accepted, the task is marked as started and the task awaits a response (that is, a task result message) from the asset. Upon receiving this response, the task finishes. When the task is rejected by the asset, the task is disabled. If the task has to be interrupted, a cancel request is sent to the asset in order to stop its execution.
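The request handshake for a basic task described above can be sketched as a small state machine. This is a sketch under assumptions rather than the authors' procedure: the state names, the `BasicTask` class, the `send` callback standing in for the messaging layer, and the message label `CancelRequest` are hypothetical; only `TaskRequest` is named in the text.

```python
from enum import Enum, auto
from typing import Callable, List, Tuple


class State(Enum):
    WAITING = auto()      # awaiting start conditions
    REQUESTED = auto()    # TaskRequest sent, awaiting accept or reject
    STARTED = auto()      # accepted by the asset, awaiting the task result
    FINISHED = auto()
    DISABLED = auto()     # rejected, or disabled before it could start
    INTERRUPTED = auto()


class BasicTask:
    def __init__(self, name: str, send: Callable[[str, str], None]) -> None:
        self.name = name
        self.send = send  # hypothetical transport: send(message_type, task_name)
        self.state = State.WAITING

    def start(self) -> None:
        if self.state is State.WAITING:
            self.send("TaskRequest", self.name)
            self.state = State.REQUESTED

    def on_reply(self, accepted: bool) -> None:
        if self.state is State.REQUESTED:
            self.state = State.STARTED if accepted else State.DISABLED

    def on_result(self) -> None:
        if self.state is State.STARTED:
            self.state = State.FINISHED

    def interrupt(self) -> None:
        if self.state in (State.REQUESTED, State.STARTED):
            # The asset may already be working on the task, so tell it to stop.
            self.send("CancelRequest", self.name)
            self.state = State.INTERRUPTED
        elif self.state is State.WAITING:
            # Never started: nothing to cancel on the asset side.
            self.state = State.DISABLED


sent: List[Tuple[str, str]] = []
move = BasicTask("move", lambda kind, name: sent.append((kind, name)))
move.start()
move.on_reply(accepted=True)
move.interrupt()
```

Guarding every transition on the current state keeps late or duplicate messages from an asset from moving a task backwards.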

Algorithm 1. Task execution procedure.

5. Mission Planning and Execution

5.1. Mission Planning: The Planner Module

5.1.1. Input to the Planning Process

1. Task templates. The hierarchically structured templates for tasks are provided in the design phase by the designer (see Figure 1). These task templates and their (hierarchical) relations ensure that a mission with a large time horizon, consisting of many tasks, can be planned comprehensively before mission execution. The hierarchy of task templates yields a mission-specific decomposition into subtasks.

2. World model. Information about the world and algorithms to reason over elements in the world are also provided by the designer (as can be seen in Figure 1). Examples of reasoning algorithms include methods for analyzing the environment, e.g., weather conditions and terrain information, for analysis of the enemy’s possible courses of action, and for the selection of assets to execute certain subtasks, based on situation-specific circumstances and/or asset capabilities.

3. Mission parameters. This situation-specific information is provided by the operator, as shown in Figures 1 and 2. The mission parameter specification contains information about, e.g., the assets that are available for this mission, the type of mission for which a plan needs to be generated, an area of operation, and information on expected threats and other contacts which might be present during the operation.

5.1.2. Task Relations

1. Causal dependencies: Tasks relate to each other and to detected changes in the environment via events and conditions. These provide a natural mechanism to describe causal relations between tasks. Typically, the parent task in the tree of tasks is responsible for specifying what the conditions are for subtasks to be started, interrupted, or finished. Simple examples include parallel starting of multiple tasks or a set of tasks that need to be executed sequentially.

2. Information propagation: Reasoning components typically require (mission-specific) input parameters that allow them to generate output. The input needs to be provided during the process of decomposition. Therefore, we allow tasks to explicitly share information via so-called task outputs which will be linked to task inputs, similarly to what was done in [31]. A task can only be decomposed into its subtasks if all of its inputs are linked with outputs of other tasks. Such tasks are known as grounded tasks. The parent task of a task that requires input is responsible for providing that information, either directly or by creating a link with the output of another task. An example of passing on information involves an area of operation that needs to be split into regions, which is done using a reasoning method associated with a compound task. This region information in turn can serve as input to a task that identifies a strategy for surveying that region. This information can already be available in the planning phase, but it is also possible to share runtime data, a placeholder, which will be resolved during execution. An example of a runtime data source as task input is an observed hostile contact which requires follow-up in the plan, without knowing the location and other details about that contact in the preparation phase.
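The linking of task outputs to task inputs, and the resulting notion of a grounded task, can be sketched as follows. The names `Output`, `PlanTask`, `link`, and `grounded` are assumptions made for this illustration and are not taken from ICTUS; the region values are made up.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class Output:
    """Hypothetical task output; its value may be set later by a reasoning method."""
    owner: str
    name: str
    value: Any = None


@dataclass
class PlanTask:
    name: str
    # Each input is either linked to an Output or still unlinked (None).
    inputs: Dict[str, Optional[Output]] = field(default_factory=dict)
    outputs: Dict[str, Output] = field(default_factory=dict)

    def link(self, input_name: str, source: Output) -> None:
        self.inputs[input_name] = source

    @property
    def grounded(self) -> bool:
        # Grounded: every input is linked to an output of another task.
        # The linked value itself may still be pending (for example, runtime data).
        return all(source is not None for source in self.inputs.values())


# The compound task that splits the area of operation exposes its regions.
split = PlanTask("split_area", outputs={"regions": Output("split_area", "regions")})
survey = PlanTask("survey_region", inputs={"region": None})

grounded_before = survey.grounded          # input not linked yet
survey.link("region", split.outputs["regions"])
grounded_after = survey.grounded           # now the task can be decomposed

# A reasoning method later fills in the output; the link sees the new value.
split.outputs["regions"].value = ["north", "south"]
```

Because the input holds a reference to the output object rather than a copy of its value, the same mechanism covers information available at planning time and placeholders that are filled during execution.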

5.1.3. The Mission Planning Process

1. Reasoning methods are triggered to generate/calculate required information.

2. The subtasks are instantiated, based on their respective task templates.

3. Input for the subtasks is provided, either directly or by linking the task output of one task to the task input of another. This allows compound subtasks to become grounded, which means that these tasks can themselves be decomposed. Ultimately, the propagation of input information results in basic task parameters that are required for the execution of these tasks by (one of) the assets.

4. Causal relations between the subtasks are made explicit by linking task events and external events to task conditions.
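The first three steps above amount to a recursive decomposition driven by templates. The sketch below is a toy illustration under assumptions, not the authors' planner: the template table `TEMPLATES`, the task type names, and the one-dimensional `split_area` reasoning method are invented for the example, and step 4 (linking events to conditions) is left out.

```python
from typing import Any, Callable, Dict, List, Tuple

Params = Dict[str, Any]
# A template maps the parameters of a compound task to (subtask type, parameters) pairs.
Template = Callable[[Params], List[Tuple[str, Params]]]


def split_area(area: Tuple[int, int], parts: int) -> List[Tuple[int, int]]:
    """Toy reasoning method: split a 1-D interval into equal parts."""
    low, high = area
    step = (high - low) // parts
    return [(low + i * step, low + (i + 1) * step) for i in range(parts)]


TEMPLATES: Dict[str, Template] = {
    # Steps 1-3: run the reasoning method, instantiate subtasks, pass on inputs.
    "surveil_area": lambda p: [
        ("scan_region", {"region": region, "asset": asset})
        for region, asset in zip(split_area(p["area"], len(p["assets"])), p["assets"])
    ],
    "scan_region": lambda p: [
        ("move", {"to": p["region"][0], "asset": p["asset"]}),
        ("scan", {"region": p["region"], "asset": p["asset"]}),
    ],
}


def decompose(task_type: str, params: Params) -> Dict[str, Any]:
    """Recursively expand a task; types without a template are basic tasks."""
    template = TEMPLATES.get(task_type)
    subtasks = [] if template is None else [
        decompose(sub_type, sub_params) for sub_type, sub_params in template(params)
    ]
    return {"type": task_type, "params": params, "subtasks": subtasks}


def basic_tasks(node: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Collect the leaves of the plan tree in plan order."""
    if not node["subtasks"]:
        return [node]
    return [leaf for sub in node["subtasks"] for leaf in basic_tasks(sub)]


plan = decompose("surveil_area", {"area": (0, 100), "assets": ["ugv1", "ugv2"]})
```

The leaves of `plan` carry fully specified parameters (asset and region), which corresponds to the basic task parameters that step 3 says must result from input propagation.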

Algorithm 2. Plan construction.

Algorithm 3. Task decomposition.

5.2. Mission Execution: The Supervisor Module

- Execution of the plan: managing tasks, conditions, and events in the planned hierarchy. The Supervisor implements the task execution process using a discrete event simulation. Starting from the top level task in the plan, all subtasks are processed according to their condition sets, as described in Section 4.3.

- Messaging: The Supervisor is the central node that requests tasks from the worker assets and receives results and feedback. Messaging is done by a publish–subscribe messaging system to which all team members are subscribed. The interpretation and content of task-related messages are outlined below; note that the precise technical implementation is not essential for ICTUS. The Supervisor handles all messaging with the worker assets and passes received information to the (mission-specific) Assessor module. The assessment triggers events that move the plan execution forward.

- Assessment: Working together with the Assessor and a data store for runtime information (the blackboard), the Supervisor manages and acts upon relevant information about mission progress and results. As the complete planned hierarchy is known during the entire execution, mission progress can be closely logged and monitored by the Supervisor. In future systems, this can be exploited to give operators insight into mission progress both during and after execution.
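The interplay of messaging, blackboard, and assessment can be sketched with an in-process publish–subscribe bus. Everything here is an assumption for illustration: the `Bus` class, the topic name `observation`, the message fields, and the `assess` rule are hypothetical, and a deployed system would use a networked messaging layer.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List, Optional

Message = Dict[str, Any]


class Bus:
    """Minimal in-process publish-subscribe bus (stand-in for a real transport)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[Message], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        for handler in list(self._handlers[topic]):
            handler(message)


def assess(message: Message) -> Optional[str]:
    """Hypothetical Assessor rule: hostile observations trigger an event."""
    return "enemy_observed" if message["affiliation"] == "hostile" else None


class Supervisor:
    def __init__(self, bus: Bus, assessor: Callable[[Message], Optional[str]]) -> None:
        self.assessor = assessor
        self.blackboard: Dict[str, Message] = {}   # runtime data store
        self.events: List[str] = []                # events that drive plan execution
        bus.subscribe("observation", self.on_observation)

    def on_observation(self, message: Message) -> None:
        # Store the observation as runtime data, then let the Assessor judge it.
        self.blackboard[message["contact_id"]] = message
        event = self.assessor(message)
        if event is not None:
            self.events.append(event)


bus = Bus()
supervisor = Supervisor(bus, assess)
bus.publish("observation", {"contact_id": "c1", "affiliation": "hostile", "pos": (3, 4)})
bus.publish("observation", {"contact_id": "c2", "affiliation": "neutral", "pos": (7, 1)})
```

Every observation ends up on the blackboard, but only assessed ones produce events, which keeps the plan's conditions independent of raw sensor traffic.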

5.2.1. Messaging

5.2.2. The Assessor Module

5.3. Mission Execution: The Task Manager Module

- Consider a UGV as an asset, capable of moving covertly through a terrain with forests and open fields. If its Task Manager receives a move task that needs to be executed covertly, the terrain is analyzed to determine a path on which the probability of detection by other (non-friendly) assets is minimized. At the same time, the covert path should not be much longer than the shortest path. Concretely, the path will be such that the asset stays close to forest edges and/or moves through shrubbery.

- During the execution of a follow task, an asset will first execute a move towards the object to follow (if it is not yet in sight). After the object is observed, the asset will reposition itself (regularly) if needed to make sure the object remains in sight.

- If an engagement task is received, the asset’s goal is to eliminate a target by using one or more of its weapon systems. Engagement continues until the target is no longer a threat, or until the target is no longer within line of fire or line of sight. If the target is eliminated, the task was executed successfully; otherwise, the task is considered to have failed. Note that the execution of the engagement task involves not only repeatedly actuating weapons, but also the processing of sensor information.
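The trade-off in the covert move example, low detection probability without a much longer route, can be sketched as a weighted shortest-path search. This is one possible formulation and not necessarily what the Task Manager does: the grid terrain, the per-cell `exposure` values, and the `weight` parameter are assumptions, and the search is plain Dijkstra with step cost `1 + weight * exposure`.

```python
import heapq
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def covert_path(exposure: List[List[float]], start: Cell, goal: Cell,
                weight: float) -> List[Cell]:
    """Dijkstra on a 4-connected grid; entering a cell costs 1 + weight * exposure."""
    rows, cols = len(exposure), len(exposure[0])
    best: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    queue: List[Tuple[float, Cell]] = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + 1.0 + weight * exposure[nr][nc]
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(queue, (new_cost, nxt))
    # Walk back from the goal to the start (assumes the goal is reachable).
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


# Row 0 is a forest edge (low exposure), row 1 an open field (high exposure).
terrain = [
    [0.1, 0.1, 0.1],
    [0.9, 0.9, 0.9],
]
direct = covert_path(terrain, (1, 0), (1, 2), weight=0.0)
covert = covert_path(terrain, (1, 0), (1, 2), weight=10.0)
```

With `weight=0.0` the search returns the shortest route across the field; with `weight=10.0` it detours along the forest edge. The weight is the single knob that bounds how much extra length is accepted for concealment.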

6. Implementation and Demonstration

6.1. Implementation

6.2. Demonstration

6.2.1. Demonstration: An Area Defense Operation

6.2.2. The Mission Plan

- First, three UGVs position themselves at so-called post locations, close to the three bridges. A fourth vehicle positions itself at a reserve location, which allows it to move covertly and quickly to any of the three "post" locations (see the bottom right picture in Figure 11). In parallel, the UAV searches the area east of the river. The post locations are such that they provide concealment while ensuring that any contact approaching one of the three bridges will be observed.

- The three UGVs continuously scan their respective sectors, while receiving updates on locations of contacts—that is, red vehicles—from the UAV. When the Assessor establishes which bridge(s) will be crossed, the UGVs are requested to regroup themselves as follows: If a single red vehicle is approaching a bridge, one UGV is tasked to help in the defense. If the UGV at the reserve location is available, this asset is involved. If in parallel (or shortly after) a second red vehicle is approaching another bridge, the UGV that is guarding the remaining (third) bridge will be requested to join the UGV at the post near that second bridge. If, on the other hand, both red vehicles are approaching the same bridge, all four UGVs position themselves at the post near the soon-to-be-crossed bridge. The (early) detections of the UAV ensure that the UGVs have ample time to adjust their positions before the red vehicles are within firing range.

- The red vehicles aim to enter blue territory via the bridges, and a fight between blue and red takes place. After a successful repulsion of an attack, the UGVs distribute themselves again. The three posts near the bridges are populated first, and if a fourth asset is available, which means that there are no losses for the blue team, this remaining asset will position itself at the reserve location.
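The regrouping rule in the second step of the plan can be written down as a small decision function. This is a sketch of one reading of that rule, not the plan's decision task: the function name `regroup`, the bridge and UGV identifiers, and the fallback used when no reserve is available (take a UGV from an unthreatened post) are assumptions.

```python
from typing import Dict, List, Optional


def regroup(posts: Dict[str, str], reserve: Optional[str],
            threatened: List[str]) -> Dict[str, str]:
    """Return {ugv: bridge to move to} for the UGVs that must reposition.

    posts maps each bridge to the UGV guarding it; threatened lists the bridge
    each red vehicle is approaching (one entry per red vehicle).
    """
    moves: Dict[str, str] = {}
    targets = list(dict.fromkeys(threatened))  # unique bridges, order preserved

    if len(threatened) >= 2 and len(targets) == 1:
        # Both red vehicles approach the same bridge: all UGVs converge there.
        bridge = targets[0]
        for post, ugv in posts.items():
            if post != bridge:
                moves[ugv] = bridge
        if reserve is not None:
            moves[reserve] = bridge
        return moves

    # Otherwise each threatened bridge gets one extra UGV. The reserve goes
    # first; after that, UGVs guarding unthreatened bridges are used.
    free = [ugv for post, ugv in posts.items() if post not in targets]
    if reserve is not None:
        free.insert(0, reserve)
    for bridge in targets:
        if free:
            moves[free.pop(0)] = bridge
    return moves


posts = {"north": "ugv_n", "center": "ugv_c", "south": "ugv_s"}
one_attack = regroup(posts, "ugv_r", ["north"])
two_attacks = regroup(posts, "ugv_r", ["north", "south"])
same_bridge = regroup(posts, "ugv_r", ["center", "center"])
```

In the two-attack case the reserve joins the first threatened post and the UGV from the remaining bridge joins the second, which leaves two UGVs at each threatened bridge.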

6.2.3. Plan Execution with Simulated Assets

- In the top-left sub-figure, the four blue assets are moving towards their respective positions: the three post locations near the bridges and the reserve location. The red vehicles are on the far-east side of the terrain and start approaching the blue territory.

- After some time, the blue Assessor has established, through the UAV’s observations, that the bridges in the north and in the south will each be approached by one red vehicle. The Attack events are triggered, and as a result, the Supervisor instructs two of the blue UGVs to reposition themselves. In the top-right sub-figure, the asset at the reserve location has joined the asset in the north, and the asset that guarded the bridge in the center has left its post location and is moving towards the southernmost bridge.

- In the middle two sub-figures, the encounter between red and blue near the bridge in the south can be seen. As soon as the red vehicle is observed by the blue assets, the Engagement tasks start. The red vehicle returns fire, but by virtue of the concealment of the blue assets, its disadvantage is significant. For that reason, the red vehicle is unable to eliminate the blue assets and is eliminated.

- In the bottom-left sub-figure, the attack in the south has finished, and the encounter in the north is bound to happen. This is rendered in the visualization by graying out (the symbol of) the eliminated red vehicle.

- After the attack by red is deflected, the defense phase is finished, and the remaining blue assets redistribute themselves in the regroup phase of the plan, cf. Figure 10.

- In the bottom-right sub-figure, the blue assets have returned to their starting positions.

7. Conclusions and Future Work

7.1. Summary

7.2. Revisiting the Constraints

- C3 (accountability): the design phase provides a mechanism (a doctrine model) to ensure that military doctrine is always followed by the unmanned systems.

- C3 (predictability): To further ensure predictable behavior in line with the rules of engagement, mission templates and reasoning modules (determined in the design phase) can, and should, be constructed under the guidance of a subject-matter expert.

- C1 (planning for dynamic environments): The construction of plans, based on templates determined at design time, is such that plans can span a long period of time, whereas the automated planning process does not take a long time.

- C4 (human approval): This enables feedback from the human operators in advance, allowing them to understand the behaviors of the unmanned systems and to adjust mission parameters and ultimately provide consent for the execution of the plan.

- C1 (execution in dynamic environments): Mission execution can take place even when external conditions have changed. The approach was specifically designed for dynamic environments: the availability of contingencies and placeholders (runtime data) in the plan, together with the event based execution of the plan, allows for incorporating and responding to information that becomes available during execution.

- C2 (cooperative execution): The hierarchically structured plan and the explicit causal relations between tasks in that plan enable coordinated and collaborative execution of the mission plan. In addition, including the execution of the higher-level tasks, in parallel with the basic tasks, helps to coordinate the autonomous systems and to keep track of overall mission progress.

- C2 (cooperative execution): The unmanned systems execute their assigned tasks individually, and team level behavior, that is, coordinated and collaborative execution of the overall mission, is guaranteed.

7.3. Conclusions

7.4. Future Work

- In the mission-preparation phase, the plan is constructed based on the iterative decomposition of tasks into subtasks. The current process of decomposition uses the provided mission parameters to tailor the plan to the specific situation, but does not include an optimization towards, e.g., measures of effectiveness for acting in that situation. Including an optimization process is certainly part of the future work that will be implemented in follow-up projects.

- In Section 5.1.3, we pointed out that the reasoning in the assessment module is assumed to be directly available in that module itself. Extending the concept of a task such that it can be used to provide this information to the module is considered (and ongoing), but it will require tuning to the specific application, and thus our preliminary insights are omitted here.

- Providing feedback to, and more generally, involving human operators during the execution of the mission by the autonomous vehicles, will receive more attention in future projects. As mentioned in Section 5.2, information on the current state of the unmanned systems, and their perceived information, together with the assessed situation and progress of the mission, are already available, and this information can be shared with operators. In addition, explicit consent from human operators for the execution of certain tasks during the mission might be required in specific cases and hence should be provided for.

- More broadly speaking, there is ample room for additional and more fleshed-out scenarios. Possible directions include (a) more advanced terrain models, possibly including new features; (b) increasing the number of vehicles and vehicle classes, and their capabilities; or (c) increasing the complexity of the modelled rules of engagement. None of these extensions would require fundamental changes in the developed system, but any of them means a significant increase in the time required to build the reasoning component.

- We have conceded (see Section 1.1) that we cannot offer a performance evaluation or a comparison of our approach to the state of the art on the basis of numerical results. This is due to the specialized nature of our work and because implementing reference approaches for benchmarking and comparison is not feasible (in part because we aim at a different functionality, namely, one that can do without replanning). We do understand, however, that in the long run, the approach has to be further refined, amended, and augmented with the state of the art. While we do not claim to plan this for our own immediate future work, we do acknowledge the need for a more formal performance evaluation and encourage the interested reader to contact us.

- Finally, as pointed out in Section 2.3, the abstract nature of our work allowed us to gloss over the details regarding the communication for tactical operations. Suffice it to say that considerable effort is directed at this challenge; and indeed, current operations of the Royal Netherlands Army (RNLA) and the Royal Netherlands Navy (RNLN) make use of state-of-the-art communication infrastructure. The approach presented here will operate within this existing infrastructure. As this is a fundamental aspect of the system, these details will have to be discussed and presented to the field in due time.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Broad, W.J. The U.S. Flight from Pilotless Planes. Science 1981, 213, 188–190. [Google Scholar] [CrossRef] [PubMed]

- Hildmann, H.; Kovacs, E. Review: Using Unmanned Aerial Vehicles (UAVs) as Mobile Sensing Platforms (MSPs) for Disaster Response, Civil Security and Public Safety. Drones 2019, 3, 59. [Google Scholar] [CrossRef]

- Dousai, N.M.K.; Loncaric, S. Detection of Humans in Drone Images for Search and Rescue Operations. In Proceedings of the 2021 3rd Asia Pacific Information Technology Conference (APIT 2021), Bangkok, Thailand, 15–17 January 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 69–75. [Google Scholar] [CrossRef]

- Mandirola, M.; Casarotti, C.; Peloso, S.; Lanese, I.; Brunesi, E.; Senaldi, I. Use of UAS for damage inspection and assessment of bridge infrastructures. Int. J. Disaster Risk Reduct. 2022, 72, 102824. [Google Scholar] [CrossRef]

- Nooralishahi, P.; Ibarra-Castanedo, C.; Deane, S.; López, F.; Pant, S.; Genest, M.; Avdelidis, N.P.; Maldague, X.P.V. Drone-Based Non-Destructive Inspection of Industrial Sites: A Review and Case Studies. Drones 2021, 5, 106. [Google Scholar] [CrossRef]

- Bucknell, A.; Bassindale, T. An investigation into the effect of surveillance drones on textile evidence at crime scenes. Sci. Justice 2017, 57, 373–375. [Google Scholar] [CrossRef]

- Mohd Daud, S.M.S.; Mohd Yusof, M.Y.P.; Heo, C.C.; Khoo, L.S.; Chainchel Singh, M.K.; Mahmood, M.S.; Nawawi, H. Applications of drone in disaster management: A scoping review. Sci. Justice 2022, 62, 30–42. [Google Scholar] [CrossRef]

- Saffre, F.; Hildmann, H.; Karvonen, H.; Lind, T. Self-Swarming for Multi-Robot Systems Deployed for Situational Awareness. In New Developments and Environmental Applications of Drones; Lipping, T., Linna, P., Narra, N., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 51–72. [Google Scholar]

- Sabino, H.; Almeida, R.V.; de Moraes, L.B.; da Silva, W.P.; Guerra, R.; Malcher, C.; Passos, D.; Passos, F.G. A systematic literature review on the main factors for public acceptance of drones. Technol. Soc. 2022, 71, 102097. [Google Scholar] [CrossRef]

- Wu, H.; Wu, P.F.; Shi, Z.S.; Sun, S.Y.; Wu, Z.H. An aerial ammunition ad hoc network collaborative localization algorithm based on relative ranging and velocity measurement in a highly-dynamic topographic structure. Def. Technol. 2022. [Google Scholar] [CrossRef]

- Husodo, A.Y.; Jati, G.; Octavian, A.; Jatmiko, W. Switching target communication strategy for optimizing multiple pursuer drones performance in immobilizing Kamikaze multiple evader drones. ICT Express 2020, 6, 76–82. [Google Scholar] [CrossRef]

- Calcara, A.; Gilli, A.; Gilli, M.; Marchetti, R.; Zaccagnini, I. Why Drones Have Not Revolutionized War: The Enduring Hider-Finder Competition in Air Warfare. Int. Secur. 2022, 46, 130–171. [Google Scholar] [CrossRef]

- Aksu, O. Potential Game Changer for Close Air Support; Joint Air Power Competence Centre: Kalkar, Germany, 2022; Volume 3.

- Mgdesyan, A. Drones A Game Changer in Nagorno-Karabakh. Eurasia Rev. 2020, 2.

- Lesire, C.; Bailon-Ruiz, R.; Barbier, M.; Grand, C. A Hierarchical Deliberative Architecture Framework based on Goal Decomposition. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 9865–9870.

- Shriyam, S.; Gupta, S.K. Incorporation of Contingency Tasks in Task Allocation for Multirobot Teams. IEEE Trans. Autom. Sci. Eng. 2020, 17, 809–822.

- Ghallab, M.; Nau, D.; Traverso, P. The actor’s view of automated planning and acting: A position paper. Artif. Intell. 2014, 208, 1–17.

- Peters, F.; Den Breejen, E. Integrating autonomous system of systems in the Royal Netherlands Navy. In Proceedings of the International Ship Control Systems Symposium, Delft, The Netherlands, 5–9 October 2020.

- Konert, A.; Balcerzak, T. Military autonomous drones (UAVs) - From fantasy to reality. Legal and Ethical implications. Transp. Res. Procedia 2021, 59, 292–299.

- Azar, A.T.; Serrano, F.E.; Koubaa, A.; Ibrahim, H.A.; Kamal, N.A.; Khamis, A.; Ibraheem, I.K.; Humaidi, A.J.; Precup, R.E. Chapter 5 - Robust fractional-order sliding mode control design for UAVs subjected to atmospheric disturbances. In Unmanned Aerial Systems: Theoretical Foundation and Applications; Advances in Nonlinear Dynamics and Chaos (ANDC) Series; Koubaa, A., Azar, A.T., Eds.; Academic Press: Cambridge, MA, USA, 2021; pp. 103–128.

- Leonhard, R. The Art of Maneuver: Maneuver Warfare Theory and Airland Battle; Random House Publishing Group: New York, NY, USA, 2009.

- von Clausewitz, C.; Werner, H. Vom Kriege; Nikol: Hamburg, Germany, 2011.

- Sun, T.; Sawyer, R. The Art of War; Basic Books: New York, NY, USA, 1994.

- Capek, K.; Novack-Jones, C.; Klima, I. R.U.R. (Rossum’s Universal Robots); Penguin Classics; Penguin Publishing Group: London, UK, 2004.

- Takayama, L.; Nass, C. Beyond dirty, dangerous and dull: What everyday people think robots should do. In Proceedings of the HRI ’08: International Conference on Human Robot Interaction, Amsterdam, The Netherlands, 12–15 March 2008; pp. 25–32.

- Goddard, M.A.; Davies, Z.G.; Guenat, S.; Ferguson, M.J.; Fisher, J.C.; Akanni, A.; Ahjokoski, T.; Anderson, P.M.L.; Angeoletto, F.; Antoniou, C.; et al. A global horizon scan of the future impacts of robotics and autonomous systems on urban ecosystems. Nat. Ecol. Evol. 2021, 5, 219–230.

- Haider, A.; Schmidt, A. Defining the Swarm; Joint Air Power Competence Centre: Kalkar, Germany, 2022; Volume 34.

- Dorigo, M. SWARM-BOT: An experiment in swarm robotics. In Proceedings of the 2005 IEEE Swarm Intelligence Symposium (SIS), Pasadena, CA, USA, 8–10 June 2005; pp. 192–200.

- Ropero, F.; Muñoz, P.; R-Moreno, M.D. TERRA: A path planning algorithm for cooperative UGV–UAV exploration. Eng. Appl. Artif. Intell. 2019, 78, 260–272.

- Marvin, S.; While, A.; Kovacic, M.; Lockhart, A.; Macrorie, R. Urban Robotics and Automation: Critical Challenges, International Experiments and Transferable Lessons for the UK; EPSRC UK Robotics and Autonomous Systems (RAS) Network: London, UK, 2018.

- van der Sterren, W. Hierarchical Plan-Space Planning for Multi-unit Combat Maneuvers. In Game AI Pro 360: Guide to Architecture; CRC Press: Boca Raton, FL, USA, 2013; Chapter 13; pp. 169–183.

- West, A.; Tsitsimpelis, I.; Licata, M.; Jazbec, A.; Snoj, L.; Joyce, M.J.; Lennox, B. Use of Gaussian process regression for radiation mapping of a nuclear reactor with a mobile robot. Sci. Rep. 2021, 11, 13975.

- Beautement, P.; Allsopp, D.; Greaves, M.; Goldsmith, S.; Spires, S.; Thompson, S.G.; Janicke, H. Autonomous Agents and Multi-agent Systems (AAMAS) for the Military—Issues and Challenges. In Proceedings of the Defence Applications of Multi-Agent Systems; Thompson, S.G., Ghanea-Hercock, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–13.

- Chithaluru, P.; Stephan, T.; Kumar, M.; Nayyar, A. An enhanced energy-efficient fuzzy-based cognitive radio scheme for IoT. Neural Comput. Appl. 2022, 34, 19193–19215.

- Chithaluru, P.; Al-Turjman, F.; Kumar, M.; Stephan, T. Computational Intelligence Inspired Adaptive Opportunistic Clustering Approach for Industrial IoT Networks. IEEE Internet of Things J. 2023, 1.

- Hunjet, R. Autonomy and self-organisation for tactical communications and range extension. In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–5.

- Van Wiggen, O.; Pollaert, T. Tactics, Made Easy; Onkenhout Groep: Diemen, The Netherlands, 2009.

- Ghallab, M.; Nau, D.; Traverso, P. Automated Planning and Acting; Cambridge University Press: Cambridge, UK, 2016.

- Campbell, M.; Hoane, A.; Hsiung Hsu, F. Deep Blue. Artif. Intell. 2002, 134, 57–83.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Hildmann, H. Computer Games and Artificial Intelligence. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 1–11.

- Lohatepanont, M.; Barnhart, C. Airline Schedule Planning: Integrated Models and Algorithms for Schedule Design and Fleet Assignment. Transp. Sci. 2004, 38, 19–32.

- Georgievski, I.; Aiello, M. HTN planning: Overview, comparison, and beyond. Artif. Intell. 2015, 222, 124–156.

- Mahmoud, I.M.; Li, L.; Wloka, D.; Ali, M.Z. Believable NPCs in serious games: HTN planning approach based on visual perception. In Proceedings of the 2014 IEEE Conference on Computational Intelligence and Games, Dortmund, Germany, 26–29 August 2014; pp. 1–8.

- Fishman, G. Discrete-Event Simulation: Modeling, Programming, and Analysis; Springer Series in Operations Research and Financial Engineering; Springer: New York, NY, USA, 2013.

- Bagchi, S.; Buckley, S.; Ettl, M.; Lin, G. Experience using the IBM Supply Chain Simulator. In Proceedings of the 1998 Winter Simulation Conference (Cat. No. 98CH36274), Washington, DC, USA, 13–16 December 1998; Volume 2, pp. 1387–1394.

- Franke, J.; Hughes, A.; Jameson, S. Holistic Contingency Management for Autonomous Unmanned Systems. In Proceedings of the AUVSI’s Unmanned Systems North America, Orlando, FL, USA, 29–31 August 2006.

- MahmoudZadeh, S.; Powers, D.M.W.; Bairam Zadeh, R. State-of-the-Art in UVs’ Autonomous Mission Planning and Task Managing Approach. In Autonomy and Unmanned Vehicles: Augmented Reactive Mission and Motion Planning Architecture; Springer: Singapore, 2019; pp. 17–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

de Gier, J.; Bergmans, J.; Hildmann, H. Hierarchical Plan Execution for Cooperative UxV Missions. Robotics 2023, 12, 24. https://doi.org/10.3390/robotics12010024