A Trust-Assist Framework for Human–Robot Co-Carry Tasks

Abstract

1. Introduction

2. Related Work

3. Modeling Methodology

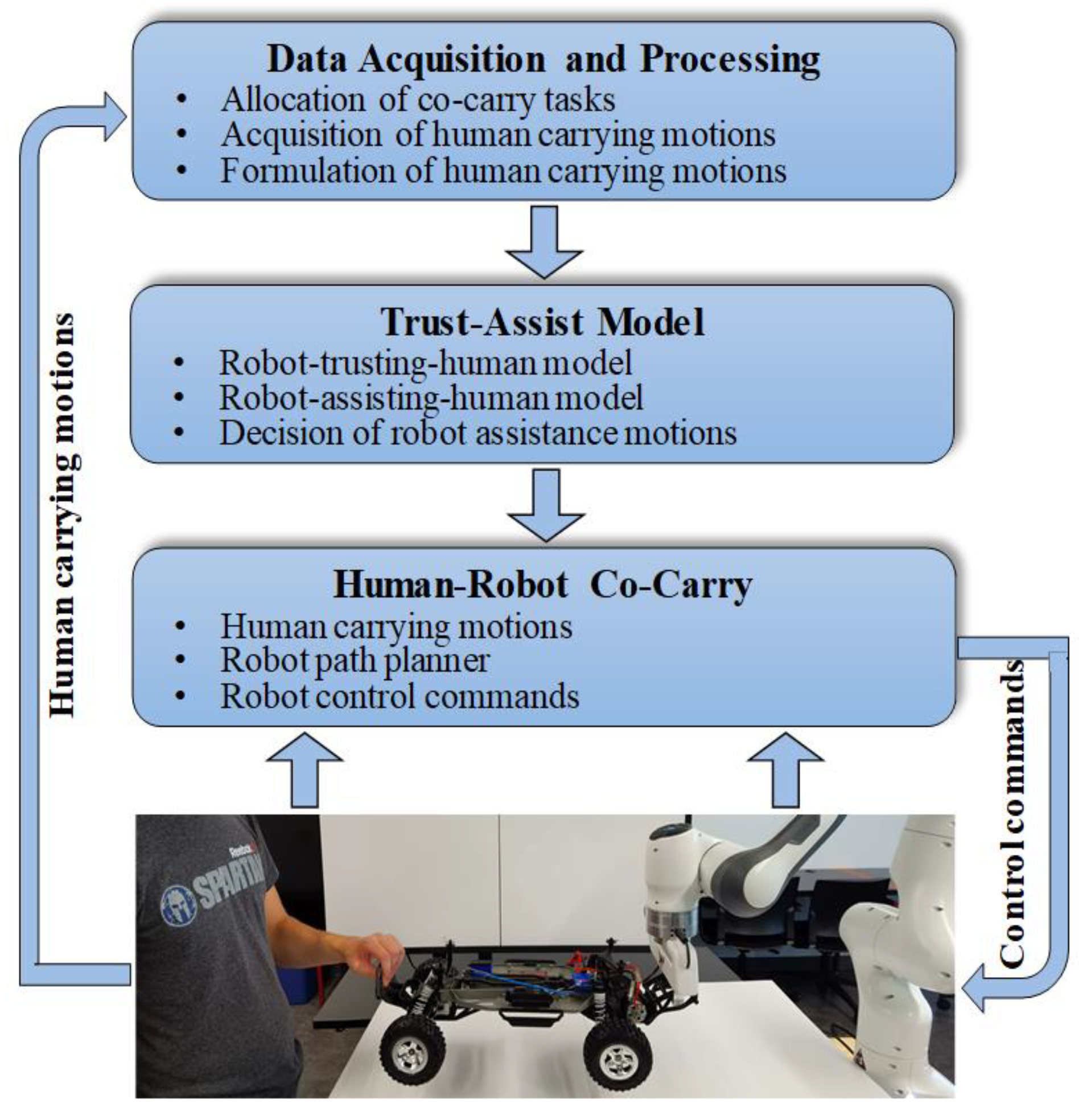

3.1. Overview of Trust-Assist Framework

3.2. Data Acquisition

3.3. Formulation of Human-Carrying Motions

3.4. Robot-Trusting-Human Model

3.5. Robot-Assisting-Human Model

3.6. Robot-Path-Planning Algorithm

| Algorithm 1. Robot path planning |
| --- |
| Input: The executable waypoints generated via trust-assist model |
| Output: Planning paths |

4. Experimental Results and Analysis

4.1. Experimental Setup

4.2. Human-Carrying-Motion Recognition Results

4.3. Analysis of Robot-to-Human Trust

4.4. Assisting Human in Co-Carry Tasks

5. Discussions

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hannum, C.; Li, R.; Wang, W. A Trust-Assist Framework for Human–Robot Co-Carry Tasks. Robotics 2023, 12, 30. https://doi.org/10.3390/robotics12020030