How AI from Automated Driving Systems Can Contribute to the Assessment of Human Driving Behavior

Abstract

1. Introduction

Aim

2. Method

2.1. Setup

2.2. Scenarios

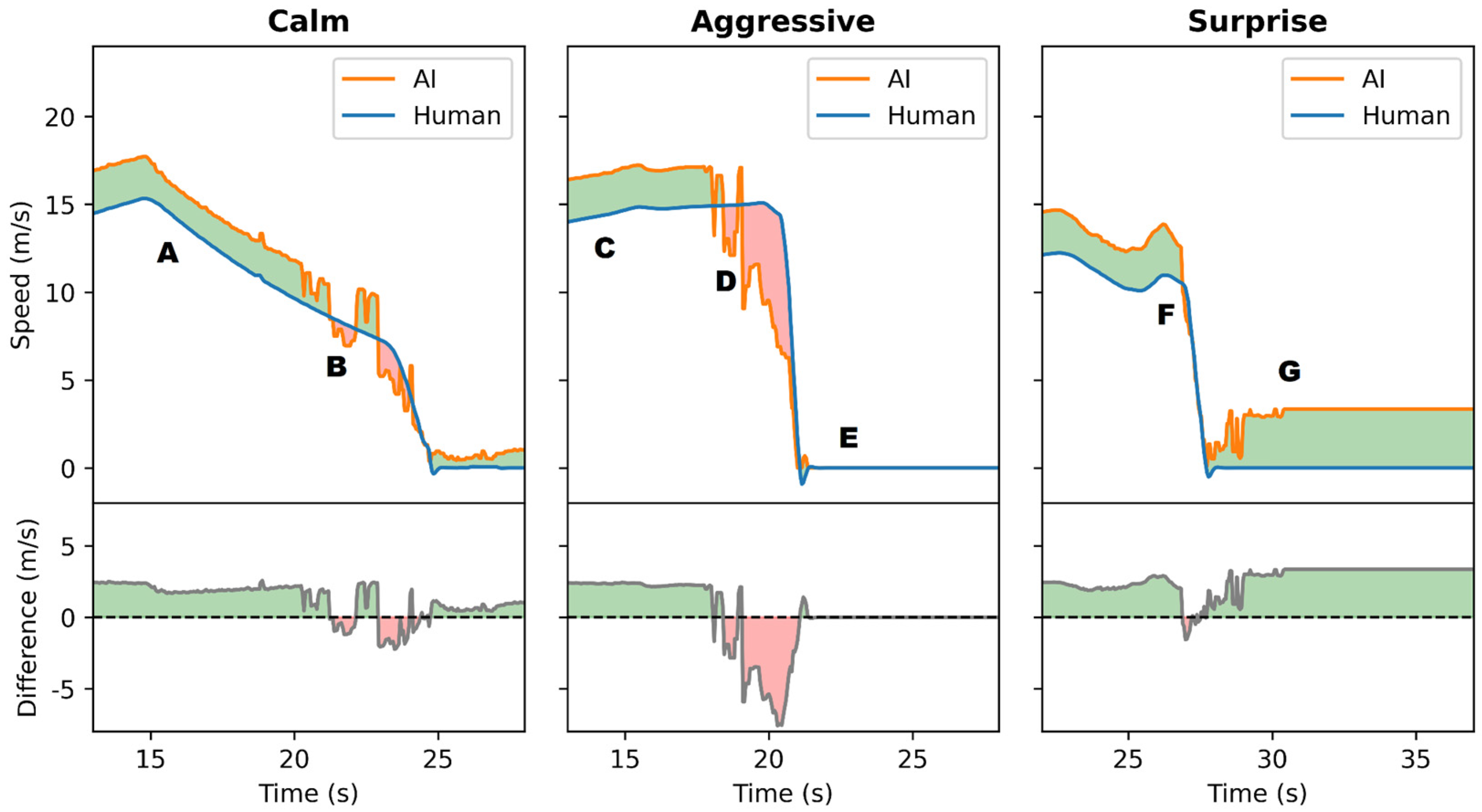

2.2.1. Calm Scenario (Driver of Ego-Vehicle Did Not Brake Hard)

2.2.2. Aggressive Scenario (Driver of Ego-Vehicle Braked Hard, but Hard Braking Was Avoidable)

2.2.3. Surprise Scenario (Driver of Ego-Vehicle Braked Hard, and Hard Braking Was Unavoidable)
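The three scenario headings define a two-question decision rule. First, did the driver of the ego-vehicle brake hard? Second, if so, was the hard braking avoidable? The minimal sketch below encodes that rule. The names `Scenario` and `classify_scenario` are hypothetical and do not come from the study. The sketch also assumes that both judgments have already been made; how avoidability is assessed is not modeled here.

```python
from enum import Enum


class Scenario(Enum):
    CALM = "calm"              # driver did not brake hard
    AGGRESSIVE = "aggressive"  # driver braked hard, but it was avoidable
    SURPRISE = "surprise"      # driver braked hard, and it was unavoidable


def classify_scenario(braked_hard: bool, braking_avoidable: bool) -> Scenario:
    """Map the two scenario criteria to a label (hypothetical helper).

    `braking_avoidable` is only consulted when the driver braked hard.
    """
    if not braked_hard:
        return Scenario.CALM
    return Scenario.AGGRESSIVE if braking_avoidable else Scenario.SURPRISE
```

For example, `classify_scenario(True, False)` returns `Scenario.SURPRISE`. The ordering of the checks mirrors the headings: avoidability is only meaningful once hard braking has occurred.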

2.3. Analysis

- A 1928 × 1208-pixel forward-facing driving video recorded at 20 frames per second, simulating the visual input an autonomous system would receive.

- A corresponding CSV file with one row per video frame, containing vehicle state data such as speed, bearing, steering angle, and brake and throttle inputs.
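The CSV has one row per frame and the video runs at 20 frames per second, so row *i* corresponds to time *i* / 20 s. The sketch below shows how the two inputs can be aligned and how hard-braking frames might be flagged from the speed signal. Several details are assumptions rather than facts about the study's files:

- the header row and the column name `speed`;
- speed units of m/s;
- the backward finite-difference estimate of deceleration;
- the 3.0 m/s² threshold, which is a placeholder value.

```python
import csv
import io

FPS = 20  # frame rate of the recorded video; one CSV row per frame


def load_frames(csv_text):
    """Parse the per-frame CSV and attach a timestamp to every row.

    Assumes (hypothetically) a header row and a 'speed' column in m/s.
    Row i corresponds to video frame i, so its time is i / FPS seconds.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    for i, row in enumerate(rows):
        row["t"] = i / FPS
        row["speed"] = float(row["speed"])
    return rows


def hard_brake_frames(rows, threshold=3.0):
    """Return indices of frames whose deceleration exceeds `threshold` (m/s^2).

    Deceleration is a backward finite difference of speed over one frame
    interval (1 / FPS s). The 3.0 m/s^2 default is a placeholder, not a
    value taken from the study.
    """
    flagged = []
    for i in range(1, len(rows)):
        decel = (rows[i - 1]["speed"] - rows[i]["speed"]) * FPS
        if decel > threshold:
            flagged.append(i)
    return flagged
```

With the toy input `"speed,brake\n20.0,0\n19.9,0\n19.6,1\n19.3,1\n"`, `load_frames` assigns row 2 the time 0.1 s, and `hard_brake_frames` flags frames 2 and 3. Those frames lose about 0.3 m/s in one 0.05 s interval, which is roughly 6 m/s². A real analysis would probably smooth the speed signal before differencing, because single-frame differences amplify sensor noise.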

3. Results

3.1. Calm Scenario

3.2. Aggressive Scenario

3.3. Surprise Scenario

4. Discussion

4.1. Limitations

4.2. Recommendations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Openpilot Overview and Modifications

Appendix A.2. Other Variables

Appendix A.3. Further Notes


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Driessen, T.; Siebinga, O.; de Boer, T.; Dodou, D.; de Waard, D.; de Winter, J. How AI from Automated Driving Systems Can Contribute to the Assessment of Human Driving Behavior. Robotics 2024, 13, 169. https://doi.org/10.3390/robotics13120169
