Nonbinary Voices for Digital Assistants—An Investigation of User Perceptions and Gender Stereotypes

Abstract

1. Introduction

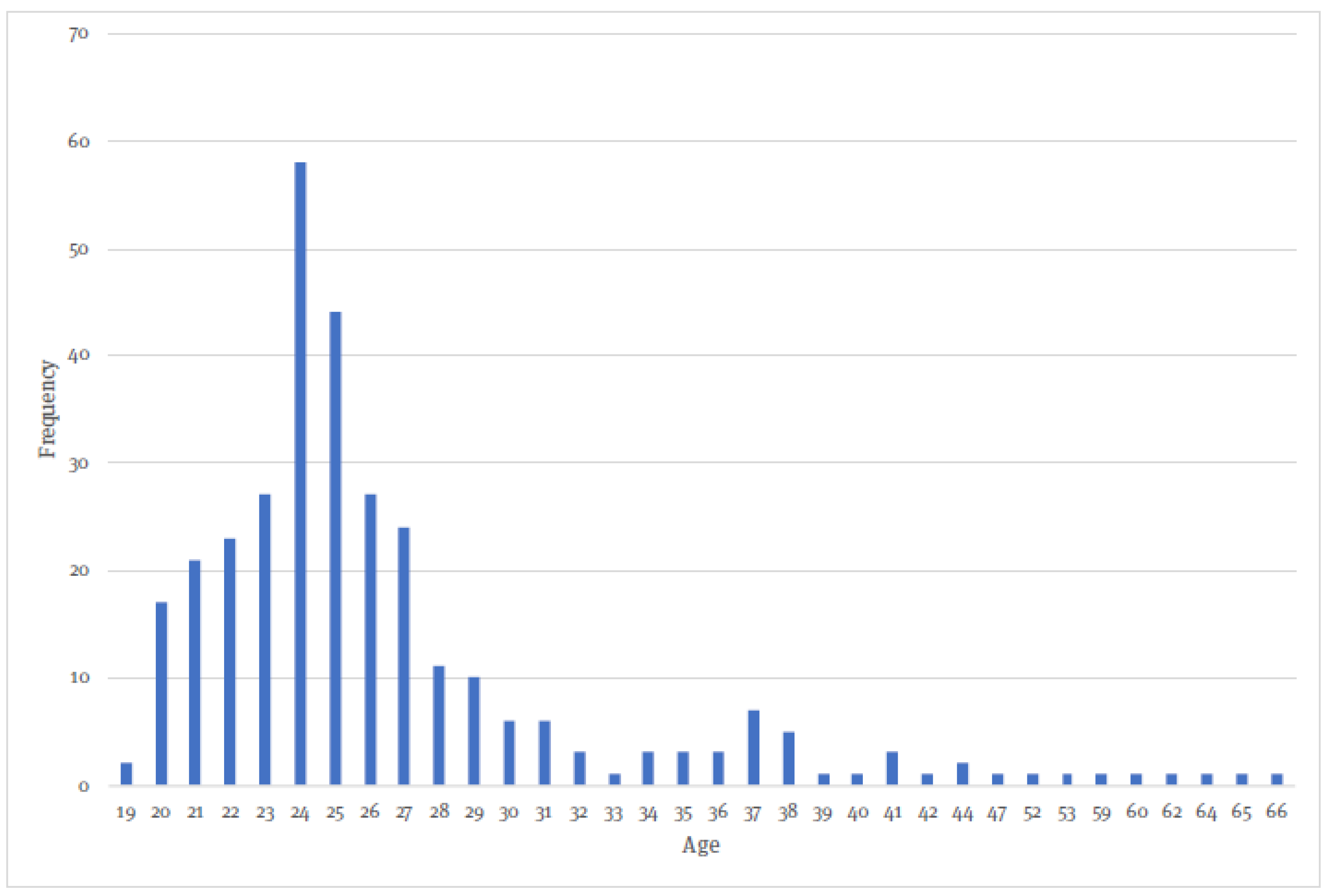

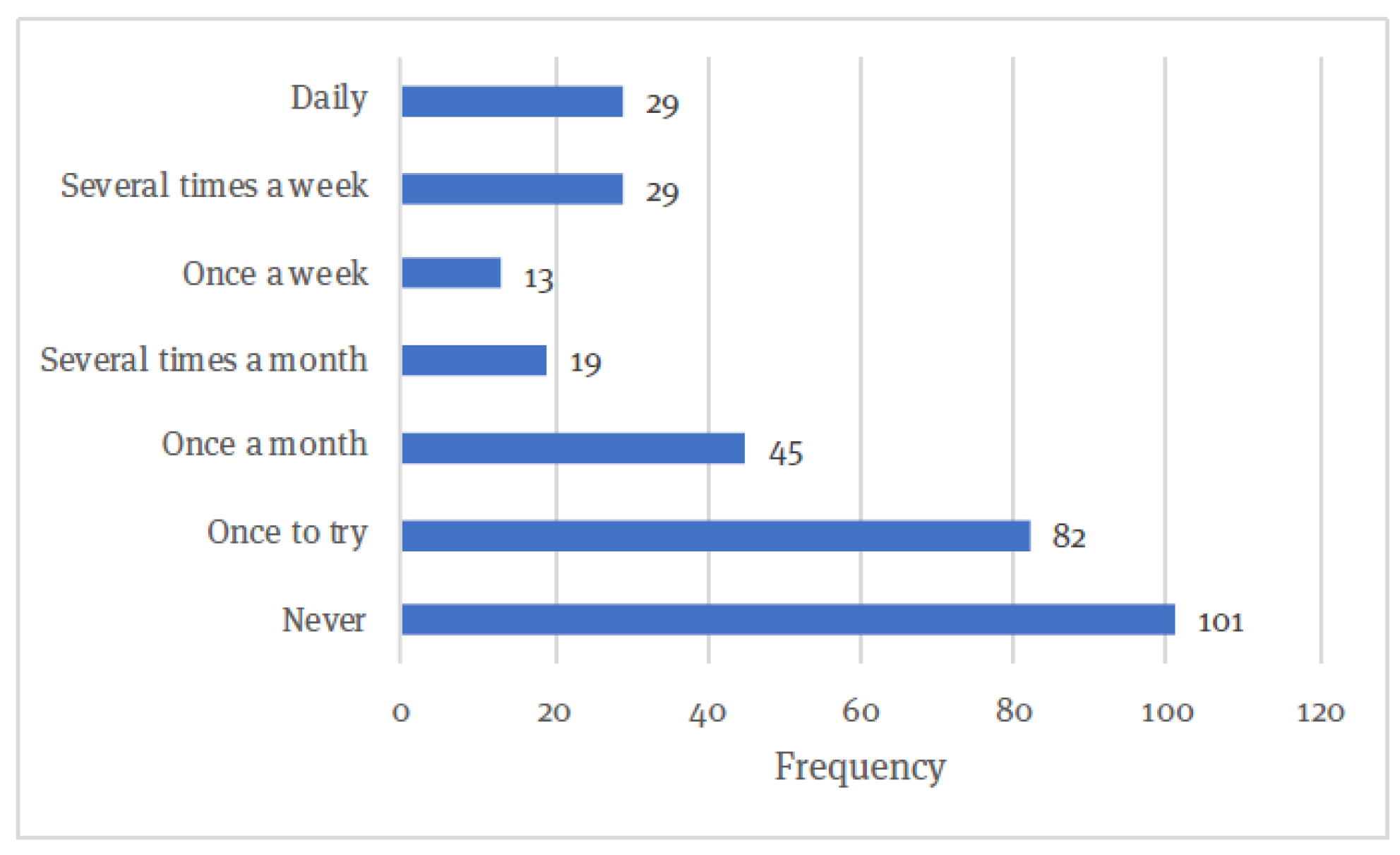

How do people perceive nonbinary digital voices, and to what extent do they elicit gender stereotypes?

2. Theoretical Concepts and Related Work

2.1. Voice-Based Human–Machine Interaction

2.2. The Gender Binary

2.3. Gender and Digital Voice Assistants

2.4. Promoting a Nonbinary Option for Digital Voice Assistants

3. Methodology

3.1. Research Model

3.2. Hypotheses

3.3. Materials

3.4. Measures

3.4.1. Perceived Voice Gender

3.4.2. Likability of Voices

3.4.3. Bem Sex Role Inventory

3.4.4. Big Five Inventory

3.4.5. Gender Role Stereotype Scale

3.4.6. Affinity for Technology Interaction

3.5. Procedure

3.6. Statistical Analyses

4. Results

4.1. Perceived Gender

4.2. Elicitation of Gender Stereotypes (H1)

4.2.1. Female Voice (H1a)

4.2.2. Male Voice (H1b)

4.2.3. Nonbinary Voice (H1c)

4.3. Factors Influencing Likability (H2)

4.3.1. Respondents’ Gender (H2a)

4.3.2. Respondents’ Personality (H2b)

4.3.3. Respondents’ Egalitarianism, Age and Education (H2c–e)

5. Discussion

5.1. Gender Perception in DVA Voices

5.2. Elicitation of Gender Stereotypes

5.3. Factors Influencing Likability

6. Conclusions, Limitations and Future Outlook

6.1. Limitations and Areas for Future Work

6.2. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DVA | Digital Voice Assistant |
| BSRI | Bem Sex Role Inventory |
| BFI | Big Five Inventory |
| GRSS | Gender Role Stereotype Scale |
| ATI | Affinity for Technology Interaction |
| SFF | Speaking Fundamental Frequency |
| ANOVA | Analysis of Variance |
| CASA | Computers are Social Actors |


| Product | Envisioned User Prompt | DVA Response Uttered by a Female, Male or Nonbinary Voice |
|---|---|---|
| Software | Ich will ein Textverarbeitungsprogramm kaufen. (en: I want to buy a word processing program.) | Ein Topergebnis ist Microsoft Office 365 Home multilingual Jahresabonnement sechs Nutzer Box. Der Preis beträgt neunundneunzig Euro und neunundneunzig Cent inklusive deutscher Mehrwertsteuer mit Lieferung bis zwanzigsten Mai. (en: A top result is Microsoft Office 365 Home multilingual annual subscription six user box. The price is ninety-nine euros and ninety-nine cents including German VAT, with delivery by the twentieth of May.) |
| Pen | Ich will einen Kugelschreiber kaufen. (en: I want to buy a pen.) | Ein Topergebnis ist Faber Castell Kugelschreiber Poly Ball XB schwarz Schreibfarbe blau. Der Preis beträgt vier Euro und neunundachtzig Cent inklusive deutscher Mehrwertsteuer. Wird voraussichtlich am zwanzigsten Mai geliefert. (en: A top result is Faber Castell ballpoint pen Poly Ball XB black, writing color blue. The price is four euros and eighty-nine cents including German VAT. Expected to be delivered on the twentieth of May.) |

| Actual Gender | Perceived: Female | Perceived: Male | Perceived: Nonbinary | Correctly Identified (%) |
|---|---|---|---|---|
| Female | 79 | 1 | 1 | |
| Male | 0 | 71 | 5 | |
| Nonbinary | 16 | 105 | 40 | |
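The "Correctly Identified" percentages follow from the counts: for each actual voice, they are the share of ratings that fall on the diagonal of the table. The minimal sketch below assumes that rows are the actual voice and columns the perceived gender, as the header suggests; the function name and labels are illustrative, not taken from the study.

```python
# Confusion matrix copied from the perceived-gender table.
# Assumed reading: rows = actual voice, columns = perceived gender.
LABELS = ["Female", "Male", "Nonbinary"]
COUNTS = [
    [79, 1, 1],     # actual female voice
    [0, 71, 5],     # actual male voice
    [16, 105, 40],  # actual nonbinary voice
]


def correct_identification_rates(counts, labels):
    """Return {label: percentage of ratings whose perceived gender matched the actual voice}."""
    rates = {}
    for i, row in enumerate(counts):
        total = sum(row)                      # all ratings for this actual voice
        rates[labels[i]] = 100.0 * row[i] / total  # diagonal cell = correct identifications
    return rates


if __name__ == "__main__":
    for label, pct in correct_identification_rates(COUNTS, LABELS).items():
        print(f"{label}: {pct:.1f}%")
```

Under that reading the rates come out at roughly 97.5% (female), 93.4% (male) and 24.8% (nonbinary), with the nonbinary voice most often perceived as male (105 of 161). The three row totals (81, 76 and 161) sum to 318, which is consistent with the 317 total degrees of freedom in the ANOVA table.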

| Variable | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| Feminine traits | Between Groups | | 2 | | | |
| | Within Groups | | 315 | | | |
| | Total | | 317 | | | |
| Masculine traits | Between Groups | | 2 | | | |
| | Within Groups | | 315 | | | |
| | Total | | 317 | | | |
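The ANOVA table compares feminine and masculine trait ratings, each separately, across the three voice conditions. Its degrees of freedom follow from the design: with k = 3 voices and N observations, df between = k − 1 = 2 and df within = N − k, so 315 implies N = 318. The sketch below is a plain one-way between-groups ANOVA under that assumption; the function name and the toy scores are hypothetical, not the study's data.

```python
from statistics import fmean


def one_way_anova(groups):
    """One-way between-groups ANOVA.

    groups: one list of scores per condition (e.g. female, male, nonbinary voice).
    Returns (ss_between, ss_within, df_between, df_within, F).
    """
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    means = [fmean(g) for g in groups]
    # Between-groups SS: spread of the condition means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups SS: spread of each score around its own condition mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    # F = mean square between / mean square within.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, df_between, df_within, f_stat


if __name__ == "__main__":
    # Hypothetical toy scores for three conditions.
    print(one_way_anova([[1, 2, 3], [2, 3, 4], [5, 6, 7]]))
```

The "Sig." column additionally needs the F distribution; `scipy.stats.f_oneway(*groups)` returns the same F statistic together with its p-value and can serve as a cross-check.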

| Gender-stereotypical traits | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | | | | 160 | | | | | |
| Equal variances not assumed | | | | | | | | | |

| Gender-stereotypical traits | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | | | | 150 | | | | | |
| Equal variances not assumed | | | | | | | | | |

| Gender-stereotypical traits | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | | | | 320 | | | | | |
| Equal variances not assumed | | | | | | | | | |
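The three tables above follow the layout of an independent-samples t-test: Levene's test decides whether the pooled-variance row ("Equal variances assumed") or the Welch row ("Equal variances not assumed") applies. For the pooled test df = n1 + n2 − 2, so the reported 160, 150 and 320 correspond to n1 + n2 = 162, 152 and 322. The sketch below computes both t variants and a mean-centred Levene F from scratch; the function names are illustrative and p-values are left out because they need the t and F distributions.

```python
from math import sqrt
from statistics import fmean, variance


def student_t(a, b):
    """Pooled-variance t-test ('Equal variances assumed'). Returns (t, df)."""
    n1, n2 = len(a), len(b)
    # Pooled sample variance weights each group's variance by its df.
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    se_diff = sqrt(pooled * (1 / n1 + 1 / n2))  # "Std. Error Difference"
    return (fmean(a) - fmean(b)) / se_diff, n1 + n2 - 2


def welch_t(a, b):
    """Welch t-test ('Equal variances not assumed'). Returns (t, Welch-Satterthwaite df)."""
    n1, n2 = len(a), len(b)
    v1, v2 = variance(a) / n1, variance(b) / n2
    t = (fmean(a) - fmean(b)) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def levene_f(a, b):
    """Mean-centred Levene test: one-way ANOVA on absolute deviations. Returns (F, df1, df2)."""
    groups = [[abs(x - fmean(g)) for x in g] for g in (a, b)]
    all_z = [z for g in groups for z in g]
    grand = fmean(all_z)
    k, n = len(groups), len(all_z)
    means = [fmean(g) for g in groups]
    ss_b = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_w = sum((z - m) ** 2 for g, m in zip(groups, means) for z in g)
    return (ss_b / (k - 1)) / (ss_w / (n - k)), k - 1, n - k


if __name__ == "__main__":
    a, b = [1, 2, 3, 4, 5], [2, 4, 6, 8, 10]  # hypothetical scores
    print(student_t(a, b), welch_t(a, b), levene_f(a, b))
```

For p-values, `scipy.stats.ttest_ind(a, b, equal_var=True)` or `equal_var=False` covers the two rows, and `scipy.stats.levene(a, b, center="mean")` matches the mean-centred variant shown here (SciPy's default centre is the median).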

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | | | | |

ANOVA

| Model | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| 1 | Regression | | 2 | | | |
| | Residual | | 158 | | | |
| | Total | | 160 | | | |

Coefficients

| Model | Predictor | Unstandardized B | Std. Error | t | Sig. |
|---|---|---|---|---|---|
| 1 | (Constant) | | | | |
| | Agreeableness | | | | |
| | Neuroticism | | | | |
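The Model Summary, ANOVA and Coefficients tables describe a multiple linear regression with two predictors, Agreeableness and Neuroticism. With k = 2 predictors and n observations the degrees of freedom are (k, n − k − 1, n − 1), so the reported (2, 158, 160) implies n = 161. The minimal sketch below fits such a model by ordinary least squares and reports R², adjusted R² and those df; the outcome variable and data are not part of this section, so the function name and inputs are hypothetical.

```python
from statistics import fmean


def ols_two_predictors(x1, x2, y):
    """OLS of y on two predictors plus an intercept, via centred cross-products.

    Hypothetical mapping: x1 = Agreeableness, x2 = Neuroticism.
    Returns coefficients (b0, b1, b2), R^2, adjusted R^2 and df (regression, residual, total).
    """
    n, k = len(y), 2
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2  # zero only if the predictors are perfectly collinear
    # Solve the 2x2 normal equations for the slopes, then recover the intercept.
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    ss_total = sum((c - my) ** 2 for c in y)
    ss_resid = sum((c - (b0 + b1 * a + b2 * b)) ** 2 for a, b, c in zip(x1, x2, y))
    r2 = 1 - ss_resid / ss_total
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    return {"coef": (b0, b1, b2), "r2": r2, "adj_r2": adj_r2, "df": (k, n - k - 1, n - 1)}


if __name__ == "__main__":
    x1 = [1, 2, 3, 4, 5]
    x2 = [2, 1, 4, 3, 6]
    y = [1 + 2 * a - 3 * b for a, b in zip(x1, x2)]  # exact linear toy data
    print(ols_two_predictors(x1, x2, y))
```

Standard errors, t values and p-values for the Coefficients table need more machinery; `statsmodels.api.OLS(y, sm.add_constant(X)).fit().summary()` reports the full set and can be used to check a hand-rolled fit like this one.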

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Längle, S.T.; Schlögl, S.; Ecker, A.; van Kooten, W.S.M.T.; Spieß, T. Nonbinary Voices for Digital Assistants—An Investigation of User Perceptions and Gender Stereotypes. Robotics 2024, 13, 111. https://doi.org/10.3390/robotics13080111

Längle ST, Schlögl S, Ecker A, van Kooten WSMT, Spieß T. Nonbinary Voices for Digital Assistants—An Investigation of User Perceptions and Gender Stereotypes. Robotics. 2024; 13(8):111. https://doi.org/10.3390/robotics13080111

Chicago/Turabian Style: Längle, Sonja Theresa, Stephan Schlögl, Annina Ecker, Willemijn S. M. T. van Kooten, and Teresa Spieß. 2024. "Nonbinary Voices for Digital Assistants—An Investigation of User Perceptions and Gender Stereotypes" Robotics 13, no. 8: 111. https://doi.org/10.3390/robotics13080111