Augmented Reality Guidance with Multimodality Imaging Data and Depth-Perceived Interaction for Robot-Assisted Surgery

Abstract

1. Introduction

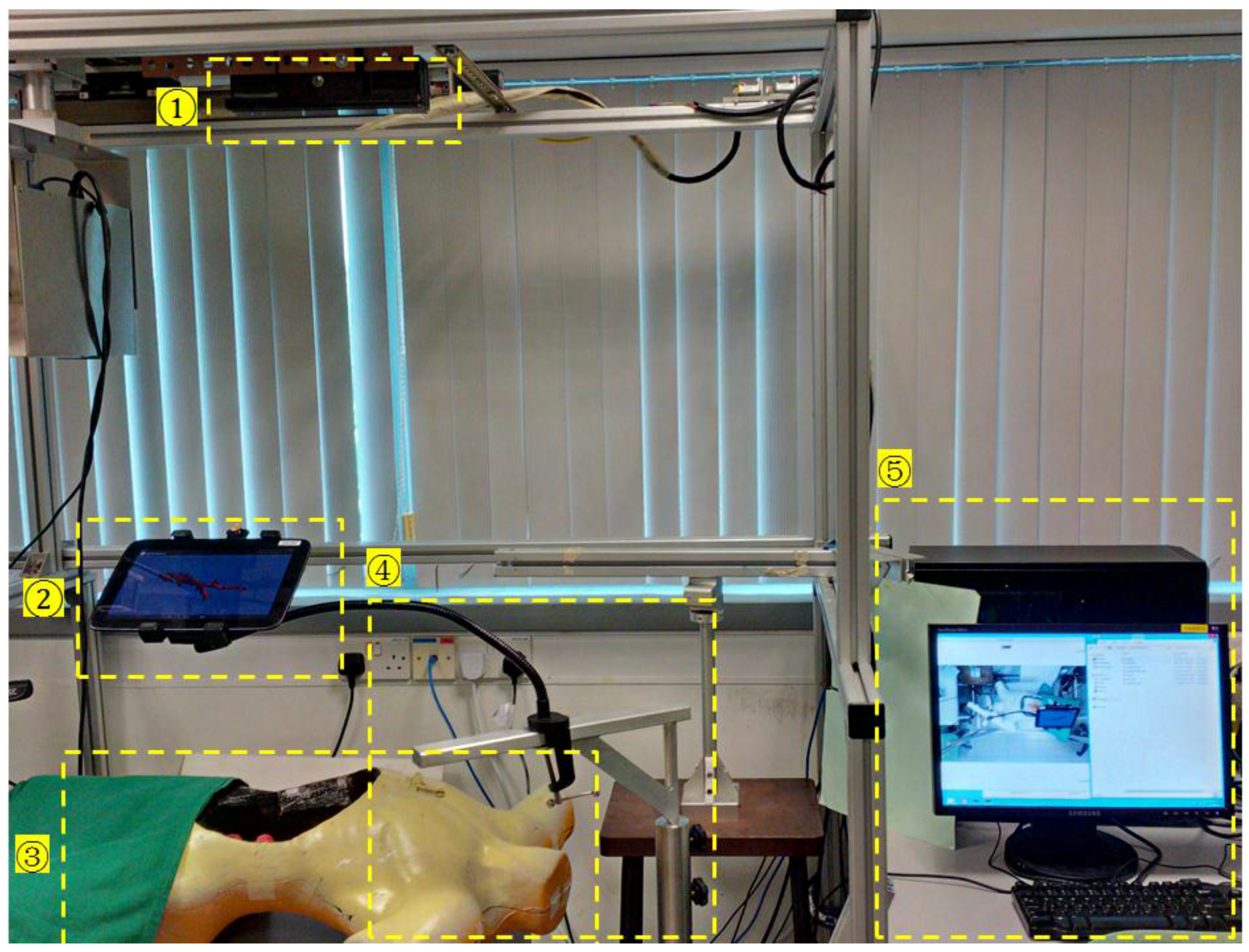

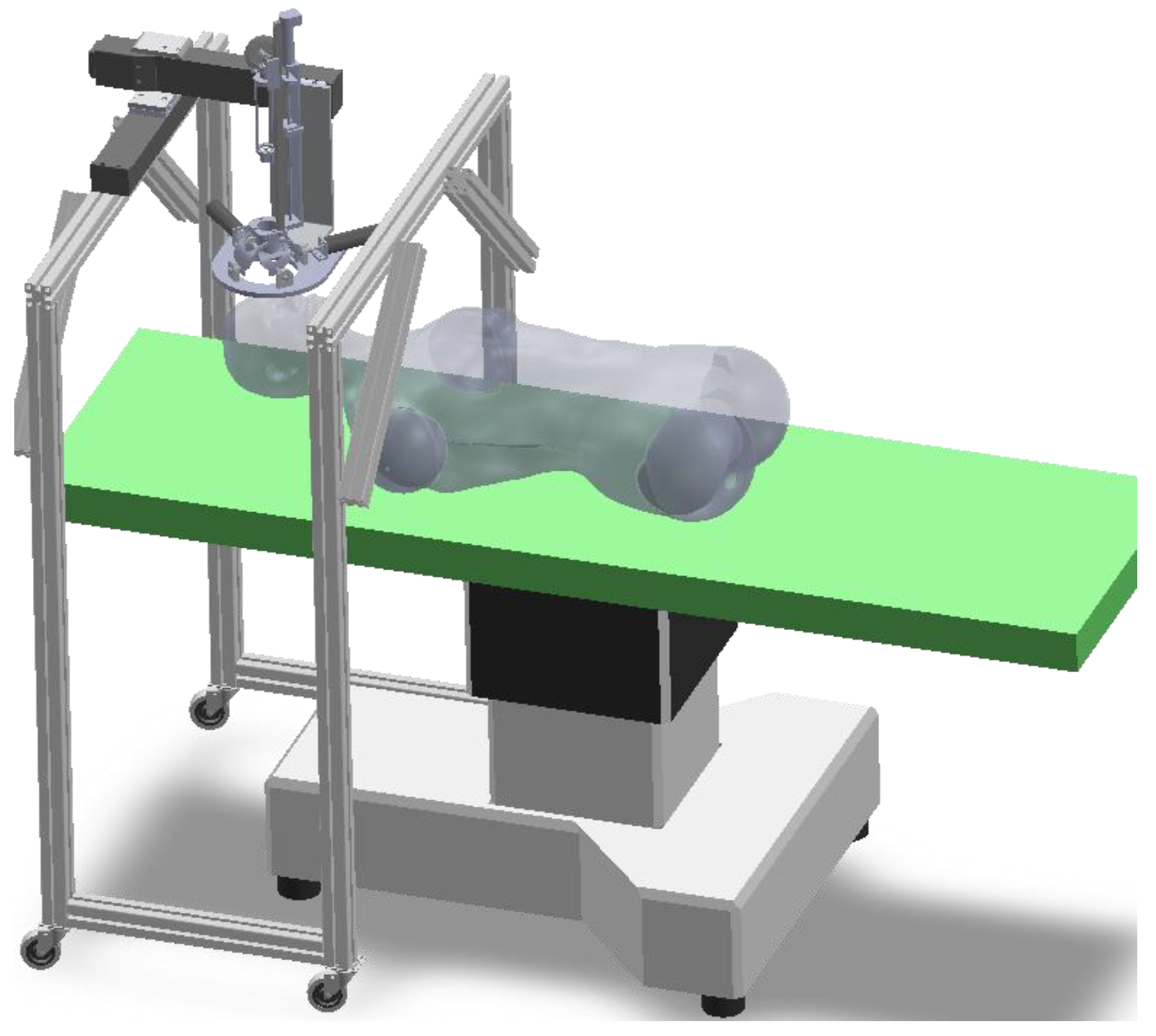

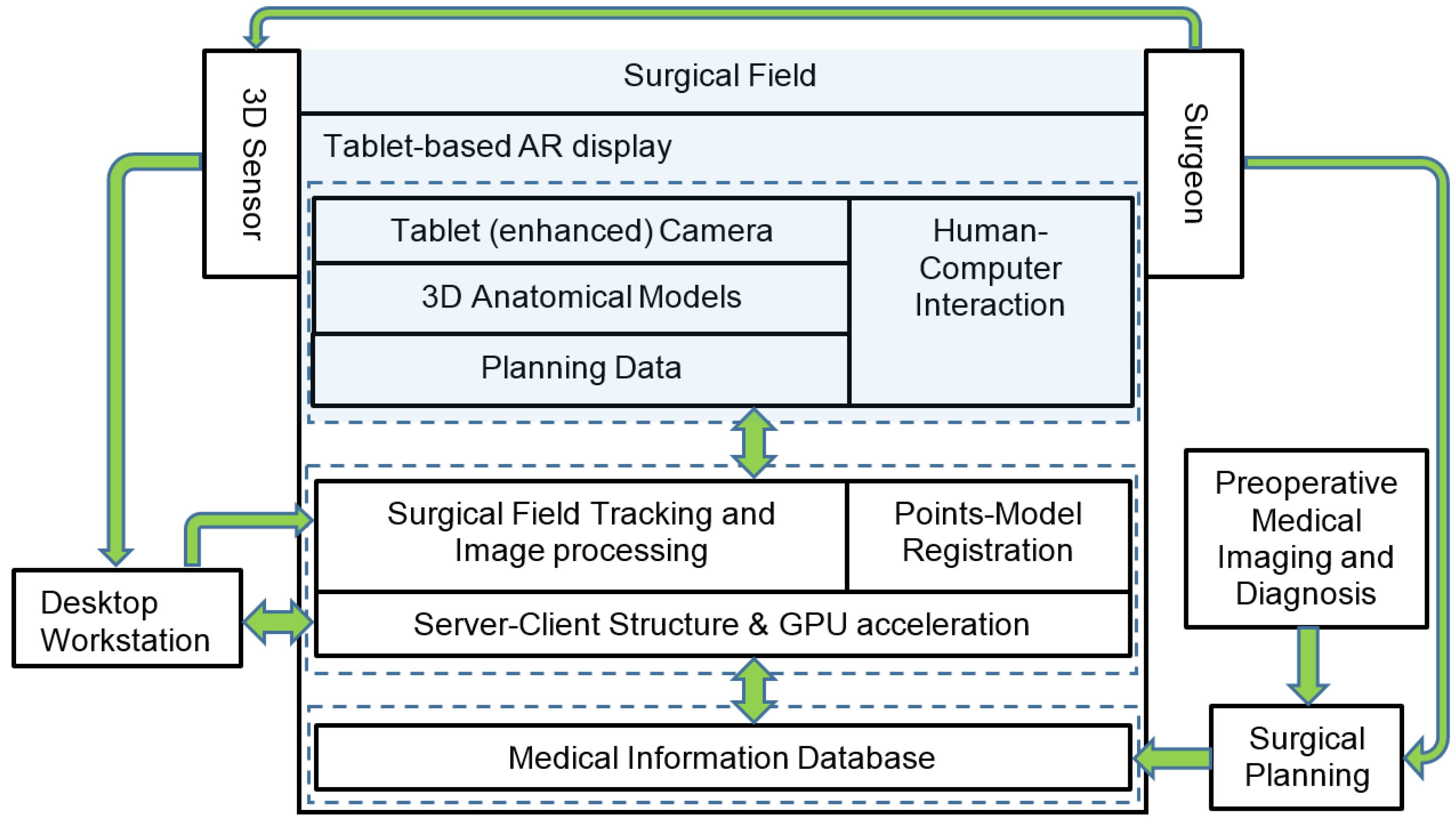

2. Materials and Methods

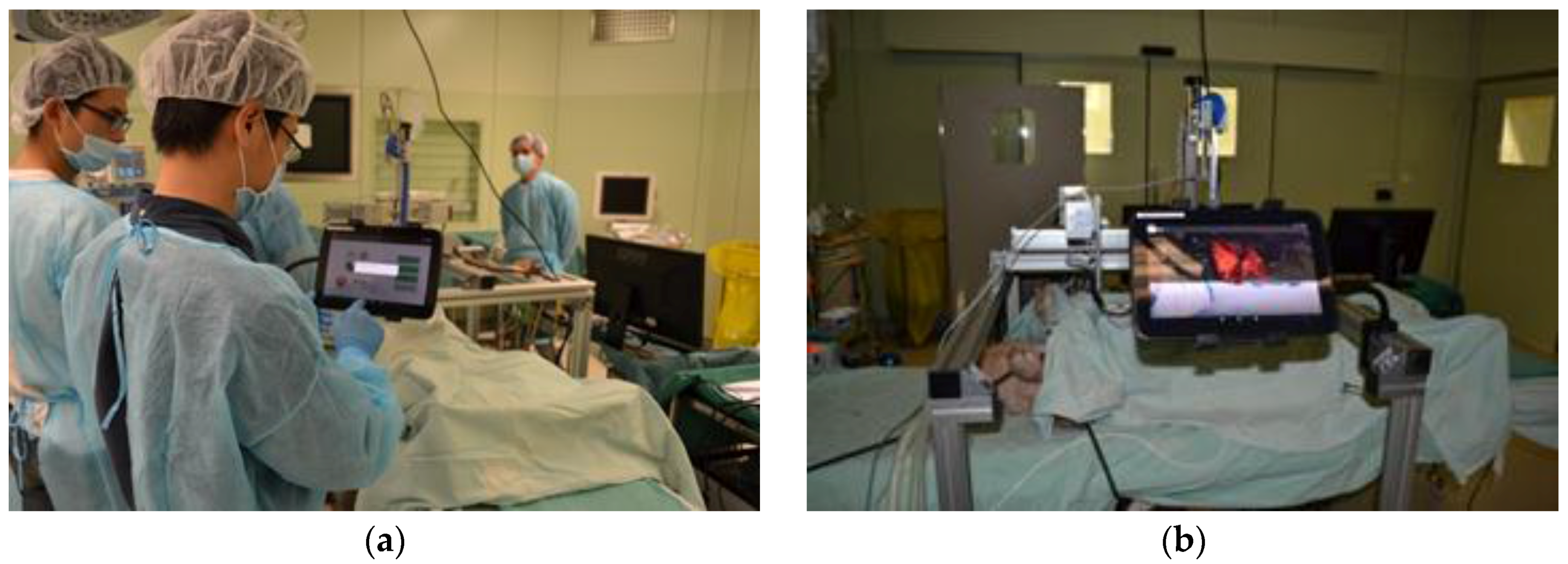

2.1. Overview of the AR Surgical Guidance System

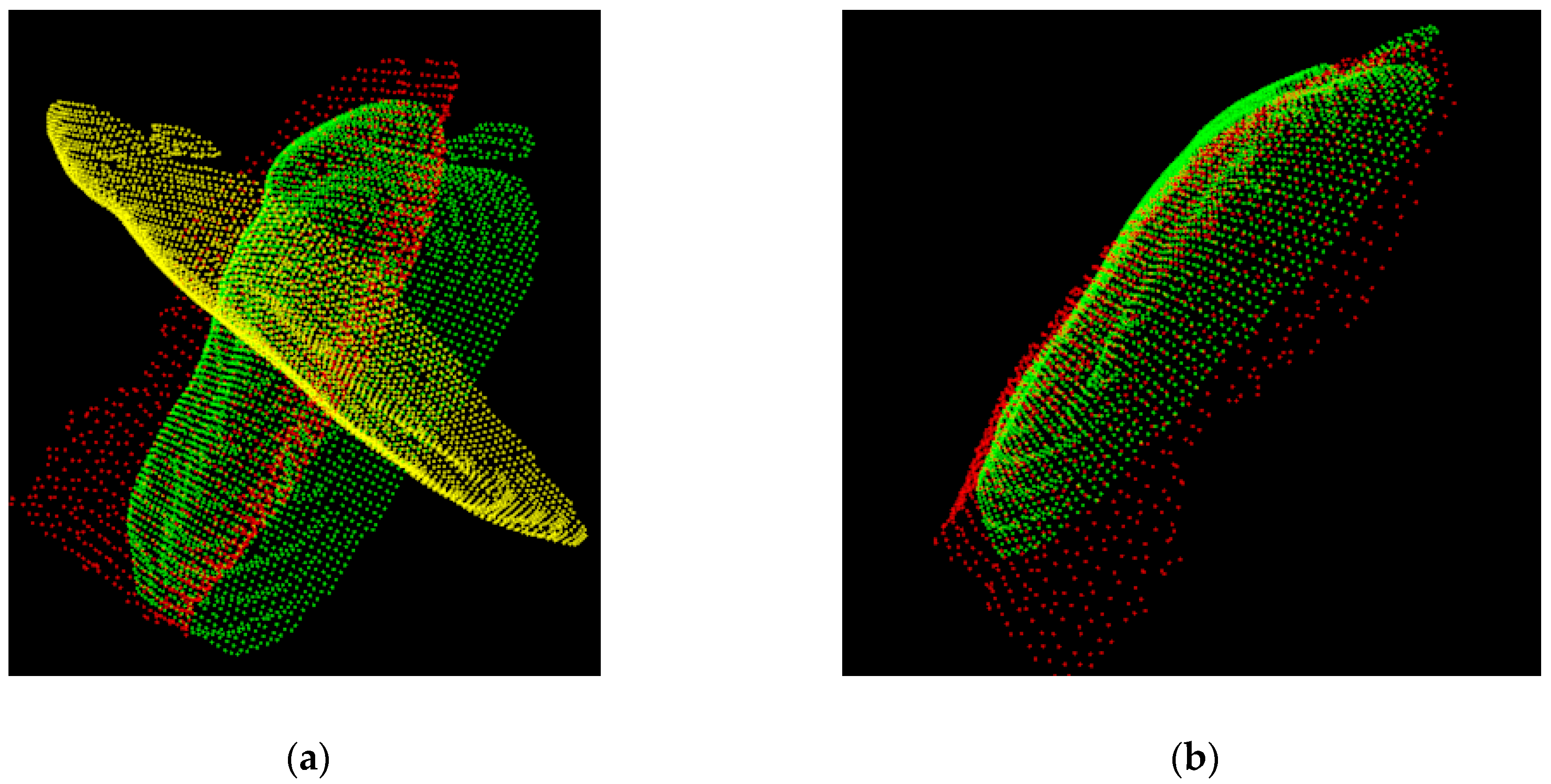

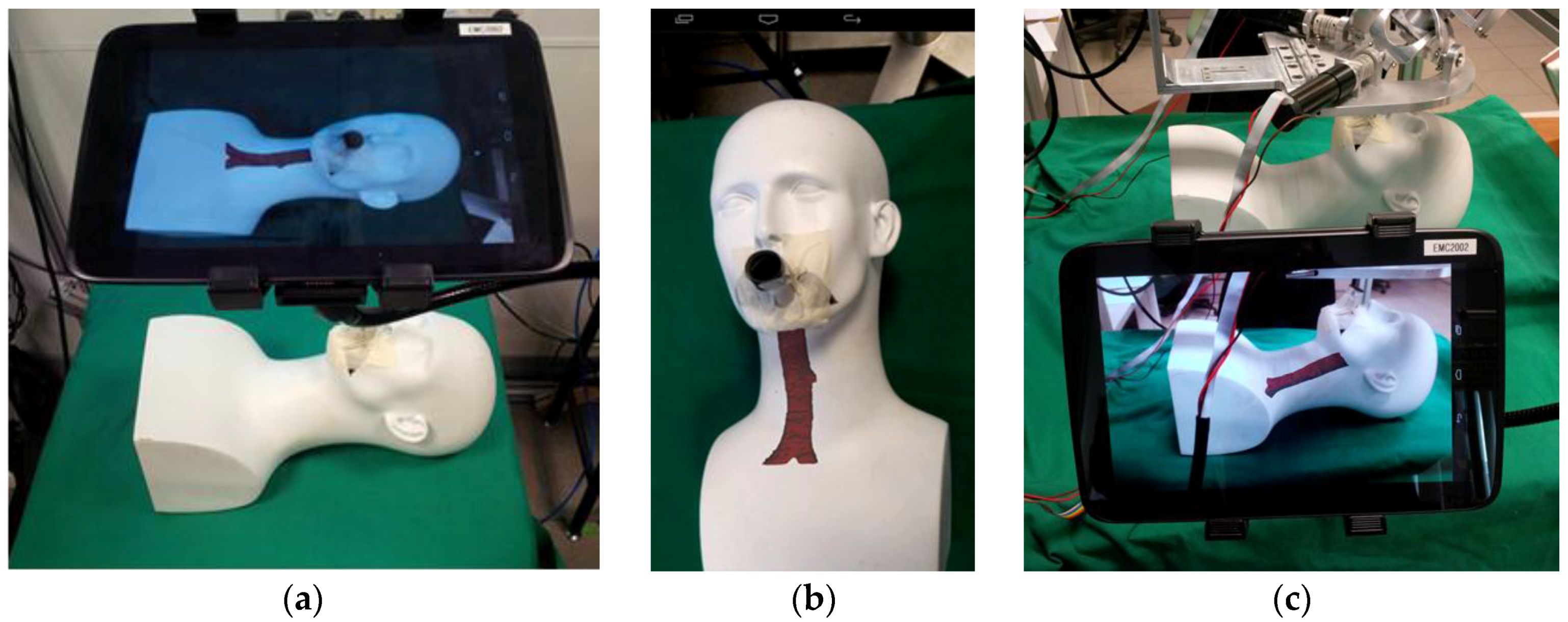

2.2. Patient Registration

2.2.1. Three-dimensional Model Representation

2.2.2. Registration between the Point Cloud and the 3D Model

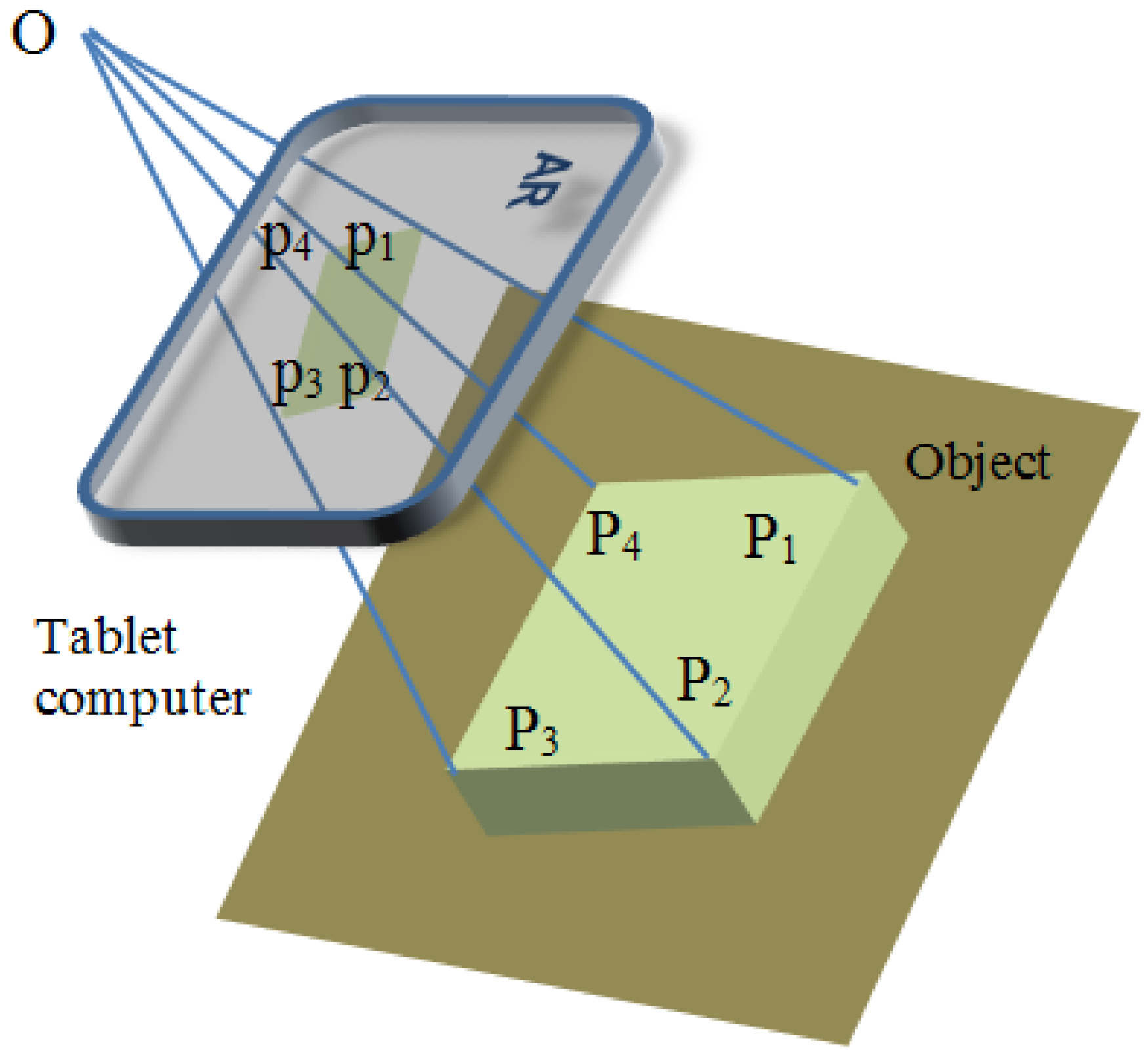

2.3. Augmented Reality Pose Estimation

2.3.1. Feature Detection and Tracking

2.3.2. AR Pose Estimation and Update

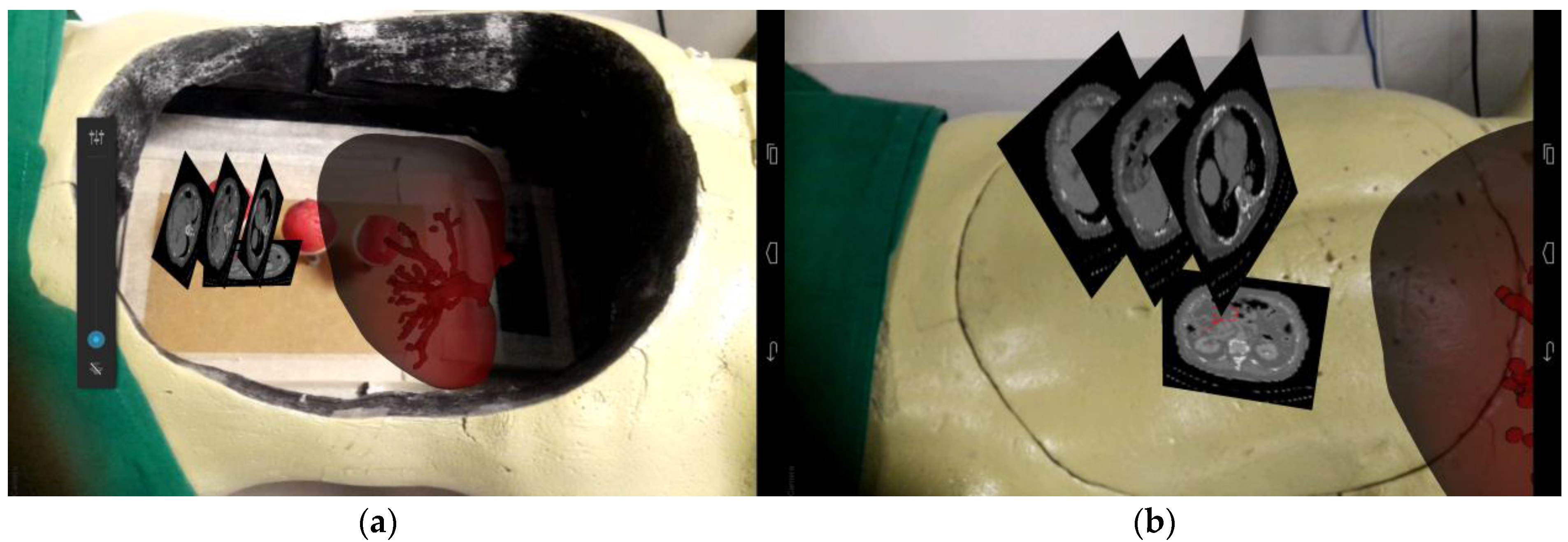

2.4. Interactive Surgical Guidance

2.4.1. Touch Control for 3D AR Object Manipulation

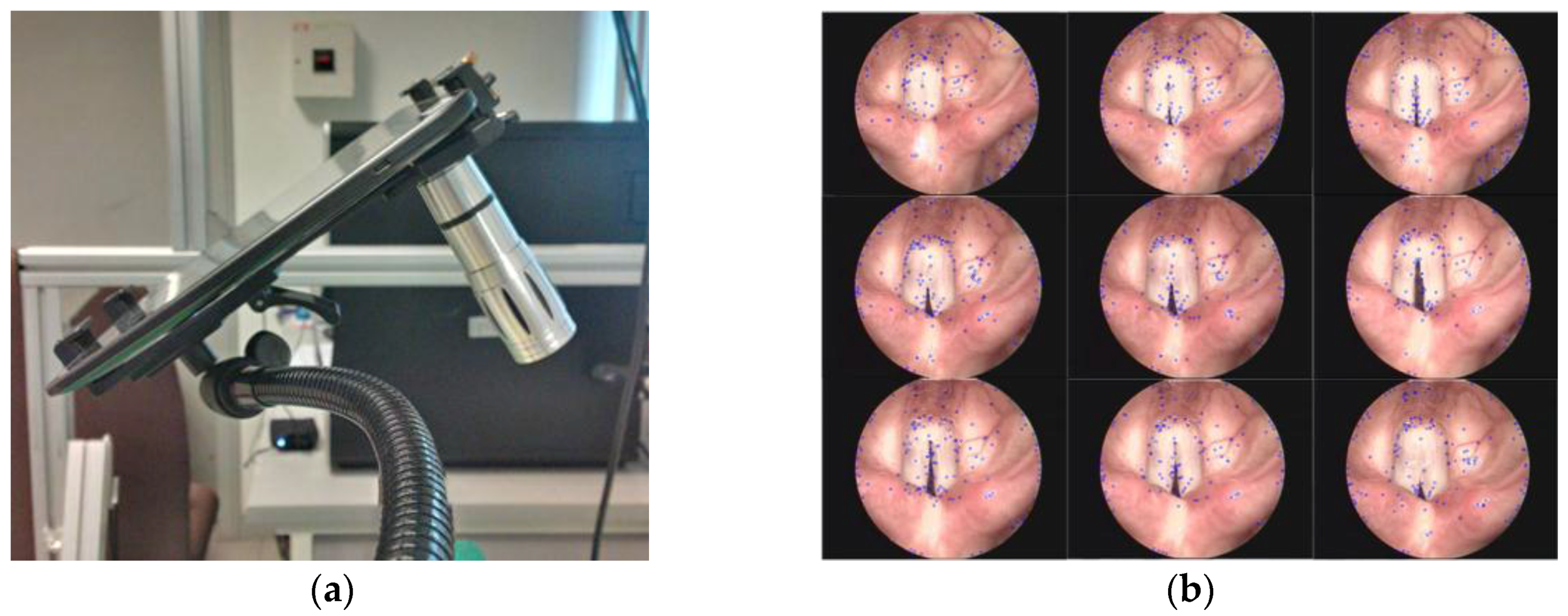

2.4.2. Motion Tracking and Depth Integration

3. Results

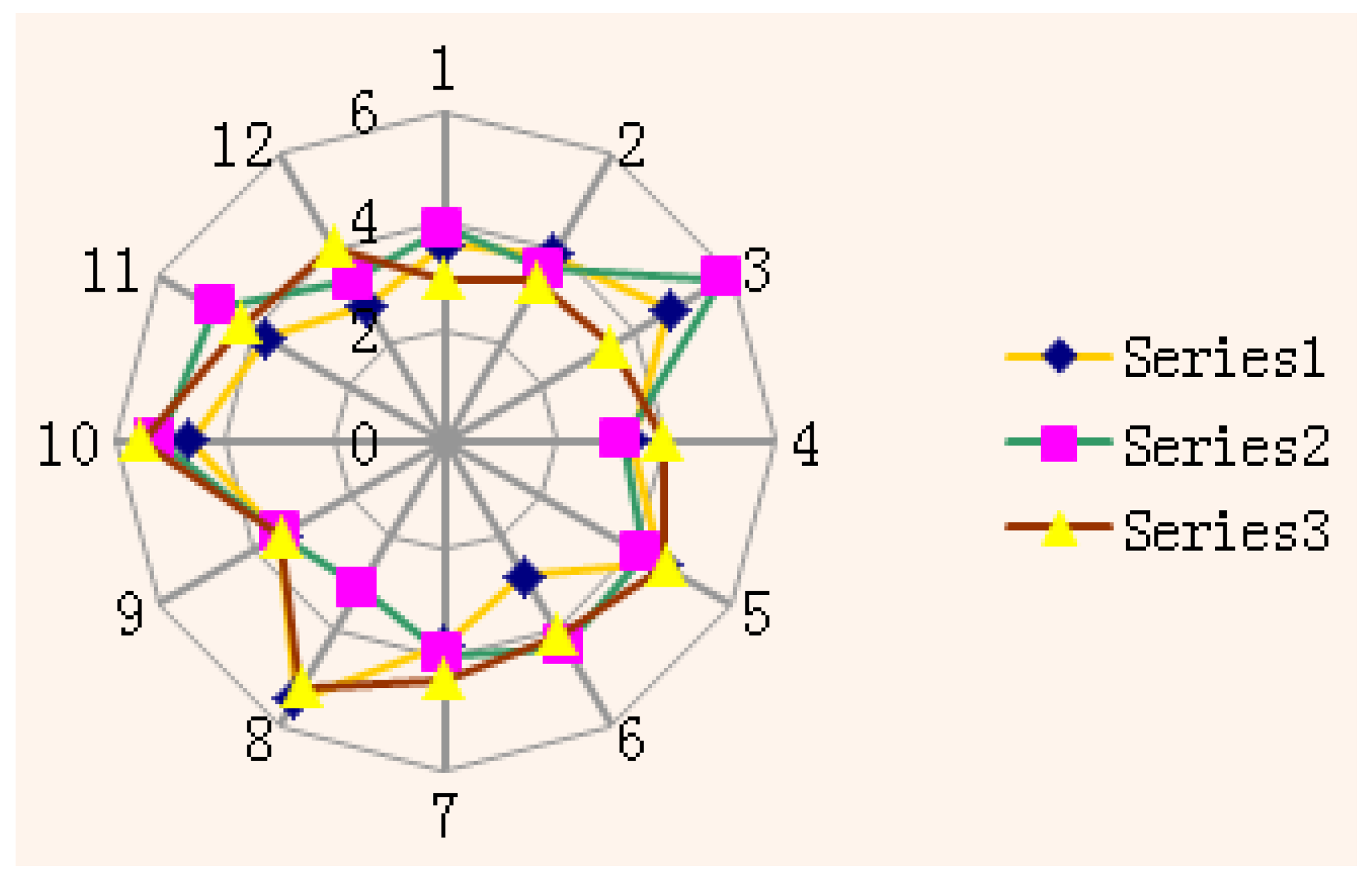

3.1. System Calibration

3.2. AR Display for Interactive Visual Guidance

4. Discussion

5. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wen, R.; Chng, C.-B.; Chui, C.-K. Augmented Reality Guidance with Multimodality Imaging Data and Depth-Perceived Interaction for Robot-Assisted Surgery. Robotics 2017, 6, 13. https://doi.org/10.3390/robotics6020013
