Research on Feature Extraction Method of Indoor Visual Positioning Image Based on Area Division of Foreground and Background

Abstract

1. Introduction

2. Basic Concepts

2.1. Features of the Image

2.2. Features Matching

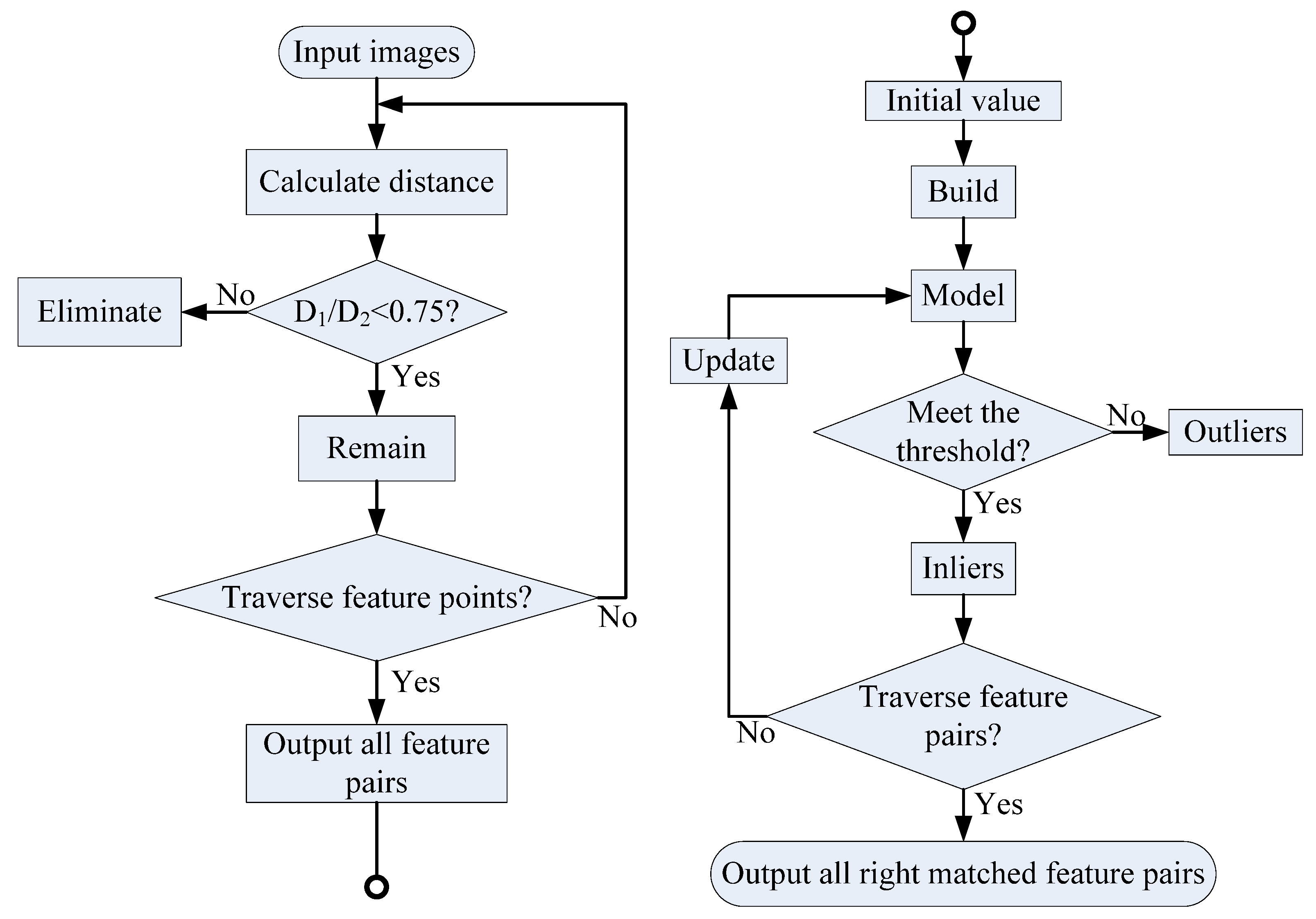

2.2.1. FLANN

2.2.2. RANSAC

2.3. Open Source Computer Vision Library

3. Division of Foreground and Background Area and Feature Extraction

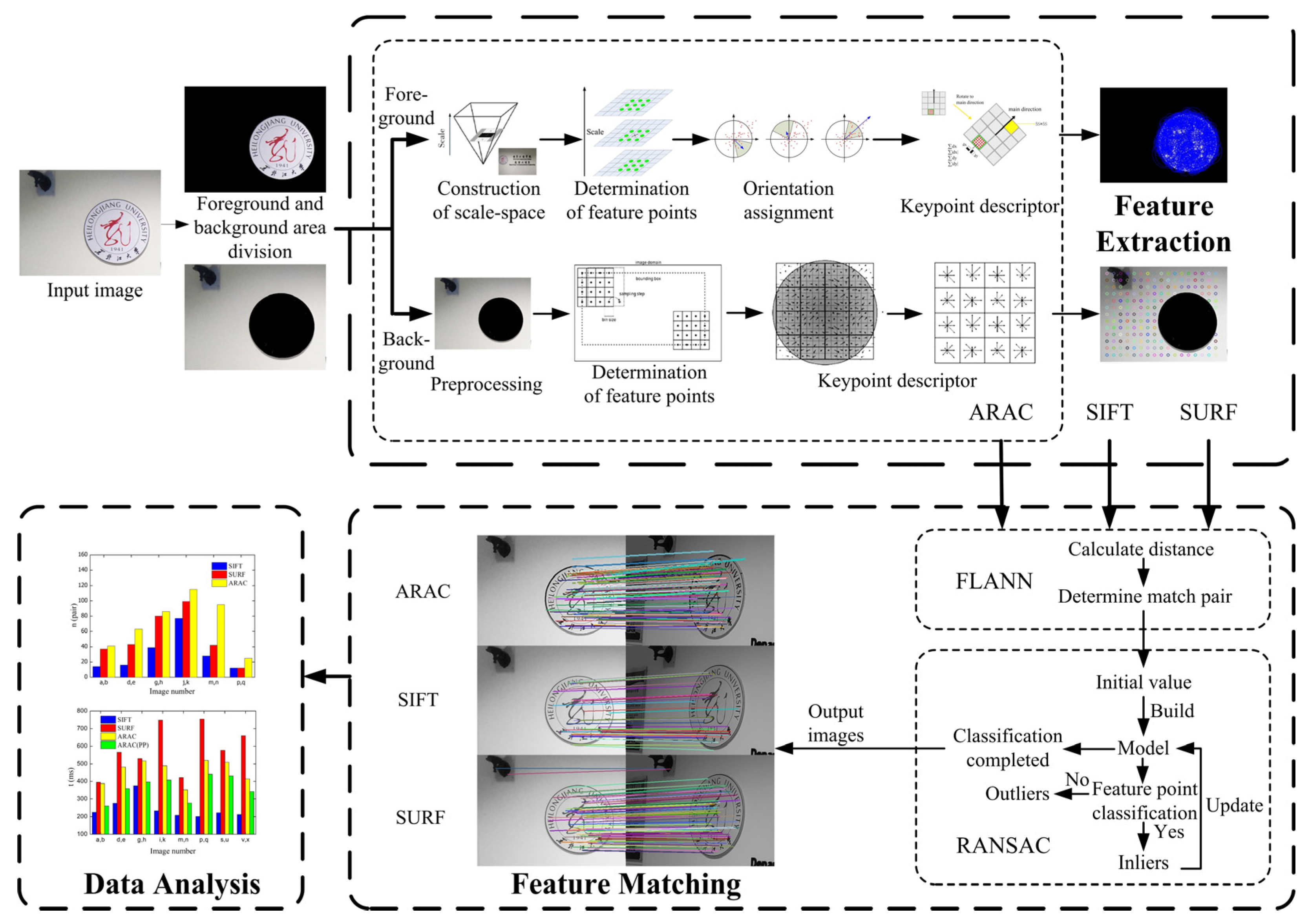

3.1. Feature Extraction Model Construction

- This feature extraction model takes the corridor on the seventh floor of the experimental building of Heilongjiang University as an example. The first step is to read, in Visual Studio Code, the image from which features are to be extracted.

- GrabCut in OpenCV is used to adaptively divide the input image into a foreground area and a background area, which are then processed separately.

- In the foreground area, the proposed method first builds a scale-space and then compares the response value of each candidate feature point with the Hessian matrix threshold to determine the feature points. It then calculates the Haar wavelet response to determine the main direction and finally generates the 64-dimensional feature descriptors of the foreground area.

- In the background area, ARAC first preprocesses the image to limit its size and divides the background area into grids of equal size. It then extracts features in each grid cell and finally generates the 128-dimensional feature descriptors of the background area.

- This completes the feature extraction of the Heilongjiang University badge in the foreground area of the corridor and of the wall and lamp in the background area.
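The equal-size grid division of the background area can be sketched as follows. This is a minimal illustration, not the authors' implementation: the helper `grid_cells`, the `Cell` type, and the 4 × 4 grid on a 480 × 640 image are hypothetical choices made for the example, since the steps above do not state the grid size.

```python
from typing import List, NamedTuple


class Cell(NamedTuple):
    """Pixel bounds of one grid cell: rows [top, bottom), columns [left, right)."""
    top: int
    bottom: int
    left: int
    right: int


def grid_cells(height: int, width: int, rows: int, cols: int) -> List[Cell]:
    """Tile a height x width image with rows * cols cells (hypothetical helper).

    Integer division spreads any remainder, so cell sizes differ by at most
    one pixel when the image size is not a multiple of the grid size.
    """
    if not (0 < rows <= height and 0 < cols <= width):
        raise ValueError("rows and cols must be positive and fit inside the image")
    cells = []
    for r in range(rows):
        top, bottom = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            left, right = c * width // cols, (c + 1) * width // cols
            cells.append(Cell(top, bottom, left, right))
    return cells


if __name__ == "__main__":
    # Assumed example: a 480 x 640 background image split into a 4 x 4 grid.
    for cell in grid_cells(480, 640, rows=4, cols=4):
        print(cell)
```

Each cell's bounds could then be used to restrict a feature detector to that region, so that features are extracted grid by grid as described in the background step.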

3.2. Adaptive Region Division

3.3. Feature Extraction in Foreground Area

3.3.1. Introduction of Calculation Template

3.3.2. Construction of Scale-Space

3.3.3. Determination of Foreground Feature Points

3.3.4. Foreground Feature Point Descriptor

3.4. Feature Extraction in Background Area

3.4.1. Image Background Preprocessing

3.4.2. Determination of Background Feature Points

3.4.3. Background Feature Point Descriptor

4. Match Test and Performance Analysis

4.1. Match Condition Construction

4.2. Feature Extraction Performance Analysis

4.2.1. Test Results of Each Feature Point Detection Method

4.2.2. Feature Extraction Performance Analysis

4.3. Feature Matching Effect Comparison

4.3.1. Matching Effect under Affine and Illumination Conditions

4.3.2. Matching Effect under Large-Angle Affine and Illumination

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Group | Detection Method | Number of Feature Points | Time (ms) |
|---|---|---|---|
| (i) | SIFT | 40 | 199.13 |
| (i) | SURF | 232 | 197.42 |
| (i) | ARAC | 165 | 190.86 |
| (i) | ARAC(PP) | 165 | 174.31 |
| (ii) | SIFT | 166 | 141.82 |
| (ii) | SURF | 1256 | 283.19 |
| (ii) | ARAC | 897 | 241.24 |
| (ii) | ARAC(PP) | 897 | 218.73 |
| (iii) | SIFT | 342 | 200.76 |
| (iii) | SURF | 1581 | 264.94 |
| (iii) | ARAC | 1381 | 258.36 |
| (iii) | ARAC(PP) | 1381 | 229.09 |
| (iv) | SIFT | 479 | 216.43 |
| (iv) | SURF | 1116 | 240.48 |
| (iv) | ARAC | 658 | 239.17 |
| (iv) | ARAC(PP) | 658 | 174.71 |
| (v) | SIFT | 337 | 195.45 |
| (v) | SURF | 890 | 234.63 |
| (v) | ARAC | 380 | 226.53 |
| (v) | ARAC(PP) | 380 | 163.54 |
| (vi) | SIFT | 213 | 168.99 |
| (vi) | SURF | 997 | 247.19 |
| (vi) | ARAC | 633 | 256.07 |
| (vi) | ARAC(PP) | 633 | 192.58 |
| (vii) | SIFT | 249 | 181.26 |
| (vii) | SURF | 1075 | 244.54 |
| (vii) | ARAC | 738 | 276.22 |
| (vii) | ARAC(PP) | 738 | 209.97 |
| (viii) | SIFT | 193 | 138.67 |
| (viii) | SURF | 1257 | 256.58 |
| (viii) | ARAC | 718 | 258.19 |
| (viii) | ARAC(PP) | 718 | 197.23 |

| Matching Pictures | Feature Extraction Method | Number of Feature Points (Left) | Number of Feature Points (Right) | Number of Correct Matching Pairs | Dimension of Feature | Extraction Time (ms) | Matching Time (ms) | Total Time (ms) |
|---|---|---|---|---|---|---|---|---|
| a, b | SIFT | 40 | 29 | 14 | 128 | 208.76 | 16.01 | 224.77 |
| a, b | SURF | 232 | 218 | 37 | 64 | 378.19 | 16.67 | 394.86 |
| a, b | ARAC | 465 | 423 | 41 | 64 + 128 | 371.50 | 16.44 | 387.94 |
| a, b | ARAC(PP) | 465 | 423 | 41 | 64 + 128 | 253.49 | 8.00 | 261.47 |
| d, e | SIFT | 166 | 91 | 16 | 128 | 261.03 | 16.24 | 277.27 |
| d, e | SURF | 1256 | 650 | 43 | 64 | 510.73 | 55.64 | 566.37 |
| d, e | ARAC | 897 | 600 | 63 | 64 + 128 | 437.86 | 44.64 | 482.51 |
| d, e | ARAC(PP) | 897 | 600 | 63 | 64 + 128 | 322.60 | 34.98 | 357.58 |
| g, h | SIFT | 342 | 360 | 39 | 128 | 342.76 | 31.98 | 374.74 |
| g, h | SURF | 1581 | 1619 | 80 | 64 | 459.43 | 70.46 | 529.89 |
| g, h | ARAC | 1381 | 1315 | 86 | 64 + 128 | 446.99 | 69.73 | 516.72 |
| g, h | ARAC(PP) | 1381 | 1315 | 86 | 64 + 128 | 344.30 | 52.23 | 396.53 |
| j, k | SIFT | 479 | 379 | 77 | 128 | 177.47 | 46.20 | 233.67 |
| j, k | SURF | 1116 | 1065 | 99 | 64 | 682.24 | 65.63 | 747.87 |
| j, k | ARAC | 658 | 705 | 115 | 64 + 128 | 471.74 | 51.32 | 523.06 |
| j, k | ARAC(PP) | 658 | 705 | 115 | 64 + 128 | 368.20 | 38.83 | 407.03 |
| m, n | SIFT | 337 | 147 | 28 | 128 | 180.27 | 27.58 | 207.85 |
| m, n | SURF | 890 | 410 | 42 | 64 | 376.11 | 44.77 | 420.88 |
| m, n | ARAC | 380 | 345 | 95 | 64 + 128 | 370.59 | 22.18 | 392.77 |
| m, n | ARAC(PP) | 380 | 345 | 95 | 64 + 128 | 266.62 | 11.79 | 278.41 |
| p, q | SIFT | 213 | 129 | 12 | 128 | 159.24 | 42.64 | 201.88 |
| p, q | SURF | 997 | 718 | 12 | 64 | 619.95 | 134.65 | 754.60 |
| p, q | ARAC | 633 | 517 | 25 | 64 + 128 | 511.58 | 47.99 | 559.57 |
| p, q | ARAC(PP) | 633 | 517 | 25 | 64 + 128 | 403.91 | 36.49 | 440.40 |
| s, t | SIFT | 249 | 185 | 15 | 128 | 172.63 | 49.57 | 222.20 |
| s, t | SURF | 1075 | 719 | 42 | 64 | 499.61 | 78.15 | 577.76 |
| s, t | ARAC | 738 | 827 | 57 | 64 + 128 | 499.04 | 56.27 | 555.31 |
| s, t | ARAC(PP) | 738 | 827 | 57 | 64 + 128 | 386.00 | 45.47 | 431.47 |
| v, w | SIFT | 193 | 220 | 27 | 128 | 190.16 | 22.00 | 212.16 |
| v, w | SURF | 1257 | 883 | 38 | 64 | 552.19 | 106.86 | 659.05 |
| v, w | ARAC | 718 | 492 | 92 | 64 + 128 | 416.60 | 43.31 | 459.91 |
| v, w | ARAC(PP) | 718 | 492 | 92 | 64 + 128 | 310.67 | 30.98 | 341.65 |

| Matching Pictures | Feature Extraction Method | Number of Feature Points (Left) | Number of Feature Points (Right) | Number of Correct Matching Pairs | Dimension of Feature | Extraction Time (ms) | Matching Time (ms) | Total Time (ms) |
|---|---|---|---|---|---|---|---|---|
| a, c | SIFT | 40 | 55 | 21 | 128 | 204.48 | 13.25 | 217.73 |
| a, c | SURF | 232 | 444 | 57 | 64 | 380.90 | 23.99 | 404.89 |
| a, c | ARAC | 465 | 389 | 91 | 64 + 128 | 370.96 | 19.97 | 390.93 |
| a, c | ARAC(PP) | 465 | 389 | 91 | 64 + 128 | 255.85 | 12.00 | 267.85 |
| d, f | SIFT | 166 | 114 | 17 | 128 | 302.13 | 16.99 | 319.12 |
| d, f | SURF | 1256 | 395 | 64 | 64 | 520.91 | 46.98 | 567.89 |
| d, f | ARAC | 897 | 261 | 65 | 64 + 128 | 401.43 | 40.48 | 441.91 |
| d, f | ARAC(PP) | 897 | 261 | 65 | 64 + 128 | 288.00 | 25.98 | 313.98 |
| g, i | SIFT | 342 | 450 | 48 | 128 | 400.15 | 31.65 | 431.80 |
| g, i | SURF | 1581 | 945 | 122 | 64 | 411.69 | 57.47 | 469.16 |
| g, i | ARAC | 1381 | 625 | 151 | 64 + 128 | 385.70 | 59.97 | 442.67 |
| g, i | ARAC(PP) | 1381 | 625 | 151 | 64 + 128 | 344.30 | 50.99 | 395.29 |
| j, l | SIFT | 479 | 421 | 97 | 128 | 185.34 | 36.31 | 221.65 |
| j, l | SURF | 1116 | 761 | 124 | 64 | 576.36 | 55.30 | 631.66 |
| j, l | ARAC | 658 | 476 | 159 | 64 + 128 | 388.47 | 42.36 | 430.83 |
| j, l | ARAC(PP) | 658 | 476 | 159 | 64 + 128 | 288.48 | 29.15 | 317.63 |
| m, o | SIFT | 337 | 218 | 21 | 128 | 187.75 | 32.12 | 219.87 |
| m, o | SURF | 890 | 620 | 39 | 64 | 457.04 | 49.57 | 506.61 |
| m, o | ARAC | 380 | 308 | 71 | 64 + 128 | 372.93 | 22.78 | 395.71 |
| m, o | ARAC(PP) | 380 | 308 | 71 | 64 + 128 | 264.63 | 11.79 | 276.42 |
| p, r | SIFT | 213 | 143 | 11 | 128 | 187.33 | 31.96 | 219.29 |
| p, r | SURF | 997 | 665 | 28 | 64 | 615.67 | 132.29 | 747.96 |
| p, r | ARAC | 633 | 389 | 39 | 64 + 128 | 386.01 | 42.97 | 428.98 |
| p, r | ARAC(PP) | 633 | 389 | 39 | 64 + 128 | 291.82 | 30.65 | 322.47 |
| s, u | SIFT | 249 | 165 | 14 | 128 | 166.37 | 29.78 | 196.15 |
| s, u | SURF | 1075 | 607 | 39 | 64 | 482.56 | 95.36 | 577.92 |
| s, u | ARAC | 738 | 704 | 74 | 64 + 128 | 466.31 | 58.08 | 524.39 |
| s, u | ARAC(PP) | 738 | 704 | 74 | 64 + 128 | 357.96 | 45.14 | 403.10 |
| v, x | SIFT | 193 | 244 | 24 | 128 | 176.11 | 23.44 | 199.55 |
| v, x | SURF | 1257 | 733 | 41 | 64 | 545.57 | 113.28 | 658.85 |
| v, x | ARAC | 718 | 494 | 63 | 64 + 128 | 460.16 | 55.93 | 516.09 |
| v, x | ARAC(PP) | 718 | 494 | 63 | 64 + 128 | 354.21 | 43.60 | 397.81 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, P.; Qin, D.; Han, B.; Ma, L.; Berhane, T.M. Research on Feature Extraction Method of Indoor Visual Positioning Image Based on Area Division of Foreground and Background. ISPRS Int. J. Geo-Inf. 2021, 10, 402. https://doi.org/10.3390/ijgi10060402
