An Efficient, Platform-Independent Map Rendering Framework for Mobile Augmented Reality

Abstract

1. Introduction

2. Related Work

2.1. Augmented Reality Visualization

2.2. Augmented Reality and GIS Visualization

3. Materials and Methods

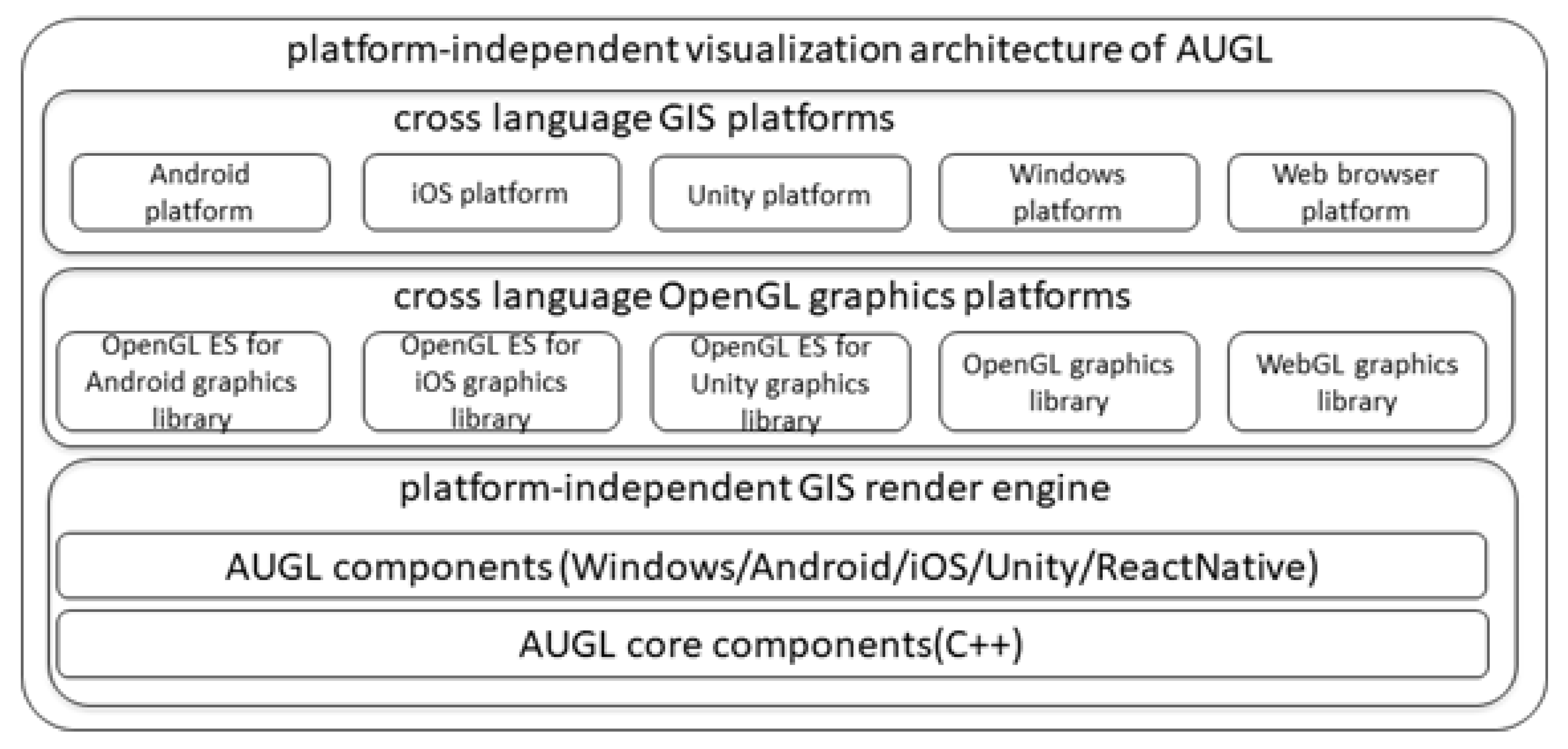

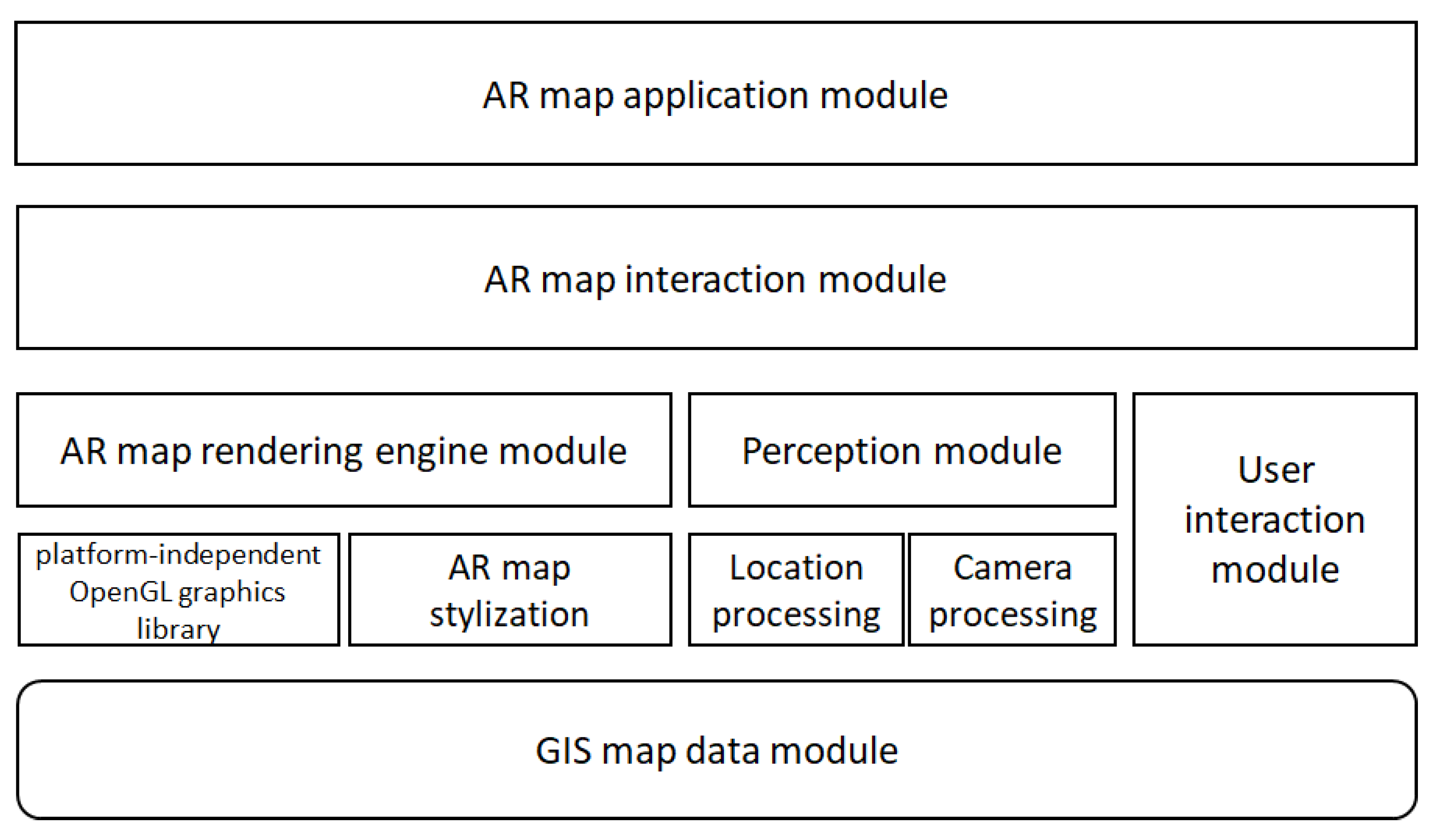

3.1. Architecture of the Augmented-Reality Universal Graphics Library Engine

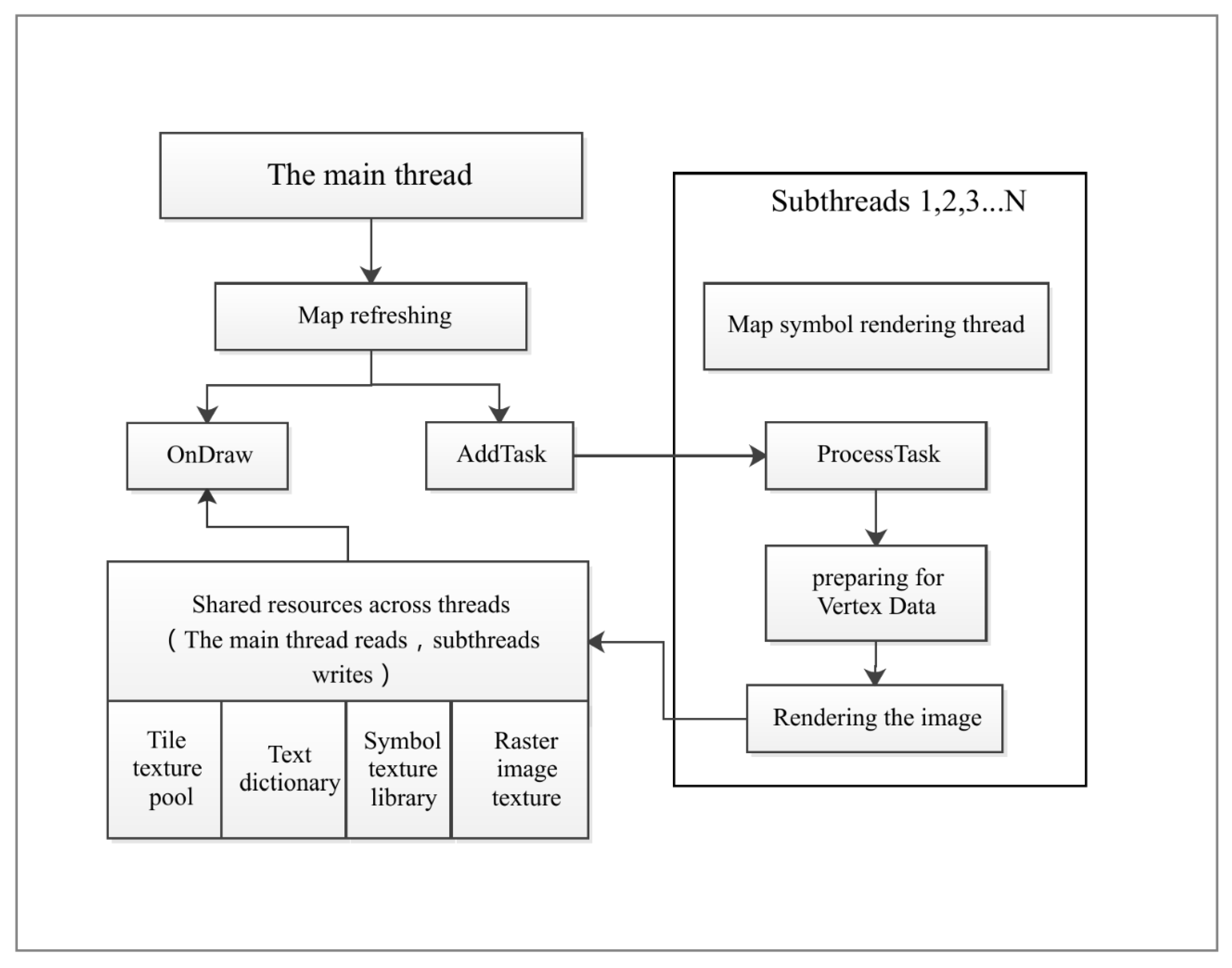

3.2. High-Performance AR-GIS Rendering Engine Parallel Computation

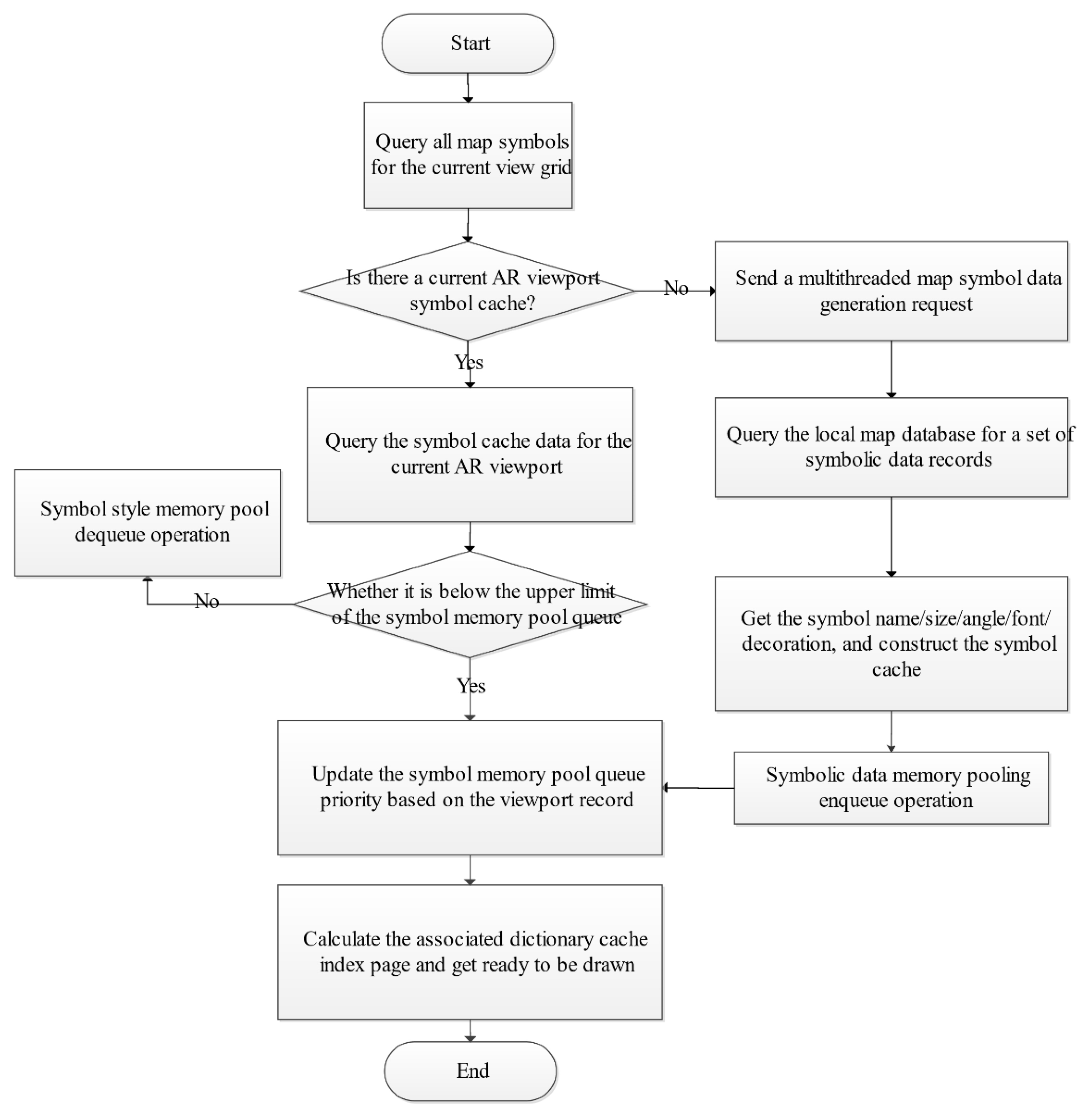

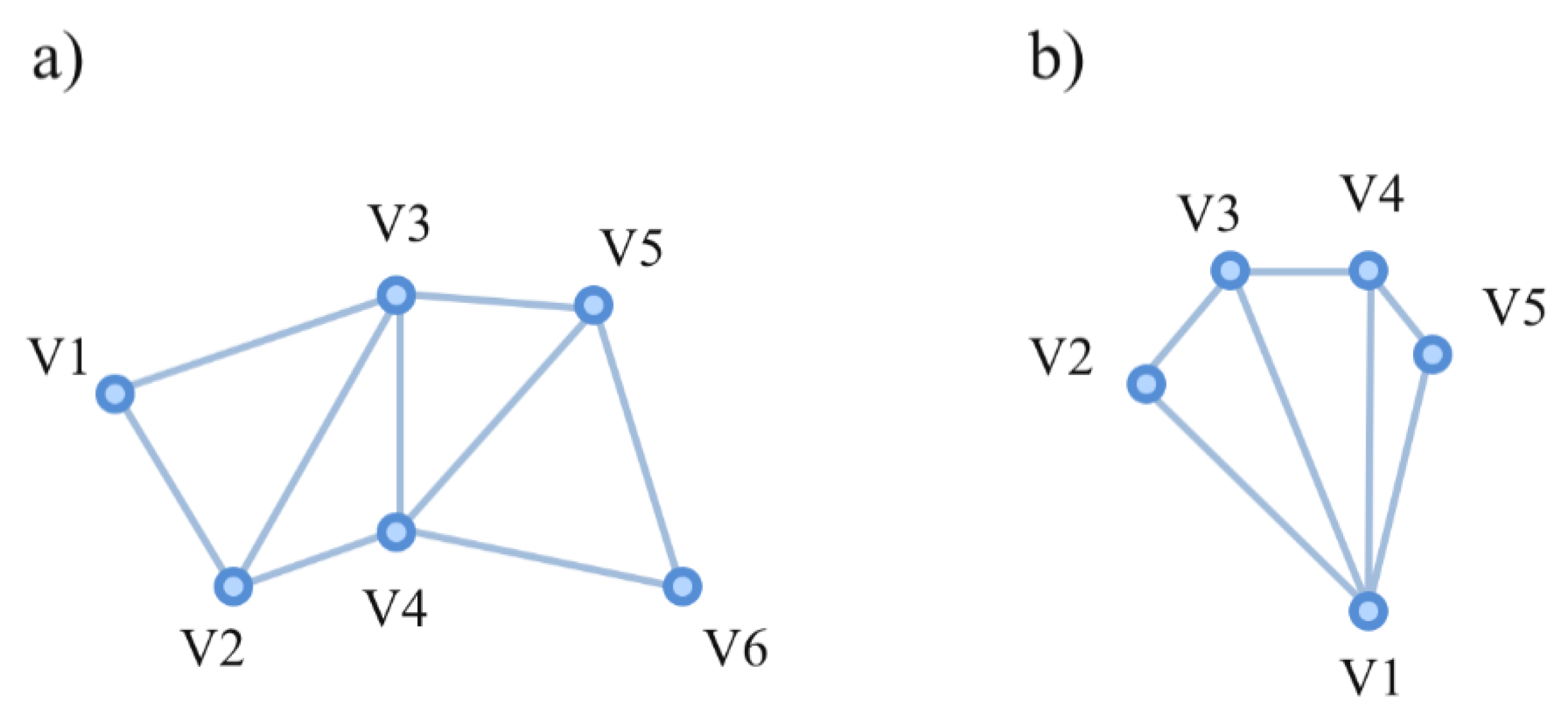

3.2.1. AR-GIS Map Symbol Parallel Processing Framework

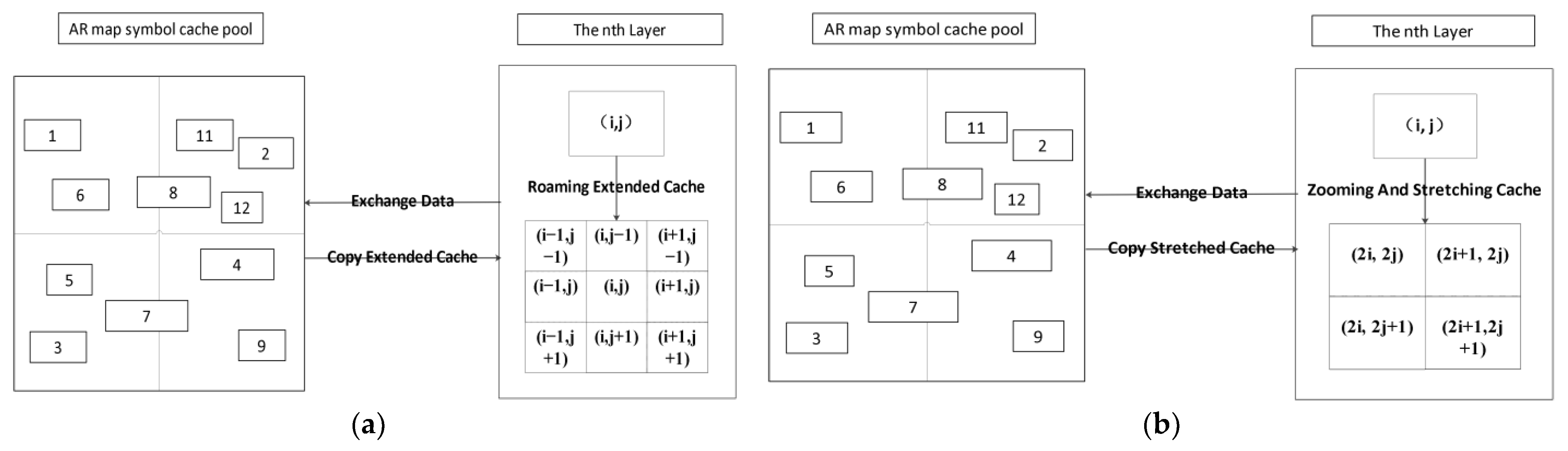

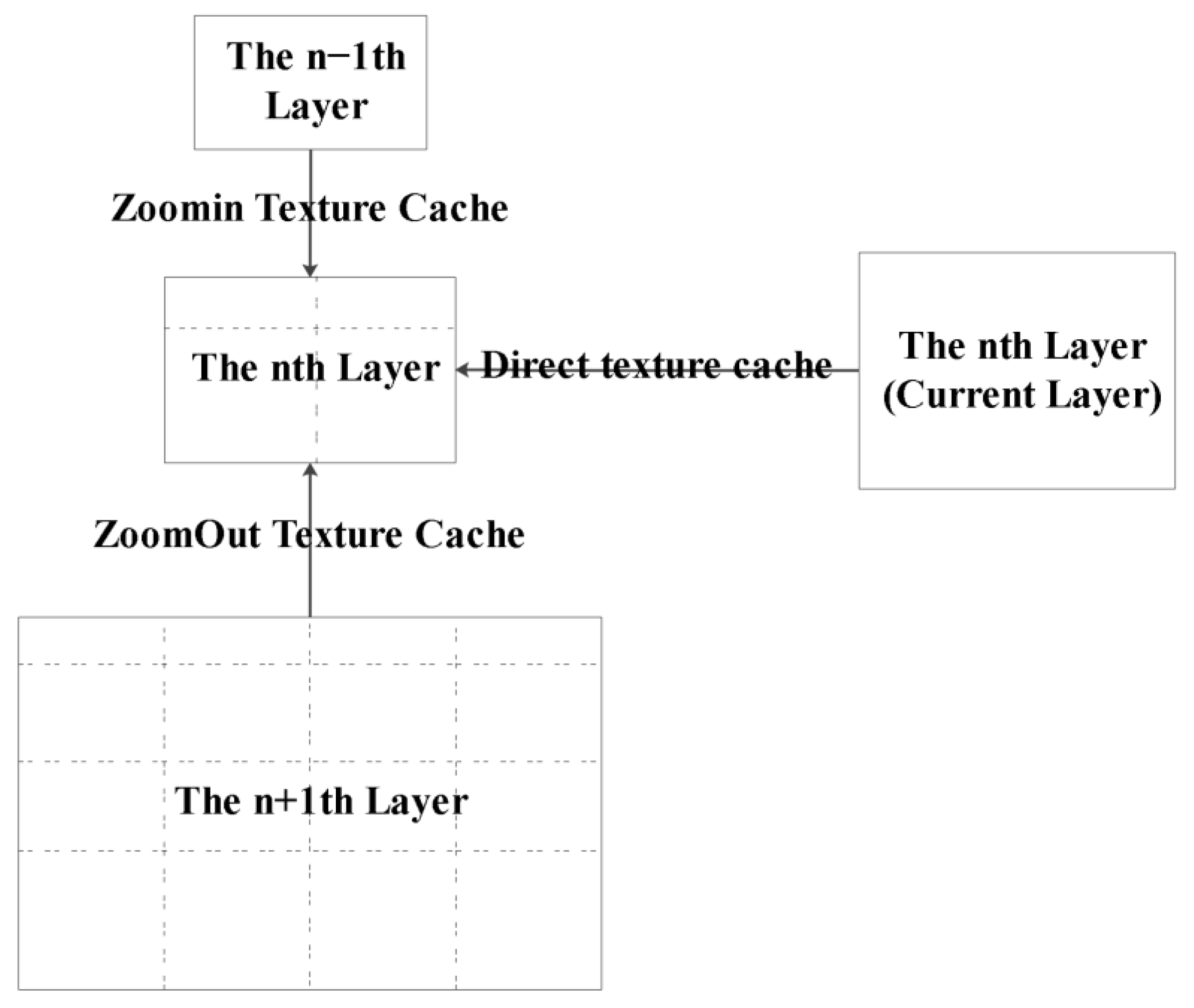

3.2.2. High-Speed AR-GIS Map Symbol Cache

- (1) The user browses the map to the nth layer and sends a display request to the system.

- (2) The system automatically traverses all images of the nth layer in the cache pool and transfers them directly to graphics memory for display. Blank areas are overwritten so that the latest images for the current area are shown.

- (3) The system traverses the images of the (n + 1)th layer in the cache pool. If an image intersects the current view area and has a scale greater than that of the current view area, its cached texture is stretched into graphics memory for display via texture mapping, overwriting the blank areas of the image.

- (4) The system traverses the images of the (n − 1)th layer in the cache pool. If an image intersects the current view area and has a scale less than that of the current view area, its cached texture is stretched into graphics memory for display via texture mapping, overwriting the blank areas of the image.

- (5) The system traverses all images of the nth layer again. All images that intersect the current view are copied to memory, replacing any images from the (n + 1)th or (n − 1)th layer, so that the final displayed image is up to date and contains no stretched images.
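
The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the AUGL implementation: the names `Rect`, `CachedImage`, `provisional_frame`, and `final_frame` are hypothetical, the cache pool is modelled as a plain list, and "overwriting blank areas" is simplified to painter's order (stretched neighbour-layer images are drawn first, nth-layer images on top).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rect:
    """Axis-aligned extent in map coordinates (hypothetical helper)."""
    min_x: float
    min_y: float
    max_x: float
    max_y: float

    def intersects(self, other: "Rect") -> bool:
        return (self.min_x < other.max_x and other.min_x < self.max_x
                and self.min_y < other.max_y and other.min_y < self.max_y)


@dataclass(frozen=True)
class CachedImage:
    """One cached map image; field names are assumptions for this sketch."""
    name: str
    layer: int
    scale: float
    extent: Rect


# A draw command is (image name, "direct" or "stretched").
DrawCommand = Tuple[str, str]


def provisional_frame(pool: List[CachedImage], view: Rect,
                      view_scale: float, n: int) -> List[DrawCommand]:
    """Steps (2)-(4): show layer n at once, fill gaps from layers n+1 and n-1."""
    stretched: List[DrawCommand] = []
    # Step (3): layer n+1 images that intersect the view and have a larger scale.
    for img in pool:
        if img.layer == n + 1 and img.scale > view_scale and img.extent.intersects(view):
            stretched.append((img.name, "stretched"))
    # Step (4): layer n-1 images that intersect the view and have a smaller scale.
    for img in pool:
        if img.layer == n - 1 and img.scale < view_scale and img.extent.intersects(view):
            stretched.append((img.name, "stretched"))
    # Step (2): every layer-n image in the pool is displayed directly.
    direct = [(img.name, "direct") for img in pool if img.layer == n]
    # Painter's order: stretched images underneath, layer-n images on top.
    return stretched + direct


def final_frame(pool: List[CachedImage], view: Rect, n: int) -> List[DrawCommand]:
    """Step (5): only layer-n images that intersect the view remain."""
    return [(img.name, "direct") for img in pool
            if img.layer == n and img.extent.intersects(view)]


# Example: the view sits on layer 3; only part of it is cached at that layer.
view = Rect(0, 0, 10, 10)
pool = [
    CachedImage("layer3_left", 3, 1.0, Rect(0, 0, 5, 10)),
    CachedImage("layer4_right", 4, 2.0, Rect(5, 0, 10, 5)),
    CachedImage("layer2_wide", 2, 0.5, Rect(0, 0, 20, 20)),
    CachedImage("layer4_far", 4, 2.0, Rect(50, 50, 60, 60)),
]
print(provisional_frame(pool, view, view_scale=1.0, n=3))
print(final_frame(pool, view, n=3))
```

In this example the provisional frame borrows `layer4_right` and `layer2_wide` as stretched stand-ins, while `layer4_far` is skipped because it does not intersect the view. The final frame contains only nth-layer images.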

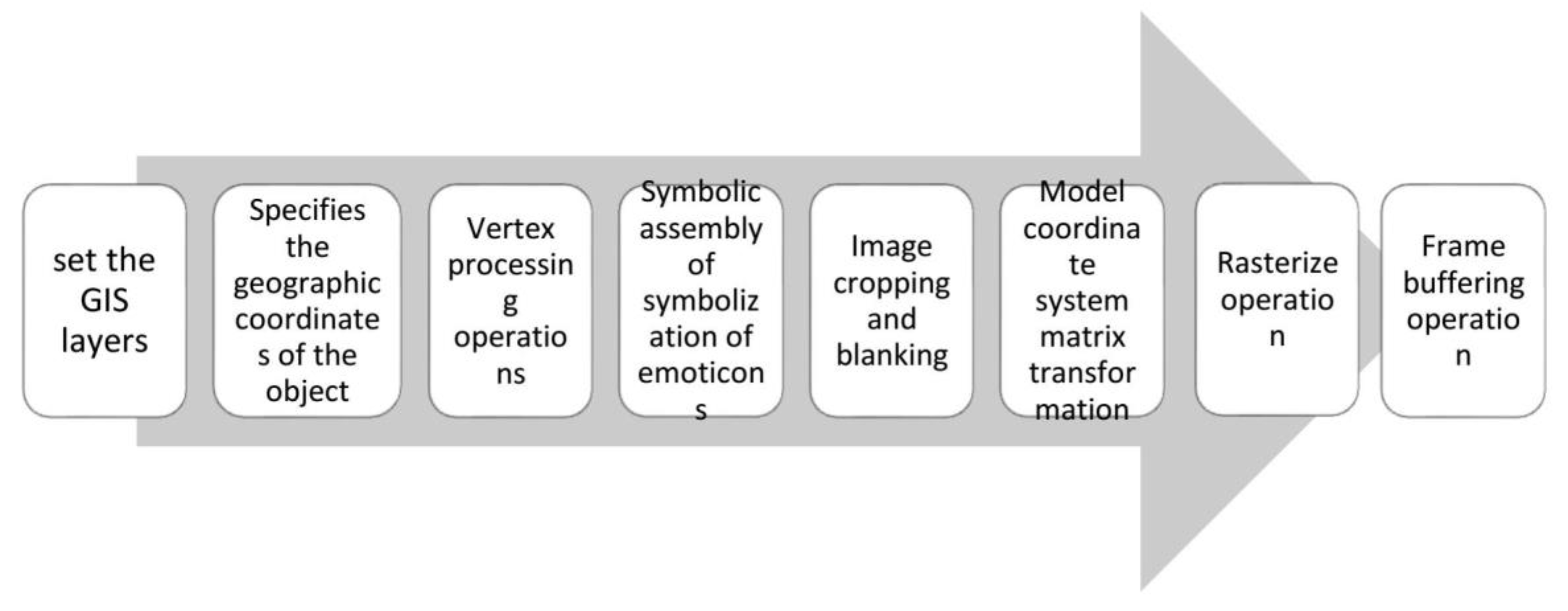

3.2.3. GPU-Based High-Speed Pre-Processing for OpenGL Render Objects (RO)

3.3. The AR-GIS Rendering Engine Map Symbolization Core

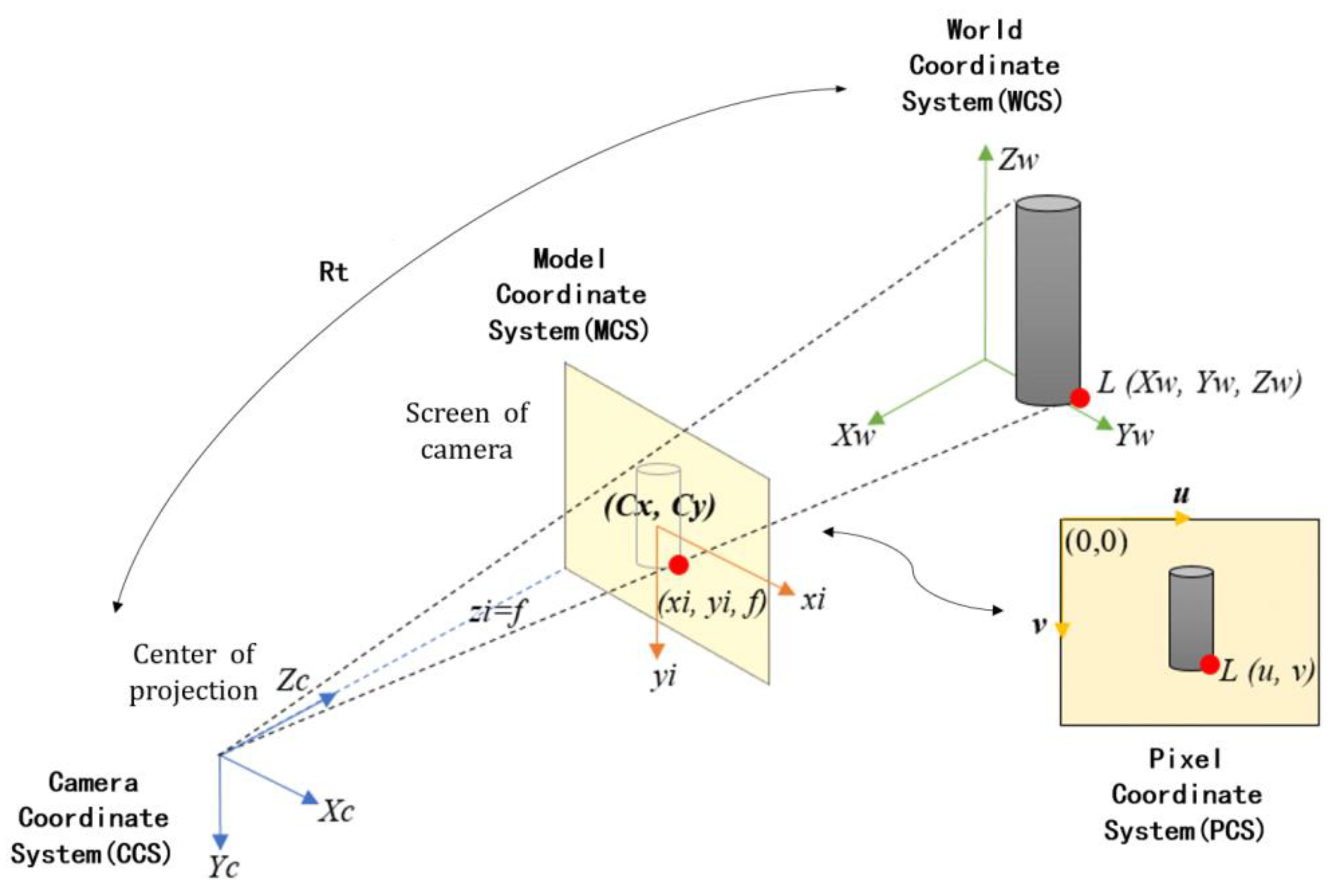

3.3.1. The AR-GIS Map Symbol Drawing Model

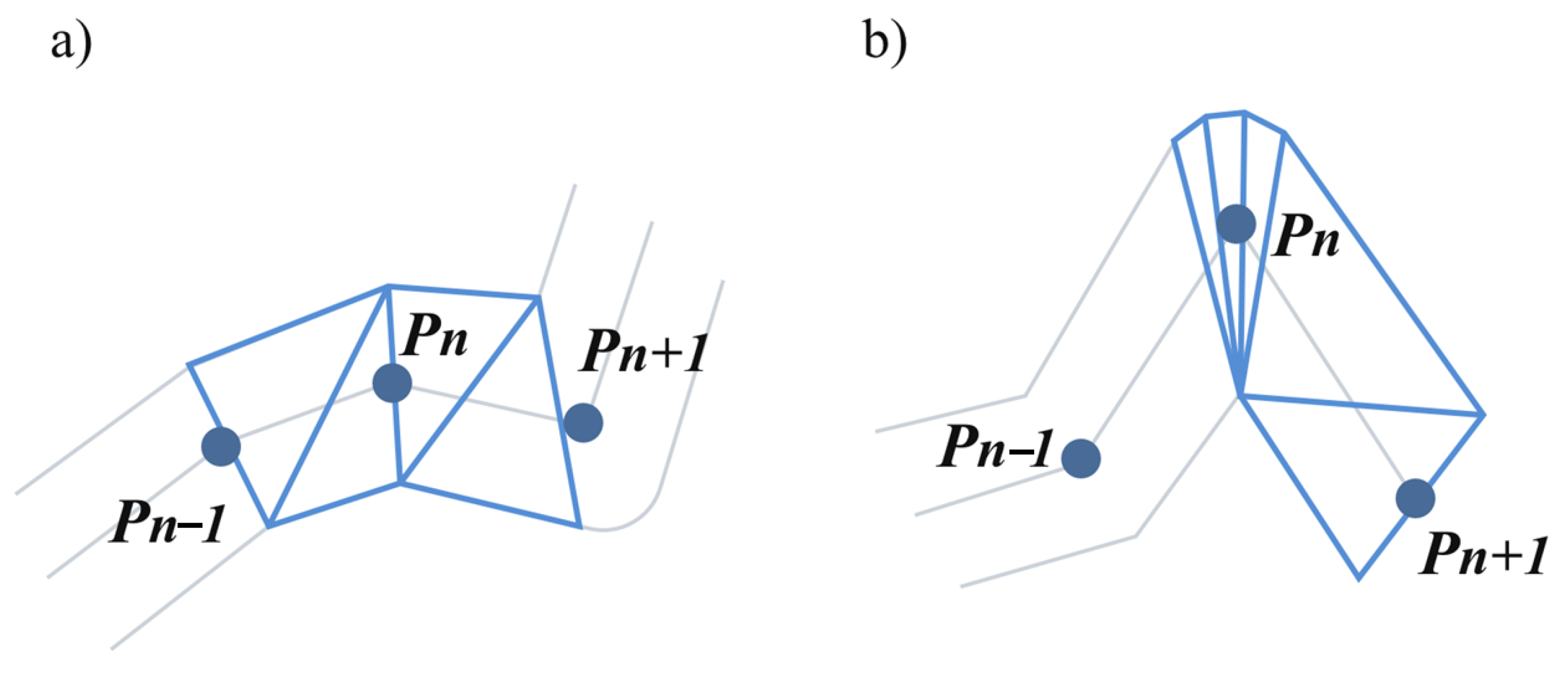

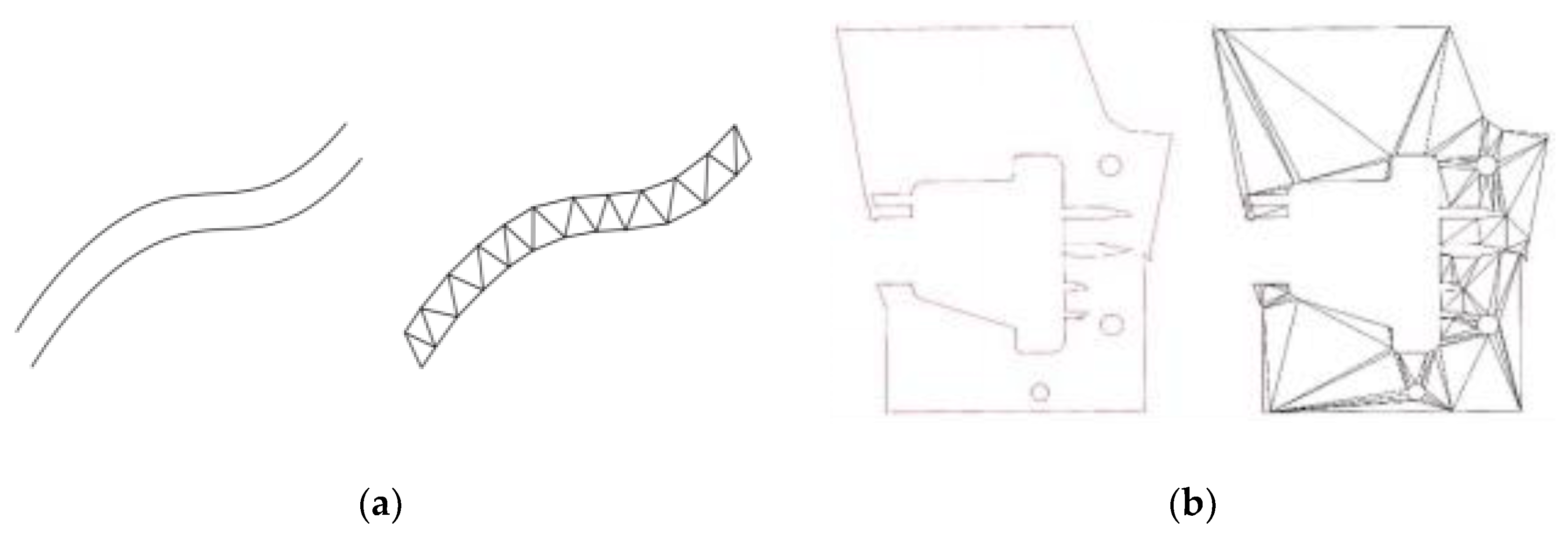

3.3.2. AR Vector Feature Triangulation Rendering

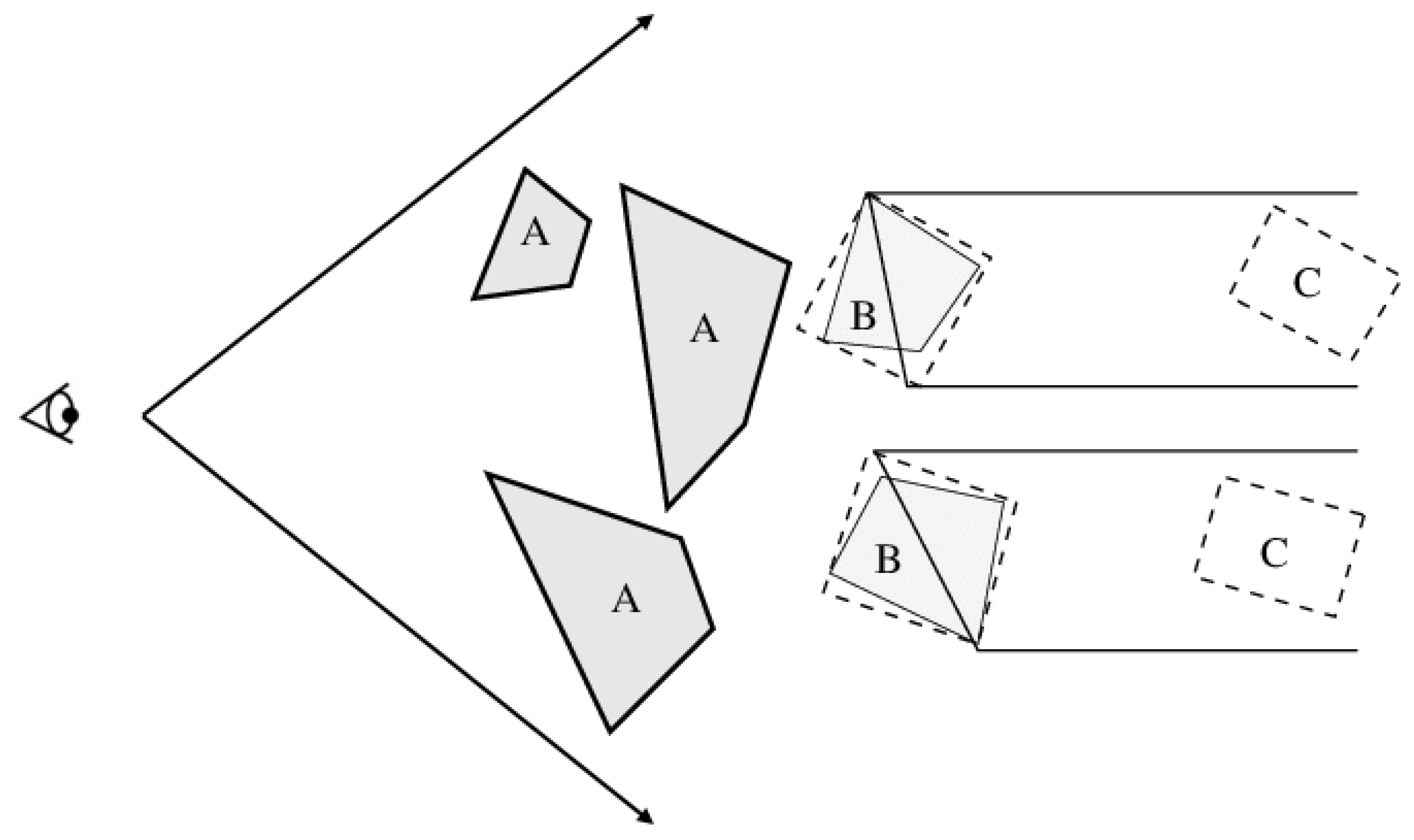

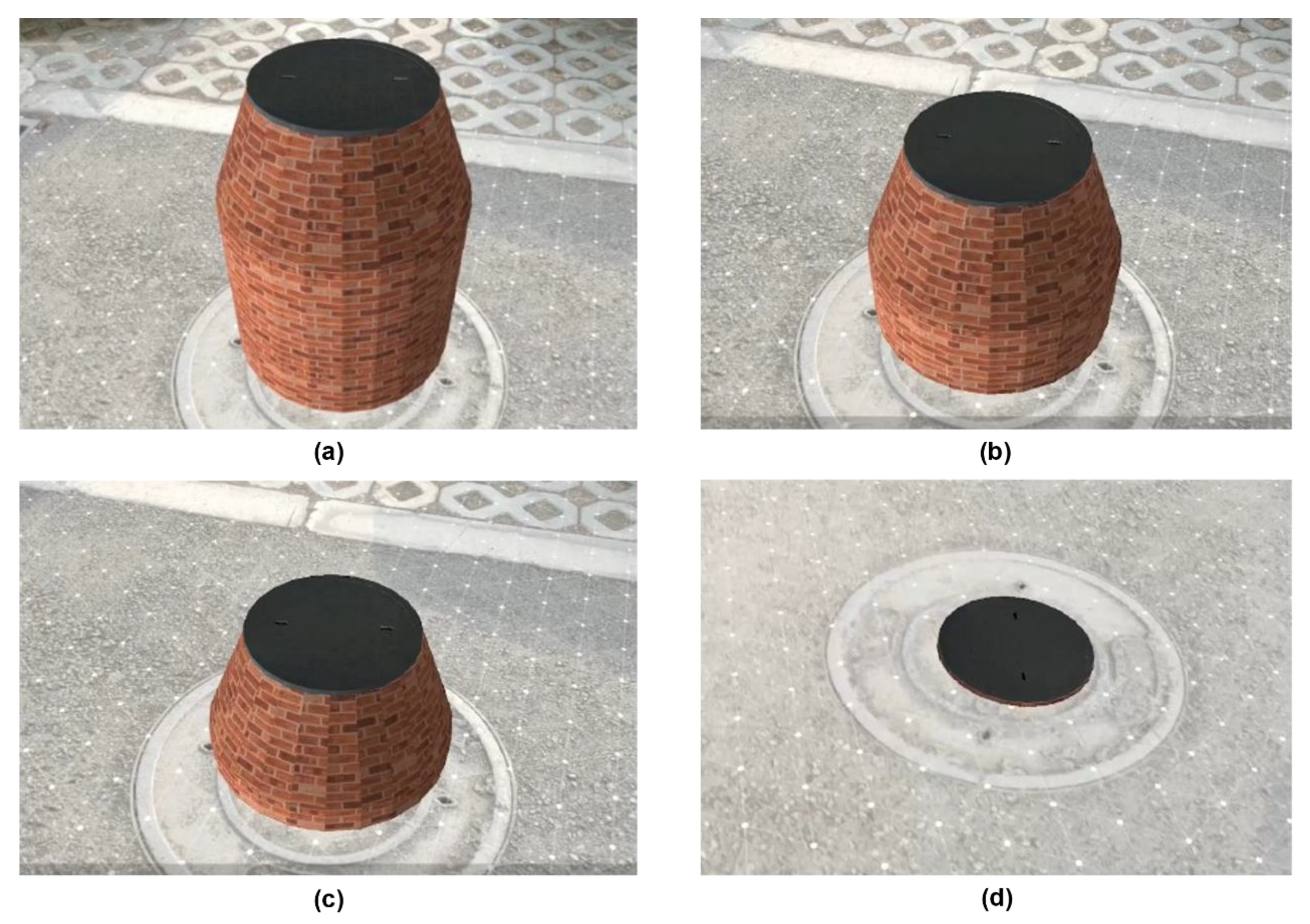

3.3.3. AR Stereoscopic Masking Culling

4. Experiments and Results

4.1. Experiments Design

4.2. Time Test of Single AR Map Visualization

4.3. Comparison Test of AR Map Visualization

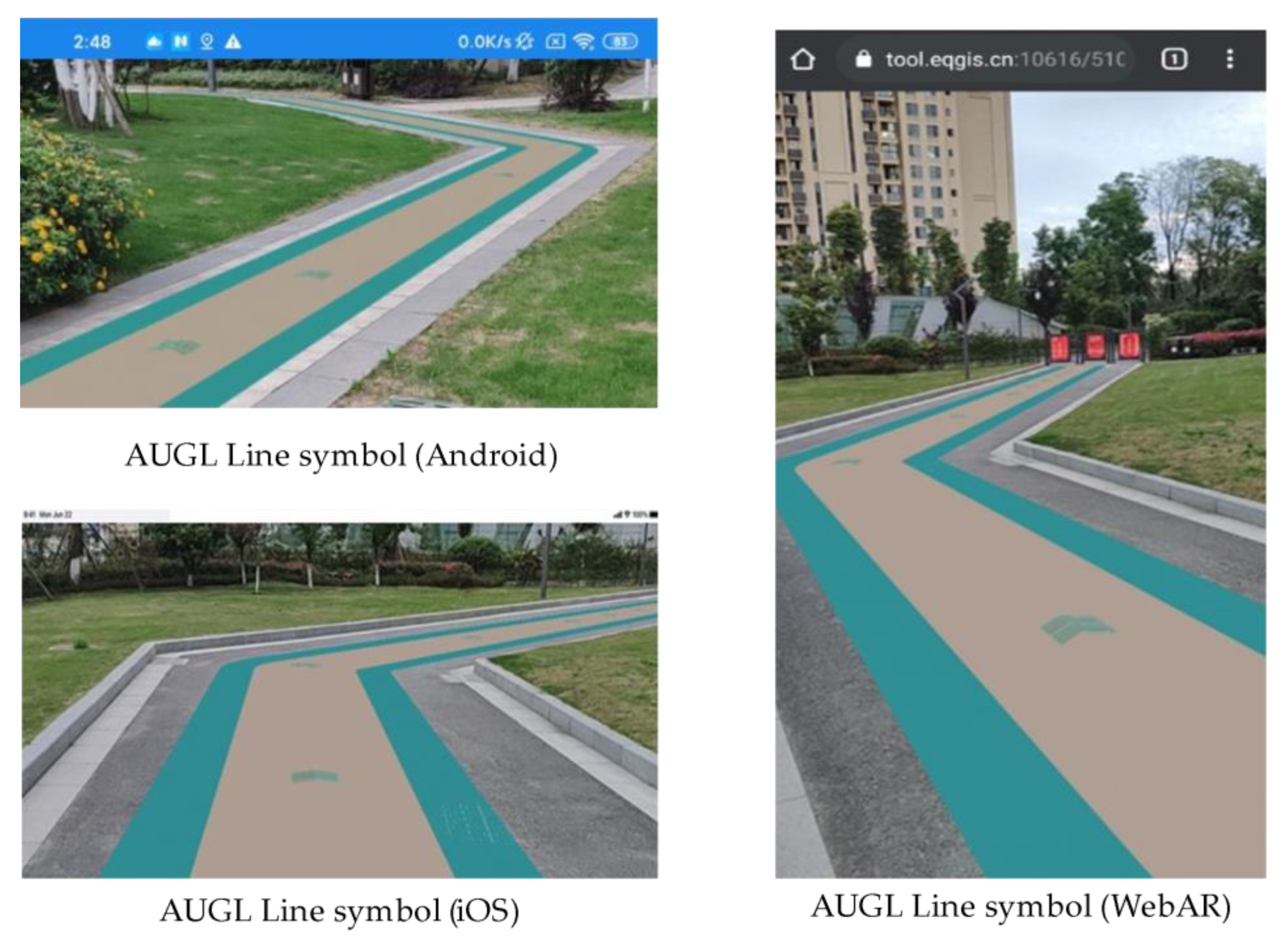

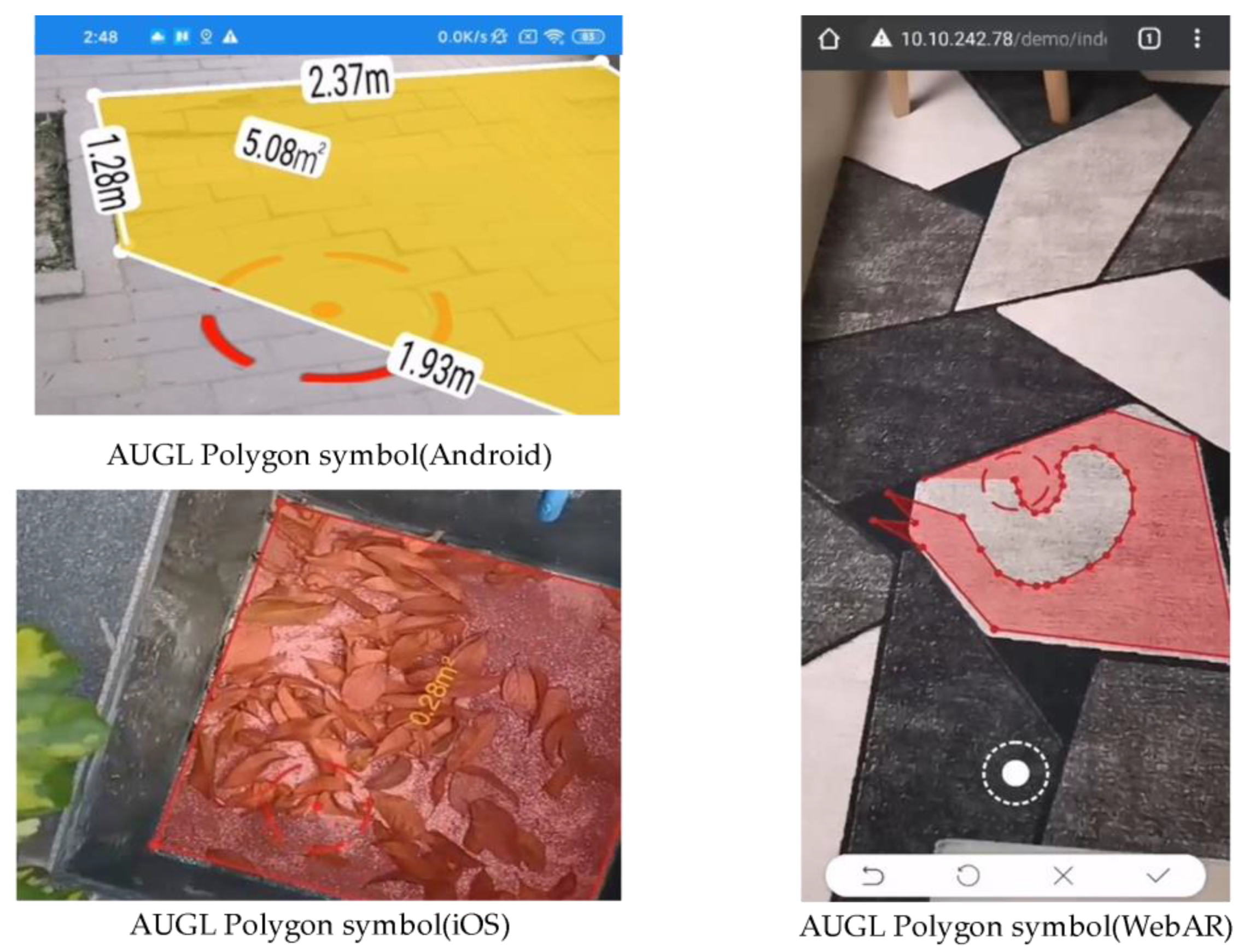

4.4. Consistency Test of AR Visualization Cross Platform Algorithm

5. Conclusions and Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Map Symbol Type | AR Camera Viewport Map Query | Map Symbols Drawn to the Texture Cache | Interactive Rendering of Result | Total Rendering Time | Lags |
|---|---|---|---|---|---|
| Points (500 features) | 15 ms | 25 ms | 14 ms | 54 ms | Running smoothly |
| Lines (500 features) | 16 ms | 21 ms | 13 ms | 50 ms | Running smoothly |
| Polygons (500 features) | 18 ms | 24 ms | 10 ms | 52 ms | Running smoothly |
| Annotations (200 features) | 14 ms | 29 ms | 15 ms | 58 ms | No obvious lag |

| Map Symbol Type | AUGL Engine | ArcGIS Runtime AR Rendering | Mapbox AR Rendering | Battery Power Consumption Ratio (AUGL/ArcGIS/Mapbox) |
|---|---|---|---|---|
| Points (500 features) | 37 ms | 55 ms | 89 ms | 1:1.12:1.17 |
| Lines (500 features) | 45 ms | 53 ms | 117 ms | 1:1.15:1.13 |
| Polygons (500 features) | 41 ms | 62 ms | 106 ms | 1:1.1:1.16 |
| Annotations (200 features) | 48 ms | 67 ms | 109 ms | 1:1.13:1.15 |
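
As a reading aid for the comparison table above, the reported rendering times can be expressed relative to the AUGL time for the same symbol type. The snippet below is only an illustrative calculation on the values in the table. The dictionary layout and the function name `relative_time` are assumptions made here and are not part of any of the three engines.

```python
# Rendering times in milliseconds, copied from the comparison table above.
TIMES_MS = {
    "Points (500 features)": {"AUGL": 37, "ArcGIS": 55, "Mapbox": 89},
    "Lines (500 features)": {"AUGL": 45, "ArcGIS": 53, "Mapbox": 117},
    "Polygons (500 features)": {"AUGL": 41, "ArcGIS": 62, "Mapbox": 106},
    "Annotations (200 features)": {"AUGL": 48, "ArcGIS": 67, "Mapbox": 109},
}


def relative_time(times):
    """Divide each engine's time by the AUGL time for the same symbol type."""
    return {
        symbol: {engine: t / row["AUGL"] for engine, t in row.items()}
        for symbol, row in times.items()
    }


for symbol, ratios in relative_time(TIMES_MS).items():
    # A ratio above 1 means the engine took longer than AUGL in this test.
    print(symbol, {engine: round(r, 2) for engine, r in ratios.items()})
```

Every ratio for ArcGIS Runtime and Mapbox is above 1, which matches the table: AUGL recorded the shortest rendering time for each symbol type.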

| AR-GIS Engine | Smoothed Line Connection | Smoothed Line Endpoint | Annotation Auto Avoidance Display | Real-Time Ground Display | Occlusion Culling Display |
|---|---|---|---|---|---|
| Android (ArcGIS) | Not supported | Not supported | Partially supported | Not supported | Not supported |
| iOS (ArcGIS) | Not supported | Not supported | Partially supported | Not supported | Not supported |
| WebAR (ArcGIS) | Not supported | Not supported | Not supported | Not supported | Not supported |
| Android (Mapbox) | Supported | Supported | Partially supported | Supported | Not supported |
| iOS (Mapbox) | Not supported | Not supported | Partially supported | Not supported | Not supported |
| WebAR (Mapbox) | Not supported | Not supported | Not supported | Not supported | Not supported |
| Android (AUGL) | Supported | Supported | Supported | Supported | Supported |
| iOS (AUGL) | Supported | Partially supported | Supported | Supported | Supported |
| WebAR (AUGL) | Supported | Supported | Supported | Supported | Partially supported |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, K.; Wang, C.; Wang, S.; Liu, R.; Chen, G.; Li, X. An Efficient, Platform-Independent Map Rendering Framework for Mobile Augmented Reality. ISPRS Int. J. Geo-Inf. 2021, 10, 593. https://doi.org/10.3390/ijgi10090593
