Identifying Urban Wetlands through Remote Sensing Scene Classification Using Deep Learning: A Case Study of Shenzhen, China

Abstract

1. Introduction

2. Materials and Methods

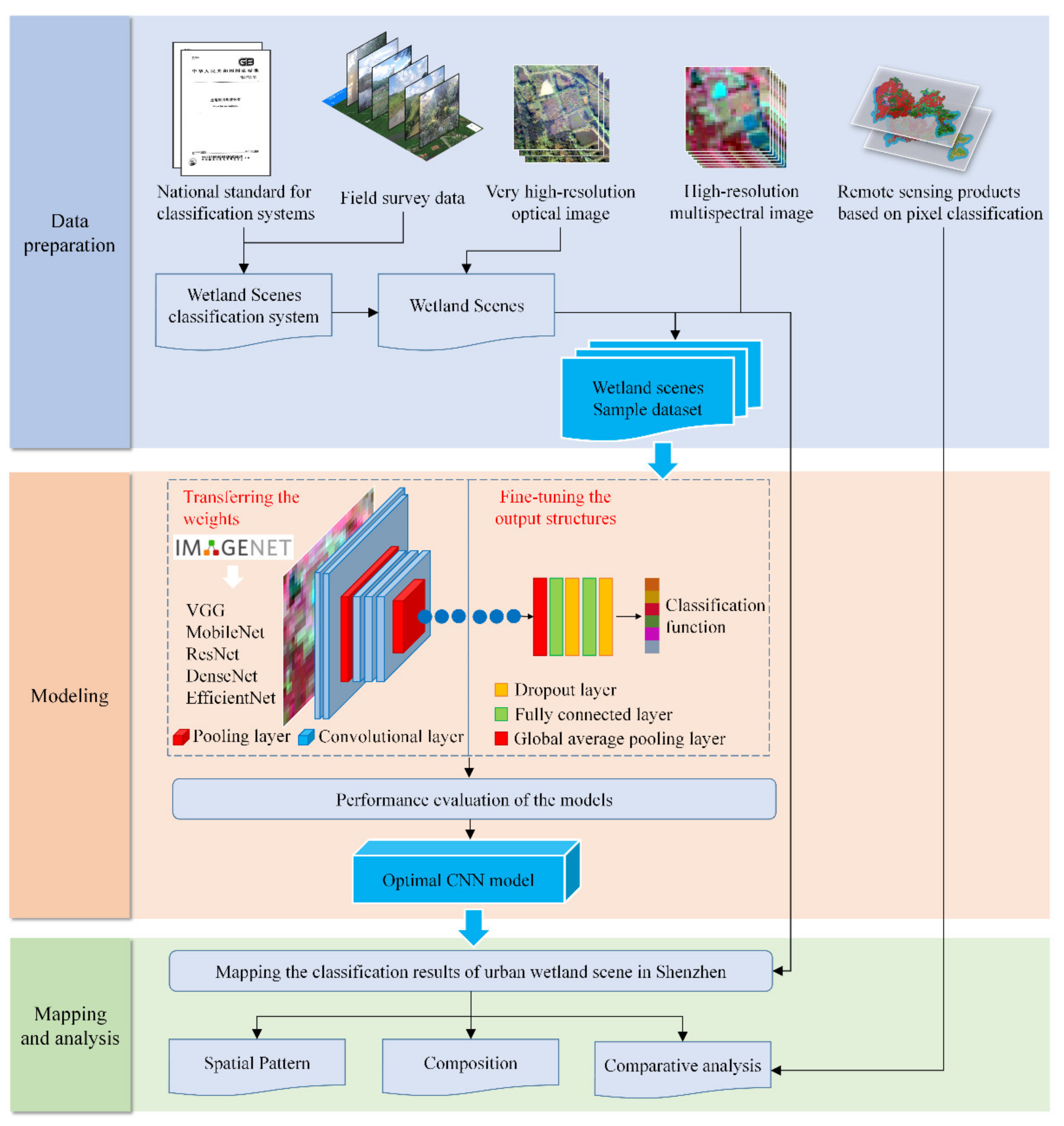

2.1. Overall Framework

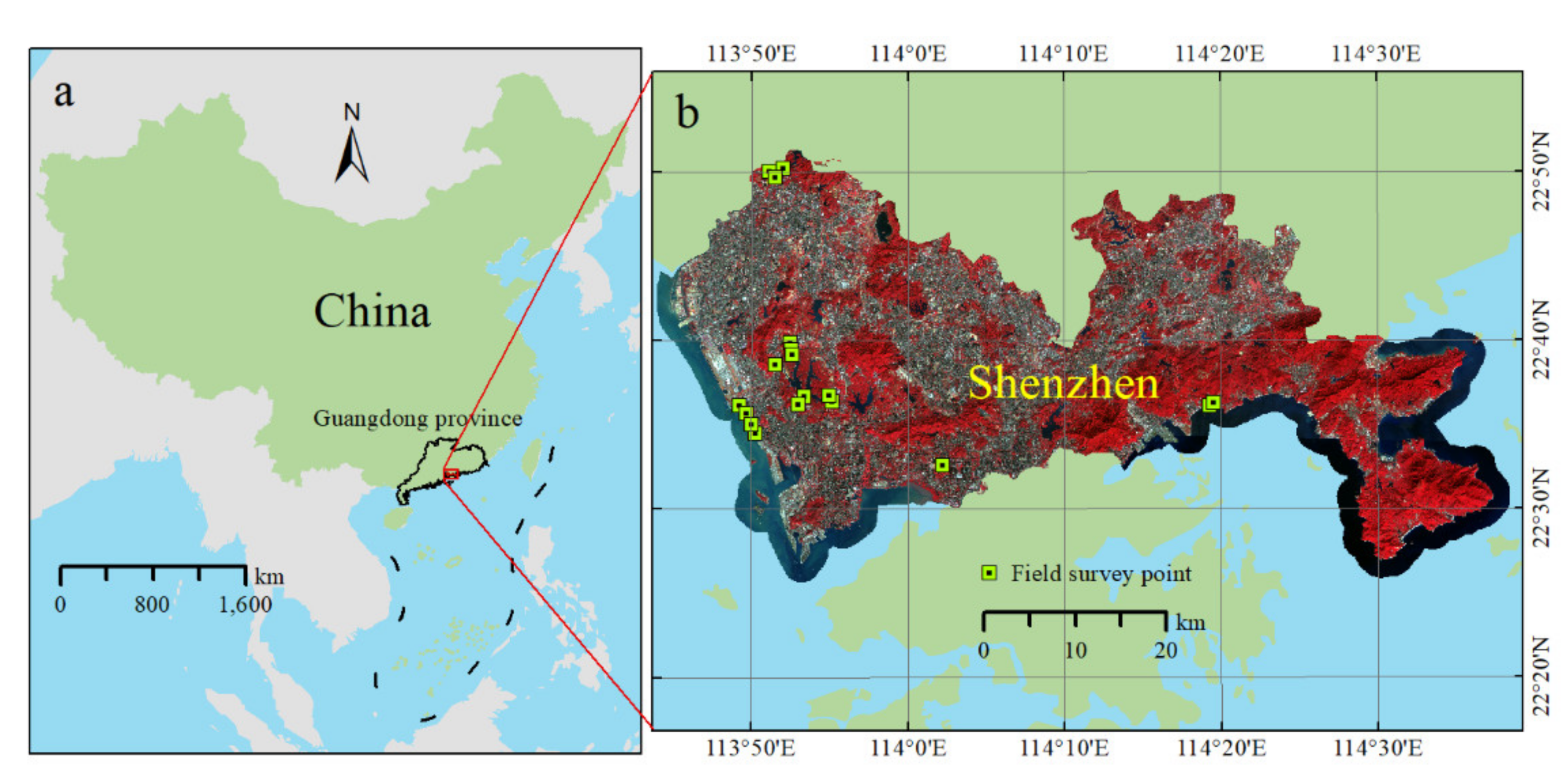

2.2. Study Area

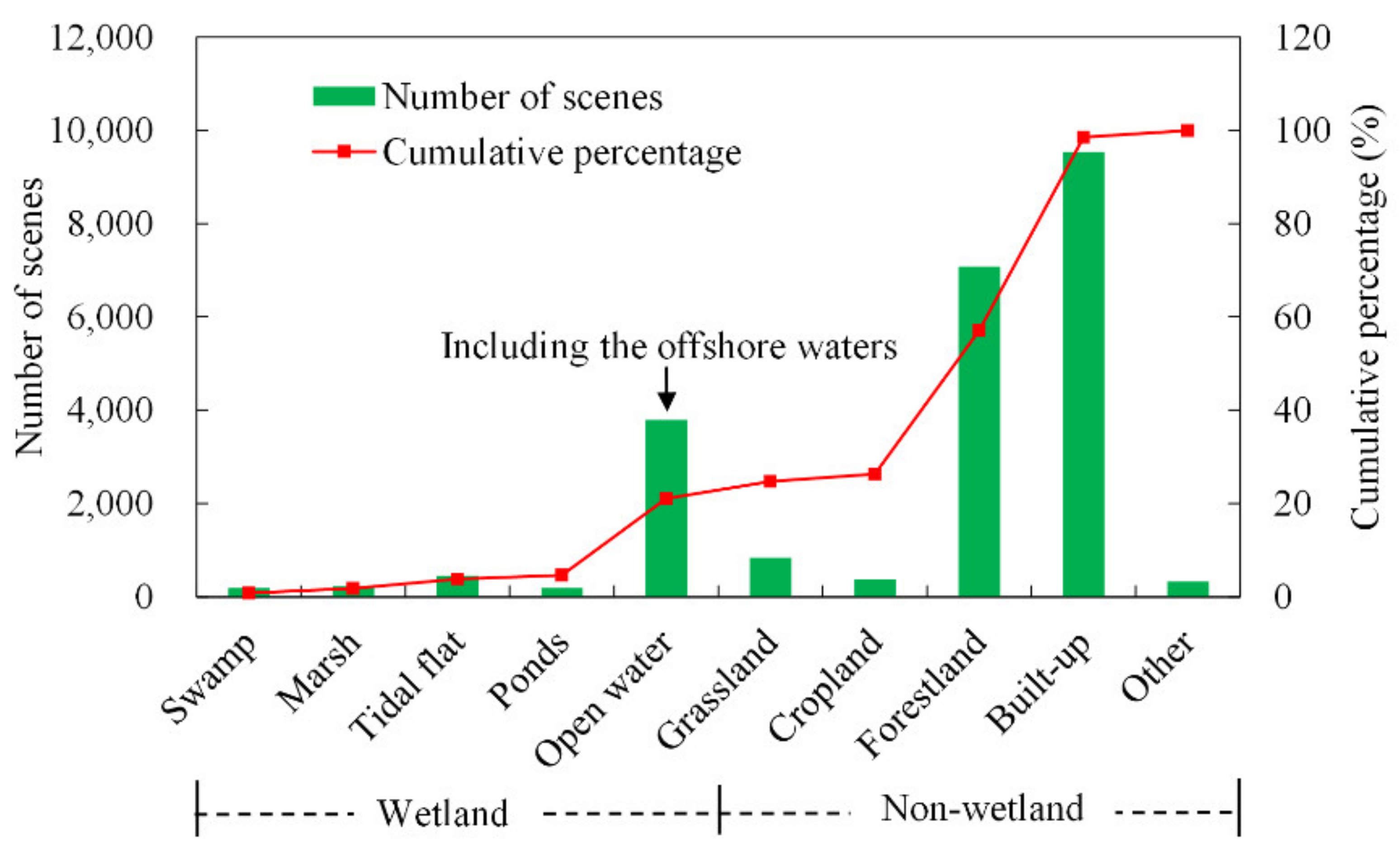

2.3. Classification System and Datasets

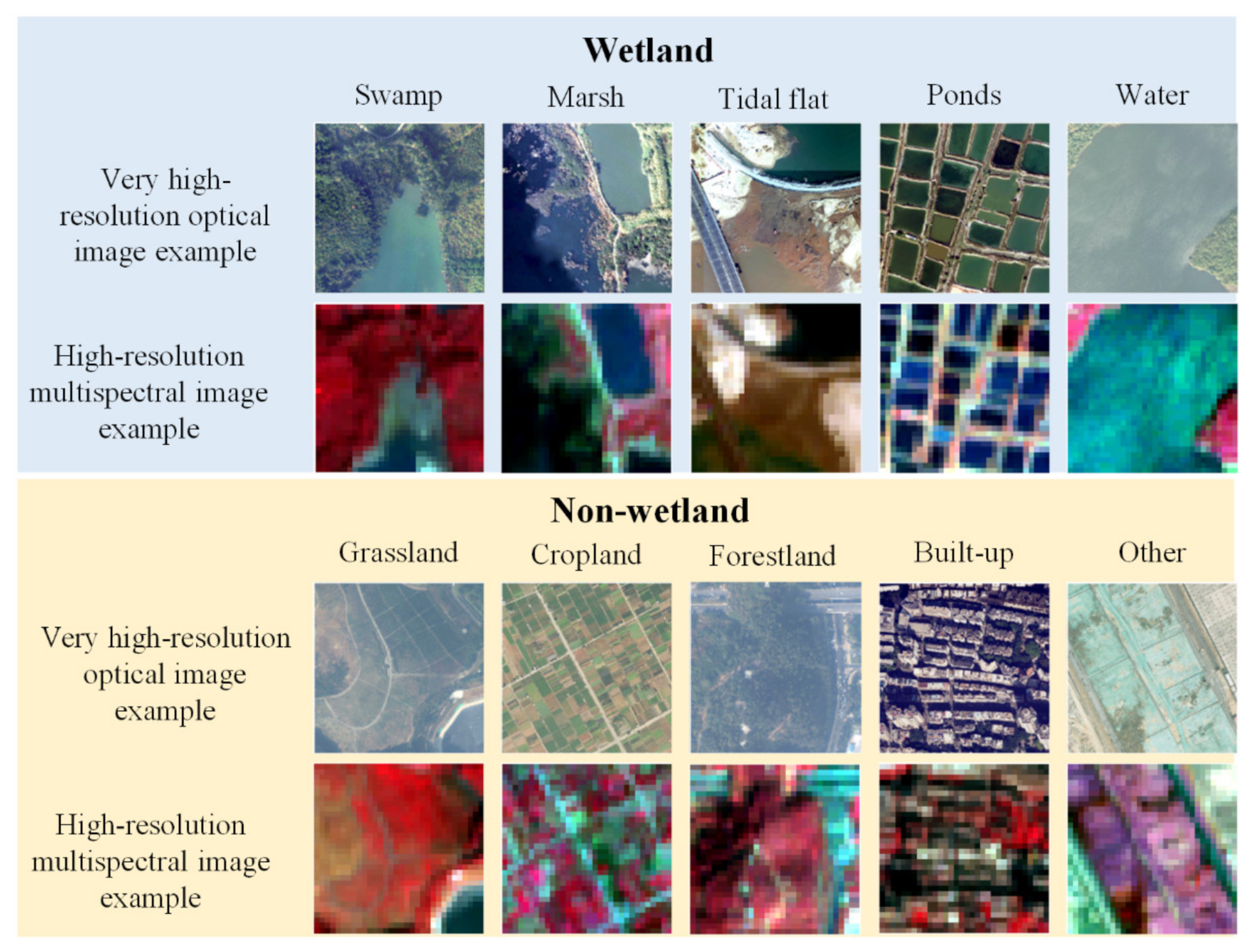

2.3.1. Classification System

2.3.2. Reference Data of the Very High-Resolution Optical Images

2.3.3. Classification Data of the High-Resolution Multispectral Images

2.3.4. Comparison Dataset of Land Cover Products

2.4. Deep Learning Scene Classification Model

2.5. Evaluation Metrics

2.6. Pattern Detection Method

3. Results

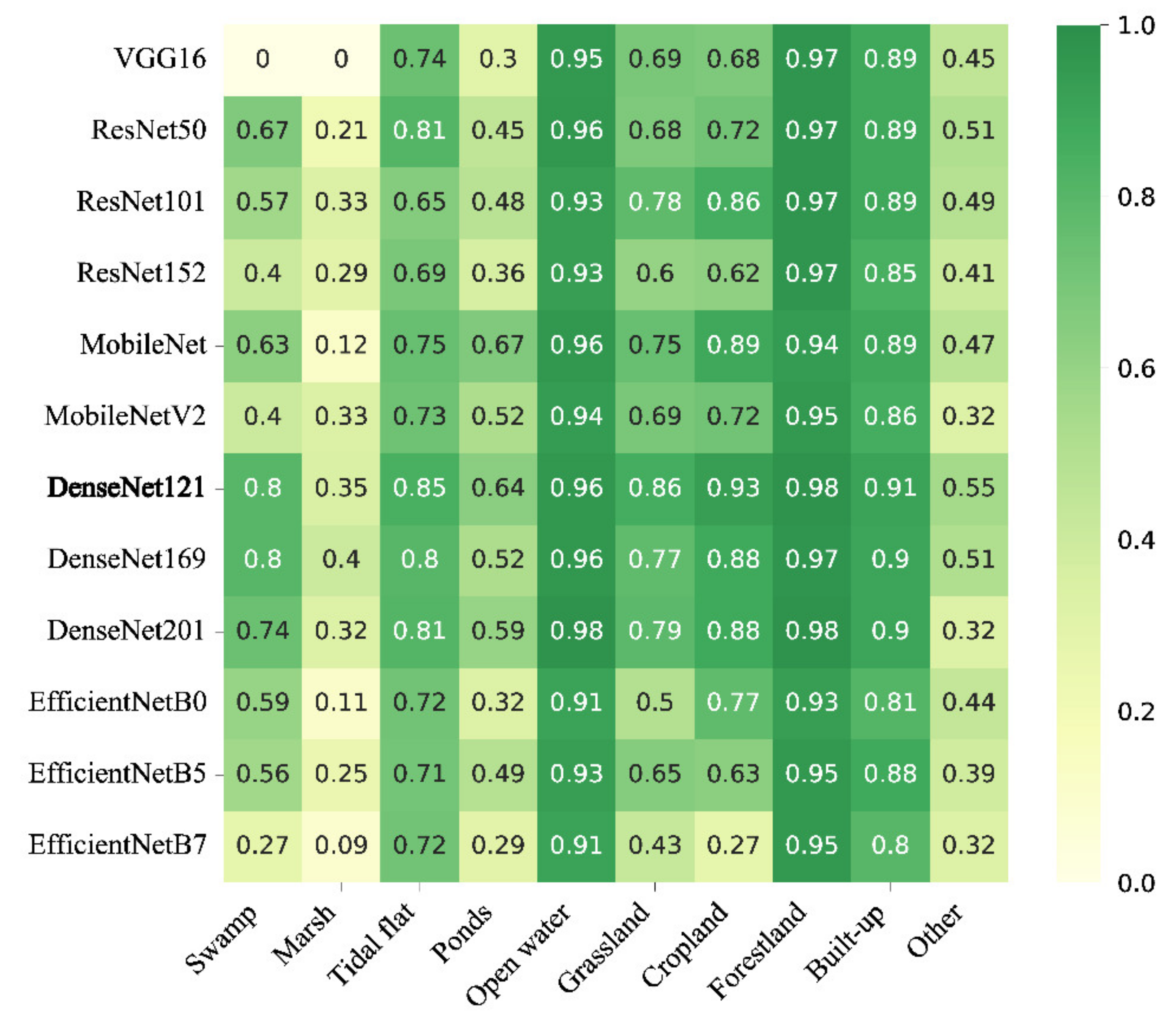

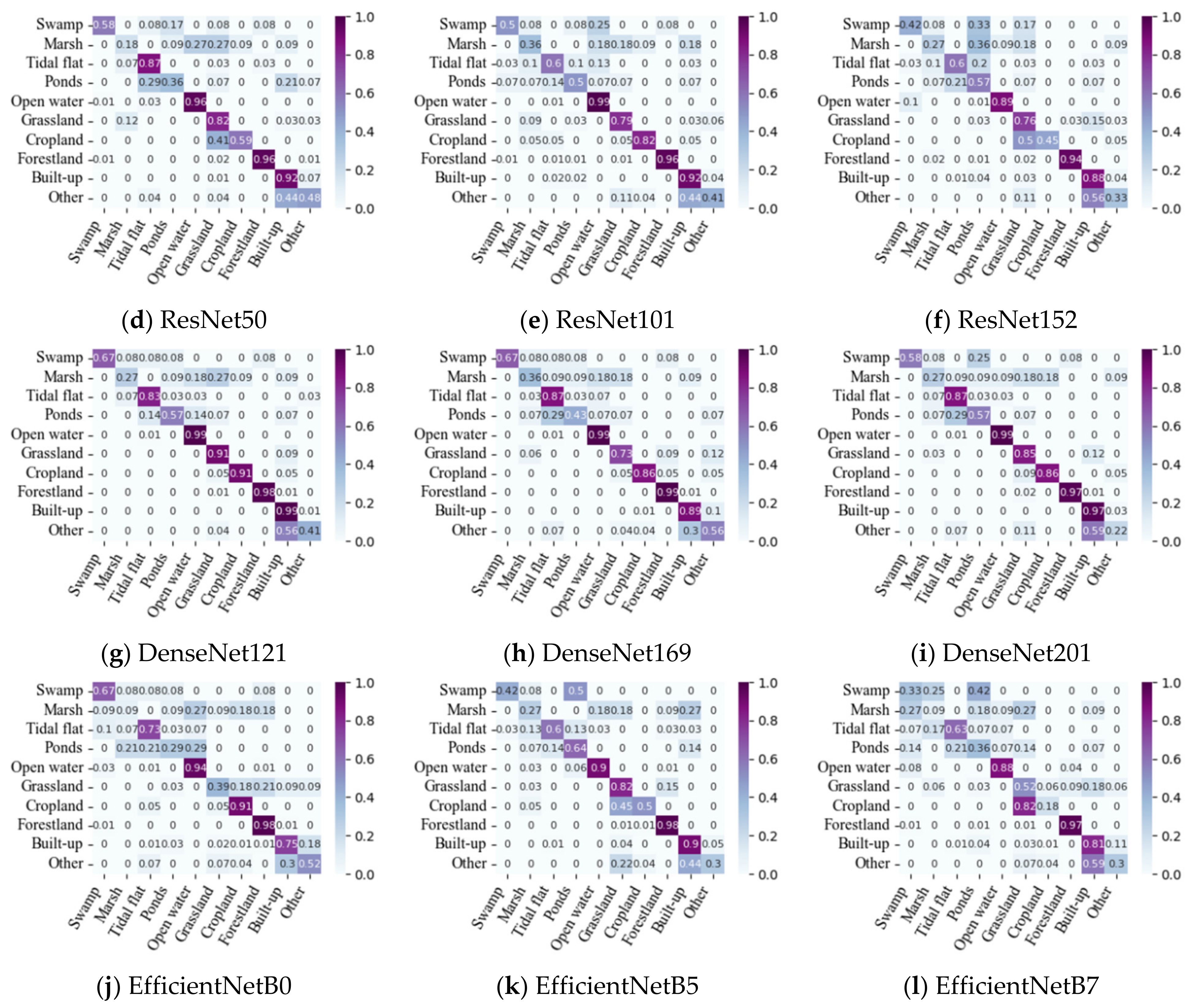

3.1. Classification Performances of Models

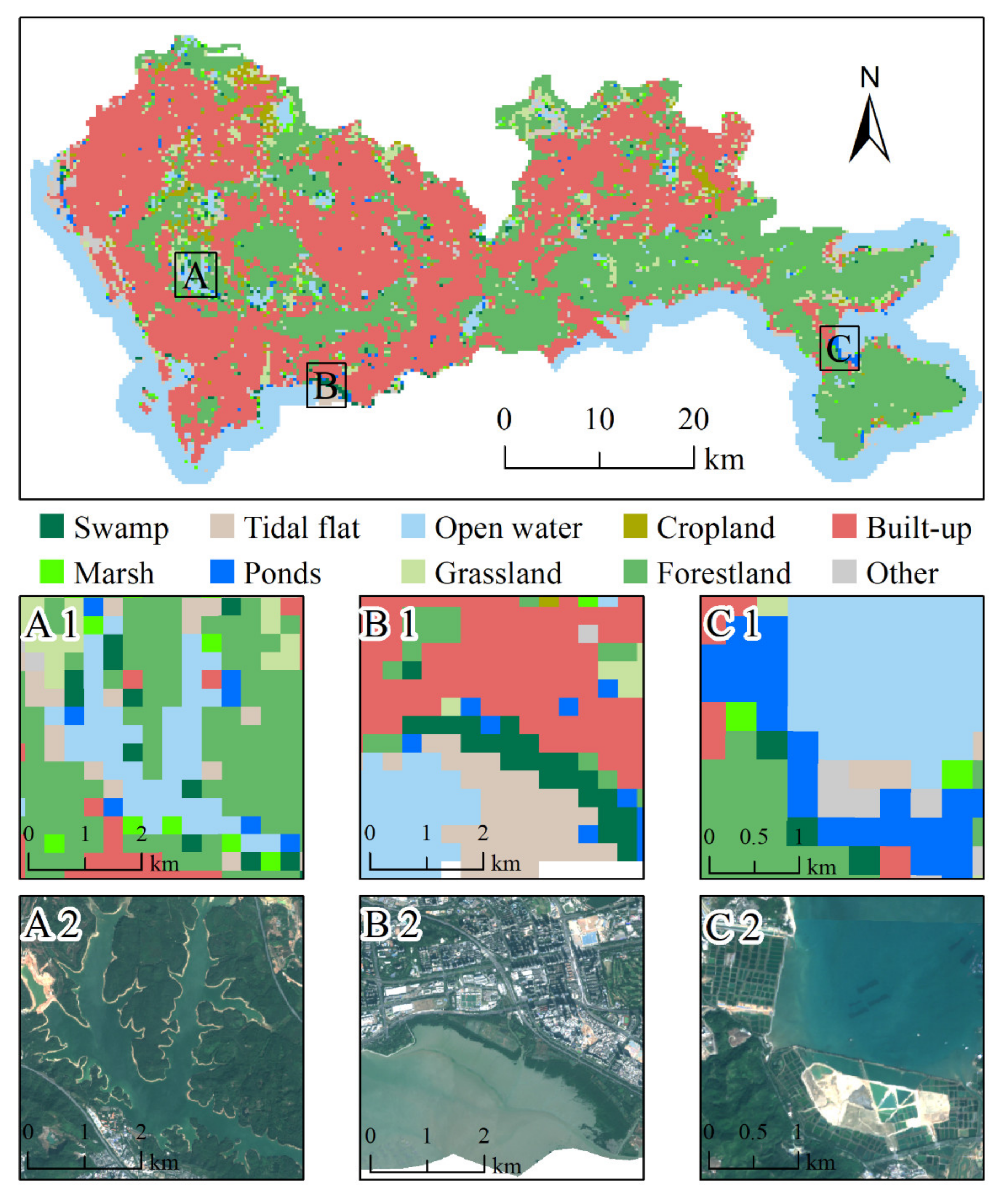

3.2. Scene Classification Results in Shenzhen

3.3. Comparison with Pixel Classification Products

3.4. Spatial Pattern of Wetland Scenes in Shenzhen

3.5. Composition of Wetland Scenes in Shenzhen

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Band Name | Spectral Region | Spatial Resolution (m) |
|---|---|---|
| Band 1 | Coastal aerosol | 60, resampled to 10 |
| Band 2 | Blue | 10 |
| Band 3 | Green | 10 |
| Band 4 | Red | 10 |
| Band 5 | Vegetation red edge 1 | 20, resampled to 10 |
| Band 6 | Vegetation red edge 2 | 20, resampled to 10 |
| Band 7 | Vegetation red edge 3 | 20, resampled to 10 |
| Band 8 | Near-infrared | 10 |
| Band 8A | Narrow near-infrared | 20, resampled to 10 |
| Band 9 | Water vapor | 60, resampled to 10 |
| Band 10 | Shortwave infrared (cirrus) | 60, resampled to 10 |
| Band 11 | Shortwave infrared 1 | 20, resampled to 10 |
| Band 12 | Shortwave infrared 2 | 20, resampled to 10 |
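The band table lists several bands as resampled from 20 m or 60 m to 10 m, but the resampling method is not stated here. The following is a minimal sketch that assumes nearest-neighbour upsampling by an integer factor; the function name `upsample_nearest` and the toy input grid are hypothetical and not taken from the study.

```python
def upsample_nearest(band, native_res_m, target_res_m=10):
    """Upsample a 2D grid (list of rows) by repeating each pixel.

    Assumption: nearest-neighbour resampling with an integer factor,
    e.g. 20 m -> 10 m gives factor 2, and 60 m -> 10 m gives factor 6.
    """
    if native_res_m % target_res_m != 0:
        raise ValueError("native resolution must be a multiple of the target")
    factor = native_res_m // target_res_m
    out = []
    for row in band:
        # Repeat every pixel `factor` times along the row ...
        wide = [value for value in row for _ in range(factor)]
        # ... then repeat the widened row `factor` times down the column.
        out.extend(list(wide) for _ in range(factor))
    return out


# Hypothetical 2 x 2 patch of a 20 m band (values are made up).
patch_20m = [[1, 2],
             [3, 4]]
patch_10m = upsample_nearest(patch_20m, native_res_m=20)
print(len(patch_10m), len(patch_10m[0]))  # 4 4
```

Nearest-neighbour repetition keeps the original pixel values unchanged, which is why it is used in this sketch; bilinear or cubic interpolation would be equally plausible choices.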

| Reclassification Category | GlobeLand30 Name | GlobeLand30 Code | GLC_FCS30 Name | GLC_FCS30 Code |
|---|---|---|---|---|
| Wetland | Wetland; Water; Sea areas | 50; 60; 255 | Wetlands; Water body | 180; 210 |
| Non-wetland | Cropland; Forest; Grassland; Shrubland; Tundra; Impervious Surface; Bareland; Snow/Ice; No data | 10; 20; 30; 40; 70; 80; 90; 100; 0 | Rainfed cropland; Herbaceous cover; Tree or shrub cover; Irrigated cropland; Open evergreen broadleaved forest; Closed evergreen broadleaved forest; Open deciduous broadleaved forest; Closed deciduous broadleaved forest; Open evergreen needle-leaved forest; Closed evergreen needle-leaved forest; Open deciduous needle-leaved forest; Closed deciduous needle-leaved forest; Open mixed leaf forest; Closed mixed leaf forest; Shrubland; Evergreen shrubland; Deciduous shrubland; Grassland; Lichens and mosses; Sparse vegetation; Sparse shrubland; Sparse herbaceous; Impervious surfaces; Bare areas; Consolidated bare areas; Unconsolidated bare areas; Permanent ice and snow; Filled value | 10; 11; 12; 20; 51; 52; 61; 62; 71; 72; 81; 82; 91; 92; 120; 121; 122; 130; 140; 150; 152; 153; 190; 200; 201; 202; 220; 250 |
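The reclassification in the table above amounts to a lookup from each product's original class code to one of two categories. A minimal sketch of that lookup follows; the class codes are copied from the table, while the names `reclassify` and `CODE_SETS` and the product keys are hypothetical helpers introduced only for illustration.

```python
# Class codes copied from the reclassification table.
CODE_SETS = {
    "GlobeLand30": {
        "wetland": {50, 60, 255},
        "non-wetland": {10, 20, 30, 40, 70, 80, 90, 100, 0},
    },
    "GLC_FCS30": {
        "wetland": {180, 210},
        "non-wetland": {
            10, 11, 12, 20, 51, 52, 61, 62, 71, 72, 81, 82, 91, 92,
            120, 121, 122, 130, 140, 150, 152, 153,
            190, 200, 201, 202, 220, 250,
        },
    },
}


def reclassify(product, code):
    """Map an original land-cover code to 'wetland' or 'non-wetland'."""
    for category, codes in CODE_SETS[product].items():
        if code in codes:
            return category
    # Codes absent from the table are rejected rather than guessed.
    raise ValueError(f"unknown {product} code: {code}")


print(reclassify("GlobeLand30", 60))   # wetland
print(reclassify("GLC_FCS30", 190))    # non-wetland
```

Raising an error on unlisted codes is a design choice of this sketch: it makes a mismatch between a product version and the table visible instead of silently assigning a category.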

| Model | OA | Kappa | Model | OA | Kappa |
|---|---|---|---|---|---|
| VGG16 | 0.819 | 0.778 | DenseNet121 | 0.887 | 0.863 |
| ResNet50 | 0.831 | 0.796 | DenseNet169 | 0.856 | 0.827 |
| ResNet101 | 0.828 | 0.793 | DenseNet201 | 0.861 | 0.832 |
| ResNet152 | 0.769 | 0.723 | EfficientNetB0 | 0.762 | 0.716 |
| MobileNet | 0.840 | 0.807 | EfficientNetB5 | 0.793 | 0.750 |
| MobileNetV2 | 0.807 | 0.763 | EfficientNetB7 | 0.706 | 0.647 |
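The model comparison reports overall accuracy (OA) and the Kappa coefficient. As a reminder of how these two quantities relate, here is a minimal sketch using the standard definitions: OA is the share of correctly classified samples, and Kappa is (p_o - p_e) / (1 - p_e), where p_o is OA and p_e is the agreement expected by chance from the row and column totals. The confusion matrix in the example is made up and does not come from the study.

```python
def overall_accuracy(cm):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = overall_accuracy(cm)
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement from the marginal totals of each class.
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 2-class matrix: rows are reference labels, columns predictions.
cm = [[40, 10],
      [5, 45]]
print(round(overall_accuracy(cm), 3))  # 0.85
print(round(cohen_kappa(cm), 3))       # 0.7
```

Because Kappa discounts chance agreement, it is always at or below OA for a classifier that beats chance, which is consistent with the Kappa column sitting below the OA column for every model in the table.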

| Dataset | Number of Scenes (Related) | Number of Scenes (Overlapping) | OA | Kappa |
|---|---|---|---|---|
| Global30 | 4139 | 4028 | 0.959 | 0.870 |
| GLC30 | 3954 | 3900 | 0.956 | 0.859 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, R.; Luo, F.; Ren, F.; Huang, W.; Li, Q.; Du, K.; Yuan, D. Identifying Urban Wetlands through Remote Sensing Scene Classification Using Deep Learning: A Case Study of Shenzhen, China. ISPRS Int. J. Geo-Inf. 2022, 11, 131. https://doi.org/10.3390/ijgi11020131