An Adaptive Embedding Network with Spatial Constraints for the Use of Few-Shot Learning in Endangered-Animal Detection

Abstract

1. Introduction

- (1) We construct a new image dataset dedicated to endangered-animal detection. It contains independent animal categories, both endangered and non-endangered. The endangered species are represented by only a few samples, while the non-endangered species are represented by more examples.

- (2) We propose a new few-shot learning framework for endangered-animal detection, which can be useful in unseen scenarios because it augments the training data with images synthesized from parts of separate images.

- (3) We provide a spatial-constraints model with two main components, a knowledge dictionary and a relation network, which avoids mixing foreground species with background images that are incompatible from a geospatial perspective.
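To make the third contribution concrete, the following is a minimal sketch of how a knowledge dictionary could be used to reject geospatially incompatible foreground/background pairs before compositing. It is an illustration under stated assumptions, not the authors' implementation: the dictionary entries, the `Background` type, the scene labels, and the function `compatible_backgrounds` are all hypothetical, and the relation network that the paper pairs with the dictionary is omitted.

```python
from dataclasses import dataclass

# Hypothetical knowledge dictionary: species -> scene types where the species
# can plausibly appear. These entries are placeholders, not data from the paper.
KNOWLEDGE_DICT = {
    "giant_panda": {"temperate_forest", "bamboo_forest"},
    "snow_leopard": {"alpine", "rocky_mountain"},
}


@dataclass(frozen=True)
class Background:
    """A candidate background image tagged with a (hypothetical) scene label."""
    image_id: str
    scene: str


def compatible_backgrounds(species, backgrounds, knowledge=KNOWLEDGE_DICT):
    """Keep only backgrounds whose scene is allowed for the given species.

    Species missing from the dictionary get no compatible backgrounds, so an
    unknown foreground is never pasted onto an arbitrary scene.
    """
    allowed = knowledge.get(species, set())
    return [b for b in backgrounds if b.scene in allowed]
```

A dictionary lookup of this kind only encodes hard, hand-written constraints; a learned component such as the relation network mentioned above would be needed to score pairs that the dictionary does not cover.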

2. Related Works

2.1. Data-Driven Detection of Wild Animals

2.2. Few-Shot Learning

3. Proposed Method

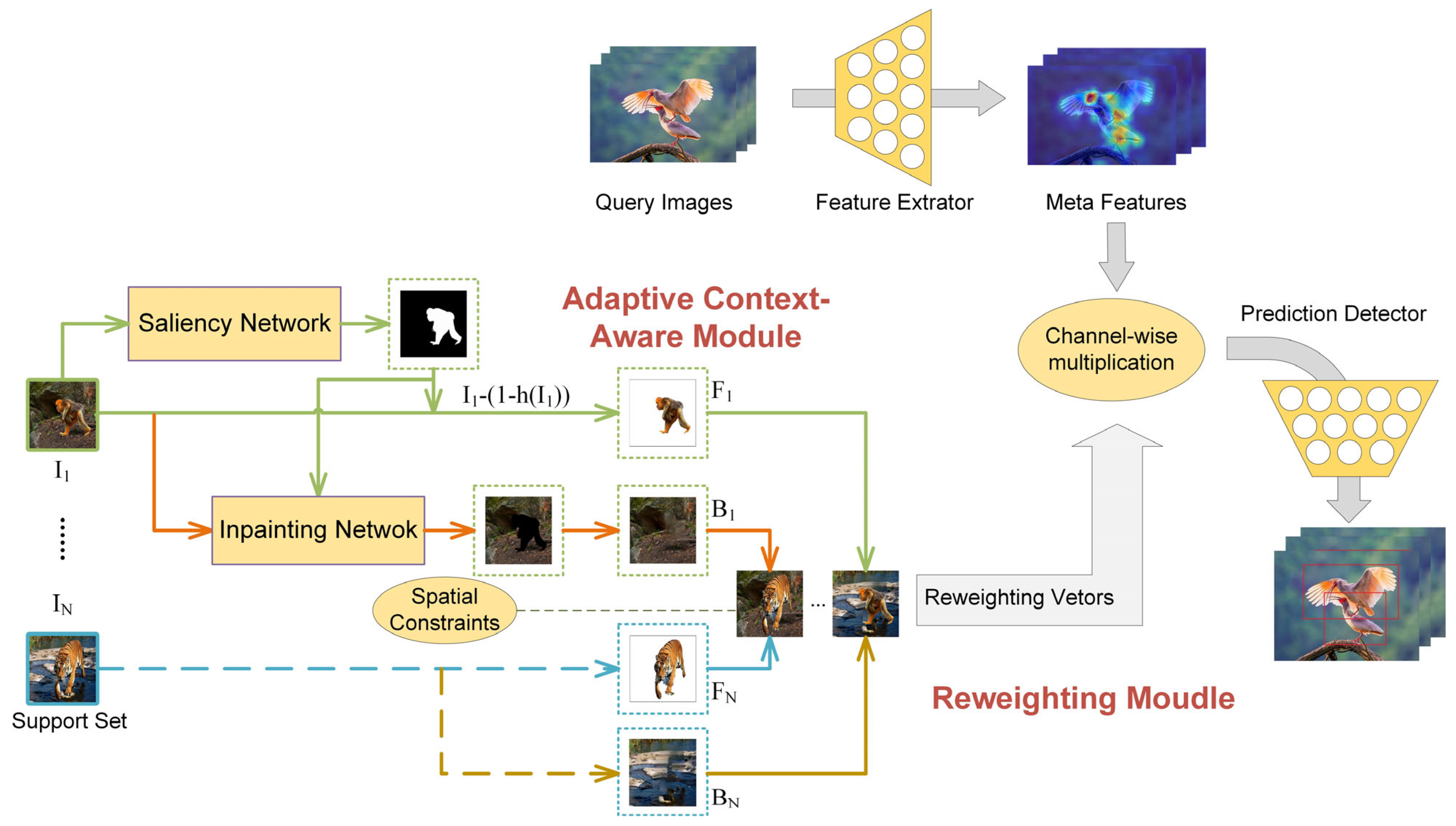

3.1. Overview

3.2. Problem Definition

3.3. The Adaptive Context-Aware Module

3.3.1. Saliency Network

3.3.2. Inpainting Network

3.3.3. Spatial Constraints

3.4. Reweighting Module

3.5. Optimization

| Algorithm 1. Detection algorithm of the FRW-ACA model |
|---|
| Input: auxiliary set and support set. |
| Output: object position (x, y, h, w) and confidence score c. |
| 1: Reorganize the training images into multiple few-shot tasks. |
| 2: for training epoch = 1 to 500 do |
| 3: Add each task to the training and adjust the model parameters to optimize Equation (10). |
| 4: end for |
| 5: Generate additional images via the ACA module and add them to the training data. |
| 6: for training epoch = 1 to 20 do |
| 7: Conduct the few-shot training to fine-tune the model using these images. |
| 8: end for |
| 9: Load the model and input the query image to get the result. |
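The two-stage schedule of Algorithm 1 can be sketched in Python as follows. This is a skeleton under assumptions rather than the FRW-ACA code: `build_tasks`, `run_schedule`, `train_step`, and `augment` are hypothetical names, the model update and the ACA module are passed in as plain callables, and the sketch assumes that the generated images are added to the support set before fine-tuning (the symbol for the target set is missing from the extracted algorithm).

```python
import random


def build_tasks(images_by_class, n_way, k_shot, num_tasks, seed=0):
    """Step 1: regroup training images into few-shot tasks (n_way classes, k_shot images each)."""
    rng = random.Random(seed)
    classes = sorted(images_by_class)
    tasks = []
    for _ in range(num_tasks):
        chosen = rng.sample(classes, n_way)
        tasks.append({c: rng.sample(images_by_class[c], k_shot) for c in chosen})
    return tasks


def run_schedule(aux_tasks, support_images, train_step, augment,
                 base_epochs=500, finetune_epochs=20):
    """Steps 2-8: base training on auxiliary tasks, then few-shot fine-tuning.

    train_step(batch) stands in for one optimization step on the loss of
    Equation (10); augment(images) stands in for the ACA module.
    """
    # Steps 2-4: feed every few-shot task to the model in each base epoch.
    for _ in range(base_epochs):
        for task in aux_tasks:
            train_step(task)

    # Step 5: add the generated images to the support set (assumption, see lead-in).
    augmented = list(support_images) + list(augment(support_images))

    # Steps 6-8: fine-tune on the augmented few-shot images.
    for _ in range(finetune_epochs):
        train_step(augmented)
    return augmented
```

The epoch counts default to the values stated in Algorithm 1 (500 and 20); passing smaller values is convenient for a quick dry run with stub callables.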

4. Experiments

4.1. Dataset

4.2. Experimental Settings

4.3. Baseline

4.4. Results and Comparisons

4.5. Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Haucke, T.; Steinhage, V. Exploiting depth information for wildlife monitoring. arXiv 2021, arXiv:2102.05607.

- Caravaggi, A.; Banks, P.; Burton, C.; Finlay, C.M.V.; Haswell, P.M.; Hayward, M.W.; Rowcliffe, M.; Wood, M. A review of camera trapping for conservation behaviour research. Remote Sens. Ecol. Conserv. 2017, 3, 109–122.

- Yang, L.; Luo, P.; Loy, C.C.; Tang, X. A large-scale car dataset for fine-grained categorization and verification. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Ji, Z.; Liu, X.; Pang, Y.; Ouyang, W.; Li, X. Few-Shot Human-Object Interaction Recognition with Semantic-Guided Attentive Prototypes Network. IEEE Trans. Image Process. 2020, 30, 1648–1661.

- Li, X.; Wu, J.; Sun, Z.; Ma, Z.; Cao, J.; Xue, J.-H. BSNet: Bi-Similarity Network for Few-shot Fine-grained Image Classification. IEEE Trans. Image Process. 2020, 30, 1318–1331.

- Liu, B.; Yu, X.; Yu, A.; Zhang, P.; Wan, G.; Wang, R. Deep Few-Shot Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2290–2304.

- Gu, K.; Zhang, Y.; Qiao, J. Ensemble Meta-Learning for Few-Shot Soot Density Recognition. IEEE Trans. Ind. Inform. 2020, 17, 2261–2270.

- Ma, X.; Shahbakhti, M.; Chigan, C. Connected Vehicle Based Distributed Meta-Learning for Online Adaptive Engine/Powertrain Fuel Consumption Modeling. IEEE Trans. Veh. Technol. 2020, 69, 9553–9565.

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

- Zeng, Y.; Lin, Z.; Lu, H.; Patel, V.M. Cr-fill: Generative image inpainting with auxiliary contextual reconstruction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

- Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725.

- Crouse, D.; Jacobs, R.L.; Richardson, Z.; Klum, S.; Jain, A.; Baden, A.L.; Tecot, S.R. LemurFaceID: A face recognition system to facilitate individual identification of lemurs. BMC Zool. 2017, 2, 562.

- Witham, C.L. Automated face recognition of rhesus macaques. J. Neurosci. Methods 2017, 300, 157–165.

- Deb, D.; Wiper, S.; Gong, S.; Shi, Y.; Tymoszek, C.; Fletcher, A.; Jain, A.K. Face recognition: Primates in the wild. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Long Beach, CA, USA, 22–25 October 2018.

- Weinstein, B.G. A computer vision for animal ecology. J. Anim. Ecol. 2017, 87, 533–545.

- Koniar, D.; Hargaš, L.; Loncová, Z.; Duchoň, F.; Beňo, P. Machine vision application in animal trajectory tracking. Comput. Methods Programs Biomed. 2015, 127, 258–272.

- Yudin, D.; Sotnikov, A.; Krishtopik, A. Detection of Big Animals on Images with Road Scenes using Deep Learning. In Proceedings of the 2019 International Conference on Artificial Intelligence: Applications and Innovations (IC-AIAI), Vrdnik, Banja, Serbia, 30 September–4 October 2019.

- Koochaki, F.; Shamsi, F.; Najafizadeh, L. Detecting mTBI by learning spatio-temporal characteristics of widefield calcium imaging data using deep learning. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020.

- Schofield, D.; Nagrani, A.; Zisserman, A.; Hayashi, M.; Matsuzawa, T.; Biro, D.; Carvalho, S. Chimpanzee face recognition from videos in the wild using deep learning. Sci. Adv. 2019, 5, eaaw0736.

- Kuncheva, L. Animal reidentification using restricted set classification. Ecol. Inform. 2021, 62, 101225.

- Lai, N.; Kan, M.; Han, C.; Song, X.; Shan, S. Learning to Learn Adaptive Classifier–Predictor for Few-Shot Learning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3458–3470.

- Munkhdalai, T.; Yu, H. Meta networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, NSW, Australia, 6–11 August 2017.

- Wong, A.; Yuille, A.L. One shot learning via compositions of meaningful patches. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Hariharan, B.; Girshick, R. Low-shot visual recognition by shrinking and hallucinating features. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Chen, Z.; Fu, Y.; Wang, Y.X.; Ma, L.; Liu, W.; Hebert, M. Image deformation meta-networks for one-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Xu, Z.; Zhu, L.; Yang, Y. Few-shot object recognition from machine-labeled web images. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Ramalho, T.; Garnelo, M. Adaptive posterior learning: Few-shot learning with a surprise-based memory module. arXiv 2019, arXiv:1902.02527.

- Kaiser, Ł.; Nachum, O.; Roy, A.; Bengio, S. Learning to remember rare events. arXiv 2017, arXiv:1703.03129.

- Wang, X.; Yu, F.; Wang, R.; Darrell, T.; Gonzalez, J.E. Tafe-net: Task-aware feature embeddings for low shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Wang, R.-Q.; Zhang, X.-Y.; Liu, C.-L. Meta-Prototypical Learning for Domain-Agnostic Few-Shot Recognition. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–7.

- Li, H.; Dong, W.; Mei, X.; Ma, C.; Huang, F.; Hu, B.G. LGM-Net: Learning to generate matching networks for few-shot learning. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019.

- Liu, Q.; Zhang, X.; Liu, Y.; Huo, K.; Jiang, W.; Li, X. Multi-Polarization Fusion Few-Shot HRRP Target Recognition Based on Meta-Learning Framework. IEEE Sensors J. 2021, 21, 18085–18100.

- Rahman, S.; Khan, S.; Porikli, F. A Unified Approach for Conventional Zero-Shot, Generalized Zero-Shot, and Few-Shot Learning. IEEE Trans. Image Process. 2018, 27, 5652–5667.

- Kang, B.; Liu, Z.; Wang, X.; Yu, F.; Feng, J.; Darrell, T. Few-shot object detection via feature reweighting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–4 November 2019.

- Wang, C.; Liu, Z.; Chan, S.-C. Superpixel-Based Hand Gesture Recognition with Kinect Depth Camera. IEEE Trans. Multimed. 2015, 17, 29–39.

- Zhang, H.; Zhang, J.; Koniusz, P. Few-shot learning via saliency-guided hallucination of samples. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The open images dataset v4. Int. J. Comput. Vis. 2020, 128, 1956–1981.

- Xian, Y.; Lampert, C.H.; Schiele, B.; Akata, Z. Zero-shot learning—a comprehensive evaluation of the good, the bad and the ugly. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2251–2265.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2009, 88, 303–338.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Yan, X.; Chen, Z.; Xu, A.; Wang, X.; Liang, X.; Lin, L. Meta r-cnn: Towards general solver for instance-level low-shot learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–4 November 2019.

- Li, X.; Cheng, G.; Wang, L.; Wang, J.; Ran, Y.; Che, T.; Li, G.; He, H.; Zhang, Q.; Jiang, X.; et al. Boosting geoscience data sharing in China. Nat. Geosci. 2021, 14, 541–542.

| Method/Shot | 1-Shot | 2-Shot | 3-Shot | 5-Shot | 10-Shot |
|---|---|---|---|---|---|
| EfficientDet-ft [44] | 0.07 | 0.34 | 2.61 | 7.52 | 18.74 |
| EfficientDet-ft-full [44] | 1.14 | 3.58 | 12.96 | 19.46 | 37.86 |
| CenterNet-ft [45] | 0.13 | 0.95 | 3.64 | 8.79 | 19.59 |
| CenterNet-ft-full [45] | 0.89 | 5.31 | 15.37 | 23.54 | 42.76 |
| RetinaNet-ft [46] | 0.26 | 1.29 | 4.15 | 7.43 | 21.31 |
| RetinaNet-ft-full [46] | 1.48 | 6.75 | 14.47 | 22.21 | 44.38 |
| YOLOv4-joint [43] | 0.00 | 0.00 | 0.29 | 0.86 | 19.76 |
| YOLOv4-ft [43] | 0.00 | 0.42 | 5.84 | 8.34 | 25.81 |
| YOLOv4-ft-full [43] | 1.31 | 7.93 | 17.04 | 24.93 | 49.93 |
| FRW [34] | 25.51 | 48.15 | 55.07 | 65.01 | 73.08 |
| Meta R-CNN [47] | 21.13 | 46.37 | 56.92 | 67.34 | 75.13 |
| FRW-ACA (Intra, Ours) | - | 50.67 | 58.97 | 69.70 | 77.01 |
| FRW-ACA (Inter *, Ours) | 42.39 | 53.60 | 60.23 | 70.06 | 78.56 |
| FRW-ACA (Inter, Ours) | 43.18 | 54.51 | 61.49 | 71.18 | 80.42 |

| Method/Shot | 1-Shot | 2-Shot | 3-Shot | 5-Shot | 10-Shot |
|---|---|---|---|---|---|
| EfficientDet-ft [44] | 0.04 | 0.28 | 1.06 | 3.69 | 10.48 |
| EfficientDet-ft-full [44] | 0.12 | 1.37 | 5.52 | 12.16 | 28.63 |
| CenterNet-ft [45] | 0.09 | 0.52 | 1.48 | 4.72 | 12.09 |
| CenterNet-ft-full [45] | 0.15 | 2.84 | 7.09 | 17.12 | 30.23 |
| RetinaNet-ft [46] | 0.12 | 0.39 | 1.27 | 4.93 | 12.64 |
| RetinaNet-ft-full [46] | 0.18 | 2.67 | 7.22 | 16.43 | 33.19 |
| YOLOv4-ft [43] | 0.00 | 0.66 | 1.51 | 3.83 | 17.18 |
| YOLOv4-ft-full [43] | 0.00 | 4.51 | 10.98 | 22.05 | 42.61 |
| FRW [34] | 10.17 | 32.86 | 42.03 | 53.86 | 62.95 |
| Meta R-CNN [47] | 7.38 | 28.91 | 44.97 | 57.23 | 66.42 |
| FRW-ACA (Intra, Ours) | - | 37.32 | 47.28 | 60.56 | 68.27 |
| FRW-ACA (Inter *, Ours) | 26.32 | 38.26 | 50.09 | 60.83 | 71.45 |
| FRW-ACA (Inter, Ours) | 26.91 | 39.05 | 51.27 | 62.34 | 74.91 |
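As a reading aid for the two results tables, the short script below computes the per-shot gain of FRW-ACA (Inter) over the FRW baseline [34]. The numbers are copied from the table rows; the labels "first" and "second" and the helper `gains` are introduced here only for illustration, and the script makes no assumption about which metric the tables report.

```python
SHOTS = (1, 2, 3, 5, 10)

# Rows copied from the two results tables (FRW [34] and FRW-ACA (Inter, Ours)).
TABLES = {
    "first": {
        "FRW": (25.51, 48.15, 55.07, 65.01, 73.08),
        "FRW-ACA (Inter)": (43.18, 54.51, 61.49, 71.18, 80.42),
    },
    "second": {
        "FRW": (10.17, 32.86, 42.03, 53.86, 62.95),
        "FRW-ACA (Inter)": (26.91, 39.05, 51.27, 62.34, 74.91),
    },
}


def gains(table):
    """Absolute per-shot difference between FRW-ACA (Inter) and FRW, in table units."""
    return [round(ours - base, 2)
            for ours, base in zip(table["FRW-ACA (Inter)"], table["FRW"])]


if __name__ == "__main__":
    for name, table in TABLES.items():
        row = ", ".join(f"{k}-shot: +{g:.2f}" for k, g in zip(SHOTS, gains(table)))
        print(f"{name} table -> {row}")
```

In both tables the largest absolute gain is in the 1-shot column (17.67 and 16.74 points, respectively), the setting with the fewest labeled examples.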

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, J.; Li, J. An Adaptive Embedding Network with Spatial Constraints for the Use of Few-Shot Learning in Endangered-Animal Detection. ISPRS Int. J. Geo-Inf. 2022, 11, 256. https://doi.org/10.3390/ijgi11040256
